Abstract

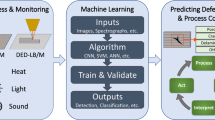

One of the primary challenges associated with bolts, which are widely used in assembled structures and systems, is torque loosening. Loosening can arise from shock and vibration and may lead to significant damage and structural failure. It is difficult to identify and monitor because of the variability and nonlinear effects present in bolted joints. In this paper, we propose a machine-learning architecture for pattern recognition, detection, and quantification of torque loosening in bolted joints. The approach combines unsupervised and supervised machine learning (ML) algorithms. A damage index, calculated from the frequency response of the jointed system using the Frequency Response Assurance Criterion, serves as input data for the unsupervised–supervised classification algorithm. This classification algorithm identifies and categorises instances of torque loss by analysing indirect vibration measurements, even when the state of the bolted system is unknown. Additionally, we introduce a regression algorithm to quantify torque loosening levels. The results show that the inherent uncertainties of a data-driven approach influence the assessment of torque-related issues. The assessment is based on experimental raw data collected under diverse test conditions for the bolted structure. We employ a range of validation and cross-validation metrics to evaluate the efficiency and accuracy of the ML algorithms in determining the state of the bolted connection and the corresponding torque levels, with associated uncertainty quantification.

References

Zaman I, Khalid A, Manshoor B, Araby S, Ghazali MI (2013) The effects of bolted joints on dynamic response of structures. IOP Conf Ser Mater Sci Eng 50:012018

Zhang M, Shen Y, Xiao L, Qu W (2017) Application of subharmonic resonance for the detection of bolted joint looseness. Nonlinear Dyn 88:1643–1653

Nikravesh SMY, Goudarzi M (2017) A review paper on looseness detection methods in bolted structures. Latin Am J Solids Struct 14:2153–2176

Miao R, Shen R, Zhang S, Xue S (2020) A review of bolt tightening force measurement and loosening detection. Sensors 20:3165

Eraliev O, Lee K-H, Lee C-H (2022) Vibration-Based loosening detection of a multi-bolt structure using machine learning algorithms. Sensors 22:1210

Huang J, Liu J, Gong H, Deng X (2022) A comprehensive review of loosening detection methods for threaded fasteners. Mech Syst Signal Process 168:108652

Dutkiewicz M, Machado MR (2019) Measurements in situ and spectral analysis of wind flow effects on overhead transmission lines. Sound Vib 53(4):161–175

Machado MR, Dos Santos JMC (2015) Reliability analysis of damaged beam spectral element with parameter uncertainties. Shock Vib 2015:574846. https://doi.org/10.1155/2015/574846

Machado MR, Adhikari S, Dos Santos JMC (2018) Spectral element-based method for a one-dimensional damaged structure with distributed random properties. J Braz Soc Mech Sci Eng 40:415

Machado MR, Dos Santos JMC (2021) Effect and identification of parametric distributed uncertainties in longitudinal wave propagation. Appl Math Model 98:498–517. https://doi.org/10.1016/j.apm.2021.05.018

Sousa AASR, Coelho JS, Machado MR, Dutkiewicz M (2023) Multiclass supervised machine learning algorithms applied to damage and assessment using beam dynamic response. J Vib Eng Technol. https://doi.org/10.1007/s42417-023-01072-7

Murphy KP (2012) Machine learning: a probabilistic perspective. The MIT Press

Zhou Y et al (2022) Percussion-based bolt looseness identification using vibration-guided sound reconstruction. Struct Control Health Monit 29:2–5

Wang F, Song G (2020) 1D-TICapsNet: an audio signal processing algorithm for bolt early looseness detection. Struct Health Monit. https://doi.org/10.1177/1475921720976989

Wang F, Song G (2020) Bolt-looseness detection by a new percussion-based method using multifractal analysis and gradient boosting decision tree. Struct Health Monit 19(6):2023–2032. https://doi.org/10.1177/1475921720912780

Tran DQ, Kim JW, Tola KD, Kim W, Park S (2020) Artificial intelligence-based bolt loosening diagnosis using deep learning algorithms for laser ultrasonic wave propagation data. Sensors 20:1–25

Zhang Y, Zhao X, Sun X, Su W, Xue Z (2019) Bolt loosening detection based on audio classification. Adv Struct Eng 22:2882–2891

Kong Q, Zhu J, Ho SCM, Song G (2018) Tapping and listening: a new approach to bolt looseness monitoring. Smart Mater Struct 27:07LT02

Gong H, Deng X, Liu J, Huang J (2022) Quantitative loosening detection of threaded fasteners using vision-based deep learning and geometric imaging theory. Autom Constr 133:104009

Zhao X, Zhang Y, Wang N (2019) Bolt loosening angle detection technology using deep learning. Struct Control Health Monit 26:e2292

Zhang Y et al (2020) Autonomous bolt loosening detection using deep learning. Struct Health Monit 19:105–122

Yu Y et al (2021) Detection method for bolted connection looseness at small angles of timber structures based on deep learning. Sensors 21:3106

Pham HC et al (2020) Bolt-loosening monitoring framework using an image-based deep learning and graphical model. Sensors 20:1–19

Ramana L, Choi W, Cha YJ (2019) Fully automated vision-based loosened bolt detection using the Viola-Jones algorithm. Struct Health Monit 18:422–434

Cha YJ, You K, Choi W (2016) Vision-based detection of loosened bolts using the Hough transform and support vector machines. Autom Constr 71:181–188

Razi P, Esmaeel RA, Taheri F (2013) Improvement of a vibration-based damage detection approach for health monitoring of bolted flange joints in pipelines. Struct Health Monit 12:207–224

Ziaja D, Nazarko P (2021) SHM system for anomaly detection of bolted joints in engineering structures. Structures 33:3877–3884

Miguel LP, Teloli RD, da Silva S, Chevallier G (2022) Probabilistic machine learning for detection of tightening torque in bolted joints. Struct Health Monit 21:2136

Teloli RD, Butaud P, Chevallier G, da Silva S (2022) Good practises for designing and experimental testing of dynamically excited jointed structures: The Orion beam. Mech Syst Signal Process 163:108172. https://doi.org/10.1016/j.ymssp.2021.108172

Chen R et al (2017) Looseness diagnosis method for connecting bolt of fan foundation based on sensitive mixed-domain features of excitation-response and manifold learning. Neurocomputing 219:376–388

Zhuang Z, Yu Y, Liu Y, Chen J, Wang Z (2021) Ultrasonic signal transmission performance in bolted connections of wood structures under different preloads. Forests 12:652

Wang F (2021) A novel autonomous strategy for multi-bolt looseness detection using smart glove and Siamese double-path CapsNet. Struct Health Monit 21:2329

Wang F, Chen Z, Song G (2020) Monitoring of multi-bolt connection looseness using entropy-based active sensing and genetic algorithm-based least square support vector machine. Mech Syst Signal Process 136:106507

Zhou L, Chen SX, Ni YQ, Choy AWH (2021) EMI-GCN: a hybrid model for real-time monitoring of multiple bolt looseness using electromechanical impedance and graph convolutional networks. Smart Mater Struct 30:035032

Mariniello G, Pastore T, Menna C, Festa P, Asprone D (2021) Structural damage detection and localization using decision tree ensemble and vibration data. Comput Aided Civ Infrastruct Eng 36(9):1129–1149

de Oliveira Teloli R, Butaud P, Chevallier G, da Silva S (2021) Dataset of experimental measurements for the Orion beam structure. Data Brief 39:107627. https://doi.org/10.1016/j.dib.2021.107627

Bishop CM (2006) Pattern recognition and machine learning. Springer, Berlin

Webb AR, Copsey KD (2011) Statistical pattern recognition. Wiley

Fieguth P (2022) An introduction to pattern recognition and machine learning. Springer, Cham. https://doi.org/10.1007/978-3-030-95995-1

Figueiredo E, Brownjohn J (2022) Three decades of statistical pattern recognition paradigm for SHM of bridges. Struct Health Monit 21(6):3018–3054. https://doi.org/10.1177/14759217221075241

Farrar CR, Worden K (2013) Structural health monitoring: a machine learning perspective. Wiley

Sinou JJ (2009) A review of damage detection and health monitoring of mechanical systems from changes in the measurement of linear and non-linear vibrations. In: Mechanical vibrations: measurement, effects and control, pp 643–702

Barreto LS, Machado MR, Santos JC, Moura BB, Khalij L (2021) Damage indices evaluation for one-dimensional guided wave-based structural health monitoring. Latin Am J Solids Struct 1:1–10

Zang C, Friswell MI, Imregun M (2003) Structural health monitoring and damage assessment using measured FRFs from multiple sensors, part I: the indicator of correlation criteria. Key Eng Mater 245–246:131–140

Moura BB, Machado MR, Mukhopadhyay T, Dey S (2022) Dynamic and wave propagation analysis of periodic smart beams coupled with resonant shunt circuits: passive property modulation. Eur Phys J Special Top 231(8):1415–1431

Moura BB, Machado MR, Dey S, Mukhopadhyay T (2024) Manipulating flexural waves to enhance the broadband vibration mitigation through inducing programmed disorder on smart rainbow metamaterials. Appl Math Model 125:650–671

Machado MR, Adhikari S, Dos Santos JMC (2017) A spectral approach for damage quantification in stochastic dynamic systems. Mech Syst Signal Process 88:253–273

Dallali M, Khalij L, Conforto E, Dashti A, Gautrelet C, Machado MR, De Cursi ES (2022) Effect of geometric size deviation induced by machining on the vibration fatigue behaviour of Ti-6Al-4V. Fatigue Fract Eng Mater Struct 45(6):1784–1795. https://doi.org/10.1111/ffe.13699

Saeed Z, Firrone CM, Berruti TM (2021) Joint identification through hybrid models improved by correlations. J Sound Vib 494:115889

Tharwat A (2021) Classification assessment methods. Appl Comput Inf 17:168–192

Markoulidakis I, Kopsiaftis G, Rallis I, Georgoulas I (2021) Multi-class confusion matrix reduction method and its application on net promoter score classification problem. ACM Int Conf Proc Ser. https://doi.org/10.1145/3453892.3461323

Galar M, Fernández A, Barrenechea E, Bustince H, Herrera F (2011) An overview of ensemble methods for binary classifiers in multi-class problems: experimental study on one-vs-one and one-vs-all schemes. Pattern Recognit 44:1761–1776

Ezugwu Absalom E, Ikotun Abiodun M, Oyelade Olaide O, Abualigah Laith, Agushaka Jeffery O, Eke Christopher I, Akinyelu Andronicus A (2022) A comprehensive survey of clustering algorithms: State-of-the-art machine learning applications, taxonomy, challenges, and future research prospects. Eng Appl Artif Intell 110:104743. https://doi.org/10.1016/j.engappai.2022.104743

Cutler J, Dickenson M (2020) Introduction to machine learning with python. O’Reilly. https://doi.org/10.1007/978-3-030-36826-5-10

Malekloo A, Ozer E, AlHamaydeh M, Girolami M (2021) Machine learning and structural health monitoring overview with emerging technology and high-dimensional data source highlights. Struct Health Monit. https://doi.org/10.1177/14759217211036880

Kurian B, Liyanapathirana R (2020) Machine learning techniques for structural health monitoring. In Lecture notes in mechanical engineering. Springer Singapore. https://doi.org/10.1007/978-981-13-8331-1-1

Zhou Q, Ning Y, Zhou Q, Luo L, Lei J (2013) Structural damage detection method based on random forests and data fusion. Struct Health Monit 12(1):48–58. https://doi.org/10.1177/1475921712464572

Otchere DA, Arbi Ganat TO, Gholami R, Ridha S (2021) Application of supervised machine learning paradigms in the prediction of petroleum reservoir properties: Comparative analysis of ANN and SVM models. J Pet Sci Eng. https://doi.org/10.1016/j.petrol.2020.108182

Flach PA, Lachiche N (2004) Naive Bayesian classification of structured data. Mach Learn 57(3):233–269. https://doi.org/10.1023/B:MACH.0000039778.69032.ab

Russell S, Norvig P (2003) [1995] Artificial intelligence: a modern approach, 2nd edn. Prentice Hall. ISBN 978-0137903955

Chen T, Guestrin C (2016) XGBoost: A scalable tree boosting system. Cornell University

Chen M, Liu Q, Chen S, Liu Y, Zhang C-H, Liu R (2019) XGBoost-Based algorithm interpretation and application on post-fault transient stability status prediction of power system. IEEE Access 7:13149–13158. https://doi.org/10.1109/ACCESS.2019.2893448

Acknowledgements

The authors would like to acknowledge the research support of the POLONEZ BIS project SWinT, reg.no. 2022/45/P/ST8/02123 co-funded by the National Science Centre and the European Union Framework Programme for Research and Innovation Horizon 2020 under the Marie Skłodowska-Curie grant agreement no. 945339. M.R.Machado would like to acknowledge the support from the CNPq Grants no. 404013/2021-0.

Additional information

Technical Editor: Adriano Todorovic Fabro.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix A: Machine learning techniques

An appendix contains supplementary information that is not an essential part of the text itself but may help provide a more comprehensive understanding of the research problem, or it is information that is too cumbersome to be included in the body of the paper.

1.1 A.1: K-Means clustering

The K-Means clustering algorithm is an unsupervised ML algorithm in which data objects are distributed into a specified number of clusters, K [53]. K is a hyperparameter that specifies the number of clusters to be created. K-Means is a useful approach for clustering (labelling) or partitioning the data so that the resulting labels can serve as the target output when training a supervised ML algorithm. The aim is to find centroids, each a measure of the centre point of a cluster, such that the sum of the squared distances from each data sample to its nearest cluster centre is minimal. Nearest here is with respect to the Euclidean norm (L2 norm). Thus, the objective function is

\[ J = \sum_{k=1}^{K} \sum_{x_{i} \in C_{k}} \left\| x_{i} - \mu _{k} \right\| ^{2}, \qquad \text{(A1)} \]

where \(C_{k}\) denotes the set of samples assigned to cluster k, \(x_{i}\) is the ith instance in cluster k, and \(\mu _{k}\) is the mean of the samples, or “centroid”, of cluster k.

The K-Means algorithm is widely used owing to its simplicity of implementation and low computational complexity, but one of its biggest drawbacks is that the number of clusters must be defined in advance. For highly complex problems where the cluster count is hard to define, the “elbow” method can provide insight into a suitable number of clusters. Another disadvantage of K-Means is that it is very sensitive to outliers, which can distort the centroids and the clusters [54].
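The clustering procedure described above can be illustrated with a minimal sketch in plain Python. It is not the implementation used in the paper: the function names, the random initialisation, and the toy data are assumptions made for illustration only. The returned `inertia` is the value of the sum-of-squared-distances objective, which is the quantity usually plotted against the number of clusters in the “elbow” method.

```python
import random


def squared_distance(a, b):
    """Squared Euclidean (L2) distance between two equal-length points."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))


def k_means(points, k, n_iter=100, seed=0):
    """Plain Lloyd iterations; returns (centroids, labels, inertia)."""
    rng = random.Random(seed)
    # Assumption: initial centroids are k distinct samples drawn at random.
    centroids = [list(p) for p in rng.sample(points, k)]
    labels = None
    for _ in range(n_iter):
        # Assignment step: each point joins its nearest centroid.
        new_labels = [
            min(range(k), key=lambda c: squared_distance(p, centroids[c]))
            for p in points
        ]
        if new_labels == labels:  # assignments stopped changing
            break
        labels = new_labels
        # Update step: each centroid moves to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # keep the old centroid if a cluster is empty
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    # Objective value: sum of squared distances to the assigned centroid.
    inertia = sum(
        squared_distance(p, centroids[lab]) for p, lab in zip(points, labels)
    )
    return centroids, labels, inertia


# Hypothetical two-feature samples forming two well-separated groups.
points = [(0.0, 0.0), (0.1, 0.2), (0.2, 0.1), (5.0, 5.0), (5.1, 5.2), (5.2, 5.1)]
_, labels, inertia_k2 = k_means(points, k=2)
_, _, inertia_k1 = k_means(points, k=1)
```

Comparing `inertia_k1` with `inertia_k2` shows the sharp drop in the objective that the elbow heuristic looks for when the cluster count matches the structure of the data.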

1.2 A.2: K-Nearest-neighbour classifier

K-nearest neighbour (k-NN) is one of the simplest supervised learning methods [54, 55] and is widely used for pattern recognition [56]. k-NN can be used for both classification and regression: classification is typically applied to data with discrete labels and regression to data with continuous labels. A class is assigned by a simple majority vote of the nearest neighbours of each point: a query point receives the class that has the most representatives among its nearest neighbours. A distance metric between the points is used to determine which neighbours are nearest [54].

In its simplest version, the k-NN algorithm considers exactly one nearest neighbour, namely the training data point closest to the point we want to predict. The prediction is then simply the known output for this training point. More generally, each sample is compared with its ‘k’ surrounding samples to assess similarity or closeness. For example, when k = 5, an unknown sample is compared with its five nearest samples and classified accordingly [54]. The optimal choice of ‘k’ is highly data-dependent. In general, a larger k suppresses the effects of noise but makes the classification boundaries less distinct.
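A minimal sketch of the majority-vote rule is given below, assuming the Euclidean distance as the metric. The function name, the class labels `"tight"` and `"loose"`, and the sample values are hypothetical and serve only to illustrate the mechanism.

```python
import math
from collections import Counter


def knn_predict(train_x, train_y, query, k=5):
    """Classify `query` by majority vote among its k nearest training points."""
    # Sort the training samples by Euclidean distance to the query point
    # and keep the k closest ones.
    neighbours = sorted(
        zip(train_x, train_y), key=lambda pair: math.dist(pair[0], query)
    )[:k]
    # The predicted class is the one with the most representatives.
    votes = Counter(label for _, label in neighbours)
    return votes.most_common(1)[0][0]


# Hypothetical two-feature training set with two classes.
train_x = [(0, 0), (0, 1), (1, 0), (5, 5), (5, 6), (6, 5)]
train_y = ["tight", "tight", "tight", "loose", "loose", "loose"]

near_origin = knn_predict(train_x, train_y, (0.2, 0.2), k=3)
far_corner = knn_predict(train_x, train_y, (5.5, 5.5), k=3)
single_neighbour = knn_predict(train_x, train_y, (0.9, 0.1), k=1)
```

With `k=1` the function reduces to the simplest version described above, in which the prediction is the label of the single closest training point.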

1.3 A.3: Decision tree and random forest

The decision tree is a supervised algorithm that can target categorical variables, such as classifying a state as damaged or undamaged, and continuous variables, as in regression, to compare the signal with the healthy state of the system [55]. Learning a decision tree means learning the sequence of if/else questions that leads to the true answer most quickly. A tree contains a root node representing the input feature(s) and internal nodes carrying significant data information. Each node either represents a question or is a terminal node (also called a leaf) containing the answer. The partitioning is repeated recursively until a leaf node is reached in which all samples share the same target value, i.e. the node becomes pure [54]. In our application, the data take the form of binary features, and a classification procedure is performed.

The Random Forest (RF) algorithm is an ensemble classifier consisting of many decision trees, where the output class is the mode of the classes predicted by the individual trees. RF offers high prediction accuracy, robust stability and good tolerance of noisy data and, by the law of large numbers, is resistant to overfitting. It has been used for structural damage detection, where it has shown better performance [57].
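The notions of a “question”, a pure leaf, and the forest vote can be made concrete with a short sketch. The Gini impurity is used here as the purity measure, which is a common choice but an assumption, since the text does not name the criterion. All function names and the damage-index values are illustrative.

```python
from collections import Counter


def gini(labels):
    """Gini impurity: 0.0 for a pure node, larger for mixed classes."""
    n = len(labels)
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def best_split(values, labels):
    """Return (threshold, weighted_impurity) of the best 'value <= t' question."""
    best = (None, gini(labels))
    for t in sorted(set(values))[:-1]:  # the largest value cannot split the data
        left = [lab for v, lab in zip(values, labels) if v <= t]
        right = [lab for v, lab in zip(values, labels) if v > t]
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
        if score < best[1]:
            best = (t, score)
    return best


def forest_vote(tree_predictions):
    """Random-forest style aggregation: the mode of the individual tree outputs."""
    return Counter(tree_predictions).most_common(1)[0][0]


# Hypothetical one-dimensional damage-index values and their states.
values = [0.1, 0.2, 0.3, 0.7, 0.8, 0.9]
states = ["healthy", "healthy", "healthy", "loose", "loose", "loose"]

threshold, impurity = best_split(values, states)
ensemble_class = forest_vote(["loose", "healthy", "loose"])
```

In this toy case a single question (`value <= 0.3`) already yields two pure leaves, so the weighted impurity drops to zero and no further splitting is needed.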

1.4 A.4: Support vector machine

Support Vector Machines (SVMs) are supervised machine learning techniques, developed from statistical learning theory, that can be used for classification and regression of clustered data. In the case of linear classification with two classes, let \(\{(x_{1}, y_{1}),\ldots ,(x_{n}, y_{n})\}\) be a training dataset with n observations, where \(x_{i}\) is the ith input vector and \(y_{i} \in \{+1, -1\}\) is the class label of \(x_{i}\). The hyperplane, which is a straight line in two dimensions, separates the two classes with a marginal distance (as seen in Fig. 11). The purpose of an SVM is to construct the hyperplane using a margin, defined as the distance between the hyperplane and the nearest points, which lie along the marginal lines and are termed support vectors [58].

One can define the hyperplane by Eq. (A2), in which the dot product between w and x is added to the term b:

\[ w \cdot x + b = c, \qquad \text{(A2)} \]

where x represents the points on the hyperplane, w is the weight vector that determines the orientation of the hyperplane, and b is the bias or displacement of the hyperplane. When \(c = 0\), the separating hyperplane lies midway between the two marginal hyperplanes with \(c = 1\) and \(c = {-}1\). An SVM aims to maximise the data separation margin by minimising \(\Vert w \Vert \). This optimisation can be posed as the quadratic programming problem given by

\[ \min_{w,\,b} \; \frac{1}{2} \Vert w \Vert ^{2} \quad \text{subject to} \quad y_{i}\,(w \cdot x_{i} + b) \ge 1, \quad i = 1, \ldots , n, \qquad \text{(A3)} \]

where \(\Vert w \Vert \) is the Euclidean norm of w.
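The link between the norm of w and the margin can be checked numerically with a small sketch. It assumes the standard hard-margin condition, in which every sample satisfies y_i (w · x_i + b) ≥ 1, and the standard result that the two marginal hyperplanes are a distance 2/‖w‖ apart. The function names, weights, and data points are hypothetical.

```python
import math


def decision_value(w, b, x):
    """Left-hand side of the hyperplane equation: w . x + b."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def margin_width(w):
    """Distance between the two marginal hyperplanes (c = +1 and c = -1)."""
    return 2.0 / math.sqrt(sum(wi * wi for wi in w))


def satisfies_margin(w, b, xs, ys):
    """True when every sample obeys y_i (w . x_i + b) >= 1."""
    return all(y * decision_value(w, b, x) >= 1.0 for x, y in zip(xs, ys))


# Hypothetical linearly separable data with labels -1 / +1.
xs = [(0.0, 0.0), (1.0, 1.0), (3.0, 0.0), (4.0, 2.0)]
ys = [-1, -1, 1, 1]

feasible = satisfies_margin([1.0, 0.0], -2.0, xs, ys)     # ||w|| = 1
too_small = satisfies_margin([0.5, 0.0], -1.0, xs, ys)    # ||w|| = 0.5
width = margin_width([1.0, 0.0])
```

Halving w would double the nominal margin, but the constraints are then violated, which is why the norm is minimised only over weights that keep every sample on the correct side of its marginal hyperplane.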

The SVM algorithm encompasses not only linear and nonlinear classification but also linear and nonlinear regression (support vector regression, SVR). The main idea is to fit as many instances as possible inside a “tube” while limiting margin violations. SVR therefore seeks a hyperplane that minimises the distance from all data to this hyperplane. The width of the tube is controlled by a hyperparameter, \(\epsilon \), which defines an error-“insensitive” area, as illustrated by Fig. 11. The larger the \(\epsilon \), the larger the diameter of the tube and the less sensitive the model is to points within it. In contrast, the smaller the \(\epsilon \), the smaller the diameter of the tube and the greater the chance of points lying on its edges, making the model more robust. Samples that fall within the \(\epsilon \)-margin do not incur any loss. Points outside the tube are penalised according to their distance from the \(\epsilon \)-insensitive region, measured by errors called slack variables (\(\xi \)). This approach is similar to the “soft margin” concept in SVM classification because the slack variables allow regression errors to exist up to the values of \(\xi _{i}\) and \(\xi _{i}^{*}\) while still satisfying the required conditions. Including slack variables leads to the objective function given by Eq. (A4):

\[ \min_{w,\,b,\,\xi ,\,\xi ^{*}} \; \frac{1}{2} \Vert w \Vert ^{2} + C \sum_{i=1}^{n} \left( \xi _{i} + \xi _{i}^{*} \right) \quad \text{subject to} \quad \begin{cases} y_{i} - (w \cdot x_{i} + b) \le \epsilon + \xi _{i}, \\ (w \cdot x_{i} + b) - y_{i} \le \epsilon + \xi _{i}^{*}, \\ \xi _{i},\; \xi _{i}^{*} \ge 0, \end{cases} \qquad \text{(A4)} \]

where \(C > 0\) is a regularisation constant that weights the margin violations against the flatness of the model.
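The behaviour of the tube can be sketched with the ε-insensitive loss, which is zero inside the tube and grows linearly outside it. The sketch assumes the common convention that \(\xi _{i}\) measures violations above the tube and \(\xi _{i}^{*}\) violations below it. The function names and the torque-like numbers are illustrative only.

```python
def epsilon_insensitive_loss(y_true, y_pred, epsilon):
    """Zero inside the tube |y - f(x)| <= epsilon, linear outside it."""
    return max(0.0, abs(y_true - y_pred) - epsilon)


def slack_variables(y_true, y_pred, epsilon):
    """Return (xi, xi_star): violation above and below the tube, respectively."""
    residual = y_true - y_pred
    return max(0.0, residual - epsilon), max(0.0, -residual - epsilon)


# Hypothetical measured value vs. model prediction, with a tube half-width of 0.5.
inside_tube = epsilon_insensitive_loss(10.0, 10.3, epsilon=0.5)
outside_tube = epsilon_insensitive_loss(10.0, 11.0, epsilon=0.5)
above = slack_variables(12.0, 10.0, epsilon=0.5)
below = slack_variables(10.0, 12.0, epsilon=0.5)
```

At most one of the two slack variables is non-zero for any sample, since a point cannot lie above and below the tube at the same time.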

1.5 A.5: Naïve Bayes

Naïve Bayes is a classification method based on Bayes’ theorem with the assumption of independence between features. It is considered a simple technique for constructing classifiers: models that assign class labels to problem instances, represented as vectors of feature values, where the class labels are drawn from some finite set. Three variants are available in scikit-learn: Gaussian-NB, Multinomial-NB, and Bernoulli-NB. The first assumes a Gaussian distribution, the second is for discrete occurrence counts, and the third is for discrete boolean attributes [59].

Naïve Bayes classifiers are highly scalable, requiring a number of parameters linear in the number of variables (features) in a learning problem. Maximum-likelihood training can be done by evaluating a closed-form expression. In other words, one can work with the naïve Bayes model without accepting Bayesian probability or using any Bayesian methods. An advantage of naïve Bayes is that a model can be trained with few samples [60].
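The closed-form training mentioned above can be sketched for the Gaussian variant: each class needs only a prior, plus one mean and one variance per feature, so the parameter count grows linearly with the number of features. This is a stand-alone illustration rather than the scikit-learn implementation. The function names, the small variance floor, and the data are assumptions.

```python
import math
from collections import defaultdict


def fit_gaussian_nb(xs, ys):
    """Closed-form maximum-likelihood fit: per-class prior, means, variances."""
    by_class = defaultdict(list)
    for x, y in zip(xs, ys):
        by_class[y].append(x)
    model = {}
    for label, rows in by_class.items():
        n = len(rows)
        means = [sum(col) / n for col in zip(*rows)]
        # Assumption: a small constant keeps every variance strictly positive.
        variances = [
            sum((v - m) ** 2 for v in col) / n + 1e-9
            for col, m in zip(zip(*rows), means)
        ]
        model[label] = (n / len(xs), means, variances)
    return model


def predict_gaussian_nb(model, x):
    """Pick the class with the largest log-posterior under feature independence."""
    def log_posterior(params):
        prior, means, variances = params
        # Independence assumption: per-feature log-likelihoods simply add up.
        log_likelihood = sum(
            -0.5 * math.log(2 * math.pi * var) - (v - m) ** 2 / (2 * var)
            for v, m, var in zip(x, means, variances)
        )
        return math.log(prior) + log_likelihood

    return max(model, key=lambda label: log_posterior(model[label]))


# Hypothetical single-feature samples, three per class.
xs = [(0.10,), (0.12,), (0.11,), (0.80,), (0.85,), (0.82,)]
ys = ["tight", "tight", "tight", "loose", "loose", "loose"]
model = fit_gaussian_nb(xs, ys)
low_index = predict_gaussian_nb(model, (0.13,))
high_index = predict_gaussian_nb(model, (0.79,))
```

The model is fitted from only three samples per class, which illustrates the point made above that naïve Bayes can be trained with few samples.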

1.6 A.6: Extreme gradient boosting (XGBoost)

XGBoost (short for Extreme Gradient Boosting) is an efficient implementation of Gradient Boosting Machines (GBM), developed by Tianqi Chen [61], widely recognised for its superior performance in supervised learning. This versatile algorithm is also considered to be an ensemble tree technique that can be used for both regression and classification tasks.

XGBoost follows the concept of weak learners, in which the overall predictor is improved by sequentially adding newly trained trees to the model [62]. In other words, XGBoost makes predictions by creating numerous smaller decision trees, also known as subtrees. Each subtree makes predictions for the data, and their individual predictions are combined to form the final prediction for the given input. This ensemble approach helps improve the accuracy and generalisation ability of the predictive model. The process involves iterative training of these subtrees to correct the errors made by the previous subtrees, gradually refining the overall prediction as more trees are added.

Another feature of XGBoost is that it uses L1 and L2 regularisation, which helps the model generalise and reduces overfitting. It also uses an optimisation strategy that yields better weights when computing the weights of the component models, and its component models are slightly larger.
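The sequential error-correcting idea can be sketched with plain gradient boosting on squared error, using single-split trees on a one-dimensional input. This is a simplified stand-in, not XGBoost itself: only an L2 penalty on the leaf values is included (the L1 term is omitted), and the names `fit_stump`, `boost`, `reg_lambda`, and `learning_rate`, as well as the data, are assumptions for illustration.

```python
def fit_stump(xs, residuals, reg_lambda):
    """Best single-threshold tree on 1-D inputs, with L2-shrunk leaf values."""
    def leaf_value(rs):
        # For squared error, the L2-regularised optimum is sum(r) / (n + lambda).
        return sum(rs) / (len(rs) + reg_lambda)

    best = None
    for t in sorted(set(xs))[:-1]:  # needs at least two distinct input values
        left = [r for x, r in zip(xs, residuals) if x <= t]
        right = [r for x, r in zip(xs, residuals) if x > t]
        lv, rv = leaf_value(left), leaf_value(right)
        error = sum(
            (r - (lv if x <= t else rv)) ** 2 for x, r in zip(xs, residuals)
        )
        if best is None or error < best[0]:
            best = (error, t, lv, rv)
    _, t, lv, rv = best
    return lambda x: lv if x <= t else rv


def boost(xs, ys, n_trees=50, learning_rate=0.3, reg_lambda=1.0):
    """Fit trees sequentially, each one to the residuals left by the others."""
    trees = []
    predictions = [0.0] * len(xs)
    for _ in range(n_trees):
        residuals = [y - p for y, p in zip(ys, predictions)]
        tree = fit_stump(xs, residuals, reg_lambda)
        trees.append(tree)
        predictions = [
            p + learning_rate * tree(x) for p, x in zip(predictions, xs)
        ]
    # Final prediction: the combined, shrunken outputs of all trees.
    return lambda x: sum(learning_rate * tree(x) for tree in trees)


# Hypothetical damage-index inputs and torque-like targets.
xs = [0.1, 0.2, 0.3, 0.7, 0.8, 0.9]
ys = [10.0, 10.0, 10.0, 30.0, 30.0, 30.0]
model = boost(xs, ys)
one_tree = boost(xs, ys, n_trees=1, learning_rate=1.0, reg_lambda=1.0)
```

A single regularised tree (`one_tree`) deliberately under-shoots the targets, and the remaining error is removed step by step as later trees fit the residuals, which mirrors the gradual refinement described above.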

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Coelho, J.S., Machado, M.R., Dutkiewicz, M. et al. Data-driven machine learning for pattern recognition and detection of loosening torque in bolted joints. J Braz. Soc. Mech. Sci. Eng. 46, 75 (2024). https://doi.org/10.1007/s40430-023-04628-6

DOI: https://doi.org/10.1007/s40430-023-04628-6