Abstract

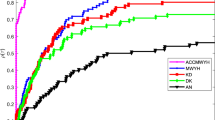

This paper proposes an adaptive two-parameter accelerated conjugate gradient method, which satisfies the sufficient descent condition in the search direction. The Powell restart strategy is designed in the algorithm to improve its numerical performance. Our proposed method does not add extra computational effort compared to other methods. Furthermore, under general assumptions, we demonstrate the global convergence of our proposed method under the Wolfe line search. Finally, we compare with other methods on the unconstrained optimization and image restoration problems. Numerical experiments are presented to show that our proposed method is feasible.

Similar content being viewed by others

Data Availability

No datasets were generated or analysed during the current study.

References

Andrei N (2007) Scaled conjugate gradient algorithms for unconstrained optimization. Comput Optim Appl 38(3):401–416

Andrei N (2008) An unconstrained optimization test functions collection. Adv Model Optim 10(1):147–161

Andrei N (2009) Acceleration of conjugate gradient algorithms for unconstrained optimization. Appl Math Comput 213(2):361–369

Andrei N (2013) On three-term conjugate gradient algorithms for unconstrained optimization. Appl Math Comput 219:6316–6327

Andrei N (2017) Accelerated adaptive Perry conjugate gradient algorithms based on the self-scaling memoryless BFGS update. J Comput Appl Math 325:149–164

Bovik AC (2010) Handbook of image and video processing. Academic Press, New York

Cai JF, Chan R, Morini B (2007a) Minimization of an edge-preserving regularization functional by conjugate gradient type methods. In: Image Processing based on partial differential equations. Springer, Berlin, Heidelberg, pp 109–122

Cai JF, Chan RH, Fiore CD (2007b) Minimization of a detail-preserving regularization functional for impulse noise removal. J Math Imaging Vis 27:79–91

Chan RH, Ho CW, Nikolova M (2005) Salt-and-pepper noise removal by median-type noise detectors and detail-preserving regularization. IEEE Trans Image Process 14(10):1479–1485

Chen Y, Cao M, Yang Y (2019) A new accelerated conjugate gradient method for large-scale unconstrained optimization. J Inequal Appl 1:1–13. https://doi.org/10.1186/s13660-019-2238-9

Dai YH, Liao LZ (2001) New conjugacy conditions and related nonlinear conjugate gradient methods. Appl Math Optim 43:87–101

Dai YH, Yuan Y (1999) A nonlinear conjugate gradient method with a strong global convergence property. SIAM J Optim 10(1):177–182

Deng SH, Wan Z (2015) A three-term conjugate gradient algorithm for large-scale unconstrained optimization problems. Appl Numer Math 92:70–81

Dolan ED, Moré JJ (2002) Benchmarking optimization software with performance profiles. Math Progr 91(2):201–213

Fletcher R (1987) Practical method of optimization, Vol I: unconstrained optimization. Wiley, New York

Fletcher R, Reeves CM (1964) Function minimization by conjugate gradients. Comput J 7:149–154

Hager WW, Zhang H (2005) A new conjugate gradient method with guaranteed descent and an efficient line search. SIAM J Optim 16:170–192

Hager WW, Zhang H (2006) Algorithm 851: CG_DESCENT, a conjugate gradient method with guaranteed descent. ACM Trans Math Softw 32:113–137

Hestenes MR, Stiefel E (1952) Method of conjugate gradient for solving linear equations. J Res Natl Bureau Stand 49:409–436

Jiang XZ, Liao W, Yin JH, Jian JB (2022) A new family of hybrid three-term conjugate gradient methods with applications in image restoration. Numer Algorithms 91:161–191

Liu Y, Storey C (1991) Efficient generalized conjugate gradient algorithms, part 1: theory. J Optim Theory Appl 69:129–137

Liu YF, Zhu ZB, Zhang BX (2022) Two sufficient descent three-term conjugate gradient methods for unconstrained optimization problems with applications in compressive sensing. J Appl Math Comput 68:1787–1816. https://doi.org/10.1007/s12190-021-01589-8

Nocedal J, Wright SJ (2006) Numerical optimization. Springer Science+Business Media, New York

Oren SS (1974) Self-scaling variable metric (SSVM) algorithms, II: implementation and experiments. Manag Sci 20:863–874

Perry A (1978) Technical note—a modified conjugate gradient algorithm. Oper Res 26:1073–1078

Polak E, Ribière G (1969) Note sur la convergence de méthodes de directions conjuguées. Rev Franşaise Inf Rech Oper 3(16):35–43

Polyak BT (1969) The conjugate gradient method in extreme problems. USSR Comput Math Math Phys 9(4):94–112

Powell MJD (1977) Restart procedures of the conjugate gradient method. Math Progr 12:241–254

Sun W, Yuan Y (2006) Optimization theory and methods: nonlinear programming. Springer Science+Business Media, New York

Wolfe P (1968) Convergence conditions for ascent methods. SIAM Rev 11(2):226–235

Wolfe P (1971) Convergence conditions for ascent methods, (II): some corrections. SIAM Rev 13(2):185–188

Yao SW, Ning LS (2018) An adaptive three-term conjugate gradient method based on self-scaling memoryless BFGS matrix. J Comput Appl Math 322:72–85

Zoutendijk G (1970) Nonlinear programming, computational methods. In: Abadie J (ed) Integer and nonlinear programming. North-Holland, Amsterdam, pp 37–86

Funding

This work is supported by the National Natural Science Foundation of China under Grant 61967004 and Grant 11901137, Guangxi Key Laboratory of Cryptography and Information Security under Grant GCIS201927, Guangxi Key Laboratory of Automatic Detecting Technology and Instruments under Grant YQ20113, Innovation Project of Guangxi Graduate Education under Grant 2021YCXS118, and Innovation Project of GUET Graduate Education under Grant 2023YCXS113.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare to have no competing interests.

Additional information

Communicated by Vinicius Albani.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Zhu, Z., Zhu, X. & Tan, Z. An accelerated conjugate gradient method with adaptive two-parameter with applications in image restoration. Comp. Appl. Math. 43, 116 (2024). https://doi.org/10.1007/s40314-023-02521-5

Received:

Revised:

Accepted:

Published:

DOI: https://doi.org/10.1007/s40314-023-02521-5

Keywords

- Sufficient descent condition

- Powell restart strategy

- Unconstrained optimization

- Image restoration

- Global convergence