Abstract

You may very well be familiar with the Gini Coefficient, also known as the Gini index: a quantitative gauge with which socioeconomic inequality is measured, e.g. income disparity and wealth disparity. However, you may not know that the Gini Coefficient is an exquisite mathematical object. Enter this review paper—whose aim is to showcase (some of) the mathematical beauty and riches of the Gini Coefficient. The paper does so, in a completely self-contained manner, by illuminating the Gini Coefficient from various perspectives: Euclidean geometry vs. grid geometry; maxima and minima of random variables; statistical distribution functions; the residual lifetime and the total lifetime of renewal processes; increasing and decreasing failure rates; socioeconomic divergence from perfect equality; and weighted differences of statistical distribution functions. Together, these different perspectives offer a deep and comprehensive understanding of the Gini Coefficient. In turn, a profound understanding of the Gini Coefficient may lead to novel ‘Gini applications’ in science and engineering—such as recently established in the multidisciplinary field of restart research.

1 Introduction

Named in honor of the Italian scientist Corrado Gini [1,2,3], the “Gini Coefficient” is arguably the best known quantitative gauge of socioeconomic inequality [4, 5]. Indeed, also termed “Gini index” and “Gini concentration ratio”, the Gini Coefficient is widely applied in economics and in the social sciences to quantify the inequity of wealth distributions and of income distributions in human societies [6,7,8].

More generally, in the context of statistical distributions of non-negative quantities with positive means, the Gini Coefficient is a quantitative gauge of statistical heterogeneity [9,10,11,12]. Going beyond socioeconomic applications, examples of recent uses of the Gini Coefficient include: earthquake damages to buildings [13]; profiles of gene expressions [14]; vehicular terror attacks [15]; demands in public transport [16]; homogeneity quantification of periodic surfaces [17]; error distributions in computational chemistry [18]; coronavirus infection rates in cities [19]; and sustainable health monitoring [20].

There are various approaches to quantifying statistical heterogeneity. Perhaps the most prevalent approach in science is the standard deviation. In the context of non-negative quantities with positive means, the standard deviation induces the Coefficient of Variation (CV): the “noise-to-signal” ratio of the standard deviation to the mean. Different fields of science devised custom-tailored approaches to statistical heterogeneity, such as: entropy measures in information theory and in statistical physics [21,22,23]; diversity measures in biology and in ecology [24,25,26]; and inequality measures in economics and in the social sciences [27,28,29], which include the Gini Coefficient.

When analyzing the statistical heterogeneity of a quantity of interest, researchers are free to choose the statistical-heterogeneity gauge(s) to work with. Indeed, the quantity of interest does not dictate specific gauges that should be applied. However, when the quantity of interest arises in the context of a particular problem, the problem itself may point to specific gauges. Such a scenario takes place in restart research, a multidisciplinary scientific field that has attracted significant interest in recent years [30,31,32,33,34,35,36,37,38] (for reviews of restart research see [39, 40], and for the scope of the field see [41]). A fundamental restart problem points to two specific gauges of statistical heterogeneity: the CV and the Gini Coefficient.

The CV is well known to scientists, and it is commonly used by both experimentalists and theoreticians. Conversely, the Gini Coefficient is less familiar to scientists at large, and its use outside economics and the social sciences is relatively limited. And here enters this review paper—whose goal is to showcase the Gini Coefficient to general scientific audiences. To that end the paper illuminates many fascinating facets, and many profound mathematical linkages, of the Gini Coefficient. The paper is structured as follows.

Section 2 starts the Gini journey with a ‘restart compass’ that points at the CV and at the Gini Coefficient. Taking on a Euclidean-geometry perspective, Sect. 3 shows how the notion of Mean Square Deviation (MSD) leads naturally to the CV. Shifting from the ‘aerial’ Euclidean perspective to a ‘pedestrian’ grid-geometry perspective, Sect. 4 shows how the MSD shifts to the notion of Mean Absolute Deviation (MAD), and consequently how the CV shifts to the Gini Coefficient. Once introduced, the Gini Coefficient is explored from various statistical perspectives: maximum and minimum formulations (Sect. 5), followed by examples of calculating the Gini Coefficient via these formulations (Sect. 6); distribution-functions formulation (Sect. 7); probabilistic formulations (Sect. 8), followed by renewal interpretations of these formulations (Sect. 9); an integral formulation that connects the Gini Coefficient to increasing and decreasing failure rates (Sect. 10); an integral formulation that provides a socioeconomic interpretation of the Gini Coefficient (Sect. 11); and weighted-difference formulations that accommodate the CV and the Gini Coefficient under one unified setting (Sect. 12).

This review paper is entirely self-contained, and it requires no prior knowledge beyond basic calculus and probability. Having read this paper, readers should gain an appreciation of the depth and the riches of the Gini Coefficient. Moreover, scientists and practitioners may come up with new Gini applications a-la the aforementioned novel restart-research application: problems that point to the Gini Coefficient as a ‘natural’ statistical-heterogeneity gauge to be used.

2 Restart prolog

Consider a stochastic process that is poised to accomplish a certain task; as the process is stochastic, the task’s completion time is a (non-negative) random variable. Further consider restarting the process: as long as the task is not accomplished, the process is every so often reset to its initial state. Restart research is a multidisciplinary scientific field that has attracted substantial scientific interest in recent years (see the references noted in the introduction [30,31,32,33,34,35,36,37,38,39,40,41]). A main topic in restart research addresses the following keystone question: does restart expedite or impede mean-performance? Namely, does the mean completion time decrease or increase when restart is applied?

The resetting epochs are governed, in general, by a stochastic timer. Measuring the timer’s randomness via Shannon’s entropy, and with regard to a pre-set mean value of the timer [22]: the least random timer is constant (the pre-set mean value); and the most random timer is exponentially distributed (with exponential rate that is the reciprocal of the pre-set mean value). Restart with these two diametric timers—termed, respectively and in short, “sharp” and “exponential”—is most prevalent in restart research.

Addressing the aforementioned mean-performance question, two specific statistical-heterogeneity gauges of the task’s completion time (without restart) happen to play key roles: the Coefficient of Variation (CV) and the Gini Coefficient—which were noted in the introduction, and which will be introduced in detail in Sects. 3 and 4 below. These key roles shall now be described.

Firstly, consider exponential restart with small exponential rates, i.e. with large mean-values of the timer. The CV determines the effect of exponential restart (with small exponential rates) as follows [31, 32]: if the CV is smaller than one then restart impedes mean-performance; and if the CV is larger than one then restart expedites mean-performance.

Secondly, consider exponential restart with small exponential rates, and with branching of the underlying stochastic process [34, 42] (the branching takes place at the resetting epochs). The CV and the Gini Coefficient determine, jointly, the effect of exponential restart (with small exponential rates) as follows [34]: if a certain combination of the CV and the Gini Coefficient is smaller than one then restart impedes mean-performance; and if this combination is larger than one then restart expedites mean-performance.

Thirdly, consider sharp restart. The CV determines the effect of sharp restart as follows [43, 44]: if the CV is smaller than one then there exist constant timers with which restart impedes mean-performance; and if the CV is larger than one then there exist constant timers with which restart expedites mean-performance. Also, the Gini Coefficient determines the effect of sharp restart as follows [44]: if the Gini Coefficient is smaller than half then there exist constant timers with which restart impedes mean-performance; and if the Gini Coefficient is larger than half then there exist constant timers with which restart expedites mean-performance.

A foundational mean-performance result in restart research further asserts that [32, 33]: the CV and Gini criteria regarding sharp restart hold with regard to resetting at large, i.e. resetting with general stochastic timers. So, pointing to the CV and to the Gini Coefficient, restart research motivates setting spotlights on these gauges of statistical heterogeneity. And indeed, using geometric-distance spotlights, the next two sections shall follow this motivation: Sect. 3 will illuminate the CV from an ‘aerial’ Euclidean-geometry perspective; and Sect. 4 will illuminate the Gini Coefficient from a ‘pedestrian’ grid-geometry perspective.

3 Euclidean perspective

Commonly, distance is measured a-la Euclid. Consider two n-dimensional vectors, \(\textbf{v}=\left( v_{1},\ldots ,v_{n}\right) \) and \(\textbf{u} =\left( u_{1},\ldots ,u_{n}\right) \). The Euclidean distance between these two vectors is: the square root of \(\sum _{i=1}^{n}\left| v_{i}-u_{i}\right| ^{2}\), the sum of the squared differences between the vectors’ matching coordinates. Shifting from vectors to random variables—from \(\textbf{v}\) and \(\textbf{u}\) to two real-valued random variables V and U—distance is also commonly measured in a Euclidean fashion. Namely, the Euclidean distance between the two random variables is: the square root of \(\textbf{E}[|V-U|^{2}]\), the Mean Square Deviation (MSD) between V and U. The MSD \(\textbf{E}[|V-U|^{2}]\) is a weighted-average analogue of the sum of squares \(\sum _{i=1}^{n}\left| v_{i}-u_{i}\right| ^{2}\). In statistical physics, the MSD is applied prevalently in anomalous diffusion (see, for example, [45,46,47]).

The Euclidean distance can be used in order to quantify the statistical heterogeneity of random variables. Given a real-valued random variable of interest X, generate two Independent and Identically Distributed (IID) copies of it, \(X_{1}\) and \(X_{2}\). The Euclidean distance between these two IID copies—the square root of the MSD \(\textbf{E}[|X_{1}-X_{2}|^{2}]\)—is a quantitative measure of the statistical heterogeneity of the random variable X. As shall now be explained, the Euclidean distance \(\sqrt{ \textbf{E}[|X_{1}-X_{2}|^{2}]}\) is equivalent to the standard deviation of the random variable X.

Denote by \(\mu =\textbf{E}[X]\) the mean of the random variable X, and assume that this mean is finite; the notations \(\mu \) and \(\textbf{E}[X]\) shall be used interchangeably throughout this paper. The variance \(\sigma ^{2}\) of the random variable X is the MSD between X and its mean \(\mu \). In turn, the standard deviation \(\sigma \) of the random variable X is the Euclidean distance between X and its mean \(\mu \). Namely, \(\sigma ^{2}= \textbf{E}[|X-\mu |^{2}]\) and \(\sigma =\sqrt{\textbf{E}[|X-\mu |^{2}]}\). A straightforward calculation (detailed in the Appendix) implies that half the MSD between the two IID copies of X is equal to the variance: \(\frac{1}{2} \textbf{E}[|X_{1}-X_{2}|^{2}]=\sigma ^{2}\). Consequently, as stated above, the Euclidean distance between the two IID copies of X is equivalent to the standard deviation, up to a constant factor: \(\sqrt{\textbf{E}[|X_{1}-X_{2}|^{2}]}=\sqrt{2}\sigma \).
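
For readers who prefer to see the step without turning to the Appendix, the identity between the MSD and the variance can be sketched as follows. The sketch assumes only that \(X_{1}\) and \(X_{2}\) are independent, share the mean \(\mu \), and have a finite second moment:

\[ \textbf{E}[|X_{1}-X_{2}|^{2}]=\textbf{E}[X_{1}^{2}]-2\textbf{E}[X_{1}]\textbf{E}[X_{2}]+\textbf{E}[X_{2}^{2}]=2\left( \textbf{E}[X^{2}]-\mu ^{2}\right) =2\sigma ^{2}. \]

Independence enters only through the cross term, where \(\textbf{E}[X_{1}X_{2}]=\textbf{E}[X_{1}]\textbf{E}[X_{2}]=\mu ^{2}\).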

From now on, consider the random variable X to be non-negative, and consider its mean \(\mu \) to be positive. The Coefficient of Variation (CV) of the random variable X is its “noise-to-signal” ratio: the ratio of its standard deviation to its mean, \(\sigma /\mu \). As the mean \(\mu \) is positive, the CV is well defined, and it admits non-negative values. In terms of the Euclidean distance between the two IID copies of the random variable X, the CV is formulated as follows:

\[ \frac{\sigma }{\mu }=\frac{1}{\sqrt{2}}\cdot \frac{\sqrt{\textbf{E}[|X_{1}-X_{2}|^{2}]}}{\textbf{E}[X]} \tag{1} \]

The CV is a quantitative measure of the statistical heterogeneity of X, and it has two principal properties. P1) The CV vanishes if and only if the random variable X is deterministic: \(X=\mu \), where the equality holds with probability one. P2) The CV is invariant with respect to scale transformations: the random variable sX, where s is a positive scale factor, has the same CV as the random variable X.
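
The pairwise reading of the CV can be checked numerically. The following is a minimal sketch, assuming that the random variable X is represented by a finite data sample (the list `data` is hypothetical) and that the two IID copies are independent draws, with replacement, from that sample. Under these assumptions, the pairwise-distance value should coincide with the ratio of the population standard deviation to the mean.

```python
import math
from itertools import product
from statistics import mean, pstdev


def cv_pairwise(values):
    """CV computed from the Euclidean distance between two IID draws.

    The two copies X1 and X2 are modeled as independent draws (with
    replacement) from `values`, so every ordered pair is equally likely.
    """
    mu = mean(values)
    # Mean Square Deviation between the two IID copies.
    msd = mean([(a - b) ** 2 for a, b in product(values, repeat=2)])
    # Half the MSD is the variance, so sqrt(msd / 2) is the standard deviation.
    return math.sqrt(msd / 2) / mu


data = [1.0, 2.0, 4.0, 8.0]  # hypothetical sample
print(cv_pairwise(data))            # pairwise-distance route
print(pstdev(data) / mean(data))    # textbook route: sigma / mu
```

Rescaling the sample, e.g. `cv_pairwise([3 * x for x in data])`, should leave the value unchanged, in line with property P2.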

4 Pedestrian perspective

As noted above, distance is commonly measured a-la Euclid. However, real-world distances may not be Euclidean. To illustrate non-Euclidean distances envisage Manhattan (above 14\(^{\text {th}}\) street), and consider a pedestrian that intends to walk from one junction to another. The Euclidean distance manifests the aerial distance between the two junctions. For the pedestrian—as he or she cannot march in a straight aerial line through buildings and walls—the distance between the junctions is not Euclidean. Rather, the actual distance for a Manhattan pedestrian is the ‘grid distance’ between the junctions, which is traversed by walking along streets and avenues.

The grid distance between two n-dimensional vectors, \(\textbf{v}=\left( v_{1},\ldots ,v_{n}\right) \) and \(\textbf{u}=\left( u_{1},\ldots ,u_{n}\right) \), is: \(\sum _{i=1}^{n}\left| v_{i}-u_{i}\right| \), the sum of the absolute differences between the vectors’ matching coordinates. Shifting from vectors to random variables—from \(\textbf{v}\) and \(\textbf{u }\) to two real-valued random variables V and U—the grid distance between the two real-valued random variables is: \(\textbf{E}[|V-U|]\), the Mean Absolute Deviation (MAD) between V and U. The MAD \(\textbf{E} [|V-U|] \) is a weighted-average analogue of the sum of absolute values \( \sum _{i=1}^{n}\left| v_{i}-u_{i}\right| \). In financial engineering, the MAD is applied in portfolio optimization (see, for example, [48,49,50]).
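
To make the contrast between the two distances concrete, here is a small sketch that computes both for a pair of junctions on a planar grid. The function names and the coordinates are illustrative assumptions.

```python
import math


def euclidean_distance(v, u):
    """Aerial distance: square root of the sum of squared coordinate differences."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(v, u)))


def grid_distance(v, u):
    """Pedestrian distance: sum of absolute coordinate differences."""
    return sum(abs(a - b) for a, b in zip(v, u))


v, u = (0, 0), (3, 4)  # two hypothetical junctions
print(euclidean_distance(v, u))  # 5.0
print(grid_distance(v, u))       # 7
```

The pedestrian walks 7 blocks, whereas the aerial line is only 5 blocks long.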

As described in Sect. 3 above, the statistical heterogeneity of a real-valued random variable of interest X can be quantified by \(\sqrt{ \textbf{E}[|X_{1}-X_{2}|^{2}]}\), the Euclidean distance between two IID copies of X. Shifting the perspective from ‘aerial’ to ‘pedestrian’, the Euclidean distance \(\sqrt{\textbf{E}[|X_{1}-X_{2}|^{2}]}\) shifts to the grid distance \(\textbf{E}[|X_{1}-X_{2}|]\). In turn—considering X to be a non-negative random variable with a positive mean—the CV of Eq. (1) shifts to:

\[ \gamma =\frac{1}{2}\cdot \frac{\textbf{E}[|X_{1}-X_{2}|]}{\textbf{E}[X]} \tag{2} \]

In the transition from Eq. (1) to Eq. (2), the multiplicative factor was changed from \(1/\sqrt{2}\) (appearing on the right-hand side of Eq. (1)) to 1/2 (appearing on the right-hand side of Eq. (2)); the reason for this technical change will soon become apparent.

Equation (2) is a formulation—one of many—of the Gini Coefficient of the random variable X [51,52,53]. Comparing Eq. (2) to Eq. (1), the Gini Coefficient is a grid-distance counterpart of the CV. In other words, while the CV is based on the MSD between two IID copies of X, the Gini Coefficient is based on the MAD between these copies.

The aforementioned principal properties of the CV are shared by the Gini Coefficient. P1) The Gini Coefficient vanishes if and only if the random variable X is deterministic: \(X=\mu \), where the equality holds with probability one. P2) The Gini Coefficient is invariant with respect to scale transformations: the random variable sX, where s is a positive scale factor, has the same Gini Coefficient as the random variable X.

The range of the Gini Coefficient is dramatically different from that of the CV. While the CV admits non-negative values, the values of the Gini Coefficient are restricted to the unit interval. Indeed, the triangle inequality implies that the absolute difference between the two IID copies of X is no-larger than their sum, \(\left| X_{1}-X_{2}\right| \le X_{1}+X_{2}\). Applying expectation to both sides of this triangle inequality implies that the MAD between the two IID copies is no-larger than twice the mean, \(\textbf{E}[|X_{1}-X_{2}|]\le 2\textbf{E}[X]\), and hence: Eq. (2) implies that the Gini Coefficient is no-larger than one.

So, on the one hand, the CV \(\sigma /\mu \) can be perceived as an unbounded ‘temperature of randomness’ of the random variable X—a quantitative gauge whose range is the non-negative half line: \(\sigma /\mu \ge 0\). On the other hand, the Gini Coefficient \(\gamma \) can be perceived as a bounded ‘score of randomness’ of the random variable X—a quantitative gauge whose range is the unit interval: \(0\le \gamma \le 1\). The multiplicative factor 1/2 in the right-hand side of Eq. (2) was set so as to calibrate the range of the Gini Coefficient to the unit interval.
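
The grid-distance formulation lends itself to a direct computation. The sketch below assumes that the Gini Coefficient is half the MAD between two IID copies divided by the mean, as described above, and that X is represented by a finite data sample from which the two copies are drawn independently with replacement. The function name and the sample values are hypothetical.

```python
from itertools import product
from statistics import mean


def gini_pairwise(values):
    """Gini Coefficient as half the MAD between two IID draws, over the mean."""
    mu = mean(values)
    # Mean Absolute Deviation between the two IID copies.
    mad = mean([abs(a - b) for a, b in product(values, repeat=2)])
    return mad / (2 * mu)


print(gini_pairwise([5.0, 5.0, 5.0, 5.0]))    # 0.0: a deterministic quantity
print(gini_pairwise([0.0, 0.0, 0.0, 10.0]))   # 0.75: one member holds everything
```

In the second sample the value is 0.75 rather than one, because two draws with replacement can both land on the single non-zero member; for a sample of n values concentrated on one member this construction gives (n-1)/n, which approaches the upper bound as n grows.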

5 Max and Min perspectives

The Gini-Coefficient formulation of Eq. (2) involves two IID copies, \( X_{1}\) and \(X_{2}\), of the random variable X. The Gini Coefficient shall now be formulated in terms of the maximum and the minimum of the two IID copies: the maximum random variable \(\vee =\max \left\{ X_{1},X_{2}\right\} \); and the minimum random variable \(\wedge =\min \left\{ X_{1},X_{2}\right\} \). To that end, we note two observations.

The first observation is that the sum of the two IID copies is equal to the sum of their maximum and their minimum, \(X_{1}+X_{2}=\vee +\wedge \). In turn, applying expectation to both sides of this equality implies that: twice the mean of X is equal to the sum of the means of the maximum and the minimum, \(2\textbf{E}[X]=\textbf{E}[\vee ]+\) \(\textbf{E}[\wedge ]\).

The second observation is that the absolute difference between the two IID copies is equal to the difference between their maximum and their minimum, \( \left| X_{1}-X_{2}\right| =\vee -\wedge \). In turn, applying expectation to both sides of this equality implies that: the MAD between the two IID copies is equal to the difference between the means of the maximum and the minimum, \(\textbf{E}[|X_{1}-X_{2}|]=\textbf{E}[\vee ]-\) \(\textbf{E} [\wedge ]\). In turn, Eq. (2) implies that \(2\gamma \textbf{E}[X]= \textbf{E}[\vee ]-\) \(\textbf{E}[\wedge ]\).

Summing the equality \(2\textbf{E}[X]=\textbf{E}[\vee ]+\) \(\textbf{E}[\wedge ] \) and the equality \(2\gamma \textbf{E}[X]=\textbf{E}[\vee ]-\) \(\textbf{E} [\wedge ]\) yields \(2(1+\gamma )\textbf{E}[X]=2\textbf{E}[\vee ]\). Consequently, the following ‘maximum formulation’ of the Gini Coefficient is obtained:

\[ \gamma =\frac{\textbf{E}[\max \left\{ X_{1},X_{2}\right\} ]-\textbf{E}[X]}{\textbf{E}[X]} \tag{3} \]

Equation (3) manifests the Gini Coefficient as a relative ‘overshoot’: that of the mean of the maximum \(\textbf{E}[\max \left\{ X_{1},X_{2}\right\} ]\), above the ‘benchmark’ mean \(\textbf{E}[X]\).

Subtracting the equality \(2\gamma \textbf{E}[X]=\textbf{E}[\vee ]-\) \(\textbf{ E}[\wedge ]\) from the equality \(2\textbf{E}[X]=\textbf{E}[\vee ]+\) \(\textbf{E }[\wedge ]\) yields \(2(1-\gamma )\textbf{E}[X]=2\textbf{E}[\wedge ]\). Consequently, the following ‘minimum formulation’ of the Gini Coefficient is obtained:

\[ \gamma =\frac{\textbf{E}[X]-\textbf{E}[\min \left\{ X_{1},X_{2}\right\} ]}{\textbf{E}[X]} \tag{4} \]

Eq. (4) manifests the Gini Coefficient as a relative ‘undershoot’: that of the mean of the minimum \(\textbf{E}[\min \left\{ X_{1},X_{2}\right\} ]\), below the ‘benchmark’ mean \(\textbf{E}[X]\). The minimum formulation of Eq. (4) played a key role in the mean-performance analysis of exponential restart with branching [34].

In addition to the maximum and minimum formulations of Eqs. (3) and (4), the equality \(2\gamma \textbf{E}[X]=\textbf{E}[\vee ]-\textbf{E}[\wedge ]\) also yields the following ‘maximum-minimum formulation’:

\[ \gamma =\frac{\textbf{E}[\max \left\{ X_{1},X_{2}\right\} ]-\textbf{E}[\min \left\{ X_{1},X_{2}\right\} ]}{2\textbf{E}[X]} \tag{5} \]

Eq. (5) is the arithmetic average of Eqs. (3) and (4). Namely, summing up Eqs. (3) and (4), and then dividing their sum by two, yields Eq. (5).

Underlying the formulations of this section is a sample \(\left\{ X_{1},X_{2}\right\} \) comprising two IID copies of the random variable X. The sample’s maximum is \(\max \left\{ X_{1},X_{2}\right\} \), the sample’s minimum is \(\min \left\{ X_{1},X_{2}\right\} \), and the sample’s range is \( \max \left\{ X_{1},X_{2}\right\} -\min \left\{ X_{1},X_{2}\right\} \); the numerator on the right-hand side of Eq. (5) is the mean of the sample’s range. This sample, whose size is 2, can be generalized to a sample of an arbitrary size: a sample \(\left\{ X_{1},\ldots ,X_{n}\right\} \) comprising n IID copies of the random variable X (where n is an integer that is larger than one). Doing so, and properly calibrating Eqs. (3)–(5), yields generalizations of the Gini Coefficient [54].
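
The agreement between the formulations of this section can be verified exactly on a small discrete distribution. The sketch below assumes the relations derived above: the relative overshoot of the mean of the maximum, the relative undershoot of the mean of the minimum, and the mean range divided by twice the mean. The probability mass function `pmf` is a hypothetical example, and exact rational arithmetic is used to avoid rounding.

```python
from fractions import Fraction
from itertools import product

# Hypothetical discrete distribution of X: value -> probability.
pmf = {1: Fraction(1, 2), 2: Fraction(1, 4), 5: Fraction(1, 4)}


def expect(func):
    """E[func(X1, X2)] for two IID copies X1, X2 distributed according to pmf."""
    return sum(p * q * func(a, b)
               for (a, p), (b, q) in product(pmf.items(), repeat=2))


mean_x = sum(x * p for x, p in pmf.items())
mean_max = expect(max)
mean_min = expect(min)

gini_mad = expect(lambda a, b: abs(a - b)) / (2 * mean_x)  # grid-distance form
gini_max = (mean_max - mean_x) / mean_x                    # relative overshoot
gini_min = (mean_x - mean_min) / mean_x                    # relative undershoot
gini_range = (mean_max - mean_min) / (2 * mean_x)          # mean range form

print(gini_mad, gini_max, gini_min, gini_range)  # 13/36 13/36 13/36 13/36
```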

6 Examples

The ‘maximum formulation’ of Eq. (3) and the ‘minimum formulation’ of Eq. (4) turn out to be quite handy for calculating the Gini Coefficient. We shall now demonstrate the use of Eqs. (3) and (4) via four examples of statistical distributions (of the random variable X): Frechet, Weibull, Inverted Pareto, and Pareto.

The Frechet and Weibull distributions emerge via Extreme Value Theory [55,56,57]. Specifically, the Fisher-Tippett-Gnedenko theorem of Extreme Value Theory asserts that: the universal scaling limits—which are positive valued—of the maxima and minima of IID random variables are, respectively, Frechet and Weibull.

Many non-negative quantities happen to display power-law statistics either for small values, or for large values [58,59,60]. Pareto distributions are the paradigmatic models for such behaviors [61, 62]. Specifically, the Inverted Pareto distribution models power-law statistics in the vicinity of zero. And, the Pareto distribution models power-law statistics in the vicinity of infinity.

In what follows \(F\left( x\right) =\Pr \left( X\le x\right) \) (\(x\ge 0\)) denotes the cumulative distribution function of the random variable X. And, \(\bar{F}\left( x\right) =\Pr \left( X>x\right) \) (\(x\ge 0\)) denotes the tail distribution function of the random variable X. As above, \(X_{1}\) and \(X_{2}\) are two IID copies of the random variable X. Each of the four examples—Frechet, Weibull, Inverted Pareto, and Pareto—has two parameters: a positive scale s, and a positive exponent \(\epsilon \).

Frechet. A Frechet random variable X is characterized by the cumulative distribution function \(F\left( x\right) =\exp [-(s/x)^{\epsilon }] \), and it has a finite mean if and only if the Frechet exponent is in the range \(\epsilon >1\). As the cumulative distribution function of the maximum random variable \(\vee =\max \left\{ X_{1},X_{2}\right\} \) is \(F\left( x\right) ^{2}\) (see the Appendix), note that: the maximum random variable \( \vee \) is Frechet with scale \(2^{1/\epsilon }s\) and with exponent \(\epsilon \). Consequently, the maximum random variable \(\vee \) is equal in law to the random variable \(2^{1/\epsilon }X\), and hence (for \(\epsilon >1\)): \(\textbf{E }[\max \left\{ X_{1},X_{2}\right\} ]=2^{1/\epsilon }\textbf{E}[X]\). Substituting this relation into Eq. (3) yields the Frechet Gini Coefficient \(\gamma =2^{1/\epsilon }-1\) (for \(\epsilon >1\)).

Weibull. A Weibull random variable X is characterized by the tail distribution function \(\bar{F}\left( x\right) =\exp [-(x/s)^{\epsilon }]\), and it always has a finite mean. As the tail distribution function of the minimum random variable \(\wedge =\min \left\{ X_{1},X_{2}\right\} \) is \(\bar{ F}\left( x\right) ^{2}\) (see the Appendix), note that: the minimum random variable \(\wedge \) is Weibull with scale \(2^{-1/\epsilon }s\) and with exponent \(\epsilon \). Consequently, the minimum random variable \(\wedge \) is equal in law to the random variable \(2^{-1/\epsilon }X\), and hence: \(\textbf{ E}[\min \left\{ X_{1},X_{2}\right\} ]=2^{-1/\epsilon }\textbf{E}[X]\). Substituting this relation into Eq. (4) yields the Weibull Gini Coefficient \(\gamma =1-2^{-1/\epsilon }\) (for \(\epsilon >0\)).

Inverted Pareto. An Inverted-Pareto random variable X is characterized by the cumulative distribution function \(F\left( x\right) =(x/s)^{\epsilon }\) (\(0\le x\le s\)), and it always has a finite mean, which is \(\textbf{E}[X]=\frac{\epsilon }{\epsilon +1}s\). As the cumulative distribution function of the maximum random variable \(\vee =\max \left\{ X_{1},X_{2}\right\} \) is \(F\left( x\right) ^{2}\) (see the Appendix), note that: the maximum random variable \(\vee \) is Inverted Pareto with scale s and with exponent \(2\epsilon \). Consequently, the mean of the maximum random variable is \(\textbf{E}[\max \left\{ X_{1},X_{2}\right\} ]=\frac{2\epsilon }{2\epsilon +1}s\). Substituting the two Inverted Pareto means into Eq. (3) yields the Inverted Pareto Gini Coefficient \(\gamma =\frac{1}{2\epsilon +1} \) (for \(\epsilon >0\)).

Pareto. A Pareto random variable X is characterized by the tail distribution function \(\bar{F}\left( x\right) =(s/x)^{\epsilon }\) (\(x\ge s\)), and it has a finite mean if and only if the Pareto exponent is in the range \(\epsilon >1\), in which case \(\textbf{E}[X]=\frac{\epsilon }{\epsilon -1}s\). As the tail distribution function of the minimum random variable \(\wedge =\min \left\{ X_{1},X_{2}\right\} \) is \(\bar{F}\left( x\right) ^{2}\) (see the Appendix), note that: the minimum random variable \( \wedge \) is Pareto with scale s and with exponent \(2\epsilon \). Consequently, for \(\epsilon >1\), the mean of the minimum random variable is \( \textbf{E}[\min \left\{ X_{1},X_{2}\right\} ]=\frac{2\epsilon }{2\epsilon -1}s\). Substituting the two Pareto means into Eq. (4) yields the Pareto Gini Coefficient \(\gamma =\frac{1}{2\epsilon -1}\) (for \(\epsilon >1\)).

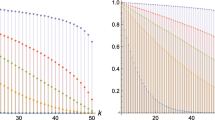

In accord with its scale-invariance property, the Gini Coefficient \(\gamma \)—in all the four examples—does not depend on the scale parameter s. In all the four examples, the Gini Coefficient \(\gamma \) is a decreasing function of the exponent \(\epsilon \). Also, in all the four examples, the Gini Coefficient attains its ‘zero score’ value \(\gamma =0\) in the exponent limit \(\epsilon \rightarrow \infty \). The Gini Coefficient attains its ‘unit score’ value \(\gamma =1\) in the following exponent limits: \(\epsilon \rightarrow 1\) in the Frechet and Pareto examples; and \(\epsilon \rightarrow 0\) in the Weibull and Inverted Pareto examples.
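
The four closed-form results can be collected in a few lines of code, which makes the monotonicity and the limiting behavior easy to inspect. This is a minimal sketch; the function names are illustrative, and the argument `eps` stands for the exponent \(\epsilon \) (the scale s does not appear, in accord with scale invariance).

```python
def gini_frechet(eps):
    """Frechet Gini Coefficient; requires eps > 1 for a finite mean."""
    return 2 ** (1 / eps) - 1


def gini_weibull(eps):
    """Weibull Gini Coefficient; valid for any eps > 0."""
    return 1 - 2 ** (-1 / eps)


def gini_inverted_pareto(eps):
    """Inverted Pareto Gini Coefficient; valid for any eps > 0."""
    return 1 / (2 * eps + 1)


def gini_pareto(eps):
    """Pareto Gini Coefficient; requires eps > 1 for a finite mean."""
    return 1 / (2 * eps - 1)


# Tabulate the four coefficients over a few exponents above one.
for eps in (1.5, 2.0, 5.0, 50.0):
    print(eps,
          round(gini_frechet(eps), 4),
          round(gini_weibull(eps), 4),
          round(gini_inverted_pareto(eps), 4),
          round(gini_pareto(eps), 4))
```

With the exponent set to one, the Weibull distribution is exponential, and `gini_weibull(1.0)` evaluates to one half.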

7 Distribution perspective

The Gini Coefficient shall now be formulated in terms of the two distribution functions of the random variable X: the cumulative distribution function \(F\left( x\right) =\Pr \left( X\le x\right) \) (\(x\ge 0\)); and the tail distribution function \(\bar{F}\left( x\right) =\Pr \left( X>x\right) \) (\(x\ge 0\)). Evidently, these two distribution functions sum up to one, \(F\left( x\right) +\bar{F}\left( x\right) =1\). As above, \(X_{1}\) and \(X_{2}\) denote two IID copies of the random variable X.

The mean of a general non-negative random variable is equal to the integral of its tail distribution function. Hence, for the random variable X, the mean formula \(\textbf{E}[X]=\int _{0}^{\infty }\bar{F}\left( x\right) dx\) holds. As the tail distribution function of the minimum random variable \( \min \left\{ X_{1},X_{2}\right\} \) is \(\bar{F}\left( x\right) ^{2}\) (see the Appendix), also the following mean formula holds: \(\textbf{E}[\min \left\{ X_{1},X_{2}\right\} ]=\int _{0}^{\infty }\bar{F}\left( x\right) ^{2}dx\). Substituting these two mean formulae into Eq. (4), and using a bit of algebra, yields the following distribution-functions formulation of the Gini Coefficient:

\[ \gamma =\frac{\int _{0}^{\infty }F\left( x\right) \bar{F}\left( x\right) dx}{\textbf{E}[X]} \tag{6} \]

The distribution-functions formulation of Eq. (6) was derived via Eq. (4). An alternative derivation of Eq. (6) is via Eq. (3). To that end note that the mean formula for the random variable X can be re-written in the form \(\textbf{E}[X]=\int _{0}^{\infty }[1-F\left( x\right) ]dx\). Also, as the cumulative distribution function of the maximum random variable \(\max \left\{ X_{1},X_{2}\right\} \) is \(F\left( x\right) ^{2}\) (see the Appendix), the following mean formula holds: \(\textbf{E}[\max \left\{ X_{1},X_{2}\right\} ]=\int _{0}^{\infty }[1-F\left( x\right) ^{2}]dx\). Substituting these two mean formulae into Eq. (3), and using a bit of algebra, yields Eq. (6).

In the transition from Eq. (1) to Eq. (2), the Euclidean-geometry perspective shifted to the grid-geometry perspective. Equation (6) goes back to the Euclidean-geometry perspective, albeit in a space of functions: real-valued functions that are defined over the non-negative half-line. Indeed, the Euclidean distance is based on the Euclidean inner product. Namely, in the n-dimensional space, the Euclidean inner product of the vectors \(\textbf{v}=\left( v_{1},\ldots ,v_{n}\right) \) and \(\textbf{u}=\left( u_{1},\ldots ,u_{n}\right) \) is \( \sum _{i=1}^{n}v_{i}u_{i}\), the sum of the products of the vectors’ matching coordinates. Analogously, in the space of functions (over the non-negative half-line, \(x\ge 0\)), the Euclidean inner product of the functions \(\varphi \left( x\right) \) and \(\psi \left( x\right) \) is the integral \(\int _{0}^{\infty }\varphi \left( x\right) \psi \left( x\right) dx\). Hence, the numerator appearing on the right-hand side of Eq. (6) manifests the Euclidean inner product of the distribution functions \(F\left( x\right) \) and \(\bar{F}\left( x\right) \).
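
The distribution-functions route can also be followed numerically. The sketch below assumes that the Gini Coefficient equals the integral of \(F\left( x\right) \bar{F}\left( x\right) \) divided by the integral of \(\bar{F}\left( x\right) \) (the mean), and it approximates both integrals with a plain midpoint rule on a truncated interval. The function name, the truncation point `upper`, and the number of steps are illustrative choices, and the Weibull case with unit scale is used as a test because its closed form is known from Sect. 6.

```python
import math


def gini_from_tail(tail, upper, steps=200_000):
    """Midpoint-rule estimate of (integral of F * F_bar) / (integral of F_bar).

    `tail` is the tail distribution function F_bar, and the integrals are
    truncated to the interval [0, upper], which should cover almost all mass.
    """
    h = upper / steps
    numerator = 0.0
    denominator = 0.0
    for k in range(steps):
        t = tail((k + 0.5) * h)      # F_bar at the midpoint of the k-th cell
        numerator += (1.0 - t) * t   # F * F_bar
        denominator += t             # F_bar, whose integral is the mean
    # The common step width h cancels in the ratio.
    return numerator / denominator


eps = 2.0  # Weibull exponent, with scale s = 1


def weibull_tail(x):
    return math.exp(-x ** eps)


estimate = gini_from_tail(weibull_tail, upper=10.0)
closed_form = 1 - 2 ** (-1 / eps)
print(estimate, closed_form)
```

The two printed values should agree to several decimal places; heavier tails, such as Pareto, would need a much larger truncation point or a change of variables.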

8 Probabilistic perspectives

As explained above, the range of the Gini Coefficient is the unit interval, \( 0\le \gamma \le 1\). Consequently, one may suspect that perhaps the Gini Coefficient manifests a probability. It will be shown now that this probabilistic intuition is correct, and that the Gini Coefficient indeed admits probabilistic formulations.

The derivation of Eq. (6) used the formula \(\mu =\int _{0}^{\infty } \bar{F}\left( x\right) dx\) for the mean of the random variable X. This mean formula implies that \(f_{res}\left( x\right) =\frac{1}{\mu }\bar{F} \left( x\right) \) (\(x\ge 0\)) is a density function: its values are non-negative, and its integral sums up to one. The density function \( f_{res}\left( x\right) \) governs the statistical distribution of a non-negative random variable \(X_{res}\) that is commonly termed the “residual lifetime” of the random variable X (the renewal meaning of \(X_{res}\) shall be described in Sect. 9 below).

Using the density function \(f_{res}\left( x\right) =\frac{1}{\mu }\bar{F} \left( x\right) \), Eq. (6) can be re-written in the form \(\gamma =\int _{0}^{\infty }F\left( x\right) f_{res}\left( x\right) dx\). In turn, this form and Eq. (14) imply the following probabilistic formulation of the Gini Coefficient:

\[ \gamma =\Pr \left( X\le X_{res}\right) \tag{7} \]

where the random variable X and its residual lifetime \(X_{res}\) are mutually independent. Namely, Eq. (7) manifests the Gini Coefficient as the probability of the event \(\left\{ X\le X_{res}\right\} \).
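
As a quick sanity check of this probabilistic reading, consider an exponential random variable X with rate \(\lambda \). This is an illustrative choice; it coincides with the Weibull example of Sect. 6 with exponent \(\epsilon =1\) and scale \(s=1/\lambda \). Here \(\bar{F}\left( x\right) =e^{-\lambda x}\) and \(\mu =1/\lambda \), so the residual lifetime has the same distribution as X itself:

\[ f_{res}\left( x\right) =\frac{1}{\mu }\bar{F}\left( x\right) =\lambda e^{-\lambda x},\qquad \Pr \left( X\le X_{res}\right) =\int _{0}^{\infty }\left( 1-e^{-\lambda x}\right) \lambda e^{-\lambda x}dx=1-\frac{1}{2}=\frac{1}{2}. \]

This agrees with the Weibull Gini Coefficient \(1-2^{-1/\epsilon }\) evaluated at \(\epsilon =1\).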

Assuming that the cumulative distribution function \(F\left( x\right) \) is smooth, denote by \(f\left( x\right) =F^{\prime }\left( x\right) \) the density function of the random variable X. In terms of the density function \(f\left( x\right) \), the mean of X is given by the formula \(\mu =\int _{0}^{\infty }xf\left( x\right) dx\). This mean formula implies that \( f_{tot}\left( x\right) =\frac{1}{\mu }xf\left( x\right) \) (\(x\ge 0\)) is a density function: its values are non-negative, and its integral sums up to one. The density function \(f_{tot}\left( x\right) \) governs the statistical distribution of a non-negative random variable \(X_{tot}\) that Ward Whitt termed the “total lifetime” of the random variable X (the renewal and the socioeconomic meanings of \(X_{tot}\) shall be described, respectively, in Sects. 9 and 11 below).

Consider, as above, the maximum random variable \(\vee =\max \left\{ X_{1},X_{2}\right\} \), where \(X_{1}\) and \(X_{2}\) are two IID copies of the random variable X. As the cumulative distribution function of the maximum random variable \(\vee \) is \(F\left( x\right) ^{2}\) (see the Appendix), its density function is \(2F\left( x\right) f\left( x\right) \). Consequently, for the maximum random variable \(\vee \), the following mean formula holds: \( \textbf{E}[\vee ]=\int _{0}^{\infty }x[2F\left( x\right) f\left( x\right) ]dx\).

Using the density function \(f_{tot}\left( x\right) =\frac{1}{\mu }xf\left( x\right) \), the mean formula for the maximum random variable \(\vee \) implies that \(\frac{1}{\mu }\textbf{E}[\vee ]=2\int _{0}^{\infty }F\left( x\right) f_{tot}\left( x\right) dx\). Thus, Eq. (3) can be re-written in the form \(\gamma =2\int _{0}^{\infty }F\left( x\right) f_{tot}\left( x\right) dx-1 \). In turn, this form and Eqs. (14) and (16) imply the following probabilistic formulation of the Gini Coefficient:

\[ \gamma =\Pr \left( X\le X_{tot}\right) -\Pr \left( X>X_{tot}\right) , \qquad (8) \]

where the random variable X and its total lifetime \(X_{tot}\) are mutually independent. Namely, Eq. (8) manifests the Gini Coefficient as the extent by which the probability of the event \(\left\{ X\le X_{tot}\right\} \) is greater than the probability of the complementary event \(\left\{ X>X_{tot}\right\} \).
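As a worked illustration of the total-lifetime formulation, consider the exponential distribution with mean \(\mu \). This choice is an assumption made here only for the sake of a closed-form example. The calculation below evaluates \(\Pr \left( X\le X_{tot}\right) \) directly and recovers \(\gamma =\frac{1}{2}\), in agreement with the Weibull value \(1-2^{-1/\epsilon }\) at \(\epsilon =1\).

```latex
% Illustrative assumption: X is exponential with mean \mu
\begin{align*}
F(x) &= 1 - e^{-x/\mu}, \qquad f(x) = \tfrac{1}{\mu} e^{-x/\mu}, \\
f_{tot}(x) &= \tfrac{1}{\mu}\, x f(x) = \tfrac{x}{\mu^{2}}\, e^{-x/\mu}, \\
\Pr(X \le X_{tot}) &= \int_{0}^{\infty} F(x)\, f_{tot}(x)\, dx
  = 1 - \tfrac{1}{\mu^{2}} \int_{0}^{\infty} x\, e^{-2x/\mu}\, dx
  = 1 - \tfrac{1}{4} = \tfrac{3}{4}, \\
\gamma &= \Pr(X \le X_{tot}) - \Pr(X > X_{tot}) = \tfrac{3}{4} - \tfrac{1}{4} = \tfrac{1}{2}.
\end{align*}
```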

9 Renewal interpretations

The probabilistic formulations of Eq. (7) and of Eq. (8) have renewal meanings, i.e. interpretations that are based on a renewal-process setting. These interpretations shall now be described.

The renewal process that is formed by the random variable X is a sequence of ‘renewal points’, over the non-negative half-line, that is constructed as follows [63,64,65]: initiating from the origin, the gaps between the consecutive renewal points are IID copies \(\left\{ X_{1},X_{2},X_{3},\ldots \right\} \) of the random variable X. Specifically: point zero is \(P_{0}=0\); point one is \(P_{1}=X_{1}\); point two is \(P_{2}=X_{1}+X_{2}\); point three is \(P_{3}=X_{1}+X_{2}+X_{3}\); and so on.

Placing an observer at a random point along the interval \([P_{0},P_{n}]\), set the two following distances: \(L_{n}\) is the distance between the observer and the nearest renewal point to its left; and \(R_{n}\) is the distance between the observer and the nearest renewal point to its right. Also, set \(\Delta _{n}=L_{n}+R_{n}\) to be the inter-renewal distance, i.e.: the distance between the renewal point to the left of the observer, and the renewal point to the right of the observer. In other words, the inter-renewal distance \(\Delta _{n}\) is the length of the inter-renewal gap in which the observer was placed.

Taking the limit \(n\rightarrow \infty \), the theory of renewal processes yields the following limit-law results regarding the three distances [65]. (1) The left distance \(L_{n}\) converges, in law, to the residual lifetime \(X_{res}\). (2) The right distance \(R_{n}\) converges, in law, to the residual lifetime \(X_{res}\). (3) The inter-renewal distance \(\Delta _{n}\) converges, in law, to the total lifetime \(X_{tot}\).

So, for the ‘random observer’ (in the limit \(n\rightarrow \infty \)): the distance to the nearest renewal point to the left, and the distance to the nearest renewal point to the right, are both equal in law to the residual lifetime \(X_{res}\). On the other hand, for an observer that stands at a non-zero renewal point (\(n\ne 0\)): the distance to the nearest renewal point to the left, and the distance to the nearest renewal point to the right, are both equal in law to the random variable X. Thus, looking either to the left or to the right, the event \(\left\{ X\le X_{res}\right\} \) that appears in Eq. (7) manifests the following scenario: the ‘renewal observer’ observes a distance that is no larger than what the ‘random observer’ observes.

Also, for the ‘random observer’ (in the limit \(n\rightarrow \infty \)): the inter-renewal distance is equal in law to the total lifetime \(X_{tot}\). On the other hand, for an observer that stands at a non-zero renewal point (\( n\ne 0\)): the inter-renewal distance, either to the left or to the right, is equal in law to the random variable X. Thus, the events that appear in Eq. (8) are interpreted as follows: \(\left\{ X\le X_{tot}\right\} \) is the scenario in which the ‘renewal observer’ observes an inter-renewal distance that is no larger than what the ‘random observer’ observes; and \( \left\{ X>X_{tot}\right\} \) is the complementary scenario.

Renewal processes are often presented in a temporal context. To illustrate this context, assume that the renewal points represent the arrival times of buses (of a certain bus line) at a bus station. In this illustration the aforementioned ‘random observer’ is a passenger who gets to the station at a random time point, and the aforementioned ‘renewal observer’ is a passenger who gets to the station just after a bus left the station. The waiting times until a bus arrives are as follows: for the ‘random passenger’ the waiting time is equal in law to \(X_{res}\); and for the ‘just-missed-a-bus passenger’ the waiting time is equal in law to X. Hence, with regard to the temporal illustration: Eq. (7) manifests the probability that the waiting time of the ‘just-missed-a-bus passenger’ is no larger than that of the ‘random passenger’.

Continuing with the temporal illustration, consider the inter-arrival durations, i.e. the temporal durations between consecutive bus arrivals. The inter-arrival durations are as follows: for the ‘random passenger’ the inter-arrival duration is equal in law to \(X_{tot}\); and for the ‘just-missed-a-bus passenger’ the inter-arrival duration is equal in law to X. Hence, with regard to the temporal illustration, Eq. (8) manifests the extent by which the probability of the event {the ‘just-missed-a-bus passenger’ encounters an inter-arrival duration that is no-larger than that of the ‘random passenger’} is greater than the probability of the complementary event.
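The ‘random observer’ of this section can be simulated directly. The sketch below is illustrative only: it assumes exponential gaps with unit mean (so that \(X_{res}\) has mean 1 and \(X_{tot}\) has mean \(\textbf{E}[X^{2}]/\mu =2\)), and the function name, sample sizes, and seed are arbitrary choices. It builds the renewal points \(P_{1},\ldots ,P_{n}\), drops observers uniformly on \([P_{0},P_{n}]\), and averages the left distance, the right distance, and the inter-renewal distance.

```python
import bisect
import itertools
import random


def observe_renewal(n_gaps=200_000, n_observers=50_000, seed=12345):
    """Average left, right and inter-renewal distances seen by random observers.

    Gaps are exponential with unit mean (an illustrative assumption).
    Returns (mean_left, mean_right, mean_gap).
    """
    rng = random.Random(seed)
    gaps = [rng.expovariate(1.0) for _ in range(n_gaps)]
    points = list(itertools.accumulate(gaps))  # P_1, ..., P_n (P_0 = 0 is implicit)
    total = points[-1]

    sum_left = sum_right = sum_gap = 0.0
    for _ in range(n_observers):
        t = rng.uniform(0.0, total)
        # index of the first renewal point strictly to the right of t
        k = min(bisect.bisect_right(points, t), n_gaps - 1)
        left_point = points[k - 1] if k > 0 else 0.0
        sum_left += t - left_point
        sum_right += points[k] - t
        sum_gap += gaps[k]  # length of the gap that contains the observer

    return (sum_left / n_observers,
            sum_right / n_observers,
            sum_gap / n_observers)


mean_left, mean_right, mean_gap = observe_renewal()
print(mean_left, mean_right, mean_gap)
```

With unit-mean exponential gaps the first two averages should be close to 1 and the third close to 2: the gap that contains a randomly placed observer is, on average, twice as long as a typical gap.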

10 Hazard perspective

The Gini-Coefficient formulation of Eq. (7) ‘contests’ the random variable X against its residual lifetime \(X_{res}\). Continuing with this approach, this section will contest the corresponding cumulative distribution functions: \(F\left( x\right) =\Pr \left( X\le x\right) \) and \( F_{res}\left( x\right) =\Pr (X_{res}\le x)\) (\(x\ge 0\)). Throughout this section \(Q_{res}\left( u\right) =F_{res}^{-1}\left( u\right) \) (\(0\le u\le 1\)) denotes the quantile function of the residual lifetime \(X_{res}\) (Footnote 3).

The derivation of Eq. (7) involved the Gini-Coefficient form \(\gamma =\int _{0}^{\infty }F\left( x\right) f_{res}\left( x\right) dx\). Applying the change-of-variables \(x\mapsto u=F_{res}\left( x\right) \) to this form yields the following integral formulation of the Gini Coefficient:

\[ \gamma =\int _{0}^{1}K\left( u\right) du, \qquad (9) \]

where \(K\left( u\right) =F[Q_{res}\left( u\right) ]\) (\(0\le u\le 1\)). Equation (9) manifests the Gini Coefficient as the area under the curve \( K\left( u\right) \).

As \(F\left( x\right) \) and \(F_{res}\left( x\right) \) are cumulative distribution functions, the graph of the curve \(K\left( u\right) \) resides in the unit square—where it is non-decreasing from the square’s ‘bottom-left corner’ \(K\left( 0\right) =0\) to the square’s ‘top-right corner’ \(K\left( 1\right) =1\). The curve \(K\left( u\right) \) coincides with the diagonal line of the unit square if and only if the random variable X is equal in law to its residual lifetime \(X_{res}\), i.e.: \(K\left( u\right) \equiv u\Leftrightarrow F\left( x\right) \equiv F_{res}\left( x\right) \). In effect, the curve \(K\left( u\right) \) provides a graphic depiction of the statistical divergence between the cumulative distribution functions \( F\left( x\right) \) and \(F_{res}\left( x\right) \).

As described in Sect. 8, the density function of the residual lifetime \(X_{res}\) is \(f_{res}\left( x\right) =\frac{1}{\mu }\bar{F}\left( x\right) \). Hence, assuming that the random variable X has a density function \(f\left( x\right) =F^{\prime }\left( x\right) \), the derivative of the curve \(K\left( u\right) =F[Q_{res}\left( u\right) ]\) is \(K^{\prime }\left( u\right) =f[Q_{res}\left( u\right) ]/f_{res}[Q_{res}\left( u\right) ] \). In turn, setting \(h\left( x\right) =f\left( x\right) /\bar{F}\left( x\right) \) implies that: \(K^{\prime }\left( u\right) =\mu \cdot h\left[ Q_{res}\left( u\right) \right] \). The function \(h\left( x\right) =f\left( x\right) /\bar{F}\left( x\right) \) is termed the “hazard function” and the “failure rate” of the random variable X, and it is widely applied in survival analysis [66,67,68] and in reliability engineering [69,70,71].

The hazard function \(h\left( x\right) \) has the following temporal meaning. Consider X to be the time epoch at which a random event of interest occurs (the underlying time axis being the non-negative half line). Given the information that the event did not occur up to the positive time point t, the value \(h\left( t\right) \) is the likelihood that the event will occur right at the time point t. More precisely, for a small time increment dt, the product \(h\left( t\right) dt\) approximates the conditional probability that the event occurs during the time interval \((t,t+dt]\). In case the event of interest is the failure of a certain system, the hazard function manifests the system’s “failure rate”: \(h\left( t\right) \) is the likelihood that the system will fail at the time point t, given the information that the system did not fail up to the time point t.

Using the hazard function \(h\left( x\right) \), reliability engineering distinguishes two key classes of random variables [69]: the “increasing failure rate” (IFR) class, which is characterized by an increasing hazard function \(h\left( x\right) \); and the “decreasing failure rate” (DFR) class, which is characterized by a decreasing hazard function \(h\left( x\right) \). The IFR class corresponds to systems whose likelihood of failure increases with their age (e.g., machines, cars, planes, and our own human bodies). The DFR class corresponds to things that exhibit the following ‘momentum behavior’: the longer they are in use, the greater the likelihood that they will continue to be in use (e.g., the Gregorian calendar, the metric system, the wheel, and cutlery).

If the hazard function \(h\left( x\right) \) is increasing then so is the derivative \(K^{\prime }\left( u\right) =\mu \cdot h\left[ Q_{res}\left( u\right) \right] \), and hence the curve \(K\left( u\right) \) is convex. In turn, the convexity of the curve \(K\left( u\right) \) implies that its graph resides below the diagonal line of the unit square, \(K\left( u\right) <u\) (\( 0<u<1\)). Thus, as the area below the diagonal line of the unit square is half, Eq. (9) implies that: for the IFR class—the Gini Coefficient is smaller than half, \(\gamma <\frac{1}{2}\).

If the hazard function \(h\left( x\right) \) is decreasing then so is the derivative \(K^{\prime }\left( u\right) =\mu \cdot h\left[ Q_{res}\left( u\right) \right] \), and hence the curve \(K\left( u\right) \) is concave. In turn, the concavity of the curve \(K\left( u\right) \) implies that its graph resides above the diagonal line of the unit square, \(K\left( u\right) >u\) (\( 0<u<1\)). Thus, as the area below the diagonal line of the unit square is half, Eq. (9) implies that: for the DFR class—the Gini Coefficient is greater than half, \(\gamma >\frac{1}{2}\).

The derivative \(K^{\prime }\left( u\right) =\mu \cdot h\left[ Q_{res}\left( u\right) \right] \) implies that the curve \(K\left( u\right) \) coincides with the diagonal line of the unit square if and only if the hazard function \( h\left( x\right) \) is flat, i.e.: \(K\left( u\right) \equiv u\Leftrightarrow h\left( x\right) \equiv \frac{1}{\mu }\). In turn, flat hazard functions characterize the exponential distribution. So, from a hazard perspective, the Gini Coefficient is a quantitative gauge that measures the deviation of IFR and DFR distributions from the ‘benchmark’ exponential distribution.
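As a worked illustration of the IFR case, consider a random variable X that is uniformly distributed over the unit interval. This example is an assumption introduced here for concreteness. Its hazard function is increasing, the resulting curve \(K\left( u\right) \) is convex and lies below the diagonal, and the area under the curve is the uniform Gini Coefficient \(\frac{1}{3}<\frac{1}{2}\).

```latex
% Illustrative assumption: X is uniform on [0,1]
\begin{align*}
F(x) &= x, \qquad \bar{F}(x) = 1 - x, \qquad \mu = \tfrac{1}{2}, \qquad
h(x) = \frac{f(x)}{\bar{F}(x)} = \frac{1}{1-x} \quad (0 \le x < 1), \\
f_{res}(x) &= \tfrac{1}{\mu}\bar{F}(x) = 2(1-x), \qquad
F_{res}(x) = 1 - (1-x)^{2}, \qquad
Q_{res}(u) = 1 - \sqrt{1-u}, \\
K(u) &= F[Q_{res}(u)] = 1 - \sqrt{1-u}, \qquad
K'(u) = \frac{1}{2\sqrt{1-u}} = \mu \cdot h[Q_{res}(u)], \\
\gamma &= \int_{0}^{1} K(u)\, du = 1 - \tfrac{2}{3} = \tfrac{1}{3}.
\end{align*}
```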

Setting \(\hat{\gamma }=2\gamma -1\), and using Eq. (9) and the integral \(2\int _{0}^{1}udu=1\), yields

\[ \hat{\gamma }=2\int _{0}^{1}\left[ K\left( u\right) -u\right] du. \qquad (10) \]

As the range of the Gini Coefficient \(\gamma \) is the unit interval, \(0\le \gamma \le 1\), the range of the quantity \(\hat{\gamma }\) is the symmetric interval \(-1\le \hat{\gamma }\le 1\).

The quantity \(\hat{\gamma }\) admits a representation that is similar to the probabilistic formulation of Eq. (8). Indeed, the probabilistic formulation of Eq. (7) and Eq. (16) imply that:

\[ \hat{\gamma }=\Pr \left( X\le X_{res}\right) -\Pr \left( X>X_{res}\right) , \qquad (11) \]

where the random variable X and its residual lifetime \(X_{res}\) are mutually independent. Namely, the quantity \(\hat{\gamma }\) manifests the extent by which the probability of the event \(\left\{ X\le X_{res}\right\} \) differs from the probability of the complementary event \(\left\{ X>X_{res}\right\} \).

For an IFR distribution the quantity \(\hat{\gamma }\) is negative, and for a DFR distribution the quantity \(\hat{\gamma }\) is positive. For such distributions: the more negative/positive the quantity \(\hat{\gamma }\) is—the more ‘away from exponential’ the distribution is. Thus, the Gini Coefficient, via the quantity \(\hat{\gamma }\), is a quantitative gauge for the extent by which a given IFR/DFR distribution deviates from the exponential-distribution ‘benchmark’.

In the physical sciences, the exponential distribution is the paradigmatic model for ‘regular relaxation’. On the other hand, IFR and DFR distributions offer general models for ‘anomalous relaxation’ [72,73,74]. Specifically, IFR distributions with hazard functions that diverge to infinity, \(\lim _{x\rightarrow \infty }h\left( x\right) =\infty \), model super-exponential anomalous relaxation. And, DFR distributions with hazard functions that decay to zero, \(\lim _{x\rightarrow \infty }h\left( x\right) =0\), model sub-exponential anomalous relaxation.

Arguably, the principal anomalous-relaxation model is the Weibull distribution [75,76,77]. As noted in Sect. 6 above, a Weibull random variable X is characterized by the tail distribution function \(\bar{F}\left( x\right) =\exp [-(x/s)^{\epsilon }]\), where s is a positive scale, and where \(\epsilon \) is a positive exponent. In turn, the Weibull hazard function is \(h\left( x\right) =cx^{\epsilon -1}\) (with \( c=\epsilon /s^{\epsilon }\)), and hence: the exponent range \(0<\epsilon <1\) models sub-exponential anomalous relaxation; the exponent range \(1<\epsilon <\infty \) models super-exponential anomalous relaxation; and the exponent value \(\epsilon =1\) models regular relaxation. As the Weibull Gini Coefficient was shown to be \(\gamma =1-2^{-1/\epsilon }\) (see Sect. 6 above), the corresponding Weibull quantity is \(\hat{\gamma } =1-2^{1-(1/\epsilon )}\). This Weibull quantity is a decreasing function of the exponent \(\epsilon \), and: it approaches its upper-bound value \(\hat{\gamma } =1\) in the sub-exponential limit \(\epsilon \rightarrow 0\); it approaches its lower-bound value \(\hat{\gamma }=-1\) in the super-exponential limit \(\epsilon \rightarrow \infty \); and it yields the value \(\hat{\gamma }=0\) at the regular-relaxation exponent value \(\epsilon =1\).
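The Weibull quantity \(\hat{\gamma }=1-2^{1-(1/\epsilon )}\) is simple enough to tabulate. The following sketch (with hypothetical helper names chosen for this illustration) evaluates it for a few exponents and labels the corresponding relaxation regime, so that the sign pattern described above can be seen at a glance.

```python
def weibull_gamma_hat(eps):
    """Weibull value of gamma-hat = 2*gamma - 1 = 1 - 2**(1 - 1/eps)."""
    if eps <= 0:
        raise ValueError("the Weibull exponent must be positive")
    return 1.0 - 2.0 ** (1.0 - 1.0 / eps)


def relaxation_regime(eps):
    """Label the regime by the Weibull exponent (hazard h(x) = c * x**(eps - 1))."""
    if eps < 1:
        return "sub-exponential (decreasing failure rate)"
    if eps > 1:
        return "super-exponential (increasing failure rate)"
    return "regular (flat failure rate)"


for eps in (0.25, 0.5, 1.0, 2.0, 4.0):
    print(eps, round(weibull_gamma_hat(eps), 4), relaxation_regime(eps))
```

Positive values appear for \(\epsilon <1\), negative values for \(\epsilon >1\), and zero at \(\epsilon =1\).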

11 Socioeconomic perspective

The Gini-Coefficient formulation of Eq. (8) ‘contests’ the random variable X against its total lifetime \(X_{tot}\). Continuing with this approach, this section will contest the corresponding cumulative distribution functions: \(F\left( x\right) =\Pr \left( X\le x\right) \) and \( F_{tot}\left( x\right) =\Pr (X_{tot}\le x)\) (\(x\ge 0\)). Throughout this section it is assumed that the random variable X has a density function \(f\left( x\right) =F^{\prime }\left( x\right) \), and \(Q\left( u\right) =F^{-1}\left( u\right) \) (\(0\le u\le 1\)) denotes the quantile function of the random variable X (Footnote 4).

As the random variable X has a density function, the probability of the event \(\left\{ X=X_{tot}\right\} \) is zero. Hence, using Eq. (16), Eq. (8) can be expressed as follows: \(\gamma =1-2\Pr \left( X_{tot}\le X\right) \). In turn, Eq. (15) implies that Eq. (8) can be re-written in the following form: \(\gamma =1-2\int _{0}^{\infty }F_{tot}\left( x\right) f\left( x\right) dx\). Applying the change-of-variables \(x\mapsto u=F\left( x\right) \) to this form, and using the integral \(2\int _{0}^{1}udu=1\), yields the following integral formulation of the Gini Coefficient:

\[ \gamma =2\int _{0}^{1}\left[ u-L\left( u\right) \right] du, \qquad (12) \]

where \(L\left( u\right) =F_{tot}[Q\left( u\right) ]\) (\(0\le u\le 1\)).

As \(F_{tot}\left( x\right) \) and \(F\left( x\right) \) are cumulative distribution functions, the graph of the curve \(L\left( u\right) \) resides in the unit square—where it is non-decreasing from the square’s ‘bottom-left corner’ \(L\left( 0\right) =0\) to the square’s ‘top-right corner’ \(L\left( 1\right) =1\). The derivative of the curve \(L\left( u\right) =F_{tot}[Q\left( u\right) ]\) is \(L^{\prime }\left( u\right) =f_{tot}[Q\left( u\right) ]/f[Q\left( u\right) ]\). As described in Sect. 8, the density function of the total lifetime \(X_{tot}\) is \(f_{tot}\left( x\right) = \frac{1}{\mu }xf\left( x\right) \), and hence: \(L^{\prime }\left( u\right) = \frac{1}{\mu }Q\left( u\right) \). As the inverse function \(Q\left( u\right) \) is increasing, so is the derivative \(L^{\prime }\left( u\right) =\frac{1}{ \mu }Q\left( u\right) \), and hence the curve \(L\left( u\right) \) is convex. Thus, the curve’s graph resides below the diagonal line of the unit square, \( L\left( u\right) <u\) (\(0<u<1\)). Consequently, Eq. (12) manifests the Gini Coefficient as: twice the area captured between the curve \(L\left( u\right) \) and the diagonal line of the unit square.

The curve \(L\left( u\right) \) provides a graphic depiction of the statistical divergence between the cumulative distribution functions \( F_{tot}\left( x\right) \) and \(F\left( x\right) \). The curve \(L\left( u\right) \) has a profound socioeconomic meaning which shall now be explained. To that end consider a human society comprising n members. Also, consider the personal wealth values of the n members to be IID copies \(\left\{ X_{1},\ldots ,X_{n}\right\} \) of the random variable X. Hence, sampling at random a single member of the society, the personal wealth of the randomly-sampled member is equal in law to the random variable X.

The overall wealth of the society is \(X_{1}+\cdots +X_{n}\). Sampling at random a single Dollar from the society’s overall wealth, denote by \(W_{n}\) the personal wealth of the member to whom the randomly-sampled Dollar belongs. Observe that the wealth value \(W_{n}\) is identical, in law, to the inter-renewal distance \(\Delta _{n}\) that was described in Sect. 9. Hence—in the limit \(n\rightarrow \infty \)—the wealth value \(W_{n}\) converges, in law, to the total lifetime \(X_{tot}\).

So, there are two different methods of sampling wealth. One method is ‘member sampling’—sampling at random a single member of the society; this method yields a wealth value that is equal in law to the random variable X. Another method is ‘Dollar sampling’—sampling at random a single Dollar from the society’s overall wealth; this method yields (in the infinite-population limit \(n\rightarrow \infty \)) a wealth value that is equal in law to the total lifetime \(X_{tot}\). Evidently, the ‘Dollar sampling’ method is more inclined towards large wealth values than the ‘member sampling’ method. Indeed, when sampling a single Dollar at random, it is more likely that the randomly-sampled Dollar shall belong to a rich member of the society than to a poor member.
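The contrast between the two sampling methods can be made concrete for a finite society. The sketch below uses hypothetical function names and a made-up four-member society, and it works with finite n rather than with the limit \(n\rightarrow \infty \). Under ‘member sampling’ each member is picked with probability \(1/n\); under ‘Dollar sampling’ a member with wealth \(x_{i}\) is picked with probability \(x_{i}/(x_{1}+\cdots +x_{n})\).

```python
def member_sampling_mean(wealth):
    """Mean wealth seen when a member is sampled uniformly at random."""
    return sum(wealth) / len(wealth)


def dollar_sampling_mean(wealth):
    """Mean wealth of the owner of a Dollar sampled uniformly at random.

    Member i owns the sampled Dollar with probability x_i / total,
    so the mean is sum(x_i * x_i) / total.
    """
    total = sum(wealth)
    if total <= 0:
        raise ValueError("overall wealth must be positive")
    return sum(x * x for x in wealth) / total


society = [1, 1, 1, 7]  # made-up wealth values, for illustration only
print(member_sampling_mean(society))  # 2.5
print(dollar_sampling_mean(society))  # 5.2
```

The Dollar-sampled mean exceeds the member-sampled mean whenever the wealth values are not all equal, which is the finite-population counterpart of \(X_{tot}\) being inclined towards larger values than X.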

Named “Lorenz curve” in honor of the American scientist Max Lorenz, the curve \(L\left( u\right) =F_{tot}[Q\left( u\right) ]\) has the following socioeconomic meaning [78,79,80]: \( 100L\left( u\right) \%\) of the society’s overall wealth is held by the low (poor) \(100u\%\) of the society’s members. From a Lorenz-curve perspective, the diagonal line of the unit square manifests the socioeconomic state of ‘perfect equality’: a purely egalitarian distribution of wealth in which all the society members share a common (positive) wealth value. Thus, the Gini Coefficient uses the area captured between the Lorenz curve \(L\left( u\right) \) and the diagonal line of the unit square as: a measure that quantifies the ‘socioeconomic divergence’ of the society from the socioeconomic state of perfect equality.
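For a finite list of wealth values, the Lorenz curve and the area-based Gini Coefficient can be computed in a few lines. This is a minimal sketch with hypothetical function names; it uses the piecewise-linear empirical Lorenz curve and the trapezoid rule, which is one common convention among several for finite samples.

```python
def lorenz_points(wealth):
    """Points (u, L(u)) of the empirical Lorenz curve, starting at (0, 0)."""
    xs = sorted(wealth)  # poorest members first
    total = sum(xs)
    if total <= 0:
        raise ValueError("overall wealth must be positive")
    n = len(xs)
    points = [(0.0, 0.0)]
    running = 0.0
    for i, x in enumerate(xs, start=1):
        running += x
        points.append((i / n, running / total))
    return points


def gini_from_lorenz(points):
    """Twice the area between the diagonal and the Lorenz curve (trapezoid rule)."""
    area_under_curve = 0.0
    for (u0, l0), (u1, l1) in zip(points, points[1:]):
        area_under_curve += (u1 - u0) * (l0 + l1) / 2.0
    return 1.0 - 2.0 * area_under_curve


print(gini_from_lorenz(lorenz_points([5, 5, 5, 5])))  # perfect equality
print(gini_from_lorenz(lorenz_points([1, 2, 3, 4])))
print(gini_from_lorenz(lorenz_points([0, 0, 0, 1])))  # one member holds everything
```

Under this convention a society of n members in which one member holds all the wealth yields \(1-\frac{1}{n}\) rather than 1, so the upper bound is reached only as n grows.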

Evidently, the graph of the Lorenz curve is bounded from below by the zero line of the unit square, \(L\left( u\right) \ge 0\) (\(0<u<1\)). From a Lorenz-curve perspective, the zero line of the unit square manifests the socioeconomic state of ‘perfect inequality’ (in the infinite-population limit \(n\rightarrow \infty \)): a purely non-egalitarian distribution of wealth in which \(100\%\) of the society’s overall wealth is held by an oligarchy comprising \(0\%\) of the society’s members. Setting \(\bar{\gamma } =1-\gamma \), Eq. (12) can be re-written in the form \(\bar{\gamma } =2\int _{0}^{1}\left[ L\left( u\right) -0\right] du\). Namely, the quantity \( \bar{\gamma }\) is twice the area captured between the Lorenz curve \(L\left( u\right) \) and the zero line of the unit square.

As the range of the Gini Coefficient \(\gamma \) is the unit interval, \(0\le \gamma \le 1\), the range of the quantity \(\bar{\gamma }\) is also the unit interval, \(0\le \bar{\gamma }\le 1\). The quantity \(\bar{\gamma }\) can be perceived as a ‘mirrored’ Gini Coefficient. While the Gini Coefficient \( \gamma \) is a score of socioeconomic inequality, the mirrored Gini Coefficient \(\bar{\gamma }\) is a score of socioeconomic equality. Analogously to the Gini Coefficient, the quantity \(\bar{\gamma }\) uses the area captured between the curve \(L\left( u\right) \) and the zero line of the unit square as: a measure that quantifies the ‘socioeconomic divergence’ of the society from the socioeconomic state of perfect inequality.

The probabilistic formulation of Eq. (7) implies that the quantity \( \bar{\gamma }\) admits the following probabilistic formulation: \(\bar{\gamma } =\Pr \left( X>X_{res}\right) \), where the random variable X and its residual lifetime \(X_{res}\) are mutually independent. Also, the probabilistic formulation of Eq. (8) and Eq. (16) imply that the quantity \(\bar{\gamma }\) admits the following probabilistic formulation: \( \bar{\gamma }=2\Pr \left( X>X_{tot}\right) \), where the random variable X and its total lifetime \(X_{tot}\) are mutually independent.

Equation (12) is the common way in which the Gini Coefficient is introduced in economics and in the social sciences [6,7,8]. In terms of the Lorenz curve, the Gini Coefficient has three key features. F1) The lower bound of the Gini Coefficient characterizes the socioeconomic state of perfect equality: \(\gamma =0\) if and only if \(L\left( u\right) =u\) (\( 0\le u\le 1\)). F2) The upper bound of the Gini Coefficient characterizes the socioeconomic state of perfect inequality: \(\gamma =1\) if and only if \(L\left( u\right) =0\) (\(0\le u<1\)). F3) The Gini Coefficient is a monotone functional of the Lorenz curve: if one Lorenz curve majorizes another Lorenz curve, \(L_{1}\left( u\right) \ge L_{2}\left( u\right) \) (\(0\le u\le 1\)), then the Gini Coefficient of the first Lorenz curve is no greater than the Gini Coefficient of the second Lorenz curve, \( \gamma _{1}\le \gamma _{2}\).

12 Weighted-differences epilog

As noted above, the mean of the random variable X can be calculated in two ways. One way is via the tail distribution function of X, and this way leads to the residual lifetime \(X_{res}\). The other way is via the density function of X, and this way leads to the total lifetime \(X_{tot}\). An “X vs. \(X_{res}\)” approach yielded the following Gini-Coefficient representations: the probabilistic formulation of Eq. (7), and the integral formulation of Eq. (9). An “X vs. \(X_{tot}\)” approach yielded the following Gini-Coefficient representations: the probabilistic formulation of Eq. (8), and the Lorenz formulation of Eq. (12).

This section will present yet another approach: a weighted-difference approach. Specifically, this approach is based on the integral

\[ I=\int _{0}^{\infty }D\left( x\right) w\left( x\right) dx, \qquad (13) \]

where: \(D\left( x\right) \) is the difference between the cumulative distribution functions of any pair of the random variables \(\left\{ X,X_{res},X_{tot}\right\} \); and \(w\left( x\right) \) are non-negative weights.

As detailed in Table 1, the flat weight function \(w\left( x\right) =1/\mu \) yields the squared CV \(\rho =(\sigma /\mu )^{2}\), and also two affine transformations of the squared CV: \(\left( \rho -1\right) /2\) and \(\left( \rho +1\right) /2\). As further detailed in Table 1, the weight function \( w\left( x\right) =2f\left( x\right) \) yields the Gini Coefficient \(\gamma \), and also two affine transformations of the Gini Coefficient: \(\hat{\gamma } =2\gamma -1\), which was described in Sect. 10; and \(\bar{\gamma } =1-\gamma \), which was described in Sect. 11. The derivations of the weighted-difference results presented in Table 1 are based on calculations that were used in the sections above. The weighted-difference results presented in row #1 of the table played key roles in the mean-performance analysis of sharp restart [43, 44].
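The two weight functions can be compared numerically on a single example. The sketch below rests on several assumptions that are not taken from Table 1: it uses the exponential distribution with unit mean, it picks the pair \(\left\{ X,X_{tot}\right\} \) with difference \(D\left( x\right) =F\left( x\right) -F_{tot}\left( x\right) \), and it evaluates the weighted integral \(\int _{0}^{\infty }D\left( x\right) w\left( x\right) dx\) with a truncated midpoint rule. For this example the flat weight \(1/\mu \) should return the squared CV (which equals 1 for the exponential distribution), and the weight \(2f\left( x\right) \) should return the Gini Coefficient \(\frac{1}{2}\).

```python
import math


def cdf_x(x):
    """F(x) for the unit-mean exponential distribution (illustrative choice)."""
    return 1.0 - math.exp(-x)


def cdf_tot(x):
    """F_tot(x): CDF of the density x * exp(-x), i.e. (1/mu) * x * f(x) with mu = 1."""
    return 1.0 - (1.0 + x) * math.exp(-x)


def weighted_difference(weight, upper=50.0, n=100_000):
    """Midpoint-rule value of the integral of [F(x) - F_tot(x)] * weight(x)."""
    h = upper / n
    total = 0.0
    for i in range(n):
        x = (i + 0.5) * h
        total += (cdf_x(x) - cdf_tot(x)) * weight(x) * h
    return total


def flat_weight(x):
    return 1.0  # 1 / mu with mu = 1


def density_weight(x):
    return 2.0 * math.exp(-x)  # 2 * f(x)


print(weighted_difference(flat_weight))     # close to the squared CV, 1
print(weighted_difference(density_weight))  # close to the Gini Coefficient, 0.5
```

Other pairs from \(\left\{ X,X_{res},X_{tot}\right\} \) can be tried by swapping the two distribution functions inside `weighted_difference`.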

The starting point of this paper was geometric: two different perspectives of distance—the ‘aerial’ Euclidean-geometry one, and the ‘pedestrian’ grid-geometry one. As shown in Sects. 3 and 4, shifting between these two perspectives results in shifting between the CV \(\sigma /\mu \) and the Gini Coefficient \(\gamma \). The end point of this paper is the weighted average I of Eq. (13), with two different types of weights: a flat weight function which is based on the mean \(\mu \) of the random variable X; and a varying weight function which is based on the density function \(f\left( x\right) \) of the random variable X. As shown in Table 1, shifting between these two weight functions results in shifting between the squared CV \(\rho =(\sigma /\mu )^{2}\) and the Gini Coefficient \( \gamma \). Thus, Table 1 ‘closes the circle’, and it accommodates the CV and the Gini Coefficient under one unified setting.

13 Conclusion

The Gini Coefficient \(\gamma \) is a quantitative score of socioeconomic inequality that takes values in the unit interval \(0\le \gamma \le 1\). With regard to the distribution of wealth among the members of a given human society, the Gini Coefficient displays the following principal properties. P1) It yields its lower bound \(\gamma =0\) if and only if the distribution of wealth is perfectly equal, i.e.: all the society members share a common (positive) wealth value. P2) It is invariant with respect to the specific currency via which wealth is measured. P3) It yields its upper bound \(\gamma =1\) if and only if the distribution of wealth is perfectly unequal, i.e. (in an infinite-population limit): an oligarchy comprising \(0\%\) of the society members possesses \(100\%\) of the society’s overall wealth.

In fact, the Gini Coefficient \(\gamma \) is a quantitative gauge of statistical heterogeneity that is applicable in the context of general non-negative random variables with positive means. From a geometric perspective, the Gini Coefficient is a ‘pedestrian’ counterpart of the Coefficient of Variation—which is based on the ‘aerial’ Euclidean distance. The Gini Coefficient has an assortment of representations which arise via various statistical perspectives. With regard to a random variable X of interest, these representations are summarized in Table 2, and are based on the following foundations.

- The grid distance, i.e. the absolute deviation \(|X_{1}-X_{2}|\), between two IID copies of X (row 1 of Table 2).

- The maximum \(\max \left\{ X_{1},X_{2}\right\} \), the minimum \(\min \left\{ X_{1},X_{2}\right\} \), and the range \(\max \left\{ X_{1},X_{2}\right\} -\min \left\{ X_{1},X_{2}\right\} \), of a sample comprising two IID copies of X (rows 2–4 of Table 2).

- The cumulative distribution function \(F\left( x\right) =\Pr \left( X\le x\right) \) and the tail distribution function \( \bar{F}\left( x\right) =\Pr \left( X>x\right) \) of X (row 5 of Table 2).

- Contesting X against its residual lifetime \( X_{res}\), whose statistics are governed by the density function \(\frac{1}{ \mu }\bar{F}\left( x\right) \) (row 6 of Table 2).

- The curve \(K\left( u\right) =F[Q_{res}\left( u\right) ]\), where \(Q_{res}\left( u\right) \) is the quantile function of the residual lifetime \(X_{res}\) (row 7 of Table 2).

- Contesting X against its total lifetime \( X_{tot}\), whose statistics are governed by the density function \(\frac{1}{ \mu }xF^{\prime }\left( x\right) \) (row 8 of Table 2).

- The Lorenz curve \(L\left( u\right) =F_{tot}[Q\left( u\right) ]\), where \(F_{tot}\left( x\right) \) is the cumulative distribution function of the total lifetime \(X_{tot}\), and where \( Q\left( u\right) \) is the quantile function of X (row 9 of Table 2).

- The difference \(F\left( x\right) -F_{tot}\left( x\right) \) between the cumulative distribution functions of X and of its total lifetime \(X_{tot}\) (row 10 of Table 2).

As the Gini Coefficient \(\gamma \) is a quantitative score of socioeconomic inequality, and as it takes values in the unit interval: the ‘mirror’ \(\bar{ \gamma }=1-\gamma \) of the Gini Coefficient is a quantitative score of socioeconomic equality, and it also takes values in the unit interval. In addition to the representations of the Gini Coefficient \(\gamma \), Table 2 provides several representations of the ‘mirrored’ Gini Coefficient \(\bar{ \gamma }\).

The Lorenz-curve representation of the Gini Coefficient (row 9 of Table 2) measures the area captured between the Lorenz curve \(L\left( u\right) \) and the diagonal line of the unit square. This diagonal line manifests a deterministic (i.e. a constant) random variable, and consequently: the Gini Coefficient quantifies the divergence of X from the deterministic benchmark. Shifting from the Lorenz curve \(L\left( u\right) \) to the curve \( K\left( u\right) \) (row 7 of Table 2), the diagonal line of the unit square manifests an exponentially-distributed random variable. In turn, for X with either an increasing or a decreasing failure rate: \(\hat{\gamma } =2\gamma -1\) quantifies the divergence of X from the exponential-distribution benchmark. Representations of the quantity \(\hat{ \gamma }=2\gamma -1\) are specified in Table 3; these representations are analogous to the Gini-Coefficient representations appearing in rows 8–10 of Table 2.

The Gini Coefficient is one of many quantitative scores of socioeconomic inequality. More generally, the Gini Coefficient is one of many quantitative gauges of statistical heterogeneity. To score socioeconomic inequality, and to quantify statistical heterogeneity, scientists are free to choose any gauge(s) they deem appropriate. However, when addressing a certain problem, the problem itself may point to gauges that are ‘natural’ in its context. Indeed, it was recently shown that addressing the mean performance of restart protocols points to two ‘natural gauges’ of statistical heterogeneity [31, 32, 34, 43, 44]: the Coefficient of Variation, and the Gini Coefficient \(\gamma \). And, addressing statistical distributions with either increasing or decreasing failure rates, this paper points to the ‘Gini variant’ \(\hat{\gamma }=2\gamma -1\) as a ‘natural gauge’ that quantifies divergence from the exponential-distribution benchmark. With the Gini insights presented in this paper, perhaps readers will discover novel ‘Gini applications’: scientific and engineering problems in which the Gini Coefficient \(\gamma \) emerges as a ‘natural gauge’ to be applied and used.

Notes

Here and henceforth: “positive” means strictly positive, i.e. greater than zero.

The inner product of the distribution functions \(F\left( x\right) \) and \( \bar{F}\left( x\right) \) is finite, despite the fact that the distribution function \(F\left( x\right) \) is not square integrable.

Namely, \(F_{res}^{-1}\left( u\right) \) is the inverse function of \( F_{res}\left( x\right) \). To keep matters technically simple, this section considers the cumulative distribution function \(F_{res}\left( x\right) \) to be increasing. Hence the inverse function \(F_{res}^{-1}\left( u\right) \) is easily defined, and it is also increasing.

Namely, \(F^{-1}\left( u\right) \) is the inverse function of \(F\left( x\right) \). To keep matters technically simple, this section considers the cumulative distribution function \(F\left( x\right) \) to be increasing. Hence the inverse function \(F^{-1}\left( u\right) \) is easily defined, and it is also increasing.

References

Giorgi, G.M.: Gini’s scientific work: an evergreen. Metron 63(3), 299–315 (2005)

Giorgi, G.M.: Corrado Gini: the man and the scientist. Metron 69(1), 1–28 (2011)

Giorgi, G.M., Gubbiotti, S.: Celebrating the memory of Corrado Gini: a personality out of the ordinary. Int. Stat. Rev. 85(2), 325–339 (2017)

Gini, C.: Sulla misura della concentrazione e della variabilita dei caratteri. Atti del Reale Istituto veneto di scienze lettere ed arti 73, 1203–1248 (1914)

Gini, C.: Measurement of inequality of incomes. Econ J 31(121), 124–126 (1921)

Yitzhaki, S., Schechtman, E.: The Gini Methodology: A Primer on a Statistical Methodology. Springer (2012)

Giorgi, G.M., Gigliarano, C.: The Gini concentration index: a review of the inference literature. J. Econ. Surv. 31(4), 1130–1148 (2017)

Giorgi, G.M.: Gini Coefficient. In: SAGE Research Methods Foundations, edited by Paul Atkinson, Sara Delamont, Alexandru Cernat, Joseph W. Sakshaug, and Richard A. Williams. SAGE Publications (2020). https://doi.org/10.4135/9781526421036883328

Farris, F.A.: The Gini index and measures of inequality. Am. Math. Mon. 117(10), 851–864 (2010)

Eliazar, I.: Harnessing inequality. Phys. Rep. 649, 1–29 (2016)

Eliazar, I.: A tour of inequality. Ann. Phys. 389, 306–332 (2018)

Eliazar, I., Giorgi, G.M.: From Gini to Bonferroni to Tsallis: an inequality-indices trek. Metron 78(2), 119–153 (2020)

Tu, J., Sui, H., Feng, W., Sun, K., Chuan, X., Han, Q.: Detecting building facade damage from oblique aerial images using local symmetry feature and the Gini index. Remote Sens. Lett. 8(7), 676–685 (2017)

O’Hagan, S., Wright Muelas, M., Day, P.J., Lundberg, E., Kell, D.B.: GeneGini: assessment via the Gini coefficient of reference “housekeeping” genes and diverse human transporter expression profiles. Cell Syst. 6(2), 230–244 (2018)

Hasisi, B., Perry, S., Ilan, Y., Wolfowicz, M.: Concentrated and close to home: the spatial clustering and distance decay of lone terrorist vehicular attacks. J. Quant. Criminol., 1–39 (2019)

Horcher, D., Graham, D.J.: The Gini index of demand imbalances in public transport. Transportation, 1–24 (2020)

Lechthaler, B., Pauly, C., Mucklich, F.: Objective homogeneity quantification of a periodic surface using the Gini coefficient. Sci. Rep. 10(1), 1–17 (2020)

Pernot, P., Savin, A.: Using the Gini coefficient to characterize the shape of computational chemistry error distributions. Theor. Chem. Accounts 140(3), 1–11 (2021)

Arbel, Y., Fialkoff, C., Kerner, A., Kerner, M.: Do population density, socio-economic ranking and Gini Index of cities influence infection rates from coronavirus? Israel as a case study. Ann. Reg. Sci. 68(1), 181–206 (2022)

Karmakar, A., Banerjee, P.S., De, D., Bandyopadhyay, S., Ghosh, P.: MedGini: Gini index based sustainable health monitoring system using dew computing. Med. Novel Technol. Dev., 100145 (2022)

Shannon, C.E.: A mathematical theory of communication. Bell Syst. Tech. J. 27(3), 379–423 (1948)

Cover, T.M., Thomas, J.A.: Elements of Information Theory. Wiley-Interscience (2006)

Ben-Naim, A.: A Farewell to Entropy: Statistical Thermodynamics Based on Information. World Scientific (2008)

Magurran, A.E.: Ecological Diversity and Its Measurement. Princeton University Press, Princeton (1988)

Jost, L.: Entropy and diversity. Oikos 113(2), 363–375 (2006)

Legendre, P., Legendre, L.: Numerical Ecology. Elsevier (2012)

Hao, L., Naiman, D.Q.: Assessing Inequality. Sage Publications (2010)

Cowell, F.: Measuring Inequality. Oxford University Press, Oxford (2011)

Coulter, P.B.: Measuring Inequality: A Methodological Handbook. Routledge (2019)

Evans, M.R., Majumdar, S.N.: Diffusion with stochastic resetting. Phys. Rev. Lett. 106(16), 160601 (2011)

Reuveni, S.: Optimal stochastic restart renders fluctuations in first passage times universal. Phys. Rev. Lett. 116(17), 170601 (2016)

Pal, A., Reuveni, S.: First passage under restart. Phys. Rev. Lett. 118(3), 030603 (2017)

Chechkin, A., Sokolov, I.M.: Random search with resetting: a unified renewal approach. Phys. Rev. Lett. 121(5), 050601 (2018)

Pal, A., Eliazar, I., Reuveni, S.: First passage under restart with branching. Phys. Rev. Lett. 122(2), 020602 (2019)

Tal-Friedman, O., Pal, A., Sekhon, A., Reuveni, S., Roichman, Y.: Experimental realization of diffusion with stochastic resetting. J. Phys. Chem. Lett. 11(17), 7350–7355 (2020)

De Bruyne, B., Randon-Furling, J., Redner, S.: Optimization in first-passage resetting. Phys. Rev. Lett. 125(5), 050602 (2020)

De Bruyne, B., Randon-Furling, J., Redner, S.: Optimization and growth in first-passage resetting. J. Stat. Mech. Theory Exp. 2021(1), 013203 (2021)

Eliazar, I., Reuveni, S.: Tail-behavior roadmap for sharp restart. J. Phys. A: Math. Theor. 54(12), 125001 (2021)

Evans, M.R., Majumdar, S.N., Schehr, G.: Stochastic resetting and applications. J. Phys. A Math. Theor. 53(19), 193001 (2020)

Pal, A., Kostinski, S., Reuveni, S.: The inspection paradox in stochastic resetting. J. Phys. A: Math. Theor. 55(2), 021001 (2022)

Reuveni, S., Kundu, A.: Preface: stochastic resetting—theory and applications. J. Phys. A: Math. Theor. 57, 060301 (2024). (This is the preface to a special issue on restart research)

Eliazar, I.: Branching search. EPL (Europhys. Lett.) 120(6), 60008 (2018)

Eliazar, I., Reuveni, S.: Mean-performance of sharp restart I: statistical roadmap. J. Phys. A Math. Theor. 53(40), 405004 (2020)

Eliazar, I., Reuveni, S.: Mean-performance of Sharp Restart II: inequality roadmap. J. Phys. A Math. Theor. 54, 355001 (2021)

Jeon, J.-H., Leijnse, N., Oddershede, L.B., Metzler, R.: Anomalous diffusion and power-law relaxation of the time averaged mean squared displacement in worm-like micellar solutions. New J. Phys. 15(4), 045011 (2013)

Sikora, G., Burnecki, K., Wylomanska, A.: Mean-squared-displacement statistical test for fractional Brownian motion. Phys. Rev. E 95(3), 032110 (2017)

Bothe, M., Sagues, F., Sokolov, I.M.: Mean squared displacement in a generalized Levy walk model. Phys. Rev. E 100(1), 012117 (2019)

Sharpe, W.F.: Mean-absolute-deviation characteristic lines for securities and portfolios. Manag. Sci. 18(2), B-1 (1971)

Konno, H., Yamazaki, H.: Mean-absolute deviation portfolio optimization model and its applications to Tokyo stock market. Manag. Sci. 37(5), 519–531 (1991)

Konno, H., Koshizuka, T.: Mean-absolute deviation model. IIE Trans. 37(10), 893–900 (2005)

Yitzhaki, S.: More than a dozen alternative ways of spelling Gini. Res. Econ. Inequal. 8, 13–30 (1998)

Yitzhaki, S.: Gini’s mean difference: a superior measure of variability for non-normal distributions. Metron 61(2), 285–316 (2003)

Yitzhaki, S., Lambert, P.J.: The relationship between the absolute deviation from a quantile and Gini’s mean difference. Metron 71(2), 97–104 (2013)

Eliazar, I.: Inequality spectra. Phys. A: Stat. Mech. Appl. 469, 824–847 (2017)

Galambos, J.: The Asymptotic Theory of Extreme Order Statistics. Wiley (1978)

Beirlant, J., Goegebeur, Y., Segers, J., Teugels, J.L.: Statistics of Extremes: Theory and Applications. Wiley (2006)

Reiss, R.-D., Thomas, M.: Statistical Analysis of Extreme Values, vol. 2. Birkhauser, Basel (2007)

Mitzenmacher, M.: A brief history of generative models for power law and lognormal distributions. Internet Math. 1(2), 226–251 (2004)

Newman, M.E.J.: Power laws, Pareto distributions and Zipf’s law. Contemp. Phys. 46(5), 323–351 (2005)

Clauset, A., Shalizi, C.R., Newman, M.E.J.: Power-law distributions in empirical data. SIAM Rev. 51(4), 661–703 (2009)

Arnold, B.C.: Pareto Distributions. Routledge (2020)

Eliazar, I.: Power Laws. Springer, Berlin (2020)

Smith, W.L.: Renewal theory and its ramifications. J. R. Stat. Soc. Ser. B (Methodol.) 20(2), 243–284 (1958)

Cox, D.R.: Renewal Theory. Methuen (1962)

Ross, S.M.: Applied Probability Models with Optimization Applications. Courier Corporation (2013)

Kalbfleisch, J.D., Prentice, R.L.: The Statistical Analysis of Failure Time Data, vol. 360. Wiley (2011)

Kleinbaum, D.G., Klein, M.: Survival Analysis. Springer, Berlin (2011)

Collett, D.: Modelling Survival Data in Medical Research. CRC Press (2015)

Barlow, R.E., Proschan, F.: Mathematical Theory of Reliability. Soc. Ind. Appl. Math. (1996)

Finkelstein, M.: Failure Rate Modelling for Reliability and Risk. Springer (2008)

Dhillon, B.S.: Engineering Systems Reliability, Safety, and Maintenance: An Integrated Approach. CRC Press (2017)

Williams, G., Watts, D.C.: Non-symmetrical dielectric relaxation behaviour arising from a simple empirical decay function. Trans. Faraday Soc. 66, 80–85 (1970)

Phillips, J.C.: Stretched exponential relaxation in molecular and electronic glasses. Rep. Prog. Phys. 59(9), 1133 (1996)

Kalmykov, Y.P., Coffey, W.T., Rice, S.A. (eds.): Fractals, Diffusion, and Relaxation in Disordered Complex Systems. Wiley (2006)

Murthy, D.N.P., Xie, M., Jiang, R.: Weibull Models, vol. 505. Wiley (2004)

Rinne, H.: The Weibull Distribution: A Handbook. CRC Press (2008)

McCool, J.I.: Using the Weibull Distribution: Reliability, Modeling, and Inference, vol. 950. Wiley (2012)

Lorenz, M.O.: Methods of measuring the concentration of wealth. Publ. Am. Stat. Assoc. 9(70), 209–219 (1905)

Gastwirth, J.L.: A general definition of the Lorenz curve. Econometrica 39(6), 1037–1039 (1971)

Chotikapanich, D. (ed.): Modeling Income Distributions and Lorenz Curves. Springer, Berlin (2008)

Funding

Open access funding provided by Tel Aviv University.

Ethics declarations

Conflict of interest

The author is not aware of a potential Conflict of interest regarding this article.

Additional information

This paper is dedicated to the blessed memory of Professor Giovanni Maria Giorgi, an outstanding man and scholar.

Appendix

1.1 Variance calculation

Consider the random variable \(\Delta =X_{1}-X_{2}\), the difference between two IID copies of the random variable X. If X has a finite mean then \(\Delta \) has a zero mean, and hence: the variance of \(\Delta \) is equal to its second moment, \(\textbf{Var}\left[ \Delta \right] =\textbf{E}[\Delta ^{2}]\). Also, as \(\Delta \) is the difference between two IID copies of X, the variance of \(\Delta \) is twice the variance of X, i.e.: \(\textbf{Var}\left[ \Delta \right] =\textbf{Var} \left[ X_{1}-X_{2}\right] =\textbf{Var}\left[ X_{1}\right] +\textbf{Var} \left[ X_{2}\right] =2\textbf{Var}\left[ X\right] \). So, the second moment of \(\Delta \) is equal to twice the variance of X, and thus \(\textbf{E} [\Delta ^{2}]=\textbf{E}[|X_{1}-X_{2}|^{2}]=2\textbf{Var}\left[ X\right] \).
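The identity \(\textbf{E}[\Delta ^{2}]=2\textbf{Var}\left[ X\right] \) can be verified exactly on a small example. The Python sketch below is only an illustration: the three-point distribution, and the helper names `mean_sq_diff` and `variance`, are hypothetical choices that do not appear in the paper. Exact rational arithmetic is used so that the two sides can be compared without rounding error.

```python
from fractions import Fraction
from itertools import product

def mean_sq_diff(values, probs):
    """E[(X1 - X2)^2] for two IID copies, by enumerating all pairs of outcomes."""
    pairs = product(zip(values, probs), repeat=2)
    return sum(p1 * p2 * (x1 - x2) ** 2 for (x1, p1), (x2, p2) in pairs)

def variance(values, probs):
    """Var[X] = E[(X - E[X])^2] for a finite discrete distribution."""
    mean = sum(p * x for x, p in zip(values, probs))
    return sum(p * (x - mean) ** 2 for x, p in zip(values, probs))

# Hypothetical example: X takes the values 0, 1, 4 with probabilities 1/2, 1/3, 1/6.
values = [Fraction(0), Fraction(1), Fraction(4)]
probs = [Fraction(1, 2), Fraction(1, 3), Fraction(1, 6)]

lhs = mean_sq_diff(values, probs)   # second moment of the difference
rhs = 2 * variance(values, probs)   # twice the variance of X
print(lhs, rhs)
```

In this example the mean of X is 1 and its variance is 2, so both sides equal 4.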

1.2 Maximum and minimum calculations

Consider the maximum \(\max \left\{ X_{1},X_{2}\right\} \), and the minimum \(\min \left\{ X_{1},X_{2}\right\} \), of two IID copies of the random variable X. As the copies are IID, the cumulative distribution function of the maximum is: \(\Pr \left( \max \left\{ X_{1},X_{2}\right\} \le x\right) =\Pr \left( X_{1}\le x\text{ and }X_{2}\le x\right) =\Pr \left( X_{1}\le x\right) \Pr \left( X_{2}\le x\right) =F\left( x\right) ^{2}\). And, as the copies are IID, the tail distribution function of the minimum is: \(\Pr \left( \min \left\{ X_{1},X_{2}\right\} >x\right) =\Pr \left( X_{1}>x\text{ and }X_{2}>x\right) =\Pr \left( X_{1}>x\right) \Pr \left( X_{2}>x\right) =\bar{F}\left( x\right) ^{2}\).
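Both product formulas can be checked by brute-force enumeration. The Python sketch below is an illustration only: it assumes that X is the outcome of a fair six-sided die (a hypothetical example, not one used in the paper), and the function names are likewise illustrative.

```python
from fractions import Fraction
from itertools import product

FACES = range(1, 7)      # hypothetical example: a fair six-sided die
P = Fraction(1, 6)       # probability of each face

def cdf(x):
    """F(x) = Pr(X <= x)."""
    return sum(P for face in FACES if face <= x)

def tail(x):
    """Tail function: Pr(X > x) = 1 - F(x)."""
    return 1 - cdf(x)

def cdf_max(x):
    """Pr(max{X1, X2} <= x), by enumerating all 36 ordered pairs."""
    return sum(P * P for a, b in product(FACES, repeat=2) if max(a, b) <= x)

def tail_min(x):
    """Pr(min{X1, X2} > x), by enumerating all 36 ordered pairs."""
    return sum(P * P for a, b in product(FACES, repeat=2) if min(a, b) > x)

for x in range(0, 8):
    print(x, cdf_max(x), cdf(x) ** 2, tail_min(x), tail(x) ** 2)
```

For every x the second and third printed columns coincide, and so do the fourth and fifth, as the IID argument requires.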

1.3 Probabilistic calculations

Consider a non-negative random variable \(X_{*}\) whose statistics are governed by the density function \(f_{*}\left( x\right) \), and that is independent of the random variable X. Conditioning on the value of the random variable \(X_{*}\), the probability of the event \(\left\{ X\le X_{*}\right\} \) is calculated as follows: \(\Pr \left( X\le X_{*}\right) =\int _{0}^{\infty }\Pr \left( X\le X_{*}|X_{*}=x\right) f_{*}\left( x\right) dx=\int _{0}^{\infty }\Pr \left( X\le x\right) f_{*}\left( x\right) dx=\int _{0}^{\infty }F\left( x\right) f_{*}\left( x\right) dx\). So, \(\Pr \left( X\le X_{*}\right) =\int _{0}^{\infty }F\left( x\right) f_{*}\left( x\right) dx\).

Similarly to the probability of the event \(\left\{ X\le X_{*}\right\} \), the probability of the event \(\left\{ X_{*}\le X\right\} \) is \(\Pr \left( X_{*}\le X\right) =\int _{0}^{\infty }F_{*}\left( x\right) f\left( x\right) dx\),

where: \(F_{*}\left( x\right) \) is the cumulative distribution function of the random variable \(X_{*}\); and \(f\left( x\right) \) is the density function of the random variable X.
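The two conditioning formulas, i.e. the integral of \(F\left( x\right) f_{*}\left( x\right) \) and the integral of \(F_{*}\left( x\right) f\left( x\right) \), can be checked numerically in a case where the answer is known in closed form. The Python sketch below assumes, purely for illustration, that X and \(X_{*}\) are exponentially distributed with hypothetical rates a and b, in which case \(\Pr \left( X\le X_{*}\right) =a/(a+b)\); the midpoint integrator, the truncation point and the step count are also illustrative choices.

```python
import math

def integrate(g, lo, hi, n=100_000):
    """Midpoint-rule approximation of the integral of g over [lo, hi]."""
    h = (hi - lo) / n
    return h * sum(g(lo + (i + 0.5) * h) for i in range(n))

a, b = 2.0, 3.0  # hypothetical rates of X and of X_*, respectively

F = lambda x: 1.0 - math.exp(-a * x)        # cumulative distribution function of X
f = lambda x: a * math.exp(-a * x)          # density function of X
F_star = lambda x: 1.0 - math.exp(-b * x)   # cumulative distribution function of X_*
f_star = lambda x: b * math.exp(-b * x)     # density function of X_*

UPPER = 40.0  # truncation point; both tails are negligible beyond it

p_le = integrate(lambda x: F(x) * f_star(x), 0.0, UPPER)   # Pr(X <= X_*)
p_ge = integrate(lambda x: F_star(x) * f(x), 0.0, UPPER)   # Pr(X_* <= X)

print(p_le, a / (a + b))
print(p_ge, b / (a + b))
```

As both random variables are continuous in this example, ties have probability zero and the two probabilities sum to one up to numerical error.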

Consider an event E whose occurrence probability is \(\Pr \left( E\right) \). In turn, the occurrence probability of the complementary event \(\bar{E}\) (“not E”) is \(\Pr \left( \bar{E}\right) =1-\Pr \left( E\right) \). Consequently, note that: \(\Pr \left( E\right) -\Pr \left( \bar{E}\right) =2\Pr \left( E\right) -1\).

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Eliazar, I. Beautiful Gini. METRON (2024). https://doi.org/10.1007/s40300-024-00271-w
