Abstract

Although the sport of triathlon provides an opportunity to research the effect of multi-disciplinary exercise on health across the lifespan, much remains to be done. The literature has failed to consistently or adequately report subject age group, sex, ability level, and/or event-distance specialization. The demands of training and racing are relatively unquantified. Multiple definitions and reporting methods for injury and illness have been implemented. In general, risk factors for maladaptation have not been well-described. The data thus far collected indicate that the sport of triathlon is relatively safe for the well-prepared, well-supplied athlete. Most injuries ‘causing cessation or reduction of training or seeking of medical aid’ are not serious. However, as the extent to which they recur may be high and is undocumented, injury outcome is unclear. The sudden death rate for competition is 1.5 (0.9–2.5) [mostly swim-related] occurrences for every 100,000 participations. The sudden death rate is unknown for training, although stroke risk may be increased, in the long-term, in genetically susceptible athletes. During heavy training and up to 5 days post-competition, host protection against pathogens may also be compromised. The incidence of illness seems low, but its outcome is unclear. More prospective investigation of the immunological, oxidative stress-related and cardiovascular effects of triathlon training and competition is warranted. Training diaries may prove to be a promising method of monitoring negative adaptation and its potential risk factors. More longitudinal, medical-tent-based studies of the aetiology and treatment demands of race-related injury and illness are needed.

Key Points

The sport of triathlon appears to be relatively safe for the majority of well-trained, well-prepared athletes.

The demands of triathlon training and racing, and their influence on injury and illness, are not well-described.

More prospective investigation of the health-related effects of triathlon participation, with a view to producing better training and racing guidelines, is warranted.

1 Introduction

The sport of triathlon involves a sequential swim, cycle and run over a variety of distances and formats [1]. At any given life-stage, the triathlete is likely to be focusing his or her training on preparation either for the shorter sprint- or Olympic-distance races, or for the longer half-Ironman to Ironman events. Athletes in the 35–39 and 40–44 year age groups form the majority of participants [2].

Non-elite athletes who compete against other athletes within the same 5-year age range (hereafter referred to as ‘age-groupers’), and particularly those who are less experienced [3], are less likely to be coached than elite athletes. According to a study by the USA Triathlon organization, although only 26 % of athletes did not ‘want or need a coach’, 47 % did not have a precise training plan [4]. The sport of triathlon has been shown not to be ‘the sum of its component sports’, because the neuromuscular adaptations to cycling training, for example, interfere with those elicited by running [5, 6]. However, little research that could help triathletes train in an optimal, sport-specific manner has been published. The training involved in preparing for competition in the various triathlon event formats and distances [7, 8] has been insufficiently quantified [9]. Few detailed longitudinal investigations [10–12] of how changes in training factors may be reflected in changes in injury and illness status are available. The risk profile of the athlete going into competition, and the extent to which it is mirrored by race-related problems, has not been investigated. Although training diaries have been cited as a crucial diagnostic aid in the management of ‘tired’ triathletes [10] and are reportedly the method that triathletes most commonly use to obtain feedback on training efficacy [3, 12], the extent to which such logs may be used to minimize maladaptation has barely been examined.

This article reviews the literature regarding triathlon training and racing loads and their effects on the immune system, oxidative stress and cardiovascular status. The extent of and putative risk factors for illness and injury in able-bodied athletes participating in road-based triathlons are described. We report how the development of specific illnesses or injuries may be influenced by the environmental conditions and/or cross-training that is involved [1]. The triathlon-specific research that has thus far been conducted into potential indicators of maladaptation is discussed. Issues that will have to be addressed if the results of future studies are to lead to practical improvements in training and racing practice are highlighted.

2 Triathlon Training

Only one study has calculated, from the literature, mean weekly training durations for each discipline and compared them between Olympic-distance and Ironman-distance specialists [9]. These mean values broadly agree with retrospective data obtained 10 years earlier for age-groupers [13, 14]. Weekly training volumes for world-ranked elite triathletes have not been well documented but are clearly higher [15]. No examination of the extent to which training practice has changed over time has been published. However, several differences between sex, ability and event-distance groups that were noted in 1993 (Table 1) may still hold. Olympic-distance athletes may spend less time per week than Ironman athletes on longer, low-intensity ‘long run’ (p < 0.05 for both sexes) and ‘long bike’ sessions (p < 0.05, for females only). Such individual sessions are also likely to be shorter for Olympic-distance than for Ironman-distance athletes (p < 0.05). Superior Olympic-distance athletes also do more speed-work cycle sessions and fewer long-run sessions per week (both p < 0.05), and inferior Olympic-distance athletes do more back-to-back cycle–run transition training than Ironman athletes (p < 0.05) [12].

Nor are many detailed prospective longitudinal training studies [8, 12, 16] available. Neal et al. [16] analyzed the training-intensity distribution of ten recreational-level athletes (mean ± standard deviation [SD] age 43 ± 3 years) over the 6 months leading up to an Ironman race. The study involved three training periods (January–February, March–April, and May–June) and four testing weeks. Across the three training periods combined, the athletes spent (mean ± SD) 69 ± 9, 25 ± 8, and 6 ± 2 % of their total training time doing low-, mid- and high-intensity exercise, respectively.
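As a minimal sketch of how an intensity distribution like the one reported by Neal et al. [16] can be derived from a training diary, the Python below converts logged minutes per intensity zone into percentages of total training time. The zone names and the minutes in the example are hypothetical values chosen for illustration, not data from that study.

```python
from typing import Dict


def intensity_distribution(minutes_by_zone: Dict[str, float]) -> Dict[str, float]:
    """Return the percentage of total training time spent in each intensity zone."""
    total = sum(minutes_by_zone.values())
    if total <= 0:
        raise ValueError("total training time must be positive")
    return {zone: 100.0 * minutes / total for zone, minutes in minutes_by_zone.items()}


# Hypothetical 6-month totals (minutes) for one athlete; illustrative only.
log = {"low": 4140.0, "mid": 1500.0, "high": 360.0}
print({zone: round(pct, 1) for zone, pct in intensity_distribution(log).items()})
```

With these invented totals the split comes out at 69, 25 and 6 %. How the zone boundaries are defined (heart rate, power, pace or perceived exertion) is itself an assumption that would need to be fixed before diaries from different athletes could be compared.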

Prospective data have also been reported for ten Olympic-distance athletes who, 21 weeks later, finished within the top 50 at their 1994 non-drafting national championships [12]. The athletes were members of a national squad. However, their data pre-date the introduction of drafting in elite racing and the increased professionalism of the sport since it gained Olympic status, and the athletes were focusing on domestic races rather than on the international circuit. They are therefore likely to be representative only of well-trained age-groupers. Approximately 25, 56 and 19 % of training time was spent swimming, cycling and running, respectively. Nearly 70 % of training time in each discipline was spent below racing intensity. The changes in training volume and intensity that occurred in the squad to which these athletes belonged are illustrated in Figs. 1 and 2. Notably, both the proportion of training time spent at higher intensity levels and the overall weekly rate of change in training stress increased progressively as the athletes approached the competitive period.

Fig. 1 Changes in distribution of training intensity of Olympic-distance triathletes over a two-peak competitive season: (a) swim, (b) bike, (c) run (reproduced from Vleck [12], with permission). EB endurance base, Pre-comp pre-competition, Comp competition, S swim, B bike, R run, L intensity level (rated 1–5, with 1 being the lowest intensity)

Fig. 2 Changes in weekly rates of total training stress (arbitrary units) across consecutive macro-cycles of a two-peak competitive season in Olympic-distance triathletes: (a) swim, (b) bike, (c) run (reproduced from Vleck [12], with permission). EB endurance base, T transition, PC pre-competition, C competition, S swim, B bike, R run, L intensity level (rated 1–5, with 1 being the lowest intensity)
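The week-to-week change in training stress discussed for this squad can be tracked from a diary with very little arithmetic. The Python below is a minimal sketch that assumes, hypothetically, that each session's stress in arbitrary units equals its duration in minutes multiplied by its 1–5 intensity level. The weighting actually used to produce Fig. 2 is not stated here, so this scoring rule and the sessions in the example are illustrative assumptions only.

```python
from typing import List, Tuple

# A session is (duration in minutes, intensity level 1-5).
# Assumption: stress = duration x intensity level (arbitrary units).
Session = Tuple[float, int]


def weekly_stress(sessions: List[Session]) -> float:
    """Sum duration x intensity level over one week's sessions."""
    for _, level in sessions:
        if not 1 <= level <= 5:
            raise ValueError("intensity level must be between 1 and 5")
    return sum(minutes * level for minutes, level in sessions)


def weekly_change(stresses: List[float]) -> List[float]:
    """Percentage change in training stress from each week to the next.

    A week with zero stress as the baseline would raise ZeroDivisionError.
    """
    return [100.0 * (curr - prev) / prev for prev, curr in zip(stresses, stresses[1:])]


weeks = [
    [(60, 1), (90, 2), (45, 3)],  # hypothetical endurance-base week
    [(60, 2), (90, 2), (45, 4)],  # hypothetical pre-competition week
]
stress = [weekly_stress(w) for w in weeks]
print(stress, weekly_change(stress))
```

In this invented example the shift towards higher intensity alone raises weekly stress by 28 % at an unchanged total duration. This is the kind of change that duration-only logging would miss.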

Only conference abstracts support the premise that elite athletes with a current world ranking [17] also spend approximately 70 % of their exercise time below racing intensity. Little is known about the training of such athletes other than that it can vary widely, even between athletes with the same coach [8], that it may involve international travel, and that altitude training is widely practiced in the lead-up to competition.

3 Triathlon Competition

The length of the competitive season, and the number and type of competitions it involves, may differ markedly between elite athletes and age-groupers [12] and with event-distance specialization. The relative intensity at which competition is performed has been insufficiently quantified, but it also differs [18–25] (Table 2). The extent of this difference is unclear, because most studies have used different physiological markers of competition intensity. Few studies [18, 19, 26] have obtained data on the physiological and other demands of triathlon swimming. This is despite potentially hazardous interactions between environmental temperature, water temperature, currents, marine life, other athletes, exercise intensity and duration, as well as ‘feed-forward’ fatigue effects from one discipline to the next [27].

As the intensity and duration of competition change, so may the thermal stress experienced by the athlete. Hypoglycaemia, dehydration [28], changes in blood electrolyte concentration and muscle damage [29] may all occur. The relative extent to which they occur in short-distance races is unknown. Muscle damage [30, 31] seems to be the most significant of these issues in half-Ironman-distance events [29]. The extent to which the triathlete may be at risk of hypo/hyperthermia and other heat-related illness in sprint-distance events depends on environmental temperature, humidity and the degree of prior heat acclimatization [32]. The minimum water temperatures at International Triathlon Union-sanctioned events are 13 °C (for 1,500 m) or 14 °C (for 3,000–4,000 m) [33]. The upper allowable limits are 20–24 °C, depending on athlete ability and race distance/format, and may be adjusted downwards according to water–air temperature differences and the weather. The maximum allowable time spent in the water also varies with event distance and athlete ability group.

Total body water turnover with Ironman competition can be around 16 L, or 1.33 L·h−1 [25]. Dehydration is usually estimated from body mass loss. With Ironman competition, this may be 3–8 % of the pre-start value in males [25, 34, 35], almost double that seen with half-Ironman competition [23, 29]. It was not reported to be significant in female age-groupers [36]. Body mass may also increase with competition in athletes with exercise-associated hyponatraemia [34, 37–42]. Both hyponatraemia (which is rare in races lasting less than 4 h but common in those lasting over 8 h [39]) and heat illness [32] are discussed elsewhere [35, 37, 40, 41, 43–46]. Plasma volume normally (but not always [25]) decreases with short-distance competition [47], and is either maintained or increased (by 8.1–10.8 %) after Ironman competition [48–50].
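The two hydration figures above rest on simple arithmetic. The Python below sketches the percentage body mass loss used as a dehydration estimate and the conversion from total turnover to an hourly rate. The 75.0/70.5 kg athlete is hypothetical. The 12 h race duration is back-calculated from 16 L ÷ 1.33 L·h−1 and was not reported directly. Note also that body mass loss is only a proxy for fluid loss.

```python
def percent_body_mass_loss(pre_kg: float, post_kg: float) -> float:
    """Body mass change as % of the pre-start value.

    Positive = mass lost; negative = mass gained (as can occur with hyponatraemia).
    """
    if pre_kg <= 0:
        raise ValueError("pre-start body mass must be positive")
    return 100.0 * (pre_kg - post_kg) / pre_kg


def hourly_rate(total_litres: float, hours: float) -> float:
    """Average turnover per hour of racing."""
    if hours <= 0:
        raise ValueError("race duration must be positive")
    return total_litres / hours


# Hypothetical athlete: 75.0 kg before and 70.5 kg after an Ironman.
print(round(percent_body_mass_loss(75.0, 70.5), 1))
# 16 L over an assumed ~12 h race.
print(round(hourly_rate(16.0, 12.0), 2))
```

The first value (6.0 %) falls within the 3–8 % range quoted for males. The second (1.33 L·h−1) reproduces the quoted turnover only under the assumed 12 h duration.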

4 Immune, Oxidative and Cardiovascular Responses to Triathlon Training and Competition

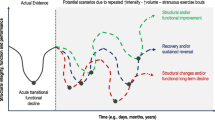

Although the demands of training and competition are not well described, it has been suggested [51] that triathletes do ‘extreme amounts of exercise’. Some empirical and epidemiological data suggest that such excess may be associated with DNA modulation, an increased risk of cardiovascular or pulmonary events [52–58], and/or impaired immune status. Cumulative oxidative stress [54], increased oxidation of plasma lipoproteins and a possible consequent contribution to atherosclerosis may offset the positive effects of endurance training. Indeed, it has been postulated that U-shaped and S-shaped relationships between exercise (load) and health exist in age-groupers [51] and elite athletes [59], respectively.

4.1 The Immune Response

Longitudinal studies of the response of white blood cell (WBC) counts or other immune system markers to triathlon training are scarce. According to a 10-year retrospective study of Australian Institute of Sport (i.e. elite) athletes who presented without illness [60], triathletes had lower resting total WBC and neutrophil counts than athletes from other sports (Table 3) [61–88]. The authors concluded that this probably reflected a training-induced adaptive anti-inflammatory response operating within broader homeostatic limits rather than any underlying pathology. They also found that the aerobic component of the sports they surveyed showed a large positive correlation with monocyte counts in males (r = 0.51) and a moderate positive correlation in females (r = 0.34). Their triathlete group probably consisted mostly or entirely of Olympic-distance specialists. Rietjens et al. [65] also observed many elite (probably Olympic-distance) triathletes to exhibit haematological values near or below the lower limit of the normal range. In contrast, a 4-year prospective study of Spanish elite triathletes [71] showed WBC counts to lie within normal limits in both the pre-competitive and competitive periods. Nonetheless, 16 % of the triathletes in the Australian Institute of Sport study displayed neutropenia and 5 % displayed monocytopenia. This observation (which was supported by Philip and Bermon [89]) is of clinical interest, because neutropenic individuals are generally more susceptible to bacterial infection, such as might follow inadequate treatment of a seemingly trivial skin abrasion. The reason for the neutropenia, in particular, is unclear; it may be due to exercise-induced neutrophil apoptosis and a consequently shorter neutrophil lifespan. When the running component of normal triathlon training is intensified [90] (as illustrated in Figs. 1 and 2), infection risk (as measured by symptoms of upper respiratory tract infection [URTI] and increased congestion) may rise.
Whether this means that short- and long-distance specialists, who probably differ in the proportion of their training spent at higher intensities, also differ in immune status is unknown. A 6-month prospective study of competitive-level athletes preparing for Ironman competition [62] demonstrated an accumulation of differentiated and transition T cells at the expense of naïve T cells. This accumulation could compromise host protection against novel pathogens during periods of heavy training [63], especially when the athlete is at altitude [91, 92] and/or during excessive international travel [93]. Consistent with this, Southern Hemisphere athletes were reported to have a lower infection risk in their ‘off-season’ [64]. The opposite has also been reported, however [94].

Competition has been reported not to pose any acute health risks to healthy athletes who come well-prepared and well-supplied [95], but immune suppression can occur within the post-race recovery period (electronic supplementary material [ESM] Table S1) [24, 25, 29–31, 35, 36, 40, 44, 47, 48, 50, 53, 55–58, 67, 69, 70, 95–155]. The observed decreases in WBC counts, for example, are unlikely to be wholly explained by plasma volume expansion, because the magnitude of the cell count differences is larger than the typical race-related change in plasma volume. The suggestion [104] that completing an Olympic-distance triathlon may reduce immunoglobulin A (IgA)-mediated immune protection at the mucosal surface has been supported by data obtained over repeated short-distance races [108]. As triathletes may be exposed to waterborne microorganisms during the swim, such a decrease in IgA-mediated immunity may increase the risk of post-race URTI [156, 157]. Neutrophil death [107] has been observed immediately after half-Ironman-distance competition in males. Significant alterations in oxidative stress and immunological markers have also been recorded 20 min after Ironman-distance competition [113].
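The plasma volume argument can be made concrete with a worked bound. Assume, conservatively, that a measured blood concentration is diluted in direct proportion to plasma volume expansion. In reality, cells circulate in whole blood, whose volume changes proportionally less, so the true dilution effect is smaller. Under that assumption, a plasma volume increase of ΔPV % can lower a concentration by at most 100 × (1 − 1/(1 + ΔPV/100)) %. The sketch below applies this to the 8.1–10.8 % post-Ironman increases quoted in Section 3. It is an illustration of the reasoning, not a method taken from the cited studies.

```python
def max_dilution_drop(pv_increase_pct: float) -> float:
    """Largest % fall in a concentration attributable to plasma volume expansion
    alone, assuming (conservatively) dilution in direct proportion to plasma volume."""
    if pv_increase_pct <= -100.0:
        raise ValueError("plasma volume change must be greater than -100 %")
    return 100.0 * (1.0 - 1.0 / (1.0 + pv_increase_pct / 100.0))


# Post-Ironman plasma volume increases quoted in the text (8.1-10.8 %).
for pv in (8.1, 10.8):
    print(f"PV +{pv} % -> at most {max_dilution_drop(pv):.1f} % fall")
```

The bound comes out at about 7.5–9.7 %. Under these assumptions, a post-race WBC fall well beyond roughly 10 % cannot be attributed to haemodilution alone.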

Nonetheless, such immune system alterations, as well as the muscle damage and metabolic changes that are induced by Olympic-distance competition, decline rapidly [103, 109]. Five days after Ironman competition, all the oxidative stress markers that were assayed by Neubauer et al. [55–58] and Wagner et al. [122]—the changes in which may have partly been due to muscle damage [123]—had returned to baseline levels [129]. The extent to which any postulated ‘infection window’ may exist or persist once the athlete has finished competing appears to be affected by the existence of positive adaptive mechanisms. Such mechanisms, which may include upregulation of repair mechanisms and increased activity of the endogenous antioxidative system, are likely to be highly related to the individual’s training and performance status.

4.2 Oxidative Stress

Significant differences in the magnitude of oxidative stress markers [68] may be found when poorly trained and well-trained athletes, athletes with lower and higher antioxidant status, or even different periods of the training year [158] are compared. Even minor differences in training status among the same athletes can result in different alterations in markers of lipid peroxidation [55–58]. Data obtained from half-Ironman- and Ironman-distance athletes, as well as from controls [67], also suggest a dose-response relationship between oxidative enzyme adaptation and the response to ultra-endurance exercise. Although it is unclear exactly how triathlon training or race duration, intensity and/or frequency may affect the propensity for DNA damage [122], better training status may enhance protection against oxidative stress [112, 159].

4.3 Cardiovascular Responses

Other effects of triathlon training and/or competition with potential health-related repercussions include platelet and coagulation activation [64, 68, 119, 130, 138, 159] and other cardiovascular changes [74–78, 80, 132, 135, 143, 145, 150–152, 160–163]. Platelet activation (which may increase the risk of thromboembolic events) and markedly increased plasmin formation may occur during competitions lasting over 2 h [130, 138, 164]. Both appear to be triggered by run-induced mechanical stress on thrombocytes and/or by inflammation [130]. However, Olympic-distance triathlon was found to have no significant negative effects on either left ventricular function or myocardial tissue in adult males [151]. Nor was Olympic-distance competition found to affect the blood concentration of B-type natriuretic peptide (a marker of cardiac failure) in regularly trained triathletes [149]. Elevated levels of troponin and B-type natriuretic peptide were noted 45 min after both half-Ironman- and Ironman-distance races, and both markers correlated with decreased right ventricular ejection fraction [136, 144]. Although the levels of these indicators of myocardial injury returned to normal within 1 week, Ironman competition was reported [141] often to result in persistently raised cardiac troponin T (cTnT) levels, in agreement with Rifai et al. [140]. This increase in cTnT was associated with echocardiographic evidence of abnormal left ventricular function. Abnormal left ventricular function [144] may also increase with race distance [135, 143]. Although it generally disappears within 24 h [135], it may be linked to the occurrence of pulmonary oedema [165–167].

However, even when short-term right ventricular recovery appears complete, long-term training and competition may lead to myocardial fibrosis and remodeling in a small, genetically susceptible percentage of athletes [74–77, 168]. In theory, this might provide a foundation for atrial and ventricular arrhythmias and increase cardiovascular risk, particularly in older athletes. La Gerche et al. [144] found increased right ventricular remodeling in well-trained endurance athletes with a longer competitive history. Their results suggest a cumulative effect of repetitive ultra-endurance exercise on right ventricular change and possibly myocardial fibrosis. The long-term sequelae of the structural or other alterations that occur in the adult triathlete heart with training and competition [74–77] warrant further investigation. The long-term consequences of the transient functional abnormalities that have also been observed post-triathlon in children [134] are also unknown. More ventricular premature beats at the end of a maximal exercise test have been noted in well-trained adult triathletes than in controls [75]. However, it was not the triathletes with the best competition results who showed the most features of eccentric and concentric left ventricular hypertrophy, nor did the athletes with the greatest training volumes show the most extensive heart adaptations. Nonetheless, the triathlete who displays the first indications of evolution to a pathological hypertrophic and dilated cardiomyopathy, i.e. ventricular premature beats and other specific electrocardiographic and echocardiographic findings, is a candidate for ‘sudden cardiac death’ [75].
Acute changes in baseline hemodynamics and autonomic regulation (characterized by a decrease in stroke index, blood pressure, total peripheral resistance index, baroreceptor sensitivity, vagal modulation of the sinus node, and increased heart rate, cardiac index, and sympathetically-mediated vasomotor tone) that occur with competition may also make Ironman-distance athletes vulnerable to orthostatic challenge post-race [145, 169].

4.4 Other Responses with Potential Health Consequences

Other responses to triathlon training and racing with potential health consequences include changes in bone mineral density. One study of adolescent females [170] concluded that the generalised anatomical distribution of triathlon training load does not significantly enhance total bone mineral density. Junior male triathletes, on the other hand, exhibited lower bone mineral density than athletes from other sports [81]. They had significantly higher values than untrained controls in most femoral regions, but did not differ from controls at L2 and L3 of the lumbar spine. The authors concluded that high-volume, low-intensity training regimens induce no or only slight osteogenic effects, whereas a variable training protocol with short-lived but high-intensity forces has the greatest positive stimulatory effect on bone formation. The implications for fracture risk (e.g. in Ward's triangle, as a result of cycle falls) are unknown. However, thinner anterior tibiae and the presence of oedema on magnetic resonance imaging (MRI) appear to be precursors of stress fracture development [86]. In Ironman triathletes, the spectrum of abnormal MRI findings of the knee and shoulder was no greater than the age-related changes previously reported for other athletic populations and non-athletes [82, 171]. Little else is known about the extent to which triathletes' susceptibility to skeletal problems [81, 83, 170, 172, 173] is affected by training-induced modulation of circulating hormone levels [85, 87] and/or relative energy deficiency in sport [174]. Some triathletes exhibit disordered eating [175, 176] and may suffer from anorexia nervosa [177], bulimia nervosa [178], or other nutritional disorders [172, 173, 179], all of which may influence susceptibility to injury and/or illness.

5 Illness

Our knowledge of the degree to which the immunological and oxidative stress of training and racing is reflected in the occurrence of illness is limited. Only six groups [10–12, 64, 71, 94] have prospectively investigated illness in triathletes. In 1994, Vleck collected (mean ± SD) 25.1 ± 5.6 weeks of daily training diary data from Olympic-distance national squad athletes. The eight athletes concerned trained (mean ± SD) 8:10 ± 2:06 hh:mm per week and raced 20.3 ± 10.9 times, rating 6.4 ± 3.4 of these events as ‘best performances’. Training and injuries were recorded. The athletes also logged each occurrence of the most frequently cited symptom within each of Fry et al.’s 12 classes of putative overtraining symptoms [180–182]. These symptoms [12] were interpreted as symptoms of ‘illness’. The athletes logged 247 separate incidents. Delayed-onset muscle soreness (DOMS) was the most commonly reported symptom, followed by ‘heavy legs’, loss of appetite and then virus-related symptoms. Such symptoms coincided with self-diagnosed performance decrement on 15 % of occasions. Performance [135] also declined on 34.7 and 21.5 % of the occasions on which DOMS and headaches, respectively, were reported. It declined on fewer than 7 % of the occasions on which the athlete recorded each of the following: heavy legs, a sore throat, gastric problems, or viral infection. In 66.7 % of DOMS cases and 76.9 % of cases of heavy legs, the performance decrement could have been due to another illness-related symptom, or even to an injury. Interestingly, the athletes never reported a drop in performance on the same day that they reported a ‘stuffy nose’, ‘loss of appetite’, ‘chest cold’, ‘head cold’, ‘sleeplessness’ or ‘nausea’. The athletes stated neither the criteria they used to decide whether a drop in performance had occurred nor how training was interrupted or modified because of illness.
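Percentages such as ‘performance declined on 34.7 % of the occasions on which DOMS was reported’ are conditional rates that can be computed directly from diary entries. The Python below is a minimal sketch of that calculation, assuming a hypothetical diary format in which each day records a set of symptoms and whether a performance drop was logged. The entries are invented. As in the original analysis, a day with two symptoms counts towards both, so the rates show coincidence, not cause.

```python
from collections import defaultdict
from typing import Dict, Iterable, Set, Tuple

# Hypothetical diary day: (symptoms logged that day, performance drop logged?).
DiaryDay = Tuple[Set[str], bool]


def decrement_rate_by_symptom(days: Iterable[DiaryDay]) -> Dict[str, float]:
    """% of occasions on which each symptom coincided with a same-day performance drop."""
    logged: Dict[str, int] = defaultdict(int)
    with_drop: Dict[str, int] = defaultdict(int)
    for symptoms, performance_dropped in days:
        for symptom in symptoms:
            logged[symptom] += 1
            if performance_dropped:
                with_drop[symptom] += 1
    return {s: 100.0 * with_drop[s] / logged[s] for s in logged}


diary = [
    ({"DOMS"}, True),
    ({"DOMS", "heavy legs"}, False),
    ({"headache"}, True),
    ({"stuffy nose"}, False),
    ({"DOMS"}, False),
]
print(decrement_rate_by_symptom(diary))
```

Because co-occurring symptoms are counted for each symptom separately, separating their effects would require additional information. The text notes the same limitation for the 66.7 % of DOMS cases and 76.9 % of heavy-legs cases.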

Andersen et al. [94] used a slightly different illness definition in Ironman athletes. They defined illness as any health problem not related to the musculoskeletal system (e.g. respiratory tract infections, influenza or gastrointestinal infections, but not DOMS). Over 26 weeks, 156 cases affecting 104 athletes (i.e. 60 %) were reported, equating to 5.3 illnesses per 1,000 athlete-days. Nine percent of cases did not lead to any time loss, 34 % led to 1–3 days off, 36 % to 4–7 days off, 19 % to 8–28 days off and 3 % to more than 28 days off.
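Rates such as ‘5.3 illnesses per 1,000 athlete-days’, or the injury rates per 1,000 training and racing hours discussed in Section 6, all divide a case count by an exposure total. The Python below sketches that calculation. The cohort in the example (20 illnesses, 30 athletes, 26 weeks) is hypothetical. Andersen et al.'s own exposure denominator is not given in this text, so their figure is not recomputed here.

```python
def incidence_per_1000(cases: int, exposure: float) -> float:
    """Cases per 1,000 units of exposure (e.g. athlete-days or training hours)."""
    if exposure <= 0:
        raise ValueError("exposure must be positive")
    return 1000.0 * cases / exposure


# Hypothetical cohort: 20 illnesses among 30 athletes followed for 26 weeks (182 days).
athlete_days = 30 * 26 * 7
print(round(incidence_per_1000(20, athlete_days), 2))
```

The choice of exposure unit matters. The same case count gives very different-looking rates per athlete-day and per training hour, which is one reason values from studies using different units cannot be compared directly.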

Medical diagnosis of illness can itself be problematic [183]. It is unclear to what extent upper respiratory symptoms in triathletes are due to infection or to non-infectious inflammatory conditions that mimic a URTI [183]. Of 25 cases of URTI symptoms reported for 63 triathletes and cyclists [72], 28 % each were due to rhinovirus and influenza (A and B), 16 % to parainfluenza, 8 % each to Streptococcus pneumoniae and coronavirus, and 4 % each to Epstein–Barr virus reactivation and metapneumovirus. The remaining 4 % were unaccounted for and could have been due to local drying of the mucosal surfaces and increased exposure to airborne pathogens [184], to bronchial hyperreactivity (the rate of development of which has been reported to be 195–286 faster in elite athletes than is normal for asthma development [72, 73, 185–187]), or to muscle damage-induced migration of inflammatory cytokines [183]. The incidence of URTI in both the triathletes and the untrained controls assessed in a year-long study [64] was lower than the international average of two per year.

Thus, although the immune changes resulting from the stress of triathlon training and/or racing probably do not usually alter overall disease susceptibility to a major degree [156, 157], the extent of their effect is unclear. However, the conditions involved in open-water swimming may increase the risk of specific infections [187] such as Acanthamoeba keratitis [188], and of uncommon diseases such as schistosomiasis [189, 190] and leptospirosis. Leptospirosis has been contracted by triathletes training [191] and competing [192–197] in contaminated surface water. Crucially, the affected athletes were diagnosed as having been infected only after a leptospirosis outbreak [198] had been independently identified at the race locality. The clinical presentation of leptospirosis varies and may resemble that of common febrile illnesses, so illness in triathletes may also be misdiagnosed [199, 200]. It has recently been highlighted that an inappropriate management strategy (with potentially negative repercussions for rehabilitation time) may then be implemented [199]. However, the extent to which such issues occur is unknown. At present, the overall outcomes of illness in triathletes, in terms of economic cost, training time loss and/or even performance decrement [201], are unquantified. Only (potentially) indirect evidence exists of the extent to which illness may lead to changes in training load [12, 202]. The national squad triathletes examined by Vleck in 1994 [12, 202] logged lower average weekly training durations than were expected of top-level athletes of that time [9]. Unfortunately, because illness and injury can overlap, it can be difficult to ascertain the real outcome of either in isolation [12].

6 Injury

Injury ‘causing cessation of training for at least one day, reduction of training, or seeking of medical aid’ has been reported to affect 29 % [13] to 91 % [202, 203] of adult triathletes at any one time (ESM Table S2) [12, 14, 32, 39, 42, 94, 204–226, 228–232, 238]. The wide range of reported values is likely due to differences in methodology and surveillance between studies, which have not been standardized as the International Olympic Committee (IOC) guidelines recommend [233]. Other methodological difficulties with the triathlon injury literature have been reviewed elsewhere [234–237] and are not repeated here. Only one retrospective study has compared the prevalence of training-related injury between sex, ability-level and event-distance specialization groups using the same definition and reporting methods in each case [12, 13], and it found no difference. No one has yet conducted a similar comparative study across all the triathlon age groups. Nor does the proportion of athletes who present for medical aid at sprint-distance events [32] appear to be influenced by age, sex or competitive experience. Whether the same applies to all other triathlon distances and formats [238] is unknown.

Obtaining meaningful injury incidence values for triathletes is challenging. This is partly because of the difficulty of quantifying and weighting overall training stress across (at least) swimming, cycling, running and weight-training [239]. The typical presentation and characteristics of overuse injuries also make them difficult to record in epidemiological studies that use time-loss definitions [240]. No sudden death rates exist for training, and there is no long-term international registry of sudden deaths during races. Harris et al. [241] estimated the sudden death rate for USA Triathlon-sanctioned events over 2006–2008, involving 959,214 participants, at 1.5 (0.9–2.5) deaths per 100,000 participations, with a (mean ± SD) age at death of 42.8 ± 10.1 years. The rate was greater, though not significantly so, in males and in races with more participants. When data from 2003 to 2011 (for triathlon, duathlon, aquathlon, and off-road triathlon events) were examined [242], a figure of approximately one death per 76,000 participants per year was obtained. The absolute number of fatalities increased with participation rates. Most deaths were rated as sudden cardiac death events, and the resulting rate was higher than those reported for half marathons and marathons between 2000 and 2010 [243] (0.28 and 0.52 per 100,000, respectively). According to Harris et al. [241], sudden death during swimming accounted for 1.4 (0.8–2.3) deaths per 100,000 participations per year. The equivalent values for triathlon cycling and running were 0.1 (0.01–0.07) and 0.0 (0.0–0.3). Slightly, but not significantly, higher death rates were recorded for races with short (<750 m) or longer (>1,500 m) swims than for those with 750–1,500 m swims; the reason is unknown.
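The death rates above are quoted on different scales (‘per 100,000 participations’ and ‘one per 76,000 participants’). The Python below puts them on a common scale using only figures from the text. The second calculation is a back-of-envelope estimate of the number of deaths implied by the rounded 1.5 per 100,000 rate. It is not a count reported here.

```python
def per_100k(events: float, participations: float) -> float:
    """Convert a count over a number of participations to a rate per 100,000."""
    if participations <= 0:
        raise ValueError("participations must be positive")
    return 100_000.0 * events / participations


# 'One death per 76,000 participants' expressed per 100,000.
print(round(per_100k(1, 76_000), 2))
# Approximate number of deaths implied by 1.5 per 100,000 over 959,214 participants.
print(round(1.5 * 959_214 / 100_000, 1))
```

One death per 76,000 corresponds to about 1.32 per 100,000, close to the 1.5 (0.9–2.5) estimate and roughly two to five times the marathon and half-marathon rates quoted. The implied count of about 14 deaths is only approximate, because the rate itself is rounded.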

Self-assessed overuse injury incidence rates of 0.74–76.7 per 100 athletes and of 10.0–23.8 per 1,000 training and racing hours (depending on the month of the year) have been obtained prospectively for small (n = 11–43) samples of Olympic-distance triathletes [12]. Incidence values of 1.39 and 18.45 per 1,000 training and racing hours, respectively, have also been obtained over various distances [232]. These injuries were not confirmed by medical diagnosis. A rate of 20.1 presentations for medical assistance per 1,000 h of sprint-distance, Olympic-distance and fun-distance (i.e. 0.15–0.3 km swim, 7–10 km cycle, 1–3 km run) competition has been recorded [238]. Although few directly comparable data exist, injury rates are usually thought to be higher during competition than in training [94, 221, 222, 232]. The incidence of (traumatic) crowding-, hydration- and/or heat-related injuries in particular is also thought to be higher in competition (ESM Table S3) [39, 203, 206–209, 212–214, 222, 226, 229, 230, 238], although no training-related studies appear to have assessed these issues. Because most larger-scale studies have assessed injuries in little detail, it is also difficult to judge how widespread those problems actually are that have been reported only or mostly in case studies (e.g. ESM Table S4) [41, 54, 166, 177, 188, 191, 196, 200, 244–274, 321–324] and that may to some extent be ‘triathlon specific’.
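Incidence per 1,000 h is the number of new injuries divided by total exposure time, so training and racing exposure must be tallied separately. The sketch below shows the calculation. It assumes a hypothetical diary record (`ExposureRecord`, with one row per athlete and context), and all the numbers in it are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class ExposureRecord:
    """Hypothetical diary entry: hours and new injuries for one athlete in one context."""
    athlete_id: str
    context: str        # e.g. "training" or "racing"
    hours: float
    new_injuries: int


def incidence_per_1000_h(records: list[ExposureRecord]) -> dict[str, float]:
    """New injuries per 1,000 h of exposure, computed separately for each context."""
    hours: dict[str, float] = {}
    injuries: dict[str, int] = {}
    for r in records:
        hours[r.context] = hours.get(r.context, 0.0) + r.hours
        injuries[r.context] = injuries.get(r.context, 0) + r.new_injuries
    return {c: 1000 * injuries[c] / h for c, h in hours.items() if h > 0}


def incidence_per_100_athletes(new_injuries: int, athletes: int) -> float:
    """New injuries per 100 athletes over the same observation period."""
    return 100 * new_injuries / athletes


records = [
    ExposureRecord("A1", "training", 310.0, 1),
    ExposureRecord("A1", "racing", 6.0, 0),
    ExposureRecord("A2", "training", 290.0, 0),
    ExposureRecord("A2", "racing", 4.0, 1),
]
print(incidence_per_1000_h(records))
```

Here a single injury in 10 racing hours gives 100 per 1,000 h, compared with about 1.7 per 1,000 h for one injury in 600 training hours. This illustrates how the small racing-exposure denominator inflates per-hour competition rates and makes them sensitive to single cases.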

No prospective intergroup (age, sex, ability or event distance) comparisons of injury incidence rates exist for the endurance base, pre-competitive and competitive periods. Only one study [230] has investigated the effect of race distance and athlete ability level on the temporal occurrence of race injuries. This topic has clear implications for the depth and timing of medical support. Wind speed, humidity and dry-bulb temperature varied widely in that study, but it is unclear whether this variation occurred within each race or between events. Injury (defined in this case as a presentation for medical assistance) affected 10.8 % of half-Ironman and 37.7 % of Ironman-distance age-group starters. It had previously been reported to affect 15–25 % of elite Ironman-distance competitors [275, 276]. Most athletes took 5–9 h to finish. Of the half-Ironman injuries, 72.2 % occurred between hours 6 and 7, during which time medical personnel needed to be prepared for 78 presentations for assistance per 1,000 race starters. No equivalent rates exist for shorter-distance events. The proportion of injuries that were severe was higher during the Ironman event than during the half-Ironman, and was calculated to be (mean ± 95 % confidence interval) 38.2 ± 6.0 % of those receiving treatment at any given time. Treatment duration increased with finishing time. The highest proportion of severe injuries occurred in half-Ironman athletes who took longer to finish than the rest of their cohort, and in Ironman athletes who were faster than the rest of theirs.
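The peak-window figure appears to follow directly from the overall rate. If 10.8 % of half-Ironman starters presented, that is 108 presentations per 1,000 starters, and 72.2 % of 108 is about 78. A race organiser could scale these figures to a field of any size, as in the sketch below, which uses a hypothetical field of 1,500 starters. This assumes that the same overall rate and time profile would hold at another event, which is not guaranteed: conditions varied widely in the original study, and no equivalent rates exist for shorter races.

```python
def expected_presentations(starters: int, rate_per_1000: float) -> float:
    """Expected presentations for medical assistance, given a rate per 1,000 starters."""
    return starters * rate_per_1000 / 1000


def window_load(starters: int, overall_rate_per_1000: float, share_in_window: float) -> float:
    """Presentations expected within one time window, given its share of all presentations."""
    return expected_presentations(starters, overall_rate_per_1000) * share_in_window


# Per 1,000 starters: 108 overall, of which 72.2 % fall between hours 6 and 7.
print(round(window_load(1000, 108, 0.722)))  # 78

# Hypothetical field of 1,500 starters, assuming the same rate and time profile.
print(round(window_load(1500, 108, 0.722)))  # 117
```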

Contusions, abrasions/grazes and blisters are the most commonly reported short-distance race injuries [238]. At half-Ironman events, dehydration (50.8 %) and muscle cramps (36.1 %) are the primary medical diagnoses. At an Ironman-distance event, the two have been reported in almost equal proportions (38.9 vs. 37.7 %) [230]. It is unknown what percentage of so-called race injuries are actually pre-existing training injuries that competition has exacerbated. Injury outcome after a race has finished (e.g. death from complications arising from chest infection) is also not described (ESM Table S5) [12–14, 32, 206, 208, 211, 212, 214–218, 220, 222, 226]. Gradual-onset overuse injuries are the most commonly reported training injuries. They have been reported to occur in approximately three times as many athletes as acute injuries [209, 215, 232, 277] (ESM Table S6) [12, 14, 94, 203, 206, 209–211, 213, 214, 216, 217, 221, 222, 225, 226, 232, 237, 238]. The true ratio may be higher, given that retrospective recall is generally poorer for overuse injuries than for traumatic injuries [232].

Most athletes rate their training-related injuries as ‘minor’ to ‘moderate’ (i.e. incurring up to 21 days off) when a time-loss definition is used. However, according to Finch “it is often the medically less severe injuries that are considered to be more severe by the athlete, although they do not require medical treatment, as they have the potential to severely limit an athlete’s performance” [278]. Many injured triathletes may continue training [12, 217, 226, 277]. Running, cycling and swimming training is modified in 17–21, 26.2–75 and 42–78 % of injury cases [202], respectively, and injury recurrence is probably a major issue [202, 279].

We have highlighted that the influence of certain injury risk factors may differ with sex, format and event-distance specialization (Table 1 and Sect. 2) [12, 280]. Little research has examined which putative risk factors are most strongly linked to injury in each group (Table 4 and ESM Tables S4 and S5) [12–14, 32, 41, 54, 166, 177, 188, 191, 196, 200, 203, 206–222, 224–226, 232, 238, 244–274, 281, 315, 317, 318, 321–324]. Various potential (and even triathlon cross-training-specific) mechanisms of injury have been speculated upon [217, 236, 237, 282–284], but they are largely unverifiable. For example, drowning was the reported cause of death for the swim fatalities recorded by Harris et al. [241]. However, the accurate measures of risk exposure needed to establish the aetiology of drowning are lacking [285]. The actual cause could be something else (e.g. autonomic conflict [286, 287], deterioration in swimming performance in cold water [288], swim-induced pulmonary oedema [249], or hyperthermia). It is noteworthy that all the swim deaths occurred in open water. This raises the question of whether some aspect of mass-participation competition is significant [286]. Periodic health screening (such as the IOC Periodic Health Evaluation [289]) is not routinely implemented in the sport of triathlon to screen for risk factors for sudden cardiac death [290]. Only one abstract on the topic has been published thus far, so the extent to which triathletes enter races with pre-existing medical conditions is unknown. Importantly, of the sudden deaths reported by Harris et al. [241], seven of nine athletes were found on autopsy to have had cardiovascular abnormalities. Six had mild left ventricular hypertrophy. Two years later, the USA Triathlon Fatality Incidents Study [242] concluded, despite incomplete access to relevant medical data, that most non-traumatic deaths were likely to have been due to sudden cardiac death.
However, injuries are usually attributed to being “a result of failure to adjust pace within safe limits for specific environmental conditions” [209, 237], or to “inadequate implementation of (race) safety precautions” [247].

7 Training and Performance Status Indicators

It has been said that “a fine line exists between the level of training that is required for optimal performance, and that which induces problems” [291]. Laboratory-based (physiological, immunological, haematological, cardiorespiratory and biochemical) testing may therefore sometimes be conducted to ascertain an individual’s health status. Only some markers have been shown to be related to triathlon performance and thereby to possess criterion validity (Table 5) [10–12, 29, 30, 65, 68, 90, 145, 223, 292–305]. It is unclear whether they are sensitive enough to detect a drop in performance before it becomes competitively meaningful [306]. To date, peak power output and blood pressure variability appear to be the only variables correlated with triathlon performance that have been used [300] in prospective investigations of the links between training and health in triathletes [10–12, 16, 304]. Peak power output did not appear sensitive enough to detect the early signs of overreaching in well-trained males [300]. Whether it responds later, to more prolonged exhaustive training, is unknown.

In any case, by the time an underlying problem has been confirmed in the laboratory, it may be too late. Ideally, the individual’s distress markers should be monitored far more regularly, alongside his/her training, on an ongoing basis. For heart rate variability (HRV) data [305], for example, it has been observed that attempting to diagnose an athlete’s physical status from records obtained on a single isolated day may be a somewhat meaningless exercise. In contrast, weekly and rolling averages of RR-interval-related values, together with the coefficient of variation of HRV, were shown to differ between an athlete who developed non-functional overreaching and a control. (The RR interval is the interval between the R-wave peaks of successive QRS complexes on an electrocardiogram.) These results complemented data obtained in swimmers, in whom a shift in autonomic balance towards sympathetic predominance 1 week earlier was linked to increased risk of URTI and muscular problems [307].
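The contrast between single-day readings and smoothed values can be illustrated with a short sketch. It assumes one morning RR-interval recording per day and uses the natural log of RMSSD (root mean square of successive RR differences). lnRMSSD is a commonly used HRV index, but it is not necessarily the index analysed in [305]. The window lengths and the simulated recordings are hypothetical.

```python
import math
import random
from statistics import mean, stdev


def rmssd(rr_ms: list[float]) -> float:
    """Root mean square of successive differences between RR intervals (ms)."""
    diffs = [b - a for a, b in zip(rr_ms, rr_ms[1:])]
    return math.sqrt(mean(d * d for d in diffs))


def rolling_mean(values: list[float], window: int = 7) -> list[float]:
    """Mean of each run of `window` consecutive days (first value at day window - 1)."""
    return [mean(values[i - window + 1:i + 1]) for i in range(window - 1, len(values))]


def weekly_means(values: list[float], week: int = 7) -> list[float]:
    """Mean of each complete, non-overlapping week."""
    return [mean(values[i:i + week]) for i in range(0, len(values) - week + 1, week)]


def coefficient_of_variation(values: list[float]) -> float:
    """Sample SD expressed as a percentage of the mean."""
    return 100 * stdev(values) / mean(values)


# Hypothetical data: one simulated 120-beat RR recording per morning for 14 days.
rng = random.Random(42)
daily_recordings = [[rng.gauss(1000, 40) for _ in range(120)] for _ in range(14)]
daily_ln_rmssd = [math.log(rmssd(rr)) for rr in daily_recordings]

print(weekly_means(daily_ln_rmssd))                        # one value per week
print(rolling_mean(daily_ln_rmssd)[-1])                    # latest 7-day rolling mean
print(coefficient_of_variation(daily_ln_rmssd[-7:]))       # CV over the last week
```

The rolling mean and CV are the kind of smoothed summaries that, unlike a single day's reading, can track a gradual shift in an athlete's HRV profile.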

Although HRV holds promise as an indicator of maladaptation, as do baroreflex sensitivity and blood pressure variability [145], monitoring it may prove realistic for only some athletes. Dolan et al. [3] reported that only 20.9 % of triathletes used a heart rate monitor. In contrast, 45.5 % kept a training diary [12]. Compliance with training diaries is therefore likely to be higher. The open questions are whether the right things are being recorded in the diary, and how the data are being analyzed. Scores on questionnaires such as the Daily Analysis of Life Demands for Athletes (DALDA) [90], the Recovery-Stress Questionnaire for Athletes (RESTQ-Sport) [303], the Perceived Stress Scale (PSS), the Training Distress Scale (TDS), the Athlete Burnout Questionnaire (ABQ) and the Multi-component Training Distress Scale (MTDS) [10, 11], as well as on a combination of shortened versions of the Profile of Mood States (such as the Brunel Mood Scale [BRUMS] and the Profile of Mood States for Children [POMS-C]) [10, 12, 300] and various signs and symptoms of illness and injury [12], assess mood disturbance, perceived stress and training or other distress symptoms to varying degrees. All of these could potentially be incorporated into such logs.

Main et al. [11] used linear mixed modelling to show that various combinations of training factors and psychological stressors (monitored weekly via the PSS, BRUMS, TDS and ABQ) were linked with signs and symptoms of both illness and injury in age-group triathletes. The number of training sessions and of completed run sessions per week, as well as perceived programme difficulty (see Tables 1 and 4), had significant effects on signs and symptoms of URTI, injuries or minor aches and pains, although less so than individual athlete scores on the PSS [308]. We note that the TDS itself was later validated against performance in a laboratory time-to-fatigue trial. The TDS had been developed from the list of distress symptoms that Fry et al. [309] identified from interviews with fit individuals exposed to repeated intense training. TDS responses were also compared between a high-intensity training group and a control group of triathletes. Decreases in running performance in the training group, but not the control group, were reflected in the athletes’ TDS scores [310]. However, the study by Main et al. [11] assessed neither actual nor self-assessed performance.
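For readers unfamiliar with the technique, a linear mixed model for weekly diary data can be written in the generic random-intercept form below. This is an illustrative specification only. It assumes a single symptom score as the outcome and uses three of the predictors named above; it is not the exact model fitted by Main et al. [11].

$$
y_{ij} = \beta_0 + \beta_1\,\mathrm{Sessions}_{ij} + \beta_2\,\mathrm{Runs}_{ij} + \beta_3\,\mathrm{PSS}_{ij} + u_{0j} + \varepsilon_{ij},
\qquad u_{0j} \sim \mathcal{N}(0,\sigma_u^2), \quad \varepsilon_{ij} \sim \mathcal{N}(0,\sigma^2)
$$

Here $y_{ij}$ is the symptom score of athlete $j$ in week $i$, and the $\beta$ terms are fixed effects shared by all athletes. $u_{0j}$ is a random intercept that gives each athlete his/her own baseline level of symptom reporting. This random intercept is what allows repeated weekly observations from the same athlete to be analysed together without treating them as independent.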

Potential indicators of the fitness-fatigue response or of performance (as, indeed, training-related risk factors for injury and illness) are likely to function better if they are tailored to the individual athlete. Vleck [12] retrospectively calculated individual-specific peak performance norms for each of eight national squad triathletes. The norms covered various indicators on Fry et al.’s (longer) 1991 list [180, 181] of potential overtraining symptoms. Although the study lasted approximately 6 months, these norms could be obtained from an average of only six ‘best performance’ occasions rather than the recommended eight [311], which underlines the difficulty of producing such norms. The extent to which the weekly values for each distress indicator diverged from the individual athlete’s peak performance norm was then modelled using binary logistic regression, together with composite training load scores and self-reports of performance decrement. Several terms increased the predictive power of the model for performance decrement: the combination of the heavy legs and DOMS scores for the same week, the composite appetite score for the previous week, the POMS-C confusion factor score for both 2 and 3 weeks before, and the POMS-C anger factor score for the previous week. New overuse injury had previously been shown to be associated with an increase in combined weighted cycle and run training at higher intensity levels 2 weeks prior to onset. Interestingly, incorporating derived training:stress recovery variables for each athlete into the model did not improve prediction. This may have been due to the difficulty of producing valid, individual-specific indices that account for relative, rather than absolute, changes over time in the training stress to which each athlete is exposed.
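The norm-and-deviation step described above can be sketched as follows. The sketch makes three assumptions:

* a norm is the mean of an indicator over the weeks flagged as ‘best performance’ occasions;
* deviations are simple differences from that norm;
* lagged copies of each deviation series are offered to the logistic regression as candidate predictors.

The function names, the averaging rule, the scores and the regression coefficients are all hypothetical, and Vleck [12] may have computed these quantities differently.

```python
import math
import warnings
from statistics import mean

MIN_OCCASIONS = 8  # recommended number of 'best performance' occasions [311]


def individual_norm(weekly_values: list[float], best_weeks: list[int]) -> float:
    """Athlete-specific norm (assumed here): mean indicator value over best-performance weeks."""
    values = [weekly_values[w] for w in best_weeks]
    if len(values) < MIN_OCCASIONS:
        warnings.warn(f"norm based on {len(values)} occasions (< {MIN_OCCASIONS})")
    return mean(values)


def deviations(weekly_values: list[float], norm: float) -> list[float]:
    """Weekly divergence of the indicator from the athlete's own norm."""
    return [v - norm for v in weekly_values]


def lagged(series: list, lag: int) -> list:
    """Shift a weekly series back by `lag` weeks; None where no earlier value exists."""
    return [None] * lag + series[:len(series) - lag]


def logistic_probability(features: list[float], coefs: list[float], intercept: float) -> float:
    """P(performance decrement) from a binary logistic model with already-fitted coefficients."""
    z = intercept + sum(c * x for c, x in zip(coefs, features))
    return 1 / (1 + math.exp(-z))


# Hypothetical 'heavy legs' scores for 10 weeks; best-performance weeks 1, 4 and 8.
heavy_legs = [3, 2, 4, 5, 2, 3, 6, 5, 2, 4]
norm = individual_norm(heavy_legs, [1, 4, 8])   # warns: only 3 occasions
dev = deviations(heavy_legs, norm)
dev_prev_week = lagged(dev, 1)

# Hypothetical coefficients for this week's and last week's deviation.
print(logistic_probability([dev[-1], dev_prev_week[-1]], [0.4, 0.2], -1.0))
```

The warning reflects the practical problem noted above: with fewer best-performance occasions than recommended, the norm itself is uncertain.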

Despite its problems (which may partly reflect the use of inappropriate parameters to indicate training strain and performance), the Banister ‘fitness-fatigue’ model has also been used [304], in this case to examine the effect of training on self-perceived fatigue and anxiety. The study is noteworthy because self-report measures arguably exhibit reliable dose-response relationships with training load. Self-assessment of fatigue also circumvents the problem of obtaining sufficient race or time-trial performance data for the modelling process. Both measures were found to hold potential for the early detection of training-related problems.
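The Banister model treats each day’s training load as an impulse that raises both a slowly decaying ‘fitness’ component and a faster-decaying ‘fatigue’ component. A minimal sketch is given below. The gains and time constants are placeholder values; in practice they are fitted to each athlete’s data. The modelled output could equally be a self-report score, as in [304], rather than race performance.

```python
import math


def banister(loads: list[float], p0: float, k1: float, k2: float,
             tau1: float, tau2: float) -> list[float]:
    """Modelled output p(t) for each day t, given daily training loads w(s):

    p(t) = p0 + k1 * sum_{s<t} w(s) exp(-(t-s)/tau1) - k2 * sum_{s<t} w(s) exp(-(t-s)/tau2)
    """
    out = []
    for t in range(len(loads)):
        fitness = sum(loads[s] * math.exp(-(t - s) / tau1) for s in range(t))
        fatigue = sum(loads[s] * math.exp(-(t - s) / tau2) for s in range(t))
        out.append(p0 + k1 * fitness - k2 * fatigue)
    return out


# One hypothetical session (load 100) on day 0, followed by 20 rest days.
# Placeholder parameters: fatigue has the larger gain but the shorter time constant.
p = banister([100] + [0] * 20, p0=0.0, k1=1.0, k2=2.0, tau1=42.0, tau2=7.0)
print([round(x, 1) for x in p[:8]])
```

With these placeholder values the modelled output falls below baseline immediately after the session. It rises above baseline only from day 6 onwards, once the fatigue component has decayed. This delayed positive effect is the dose-response behaviour the model is designed to capture.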

Feelings of fatigue, in addition to loss of performance, can have a major impact on the athlete’s self-efficacy. Dynamic systems modelling of performance-related self-efficacy, combined with mediation analyses of ‘being in shape’ and ‘fatigue/lack of energy’, has therefore also been used to track longitudinal training adaptation in two elite female athletes [303, 312]. In this case, the model required a ‘norm’ for each RESTQ-Sport scale, and this was obtained by questioning the athlete rather than from long-term data collection. Positive effects of training on self-efficacy were observed. These were partly explained by feelings of ‘being in shape’ and suppressed by feelings of ‘fatigue/lack of energy’. Promisingly, the relationship between lagged training load and ‘fatigue/lack of energy’ was modified, particularly in the temporal proximity of an injury. No attempt was made to actually predict injury or illness. Nevertheless, the dynamic systems approach may hold particular promise for modelling the relationships between training and illness in triathletes. This is because it avoids the potential problems that arise when athletes or coaches (as opposed to researchers) rely mainly on visual analysis [10] of graphical profiles. Visual analysis is easily done and facilitates athlete–coach discussion, so it is ‘friendly’. However, it may not account for factors that mask the true relationship between explanatory and outcome variables, or for auto-correlation between successive observations. Nor can it quantify dose-response relationships between training/racing and signs and symptoms of illness/injury, or their temporal variation. This complicates the design of an appropriate intervention programme.
It also means that much work remains to be done in this field before clear guidelines can be arrived at. Such guidelines would need to set out what the athlete should do, and what he/she should monitor, if health and performance are to be maximised.
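Auto-correlation is easy to quantify even when it is hard to see on a graph. The sketch below computes the lag-k autocorrelation of a weekly series, using hypothetical ‘fatigue/lack of energy’ scores. A value well above zero suggests that each week’s score largely carries over from the previous week. In that case, successive diary entries should not be treated as independent observations when they are related to training load.

```python
from statistics import mean


def autocorrelation(series: list[float], lag: int = 1) -> float:
    """Sample autocorrelation at the given lag (undefined for a constant series)."""
    m = mean(series)
    dev = [x - m for x in series]
    num = sum(dev[t] * dev[t + lag] for t in range(len(series) - lag))
    den = sum(d * d for d in dev)
    return num / den


# Hypothetical weekly fatigue scores that drift slowly upwards.
fatigue = [2, 2, 3, 3, 4, 4, 5, 5, 6, 6]
print(round(autocorrelation(fatigue), 2))  # 0.7
```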

8 Conclusion

Neither the stress to which triathletes subject themselves nor what this means for their wellbeing has been comprehensively evaluated. Few scientific data are available to help triathletes, most of whom are older age-groupers, balance the multi-discipline training that their sport requires. Any negative effects of racing on immunological, oxidative, cardiovascular and humoral parameters appear, for the majority of athletes, to be transient and non-severe. For most athletes, injury and illness incurred whilst training also appear to be of minor or moderate severity. However, injury recurrence rates have not been investigated, and the long-term effects of triathlon training and racing on health are relatively unknown. For both to be fully elucidated, certain issues need to be addressed (Table 6) [243, 313]. These include a consensus statement on the definition and reporting of both first-time and recurring injury and illness, and an international registry for sudden death incidents.

Some clues exist as to whether the influence of specific risk factors for maladaptation differs with athlete attributes such as sex, age group and event-distance specialization. Both injury and infection risk may be greater during periods of higher-intensity work. They may also be greater at specific points within competition (e.g. when fatigue is setting in). These clues should be followed up by (possibly training diary-based) longitudinal prospective studies. Such studies would allow more comprehensive evaluation of the risk factors for, and warning signs of, any negative outcomes of training and racing stress. Better strategies could then be developed to manage any negative health issues arising from triathlon training and racing.

References

Bentley DJ, Millet GP, Vleck VE, et al. Specific aspects of contemporary triathlon: implications for physiological analysis and performance. Sports Med. 2002;32(6):345–59.

USA Triathlon. USA Triathlon membership breakdown. June 2012. Available at: http://www.usatriathlon.org/about-multisport/demographics.aspx. Accessed 1 Mar 2013.

Dolan SH, Houston M, Martin SB. Survey results of the training, nutrition, and mental preparation of triathletes: practical implications of findings. J Sports Sci. 2011;29(10):1019–28.

TribeGroup LLC. The mind of the triathlete: market research report 2009. Available at: http://www.usatriathlon.org/about-multisport/demographics.aspx. Accessed 1 Mar 2013.

Millet GP, Vleck VE, Bentley DJ. Physiological differences between cycling and running: lessons from triathletes. Sports Med. 2009;39(3):179–206.

Bonacci J, Chapman A, Blanch P, et al. Neuromuscular adaptations to training, injury and passive interventions: implications for running economy. Sports Med. 2009;39(11):903–21.

Banister EW, Carter JB, Zarkadas PC. Training theory and taper: validation in triathlon athletes. Eur J Appl Physiol Occup Physiol. 1999;79(2):182–91.

Millet GP, Candau RB, Barbier B, et al. Modelling the transfers of training effects on performance in elite triathletes. Int J Sports Med. 2002;23(1):55–63.

Vleck VE, Bentley DJ, Cochrane T. L’entraînement en triathlon: synthèse et perspectives de recherche [Triathlon training programmes: overview and recommendations for future research]. Sci et Mot. 2003;50:33–53.

Main LC, Landers GJ. Overtraining or burnout: a training and psycho-behavioural case study. Int J Sports Sci Coach. 2012;7(1):23–32.

Main LC, Landers GJ, Grove JR, et al. Training patterns and negative health outcomes in triathlon: longitudinal observations across a full competitive season. J Sports Med Phys Fitness. 2010;50(4):475–85.

Vleck VE. Triathlon training and injury analysis: an investigation in British National Squad and age-group triathletes. 1st ed. Germany: Verlag Dr Mueller (now LAP LAMBERT Academic Publishing, AV Akademikerverlag GmbH & Co.); 2010.

Vleck VE, Bentley DJ, Millet GP, et al. Triathlon event distance specialization: training and injury effects. J Strength Cond Res. 2010;24(1):30–6.

Vleck VE, Garbutt G. Injury and training characteristics of male elite, development squad, and club triathletes. Int J Sports Med. 1998;19(1):38–42.

Buergi A, Vanderperre D, Jeanneret O. Training, Wettkampf und Coaching in der Karriereentwicklung von Weltklassetriathleten [Training, competition and coaching in the career development of world-class triathletes]. Schweiz Z Sportmed Sporttraumatol. 2013;61(3):5–9.

Neal CM, Hunter AM, Galloway SD. A 6-month analysis of training-intensity distribution and physiological adaptation in Ironman triathletes. J Sports Sci. 2011;29(14):1515–23.

Seiler S. What is best practice for training intensity and duration distribution in endurance athletes? Int J Sports Physiol Perform. 2010;5(3):276–91.

Taylor D, Smith M, Vleck V. Reliability of performance and associated physiological responses during simulated sprint-distance triathlon. Sci Cycl. 2012;1(1):21–9.

Binnie MJ, Landers G, Peeling P. Effect of different warm-up procedures on subsequent swim and overall sprint distance triathlon performance. J Strength Cond Res. 2012;26(9):2438–46.

Gonzalez-Haro C, Gonzalez-de-Suso JM, Padulles JM, et al. Physiological adaptation during short distance triathlon swimming and cycling sectors simulation. Physiol Behav. 2005;86(4):467–74.

Bernard T, Hausswirth C, Le Meur Y, et al. Distribution of power output during the cycling stage of a Triathlon World Cup. Med Sci Sports Exerc. 2009;41(6):1296–302.

Le Meur Y, Hausswirth C, Dorel S, et al. Influence of gender on pacing adopted by elite triathletes during a competition [published erratum appears in Eur J Appl Physiol. 2011;111(6):1231–3]. Eur J Appl Physiol. 2009;106(4):535–45.

Gillum TL, Dumke CL, Ruby BC. Muscle glycogenolysis and resynthesis in response to a half Ironman triathlon: a case study. Int J Sports Physiol Perform. 2006;1(4):408–13.

Laursen PB, Suriano R, Quod MJ, et al. Core temperature and hydration status during an Ironman triathlon. Br J Sports Med. 2006;40(4):320–5 (discussion 5).

Cuddy JS, Slivka DR, Hailes WS, et al. Metabolic profile of the Ironman World Championships: a case study. Int J Sports Physiol Perform. 2010;5(4):570–6.

Peeling PD, Bishop DJ, Landers GJ. Effect of swimming intensity on subsequent cycling and overall triathlon performance. Br J Sports Med. 2005;39(12):960–4 (discussion 964).

Millet GP, Vleck V. Triathlon specificity. In: Seifert L, Chollet D, Mujika I, editors. World book of swimming: from science to performance. New York: Nova Science Publishers Inc.; 2010.

Aragon-Vargas LF, Wilk B, Timmons BW, et al. Body weight changes in child and adolescent athletes during a triathlon competition. Eur J Appl Physiol. 2013;113(1):233–9.

Del Coso J, Gonzalez-Millan C, Salinero JJ, et al. Muscle damage and its relationship with muscle fatigue during a half-iron triathlon. PLoS One. 2012;7(8):e43280.

Margaritis I, Tessier F, Verdera F, et al. Muscle enzyme release does not predict muscle function impairment after triathlon. J Sports Med Phys Fitness. 1999;39(2):133–9.

Suzuki K, Peake J, Nosaka K, et al. Changes in markers of muscle damage, inflammation and HSP70 after an Ironman Triathlon race. Eur J Appl Physiol. 2006;98(6):525–34.

Gosling CM, Gabbe BJ, McGivern J, et al. The incidence of heat casualties in sprint triathlon: the tale of two Melbourne race events. J Sci Med Sport. 2008;11(1):52–7.

International Triathlon Union. ITU competition rules on medical issues. Available at: http://www.triathlon.org/uploads/docs/itusport_2013_medical_itu-competition-rules-on-medical-issues-1.pdf. Accessed 29 Mar 2014.

Laursen PB, Watson G, Abbiss CR, et al. Hyperthermic fatigue precedes a rapid reduction in serum sodium in an ironman triathlete: a case report. Int J Sports Physiol Perform. 2009;4(4):533–7.

Speedy DB, Noakes TD, Rogers IR, et al. Hyponatremia in ultradistance triathletes. Med Sci Sports Exerc. 1999;31(6):809–15.

Knechtle B, Wirth A, Baumann B, et al. An ironman triathlon does not lead to a change in body mass in female triathletes. Res Sports Med. 2010;18(2):115–26.

Speedy DB, Rogers I, Safih S, et al. Hyponatremia and seizures in an ultradistance triathlete. J Emerg Med. 2000;18(1):41–4.

Hew-Butler T, Anley C, Schwartz P, et al. The treatment of symptomatic hyponatremia with hypertonic saline in an Ironman triathlete. Clin J Sport Med. 2007;17(1):68–9.

Hiller WD. Dehydration and hyponatremia during triathlons. Med Sci Sports Exerc. 1989;21(5 Suppl):S219–21.

Speedy DB, Noakes TD, Rogers IR, et al. A prospective study of exercise-associated hyponatremia in two ultradistance triathlons. Clin J Sport Med. 2000;10(2):136–41.

Stefanko G, Lancashire B, Coombes JS, et al. Pulmonary oedema and hyponatraemia after an ironman triathlon. BMJ Case Rep. Epub 17 Aug 2009. doi:10.1136/bcr.04.2009.1764.

Richter S, Betz C, Geiger H. [Severe hyponatremia with pulmonary and cerebral edema in an Ironman triathlete]. Dtsch Med Wochenschr. 2007;132(36):1829–32.

Mountjoy M, Alonso JM, Bergeron MF, et al. Hyperthermic-related challenges in aquatics, athletics, football, tennis and triathlon. Br J Sports Med. 2012;46(11):800–4.

Speedy DB, Faris JG, Hamlin M, et al. Hyponatremia and weight changes in an ultradistance triathlon. Clin J Sport Med. 1997;7(3):180–4.

Speedy DB, Rogers IR, Noakes TD, et al. Diagnosis and prevention of hyponatremia at an ultradistance triathlon. Clin J Sport Med. 2000;10(1):52–8.

Speedy DB, Rogers IR, Noakes TD, et al. Exercise-induced hyponatremia in ultradistance triathletes is caused by inappropriate fluid retention. Clin J Sport Med. 2000;10(4):272–8.

McNaughton LR. Plasma volume responses associated with a sprint triathlon in novice triathletes. Int J Sports Med. 1989;10(3):161–4.

Knechtle B, Baumann B, Wirth A, et al. Male ironman triathletes lose skeletal muscle mass. Asia Pac J Clin Nutr. 2010;19(1):91–7.

Kimber NE, Ross JJ, Mason SL, et al. Energy balance during an ironman triathlon in male and female triathletes. Int J Sport Nutr Exerc Metab. 2002;12(1):47–62.

Speedy DB, Noakes TD, Kimber NE, et al. Fluid balance during and after an ironman triathlon. Clin J Sport Med. 2001;11(1):44–50.

Poulsen HE, Weimann A, Loft S. Methods to detect DNA damage by free radicals: relation to exercise. Proc Nutr Soc. 1999;58(4):1007–14.

Knez WL, Coombes JS, Jenkins DG. Ultra-endurance exercise and oxidative damage: implications for cardiovascular health. Sports Med. 2006;36(5):429–41.

Tao K, Davenport M. Deep venous thromboembolism in a triathlete. J Emerg Med. 2010;38(3):351–3.

Shah NR, Iqbal MB, Barlow A. Severe physical exertion, oxidative stress, and acute lung injury. Clin J Sport Med. 2011;21(6):537–8.

Neubauer O, Konig D, Kern N, et al. No indications of persistent oxidative stress in response to an ironman triathlon. Med Sci Sports Exerc. 2008;40(12):2119–28.

Neubauer O, Konig D, Wagner KH. Recovery after an Ironman triathlon: sustained inflammatory responses and muscular stress. Eur J Appl Physiol. 2008;104(3):417–26.

Neubauer O, Reichhold S, Nersesyan A. Exercise-induced DNA damage: is there a relationship with inflammatory responses? Exerc Immunol Rev. 2008;14:51–72.

Neubauer O, Reichhold S, Nics L, et al. Antioxidant responses to an acute ultra-endurance exercise: impact on DNA stability and indications for an increased need for nutritive antioxidants in the early recovery phase. Br J Nutr. 2010;104(8):1129–38.

Malm C. Susceptibility to infections in elite athletes: the S-curve. Scand J Med Sci Sports. 2006;16(1):4–6.

Horn PL, Pyne DB, Hopkins WG, et al. Lower white blood cell counts in elite athletes training for highly aerobic sports. Eur J Appl Physiol. 2010;110(5):925–32.

Diaz V, Montalvo Z, Banfi G. White blood cell counts in elite triathletes over four consecutive seasons. Eur J Appl Physiol. 2011;111(5):893–4.

Cosgrove C, Galloway SD, Neal C. The impact of 6-month training preparation for an Ironman triathlon on the proportions of naive, memory and senescent T cells in resting blood. Eur J Appl Physiol. 2012;112(8):2989–98.

Pool EJ, Robson PJ, Smith C, et al. In vitro interleukin-6 release in whole blood cultures in samples taken at rest from triathletes and professional rugby players. Eur J Appl Physiol. 2002;87(3):233–7.

Broadbent S. Seasonal changes in haematology, lymphocyte transferrin receptors and intracellular iron in Ironman triathletes and untrained men. Eur J Appl Physiol. 2011;111(1):93–100.

Rietjens GJ, Kuipers H, Hartgens F, et al. Red blood cell profile of elite Olympic Distance triathletes. A three-year follow-up. Int J Sports Med. 2002;23(6):391–6.

Gouarne C, Groussard C, Gratas-Delamarche A, et al. Overnight urinary cortisol and cortisone add new insights into adaptation to training. Med Sci Sports Exerc. 2005;37(7):1157–67.

Knez WL, Jenkins DG, Coombes JS. Oxidative stress in half and full Ironman triathletes. Med Sci Sports Exerc. 2007;39(2):283–8.

Medina S, Dominguez-Perles R, Cejuela-Anta R, et al. Assessment of oxidative stress markers and prostaglandins after chronic training of triathletes. Prostaglandins Other Lipid Mediat. 2012;99(3–4):79–86.

Banfi G, Migliorini S, Pedroni F, et al. Strenuous exercise activates growth factors and chemokines over-expression in human serum of top-level triathlon athletes during a competitive season. Clin Chem Lab Med. 2008;46(2):250–2.

Konig D, Bisse E, Deibert P, et al. Influence of training volume and acute physical exercise on the homocysteine levels in endurance-trained men: interactions with plasma folate and vitamin B12. Ann Nutr Metab. 2003;47(3–4):114–8.

Diaz V, Martinez ED, Peinado AB, et al. Control biologico del sobreentrenamiento en un mesociclo precompetitivo en triatletas de elite: un estudio piloto [Biological control of training during the precompetitive period in elite triathletes: a pilot study]. Arch Med Deporte. 2010;27(135):31–40.

Spence L, Brown WJ, Pyne DB, et al. Incidence, etiology, and symptomatology of upper respiratory illness in elite athletes. Med Sci Sports Exerc. 2007;39(4):577–86.

Knopfli BH, Luke-Zeitoun M, von Duvillard SP, et al. High incidence of exercise-induced bronchoconstriction in triathletes of the Swiss national team. Br J Sports Med. 2007;41(8):486–91 (discussion 491).

Claessens C, Claessens P, Bloemen H, et al. Structural heart adaptations in triathletes. Acta Cardiol. 1999;54(6):317–25.

Claessens P, Claessens C, Claessens M, et al. Ventricular premature beats in triathletes: still a physiological phenomenon? Cardiology. 1999;92(1):28–38.

Claessens C, Claessens P, Claessens M, et al. Echocardiographic and physiological performance characteristics of triathletes. Can J Cardiol. 2000;16(8):993–1002.

Claessens PJ, Claessens CW, Claessens MM, et al. Supernormal left ventricular diastolic function in triathletes. Tex Heart Inst J. 2001;28(2):102–10.

Douglas PS. Cardiac considerations in the triathlete. Med Sci Sports Exerc. 1989;21(5 Suppl):S214–8.

Knez WL, Sharman JE, Jenkins DG, et al. Central hemodynamics in ultra-endurance athletes. J Sci Med Sport. 2008;11(4):390–5.

Scharf M, Brem MH, Wilhelm M, et al. Atrial and ventricular functional and structural adaptations of the heart in elite triathletes assessed with cardiac MR imaging. Radiology. 2010;257(1):71–9.

Platen P, Chae EH, Antz R, et al. Bone mineral density in top level male athletes of different sports. Eur J Sport Sci. 2001;1(5):1–15.

Shellock FG, Hiller WD, Ainge GR, et al. Knees of Ironman triathletes: magnetic resonance imaging assessment of older (>35 years old) competitors. J Magn Reson Imaging. 2003;17(1):122–30.

Smith R, Rutherford OM. Spine and total body bone mineral density and serum testosterone levels in male athletes. Eur J Appl Physiol Occup Physiol. 1993;67(4):330–4.

Muhlbauer R, Lukasz TS, Faber TS, et al. Comparison of knee joint cartilage thickness in triathletes and physically inactive volunteers based on magnetic resonance imaging and three-dimensional analysis. Am J Sports Med. 2000;28(4):541–6.

Maimoun L, Galy O, Manetta J, et al. Competitive season of triathlon does not alter bone metabolism and bone mineral status in male triathletes. Int J Sports Med. 2004;25(3):230–4.

Newsham-West RJ, Lyons B, Milburn PD. Regional bone geometry of the tibia in triathletes and stress reactions—an observational study. J Sci Med Sport. 2013;17(2):150–4.

Lucia A, Chicharro JL, Perez M, et al. Reproductive function in male endurance athletes: sperm analysis and hormonal profile. J Appl Physiol. 1996;81(6):2627–36.

Vaamonde D, Da-Silva Grigoletto ME, Garcia-Manso JM, et al. Response of semen parameters to three training modalities. Fertil Steril. 2009;92(6):1941–6.

Philip P, Bermon S. Intensive triathlon training induces low peripheral CD34+ stem cells. Br J Haematol. 2003;120(5):914–5.

Robson-Ansley PJ, Blannin A, Gleeson M. Elevated plasma interleukin-6 levels in trained male triathletes following an acute period of intense interval training. Eur J Appl Physiol. 2007;99(4):353–60.

Wilber RL, Drake SD, Hesson JL, et al. Effect of altitude training on serum creatine kinase activity and serum cortisol concentration in triathletes. Eur J Appl Physiol. 2000;81(1–2):140–7.

Schommer K, Menold E, Subudhi AW, et al. Health risk for athletes at moderate altitude and normobaric hypoxia. Br J Sports Med. 2012;46:828–32.

Schwellnus MP, Derman WE, Jordaan E, et al. Elite athletes travelling to international destinations >5 time zone differences from their home country have a 2–3-fold increased risk of illness. Br J Sports Med. 2012;46(11):816–21.

Andersen CA, Clarsen B, Johansen TB, et al. High prevalence of overuse injury among iron-distance triathletes. Br J Sports Med. 2013;47(13):857–61.

Gastmann U, Dimeo F, Huonker M, et al. Ultra-triathlon-related blood-chemical and endocrinological responses in nine athletes. J Sports Med Phys Fitness. 1998;38(1):18–23.

Farber HW, Schaefer EJ, Franey R, et al. The endurance triathlon: metabolic changes after each event and during recovery. Med Sci Sports Exerc. 1991;23(8):959–65.

Holly RG, Barnard RJ, Rosenthal M, et al. Triathlete characterization and response to prolonged strenuous competition. Med Sci Sports Exerc. 1986;18(1):123–7.

Jurimae T, Viru A, Karelson K, et al. Biochemical changes in blood during the long and short triathlon competition. J Sports Med Phys Fitness. 1989;29(4):305–9.

Lamon-Fava S, McNamara JR, Farber HW, et al. Acute changes in lipid, lipoprotein, apolipoprotein, and low-density lipoprotein particle size after an endurance triathlon. Metabolism. 1989;38(9):921–5.

Caillaud C, Serre-Cousine O, Anselme F, et al. Computerized tomography and pulmonary diffusing capacity in highly trained athletes after performing a triathlon. J Appl Physiol. 1995;79(4):1226–32.

Hill NS, Jacoby C, Farber HW. Effect of an endurance triathlon on pulmonary function. Med Sci Sports Exerc. 1991;23(11):1260–4.

Staats R, Balkow S, Sorichter S, et al. Change in perforin-positive peripheral blood lymphocyte (PBL) subpopulations following exercise. Clin Exp Immunol. 2000;120(3):434–9.

Shinkai S, Kurokawa Y, Hino S, et al. Triathlon competition induced a transient immunosuppressive change in the peripheral blood of athletes. J Sports Med Phys Fitness. 1993;33(1):70–8.

Steerenberg PA, van Asperen IA, van Nieuw Amerongen A, et al. Salivary levels of immunoglobulin A in triathletes. Eur J Oral Sci. 1997;105(4):305–9.

Levada-Pires AC, Cury-Boaventura MF, Gorjao R, et al. Induction of lymphocyte death by short- and long-duration triathlon competitions. Med Sci Sports Exerc. 2009;41(10):1896–901.

Gomez-Merino D, Drogou C, Guezennec CY, et al. Comparison of systemic cytokine responses after a long distance triathlon and a 100-km run: relationship to metabolic and inflammatory processes. Eur Cytokine Netw. 2006;17(2):117–24.

Levada-Pires AC, Cury-Boaventura MF, Gorjao R, et al. Neutrophil death induced by a triathlon competition in elite athletes. Med Sci Sports Exerc. 2008;40(8):1447–54.

Libicz S, Mercier B, Bigou N, et al. Salivary IgA response of triathletes participating in the French Iron Tour. Int J Sports Med. 2006;27(5):389–94.

Lopez RF, Osiecki R, Rama LMPL. Biochemical markers during and after an Olympic triathlon race. J Exerc Physiol Online. 2011;14(4):87–96.

Nieman DC, Henson DA, McAnulty SR, et al. Vitamin E and immunity after the Kona Triathlon World Championship. Med Sci Sports Exerc. 2004;36(8):1328–35.

Rohde T, MacLean DA, Hartkopp A, et al. The immune system and serum glutamine during a triathlon. Eur J Appl Physiol Occup Physiol. 1996;74(5):428–34.

Margaritis I, Tessier F, Richard MJ, et al. No evidence of oxidative stress after a triathlon race in highly trained competitors. Int J Sports Med. 1997;18(3):186–90.

Pinho RA, Silva LA, Pinho CA, et al. Oxidative stress and inflammatory parameters after an Ironman race. Clin J Sport Med. 2010;20(4):306–11.

Ginsburg GS, O’Toole M, Rimm E, et al. Gender differences in exercise-induced changes in sex hormone levels and lipid peroxidation in athletes competing in the Hawaii Ironman triathlon. Clin Chim Acta. 2001;305:131–9.

Camus G, Nys M, Poortmans JR, et al. Endotoxaemia, production of tumour necrosis factor alpha and polymorphonuclear neutrophil activation following strenuous exercise in humans. Eur J Appl Physiol Occup Physiol. 1998;79(1):62–8.

Lehmann M, Huonker M, Dimeo F, et al. Serum amino acid concentrations in nine athletes before and after the 1993 Colmar ultra triathlon. Int J Sports Med. 1995;16(3):155–9.

Ginsburg GS, Agil A, O’Toole M, et al. Effects of a single bout of ultraendurance exercise on lipid levels and susceptibility of lipids to peroxidation in triathletes. JAMA. 1996;276(3):221–5.

Sagnol M, Claustre J, Cottet-Emard JM, et al. Plasma free and sulphated catecholamines after ultra-long exercise and recovery. Eur J Appl Physiol Occup Physiol. 1990;60(2):91–7.

Long D, Blake M, McNaughton L, et al. Hematological and biochemical changes during a short triathlon competition in novice triathletes. Eur J Appl Physiol Occup Physiol. 1990;61(1–2):93–9.

Edes TE, Shah JH, Thornton WH Jr. Spontaneous decline in exercise-induced proteinuria during a 100-mile triathlon. South Med J. 1990;83(9):1044–6 (1052).

Wagner KH, Reichhold S, Holzl C, et al. Well-trained, healthy triathletes experience no adverse health risks regarding oxidative stress and DNA damage by participating in an ultra-endurance event. Toxicology. 2010;278(2):211–6.

Wagner KH, Reichhold S, Neubauer O. Impact of endurance and ultraendurance exercise on DNA damage. Ann NY Acad Sci. 2011;1229:115–23.

Sampaio de Mara L, de Carvalho T, Lineburger AA, et al. Muscle damage and immunological profile in the Ironman Brazil triathlon. Rev Bras Med Esporte. 2013;19(4):236–40.

Jeukendrup AE, Vet-Joop K, Sturk A, et al. Relationship between gastro-intestinal complaints and endotoxaemia, cytokine release and the acute-phase reaction during and after a long-distance triathlon in highly trained men. Clin Sci (Lond). 2000;98(1):47–55.

Fellmann N, Sagnol M, Bedu M, et al. Enzymatic and hormonal responses following a 24 h endurance run and a 10 h triathlon race. Eur J Appl Physiol Occup Physiol. 1988;57(5):545–53.

Guadalupe-Grau A, Ara I, Dorado C, et al. Osteocalcin as a negative regulator of serum leptin concentration in humans: insight from triathlon competitions. Eur J Appl Physiol. 2010;110(3):635–43.

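Guezennec CY, Giaoui M, Voignier JP, et al. Evolution des taux plasmatiques des LDH (lacticodeshydrogénases), CPK (créatine phosphokinase) et de la myoglobine à l’issue d’une course de 100 km et d’un triathlon. [Changes in plasma levels of LDH (lactate dehydrogenase), CPK (creatine phosphokinase) and myoglobin after a 100 km run and a triathlon]. Sci Sports. 1986;1:255.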

Prou E, Margaritis I, Tessier F, et al. Effects of strenuous exercise on serum myosin heavy chain fragments in male triathletes. Int J Sports Med. 1996;17(4):263–7.

Machado CN, da Silva Gevaerd M, Goldfeder RT, et al. Efeito do exercício nas concentrações séricas de creatina cinase em triatletas de ultradistância. [Exercise effects on serum levels of creatine kinase in ultra-distance triathletes in the course of a competition period]. Rev Bras Med Esporte. 2010;16(5):378–81.

Hanke AA, Staib A, Gorlinger K, et al. Whole blood coagulation and platelet activation in the athlete: a comparison of marathon, triathlon and long distance cycling. Eur J Med Res. 2010;15(2):59–65.

Glaner MF, Lima WA, Jovita LCC. Absence of acute skeletal and cardiac muscle injuries in amateur triathletes. Rev Bras Cineantropom Desempenho Hum. 2009;11(1):37–42.

Douglas PS, O’Toole ML, Hiller WD, et al. Cardiac fatigue after prolonged exercise. Circulation. 1987;76(6):1206–13.

Hassan MY, Noakes TD, Berlyn P, et al. Preload maintenance protects against a depression in left ventricular systolic, but not diastolic, function immediately after ultraendurance exercise. Br J Sports Med. 2006;40(6):536–40 (discussion 540).

Hauser M, Petzuch K, Kuhn A, et al. The Munich Triathlon heart study: ventricular function, myocardial velocities, and two-dimensional strain in healthy children before and after endurance stress. Pediatr Cardiol. 2012;34(3):576–82.

Haykowsky M, Welsh R, Humen D, et al. Impaired left ventricular systolic function after a half-Ironman race. Can J Cardiol. 2001;17(6):687–90.

La Gerche A, Connelly KA, Mooney DJ, et al. Biochemical and functional abnormalities of left and right ventricular function after ultra-endurance exercise. Heart. 2008;94(7):860–6.

Gough CE, Eastwood A, Saunders PU, et al. Spurious Hb mass increases following exercise. Int J Sports Med. 2012;33(9):691–5.

Möckel M, Ulrich NV, Heller G Jr, et al. Platelet activation through triathlon competition in ultra-endurance trained athletes: impact of thrombin and plasmin generation and catecholamine release. Int J Sports Med. 2001;22(5):337–43.

Nottin S, Doucende G, Schuster I, et al. Alteration in left ventricular strains and torsional mechanics after ultralong duration exercise in athletes. Circ Cardiovasc Imaging. 2009;2(4):323–30.

Rifai N, Douglas PS, O’Toole M, et al. Cardiac troponin T and I, echocardiographic [correction of electrocardiographic] wall motion analyses, and ejection fractions in athletes participating in the Hawaii Ironman Triathlon. Am J Cardiol. 1999;83(7):1085–9.

Tulloh L, Robinson D, Patel A, et al. Raised troponin T and echocardiographic abnormalities after prolonged strenuous exercise – the Australian Ironman Triathlon. Br J Sports Med. 2006;40(7):605–9.

Warburton DER, Welsh RC, Haykowsky MJ, et al. Effects of half Ironman competition on the development of late potentials. Med Sci Sports Exerc. 2000;32(7):1208–13.

Whyte GP, George K, Sharma S, et al. Cardiac fatigue following prolonged endurance exercise of differing distances. Med Sci Sports Exerc. 2000;32(6):1067–72.

La Gerche A, Burns AT, Mooney DJ, et al. Exercise-induced right ventricular dysfunction and structural remodelling in endurance athletes. Eur Heart J. 2012;33(8):998–1006.

Gratze G, Rudnicki R, Urban W, et al. Hemodynamic and autonomic changes induced by Ironman: prediction of competition time by blood pressure variability. J Appl Physiol. 2005;99(5):1728–35.

Reichhold S, Neubauer O, Bulmer AC, et al. Endurance exercise and DNA stability: is there a link to duration and intensity? Mutat Res. 2009;682(1):28–38.

Reichhold S, Neubauer O, Ehrlich V, et al. No acute and persistent DNA damage after an Ironman triathlon. Cancer Epidemiol Biomarkers Prev. 2008;17(8):1913–9.

McGavock J, Haykowsky M, Warburton D, et al. Left ventricular systolic performance during prolonged strenuous exercise in female triathletes. Dyn Med. 2003;2(1):2.

Banfi G, Migliorini S, Dolci A, et al. B-type natriuretic peptide in athletes performing an Olympic triathlon. J Sports Med Phys Fitness. 2005;45(4):529–31.

Douglas PS, O’Toole ML, Katz SE. Prolonged exercise alters cardiac chronotropic responsiveness in endurance athletes. J Sports Med Phys Fitness. 1998;38(2):158–63.

Leetmaa TH, Dam A, Glintborg D, et al. Myocardial response to a triathlon in male athletes evaluated by Doppler tissue imaging and biochemical parameters. Scand J Med Sci Sports. 2008;18(6):698–705.

Shave R, Dawson E, Whyte G, et al. Altered cardiac function and minimal cardiac damage during prolonged exercise. Med Sci Sports Exerc. 2004;36(7):1098–103.

Yaguchi H, Ishigooka M, Hayami S, et al. The effect of triathlon on urinary excretion of enzymes and proteins. Int Urol Nephrol. 1998;30(2):107–12.

Hartmann A, Pfuhler S, Dennog C, et al. Exercise-induced DNA effects in human leukocytes are not accompanied by increased formation of 8-hydroxy-2′-deoxyguanosine or induction of micronuclei. Free Radic Biol Med. 1998;24(2):245–51.

Driver HS, Rogers GG, Mitchell D, et al. Prolonged endurance exercise and sleep disruption. Med Sci Sports Exerc. 1994;26(7):903–7.

Walsh NP, Gleeson M, Pyne DB, et al. Position statement. Part two: maintaining immune health. Exerc Immunol Rev. 2011;17:64–103.

Walsh NP, Gleeson M, Shephard RJ, et al. Position statement. Part one: immune function and exercise. Exerc Immunol Rev. 2011;17:6–63.

Palazzetti S, Richard MJ, Favier A, et al. Overloaded training increases exercise-induced oxidative stress and damage. Can J Appl Physiol. 2003;28(4):588–604.

Brites F, Zago V, Verona J, et al. HDL capacity to inhibit LDL oxidation in well-trained triathletes. Life Sci. 2006;78(26):3074–81.

Dawson E, George K, Shave R, et al. Does the human heart fatigue subsequent to prolonged exercise? Sports Med. 2003;33(5):365–80.

Douglas PS, O’Toole ML, Hiller WD, et al. Left ventricular structure and function by echocardiography in ultraendurance athletes. Am J Cardiol. 1986;58(9):805–9.

Douglas PS, O’Toole ML, Hiller WD, et al. Different effects of prolonged exercise on the right and left ventricles. J Am Coll Cardiol. 1990;15(1):64–9.

Douglas PS, O’Toole ML, Woolard J. Regional wall motion abnormalities after prolonged exercise in the normal left ventricle. Circulation. 1990;82(6):2108–14.

Bartsch P, Welsch B, Albert M, et al. Balanced activation of coagulation and fibrinolysis after a 2-h triathlon. Med Sci Sports Exerc. 1995;27(11):1465–70.

Zavorsky GS, Anholm JD. Comments on point:counterpoint: pulmonary edema does/does not occur in human athletes performing heavy sea-level exercise. J Appl Physiol. 2010;109(4):1276–80.