Abstract

Objectives

The aim of this study was to assess the performance and impact of multilevel modelling (MLM) compared with ordinary least squares (OLS) regression in trial-based economic evaluations with clustered data.

Methods

Three thousand datasets with balanced and unbalanced clusters were simulated with correlation coefficients between costs and effects of − 0.5, 0, and 0.5, and intraclass correlation coefficients (ICCs) varying between 0.05 and 0.30. Each scenario was analyzed using both MLM and OLS. Statistical uncertainty around MLM and OLS estimates was estimated using bootstrapping. Performance measures were estimated and compared between approaches, including bias, root mean squared error (RMSE) and coverage probability. Cost and effect differences, and their corresponding confidence intervals and standard errors, incremental cost-effectiveness ratios, incremental net-monetary benefits and cost-effectiveness acceptability curves were compared.

Results

Cost-effectiveness outcomes were similar between OLS and MLM. MLM produced larger statistical uncertainty and coverage probabilities closer to nominal levels than OLS. The higher the ICC, the larger the effect on statistical uncertainty between MLM and OLS. Significant cost-effectiveness outcomes as estimated by OLS became non-significant when estimated by MLM. At all ICCs, MLM resulted in lower probabilities of cost effectiveness than OLS, and this difference became larger with increasing ICCs. Performance measures and cost-effectiveness outcomes were similar across scenarios with varying correlation coefficients between costs and effects.

Conclusions

Although OLS produced similar cost-effectiveness outcomes, it substantially underestimated the amount of variation in the data compared with MLM. To prevent suboptimal conclusions and a possible waste of scarce resources, it is important to use MLM in trial-based economic evaluations when data are clustered.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Ignoring clustering of data in the analysis of trial-based economic evaluations overestimates the probability of cost effectiveness. |

It is recommended to use multilevel modelling for trial-based economic evaluations with clustered data. |

Further research should investigate how to best combine multilevel modelling with resampling approaches. |

1 Introduction

Because resources available for healthcare are scarce, policy makers need to decide which healthcare interventions to reimburse and which not to [1]. Policy makers increasingly use information from economic evaluations, which assess whether the additional costs of a new intervention are justified by its additional effects compared with one or more alternative interventions [1, 2]. In many countries, the results of economic evaluations are even established as a formal decision criterion for the reimbursement and/or pricing of healthcare interventions [1].

Economic evaluations are often performed alongside a randomized controlled trial. Ideally, participants of such so-called trial-based economic evaluations are randomized to an intervention or control group at the individual level. Sometimes this is not possible, and clusters of patients (e.g. at the hospital or general practice level) are randomized instead. Participants within clusters are likely to be more similar than participants between clusters and, consequently, cost and effect data are considered to be clustered [3,4,5,6]. This is due to the fact that participant and/or healthcare provider characteristics influencing costs and effects are similar within a cluster and highly likely to vary across clusters due to variations in disease severity, training level of healthcare providers, adherence to treatment protocols by healthcare providers or type of hospital [6].

Statistical methods such as ordinary least squares (OLS) regression assume that outcomes among participants are independent and such methods are, therefore, likely to underestimate the total amount of sampling variability when data are clustered [7]. Ignoring the clustered nature of data results in inaccurate estimates of statistical uncertainty [8,9,10], and consequently may lead to suboptimal conclusions [11,12,13,14]. Typically, multilevel modelling (MLM) results in larger standard errors (SEs) than OLS, because in MLM information provided by participants belonging to the same cluster contributes less than 100% new information [4]. The more alike participants are within a cluster, which is quantified using the intraclass correlation coefficient (ICC), the less new information is provided by a participant belonging to that same cluster. Despite the fact that statistical methods for dealing with clustered data are available and their use in effectiveness studies is well established [8, 15,16,17], these methods are hardly used in trial-based economic evaluations [5, 18]. In addition, no clear recommendations on how to deal with clustered data are available in pharmacoeconomic guidelines [19].

Methods that can be used to deal with clustered data in trial-based economic evaluations [20,21,22] include MLM, two-stage bootstrapping (2SB), seemingly unrelated regression (SUR) and generalized estimating equations (GEE) with robust SEs [23,24,25,26,27]. Of these, simulation studies found MLM to be the preferred method, as MLM resulted in more precise point estimates and better statistical performance compared with the other methods [5, 25, 28]. So far, studies evaluating the relative performance of methods for analyzing clustered data in trial-based economic evaluations mainly assumed a normal distribution for costs and effects or used other approaches, such as Bayesian statistical methods [20, 22, 24, 25, 27]. Although Bayesian methods may also be used for analyzing clustered data, we focused on frequentist statistical methods in our study, because they are better known by the majority of (applied) researchers and are easier to implement in standard statistical software packages. Therefore, we think that frequentist methods are currently most likely to improve practice. Also, most papers only assessed the impact of the different methods on cost and effect differences and/or incremental cost-effectiveness ratios (ICERs), but not on the joint uncertainty surrounding costs and effects. Therefore, the aim of this study was to assess the performance and show the impact on cost-effectiveness outcomes of using MLM compared with OLS in trial-based economic evaluations using clustered data.

2 Methods

The performance and impact of MLM compared with OLS in trial-based economic evaluations using clustered data was assessed using simulated data.

2.1 Data Generation Mechanisms

Datasets were simulated using simstudy [29] in R [30]. In order to estimate the key performance measure, coverage probability, with an acceptable degree of imprecision (i.e. to reach a maximal Monte Carlo SE of 0.5), 3000 datasets were used [31]. The coverage probability refers to the probability that the true value falls within the estimated confidence intervals (CIs) (see Sect. 2.4). Moreover, simulation studies are empirical experiments, in which performance measures such as the aforementioned coverage probability are estimated, which means that these estimates of performance measures are subject to error. Monte Carlo SEs quantify this simulation error by providing an estimate of the SE of performance measures as a result of using a finite number of simulations (nsims) [31]. Both balanced and unbalanced clusters were simulated. For the balanced clusters (i.e. all clusters of equal size), 30 clusters were simulated with 30 individuals per cluster. To simulate 30 unbalanced clusters (i.e. clusters are not equal in size), a zero-truncated Poisson distribution was used with a mean of 30 individuals per cluster. Clusters were equally randomized to an intervention or control group. Thirty clusters were simulated, as a total of 20 clusters or more is suggested for asymptotic assumptions to hold, which means that the sample size (i.e. observations at both cluster and individual levels) needs to be sufficiently large [32, 33].

In all scenarios, costs were expressed in Euros (€) and effects were expressed in quality-adjusted life-years (QALYs). A cost difference (∆C) of €100 and an effect difference (∆E) of 0.05 were specified as true reference values. The latter is in line with the minimally clinically important difference for QALYs [34]. QALYs were assumed to be normally distributed [35, 36]. Cost data in trial-based economic evaluations typically have a distribution that is heavily right skewed [37] with a point mass at zero costs and a small number of outliers [38]. To account for this, costs were simulated using a gamma distribution.

2.2 Correlation Structures

We accounted for two types of correlations that are present in trial-based economic evaluations with clustered cost and effect data, which are graphically presented in Fig. 1. First, the correlation between costs and effects is depicted as Corr(Costs, QALYs) in Fig. 1 [39, 40]. This correlation can range from − 1, meaning that higher costs are associated with worse effects, to 1, meaning that higher costs are associated with better effects. If data are clustered, this type of clustering may exist at both the individual level and the cluster level. Datasets were simulated with a correlation between costs and effects of − 0.5, 0, and 0.5, at both the individual level and the cluster level [6].

Second, the intraclass correlation coefficient (ICC) is a measure of the correlation between the observations of participants belonging to the same cluster, and is estimated using the between-cluster variance and within-cluster variance (see Fig. 1) [4]. The ICC provides an indication of how much the observations from participants within a cluster are similar. This correlation can range from 0, meaning that none of the observations from participants within a cluster are alike, to 1, meaning that all the observations from participants within a cluster are the same [41]. When the ICC is 0, all the observations within the cluster are unique, and the effective sample size is equal to the number of participants. In a situation where all the observations within a cluster are similar (i.e. ICC = 1), the effective sample size is reduced to the number of clusters [4, 41, 42]. The ICC was set at 0.05, 0.10, 0.20 and 0.30 for both costs and effects. Although in empirical studies, ICCs are typically smaller than 0.20 [4], a higher ICC was also used to evaluate whether the applied methods are robust in situations with larger ICCs. For a detailed explanation of how the ICC was specified, we refer the reader to Online Resource 1 (see electronic supplementary material [ESM]).

An overview of all parameter ranges, as well as their motivation, can be found in Table 1. In total, 3000 datasets for each of the 24 different scenarios were simulated (Online Resource 2, see ESM). The range of values for the different parameters are based on values typically found in trial-based economic evaluations. The simulation code is provided in Online Resource 3 (see ESM).

2.3 Data Analysis

Two statistical approaches were used to estimate the cost effectiveness of the intervention compared with the control. The first approach was OLS, which does not take into account the hierarchical structure of the data. Two OLS models were specified, one for costs and one for effects (formulas 1 and 2):

where \({\text{Costs}}_{i}\) and \({\text{QALY}}_{i}\) are the observed costs and QALYs of participant i, \(\beta_{0c}\) and \(\beta_{0e}\) refer to the models’ intercept, β1c and β1e refer to the regression coefficient for the independent variable ‘treatment arm’ [i.e. the mean difference in costs (∆C) and QALYs (∆E) between treatment groups], and \(\varepsilon_{ic}\) and \(\varepsilon_{ie}\) refer to the unexplained variance at the individual level.

The second approach was MLM, which does take into account the hierarchical structure of the data. Two MLMs were specified, one for costs and one for effects (formulas 3 and 4), assuming a two-level structure and using maximum likelihood estimation [4]:

where \({\text{Costs}}_{ij}\) and \({\text{QALY}}_{ij}\) are the observed costs and QALYs of participant i in cluster j, \(\beta_{0c}\) and \(\beta_{0e}\) refer to the models’ intercept; β1jc and β1je refer to the regression coefficient for the variable ‘treatment arm’ (i.e. the mean difference in costs [∆C] and QALYs [∆E] between treatment groups); \(\varepsilon_{ijc}\) and \(\varepsilon_{ije}\) refer to the unexplained variance at the individual level; and \(\mu_{jc}\) and \(\mu_{je}\) refer to the unexplained variance (random effects) at the cluster level [4].

For effects, normal-based 95% CIs were estimated. For costs, 95% CIs were estimated using bias-corrected and accelerated (BCa) bootstrapping with 2000 replications [48]. OLS was combined with bootstrapping at the individual level, and the bootstrap procedure was stratified for treatment arm. MLM was combined with cluster bootstrapping, which is recommended for resampling clustered data [49]. In this approach, whole clusters instead of individuals are resampled, which maintains the hierarchical structure of the data.

ICERs were calculated by dividing the difference in costs by the difference in effects (i.e. ∆C/∆E) [50]. The incremental net monetary benefit (INMB) was estimated as

where λ refers to the ceiling ratio (i.e. the maximum amount of money decision makers are willing to pay per unit of effect gained) for cost effectiveness. In this study, the British threshold of 23, 300 €/QALY was used.

The joint uncertainty surrounding costs and effects was summarized using cost-effectiveness acceptability curves (CEACs) [51], which were estimated using the parametric p-value approach for INMBs [52]. CEACs show the probability of an intervention being cost effective in comparison with control for a range of different ceiling ratios [51, 53]. Online Resource 4 contains a ready-to-use Stata® script for conducting trial-based economic evaluations with clustered data (see ESM).

2.4 Comparison of Methods

The performance of the two statistical approaches was compared using different performance measures [31]. These performance measures were estimated for cost differences, effect differences and INMBs using a threshold of 23,300 €/QALY.

-

1.

Empirical bias: the mean difference between the estimated value in the simulated datasets (\(\widehat{{\theta_{i} }})\) and the true value (\(\theta\)):

$${\text{Bias}} = \frac{1}{{n_{{{\text{sims}}}} }} \mathop \sum \limits_{i = 1}^{{n_{{{\text{sims}}}} }} (\widehat{{\theta_{i} }}{ } - \theta ),$$(6)which indicates how far the estimated value is from the true value. Values closer to zero imply less bias.

-

2.

Root mean squared error (RMSE): the square root of the quadratic mean difference between the estimated values (\(\widehat{{\theta_{i} }})\) and the true values (\(\theta\)):

$${\text{RMSE}} = \sqrt {\frac{1}{{n_{{{\text{sims}}}} }} \mathop \sum \limits_{i = 1}^{{n_{{{\text{sims}}}} }} \left( {\widehat{{\theta_{i} }}{ } - \theta } \right)^{2} , }$$(7)which integrates the squared bias and variance in one performance measure. A RMSE of 0 indicates a perfect fit to the data, and lower RMSEs, thus, indicate better performance.

-

3.

Coverage probability: the percentage of times that the true value (\(\theta\)) is covered in the 95% CI around the estimated value (\(\widehat{{\theta_{i} }})\):

$${\text{Coverage probability}} = \frac{1}{{n_{{{\text{sims}}}} }} \mathop \sum \limits_{i = 1}^{{n_{{{\text{sims}}}} }} 1\left( {\widehat{{\theta_{{{\text{lower}}, i}} }} \le \theta \le \widehat{{\theta_{{{\text{upper}}, i}} }}} \right).$$(8)

Coverage probabilities (expressed in %) close to the nominal level of 1 − α (α = 0.05), together with narrow CI width, indicate higher power and greater accuracy. Coverage probabilities below 90% indicate an increased chance of type-I error (i.e. ‘false positive’), while coverage probabilities above 97% indicate an increased chance of type-II error (i.e. ‘false negative’) [54].

To assess the impact of using MLM versus OLS on cost-effectiveness outcomes, cost and effect differences between groups including their CIs and SEs, as well as ICERs, INMBs and the probabilities of cost effectiveness were compared.

R [30] (version 3.5.2) was used to simulate datasets and the cost-effectiveness analyses were performed in StataSE 16® (StataCorp LP, CollegeStation, TX, USA).

3 Results

In Table 2, performance measures are summarized for OLS and MLM. Table 3 summarizes the cost-effectiveness outcomes as estimated by OLS and MLM. Both tables present estimates for all 24 scenarios.

3.1 Performance Measures

For all outcomes, bias and RMSE were roughly similar for MLM and OLS. However, for all outcomes, MLM resulted in coverage probabilities closer to nominal levels compared with OLS (Table 2). The differences between MLM and OLS in terms of coverage probabilities became more pronounced with increasing ICCs (Table 2).

3.2 Cost-Effectiveness Outcomes

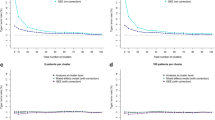

Table 3 shows that cost differences, effect differences, ICERs and INMBs were exactly similar for OLS and MLM when clusters were balanced, and only slightly differed between the two methods with unbalanced clusters. In all scenarios, the CI width increased considerably when using MLM instead of OLS for cost and effect differences as well as INMBs. This increase in CI width was found to increase with increasing ICCs (Table 3). In several scenarios, QALY and cost differences between groups and INMBs were not statistically significant when using MLM, whereas they were significant when using OLS. This is graphically illustrated in Fig. 2. MLM and OLS also resulted in different CEACs, with the difference in probabilities of the intervention being cost effective compared with control becoming larger with higher ICCs (Fig. 3).

Graphical presentation of confidence interval width and mean point estimates with increasing ICCs and correlation for costs, QALYs and INMBs with unbalanced clusters. ICC intracluster correlation coefficient, INMB incremental net monetary benefit, MLM multilevel modelling, OLS ordinary least squares regression, QALY quality-adjusted life-year

4 Discussion

Using MLM instead of OLS in trial-based economic evaluations with clustered data showed better statistical performance, specifically in terms of coverage probabilities that were closer to the nominal level of 1 − α. Regarding cost-effectiveness outcomes, using MLM instead of OLS had a large impact on the level of statistical uncertainty surrounding cost differences, effect differences and INMBs. Generally, MLM resulted in a larger amount of statistical uncertainty than OLS, especially for higher ICCs. In some scenarios, this even resulted in cost and/or effect differences being statistically significant when using OLS, but statistically non-significant when using MLM. The impact of using MLM instead of OLS on the CEACs was substantial. These findings indicate that ignoring the clustered nature of data in economic evaluations alongside cluster randomized trials is inappropriate. Thus, if data are clustered in a trial-based economic evaluation, researchers are highly encouraged to use MLM over OLS.

The rationale behind using MLM when data are clustered is to accurately estimate the amount of variation in the data, which is typically underestimated when using OLS to analyze such data [4, 55]. Even at a relatively small ICC (i.e. 0.05), the amount of statistical uncertainty was found to be considerably larger when using MLM instead of OLS. In line with previous studies, we also found that the degree of underestimation in statistical uncertainty increased with larger ICCs [4, 55, 56]. The underestimation of statistical uncertainty when using OLS may increase the probability of falsely rejecting the null-hypothesis (type-1 error), meaning that researchers may incorrectly claim that an intervention is cost effective when in truth this is not the case. This is emphasized by the fact that the estimated coverage probabilities of OLS were further from the nominal level of 0.95 than MLM. Thus, ignoring clustering of data in economic evaluations alongside cluster randomized trials may lead to suboptimal conclusions.

It is worth noting that point estimates of the differences in costs and effects between treatment groups only differed between MLM and OLS when clusters were unbalanced. This is due to the fact that, if clusters are unbalanced, a heterogeneous treatment effect is present between clusters, whereas this is not the case if clusters are balanced [4]. However, the identified differences between statistical approaches were relatively small, which is likely the result of the moderate degree of imbalance that was generated in the simulated clusters [57,58,59].

Due to the higher levels of statistical uncertainty as estimated by MLM compared with OLS, the probability of cost effectiveness was lower for MLM compared with OLS. At larger ICCs (i.e. ICC = 0.30), the maximum difference in the probability of cost effectiveness between both methods was relatively large (i.e. 0.27), which might have implications on reimbursement decisions. Even at a small ICC (ICC = 0.05), a notable difference in the probability of cost effectiveness was apparent (i.e. max 0.08).

Although point estimates were roughly similar between MLM and OLS, MLM was found to estimate the amount of variation in the simulated data more appropriately with coverage probabilities closer to the nominal level of 0.95 than OLS. For effect differences and INMBs, coverage probabilities reached nominal levels. For cost differences, this was not the case, which is likely due to the highly skewed nature of cost data. Previous research showed that when sampling from a skewed distribution, coverage probabilities tend to be substantially lower than the nominal 1-α, and this effect will increase if sampling is done from more heavy-tailed distributions [60].

4.1 Comparison with Other Studies

Previous studies also found MLM to be preferred over OLS [24, 25, 27]. However, these studies assumed a normal distribution for costs and effects. Although some authors [40, 61,62,63] showed that MLMs assuming a normal distribution can adequately handle skewed distributions, the current study extends the multilevel framework by providing insight into how a frequentist MLM combined with a cluster-bootstrap that accounts for the skewed distribution of costs performs in comparison to a naïve analysis such as a bootstrapped OLS. Ren et al. [49] showed that bootstrapping at the cluster level is preferred over bootstrapping at the individual level when resampling clustered data. The main reason for this is that resampling at the cluster level accurately reflects the original sample information.

4.2 Strengths and Limitations and Implications for Further Research

A strength of this study was the comparison of both the performance and impact of MLM and OLS for a wide range of scenarios. Based on empirical datasets, different parameters were specified and varied to simulate data that resembled ‘real’ data as closely as possible. One of the main advantages of this method is that it avoids the need for a large number of empirical datasets, which is generally not feasible [64]. A second strength is that the multilevel framework for trial-based economic evaluations alongside cluster-randomized trials is extended by accounting for the right skewed nature of cost data using a non-parametric cluster-bootstrap. Also, to the best of our knowledge, this is the first study that assessed the impact of adjusting for the clustered nature of cost data in trial-based economic evaluations on the resulting cost differences, effect differences, ICERs and CEACs.

This study also has some limitations. First, when simulating effects, QALYs were assumed to be normally distributed, although they may sometimes be left skewed. This was done because it enabled precise specification of variances and correlations between costs and effects. Second, no other methods than OLS and MLM were considered. MLM was chosen because previous studies evaluating the performance of different methods to deal with clustered data [20,21,22,23,24,25,26,27] concluded that MLM was one of the most appropriate methods [25]. Third, although efforts were made to simulate data as appropriately as possible, it is possible that empirical cost and effect data still have certain characteristics that we did not simulate in the current study, for example baseline imbalances and missing data. Fourth, although within the statistical literature, different bootstrapping techniques have been discussed and recommended [6, 20, 23, 49, 65], there is a lack of consensus on how to combine bootstrapping techniques with a cluster-adjusting analysis such as MLM. In the current study, the resampling approach of Ren et al. [49] was used, but coverage probabilities for costs did not reach nominal levels. Future research should, therefore, investigate which bootstrap approach is most optimal in situations with right-skewed cost data and take other characteristics into account in the simulations.

5 Conclusion

Although OLS produced roughly similar point estimates to MLM in trial-based economic evaluations with clustered data, it substantially underestimated the amount of variation compared with MLM. In all scenarios, OLS overestimated the probability of cost effectiveness compared with MLM. To prevent suboptimal conclusions, it is important to use MLM in trial-based economic evaluations when data are clustered.

References

Drummond M, Sculper M, Claxton K, Stoddart G, Torrance G. Methods for the economic evaluation of Health Care Programmes. 4th ed. Oxford: Oxford University Press; 2015.

Petrou S, Gray A. Economic evaluation alongside randomised controlled trials: design, conduct, analysis, and reporting. BMJ. 2011;342:d1548.

Manca A, Rice N, Sculpher MJ, Briggs AH. Assessing generalisability by location in trial-based cost-effectiveness analysis: the use of multilevel models. Health Econ. 2005;14(5):471–85.

Twisk JW. Applied multilevel analysis: a practical guide for medical researchers. Cambridge: Cambridge University Press; 2006.

Gomes M, Grieve R, Nixon R, Edmunds WJ. Statistical methods for cost-effectiveness analyses that use data from cluster randomized trials: a systematic review and checklist for critical appraisal. Med Decis Mak. 2012;32(1):209–20.

Flynn T, Peters T. Conceptual issues in the analysis of cost data within cluster randomized trials. J Health Serv Res Policy. 2005;10(2):97–102.

McNeish DM. Analyzing clustered data with OLS regression: the effect of a hierarchical data structure. Mult Linear Regres Viewp. 2014;40(1):11–6.

Campbell MJ, Donner A, Klar N. Developments in cluster randomized trials and statistics in medicine. Stat Med. 2007;26(1):2–19.

Donner A, Klar N. Design and analysis of cluster randomization trials in health research. England: Arnold London; 2000.

Hayes R, Moulton L. Cluster randomised trials. Boca Raton: Taylor & Francis; 2009.

Austin PC. A comparison of the statistical power of different methods for the analysis of cluster randomization trials with binary outcomes. Stat Med. 2007;26(19):3550–655.

Feng Z, McLerran D, Grizzle J. A comparison of statistical methods for clustered data analysis with Gaussian error. Stat Med. 1996;15(16):1793–806.

Nixon RM, Thompson SG. Baseline adjustments for binary data in repeated cross-sectional cluster randomized trials. Stat Med. 2003;22(17):2673–92.

Ukoumunne OC, Thompson SG. Analysis of cluster randomized trials with repeated cross-sectional binary measurements. Stat Med. 2001;20(3):417–33.

Omar RZ, Thompson SG. Analysis of a cluster randomized trial with binary outcome data using a multi-level model. Stat Med. 2000;19(19):2675–88.

Spiegelhalter DJ. Bayesian methods for cluster randomized trials with continuous responses. Stat Med. 2001;20(3):435–52.

Turner RM, Omar RZ, Thompson SG. Bayesian methods of analysis for cluster randomized trials with binary outcome data. Stat Med. 2001;20(3):453–72.

El Alili M, van Dongen JM, Huirne JAF, van Tulder MW, Bosmans JE. Reporting and analysis of trial-based cost-effectiveness evaluations in obstetrics and gynaecology. Pharmacoeconomics. 2017;35(10):1007–333.

van Dongen JM, El Alili M, Varga AN, Guevara Morel AE, Jornada Ben A, Khorrami M, et al. What do national pharmacoeconomic guidelines recommend regarding the statistical analysis of trial-based economic evaluations? Expert Rev Pharmacoecon Outcomes Res. 2020;20(1):27–37.

Bachmann MO, Fairall L, Clark A, Mugford M. Methods for analyzing cost effectiveness data from cluster randomized trials. Cost Eff Resour Alloc. 2007;5:12.

Flynn TN, Peters TJ. Cluster randomized trials: another problem for cost-effectiveness ratios. Int J Technol Assess Health Care. 2005;21(3):403–9.

Grieve R, Nixon R, Thompson SG. Bayesian hierarchical models for cost-effectiveness analyses that use data from cluster randomized trials. Med Decis Mak. 2010;30(2):163–75.

Davison AC, Hinkley DV. Bootstrap methods and their application. Cambridge: Cambridge University Press; 1997.

Goldstein H. Multilevel statistical models. New York: Wiley; 2011.

Gomes M, Ng ES, Grieve R, Nixon R, Carpenter J, Thompson SG. Developing appropriate methods for cost-effectiveness analysis of cluster randomized trials. Med Decis Mak. 2012;32(2):350–61.

Hardin JW. Generalized estimating equations (GEE). New York: Wiley Online Library; 2005.

Leyland AH, Goldstein H. Multilevel modelling of health statistics. New York: Wiley; 2001.

Gomes M, Grieve R, Nixon R, Ng ES, Carpenter J, Thompson SG. Methods for covariate adjustment in cost-effectiveness analysis that use cluster randomised trials. Health Econ. 2012;21(9):1101–18.

Goldfeld, K. S. simstudy: Simulation of study data (2018). R package version 0.1.16 retrieved from https://cran.r-project.org/web/packages/simstudy/index.html.

Team RC. R: a language and environment for statistical computing. Vienna: R Foundation for statistical computing; 2017.

Morris TP, White IR, Crowther MJ. Using simulation studies to evaluate statistical methods. Stat Med. 2019;38(11):2074–102.

O'Hagan A, Stevens JW. Assessing and comparing costs: how robust are the bootstrap and methods based on asymptotic normality? Health Econ. 2003;12(1):33–49.

Donner A. Some aspects of the design and analysis of cluster randomization trials. J R Stat Soc: Ser C (Appl Stat). 2002;47(1):95–113.

Coretti S, Ruggeri M, McNamee P. The minimum clinically important difference for EQ-5D index: a critical review. Expert Rev Pharmacoecon Outcomes Res. 2014;14(2):221–33.

Whitehead SJ, Ali S. Health outcomes in economic evaluation: the QALY and utilities. Br Med Bull. 2010;96(1):5–21.

Barrie S. QALYs, euthanasia and the puzzle of death. J Med Ethics. 2015;41(8):635.

Mihaylova B, Briggs A, O'Hagan A, Thompson SG. Review of statistical methods for analysing healthcare resources and costs. Health Econ. 2011;20(8):897–916.

Gilleskie DB, Mroz TA. A flexible approach for estimating the effects of covariates on health expenditures. J Health Econ. 2004;23(2):391–418.

Polsky D, Glick HA, Willke R, Schulman K. Confidence intervals for cost-effectiveness ratios: a comparison of four methods. Health Econ. 1997;6(3):243–52.

Willan AR, Briggs AH, Hoch JS. Regression methods for covariate adjustment and subgroup analysis for non-censored cost-effectiveness data. Health Econ. 2004;13(5):461–75.

Aarts E, Dolan CV, Verhage M, van der Sluis S. Multilevel analysis quantifies variation in the experimental effect while optimizing power and preventing false positives. BMC Neurosci. 2015;16:94.

Killip S, Mahfoud Z, Pearce K. What is an intracluster correlation coefficient? Crucial concepts for primary care researchers. Ann Fam Med. 2004;2(3):204–8.

Walters SJ, Brazier JE. Comparison of the minimally important difference for two health state utility measures: EQ-5D and SF-6D. Qual Life Res. 2005;14(6):1523–32.

Kim S-K, Kim S-H, Jo M-W, Lee S-I. Estimation of minimally important differences in the EQ-5D and SF-6D indices and their utility in stroke. Health Qual Life Outcomes. 2015;13:32.

Pickard AS, Neary MP, Cella D. Estimation of minimally important differences in EQ-5D utility and VAS scores in cancer. Health Qual Life Outcomes. 2007;5:70.

Gray AM, Clarke PM, Wolstenholme JL, Wordsworth S. Applied methods of cost-effectiveness analysis in healthcare. Oxford: Oxford University Press; 2011.

Eldridge SM, Ashby D, Kerry S. Sample size for cluster randomized trials: effect of coefficient of variation of cluster size and analysis method. Int J Epidemiol. 2006;35(5):1292–300.

Barber JA, Thompson SG. Analysis of cost data in randomized trials: an application of the non-parametric bootstrap. Stat Med. 2000;19(23):3219–36.

Ren S, Lai H, Tong W, Aminzadeh M, Hou X, Lai S. Nonparametric bootstrapping for hierarchical data. J Appl Stat. 2010;37(9):1487–98.

Glick H, Doshi JA, Sonnad SS, Polsky D. Economic evaluation in clinical trials. 2nd ed. Oxford: Oxford University Press; 2015.

Fenwick E, O'Brien BJ, Briggs A. Cost-effectiveness acceptability curves—facts, fallacies and frequently asked questions. Health Econ. 2004;13(5):405–15.

Hoch JS, Dewa CS. Advantages of the net benefit regression framework for economic evaluations of interventions in the workplace: a case study of the cost-effectiveness of a collaborative mental health care program for people receiving short-term disability benefits for psychiatric disorders. J Occup Environ Med. 2014;56(4):441–5.

van Hout BA, Al MJ, Gordon GS, Rutten FF. Costs, effects and C/E-ratios alongside a clinical trial. Health Econ. 1994;3(5):309–19.

Diaz-ordaz K, Kenward M, Gomes M, Grieve R. Multiple imputation methods for bivariate outcomes in cluster randomised trials. Stat Med. 2016;35(20):3482–96.

Huang FL. Multilevel modeling myths. Sch Psychol Q. 2018;33(3):492.

Huang FL. Multilevel modeling and ordinary least squares regression: how comparable are they? J Exp Educ. 2018;86(2):265–81.

Astin AW, Denson N. Multi-campus studies of college impact: which statistical method is appropriate? Res High Educ. 2009;50(4):354–67.

Huang FL. Alternatives to multilevel modeling for the analysis of clustered data. J Exp Educ. 2016;84(1):175–96.

Lai MH, Kwok O-M. Examining the rule of thumb of not using multilevel modeling: the “design effect smaller than two” rule. J Exp Educ. 2015;83(3):423–38.

Wilcox R. Chapter 11—more regression methods. In: Wilcox R, editor. Introduction to robust estimation and hypothesis testing. 3rd ed. Boston: Academic Press; 2012. p. 533–629.

Briggs A, Nixon R, Dixon S, Thompson S. Parametric modelling of cost data: some simulation evidence. Health Econ. 2005;14(4):421–8.

Nixon RM, Wonderling D, Grieve RD. Non-parametric methods for cost-effectiveness analysis: the central limit theorem and the bootstrap compared. Health Econ. 2010;19(3):316–33.

Pinto EM, Willan AR, O'Brien BJ. Cost-effectiveness analysis for multinational clinical trials. Stat Med. 2005;24(13):1965–82.

Law AM, Kelton WD, Kelton WD. Simulation modeling and analysis. New York: McGraw-Hill; 1991.

Van der Leeden R, Meijer E, Busing FM. Resampling multilevel models. Handbook of multilevel analysis. Berlin: Springer; 2008. p. 401–433.

Author information

Authors and Affiliations

Contributions

ME: study rationale and design, writing the manuscript, analysis, interpretation and reflection, rewriting the manuscript. JMVD: study rationale and design, interpretation and reflection, reviewing the manuscript. KSG: analysis, interpretation and reflection, reviewing the manuscript. MWH: interpretation and reflection, reviewing the manuscript. MWT: interpretation and reflection, reviewing the manuscript. JEB: study rationale and design, interpretation and reflection, reviewing the manuscript. All authors have participated in the planning, execution, interpretation and/or reporting of the study and all of them approved the final version of the manuscript.

Corresponding author

Ethics declarations

Funding

No financial support for this study was provided.

Conflict of interest

The authors declare that there is no conflict of interest.

Availability of data and material

Data can be generated using the provided simulation code.

Code availability

Analysis can be performed using the provided software code (Stata®).

Electronic supplementary material

Below is the link to the electronic supplementary material.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License, which permits any non-commercial use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc/4.0/.

About this article

Cite this article

El Alili, M., van Dongen, J.M., Goldfeld, K.S. et al. Taking the Analysis of Trial-Based Economic Evaluations to the Next Level: The Importance of Accounting for Clustering. PharmacoEconomics 38, 1247–1261 (2020). https://doi.org/10.1007/s40273-020-00946-y

Published:

Issue Date:

DOI: https://doi.org/10.1007/s40273-020-00946-y