Abstract

Time is an important aspect of health economic evaluation, as the timing and duration of clinical events, healthcare interventions and their consequences all affect estimated costs and effects. These issues should be reflected in the design of health economic models. This article considers three important aspects of time in modelling: (1) which cohorts to simulate and how far into the future to extend the analysis; (2) the simulation of time, including the difference between discrete-time and continuous-time models, cycle lengths, and converting rates and probabilities; and (3) discounting future costs and effects to their present values. We provide a methodological overview of these issues and make recommendations to help inform both the conduct of cost-effectiveness analyses and the interpretation of their results. For choosing which cohorts to simulate and how many, we suggest analysts carefully assess potential reasons for variation in cost effectiveness between cohorts and the feasibility of subgroup-specific recommendations. For the simulation of time, we recommend using short cycles or continuous-time models to avoid biases and the need for half-cycle corrections, and provide advice on the correct conversion of transition probabilities in state transition models. Finally, for discounting, analysts should not only follow current guidance and report how discounting was conducted, especially in the case of differential discounting, but also seek to develop an understanding of its rationale. Our overall recommendations are that analysts explicitly state and justify their modelling choices regarding time and consider how alternative choices may impact on results.

Time is an important aspect of the accurate modelling of cost effectiveness in ways that are not always obvious or made explicit in cost-effectiveness analyses.

The choice of cohorts and time horizons in the model should depend on which cohorts will be affected by the policy decision and how long that policy will apply.

Cost-effectiveness estimates can be very sensitive to discounting; therefore, not only should discounting be applied correctly but alternatives to the standard discounting model should not be adopted without careful consideration.

1 Introduction

Time is a continuous measure used to order sequences of events and to quantify the periods between them. It is an important aspect of health economic evaluation, as the timing and duration of clinical events, healthcare interventions and their consequences all have implications for estimated costs and effects [1, 2]. In addition, the policy questions informed by health economic analyses can vary in their scope in time. Some policy choices only have short-term implications, whereas the outcomes of others may be felt decades later, either because the effects are not realised immediately or because they affect both current and future cohorts. Therefore, health economic models need to simulate time appropriately if they are to reliably inform resource allocation. Despite their central importance, the choices regarding time in modelling are often not explicitly stated. Consequently, it is often unclear how time influences both the technical adequacy of models and their correspondence to the policy choices they seek to inform.

This article reviews three principal methodological issues in the modelling of time in cost-effectiveness analysis (CEA). First, we address the structure of models with respect to time, including which cohorts to simulate and the implications this has for how far into the future to extend the analysis. Second, we address time with respect to the running of simulation models, including the difference between discrete-time and continuous-time models, how to choose the cycle length and how to accurately convert rates to probabilities. Finally, we address the theory and practice of discounting future costs and effects to their present values to account for time preference and intertemporal opportunity costs.

The purpose of this article is to provide both a guide to the concept of time for those conducting health economic evaluations and an aid to those interpreting findings from the literature. It considers time primarily in the context of simulation modelling for CEA. However, the issues discussed are also relevant for related forms of evaluation, such as budget impact analysis and comparative effectiveness analysis. Although this article cannot be exhaustive in scope or detail, it seeks to provide an accessible overview and an introduction to the relevant literature. It is hoped that this article will encourage analysts to consider time carefully and write more explicitly about how time relates to the modelling of decision problems.

2 Cohort Selection and Time Horizons

An important modelling choice is how long to simulate the implementation of an intervention and its consequent effects. This choice largely depends on whether the intervention’s effects are transitory or permanent, how many cohorts are simulated and whether the intervention has any shared effects. A number of factors that influence cost effectiveness vary with both age and calendar time; therefore, the questions of how long to simulate an intervention’s implementation and over how many cohorts are important for model estimates. For example, rates of disease progression vary with age, and health technologies and epidemiological characteristics change over time. Consequently, an intervention’s cost effectiveness should not be considered a fixed property, but specific to the population and time period in question. The following sections further describe cohort selection before considering the implications for model time horizons.

2.1 Cohort Selection

A cohort is a number of intervention recipients grouped together for analytical purposes. Many CEAs use single-cohort models [3], which simulate one cohort of patients over time. By contrast, multi-cohort models simulate multiple recipient cohorts. Although cohorts can be differentiated by other factors, such as sex, race and year of diagnosis, this article focuses on cohorts that are differentiated by their birth year. Closed models are defined as models that simulate only those cohorts present in the initial period, whereas open models allow new cohorts to enter over time [4, 5]. Some refer to such open models as population models [6, 7].

The policy choices facing decision makers are usually not for a single cohort of intervention recipients. Nevertheless, single-cohort models have the benefit of conceptual simplicity and can be adequate to answer the resource allocation decision in certain circumstances. Indeed, the widespread use of single-cohort models may reflect an often implicit assumption that the cost effectiveness demonstrated in a given cohort is representative for other cohorts, both now and in the future. Such an assumption is reasonable if there are no relevant differences between cohorts, there are no (relevant) shared effects between cohorts and if large real price changes or technological innovations are not anticipated in the near future.

In some cases there will be important differences in cost effectiveness between cohorts; therefore, it can be helpful to differentiate between types of cohorts. We can define the new recipient cohorts in any given year as the incident cohorts and the cohorts already eligible for the intervention as the prevalent cohorts. For example, the introduction of an age-based prevention programme such as screening will affect three principal categories of cohorts: (1) the current incident cohort with current age equal to the screening start age; (2) the prevalent cohorts already aged within the screening age range; and (3) future incident cohorts that will age into the screening age range as time progresses. In Fig. 1, which depicts a hypothetical intervention that takes 3 years per patient to complete, these three categories correspond to cohort 3, cohorts 1 and 2, and cohorts 4–7, respectively. The distinction between incident and prevalent cohorts only applies to interventions that take a long time to complete per cohort. This example can be contrasted with infant bloodspot screening. Since infant bloodspot screening only occurs once and the duration of intervention for a given cohort is short, such an intervention will only have one eligible cohort in any given year.

Fig. 1 A multi-cohort model illustrating a 3-year intervention duration, a 5-year implementation period and a lifetime analytic time horizon. Each bar represents the lifespan of a cohort with a life expectancy of 80 years. The oldest cohort is born in 1943 and dies in 2023. The intervention starts at age 70 years and implementation begins in 2015; consequently, the two oldest cohorts start the intervention after age 70 years. If the implementation period were sufficiently long, all cohorts would complete the intervention. However, imposing a 5-year implementation period will censor some implementation for the youngest two cohorts. If effects are assessed until the death of the youngest cohorts then the analytic horizon is the year 2029.

Differences between the current incident cohort and future incident cohorts are one obvious reason for using a multi-cohort model [8]. For example, in the context of screening for the prevention of cervical cancer, the vaccination of teenage girls against human papillomavirus (HPV) means that the expected incidence of disease in future cohorts is lower, with the result that screening is anticipated to be less cost effective than in the current incident cohort of unvaccinated women [9]. Multi-cohort models that include future incident cohorts can account for such differences.

A second, but less obvious, reason for using multi-cohort models is the differences between the current incident and prevalent cohorts [10]. For example, in an age-based screening programme the prevalent cohorts start screening part-way through the eligible screening age range, and thus only receive what remains of the screening schedule. The cost effectiveness of this remaining portion of the intervention will likely differ from that of the complete screening schedule. Multi-cohort models can be used to account for such differences.

A third and important reason for using multi-cohort models is to simulate shared effects between cohorts, such as herd immunity due to vaccination against an infectious disease. These simulations often involve multiple cohorts and employ transmission dynamic models, in which the risk of infection varies with the proportion of the population infectious at a given time [11]. Such shared effects models typically simulate all individuals in whom transmission may be affected rather than only those who receive the intervention in question. For example, in the case of childhood influenza vaccination the entire population will often be modelled, as transmission can take place across all age groups [12].

When using multi-cohort models, analysts should consider whether to report estimates aggregated over all cohorts, or to report disaggregated results for specific subgroups [9, 13]. This choice largely depends on whether subgroup-specific policy choices are feasible. Cohort-specific estimates are less meaningful where there are shared effects between cohorts, such as in infectious diseases, as it is not possible to isolate cohort-specific effects. If estimates for multiple cohorts are aggregated together, the number of cohorts modelled and the consequences this has for incremental cost-effectiveness ratios (ICERs) and the correspondence to policy questions should be carefully considered [9].

We recommend that analysts explicitly define the simulated cohorts and describe how they relate to the policy choices faced by decision makers with respect to the patient population and time period in question. If none of the reasons for including multiple cohorts apply, we recommend using single-cohort models in the interests of parsimony. For multi-cohort models we recommend that the choice of reporting aggregate or disaggregated estimates is justified.

2.2 Intervention Duration, Implementation Period and Analytic Horizon

The number of cohorts modelled and the simulation of shared effects have implications for how long an intervention should be simulated and how long to assess its effects. There is a lack of consensus terminology regarding time horizons in CEA modelling. To address this we define and then provide advice around three important choices. The first is the intervention duration, which we define as the length of time over which the intervention is applied per person or cohort. This may be short in the case of infant bloodspot screening or be lifelong where a medication is taken daily until death. The intervention duration is inherent to the intervention itself and generally we recommend modelling until the completion of the intervention duration for all recipient cohorts. Curtailing the intervention duration, therefore, is to model a shorter course of an intervention than would be expected in reality, and, hence, may be unrepresentative of actual costs and effects.

Next, we define the implementation period as the period over which the intervention is applied over all simulated cohorts. This is identical to the intervention duration in a single-cohort model. In a multi-cohort model it is typically the time between the start of the intervention for the first cohort and the end of the intervention for the last cohort. However, in some cases analysts constrain the implementation period to some point in time before the conclusion of the intervention for all cohorts [14, 15]. For example, in Fig. 1 a 5-year implementation period would curtail implementation for some cohorts at year 2020. Whereas some cohorts (cohorts 3–5) complete the full intervention, other cohorts (cohorts 6 and 7) are constrained from completing the full course. To avoid curtailing the intervention duration we recommend specifying a sufficiently long implementation period.
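The Fig. 1 example can be reproduced with a short calculation. The following Python sketch is illustrative only: the variable and function names are hypothetical, and it assumes that the intervention is scheduled at ages 70, 71 and 72 years and that prevalent cohorts receive only the scheduled years that fall within the implementation period.

```python
# Illustrative reconstruction of the Fig. 1 example (names are hypothetical).
START_AGE = 70            # age at which the intervention is scheduled to start
DURATION = 3              # intervention duration per cohort, in years
FIRST_YEAR = 2015         # first year of implementation
IMPLEMENTATION_YEARS = 5  # implementation period covering 2015 to 2019
LIFE_EXPECTANCY = 80

birth_years = range(1943, 1950)  # cohorts 1 to 7
last_year = FIRST_YEAR + IMPLEMENTATION_YEARS - 1


def years_received(birth_year):
    """Count the scheduled intervention years that fall inside the implementation period."""
    first_scheduled = birth_year + START_AGE
    scheduled = range(first_scheduled, first_scheduled + DURATION)
    return sum(FIRST_YEAR <= year <= last_year for year in scheduled)


# Years of intervention received by each cohort (cohort number -> years).
received = {number: years_received(birth_year)
            for number, birth_year in enumerate(birth_years, start=1)}

# Cohorts that complete the full intervention duration.
complete = [number for number, years in received.items() if years == DURATION]

# Lifetime analytic horizon: the year in which the youngest cohort dies.
analytic_horizon = max(birth_years) + LIFE_EXPECTANCY

print(received, complete, analytic_horizon)
```

Under these assumptions only cohorts 3–5 complete the intervention: cohorts 1 and 2 miss the early years because they are prevalent cohorts, and cohorts 6 and 7 are censored by the 5-year implementation period.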

Finally, we define the analytic horizon as the period over which costs and effects are assessed. The analytic horizon should generally be long enough to capture all meaningful differences in costs and effects between the alternatives considered [16], which in many cases will be the lifetime of the cohorts modelled. Analyses with analytic horizons shorter than the cohorts’ lifetimes potentially omit relevant outcomes and therefore may provide incorrect results [13]. Some modellers adopt a cross-sectional approach that imposes a common implementation and analytic horizon over multiple cohorts; in some cases, this horizon is shorter than the cohorts’ lifetimes [17]. This cross-sectional interpretation contrasts with the more conventional longitudinal approach that appraises outcomes over the lifetime of the modelled individuals [18]. The cross-sectional approach has been recognised as less useful for appraising cost effectiveness and more relevant for assessing population health and budget impact [19, 20]. Such cross-sectional models are sometimes described as population models, although this usage does not necessarily coincide with the interpretation of population models as open models.

There are some common issues that apply to these three aspects of models. Models are typically used only to simulate a finite period of implementation and consequent effects [21], whereas the actual period of implementation and effects is often open-ended. For example, the intervention itself may be implemented permanently (e.g. having a continuous implementation period) or the resulting effects may be unending (e.g. with the eradication of an infectious disease). While decision models can be extended to include additional incident cohorts and the scope of appraisal may extend long into the future, this inevitably comes at the cost of increasingly uncertain estimates. Therefore, it is often appropriate to limit the analysis to the most immediate cohorts for which policy decisions are required, provided that the intervention has no shared effects.

In the case of interventions with shared effects, the choice of the number of cohorts to simulate, the implementation period and the analytic horizon all require particular consideration. Infectious disease models often apply a long implementation period simulating multiple future cohorts [22]. This is to capture the changes in disease incidence and transmission dynamics over time following the introduction of the intervention. Since some of the effects of the intervention may occur in cohorts other than the intervention’s direct recipients, there is often no natural lifetime horizon to apply [23]; however, the simulation still needs to cease somewhere. Accordingly, the cross-sectional approach described above is sometimes used. While this inevitably censors some implementation and effects, the omission of future outcomes can become negligible when the model is run far into the future due to discounting (simulating 100 years of vaccination is not uncommon [22]). Current practice for most such models is to halt the simulation once infection rates stabilise [22]; alternatively, the time horizon may be selected using the near-stabilisation of ICERs as a criterion. Although the uncertainty of estimates for the distant future may be high, a long timeframe seems to be the only way to obtain unbiased cost-effectiveness estimates for many interventions with shared effects.

Analyses typically assume that the intervention and related technologies remain stable over the period modelled. This is a pragmatic assumption as the emergence of new technologies typically cannot be anticipated. Therefore, it is advisable that all simulated cohorts complete their intervention duration, even though in actuality the intervention may become obsolete before the model’s implementation horizon [9]. This is preferable to assuming the intervention ceases, as that knowingly introduces bias by arbitrarily curtailing implementation. Similarly, a pragmatic approach commonly adopted is that prices are held constant [24]. The cost year from which prices are taken or inflated to should be as recent as possible and clearly stated [25]. Although the assumption that real prices remain constant is unlikely to hold, relatively small price changes are unlikely to alter the conclusions of most analyses. However, abrupt price reductions occurring on patent expiry of either the intervention of interest or its comparators might be relevant for reimbursement decisions [26, 27]. Nevertheless, the consequences of such future price changes are complex and it is unclear how they should be best handled in CEA modelling.

3 Simulating Time in Economic Evaluations

An important modelling consideration in CEA is exactly how the timing of clinical events is simulated. The level of realism used to simulate the timing of events also determines how accurately amounts of time (durations) are estimated. The main outcomes of CEAs are often defined in terms of amounts of time, such as the duration of a disease, quality-adjusted life-years (QALYs) and the duration (and thus costs) of an intervention. An incorrect assessment of these durations can therefore lead to inaccurate cost-effectiveness estimates.

3.1 Modelling Techniques

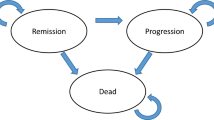

There are several possible modelling techniques for CEAs, the choice of which affects the way time is simulated. These modelling techniques can generally be divided into (1) decision tree models [28, 29]; (2) Markov and other state transition models [1, 16, 30]; and (3) discrete-event simulation models [31]. Decision trees simulate which clinical events will occur and in what order, to calculate expected costs and effects. They typically do not simulate the timing of events (i.e. time is not modelled explicitly). In state transition models, cohorts of patients are followed over time as they transition between a finite number of mutually exclusive possible health states. Most state transition models are discrete-time models, in which state membership (i.e. the number of individuals in a given health state) is only simulated at the beginning and end of each cycle and so is unknown between cycles. In discrete-event simulation models the timing of clinical events is simulated, rather than the transitions between states over fixed cycles. Therefore, such models are not restricted to pre-defined paths between health states. Many discrete-event simulations are continuous-time models that simulate the exact timing of each event, but a discrete-time approach is also possible. Note that the distinctions between modelling techniques are not always clear-cut, e.g. Markov models and state transition models can also be evaluated using discrete-event simulation.

Recommendations on when to use which modelling technique have been published previously [32–34]. However, as a general rule, for interventions with a short duration and only short-term effects (e.g. painkillers for a transient migraine), a model that does not explicitly simulate time, such as a decision tree, may be sufficient. Decision trees may also suffice for one-off treatments with long-term effects (e.g. a life-saving appendectomy), provided that the pay-offs at the end of the decision tree can be readily appraised and are adjusted to their present value (see Sect. 4). For interventions with complex long-term effects (e.g. gastric bypass surgery which may prevent or delay the onset of diabetes mellitus) or a long duration (e.g. a cancer screening programme with multiple screening rounds), it is necessary to use a model that explicitly models time [1].

3.2 Cycle Lengths and Half-Cycle Corrections

Modelling in discrete time can yield inaccurate cost-effectiveness estimates. This is because time is inherently continuous; treating it as discrete within simulation models leads to an approximation error in the estimation of state membership, because state membership (and thus treatments and patient characteristics) is then assumed to remain constant during cycles. Figure 2 shows a continuous survival function, the area under which represents the total time spent in a state over many individuals. The discrete approximation of that survival curve is shown by the step function. The resulting discrete-time approximation error is given by the lightly shaded area between the step function and the continuous survival function. A continuous-time model best represents reality and is thus the most accurate approach. A practical reason for discrete-time modelling is that state membership after each cycle is easily calculated, whereas continuous-time modelling requires more advanced modelling techniques, such as discrete-event simulation.

Fig. 2 The half-cycle correction (HCC) applied in two discrete-time models approximating a continuous survival function with long (a) and short (b) cycle lengths. The discrete-time approximation error is the lightly shaded area between the continuous survival curve and its discrete approximation represented by the step function. This error is larger in the model with a longer cycle length (a). The standard HCC is shown as the sum of the lightly and darkly shaded areas between the step function and a piecewise linear function joining state membership at the beginning and end of each cycle. a Shows how the HCC from each cycle of length t can be gathered, as represented by the column of shaded triangles, and then summed to yield an area equivalent to half a cycle of state membership at the start of the first cycle, represented by the shaded column.

Discrete-time models generally give sufficient realism regarding the timing of events, provided that the cycle length is short enough to ensure that the approximation error is small. Figures 2a and b show discrete-time approximations of the same continuous survival function with long and short cycles, respectively. The figure shows that the approximation error is larger with the long cycle length and that the short cycle model better approximates the continuous-time reality. However, even with a shorter cycle length the time spent in the state is underestimated. Furthermore, the solution of using short cycles is not always practical, as shorter cycles impose larger computational burdens and very short cycles may be required to make the approximation error negligible.

To reduce the approximation error without employing very short cycles, analysts often apply a half-cycle correction (HCC) or another type of continuity correction in state transition models [35]. The standard HCC consists of adding half a cycle of the state membership at the beginning of the first cycle and subtracting half a cycle of the membership at the end of the last cycle (see Fig. 2) [36]. While an HCC may substantially reduce the approximation error, the accuracy of the standard HCC has been debated [37–40]. These authors agree that the standard HCC is flawed and recommend instead the cycle-tree HCC (also known as the life table method), which calculates state membership as the average of state membership at the beginning and end of each cycle [40].

The cycle-tree HCC is visible in Fig. 2a as the sum of the lightly and darkly shaded areas between the discrete-time step function and the piecewise linear function that joins state membership at the beginning and the end of each cycle. In the case of this convex survival function the HCC slightly overestimates total state membership, and the approximation error with a cycle-tree HCC is shown by the darkly shaded area. In this example the standard HCC and the cycle-tree HCC are equivalent. This is illustrated by summing the cycle-tree HCCs from each cycle, yielding the area of the grey column, which represents half a cycle of state membership at the beginning of the first cycle.
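The relationship between the uncorrected estimate, the standard HCC and the cycle-tree HCC can be illustrated numerically. The sketch below is not taken from the article: it assumes a single state with exponential survival at a hypothetical constant exit rate, and it assumes the uncorrected model counts state membership at the end of each cycle. The function name and parameter values are illustrative.

```python
import math


def state_time(rate, cycle_length, horizon):
    """Estimate total time in a state with survival S(t) = exp(-rate * t)."""
    n_cycles = round(horizon / cycle_length)
    # State membership at every cycle boundary, from t = 0 to the horizon.
    s = [math.exp(-rate * k * cycle_length) for k in range(n_cycles + 1)]

    # Uncorrected: membership counted at the end of each cycle (step function).
    uncorrected = cycle_length * sum(s[1:])
    # Standard HCC: add half a cycle at the start, subtract half a cycle at the end.
    standard_hcc = uncorrected + 0.5 * cycle_length * (s[0] - s[-1])
    # Cycle-tree HCC (life table method): average of start and end membership per cycle.
    cycle_tree_hcc = cycle_length * sum(0.5 * (a + b) for a, b in zip(s[:-1], s[1:]))
    # Exact area under the continuous survival curve.
    exact = (1 - math.exp(-rate * horizon)) / rate

    return {"uncorrected": uncorrected, "standard_hcc": standard_hcc,
            "cycle_tree_hcc": cycle_tree_hcc, "exact": exact}


# Hypothetical inputs: exit rate of 0.2 per year over a 20-year horizon.
long_cycle = state_time(rate=0.2, cycle_length=1.0, horizon=20.0)
short_cycle = state_time(rate=0.2, cycle_length=1.0 / 12, horizon=20.0)
print(long_cycle)
print(short_cycle)
```

In this setting the two corrections coincide, the uncorrected estimate falls below the exact value and the corrected estimate slightly exceeds it, with both errors shrinking as the cycle shortens.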

Although an HCC can reduce the discrete-time approximation error, it cannot fully correct it if the cycle length is too long. While the remaining approximation error is small when a short cycle length is used as in Fig. 2b (it is too small to be visible in the figure), this error can remain large if the cycle length is long. In Fig. 2a, for example, although the HCC reasonably approximates the survival function in cycles 2 and 3, it overestimates survival in the first cycle due to the large per-cycle transition probability. Consequently, the HCC is not sufficient in a model with a long cycle. Unfortunately, this dependence of the adequacy of the HCC on the cycle length is not always reflected in existing guidelines [41]. So, although a cycle-tree HCC will generally improve accuracy, analysts should be aware both that the gain in accuracy may be negligible if the cycle length is already short and that estimates may remain biased if the cycle length is too long.

When choosing the cycle length, modellers should take into account the accuracy of the results as well as the computation time and complexity of the analysis. As computation time increases with the inverse of the cycle length, using short cycles may result in impractically long run times in some models. However, advances in computing power have generally alleviated this concern. Given this, we recommend not choosing the cycle length simply based on data availability (e.g. the interval between measurements in a longitudinal study) or using default values (e.g. 1 year). If the computation time is acceptable, we recommend using a cycle length that is sufficiently short that an HCC is not required. Such a cycle length may be much shorter than is considered clinically relevant, e.g. chronic diseases that take years to progress may be simulated using a cycle as short as 1 week.

The size of the discrete-time approximation error, and thus the accuracy of the model results, depends on whether an HCC is applied and on the size of the transition probabilities in the model, which in turn depend on the cycle length. An analysis of how the approximation error varies with these two factors is given in the Appendix, using an example of a state transition model with two states. To assess whether a given cycle length is short enough, we recommend checking the largest probability of leaving the current state per cycle (i.e. the sum of the transition probabilities out of the current state). An HCC is likely not necessary if this probability is lower than 5 % for all states and time periods, as the relative approximation error must be smaller than 2.5 % in that case. If this probability exceeds 40 % for some states, then it is possible that the results will be inaccurate even with an HCC. In such cases, we recommend reducing the cycle length or performing a sensitivity analysis on the cycle length to verify that the approximation error does not substantially affect the results. In models with a maximum per-cycle probability between 5 and 40 %, an HCC is probably necessary. An adequately implemented HCC (we recommend the cycle-tree HCC) should be sufficient for accurate estimates in that case.
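The check described above can be sketched as a small helper. This is an illustration of the rule of thumb rather than a validated tool: the function name, the example matrices and the wording of the returned advice are hypothetical, and a model with time-varying probabilities would need the check applied to the matrix of every time period.

```python
def assess_cycle_length(transition_matrix):
    """Apply the 5 % and 40 % rule of thumb to a per-cycle transition matrix.

    transition_matrix[i][j] is the per-cycle probability of moving from state i
    to state j. Returns the largest per-cycle probability of leaving any state
    together with the corresponding advice.
    """
    max_exit = max(
        sum(p for j, p in enumerate(row) if j != i)  # probability of leaving state i
        for i, row in enumerate(transition_matrix)
    )
    if max_exit < 0.05:
        advice = "HCC likely not necessary"
    elif max_exit > 0.40:
        advice = "shorten the cycle or run a sensitivity analysis on cycle length"
    else:
        advice = "HCC probably necessary (cycle-tree HCC recommended)"
    return max_exit, advice


# Hypothetical three-state matrices with small, moderate and large exit probabilities.
short_cycle = [[0.97, 0.02, 0.01], [0.00, 0.96, 0.04], [0.00, 0.00, 1.00]]
moderate_cycle = [[0.70, 0.20, 0.10], [0.00, 0.80, 0.20], [0.00, 0.00, 1.00]]
long_cycle = [[0.50, 0.30, 0.20], [0.00, 0.60, 0.40], [0.00, 0.00, 1.00]]

for matrix in (short_cycle, moderate_cycle, long_cycle):
    print(assess_cycle_length(matrix))
```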

Note that it is also possible to evaluate a state transition model as a continuous-time model, so that the approximation error disappears and no HCC is needed. This is typically done using discrete-event simulation, but analytic approaches are sometimes also possible [42].

3.3 Changing the Cycle Length

Calculating the transition probabilities associated with different cycle lengths in state transition models can be difficult. For transition probabilities where there is only one possible transition (and no reverse transitions), the calculations are relatively straightforward; a transition probability \( P_{t_{2}} \) using a cycle of \( t_{2} \) years can be transformed into a transition probability \( P_{t_{1}} \) with a cycle of \( t_{1} \) years using \( P_{t_{1}} = 1 - (1 - P_{t_{2}})^{t_{1}/t_{2}} \), and the transition probability \( P_{t_{1}} \) can be calculated from a transition rate \( r \) as \( P_{t_{1}} = 1 - {\text{e}}^{-rt_{1}} \) [43, 44]. As the transition probabilities are often estimated using observational data that do not have the same measurement interval as the cycle length, such conversion formulas are typically required.
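The two conversion formulas translate directly into code. The following sketch uses hypothetical function names and example values, and it applies only to the single-transition case described above.

```python
import math


def rescale_probability(p_t2, t2, t1):
    """Convert a probability for a cycle of t2 years into one for a cycle of t1 years."""
    return 1 - (1 - p_t2) ** (t1 / t2)


def rate_to_probability(rate, t1):
    """Convert a constant transition rate (per year) into a probability for a cycle of t1 years."""
    return 1 - math.exp(-rate * t1)


# Hypothetical example: an annual probability of 0.14 converted to a monthly cycle.
annual_probability = 0.14
monthly_probability = rescale_probability(annual_probability, t2=1.0, t1=1.0 / 12)

# Converting back to an annual cycle recovers the original value.
round_trip = rescale_probability(monthly_probability, t2=1.0 / 12, t1=1.0)

# The same monthly probability obtained via the implied constant annual rate.
implied_rate = -math.log(1 - annual_probability)
via_rate = rate_to_probability(implied_rate, t1=1.0 / 12)

print(monthly_probability, round_trip, via_rate)
```

The monthly probability is approximately 0.0125, which is larger than the 0.0117 obtained by simply dividing the annual probability by 12.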

These conversion formulas are not necessarily exact for transition probabilities in models with multiple states. Consider the example of a three-state model with an annual cycle shown in Fig. 3a [45]. Patients progress from decompensated cirrhosis (DeCirr) to hepatocellular carcinoma (HeCarc) or death, and patients with HeCarc have an increased risk of dying. The annual transition probability from DeCirr to death of 0.14 includes two possible paths: (1) patients can develop HeCarc and die from HeCarc in the same year; or (2) patients can die without developing HeCarc. The univariate conversion of probabilities to a different cycle length ignores the first possibility, and can thus lead to incorrect transition probabilities. Figure 3 shows that the annual transition probabilities out of DeCirr are quite different between the initial values and those based on conversion to and back from monthly probabilities. Note that this bias cannot be reduced by using an HCC.
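The bias can be demonstrated by converting an annual matrix to monthly probabilities one transition at a time and then compounding the monthly matrix over 12 cycles. In the sketch below only the DeCirr-to-death probability of 0.14 comes from the text; the other two probabilities are hypothetical placeholders, not the values used by Chhatwal et al. [45], and the helper names are illustrative.

```python
def matmul(a, b):
    """Multiply two square matrices stored as lists of lists."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def univariate_monthly(annual):
    """Convert each off-diagonal annual probability separately to a monthly probability."""
    n = len(annual)
    monthly = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i != j:
                monthly[i][j] = 1 - (1 - annual[i][j]) ** (1 / 12)
        # The probability of remaining in the state is the remainder.
        monthly[i][i] = 1 - sum(monthly[i][j] for j in range(n) if j != i)
    return monthly


# States: DeCirr, HeCarc, death. Only 0.14 is from the text; 0.07 and 0.43 are hypothetical.
annual = [[0.79, 0.07, 0.14],
          [0.00, 0.57, 0.43],
          [0.00, 0.00, 1.00]]

monthly = univariate_monthly(annual)

# Annual probabilities implied by running the monthly model for 12 cycles.
implied_annual = monthly
for _ in range(11):
    implied_annual = matmul(implied_annual, monthly)

for original_row, implied_row in zip(annual, implied_annual):
    print(original_row, [round(p, 4) for p in implied_row])
```

With these inputs the HeCarc row, which has a single exit, is recovered exactly, whereas the probabilities out of DeCirr are not.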

Fig. 3 The effect of ignoring the possibility of multiple events per cycle when changing the cycle length in state transition models. A Markov model with three health states is shown: decompensated cirrhosis (DeCirr), hepatocellular carcinoma (HeCarc) and death. a Shows the model with annual cycle; b shows the model transformed to monthly cycles using a univariate conversion of probabilities; and c shows the implied annual transition probabilities of the Markov model using monthly cycles (adapted from Chhatwal et al. [45]).

In some cases it is possible to use matrix roots based on eigendecompositions to change the cycle length in a Markov model [45]. These matrix roots take into account the possibility of multiple events per cycle, and thus yield accurate results. Another solution is to choose a short cycle length, such that the probability of multiple clinical events occurring during a cycle is very small, thereby minimising the bias shown in Fig. 3. Estimating transition probabilities from observational data for models with short cycles can be difficult if the observational intervals are long; however, appropriate statistical methods have been described in the literature [46].
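A matrix root based on an eigendecomposition can be sketched with NumPy as follows. This is a minimal illustration under stated assumptions rather than the procedure of Chhatwal et al. [45]: the function name is hypothetical, only the DeCirr-to-death probability of 0.14 comes from the text, and the remaining matrix entries are placeholders. The sketch assumes the matrix is diagonalisable and rejects roots that are complex or contain negative entries, which is why the approach is only possible in some cases.

```python
import numpy as np


def matrix_root(p, n):
    """Return the n-th root of a transition matrix via its eigendecomposition."""
    eigenvalues, eigenvectors = np.linalg.eig(p)
    root = (eigenvectors
            @ np.diag(eigenvalues.astype(complex) ** (1.0 / n))
            @ np.linalg.inv(eigenvectors))
    # A usable root must be real with non-negative entries.
    if np.abs(root.imag).max() > 1e-10 or root.real.min() < -1e-10:
        raise ValueError("no valid real, non-negative root found for this matrix")
    return root.real


# States: DeCirr, HeCarc, death. Only 0.14 is from the text; 0.07 and 0.43 are hypothetical.
annual = np.array([[0.79, 0.07, 0.14],
                   [0.00, 0.57, 0.43],
                   [0.00, 0.00, 1.00]])

monthly = matrix_root(annual, 12)

# Compounding the monthly matrix over 12 cycles recovers the annual matrix.
implied_annual = np.linalg.matrix_power(monthly, 12)

print(np.round(monthly, 5))
print(np.round(implied_annual, 5))
```

With these inputs the monthly probability of moving directly from DeCirr to death is smaller than the value given by the univariate conversion, because part of the annual mortality from DeCirr arises through HeCarc.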

4 Discounting and Time Preference

People typically prefer to enjoy good things sooner rather than later. This tendency is called positive time preference, and it means that the present value of future costs or health effects is less than their value at the time they will be realised [47]. Discounting is the method used to find the present value of future outcomes in economic evaluation. Discounting is generally easy to implement and country-specific CEA guidelines usually give clear advice on what rates to apply. The near-universal consensus is to discount costs and health effects at an equal discount rate that remains constant over time, typically ranging from 3 to 5 % [47]. Nevertheless, discounting remains a much-debated topic [48], undoubtedly partly because it leads to a worsening of ICERs for many interventions.

4.1 The Standard Discounting Model

Discounting is usually applied as a discrete function, using an annual discount factor of \( \delta_{t} = (1 + r)^{-(t - 1)} \), where r is the annual discount rate and t is the time period starting at t = 1 in the discount year. Accordingly, all outcomes within each given year are discounted equally, irrespective of when in the year they occur. Although it may be more theoretically consistent to apply a continuous discount factor of the form \( \delta_{t} = {\text{e}}^{-r(t - 1)} \), this is rarely applied in practice.
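As a minimal sketch of the two discount factors just described, the following Python snippet computes present values for a stream of annual outcomes. The function names and the example stream of 100 per year for 3 years at a 3 % rate are illustrative assumptions, not taken from any guideline.

```python
import math


def discrete_factor(r, t):
    """Annual discrete discount factor; t = 1 is the discount year."""
    return (1.0 + r) ** (-(t - 1))


def continuous_factor(r, t):
    """Continuous analogue of the discount factor, using the same r and t."""
    return math.exp(-r * (t - 1))


def present_value(stream, r, factor=discrete_factor):
    """Sum of discounted outcomes, where stream[0] falls in period t = 1."""
    return sum(x * factor(r, t) for t, x in enumerate(stream, start=1))


costs = [100.0, 100.0, 100.0]  # hypothetical annual costs
pv_discrete = present_value(costs, 0.03)
pv_continuous = present_value(costs, 0.03, factor=continuous_factor)
print(round(pv_discrete, 2), round(pv_continuous, 2))
```

Outcomes in the discount year itself (t = 1) are left undiscounted by both forms. For later periods and the same value of r, the continuous form discounts slightly more heavily than the discrete form.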

The literature discussing the rationale for discounting is extensive [47, 49, 50]. There are two principal arguments supporting discounting [51]. The first is that positive time preference is an undeniable aspect of human behaviour and that social decision makers should reflect private preferences [50]. The second is that there is an opportunity cost of spending on health rather than other goods and services: if money is spent now to achieve future health gains, these future gains need to be sufficiently large to outweigh the return on funds allocated to other uses [51]. While there are competing arguments on what should represent the rate of return of alternative uses of funds, government borrowing costs as represented by bond yields are typically used to represent this intertemporal opportunity cost [48]. Note that inflation is not a reason to discount and that discounting should be applied irrespective of any anticipated real price changes [52].

Most countries recommend discounting costs and effects at a common rate, in which case the practical advice on discounting is straightforward: both costs and effects should be discounted according to national guidelines and the analysis should clearly state the rates applied. In the interests of comparability between countries, it is useful to supplement the base case with estimates for the rates of 3 and 5 %, as recommended by The Panel on Cost-Effectiveness in Health and Medicine [48]. These rates are also a reasonable recommendation for countries without official discounting guidance.

A univariate sensitivity analysis featuring discount rates ranging from 0 to 6 % is a common requirement of CEA guidelines. When conducting such sensitivity analyses, the discount rates on costs and effects should be varied together, so that equal discounting is maintained. Such sensitivity analyses usefully illustrate the effects of discounting to decision makers and should be reported. Nevertheless, analysts should not overemphasise undiscounted outcomes, as this may give the impression that the intervention is more cost effective than in reality. What is often not appreciated is that the relative ranking of interventions in terms of ICERs may be less sensitive to discounting than the ICER itself. Discount rates should not be varied during probabilistic sensitivity analysis as the appropriate discount rate is not usually subject to parameter uncertainty.

Discounting is used to estimate the present value of future costs and effects at a single point in time known as the discount year. Although the choice of discount year will affect the absolute size of discounted costs and effects, it does not influence ICERs under equal discounting, as adjusting the discount year results in equiproportionate changes in the numerator and the denominator of the ICER. Accordingly, the choice of discount year is usually not critical to the outcome of a CEA [53], but may be relevant to cost analyses. Nevertheless, it is conventional practice to use the year the intervention begins as the discount year, as this represents a decision year in which the intervention may or may not be implemented. However, it is necessary to maintain a common discount year when comparing multiple possible initiation years, for example with alternative start ages for prophylactic statin therapy in a given cohort.
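The invariance of the ICER to the discount year under equal discounting can be checked numerically. The sketch below uses hypothetical incremental cost and QALY streams and a hypothetical helper `pv`; moving the discount year 10 years before the intervention start shrinks both present values by the same factor and leaves their ratio unchanged.

```python
def pv(stream, rate, years_before_start=0):
    """Present value of a stream whose element i occurs i years after the
    intervention starts, discounted to a year that lies years_before_start
    years before the intervention start."""
    return sum(x / (1.0 + rate) ** (i + years_before_start)
               for i, x in enumerate(stream))


# Hypothetical incremental costs and QALYs per year after intervention start
inc_costs = [1000.0, 200.0, 200.0, 200.0]
inc_qalys = [0.0, 0.05, 0.10, 0.10]


def icer(rate, years_before_start):
    """ICER under equal discounting of costs and effects."""
    return (pv(inc_costs, rate, years_before_start)
            / pv(inc_qalys, rate, years_before_start))


icer_start_year = icer(0.03, 0)   # discount year = intervention start year
icer_earlier_year = icer(0.03, 10)  # discount year 10 years earlier
print(round(icer_start_year, 2), round(icer_earlier_year, 2))
```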

The adequacy of the standard discounting model with constant, equal discount rates for costs and effects has been debated, as there is considerable evidence that individuals’ time preferences do not accord with this model [54]. For instance, individuals tend to demonstrate decreasing rates of time preference as outcomes become more distant [55]. This has prompted proposals of non-constant discounting in which the discount rate falls over time [49, 56]. However, such discounting results in dynamic inconsistency, whereby the optimal policy choice can alter as time advances and outcomes become more immediate [48]. Apparently, the only national CEA guidelines recommending a declining discount rate are those of France, which permit a fall in the discount rate after 30 years [57], although the guidance is ambiguous regarding implementation. A declining discount rate has also been recommended by the UK Treasury [55], but this guidance is not CEA specific.

4.2 Differential Discounting

Differential discounting is one deviation from standard discounting that has been adopted in several CEA guidelines. It is the application of a different (typically lower) discount rate to health effects than to costs [58]. The primary justification for differential discounting is the expectation of growth in the willingness to pay for health over time, represented either by the value of health or the cost-effectiveness threshold [58, 59]. The CEA guidelines of Belgium, The Netherlands and Poland require differential discounting, as did the UK’s prior to 2004 [53, 60]. Differential discounting improves the ICERs of many interventions, especially those with a long lag between costs and benefits, such as preventive interventions.

Differential discounting can complicate the interpretation of cost-effectiveness evidence, presenting problems that have yet to be documented or resolved. One long-recognised issue is the so-called postponing paradox, whereby an intervention’s ICER declines if implementation is postponed to a cohort in the following year, supposedly leading decision makers to eternally postpone implementation [61]. The paradox has been dismissed as irrelevant, because CEA informs what interventions to implement in a given period rather than when to allocate resources [62]. Despite this, there remain two closely related problems.

The first is that the choice of discount year can influence ICERs. Setting the discount year to a period before treatment initiation will reduce the ICER relative to the more common practice of discounting to the year of treatment initiation. For example, a Dutch CEA of cervical screening strategies starting at age 30 years in women who received HPV vaccination discounted the costs and effects of screening to the age of vaccination at 12 years [63]. Under the Dutch discount rates of 4 % for costs and 1.5 % for effects, the 18 additional years of discounting resulted in ICERs that were approximately 35 % lower than would have been the case had outcomes been discounted to the initiation of screening. This should be avoided by setting the discount year to the year of treatment initiation. In the case of comparisons of multiple possible intervention ages for a given cohort, the discount year should be held as the earliest intervention year.
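The size of this effect follows directly from the two discount rates. As a rough check, and assuming that moving the discount year simply adds 18 years of discounting to every cost and every effect, the ICER is scaled by the ratio of the two 18-year discount factors. The variable names below are our own.

```python
rate_costs = 0.04     # Dutch discount rate for costs, as quoted above
rate_effects = 0.015  # Dutch discount rate for effects, as quoted above
extra_years = 18      # screening at age 30 discounted back to age 12

cost_factor = (1.0 + rate_costs) ** (-extra_years)
effect_factor = (1.0 + rate_effects) ** (-extra_years)

# Costs shrink more than effects, so the ICER is multiplied by this ratio
icer_multiplier = cost_factor / effect_factor
reduction = 1.0 - icer_multiplier
print(f"ICER multiplier: {icer_multiplier:.3f}, reduction: {reduction:.1%}")
```

The multiplier is about 0.65, which corresponds to the reduction of approximately 35 % reported above.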

The second problem related to the postponing paradox is that ICERs fall with the addition of future cohorts to a model [53]. As mentioned above, the simulation of indirect effects of vaccination requires the inclusion of multiple future cohorts, which will improve the ICER under differential discounting, all else being equal. Indeed, this effect can become so pronounced when the number of future cohorts is large that ICERs under differential discounting can become even lower than ICERs without discounting. For example, a CEA of HPV vaccination that employed 100 years of incident cohorts found an ICER of £2385/QALY under differential discounting rates of 6 and 1.5 % for costs and effects, respectively, whereas the undiscounted ICER was £4320/QALY [64]. So, although discounting typically inflates ICERs when beneficial effects occur after costs are incurred, this can be outweighed by the implicit increase in the value of health effects implied by differential discounting if the analysis extends sufficiently far into the future. This effect of differential discounting compromises the comparability of estimates between CEAs with different numbers of future cohorts.
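A stylised example can make this mechanism concrete. In the sketch below, every annual cohort incurs one unit of cost in its start year and gains one unit of health effect after a fixed lag, so the undiscounted ICER equals 1 however many cohorts are included. The unit costs and effects and the 20-year lag are hypothetical; the 6 and 1.5 % rates are those quoted in the example above.

```python
def aggregate_icer(n_cohorts, rate_costs, rate_effects, lag_years=20):
    """Aggregate ICER over n_cohorts annual cohorts, discounted to year 0.

    Each cohort starting in year s incurs a cost of 1 in year s and gains
    a health effect of 1 in year s + lag_years (stylised assumptions).
    """
    costs = 0.0
    effects = 0.0
    for start in range(n_cohorts):
        costs += 1.0 / (1.0 + rate_costs) ** start
        effects += 1.0 / (1.0 + rate_effects) ** (start + lag_years)
    return costs / effects


undiscounted = aggregate_icer(100, 0.0, 0.0)
differential_one = aggregate_icer(1, 0.06, 0.015)
differential_hundred = aggregate_icer(100, 0.06, 0.015)
equal_one = aggregate_icer(1, 0.03, 0.03)
equal_hundred = aggregate_icer(100, 0.03, 0.03)

print("undiscounted:", round(undiscounted, 3))
print("differential, 1 cohort:", round(differential_one, 3))
print("differential, 100 cohorts:", round(differential_hundred, 3))
print("equal, 1 vs 100 cohorts:", round(equal_one, 3), round(equal_hundred, 3))
```

In this stylised setting the single-cohort ICER under differential discounting lies above the undiscounted ICER, the 100-cohort ICER lies below it, and under equal discounting the ICER does not depend on the number of cohorts.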

We make three recommendations for those countries requiring differential discounting. First, also report outcomes for equal discounting at both 3 and 5 %. Second, clearly define the simulated cohorts, including their ages at the date the intervention begins. Third, always report the discount year and intervention start year for all strategies modelled.

5 Discussion

The methodological issues regarding time have important implications for evaluating the cost effectiveness of healthcare strategies. This applies to each of the three principal issues considered in this article: (1) the choice of which cohorts to simulate in a model and for how long; (2) how to simulate time in a CEA model; and (3) how to discount future costs and effects. Aspects of these questions can be complex and abstract (e.g. the postponing paradox); nevertheless, these are important elements of the correspondence between models and the real-life policy choices they inform. Therefore, applied CEA modellers should have an appreciation of the issues regarding time and reflect them appropriately in the simulations they conduct to help inform policy makers.

Single-cohort models are often sufficient to simulate an intervention’s cost effectiveness and are widely used. However, some situations require the simulation of multiple cohorts, which then raises questions of how the implementation period and analytic time horizon should be extended to accommodate future cohorts and whether to report disaggregated subgroup-specific estimates. This article’s key recommendations are summarised in Table 1. The choice of cohorts simulated should be made explicit, as should the decision to report aggregated results or otherwise. Implementation periods and analytic time horizons should be sufficiently long to avoid curtailing the simulation of interventions and the assessment of their outcomes. One exception to this recommendation is the multi-cohort modelling of interventions with shared effects, in which the imposition of finite time horizons that censor the assessment of effects for at least some cohorts seems largely inevitable. Further research is required into the most appropriate approach in this case.

The issue of how to simulate the timing of events using different modelling techniques has received much attention in the literature. Although the limitations of models not explicitly simulating time have previously been recognised, decision trees are still used. As long as models do simulate time explicitly and cycle lengths are set appropriately within discrete-time models, the choice between discrete-time and continuous-time modelling is not necessarily critical. The cycle length determines how well discrete-time models approximate continuous-time processes and thus is an important modelling choice. Although HCCs can reduce some of the inaccuracies associated with long cycle lengths, we generally recommend making the cycle length sufficiently short to ensure the differences between continuous-time and discrete-time models are negligible, provided that a short cycle is computationally feasible. Finally, adjusting transition probabilities for different cycle lengths is an underappreciated issue and analysts should always take care when making such conversions.

Discounting remains a much-debated topic in CEA, probably partly due to the deterioration of ICERs after discounting in many cases. Discounting is not always intuitive, especially to non-economists, and it is understandably disconcerting that ICERs can vary profoundly with small changes in the discount rate, especially when the rationale for one rate over another appears weak. The particular rates and form of discounting applied should have a sound normative basis and not be driven by a desire to find some interventions cost effective. Alternatives to constant-rate discounting (e.g. hyperbolic discounting) do not accord with standard economic theory and may lead to logical inconsistencies and unanticipated consequences. Accordingly, we strongly recommend applying the discount rates stipulated in the relevant CEA guidance.

Differential discounting is one departure from standard discounting that has achieved sufficient support among health economists to be adopted in some national CEA guidelines. However, questions remain whether the differentials between rates currently employed are empirically justified given plausible rates of threshold growth [65]. Similarly, it is unclear how differential discounting should be applied in multi-cohort models. This is especially relevant for infectious disease models that extend far into the future, as the implied growth in the willingness to pay for health over a long period can be substantial.

A common thread linking the three topics considered in this article is the correspondence of models to the policy questions they guide. Recognising that models need to closely correspond to policy questions if they are to provide reliable guidance prompts two further important considerations. First, judging how well a given model corresponds to a particular policy question requires a clear model description. Unfortunately, many aspects of time are often not explicitly noted or are incompletely described, including the implementation period, simulation cycle length and discount year. Therefore, it can be unclear what analytic approach has been adopted and why. Although technical details regarding model implementation that do not affect results can sometimes be omitted in the description of applied CEAs, many issues regarding time can have a strong impact on the estimated cost effectiveness and should thus be reported.

Second, it is important that policy questions are framed in a way that is meaningful to CEA. For example, a decision maker may quite naturally ask what the cost effectiveness of a given intervention in the population over the next 5 years is. Such a question may not be meaningfully applicable to many long-duration interventions such as screening programmes. Accordingly, dialogue between modellers and decision makers is essential, not only to ensure that models correspond with policy questions but also that the right policy questions are posed in the first place.

A limitation of this article is that it naturally cannot address all relevant issues regarding time in CEA modelling. It has not considered extrapolation of trial data and the influence of alternative survival functions on cost-effectiveness estimates, which have been addressed elsewhere [66, 67]. Similarly, it has not reviewed recent work on the graphical presentation of net benefit and its uncertainty over time [68, 69], or how time affects value of information analysis and the possibility of delaying implementation for further research [70, 71]. We have investigated the effects of the cycle length and the HCC on the discrete-time approximation error, leading to suggested bounds on the appropriate size of the per-cycle transition probabilities. Further research is necessary to provide a full exposition of these results. More generally, our recommendations are based on the literature and the authors’ CEA modelling experience rather than being substantiated with examples.

6 Conclusions

Choices around time in health economic evaluations can be influential, but unfortunately they can also be conceptually challenging for both analysts and policy makers. We make a number of specific recommendations, but the most important is the general suggestion that analysts think carefully about how considerations of time may impact on results and that they explicitly state and justify their modelling choices.

References

Beck JR, Pauker SG. The Markov process in medical prognosis. Med Decis Mak. 1983;3(4):419–58.

Torrance GW, Siegel JE, Luce BR, Gold MR, Russell LB, Weinstein MC. Framing and designing the cost-effectiveness analysis. In: Gold MR, Siegel JE, Russell LB, Weinstein MC, editors. Cost-effectiveness in health and medicine. New York: Oxford University Press; 1996. p. 54–81.

Ethgen O, Standaert B. Population- versus cohort-based modelling approaches. Pharmacoeconomics. 2012;30(3):171–81.

Goldie SJ, Goldhaber-Fiebert JD, Garnett GP. Public health policy for cervical cancer prevention: the role of decision science, economic evaluation, and mathematical modeling. Vaccine. 2006;24(S3):S155–63.

Chhatwal J, He T. Economic evaluations with agent-based modelling: an introduction. Pharmacoeconomics. 2015;33(5):423–33.

Habbema J, Boer R, Barendregt J. Chronic disease modeling. In: Killewo J, Heggenhougen K, Quah SR, editors. Epidemiology and demography in public health. Amsterdam: Academic Press; 2008. p. 704–9.

Cooper K, Brailsford S, Davies R. Choice of modelling technique for evaluating health care interventions. J Oper Res Soc. 2007;58(2):168–76.

Hoyle M, Anderson R. Whose costs and benefits? Why economic evaluations should simulate both prevalent and all future incident patient cohorts. Med Decis Mak. 2010;30(4):426–37.

O’Mahony JF, van Rosmalen J, Zauber AG, van Ballegooijen M. Multicohort models in cost-effectiveness analysis: why aggregating estimates over multiple cohorts can hide useful information. Med Decis Mak. 2013;33(3):407–14.

Dewilde S, Anderson R. The cost-effectiveness of screening programs using single and multiple birth cohort simulations: a comparison using a model of cervical cancer. Med Decis Mak. 2004;24(5):486–92.

Brisson M, Edmunds WJ. Economic evaluation of vaccination programs: the impact of herd-immunity. Med Decis Mak. 2003;23(1):76–82.

Newall AT, Dehollain JP, Creighton P, Beutels P, Wood JG. Understanding the cost-effectiveness of influenza vaccination in children: methodological choices and seasonal variability. Pharmacoeconomics. 2013;31(8):693–702.

Karnon J, Brennan A, Akehurst R. A critique and impact analysis of decision modeling assumptions. Med Decis Mak. 2007;27(4):491–9.

de Gelder R, Bulliard J-L, de Wolf C, Fracheboud J, Draisma G, Schopper D, et al. Cost-effectiveness of opportunistic versus organised mammography screening in Switzerland. Eur J Cancer. 2009;45(1):127–38.

Stout NK, Rosenberg MA, Trentham-Dietz A, Smith MA, Robinson SM, Fryback DG. Retrospective cost-effectiveness analysis of screening mammography. J Natl Cancer Inst. 2006;98(11):774–82.

Weinstein MC, O’Brien B, Hornberger J, Jackson J, Johannesson M, McCabe C, et al. Principles of good practice for decision analytic modeling in health-care evaluation: report of the ISPOR Task Force on Good Research Practices—modeling studies. Value Health. 2003;6(1):9–17.

Knudsen AB, McMahon PM, Gazelle GS. Use of modeling to evaluate the cost-effectiveness of cancer screening programs. J Clin Oncol. 2007;25(2):203–8.

Mandelblatt JS, Fryback DG, Weinstein MC, Russell LB, Gold MR. Assessing the effectiveness of health interventions for cost-effectiveness analysis. Panel on Cost-Effectiveness in Health and Medicine. J Gen Intern Med. 1997;12(9):551–8.

Mauskopf J. Prevalence-based economic evaluation. Value Health. 1998;1(4):251–9.

Standaert B, Demarteau N, Talbird S, Mauskopf J. Modelling the effect of conjugate vaccines in pneumococcal disease: cohort or population models? Vaccine. 2010;28(S6):G30–8.

Sonnenberg FA, Beck JR. Markov models in medical decision making: a practical guide. Med Decis Mak. 1993;13(4):322–38.

Mauskopf J, Talbird S, Standaert B. Categorization of methods used in cost-effectiveness analyses of vaccination programs based on outcomes from dynamic transmission models. Expert Rev Pharmacoecon Outcomes Res. 2012;12(3):357–71.

Marra F, Cloutier K, Oteng B, Marra C, Ogilvie G. Effectiveness and cost effectiveness of human papillomavirus vaccine. Pharmacoeconomics. 2009;27(2):127–47.

Newall A, Reyes J, Wood J, McIntyre P, Menzies R, Beutels P. Economic evaluations of implemented vaccination programmes: key methodological challenges in retrospective analyses. Vaccine. 2014;32(7):759–65.

Siegel JE, Weinstein M, Torrance G. Reporting cost-effectiveness studies and results. In: Gold MR, Siegel JE, Russell LB, Weinstein MC, editors. Cost-effectiveness in health and medicine. New York: Oxford University Press; 1996. p. 276–303.

Hoyle M. Future drug prices and cost-effectiveness analyses. Pharmacoeconomics. 2008;26(7):589–602.

Shih Y-CT, Han S, Cantor SB. Impact of generic drug entry on cost-effectiveness analysis. Med Decis Mak. 2005;25(1):71–80.

Detsky AS, Naglie G, Krahn MD, Redelmeier DA, Naimark D. Primer on medical decision analysis: part 2—building a tree. Med Decis Mak. 1997;17(2):126–35.

Roberts M, Russell LB, Paltiel AD, Chambers M, McEwan P, Krahn M, ISPOR-SMDM Modeling Good Research Practices Task Force. Conceptualizing a model: a report of the ISPOR-SMDM Modeling Good Research Practices Task Force–2. Med Decis Mak. 2012;32(5):678–89.

Briggs A, Sculpher M. An introduction to Markov modelling for economic evaluation. Pharmacoeconomics. 1998;13(4):397–409.

Caro JJ. Pharmacoeconomic analyses using discrete event simulation. Pharmacoeconomics. 2005;23(4):323–32.

Barton P, Bryan S, Robinson S. Modelling in the economic evaluation of health care: selecting the appropriate approach. J Health Serv Res Policy. 2004;9(2):110–8.

Karnon J. Alternative decision modelling techniques for the evaluation of health care technologies: Markov processes versus discrete event simulation. Health Econ. 2003;12(10):837–48.

Karnon J, Afzali HHA. When to use discrete event simulation (DES) for the economic evaluation of health technologies? A review and critique of the costs and benefits of DES. Pharmacoeconomics. 2014;32(6):547–58.

Soares MO, Canto ECL. Continuous time simulation and discretized models for cost-effectiveness analysis. Pharmacoeconomics. 2012;30(12):1101–17.

Naimark DM, Bott M, Krahn M. The half-cycle correction explained: two alternative pedagogical approaches. Med Decis Mak. 2008;28(5):706–12.

Barendregt JJ. The half-cycle correction: banish rather than explain it. Med Decis Mak. 2009;29(4):500–2.

Barendregt JJ. The life table method of half cycle correction: getting it right. Med Decis Mak. 2014;34(3):283–5.

Naimark DM, Kabboul NN, Krahn MD. The half-cycle correction revisited: redemption of a kludge. Med Decis Mak. 2013;33(7):961–70.

Naimark DM, Kabboul NN, Krahn MD. Response to “the life table method of half-cycle correction: getting it right”. Med Decis Mak. 2014;34(3):286–7.

Siebert U, Alagoz O, Bayoumi AM, Jahn B, Owens DK, Cohen DJ, et al. State-transition modeling: a report of the ISPOR-SMDM Modeling Good Research Practices Task Force-3. Value Health. 2012;15(6):812–20.

van Rosmalen J, Toy M, O’Mahony JF. A mathematical approach for evaluating Markov models in continuous time without discrete-event simulation. Med Decis Mak. 2013;33(6):767–79.

Miller DK, Homan SM. Determining transition probabilities: confusion and suggestions. Med Decis Mak. 1994;14(1):52–8.

Fleurence RL, Hollenbeak CS. Rates and probabilities in economic modelling: transformation, translation and appropriate application. Pharmacoeconomics. 2007;25(1):3–6.

Chhatwal J, Jayasuriya S, Elbasha EH. Changing cycle lengths in state-transition models: doing it the right way. ISPOR Connect. 2014;20(5):12–4.

Welton NJ, Ades A. Estimation of Markov chain transition probabilities and rates from fully and partially observed data: uncertainty propagation, evidence synthesis, and model calibration. Med Decis Mak. 2005;25(6):633–45.

Severens JL, Milne RJ. Discounting health outcomes in economic evaluation: the ongoing debate. Value Health. 2004;7(4):397–401.

Lipscomb J, Weinstein MC, Torrance GW. Time preference. In: Gold MR, Siegel JE, Russell LB, Weinstein MC, editors. Cost-effectiveness in health and medicine. New York: Oxford University Press; 1996. p. 214–35.

Olsen JA. On what basis should health be discounted? J Health Econ. 1993;12(1):39–53.

Goodin RE. Discounting discounting. J Public Policy. 1982;2(1):53–71.

Shepard DS, Thompson MS. First principles of cost-effectiveness analysis in health. Public Health Rep. 1979;94(6):535.

Weinstein MC, Stason WB. Foundations of cost-effectiveness analysis for health and medical practices. N Engl J Med. 1977;296(13):716–21.

O’Mahony JF, de Kok IM, van Rosmalen J, Habbema JDF, Brouwer W, van Ballegooijen M. Practical implications of differential discounting in cost-effectiveness analyses with varying numbers of cohorts. Value Health. 2011;14(4):438–42.

Frederick S, Loewenstein G, O’Donoghue T. Time discounting and time preference: a critical review. J Econ Lit. 2002;40(2):351–401.

Westra TA, Parouty M, Brouwer WB, Beutels PH, Rogoza RM, Rozenbaum MH, et al. On discounting of health gains from human papillomavirus vaccination: effects of different approaches. Value Health. 2012;15(3):562–7.

Harvey CM. The reasonableness of non-constant discounting. J Public Econ. 1994;53(1):31–51.

Haute Autorité de Santé (HAS). Choices in methods for economic evaluation. Saint-Denis: Haute Autorité de Santé; 2012.

Gravelle H, Smith D. Discounting for health effects in cost–benefit and cost-effectiveness analysis. Health Econ. 2001;10(7):587–99.

Claxton K, Paulden M, Gravelle H, Brouwer W, Culyer AJ. Discounting and decision making in the economic evaluation of health-care technologies. Health Econ. 2011;20(1):2–15.

Agencja Oceny Technologii Medycznych (AOTM). Guidelines for conducting Health Technology Assessment (HTA). Warsaw: Agencja Oceny Technologii Medycznych; 2009.

Keeler EB, Cretin S. Discounting of life-saving and other nonmonetary effects. Manag Sci. 1983;29(3):300–6.

van Hout BA. Discounting costs and effects: a reconsideration. Health Econ. 1998;7(7):581–94.

Coupé VM, de Melker H, Snijders PJ, Meijer CJ, Berkhof J. How to screen for cervical cancer after HPV16/18 vaccination in The Netherlands. Vaccine. 2009;27(37):5111–9.

Jit M, Choi YH, Edmunds WJ. Economic evaluation of human papillomavirus vaccination in the United Kingdom. BMJ. 2008;337:a769.

Paulden M, Claxton K. Budget allocation and the revealed social rate of time preference for health. Health Econ. 2012;21(5):612–8.

Gerdtham U-G, Zethraeus N. Predicting survival in cost-effectiveness analyses based on clinical trials. Int J Technol Assess Health Care. 2003;19(3):507–12.

Latimer NR. Survival analysis for economic evaluations alongside clinical trials—extrapolation with patient-level data inconsistencies, limitations, and a practical guide. Med Decis Mak. 2013;33(6):743–54.

McCabe C, Edlin R, Hall P. Navigating time and uncertainty in health technology appraisal: would a map help? Pharmacoeconomics. 2013;31(9):731–7.

Mahon R. Temporal uncertainty in cost-effectiveness decision models [Doctoral thesis]. York, UK: University of York, Economics and Related Studies; 2014. http://etheses.whiterose.ac.uk/id/eprint/8268. Accessed 1 April 2015.

Philips Z, Claxton K, Palmer S. The half-life of truth: what are appropriate time horizons for research decisions? Med Decis Mak. 2008;28(3):287–99.

Eckermann S, Willan AR. Time and expected value of sample information wait for no patient. Value Health. 2008;11(3):522–6.

Acknowledgments

The authors would like to thank Dr. Josephine Reyes and Dr. James Wood of The University of New South Wales for their useful comments. JFOM is funded by the Health Research Board of Ireland under the CERVIVA II inter-disciplinary enhancement grant. ATN, JvR and JFOM contributed to this work equally, having primarily written Sects. 2, 3 and 4, respectively, and the writing of the remaining material was shared. The authors have no conflicts of interest, financial or otherwise, to declare. JvR acts as overall guarantor.

Conflict of interest

None.

Appendix: Effects of Cycle Length and Half-Cycle Correction on Discrete-Time Approximation Error

Consider a simple two-state state transition model in which the only possible transition is from alive to dead. The model can be analysed using either discrete or continuous time. Let t denote the time in the model, with the unit of measurement equal to the cycle length in a discrete-time analysis, so that t = 1 corresponds to the end of the first cycle. The proportion of a cohort alive at any point between the cycles can be calculated under the assumption of constant hazard and using the per-cycle transition probability, p. For a cohort alive at t = 0, the proportion alive at time t is given by \( f(t) = {\text{e}}^{\log (1 - p)t} \), where t is constrained to integer values for the discrete-time model, so that the proportion alive at time t = 1 is f(1) = 1 − p. When evaluated in continuous time, the amount of time spent in the alive state during the first cycle can be calculated by integrating f(t), which yields \( T_{\text{Exact}} = \int_{0}^{1} {\text{e}}^{\log (1 - p)t} \, {\text{d}}t = \frac{-p}{\log (1 - p)} \). When evaluated in discrete time without HCC, the time spent in this state over the first cycle is \( T_{\text{NoHCC}} = 1 - p \), i.e. the state membership at the end of the cycle; with a cycle-tree HCC, this is \( T_{\text{HCC}} = 1 - 0.5p \), which is the average state membership at the beginning and the end of the cycle. The state membership as a function of time is illustrated in Fig. 4, for both the continuous-time model and the discrete-time model with HCC.
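The three quantities defined above can be computed directly. The following sketch, with function names of our own choosing and an arbitrary illustrative value of p = 0.2, also checks the closed-form integral against a simple midpoint-rule integration of f(t).

```python
import math


def t_exact(p):
    """Time alive in the first cycle under continuous time (closed form)."""
    return -p / math.log(1.0 - p)


def t_no_hcc(p):
    """Discrete time without HCC: state membership at the end of the cycle."""
    return 1.0 - p


def t_hcc(p):
    """Discrete time with HCC: average of start and end state membership."""
    return 1.0 - 0.5 * p


def t_numeric(p, steps=10000):
    """Midpoint-rule integral of f(t) = exp(log(1 - p) * t) over [0, 1]."""
    h = 1.0 / steps
    rate = math.log(1.0 - p)
    return h * sum(math.exp(rate * (i + 0.5) * h) for i in range(steps))


p = 0.2  # illustrative per-cycle transition probability
print(t_no_hcc(p), round(t_exact(p), 5), t_hcc(p), round(t_numeric(p), 5))
```

For any p between 0 and 1 the exact value lies between the two discrete-time estimates, which is why the approximation errors with and without HCC have opposite signs.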

The discrete-time approximation error can be defined as the relative difference in the time spent between the continuous-time approach and the discrete-time approach, which is given by \( \frac{{T_{\text{NoHCC}} - T_{\text{Exact}} }}{{T_{\text{Exact}} }} = \frac{p - 1}{p}\log \left( {1 - p} \right) - 1 \) and \( \frac{{T_{\text{HCC}} - T_{\text{Exact}} }}{{T_{\text{Exact}} }} = \frac{0.5p - 1}{p}\log \left( {1 - p} \right) - 1 \), without HCC and with HCC, respectively. The absolute values of these functions are shown in Fig. 5, as the approximation errors with and without HCC have a different sign in this case due to the convexity of the survival function.

In models with more than two states, for each state there is not only a bias associated with patients leaving the state before the end of a cycle, but also a bias due to patients entering the state during the cycle. These two biases have opposite directions, and so will offset each other to a degree. Consequently, the total approximation error cannot be larger than the maximum of these two biases. The size of the bias associated with patients leaving the state before the end of a cycle can be described by the functions in Fig. 5, with these functions applied to the probability that a patient leaves a state during a cycle, i.e. the sum of all transition probabilities out of this state.

The bias associated with patients entering a state during a cycle has the same size in absolute terms (i.e. \( T_{\text{HCC}} - T_{\text{Exact}} \) or \( T_{\text{NoHCC}} - T_{\text{Exact}} \)) as the bias associated with the probability of leaving the state during a cycle for the state from which the patients are coming. This fact is illustrated in Fig. 4, where the area between the exact state membership curve and the HCC approximation is identical for both the dead state and the alive state: the overestimation in the time spent in the alive state (with HCC) equals the underestimation of the time spent in the dead state. Although the approximation error relative to the time spent in the initial state (i.e. alive) remains constant over cycles, the approximation error relative to the time spent in the absorbing state (i.e. dead) is initially high and decreases over cycles. Note, therefore, that the relative error for the absorbing state will be larger than shown in Fig. 5 in early cycles. These large approximation errors typically only occur in the first cycles of the model, when there can be large relative increases in the state membership during a cycle for states with initial state membership equal to 0. When summed over a number of cycles of the model, the total relative bias due to patients entering a state during a cycle will thus be attenuated. Numerical experimentation with state transition models with more than two states suggests that the approximation error in any state in a state transition model is rarely larger than the relative bias given in Fig. 5, unless the model is run for a small number (e.g. less than 50) of cycles.

The benefits of the HCC can be shown in the illustrative example of a two-state model. An illustrative maximum bias of 2.5 % is achieved when the probability of leaving the current state does not exceed 0.05 in a model without HCC or 0.40 in a model with HCC. Thus, a tolerable level of approximation error can be achieved at much higher transition probabilities if an HCC is applied. We did not study the effect of the approximation error on comparative cost-effectiveness outcomes such as ICERs, and we also ignored the effects of discounting in our analysis. However, the biases due to the discrete-time approximation in the costs and health effects under different treatments should all have the same direction, so that the bias in cost-effectiveness ratios may be much smaller than the bias in the time spent in a state.
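These thresholds can be checked against the relative error formulas given earlier in this appendix. The sketch below is a direct transcription of those two formulas into Python, with function names of our own choosing, evaluated at the two probabilities quoted above.

```python
import math


def rel_error_no_hcc(p):
    """Relative error in time spent in the state, without HCC."""
    return (p - 1.0) / p * math.log(1.0 - p) - 1.0


def rel_error_hcc(p):
    """Relative error in time spent in the state, with HCC."""
    return (0.5 * p - 1.0) / p * math.log(1.0 - p) - 1.0


err_no_hcc = rel_error_no_hcc(0.05)  # probability of leaving the state = 0.05
err_hcc = rel_error_hcc(0.40)        # probability of leaving the state = 0.40
print(f"without HCC at p = 0.05: {err_no_hcc:.4f}")
print(f"with HCC at p = 0.40:    {err_hcc:.4f}")
```

Both errors are close to the illustrative 2.5 % level in absolute terms. The error without HCC is negative (time in the state is underestimated) and the error with HCC is positive (time in the state is overestimated).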

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution-NonCommercial 4.0 International License (http://creativecommons.org/licenses/by-nc/4.0/), which permits any noncommercial use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

O’Mahony, J.F., Newall, A.T. & van Rosmalen, J. Dealing with Time in Health Economic Evaluation: Methodological Issues and Recommendations for Practice. PharmacoEconomics 33, 1255–1268 (2015). https://doi.org/10.1007/s40273-015-0309-4

DOI: https://doi.org/10.1007/s40273-015-0309-4