Abstract

Background

Discrete choice experiments are increasingly used to assess preferences for vaccines and vaccine service delivery.

Objectives

To synthesize and critically assess the application of discrete choice experiments in childhood/adolescent vaccines, to describe how discrete choice experiments have been applied to understand preferences, and to evaluate the use of discrete choice experiment data to inform estimates of vaccine uptake.

Methods

We conducted a systematic review of six electronic databases. Included studies were discrete choice experiments and conjoint analyses published from 2000 to 2016 related to childhood/adolescent vaccines where respondents were parents, children/adolescents, or service providers. Validity assessment was used to assess study quality and risk of bias.

Results

In total, 27 articles were included, representing 21 different studies. A majority of articles were published between 2011 and 2016. Vaccines studied included human papillomavirus (24%), influenza (19%), meningococcal vaccines (14%), childhood vaccines (14%), hypothetical vaccines (10%), hepatitis B (5%), and diphtheria, tetanus, pertussis, hepatitis B, poliomyelitis, and Haemophilus influenzae type b (5%). Most studies assessed parent preferences (67%). The most common attributes were risk (24%), degree/duration of protection (21%), and cost (15%). Commonly reported outcome measures were estimates of uptake (33%), willingness-to-pay (22%), and other marginal rates of substitution (14%). Validity assessments yielded high scores overall. Areas of weakness included low response rates, inefficient experimental design, and failure to conduct formative qualitative work and a pilot of the discrete choice experiment.

Conclusion

This is the first systematic review of childhood/adolescent vaccine-related discrete choice experiments. In future, special attention should be paid to ensuring that choice context and discrete choice experiment design are compatible to generate reliable estimates of uptake.

There has been a marked increase in the use of choice-based stated preference methods to study individual preferences for childhood and adolescent vaccines from 2011 to 2016. Of 27 articles identified in the review period, 81% were published in this time period compared with 19% published from 2000 to 2010.

Of the 21 included studies, more than half provided a discussion of vaccine uptake. Methods used to estimate uptake were varied. Only one study compared estimated uptake with observed vaccine acceptance.

Overall, the quality of included studies was high. Areas of weakness included failing to use formative qualitative work or piloting of the discrete choice experiment, not reporting on statistical design, and low or unreported response rates.

1 Introduction

There is growing concern that childhood immunization coverage is below the level required to achieve herd immunity and may be decreasing owing to parents’ hesitancy to accept vaccines for their children [1, 2]. Studies of the uptake of existing vaccines have identified a variety of factors influencing the decision to accept vaccines. Recent reviews of qualitative and quantitative literature suggest that vaccine acceptance is influenced by the characteristics of the vaccine, awareness of immunization recommendations, knowledge and beliefs about vaccines and vaccine-preventable diseases, and characteristics of the health system or immunization program [3,4,5].

In the context of an expanding routine childhood immunization schedule, understanding preferences for new vaccines is important. This is particularly true as many new vaccines are individual rather than combination vaccines, which may add to the number of injections that a child receives in a single visit. Similarly, the addition of an oral rotavirus vaccine and nasal influenza vaccine may prove overwhelming to parents if offered in the context of a single visit, or may add to the number of visits required to complete the full set of recommended childhood vaccines, which may lower rates of uptake as parents opt out of vaccines that they perceive to be of relatively less importance.

In economics, a distinction is made between ‘revealed preferences’ based on analysis of observed choices and ‘stated preferences’, which are obtained by asking individuals to indicate their preference between hypothetical alternatives [6]. Stated preference data can be particularly useful in understanding how individuals value goods that are not traded on the market or are not yet available in the market. Stated preference methods are increasingly used in health economics to address a wide range of issues related to health policy and health outcomes [7]. Discrete choice experiments (DCEs) or choice-based conjoint analyses are survey-based tools designed to elicit and quantitatively evaluate individual preferences. This approach is grounded in Lancaster’s Theory of Demand, which suggests that utility from consuming goods or services can be described by the utility derived from the individual attributes. Therefore, the overall utility derived from consuming a good is a function of the combination of attributes that a good possesses and the quantities in which those attributes are manifested in the good [8]. Using this approach, individuals are presented with a series of hypothetical choice scenarios in which alternatives are described by a common set of attributes, which take on different levels over the course of a series of choice sets. Based on individual responses to repeated choices, the trade-offs that respondents make as the attribute levels change can be observed and the value that respondents place on each individual attribute estimated [6].
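To make the attribute-based view of utility concrete, the following is a minimal sketch of a single choice set in which each alternative's utility is taken to be the sum of the part-worths of its attribute levels. The attribute names, levels, and part-worth values are hypothetical and chosen only for illustration; they are not estimates from any of the reviewed studies, and a linear additive form is an assumption rather than a requirement of the theory.

```python
# Hypothetical part-worth utilities (illustrative values, not study estimates).
PART_WORTHS = {
    "effectiveness": {"70%": 0.0, "90%": 0.8},
    "side_effect_risk": {"1 in 100": 0.0, "1 in 1000": 0.5},
    "cost": {"$0": 0.0, "$50": -0.6},
}


def utility(profile):
    """Sum the part-worths of the levels that describe one alternative."""
    return sum(PART_WORTHS[attr][level] for attr, level in profile.items())


# One choice set: two alternatives described by the same attributes.
vaccine_a = {"effectiveness": "90%", "side_effect_risk": "1 in 100", "cost": "$50"}
vaccine_b = {"effectiveness": "70%", "side_effect_risk": "1 in 1000", "cost": "$0"}

u_a = utility(vaccine_a)  # 0.8 + 0.0 - 0.6
u_b = utility(vaccine_b)  # 0.0 + 0.5 + 0.0

# Under these assumed values, the alternative with the higher utility is preferred.
preferred = "A" if u_a > u_b else "B"
print(preferred)
```

In an actual DCE the part-worths are unknown and are estimated from respondents' repeated choices; the sketch only shows the direction of the calculation once they are available.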

In the context of vaccine preferences, DCEs have been used to understand the relative importance of existing vaccine attributes as well as the factors that may underlie preferences for hypothetical or new vaccines. An advantage of using stated preference data generated by a DCE over revealed preference to understand factors motivating uptake is that revealed preference data is limited to the vaccine alternatives that are currently available. This is further compounded by the fact that consumers often do not have a choice between vaccines or between providers at the point of service delivery, as many childhood and adolescent vaccines are publicly funded and only one alternative or one provider is available. Even where private options exist, there may be limited choice or availability of vaccines and providers. Using a DCE, preference for attributes that are outside the parameters of currently available vaccines may be included to identify how changes to vaccine or service delivery attributes may impact uptake.

Qualitative studies and surveys of parents’ attitudes and beliefs have also been used to explore factors related to vaccine uptake. However, these approaches are limited in the extent to which their results can inform policy: they typically identify factors that are important or not important in motivating the choice to vaccinate, but cannot be used to understand trade-offs between characteristics or to demonstrate how changes in vaccine characteristics or service delivery may impact uptake. In contrast, DCEs can be used to quantify the trade-offs that parents make between characteristics. Using coefficients derived from modeled results, the probability that respondents will choose a vaccine over available alternatives can be calculated and an estimate of overall vaccine uptake in different scenarios obtained.

The specific objectives of this review were to synthesize and critically assess the application of DCEs in the study of childhood and adolescent vaccines, to describe how DCEs have been applied to understand preferences, and to evaluate the use of DCE data to inform estimates of vaccine uptake.

2 Methods

2.1 Search Strategy

Six electronic indexed databases were searched: PubMed, Web of Science, EconLit, CINAHL Plus, EMBASE, and MEDLINE. Search terms were: vaccin* OR immunis* OR immuniz* AND discrete choice OR stated preference OR DCE OR conjoint analysis. A detailed search strategy is provided in Online Resource 1. The search was limited to peer-reviewed articles published in English from January 2000 to December 2016.

Studies were included in the review if they presented the results of DCEs or choice-based conjoint analyses; aimed to elicit preferences among patients, parents, or healthcare workers; related to childhood or adolescent vaccines or immunization programs; and were conducted in high- or upper-middle-income settings [9]. Studies employing contingent valuation, time trade-off, standard gamble, ranking, or scaling approaches that did not include an element of choice were not included.

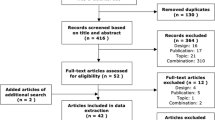

Duplicates were removed electronically and all unique studies were screened for relevance based on title and abstract. The full text of potentially eligible references was reviewed and retained if the study met inclusion criteria. An update to the review conducted in January 2017 identified two additional references, which were included following full-text screening.

2.2 Data Extraction and Validity Assessment

Data extraction was performed by C.M.I. for all studies using a standardized template including fields related to study setting, survey administration, experimental design, choice task construction, analytic approach, outcome measures, and source of funding. Threats to the validity of all included studies were assessed using the 13-item checklist developed by Mandeville et al. [10]. This checklist incorporates elements of a quality checklist developed by Lancsar and Louviere [11] and correlates well with the ten domains of good research practice in conjoint analyses identified by Bridges et al. [12].

3 Search Results

A total of 563 records were returned from initial searches. After removing duplicates, 340 records remained. A further 275 were excluded based on title and abstract screening. Full text was retrieved for 65 references and 25 were selected for final inclusion. Following search updates in June 2016 and January 2017, two additional references were identified for inclusion in the final sample (see Fig. 1). The final sample is therefore made up of 27 full-text articles representing results from 21 different studies. A data extraction table for each study is presented in Online Resource 2. For the remainder of the review, the term ‘study’ refers to a group of publications based on one stated preference survey unless otherwise noted.

3.1 Year and Discrete Choice Experiment Country of Origin

Twenty-two (81%) of the included articles were published from 2011 to 2016 [13,14,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30,31,32,33,34], two (7%) were published from 2006 to 2010 [35, 36], and three (11%) were published from 2000 to 2005 [37,38,39]. Of the 21 studies, six (29%) were conducted in the USA [15, 17,18,19, 21, 26, 27], five (24%) were conducted in the Netherlands [16, 20,21,22, 31,32,33,34, 36], and two (10%) each were conducted in Australia [24, 37, 38], Japan [29, 30], and the UK [13, 14, 28]. The remaining studies were conducted in Germany [23, 35], France [35], Thailand [25], and Europe [39] (countries not specified).

3.2 Study Topic and Respondents

The 21 included studies covered nine different topics. Five studies (24%) were related to human papillomavirus [15, 16, 20, 21, 25, 36], four (19%) were related to influenza [17, 18, 26, 29], and three (14%) were related to meningococcal vaccines [24, 27, 35]. Three studies (14%) assessed preferences for childhood vaccines in general [13, 14, 19, 30] and two studies (10%) were related to hypothetical vaccines that were either not available on the market or not publicly funded or privately sold in the study context [28, 39]. One such study covered vaccines for rotavirus and invasive and non-invasive pneumococcal disease [28] and the other did not specify the disease that the vaccine was intended to protect against [39]. The remaining four studies were related to hepatitis B vaccine for newborns [22], varicella [37, 38], rotavirus [31,32,33,34], and diphtheria, tetanus, pertussis, hepatitis B, poliomyelitis, and Haemophilus influenzae type b vaccine [23] (5% each) (see Table 1).

Articles were eligible for inclusion if responses were elicited from parents, adolescents/children, or service providers. Fourteen studies (67%) included responses only from parents [13, 14, 18,19,20, 22, 25, 28,29,30,31,32,33,34,35, 37,38,39], three studies (14%) surveyed children or adolescents only [16, 17, 21, 36], three studies (14%) surveyed providers [23, 26, 27], and one (5%) surveyed both parents and adolescents [24].

3.3 Research Aims

Included studies reported a variety of research aims, with most studies reporting more than one aim. The most commonly reported aim was to assess preferences, either for vaccines as a whole, for specific attributes, or variations in preferences across population groups (15 articles) [13, 15, 17, 18, 20, 21, 23,24,25,26,27, 29, 30, 32, 36]. Eight articles aimed to estimate vaccine uptake [13, 15, 20, 21, 24, 26, 32, 37] and seven aimed to assess willingness to pay (WTP) [15, 19, 24, 25, 29, 35, 39]. Seven articles either focused on methodological questions or included a methodological research component [14, 16, 21, 31, 33, 34, 38]. Three articles each aimed to assess the trade-offs that respondents made between attributes [20, 28, 36] and to make policy recommendations about the design of vaccine service delivery [13, 21, 32]. One study aimed specifically to compare the results of the DCE (stated preference) with actual vaccine uptake (revealed preference) [22] (see Fig. 2).

3.4 Methods Used to Develop Discrete Choice Experiments

Selection of relevant attributes and levels is critical to the design and development of a DCE that can adequately reflect participants’ preferences. Authors reported using a range of methods to identify attributes and levels in included studies. Seventeen studies used a literature review to identify attributes and levels [13,14,15,16,17,18,19,20, 22,23,24,25, 27,28,29,30,31,32,33,34, 36,37,38], 13 consulted with experts [15,16,17,18,19,20, 22,23,24, 26, 27, 31,32,33,34, 36,37,38], and 11 conducted qualitative work [13, 14, 16,17,18,19,20, 22, 23, 26, 31,32,33,34, 36, 39]. One study used only a previous DCE to identify attributes and levels [21] and one did not clearly state how attributes and levels were identified [35]. Eight studies used three of the methods listed [16,17,18,19,20, 22, 23, 31,32,33,34, 36], six used two methods [13,14,15, 24, 26, 27, 37, 38], and six studies used only one method [21, 25, 28,29,30, 39].

A multinomial choice structure requires that choice profiles be paired or grouped to create choice sets. Amongst studies using a multinomial choice approach, nine studies (47%) did not specify the method used for creating choice sets [15, 17,18,19, 24,25,26, 35, 39]. Five studies (26%) created choice sets based on d-efficiency [13, 14, 20, 21, 27, 31,32,33,34], which seeks to combine profiles in such a way that both the variance and covariance of parameters are minimized [40]. Three studies used a fold-over approach [22, 23, 28] and two combined choice profiles randomly [29, 30]. One study used a software program developed by Street and Burgess [16, 36].
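As an illustration of what a d-efficiency criterion measures, the sketch below computes the D-error of two small hypothetical designs under a multinomial logit model with all coefficients assumed to be zero, so that every alternative in a choice set is equally likely. The designs, the effects coding (+1/−1), and the zero priors are assumptions made for the example; design software used in the reviewed studies may apply different priors and model forms.

```python
def information_matrix(design):
    """MNL Fisher information at beta = 0 (each alternative has probability 1/J)."""
    k = len(design[0][0])
    info = [[0.0] * k for _ in range(k)]
    for choice_set in design:
        j = len(choice_set)
        means = [sum(alt[a] for alt in choice_set) / j for a in range(k)]
        for alt in choice_set:
            dev = [alt[a] - means[a] for a in range(k)]
            for r in range(k):
                for c in range(k):
                    info[r][c] += dev[r] * dev[c] / j
    return info


def determinant(matrix):
    """Determinant by Gaussian elimination with partial pivoting."""
    m = [row[:] for row in matrix]
    n = len(m)
    det = 1.0
    for i in range(n):
        pivot = max(range(i, n), key=lambda r: abs(m[r][i]))
        if abs(m[pivot][i]) < 1e-12:
            return 0.0
        if pivot != i:
            m[i], m[pivot] = m[pivot], m[i]
            det = -det
        det *= m[i][i]
        for r in range(i + 1, n):
            factor = m[r][i] / m[i][i]
            for c in range(i, n):
                m[r][c] -= factor * m[i][c]
    return det


def d_error(design):
    """D-error = det(information)^(-1/K); lower values indicate a more efficient design."""
    k = len(design[0][0])
    det_info = determinant(information_matrix(design))
    return float("inf") if det_info <= 0 else det_info ** (-1.0 / k)


# Two choice sets, two alternatives each, two effects-coded attributes (hypothetical).
contrast_design = [[(1, 1), (-1, -1)], [(1, -1), (-1, 1)]]  # both attributes differ in every set
overlap_design = [[(1, 1), (-1, 1)], [(1, -1), (1, 1)]]     # one attribute is tied in each set

print(d_error(contrast_design), d_error(overlap_design))
```

Under these assumptions, the design in which both attributes differ within every choice set has the lower D-error, because each choice carries information about both parameters.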

3.5 Choice Task Structure

Twenty studies used a multinomial choice structure in which respondents were asked to choose between two or more alternatives. A binary choice task structure was used in one study (5%) [37, 38]. This approach involved a choice between accepting one vaccine scenario and choosing not to accept vaccination in this scenario.

Five of the 21 studies (25%) presented respondents only with a choice between two alternatives with no possibility to ‘opt out’ [17,18,19, 23, 26]. This is referred to as a forced choice and may be appropriate where individuals have already decided to vaccinate and are being offered a choice between two different types of vaccines. Another five studies (25%) used a forced choice task in the first instance and followed this up with the option to ‘opt out’ or indicate that they would not actually choose the alternative they selected in the forced choice task [22, 24, 31,32,33,34,35, 39]. In four studies, this was done by asking respondents to indicate that they would not choose the preferred scenario in real life [24, 31,32,33,34,35, 39], and, in one study, respondents were asked to indicate how likely it was that they would choose their preferred scenario in real life on a 10-point scale [22].

Nine studies (42%) presented respondents with only an unforced choice task [15, 16, 20, 21, 25, 27,28,29,30, 36], meaning that respondents were asked to choose between two or more alternatives and an ‘opt-out’ alternative. This approach is appropriate where an individual’s choice may be influenced by the acceptability of the alternatives presented. Of these studies, the ‘opt out’ was described as ‘no vaccine’ in six studies [16, 20, 21, 25, 28, 30, 36] and ‘neither vaccine’ in two studies [15, 27]. One study described the ‘opt out’ as ‘neither vaccine’ in the article text, and ‘no vaccine’ in the sample choice task [29]. One study presented respondents with an unforced choice task followed by a forced choice [13, 14]. In this case, the unforced choice was between two vaccine programs or to choose neither and not have their child immunized. The forced choice was then framed by asking which of the two alternatives the respondent would choose if immunization was mandatory.

The number of choice tasks presented to each participant ranged from four to 18. Five studies (24%) included fewer than nine choice tasks [15, 22, 25, 29, 30], nine studies (43%) included nine to 16 choice tasks [16, 20, 21, 24, 26,27,28, 31,32,33,34, 36,37,38], and four studies (19%) included more than 16 tasks per participant [13, 14, 19, 35, 39]. In three studies (14%), the number of choice tasks was not reported [17, 18, 23].

3.6 Experimental Design

Following the identification of attributes and levels, the use of an experimental design provides a framework for generating the choice profiles that will be presented to respondents. In terms of experimental design, one study (5%) used a full factorial design [22], meaning all possible combinations of attribute levels were included and 15 studies (70%) used a fractional factorial design, which incorporates only a fraction of the total number of possible choice profiles [13,14,15,16, 19,20,21, 23,24,25, 27,28,29,30,31,32,33,34, 36,37,38]. Five studies (25%) did not provide any detail on experimental design [17, 18, 26, 35, 39]. Of the 16 studies reporting the type of experimental design used, seven (44%) generated the design using a software package [13, 14, 20, 21, 24, 25, 27, 31,32,33,34], four (25%) used a catalog approach [16, 28,29,30, 36], and the specific approach was unclear for the remaining five (31%) [15, 19, 22, 23, 37, 38]. Eight studies reported using software to generate an experimental design; five (63%) reported using Ngene [13, 14, 21, 24, 25, 31,32,33,34], two (25%) used SAS [20, 27], and one (13%) used Sawtooth [35].
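The difference between a full and a fractional factorial design can be sketched as follows. The three two-level attributes are hypothetical, and the half-fraction shown is one simple construction (keeping profiles whose coded levels multiply to +1); it is offered as an illustration and is not the procedure reported by any particular study.

```python
from itertools import product

# Hypothetical attributes and levels for illustration only.
attributes = {
    "effectiveness": ["70%", "90%"],
    "side_effect_risk": ["low", "high"],
    "cost": ["$0", "$50"],
}

# Full factorial: every combination of levels (2 x 2 x 2 = 8 profiles).
full_factorial = list(product(*attributes.values()))


def code(profile):
    """Code each level as -1 (first level) or +1 (second level)."""
    return [1 if level == levels[1] else -1
            for level, levels in zip(profile, attributes.values())]


# Half-fraction: keep the profiles whose three codes multiply to +1.
half_fraction = [p for p in full_factorial
                 if code(p)[0] * code(p)[1] * code(p)[2] == 1]

print(len(full_factorial), len(half_fraction))
for profile in half_fraction:
    print(profile)
```

In this half-fraction each level of each attribute still appears equally often, so main effects remain estimable, but interactions between attributes can no longer be separated from them. With more attributes and levels the full factorial grows quickly, which is why most of the reviewed studies relied on a fraction.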

3.7 Attributes Used

The number of attributes included in each DCE ranged from three to eight and the number of levels per attribute ranged from two to seven. A total of 100 attributes were used in the 21 studies. Figure 3 outlines the number of attributes categorized into 11 domains. Twenty-four attributes were related to risk, 21 were related to the degree or duration of vaccine protection, and 15 were related to cost. Risk-related attributes were used differently across studies and included risk of mild or severe side effects, infection risk, and risks associated with dosing and handling. Duration and degree of vaccine protection included the length of time that a vaccine would confer immunity, vaccine effectiveness/efficacy, and the number of serogroups covered. Vaccine costs were described as the cost per dose, the cost for a full course of doses or visits, out-of-pocket costs, price, and the structure of a reward for obtaining immunizations. Other attribute domains included dosing and number of visits (8%), elements of health status (7%), service delivery (5%), information (5%), vaccine administration (5%), time (4%), vaccine contents (3%), and other (3%).

The relative statistical significance of an attribute is conditional on the set of attributes included in the particular study, making it difficult to draw firm conclusions about the relative importance of attributes in influencing individual preferences. This is further complicated by variation in how studies defined statistical significance; some studies reported p values of <0.1, <0.05, and <0.01 while others reported p values of <0.05, <0.01, and <0.001. With these caveats in mind, Table 2 shows that attributes related to risk, cost, and the degree or duration of protection were commonly statistically significant across 16 studies that reported statistical significance [13,14,15,16, 19,20,21,22,23,24,25,26, 28,29,30,31,32,33,34,35,36]. Four studies did not report statistical significance [17, 18, 37,38,39] and one study reported statistical significance but did not provide a legend [27], thus it was not possible to appropriately categorize the p values.

3.8 Survey Administration and Sample Size

Surveys were interviewer administered in three of the 21 studies (14%) [28, 37,38,39] and self-administered in 15 studies (71%). Of the self-administered studies, ten were completed online [13,14,15, 17,18,19, 23, 24, 27, 29, 30] and five were paper based [16, 20,21,22, 31,32,33,34, 36]. In three studies, the mode of administration was not reported (14%).

Until recently, there has been limited guidance available around methods to determine the minimum sample size needed to detect differences in preferences, leading many researchers to rely on rules of thumb or practical considerations in determining sample size [41]. Sample sizes of included studies ranged from 50 respondents to over 2000, with the majority of studies including 100–500 respondents. Thirteen studies did not report any sample size calculations or provide a justification for the choice of sample size [15, 17,18,19, 22,23,24,25, 27,28,29, 35, 37, 38]. Six studies indicated that sample size calculations were guided by a ‘rule of thumb’ [13, 14, 16, 20, 21, 30, 36, 39], and two studies mentioned that sample size calculations had been performed but did not provide details of the calculations [26, 31,32,33,34].
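For illustration, one widely cited rule of thumb, attributed to Johnson and Orme, suggests a minimum sample of N ≥ 500c/(t × a) for main-effects estimation, where c is the largest number of levels of any attribute, t is the number of choice tasks, and a is the number of alternatives per task (usually counted without the opt-out). The sketch below applies this rule to hypothetical design values. It is an assumption that this is representative; the reviewed studies did not necessarily use this particular rule.

```python
import math


def rule_of_thumb_sample_size(max_levels, n_tasks, n_alternatives):
    """Minimum respondents under the rule N >= 500 * c / (t * a)."""
    if n_tasks <= 0 or n_alternatives <= 0:
        raise ValueError("tasks and alternatives must be positive")
    return math.ceil(500 * max_levels / (n_tasks * n_alternatives))


# Hypothetical design: largest attribute has 4 levels, 12 tasks, 2 alternatives per task.
n_min = rule_of_thumb_sample_size(max_levels=4, n_tasks=12, n_alternatives=2)
print(n_min)
```

Such rules ignore the expected size of the coefficients and the efficiency of the design, which is one reason that more formal sample size calculations have since been proposed.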

3.9 Source of Funding

Previous comprehensive reviews of DCE applications across all areas of health have not reported on sources of funding. Of the 21 studies included in this review, eight (38%) [17, 18, 23, 24, 26, 27, 35, 39] were funded by pharmaceutical companies involved in manufacturing the specific vaccines under study.

3.10 Estimation Procedures and Outcome Measures

A concise summary of approaches to analyzing DCE data is provided by de Bekker-Grob et al. [42]. The estimation procedures, subgroup analyses, and outcome measures used in the included studies are summarized in Table 3. Six studies used a conditional or multinomial logit approach to analysis [17, 18, 23, 25, 27, 29]. These models have long been considered the workhorse of DCE analysis; however, they have fallen out of favor more recently owing to restrictive assumptions around substitution between alternatives when one alternative is removed from the choice set or an additional alternative is added (commonly known as independence of irrelevant alternatives), homogeneity of error terms, and uncorrelated choices [43]. Seven studies used random parameters logit (also referred to as mixed logit) models [15, 16, 22, 24, 27, 30,31,32,33,34, 36], which relax the assumptions around the independence of irrelevant alternatives as well as allowing for the heterogeneity of error terms and can account for the panel nature of DCE data [43]. Four studies used random or mixed-effects logit models [13, 14, 26, 28, 37, 38], three (13%) used hierarchical Bayes’ estimation [17, 18, 39], and two used latent class analysis [21, 31,32,33,34]. A generalized mixed-logit model was used in one study [31,32,33,34]. Four studies used other model types [16, 19, 26, 28, 35, 36]. Overall, this is in keeping with the trend of a recent systematic review of all DCE applications in health, which found an increasing reliance on multinomial logit and random parameter logit approaches in recent years [7].
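The independence of irrelevant alternatives property of the multinomial logit model can be shown with a short numerical sketch. The utilities below are hypothetical; the point is only that the ratio of choice probabilities between two alternatives does not change when a third alternative is added to the choice set.

```python
import math


def mnl_probabilities(utilities):
    """Multinomial logit choice probabilities for a dict of alternative -> utility."""
    denom = sum(math.exp(v) for v in utilities.values())
    return {alt: math.exp(v) / denom for alt, v in utilities.items()}


# Hypothetical utilities for illustration.
two_options = mnl_probabilities({"vaccine_a": 1.0, "vaccine_b": 0.5})
three_options = mnl_probabilities({"vaccine_a": 1.0, "vaccine_b": 0.5, "vaccine_c": 0.9})

# Under MNL, both ratios equal exp(1.0 - 0.5) regardless of what else is offered.
ratio_before = two_options["vaccine_a"] / two_options["vaccine_b"]
ratio_after = three_options["vaccine_a"] / three_options["vaccine_b"]
print(ratio_before, ratio_after)
```

If the new alternative were a close substitute for only one of the existing options, this proportional substitution pattern would be unrealistic, which is the restriction that random parameters and related models are intended to relax.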

Identification of variations or differences in preferences across groups may be achieved either by specifying separate models for different groups or incorporating interaction terms into the model. Such subgroup analyses were reported in 17 of the 21 included studies (81%). Sociodemographic characteristics such as age, sex, income, educational attainment, or ethnicity were included 13 times (some studies included more than one sociodemographic characteristic) [15, 17,18,19,20,21, 24, 26, 28,29,30,31,32,33,34,35, 37, 38], vaccine knowledge or beliefs were incorporated five times [15, 28, 31,32,33,34,35, 39], and vaccination status was included two times [21, 29]. Methodological aims drove eight other types of subgroup analyses [13, 14, 19, 23, 26, 27, 29, 31,32,33,34, 39] including split samples where respondents were provided with different educational materials, different formats for presenting risk, different ranges for attribute levels, or comparisons of modeled results with and without respondents who selected the ‘opt-out’ option or failed dominance or consistency tests.

The sign of modeled coefficients can provide an indication of whether respondents value an attribute positively or negatively; however, the absolute value of coefficients produced by the above-mentioned modeling approaches is not directly interpretable. Interpretation of modeled results therefore requires additional analysis to produce meaningful outputs. Of the studies reviewed, 12 used modeled coefficients to estimate vaccine uptake under different scenarios [13,14,15,16,17,18, 20, 22, 28, 31,32,33,34,35,36,37,38,39], eight estimated individual WTP [15, 19, 24,25,26, 28,29,30], and five produced other marginal rates of substitution to illustrate the rate at which respondents would trade a decrease in one attribute level for more of another attribute [16, 20, 21, 27, 31,32,33,34, 36]. Three studies reported the odds ratio or change in log odds for each parameter to indicate how much more/less likely an individual would be to choose an alternative with a given attribute level (all else being held constant) [23, 26, 27] and two each reported individual attribute importance [17, 18], individual utility levels [22, 39], and willingness to accept [13, 14]. One used both market simulation and revenue calculation approaches [39].

Of eight studies reporting WTP, six used data from an unforced choice scenario [15, 24, 25, 28,29,30] and two used forced choice data [19, 26]. Estimates of WTP derived from a forced choice may overestimate actual WTP because it is assumed that all individuals will purchase one of the alternatives provided.
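A minimal sketch of how WTP and other marginal rates of substitution are commonly derived from linear-in-parameters model coefficients is given below: the coefficient on the attribute of interest is divided by the coefficient on cost (or on another attribute), with the sign reversed. The coefficient values are hypothetical and are not taken from any reviewed study, and individual studies may have used different specifications.

```python
def marginal_rate_of_substitution(beta_attribute, beta_denominator):
    """MRS = -(attribute coefficient) / (denominator coefficient)."""
    if beta_denominator == 0:
        raise ValueError("denominator coefficient must be non-zero")
    return -beta_attribute / beta_denominator


# Hypothetical coefficients (illustrative only).
beta_effectiveness = 0.04  # utility per percentage point of effectiveness
beta_cost = -0.02          # utility per dollar of cost

# WTP is the special case in which the denominator is the cost coefficient.
wtp_per_point = marginal_rate_of_substitution(beta_effectiveness, beta_cost)
print(wtp_per_point)  # dollars per additional percentage point of effectiveness
```

Because the calculation is a ratio, it inherits any bias in the cost coefficient, which is one reason the forced versus unforced source of the data matters for the resulting estimates.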

3.11 Estimates of Uptake

Twelve of the included studies (57%) provided a discussion of uptake [13,14,15,16,17,18, 20, 22, 28, 31,32,33,34,35,36,37,38,39]. This is more than the eight articles that reported this as a research aim [13, 15, 20, 21, 24, 26, 32, 37]. Approaches to calculating potential uptake included estimating marginal effects, elasticities, and calculating the probability that sampled individuals would choose a specific vaccine scenario (either based on total estimated utility or by establishing a participation threshold). Marginal effects and elasticities are both used to describe the impact of a unit change in one variable on the probability that an alternative will be chosen. Marginal effects are appropriate where variables are dummy or effect coded and elasticities are appropriate for continuous variables [44]. This approach was used in two of the 12 studies discussing uptake [28, 35]. The remaining ten used a scenario analysis approach whereby a full choice scenario was specified and the probability of choosing this scenario in relation to a baseline was calculated [13,14,15,16,17,18, 20, 22, 31,32,33,34, 36,37,38,39]. The baseline level or scenario was clearly outlined in six studies, allowing the reader to interpret the relative change in reported uptake [13, 15, 16, 20, 32, 37]. Of all ten studies using the scenario approach, the correct formula for calculating probability was provided in three cases [15, 32, 37]. In the remaining seven studies, a formula for calculating probability estimates was either incorrect or not reported.
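The scenario analysis approach can be sketched with a simple logit calculation in which the utility of a specified vaccine scenario is compared with that of not vaccinating, and the resulting probability is compared with a stated baseline. The coefficients below are hypothetical, the opt-out utility is normalized to zero as an assumption, and the binary logit form is a common choice rather than the formula used by any specific study.

```python
import math


def uptake_probability(v_vaccine, v_opt_out=0.0):
    """Logit probability of choosing the vaccine scenario over the opt-out."""
    return math.exp(v_vaccine) / (math.exp(v_vaccine) + math.exp(v_opt_out))


# Hypothetical coefficients (illustrative only).
beta = {"constant": 0.2, "effectiveness_90": 0.8, "cost_50": -0.6}

# Baseline scenario: reference levels for every attribute.
baseline = uptake_probability(beta["constant"])

# Alternative scenario: higher effectiveness offered at a higher cost.
scenario = uptake_probability(
    beta["constant"] + beta["effectiveness_90"] + beta["cost_50"]
)

change = scenario - baseline
print(round(baseline, 3), round(scenario, 3), round(change, 3))
```

Reporting the baseline alongside the scenario, as in this sketch, is what allows a reader to interpret a change in predicted uptake.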

In estimating uptake using DCE data, it is important to differentiate between conditional and unconditional choices. A conditional choice is modified by a prior decision to undertake a given course of action, whereas an unconditional choice has no such prerequisite [6]. Unconditional choice is commonly represented in DCEs by the inclusion of an opt-out alternative, which allows respondents to indicate that none of the alternatives offered are acceptable. In the case of vaccination, selection of an opt-out alternative may indicate that the respondent would prefer not to vaccinate at all or simply that the configuration of attributes and levels in the specific choice task is not acceptable (but an alternative configuration not presented may be acceptable). If an opt-out alternative is not provided and the respondent is presented with a ‘forced choice’ between alternatives, the associated choice is interpreted as being conditional on the decision to vaccinate. Therefore, associated estimates of uptake may represent the preference of respondents who have already decided to accept a vaccination, but will likely significantly overestimate uptake where individuals have the option to choose no vaccination in real life. Of the 12 studies reviewed that provided an estimate of uptake, eight estimated unconditional uptake (data included an opt-out alternative) [13, 15, 20, 22, 28, 36, 37, 39] and four either estimated conditional uptake (forced choice data) [17, 18, 35] or, in the case of a choice structure that included both a forced and unforced choice, did not specify which data set was used [32].
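The distinction between conditional and unconditional uptake can be illustrated numerically. In the sketch below, the same two hypothetical vaccine utilities are evaluated once as a forced choice and once with an opt-out alternative whose utility is normalized to zero; all values are assumptions made for the example.

```python
import math


def choice_shares(utilities):
    """Logit choice shares for a dict of alternative -> utility."""
    denom = sum(math.exp(v) for v in utilities.values())
    return {alt: math.exp(v) / denom for alt, v in utilities.items()}


# Hypothetical utilities for two vaccine alternatives.
vaccines = {"vaccine_a": 0.4, "vaccine_b": 0.1}

# Forced choice: shares are conditional on vaccinating and sum to 1 by construction.
conditional = choice_shares(vaccines)
conditional_uptake = sum(conditional.values())

# Unforced choice: an opt-out alternative absorbs part of the share.
unconditional = choice_shares({**vaccines, "opt_out": 0.0})
unconditional_uptake = 1.0 - unconditional["opt_out"]

print(round(conditional_uptake, 3), round(unconditional_uptake, 3))
```

Under forced choice the implied uptake is 100% regardless of how unattractive the alternatives are, so such data can describe which vaccine is preferred but not whether vaccination would be accepted at all.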

Five studies related to vaccines that were already available on the market in the study setting compared modeled uptake with observed uptake in the target group. In three studies, all related to human papillomavirus vaccination, modeled uptake among sampled respondents was 10–50% higher than observed population uptake [15, 16, 20]. In one study, related to newborn hepatitis B vaccination, the highest estimate of uptake among sampled respondents was 9% lower than observed participation in the national program [32]. A second study on preferences for newborn hepatitis B vaccination compared modeled estimates of uptake based on sampled respondents’ stated preferences with the actual uptake (revealed preference) of the same respondents [22]. In this study, 80% of actual choices were predicted correctly by the model (including choices to both accept and reject vaccination when offered). In the remaining cases, the model either predicted that a parent would accept vaccination for their newborn when offered but the vaccine was not received (13%), or the model predicted that vaccination would not be accepted but when the offer was made, parents accepted it (6%) [22].

3.12 Journal

Included studies were published in journals with a variety of disciplinary focuses. Thirteen studies published articles in clinical journals [15, 17,18,19, 21, 23,24,25,26, 29, 30, 32, 33, 36], six studies published articles in health economics journals (including one health technology assessment report) [13, 16, 34, 35, 37, 38], two studies each published in general journals [21, 28] or journals with a research methodology focus [22, 31], and one study was published in a marketing journal [39].

3.13 Validity Assessment

A summary of the validity assessments for all 21 included studies is provided in Table 4. For each item, a score of 0, 0.5, or 1 was assigned based on whether an item was not present (not reported), partly present, or present. Assessments were conducted based on the full-text article as well as online supplementary material. With a total of 13 items, each study could achieve a maximum score of 13. The average score across all studies was 8.4 with a range of 4–12.5.
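The scoring arithmetic described above can be sketched as follows. The item scores shown are hypothetical and do not correspond to any study in Table 4; the function and constant names are illustrative assumptions.

```python
ALLOWED_SCORES = {0.0, 0.5, 1.0}  # not present, partly present, present
N_ITEMS = 13  # number of items in the validity assessment


def total_validity_score(item_scores):
    """Sum the item scores for one study after checking they are well formed."""
    if len(item_scores) != N_ITEMS:
        raise ValueError(f"expected {N_ITEMS} item scores, got {len(item_scores)}")
    if any(score not in ALLOWED_SCORES for score in item_scores):
        raise ValueError("each item score must be 0, 0.5, or 1")
    return sum(item_scores)


# Hypothetical item scores for a single study (illustrative only).
example_scores = [1, 1, 0.5, 1, 0, 0.5, 1, 1, 0, 1, 1, 0.5, 1]
example_total = total_validity_score(example_scores)
print(f"total score: {example_total} out of {N_ITEMS}")
```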

Included studies generally scored well on three out of four elements related to choice task design, two of four items related to conduct, and all items related to analysis. In terms of choice task design, attributes in 13 of 21 studies (62%) were conceptually distinct, varied independently of each other, and were unidimensional [15, 16, 20, 21, 24,25,26,27, 29, 31,32,33,34,35,36,37,38,39]. Fifteen studies (71%) included an opt-out alternative or justified the use of a forced-choice approach [13,14,15,16, 20, 21, 23,24,25,26,27,28,29,30, 35,36,37,38]. With respect to the conduct of the DCE, 16 studies (76%) recruited respondents from target populations that were appropriate for the research [13, 14, 16, 19,20,21,22,23,24,25, 27,28,29,30,31,32,33,34, 36,37,38] and 12 studies (57%) used a sampling frame that was representative of the target population [16,17,18, 20,21,22, 24, 26, 27, 29,30,31,32,33,34, 36]. More than half of all studies scored 1 on each of the four elements related to analysis. Twelve studies (57%) appropriately pooled or separated analyses for different groups [13, 14, 16, 19, 21, 23, 24, 27, 29,30,31,32,33,34,35,36,37,38], 16 studies (76%) used an econometric model that accounted for correlation in choices made by the same individuals [13,14,15,16, 19,20,21,22, 24, 26,27,28, 30,31,32,33,34,35,36,37,38,39], 17 studies (81%) used an econometric model that was appropriate for the choice task design [13,14,15,16, 20,21,22,23,24,25, 27,28,29,30,31,32,33,34,35,36,37,38,39], and 17 studies (81%) compared relative attribute effects using a common metric [13,14,15,16, 19,20,21,22, 24, 26,27,28,29,30,31,32,33,34,35,36,37,38,39].

Areas of particular weakness in the included studies related to the identification and inclusion of appropriate individuals in formative qualitative work used to identify attributes and levels (five of 21 studies scored 1 on this item) [13, 14, 17, 20, 22, 31,32,33,34], conducting piloting amongst the target population (three studies scored 1 on this item) [15, 24, 31,32,33,34], the use of optimal or statistically efficient experimental designs (seven studies scored 1 on this item) [13,14,15,16, 20, 21, 27, 31,32,33,34, 36], and ensuring that the response rate was sufficient to minimize response bias (five studies scored 1 on this item) [16, 19, 21, 22, 31,32,33,34, 36]. Failing to include the target population in formative qualitative work or piloting of a DCE may mean that relevant attributes and levels are not included or that the description and presentation of the attributes are not well suited to the target population. This could lead to inappropriate interpretation of the DCE results where important attributes are excluded or misinterpreted by respondents. Using an experimental design that is not statistically efficient or optimal may result in more choice tasks being presented to each respondent, which may increase respondent fatigue, or could mean that standard errors around parameter estimates are wider (meaning there is less certainty about the true value) than they would be using a statistically efficient design. Finally, a low response rate can lead to bias where some individuals (or groups) are systematically excluded or less likely to respond. This can mean that results are not generalizable to the population that they are intended to apply to.

4 Discussion

The increasing use of DCEs observed during the study period suggests a growing interest in the application of choice-based stated preference methods to understand the factors contributing to the preference for childhood and adolescent vaccines. This review retrieved 27 studies linked to 21 separate DCEs covering nine different vaccines or topic areas. Given the range of different vaccines covered and respondent populations included, this review focused on study methodology rather than providing a summary or comparison of specific findings.

More than half of the included studies discussed vaccine or service uptake, although this was an explicit research aim of only eight articles. Methods used to estimate uptake were poorly reported in a number of cases, and authors reported poor concordance between modeled uptake from DCEs and observed coverage rates in settings where the assessed vaccines were already available on the market. This may be owing to flaws in the DCE design or the use of an inappropriate analytical approach, both of which are covered by the validity assessment tool, which highlighted several weaknesses. It may also be the result of a poor understanding and characterization of the choice context. For example, many DCEs focused on vaccine attributes alone and there was an overall lack of service delivery components in included studies. Where respondents are not routinely offered a choice between vaccines with alternative risk profiles, focusing on vaccine characteristics may not provide reasonable insight into vaccine uptake. Indeed, this is akin to a conditional choice context in which one assumes that respondents have already decided to accept a vaccine. Where the research aim is to stimulate demand and increase uptake, additional elements may be required to more fully understand the choice context, drivers of vaccine uptake, and strategies to optimally target groups at risk of a low vaccine uptake.

Only one included article specifically aimed to assess variation in preferences across population groups, though this was an implicit aim of studies that recruited respondents from specific groups and analyzed responses separately. Overall, the most commonly reported subgroup analyses were according to sociodemographic characteristics, with fewer studies investigating the link between preferences and knowledge and beliefs or vaccine status. In terms of policy, it may be more straightforward to target individuals based on sociodemographic characteristics; however, without a clear understanding of how these characteristics relate to knowledge and beliefs and vaccine status, it may prove difficult to effectively tailor service delivery to groups at risk of low vaccine uptake.

Several high-quality studies applying best practices in design and analysis were included in the review; however, a number of studies were lacking in rigor. Quality assessment scores of included studies varied widely, from 4 to 12 out of 13 possible points. Scores did not appear to improve substantially over time: average scores for studies published in 2000–5, 2006–10, and 2011–16 were approximately 8.2, 10, and 9.1, respectively. This suggests that there has not been a general trend toward improved quality in this field. Studies with articles published in health economics journals or journals with a methodological focus had average quality assessment scores of 10 and 10.75, respectively. Studies with articles published in general or clinical journals had average quality assessment scores of 8.5 and 8.35, respectively. The one study published in a marketing journal had a quality assessment score of 7.5. Across studies that were industry sponsored, the average score on the quality assessment tool was 7.1, compared with 9.3 among non-industry-funded studies. Weaknesses were seen across all four dimensions of the quality assessment tool: choice task design, experimental design, conduct, and analysis.

The strengths of this review include a comprehensive search strategy and the use of a formal quality assessment tool. This review focused only on studies involving parents, children and adolescents, and healthcare providers as respondents. Studies including individuals responsible for making decisions around vaccine acceptance, purchasing, or delivery are most likely to provide insight into uptake and coverage. However, several studies have also assessed societal values for vaccines to estimate WTP for disease prevention. These studies may provide useful information for resource allocation purposes and should be considered for inclusion in reviews assessing the use of DCEs as an input into economic evaluations.

4.1 Implications for Research and Policy

All studies included in this review used an unlabeled or generic choice task design, meaning that respondents were asked to choose between alternatives A and B for example, with no specific descriptor such as a service provider type, a mode of administration, or a brand name. Hensher et al. have suggested that while it is possible to estimate uptake using such designs, forecasting may be improved by accounting for differences in scale and calibration of parameter estimates, which would require alternative specific constants and therefore a labeled design [44]. In the case of vaccines, identifying a label that accurately reflects the choice alternatives may prove challenging and further work would be required to identify appropriate labels. While forecasting may still be achieved with an unlabeled design and generic parameters, this approach is also improved by model calibration [6], an approach that has not been widely adopted in health economics.
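The idea of calibrating a constant so that predicted uptake matches an observed share can be illustrated with a simplified sketch. It assumes a binary logit model (vaccinate versus opt-out, with the opt-out utility normalized to zero) and adjusts a single constant iteratively; it is not the procedure described in [6] or [44], and the utilities, the observed uptake of 0.60, and all function names are hypothetical.

```python
import math


def predicted_uptake(asc, utilities):
    """Mean binary-logit probability of vaccinating across respondents."""
    probs = [1.0 / (1.0 + math.exp(-(asc + v))) for v in utilities]
    return sum(probs) / len(probs)


def calibrate_asc(utilities, observed_uptake, tol=1e-10, max_iter=200):
    """Shift the constant until mean predicted uptake matches the observed share."""
    asc = 0.0
    target_log_odds = math.log(observed_uptake / (1.0 - observed_uptake))
    for _ in range(max_iter):
        p = predicted_uptake(asc, utilities)
        if abs(p - observed_uptake) < tol:
            break
        # Move the constant by the gap between observed and predicted log-odds.
        asc += target_log_odds - math.log(p / (1.0 - p))
    return asc


# Hypothetical respondent-level utilities of vaccinating (illustrative only).
sample_utilities = [-0.5, 0.2, 0.9, 1.4, 2.0]
observed = 0.60  # hypothetical observed coverage

calibrated_asc = calibrate_asc(sample_utilities, observed)
calibrated_uptake = predicted_uptake(calibrated_asc, sample_utilities)
print(f"calibrated constant: {calibrated_asc:.4f}")
print(f"predicted uptake after calibration: {calibrated_uptake:.4f}")
```

Only the constant is adjusted here, so the estimated trade-offs between attributes are left unchanged while the overall level of predicted uptake is anchored to observed behavior.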

The external validity of DCEs is a topic of much concern amongst stated preference researchers in health economics [45]. Two articles included in this review explicitly investigated the choice processes that participants used in responding to DCE choice tasks [31, 38]. Further work may be useful in interrogating discrepancies between preferences and vaccine uptake or in understanding the effect of information and education materials on choice.

As further research aims to better understand preferences for vaccines and vaccine program alternatives, DCEs can be used to provide important insight into acceptable alternatives. In conjunction with monitoring of vaccine acceptance rates and changes in uptake over time and across population groups, DCEs may be used to provide evidence on which interventions are most likely to work in which contexts. On the supply side, studies included in the current review focus on attributes that are largely not amenable to policy changes. For example, the risk of adverse events or the degree and duration of coverage may be altered in the long term in the vaccine development process, but in the short term may not be altered by changes made on the front line in service delivery or programming.

Therefore, efforts to understand the drivers of preferences and uptake and future research aiming to inform program design and improve uptake should include elements of service delivery. On the demand side, additional efforts to understand heterogeneity in preferences may provide insight into how to better target programming to individuals who may be less likely to accept vaccinations or to receive all recommended doses. Moving forward, adherence to a common set of criteria in the design and development of DCEs, such as the checklist developed by the International Society for Pharmacoeconomics and Outcomes Research Conjoint Analysis Task Force [12], would ensure that studies are adequately reported and make it easier for readers to judge study quality.

5 Conclusion

The use of DCEs to assess preferences for vaccines has expanded rapidly in the last 5 years. Although several included studies were of high quality, we identified areas for improvement in the design and analysis of DCEs.

As stated preference approaches are adopted to address new and varied research questions, we suggest that special attention be paid to ensuring that the choice context is well understood and that DCEs are designed to reflect research aims, particularly where estimating or forecasting uptake is a specific research goal.

References

Omer SB, Salmon DA, Orenstein WA, et al. Vaccine refusal, mandatory immunization, and the risks of vaccine-preventable diseases. N Engl J Med. 2009;360(19):1981–8.

Dubé E, Laberge C, Guay M, et al. Vaccine hesitancy: an overview. Hum Vaccin Immunother. 2013;9(8):1763–73.

Thomson A, Robinson K, Vallée-Tourangeau G. The 5As: a practical taxonomy for the determinants of vaccine uptake. Vaccine. 2016;34(8):1018–24.

Mills E, Jadad AR, Ross C, Wilson K. Systematic review of qualitative studies exploring parental beliefs and attitudes toward childhood vaccination identifies common barriers to vaccination. J Clin Epidemiol. 2005;58(11):1081–8.

Brown KF, Kroll JS, Hudson MJ, et al. Factors underlying parental decisions about combination childhood vaccinations including MMR: a systematic review. Vaccine. 2010;28(26):4235–48.

Hensher D, Rose J, Greene W. Applied choice analysis. 2nd ed. Cambridge: Cambridge University Press; 2015.

Clark MD, Determann D, Petrou S, et al. Discrete choice experiments in health economics: a review of the literature. Pharmacoeconomics. 2014;32(9):883–902.

Lancaster KJ. A new approach to consumer theory. J Polit Econ. 1966;74:132–57.

The World Bank. World Bank country and lending groups. 2016. http://data.worldbank.org/about/country-and-lending-groups. Accessed 23 Apr 2017.

Mandeville KL, Lagarde M, Hanson K. The use of discrete choice experiments to inform health workforce policy: a systematic review. BMC Health Serv Res. 2014;14(1):367.

Lancsar E, Louviere J. Conducting discrete choice experiments to inform healthcare decision making. Pharmacoeconomics. 2008;26(8):661–77.

Bridges JF, Hauber AB, Marshall D, et al. Conjoint analysis applications in health: a checklist: a report of the ISPOR Good Research Practices for Conjoint Analysis Task Force. Value Health. 2011;14(4):403–13.

Adams J, Bateman B, Becker F, et al. Effectiveness and acceptability of parental financial incentives and quasi-mandatory schemes for increasing uptake of vaccinations in preschool children: systematic review, qualitative study and discrete choice experiment. Health Technol Assess. 2015;19(94):1–176.

Adams J, McNaughton RJ, Wigham S, et al. Acceptability of parental financial incentives and quasi-mandatory interventions for preschool vaccinations: triangulation of findings from three linked studies. PLoS One. 2016;11(6):e0156843.

Brown DS, Poulos C, Johnson FR, et al. Adolescent girls’ preferences for HPV vaccines: a discrete choice experiment. Adv Health Econ Health Serv Res. 2014;24:93–121.

de Bekker-Grob EW, Chorus CG. Random regret-based discrete-choice modelling: an application to healthcare. Pharmacoeconomics. 2013;31(7):623–34.

Flood EM, Ryan KJ, Rousculp MD, et al. A survey of children’s preferences for influenza vaccine attributes. Vaccine. 2011;29(26):4334–40.

Flood EM, Ryan KJ, Rousculp MD, et al. Parent preferences for pediatric influenza vaccine attributes. Clin Pediatr. 2011;50(4):338–47.

Gidengil C, Lieu TA, Payne K, et al. Parental and societal values for the risks and benefits of childhood combination vaccines. Vaccine. 2012;30(23):3445–52.

Hofman R, de Bekker-Grob EW, Raat H, et al. Parents’ preferences for vaccinating daughters against human papillomavirus in the Netherlands: a discrete choice experiment. BMC Public Health. 2014;14:454.

Hofman R, de Bekker-Grob EW, Richardus JH, et al. Have preferences of girls changed almost 3 years after the much debated start of the HPV vaccination program in the Netherlands? A discrete choice experiment. PLoS One. 2014;9(8):e104772.

Lambooij MS, Harmsen IA, Veldwijk J, et al. Consistency between stated and revealed preferences: a discrete choice experiment and a behavioural experiment on vaccination behaviour compared. BMC Med Res Methodol. 2015;15:19.

Lloyd AJ, Nafees B, Ziani E, et al. What are the preferences of health care professionals in Germany regarding fully liquid, ready-to-use hexavalent pediatric vaccine versus hexavalent pediatric vaccine that needs reconstitution? Patient Prefer Adherence. 2015;9:1517–24.

Marshall H, Chen G, Clarke M, Ratcliffe J. Adolescent, parent and societal preferences and willingness to pay for meningococcal B vaccine: a discrete choice experiment. Vaccine. 2016;34(5):671–7.

Ngorsuraches S, Nawanukool K, Petcharamanee K, Poopantrakool U. Parents’ preferences and willingness-to-pay for human papilloma virus vaccines in Thailand. J Pharm Policy Pract. 2015;8(1):20.

Pereira CCA, Mulligan M, Bridges JFP, Bishai D. Determinants of influenza vaccine purchasing decision in the US: a conjoint analysis. Vaccine. 2011;29(7):1443–7.

Poulos C, Reed Johnson F, Krishnarajah G, et al. Pediatricians’ preferences for infant meningococcal vaccination. Value Health. 2015;18(1):67–77.

Sadique MZ, Devlin N, Edmunds WJ, Parkin D. The effect of perceived risks on the demand for vaccination: results from a discrete choice experiment. PLoS One. 2013;8(2):e54149.

Shono A, Kondo M. Parents’ preferences for seasonal influenza vaccine for their children in Japan. Vaccine. 2014;32(39):5071–6.

Shono A, Kondo M. Mothers’ preferences regarding new combination vaccines for their children in Japan, 2014. Hum Vaccin Immunother. 2017;13(4):766–71.

Veldwijk J, Determann D, Lambooij MS, et al. Exploring how individuals complete the choice tasks in a discrete choice experiment: an interview study. BMC Med Res Methodol. 2016;16:45.

Veldwijk J, Lambooij MS, Bruijning-Verhagen PCJ, et al. Parental preferences for rotavirus vaccination in young children: a discrete choice experiment. Vaccine. 2014;32(47):6277–83.

Veldwijk J, Lambooij MS, van Til JA, et al. Words or graphics to present a discrete choice experiment: does it matter? Patient Educ Couns. 2015;98(11):1376–84.

Veldwijk J, van der Heide I, Rademakers J, et al. Preferences for vaccination: does health literacy make a difference? Med Decis Mak. 2015;35(8):948–58.

Bishai D, Brice R, Girod I, et al. Conjoint analysis of French and German parents’ willingness to pay for meningococcal vaccine. Pharmacoeconomics. 2007;25(2):143–54.

de Bekker-Grob EW, Hofman R, Donkers B, et al. Girls’ preferences for HPV vaccination: a discrete choice experiment. Vaccine. 2010;28(41):6692–7.

Hall J, Kenny P, King M, et al. Using stated preference discrete choice modelling to evaluate the introduction of varicella vaccination. Health Econ. 2002;11(5):457–65.

Kenny P, Hall J, Viney R, Haas M. Do participants understand a stated preference health survey? A qualitative approach to assessing validity. Int J Technol Assess Health Care. 2003;19(4):664–81.

Sapede C, Girod I. Willingness of adults in Europe to pay for a new vaccine: the application of discrete choice-based conjoint analysis. Int J Market Res. 2002;44(4):463–76.

Ngene 1.1: user manual and reference guide. Version 13/05/2011 ed. Australia: ChoiceMetrics Pty Ltd.; 2011.

de Bekker-Grob EW, Donkers B, Jonker MF, Stolk EA. Sample size requirements for discrete-choice experiments in healthcare: a practical guide. Patient. 2015;8(5):373–84.

de Bekker-Grob EW, Ryan M, Gerard K. Discrete choice experiments in health economics: a review of the literature. Health Econ. 2012;21(2):145–72.

Amaya-Amaya M, Gerard K, Ryan M. Discrete choice experiments in a nutshell. In: Ryan M, Amaya-Amaya M, Gerard K, editors. Using discrete choice experiments to value health and health care: the economics of non-market goods and resources. Dordrecht: Springer; 2008. p. 13–46.

Hensher D, Rose J, Greene W. Applied choice analysis: a primer. 1st ed. Cambridge: Cambridge University Press; 2005.

Lancsar E, Swait J. Reconceptualising the external validity of discrete choice experiments. Pharmacoeconomics. 2014;32(10):951–65.

Acknowledgements

Support was received from the Alberta Provincial Pediatric EnTeric Infection Team funded by Alberta Innovates. C. Michaels-Igbokwe is funded by a Postgraduate Fellowship from Alberta Innovates. S. MacDonald was funded by an Alberta Innovates Clinician Fellowship and a Postgraduate Fellowship funded by the Canadian Institutes of Health Research. The authors thank Daniel Wagner for assistance with data extraction.

Contributions

C. Michaels-Igbokwe assisted with identifying search terms and inclusion criteria; conducted the systematic review, quality assessment, and data extraction; and drafted the manuscript. S. MacDonald assisted with identifying search terms and inclusion criteria; assisted with title and abstract and full-text screening; and critically reviewed and contributed to the manuscript. G.R. Currie assisted with identifying search terms and inclusion criteria; assisted with quality assessment; and critically reviewed and contributed to the manuscript.

Ethics declarations

Funding

No funding was used for the preparation of this article.

Conflict of Interest

C. Michaels-Igbokwe, S. MacDonald, and G. R. Currie have no conflicts of interest directly relevant to the content of this article.

Ethics Approval

Ethics approval was not required for this article.

Cite this article

Michaels-Igbokwe, C., MacDonald, S. & Currie, G.R. Individual Preferences for Child and Adolescent Vaccine Attributes: A Systematic Review of the Stated Preference Literature. Patient 10, 687–700 (2017). https://doi.org/10.1007/s40271-017-0244-x

DOI: https://doi.org/10.1007/s40271-017-0244-x