Abstract

Materials modeling technologies are fundamental to explore, understand, and ultimately predict materials behavior. They are essential to solve challenges posed by the need to reduce human impact on the environment. Modeling and simulation of materials behavior have been recognized over the years as fundamental as an asset in industrial R & D, guiding the decision-making process regarding the design or optimization of new products and manufacturing processes. At the same time, it reduces product cost and development time. However, highlighting the revenue brought by using such tools is not trivial, especially because they mainly affect the complex activities such as the innovation process, whose return only becomes available in the long run and it is difficult to measure. This means that the materials modeling field is often overlooked in an industry setting, where it is not integrated in the company workflow. In some cases, modeling provides the potential to capture tacit knowledge preventing the loss of capability in an aging specialist community, that why its industrial integration is important. This paper explores the reason behind this dichotomy, presenting first what it is intended for the modeling process, and the main types used in materials application. The current industrial adoption is reviewed by outlining success stories, economic impact, business uptake, and barriers. Past and current approaches and strategies are also presented and discussed. In prospective, materials modeling plays a key role in developing material-centric industry for sustainable economy, providing physical understating (physics-based models) and fast approaches (data-driven solutions). Digitalization is the mean for the green economy and it needs to push for a more integration at the core of the business of materials modeling.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

Over the last few decades, Modeling and Simulations (M & S) have become familiar assets used in different sectors from weather forecasting to medicine. From an engineering and science point of view, these technologies are nowadays ubiquitous tools, including the understanding and prediction of materials behavior. Modeling techniques have in fact accelerated materials development, leading to discovery of new materials and applications, reducing expensive and time-consuming physical tests [1]. The Integrated Computational Materials Engineering (ICME) [2] paradigm has demonstrated to be a great success in promoting progress in the aerospace industry for example. Materials models and simulations are today exploited to effectively and efficiently design new products and/or optimize processes by reducing the need and time of prototyping and testing [3]. Materials modeling has driven the acceleration of the better-performing product design, with a corresponding reduction in the production costs and time-to-market. For sectors that are typically characterized by highly volatile and competitive markets, materials modeling plays a key role for the innovation and competitiveness.

However, technological and cultural barriers still remain, and the full potential of these methods has been not achieved [4]. Materials modeling is often not considered as an essential component of commercial and business processes, and is not well integrated with other tools such as Business Decision System, stopping it to become a decisive rather than supportive tool in the material design and selection [5]. Modeling tools are often regarded as too complicated to use, inaccurate, and/or time consuming. Their development and maintenance require specific expertise (mathematics, physics, materials science, computer science, and engineering), infrastructure (high performance computing (HPC)), and trusted databases. There are different stakeholders (scientists, modelers, and end-users) involved in the development and application of modeling tools. They often do not share common language, backgrounds, goals or methods, therefore making communication and project delivery difficult.

Today, sustainability policies and increasing market competition have put materials in the center of the product and process design, with materials modeling as a key part of the design process. Actions are needed to facilitate the use and development of mature models and simulation tools across various industries and application areas.

There are national and international projects that try to assess these challenges, including the Materials Genome Initiative (MGI) [1], NASA Vision 2040 [4], European Materials Modeling Council (EMMC) [6], and in both academia and industry materials science communities. The aim of the MGI project is to facilitate materials development by exploiting computational techniques, use of standards, and data management by all stakeholders in the materials development community: academic institutions, small businesses, large industrial enterprises, professional societies, and government. The NASA roadmap identifies the technical and cultural challenges, deficiencies, and the technical work areas required to build a collaborative digital environment for the aerospace industry. Similarly, the EMMC mission is to improve collaboration and integration between all the stakeholders involved in modeling and digitalization of materials, by increasing awareness of the materials modeling industry, supporting the software development industry, and creating collaborative partnerships between academia and industry.

The purpose of this paper aligns with aims of these activities to create awareness of the materials modeling sector outside the community, to review the challenges that obstruct industrial adoption, and to analyze the promotion methods that have been pursued over the years in the UK and internationally. For this reason, the paper is divided in to three main sections. The first presents the modeling background, introducing the terminology behind the model design process, from real world problems to the verification and validation process, including a general categorization of models. Multiscale materials modeling approaches are then illustrated. The uncertainties affecting the model output and their quantification is also discussed. The current state of industrial adoption is then examined in the second part of the paper. The methodology used to asses the maturity level of the technology is presented, followed by a review of application and economic revenue in industry. This section closes with discussion on the current industrial integration of materials modeling and the barriers and challenges that impede industrial adoption. The final parts regards a review of initiatives adopted by the community to overcome these barriers and the needs for materials modeling integration via digitalization to promote a more sustainable business.

Background

Modeling Process

The aim of any scientific research is to investigate, understand, and ultimately predict the evolution of the system under study. Given a real problem situation, the first step in research study is defining a so called problem entity which identifies the system and process to be investigated [7] (see Fig. 1). The conceptual model is then defined to represent the physical system that is appropriate to solve the problem entity [7]. Due to the physical world complexity, conceptual models are hardly an exhaustive description of the system, and need to be regarded as an accurate approximation of the reality, where only the most-effecting physics phenomena are considered. For this reason, conceptual models need to be validated by executing a series of investigations, either experimentally and computationally, to establish the accuracy and precision of the conceptual model.

In the computational modeling route, the conceptual model is translated into a computerized model. The computerized model consists in a numerical formulation of the conceptual model and its implementation in computational formthrough an appropriate algorithm using a chosen computer language. It is important to ensure that the code solves correctly the mathematical model. This step is called model verification. It consists of two parts: the code verification and the solution verification. Code verification compares the simulation results with a known solution in order to determine if the computerized model is a good representation of the conceptual model. The known solution used to test the code can be an analytical approach or assumed \(a\ priori\) (Method of Manufactured Solutions [8]). Solution verification is applied to minimize the numerical errors without excessively increasing the computational expenses. Similarly, to the code verification, the numerical error is evaluated by comparing the results with a known solution, either analytically or arbitrarily. Once the verification process is concluded, the validation process can begin. The validation process requires the comparison of simulation results with real physical data. The set of simulations and experiments to be performed need to be carefully designed accounting for the accessibility of methodologies, facilities, and data (e.g., characterization techniques, computational time on HPC), operability of selected conditions (e.g., high temperature, boundary conditions, input parameter availability), ability to perform measurement with an acceptable degree of confidence (e.g., 95\(\%\) confidence interval is usually accepted in industrial settings). This process is called experiment design, and it requires the formulation of a physical model to reproduce the conceptual model. During the experiment design, a set of variable values that can be obtained from both experiments and simulations for comparison. These variables are called Quantities of Interest (QoI).

Both modeling and experiment QoIs are affected by uncertainty due to the variability of physical parameters within the system itself, and simplification within the model. Model uncertainties can be only partially reduced, so it is important to estimate how much they affect the results. Similarly, the blocking process of arranging experimental units into homogenous groups aims to reduce the variability of experiment results. The experimental and simulation outcomes are then compared during validation to understand how accurately the simulations reproduce the experiments. If the agreement is acceptable the model is validated, otherwise the model and experiments are revised. This process is called Verification and Validation (V & V) of the model. V & V assesses the accuracy of the computational and conceptual model to assure researchers that they well represent the problem entity to solve. This process is not trivial and requires expertise in model development, software development, simulation analysis, and experimental methodology. Figure 2 summarizes the flowchart of the V & V process as presented in the American Society of Mechanical Engineers (ASME) standard for verification and validation of modeling [9].

Verification and Validation framework of modeling technology showing the fundamental passages in blue boxes: code verification, design of validation experiments and preliminary calculations, calculation/solution verification, uncertainty quantification on the model and on the experiments, and validation. The green boxes define the fundamental entities in V & V process: conceptual model, mathematical and physical model, computational model and experiment design, simulation and experimental results, and experimental and model results [9]

Model Types and Definition

Computational models can be divided in two main categories: physics-based and empirical models, which can include data-driven. Physics-based modeling translates our understanding of the physical system into a mathematical form [10]. These models are less biased with respect to unverified assumptions or spurious correlations, because they follow the underlying principles governing the behavior of the material. Biases can be still introduced by the choice of the laws, but it remains a transparent choice. These models can be applied to different systems with the same underlying physics, and not restricted to the application they were created for. However, spatial and temporal resolution required by this models to simulate physical phenomena can impose high computational times, limiting their use to the design stage. Outputs are affected by errors and uncertainties in parameter values as well as lack of understanding of the system.

While physics-based models are extensively used in research and academia, they struggle to be widely accepted in industry. The main objection is that they are slow compared to the empirical methods and experimental trials [11]. However, they provide a wide range of data that would take a considerable amount of time and money to generate experimentally. For example, in addition to simulation of processes, simulation datasets can include microstructure and defect distributions as well as variations in property scatter. The underline problem is that this is not always well perceived.

For empirical models, the opposite is true, with the input/output relationship extrapolated from observed data. This may be done by the researchers directly, using experimental data to find correlations, or by using Data-driven modeling like Artificial Intelligence (AI) and Machine Learning (ML) approaches. Data-driven modeling is becoming popular especially in the “digital twin” context, due to the availability of vast quantities of digital data and the increasing availability of low-cost, high-performance computer hardware. For example, in the image-recognition field, digital capture of images is the norm, effectively automating the data capture process and readily creating libraries of images to process. This results in a rise of open-source platform for AI/ML such as TensorFlow [12] and scikit-learn [13].

Compared to physical-based models, empirical-based models suffer less of instability issues. They take into account long-term historical data and experiences. They are significantly cheaper in terms of time and computational resource in comparison to a physical-based model. However, they cannot be easily generalized and the choice of the training data is subject to bias, which can influence the results. The validity of their outputs generates debate, especially in the materials field. The output behavior is controlled by a large number of variables and obtaining a reliable dataset is a non-trivial process, due to the complexity associated with the effect of composition, microstructures, and process parameters. However, some argue that since each manifestation of the system is a result of both known and unknown physics, data-driven models can account for the full physics and potentially help to uncover the unknown phenomena [10]. However, for any set of data an arbitrary relationship can be always drawn. Traditionally in industry, empirical models are preferred over physics-based models, because in many cases, the computational resources and skills necessary are not available [11]. The empirical models used/developed rely on data availability, and their applicability is restricted to the particular condition modeled. They require the calibration of a number of parameters, often obtained through extensive experimental trials [11, 14]. They represent are computationally fast and once calibrated can provide readily solutions for a specific system, but the extrapolation, such as to another alloy, can result in severe drop in accuracy of the prediction [11, 15]. Accounting for some physical knowledge in the building of the data sample and in design of the model relations can increase the chance these models can capture the full physics. For example, physical-based models can be used to inform data-driven methods, providing the relevant physics to guide training of these the algorithms, by adding physics-based constraints in the optimization process [16], or by a physics-based architecture of neural network [17]. These types of hybrid methods are known as Reduced Order Models (ROMs), Surrogate Models, or Metamodels, and they aim to reduce the complexity of a fully physics-based model and maintain the fundamental physics and features. They provide a balance between fidelity and required computational power. In materials science, the Hall–Petch relationship [18] is an example of a ROM, quantitatively describes the grain size strengthening effect due to dislocation pile-up mechanisms.

Big Data are also gaining attention as tools to estimate the QoIs. Big data are very large structured and unstructured sets of information that can not be handled in a traditional way. Data Assimilation is a type of hybrid technique, where the model predictions of a physics-based model are improved by using Big Data. This approach is mainly used in weather forecasting [19]. The major challenges are the quality, standardization and processing the data [20]. A part from the Materials Genome Initiative in the US [21], which has substantially funded Open Science, Big Data, and AI initiative, Big Data applications in materials has been met with resistance especially from industry, due to the lack of specialized skills, knowledge, and resources in this field to transform industries into data-driven organizations [22]. Calibration of the Master Curve transition toughness model through Bayesian analysis is an example of this approach in materials science [23]. Data-driven models are also applied in the context of Big Data to establish trends. This type of modeling capability is referred to as Non-intrusive data-driven model. They are defined as Non-intrusive, because they are based solemnly on data, and there is no intervention or modification of the algorithm. A new field called Big Data Cybernetics or Hybrid Analysis and Modeling is arising, where the combination of data-driven and physical-based models with Big Data allows the inclusion of more complex physics to solve the problem under study. Digital twins are an example of hybrid analysis, where the architecture combines different types of modeling to inform a decision-making process. Figure 3 summarized all the different types of modeling.

Diagram of the three main modeling areas, physic-based, data-driven and Big Data, and their hybrid methods [10]

Multi-scale Materials Modeling

Materials are highly complex systems, whose performances are governed by convoluted physical and chemical mechanisms occurring at different spatial and time scales. For example, atomic-scale defects like dislocations and grain boundaries are well known to have an impact on the macro-scale mechanical behavior [24]. Macrosegregation during casting is micro-scale segregation during solidification [25]. Experimental characterization of these phenomena are difficult or impossible to perform as real-time observations cannot be readily made. Nevertheless, understanding these mechanisms is fundamental to predict materials behavior during each phase of the product life, from design to service. Application of modeling tools plays a significant role to accelerate discoveries in materials science. However, the complexity of the material system is such that one monolithic approach to model all aspects of the physical system across different length and time scales is not presently feasible.

Multi-scale approaches, instead, use the “divide and conquer” concept: the problem is solved by using a framework of simulations, each one describing a mechanism happening at the nanoscale, microscale or macroscale, which are then connected together like in a jigsaw puzzle. While models at each scale have their own limitations and difficulties, the greater, more central challenge in multi-scale modeling is the parsing and integration of information between scales. This methodology is called Scale bridging, and the approach can be divided into hierarchical and concurrent techniques [26].

The hierarchical method involves separating the different scales, and connecting them in an sequential manner through, for example, volume averaging of a field variable or simple parameter identification from one model to another. Each model in a hierarchical framework runs independently from the others. A homogenization technique using a Representative Volume Element (RVE) is an example of the hierarchical method [27]. A fine-scale model is run on a series of RVEs, each with a given microstructure to calculate the QoIs, usually the mechanical properties [28]. This process generates a distribution of microstructure-informed QoIs which are then input in the higher scale model. Another hierarchical approach consists of developing scaling laws to describe the lower level mechanisms, and incorporating them in a higher-scale model: e.g., a constitutive model [29].

Alternatively, in the concurrent approach, the domain is divided in two or more subdomains that are treated with different scale approaches within the same code and time step. This approach is useful in fracture mechanics, where the crack nucleation and propagation at the macro-scale result from a series of underlying atomistic processes, such as dislocation kinetics and/or nano-void formation, which in turn are affected by the stress field at the continuum level [30]. Quasicontinuum Modeling is another example of the concurrent approach where the atomistic calculation replaces the constitutive law in the continuum framework [31].

The multi-scale approach is an especially powerful technique in the industrial sector, by increasing product development efficiency, developing new materials, and improving materials quality [32]. However, there are still challenges to resolve. Coupling different scales requires the generation of usable and transferable information. In some cases, information transferability is limited by the different assumptions, physics-based mathematical equations, and parameters, which may not be valid at each level [33, 34]. Determining the link between the various scales is important but not trivial to do. In other cases, the assumption of scale separation cannot be applied [33]. Transferibility is also limited by the output form of each model, such as deterministic to stochastic, or discrete to continuous descriptions. Defining the output form is necessary to develop bridging techniques to link the multi-scale information together [35, 36]. The set-up of the architecture to integrate the modeling framework is also challenging. The architecture needs to be appropriate to handle the information transfer output, long simulation time, pre- and post-processing, capturing and managing data [36].

There is also a need for balance between predictability and computational cost of modeling tools. The choice of the model to use at a specific scale is not trivial and it should consider the system under consideration. Smaller-scale models have higher accuracy in describing the physical system but running these models for large domains becomes unfeasible. In order to reduce the computational cost, the appropriate number of physical phenomena should be modeled. From an engineering point of view, this is achieved by inductive goal-mean or top-down approach where the problem is solved from the component level going down in scale. However, materials scientists use a bottom-up approach, aiming to defined a cause-effect relation [37]. The level of details in modeling tools should be chosen in a way to reduce execution time guaranteeing the appropriate accuracy, since a grade of uncertainty is associated with any simulation. Therefore, uncertainty quantification of the model output is important to make appropriate use of them. It is also beneficial to identify the validity range of the model itself. In multiscale modeling frameworks, it is also essential understand how the uncertainty propagates from one scale to another and for it to remain acceptable. The uncertainty quantification process can also identify the most influential model on the overall error, so action can be taken to improve its accuracy.

Multi-scale Materials Model Classification

There are different modeling techniques used to study materials behavior, many of which overlap different length and time scales. Therefore, it is difficult to identify each modeling technique based only on the spacial and temporal scales. Classification based on four fundamental entities has been suggested to be more appropriate: electrons, atoms, mesoscale entities (e.g., particles, grains and molecules), and continuum (see Fig. 4) [38].

Diagram shows the four typologies of modeling used in materials science based on the described entity: electrons, atoms, mesoscale entities such as particles, and continuum [38]

Electron models simulate the evolution of molecules and atomic systems by describing their electronic interactions. They resolve systems at atomic to nanometer length scales and their evolution between pico to nanosecond time frames. The position, momentum, and spin of electrons in the system are described as an electronic wave function, whose Hamiltonian operator represents the total energy. In most of cases, the Schrödinger equation [39] is the physical model, while the Hamiltonian can be approximated by the Kohn-Sham theory [40] or the impurity model [41], to name a few, to solve many-particle problems. The equations are commonly solved using plane-wave basis because it allows an easier implementation of the total energy expressions and the Hamiltonian, and the use of Fourier transforms [42]. The plane-wave basis allows to compute the electrostatic interaction, however they are limited to small systems with period boundaries that restricted their applications to bulk properties of perfect materials [42, 43]. Orbital-free approaches have recently gained attention thanks to a reduced computational complexity that allows application to systems with larger number of electrons and their implementation in concurrent scheme with finite-element approaches [43, 44]. Green’s functions can also be applied to solve the Schrödinger equation [45]. These models are used to provide input parameters for atomistic, mesoscopic and continuum simulations, such as activation energies [46], or giving an insight on phenomena such as thermodynamic stability [47], kinetics of atomic defects [48], and reaction pathways [49]. Their applications include the evaluation of electronic, conductive, and optical properties too, as well as being used to calibrate the inter-atomic potentials or partial atomic charge distributions used for atomistic simulations [50].

Atomistic models describe the behavior of atoms and molecules using molecular or classic mechanics. The atomic interactions (e.g., force fields or inter-atomic potentials) are used instead of the electronic field. This allows simulation of a larger system, spanning from nanometer to micronmeter, and nano to microsecond, with respect to the electronic based methods. The atomic system can be treated statistically or by deterministic methods. The most common statistical methods are Monte Carlo (MC) [51] based approaches like Markovian Chain or kinetic Monte Carlo [52]. Molecular Dynamic (MD) methods [53] are instead deterministic as they solve the classical dynamic equations to determine the atom motions. Some applications of these model methods include diffusion mechanisms [54], phase transformations (evaporation [55], melting and solidification [56]), evaluation of surface [57] and interface energies [58], characterization of fundamental dislocation properties, such as core energies [59], or grain boundary evolution [60].

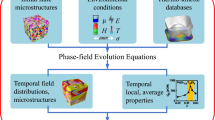

Mesoscale models focus on the behavior of nano-particles, grains or molecules in a length scale that spans from \(10^{-9}\) to \(10^{-3}\) m, from \(10^{-6} s\) to seconds. They do not resolve the atomic motions, instead, these are averaged out or replaced by stochastic terms. They comprise methods to predict microstructural evolution such as Phase Fields (PF) [61] and Cellular Automaton (CA) [62], and material mechanics behavioral methods, such as Discrete Dislocation Dynamics (DDD) methods [63]. These three types of models are all Discrete models, meaning the state variables of the system change only with a countable number of points, while Continuum models treat the system as a continuum, where the space is occupied by the substance of the object in the domain. The continuum volume is divided either into finite volumes, cells or elements. They can be applied to a large range of processes, from nano to macro level, and they are commonly sorted by their applications. Solid mechanics modeling, for example, studies the behavior in terms of deformation and motion of solid matter under one or more external influences including thermal and/or mechanical loading, or electrochemical reactions. Fluid mechanics modeling regards the motion of fluids, such as liquids, gases or dense plasma. Thermodynamics models are used to study energy conservation. Chemistry reaction models and electromagnetism investigate chemical reactions and electrically charged particles, respectively. These models are based on a set of equations that describe the process under investigation and constitutive laws describing the material behavior. For example, fluid mechanics theory is based on solving the conservation laws of energy, momentum and mass to predict velocity, temperature, pressure and density fields. Linear elasticity is an example of a constitutive law that describes the material strain response as directly proportional to the stress applied.

Model Uncertainties

Computational models are powerful tools and have accelerated scientific progress in many fields; however, they can be subject to errors. There are four types of error: implementation mistake, numerical error, parameter uncertainty, and model form error.

Implementation mistakes are the errors made during the implementation of the conceptual model. The code verification is the practical process to correct these errors and make sure the model solution is what is expected. Along with techniques presented in previous sections, practices like cross-check and visualization of the output are good methods to find and eliminate this type of error. It is also a good practice to write the code in distinct modules that can be independently reviewed.

Numerical errors arise from the computational approximations that are introduced to practically solve the equations. For example, each number is represented by computer with a finite number of digits, known as finite precision, that round-off its value. In addition, most of the equations cannot be solved analytically, but they are solved in an iterative way. This introduces an error called convergence error, which is the difference between the exact value and the iterative solution. The exact solution is defined as the solution to the equations with zero residual. This error can be reduce by imposing a smaller residual tolerance. Another type of numerical error is the simplification introduced in the equations so that they can be handled by the machine, for example, the truncation of an infinite series expansion. Likewise, continuum variables, and their function, are treated as discrete quantities by computer leading to discretization error. Discretization errors are reduced by increasing the mesh resolution. The reduction in numerical errors comprises higher computational cost. The solution verification process should provide a balance between accuracy and computational power requirements.

Parameter uncertainty concerns the errors deriving from input parameters. The uncertainty is due to the natural variability of the system such as microstructure variability in a component. It can also arise from the ability to measure the QoI in an accurate way. By nature, this last error can be reduced by advances in characterization techniques, therefore obtaining higher quality data. On the other hand, the natural randomness of the system is not reducible, but it can be quantified usually as a probability distribution.

Finally, model form error is due to the disparity between the real system and the conceptual model. This error depends on a lack of knowledge on the physics governing the system, and model simplifications assumed to reduce the computational expense. It is the most difficult error to quantify, because it requires comparisons with true experimental values that also contain uncertainties, and may not be easy to obtain. The uncertainties in experimental data are due to human errors (e.g., misreading instrument scale), systematic errors (e.g., faulty calibration, poor maintenance), and random errors (e.g., reading fluctuations).

Uncertainty Quantification

The quantifying of uncertainties is very important during the validation process of a model, which ideally involves comparing the amount of scatter in values from the model and experiment. The scientific approach to measure the uncertainty in modeling and experimental is called Uncertainty Quantification (UQ). UQ methods are classified as forward or inverse analysis (see Fig. 5). The UQ forward problems focus on the analysis on the uncertainty of the model outcomes, and they are conducted to assess the uncertainties of the QoIs (i.e., computed volume mechanical properties) as a function of small scale-features (i.e., microstructure features). Forward studies can be applied to both experimental and computational researches. In materials science, a typical example of a forward problem is the investigation and determination of process-structure–property relationships. Conversely, UQ of an inverse problem aims to optimize the distribution of the stochastic inputs to obtain the target features. Materials design and calibration are examples of inverse problems. The evaluation of uncertainty in an inverse problem is more difficult than a forward analysis, because of the larger number of variables and the possibility of multiple solutions.

Forward and inverse UQ methods are then subdivided in non-intrusive and intrusive methods. Non-intrusive methods treat the model as a black box, as they are implemented along side the conceptual model. MC approaches are an example of non-intrusive methods, largely applied to study the uncertainty in forward problems. They are robust techniques but require a large number of samples for each input, becoming costly quickly both in terms of physical measurements and computation. The efficiency can be improved by using design sampling techniques such as Latin Hypercube Sampling [64, 65] to better cover the input space, or Importance Sampling [66] to reduce the sample size. The input sample can be built by statistical methods such as Karhunen-Loeve expansion [67, 68] or Auto-regressive moving average [69].

Alternative to MC, surrogates models are less expensive. They use outcomes of a physic-based model as training points for the calibration. The selection of these data points needs to be made in a sensible way, balancing accuracy without excessively increasing the number of simulations. Surrogate models speed up the process, however, they are prone to oversimplification, and they can be unreliable in the case of complex systems. Examples of surrogate models are Polynomial Chaos Expansion [70], Singular Value Decomposition [71], which are forward analyses, or the Bayesian method [72], and Gaussian Process regression [73], which can be used for both forward and inverse problems. If the UQ approaches are implemented directly in the original model to take into account the uncertainty internally, they are called intrusive methods. Polynomial Chaos Expansion can be used as a non-intrusive or intrusive surrogate model [74, 75].

The identification of the source of uncertainty reduces the risk of an incorrect outcome in pass-fail decision making. Where possible, these errors can be reduced by improving our knowledge (more accurate models of the system, and/or model parameter) or by increasing the accuracy of the calculation (higher resolution, lower tolerance). When the errors cannot be reduced (usually due to computational constraints and/or to physical measurement limitations), their quantification indicates the level of trust one can put in the predictions. The sources of uncertainty can vary from model to model; here, a summary of the most common in multi-scale materials modeling is presented.

In electronic models, uncertainties are mainly derived from the approximation of the electronic wave functions. In density functional theory (DFT) [40], the electrons interactions are approximated by fitted exchange-correlation functions introducing a model-form type of error in the outputs. Another model error is the use of pseudopotentials to reduce the computational cost. Pseudopotentials consider only the effect of valence electrons, while the core electrons are considered frozen. This approximation can affect the calculated properties. The choice of the exchange-correlation functionals and pseudopotentials is fundamental and there are a considerable number of publications addressing the fidelity of the results of these simulations. There are also numerical errors due to the threshold on the energy and force convergence calculations, and the size of the simulations domains. In this case, convergence studies are needed to reduce these errors.

In atomistic models like MD, the interatomic potential function introduces model-form type and parameter errors in the results. Other model form errors are the choice of the boundary conditions that can introduce artifacts, and the deviation of the constructed model from the real process. Numerical errors are caused by the picked cut-off distance, round-offs in floating-point, task distribution and sequencing in parallel computation. In kinetic Monte Carlo simulations, the incomplete list of events or wrong event rates are model-form and parameters uncertainties. Another source of model-form uncertainty are the unknown correlations between the events.

In mesoscale modeling, model-form type of errors are introduced by using an empirical model for the stress field and dislocation interactions in DDD simulations or energy functionals in the PF model. Uncertainties also come from the numerical treatment to solve the ordinary or partial differential equations. At the continuum level, sources of model-form errors are wrong or incomplete constitutive laws, and the assumptions made to reduce the computational cost of the simulation. Uncertainties are introduced by the mesh size and numerical calculation to solve the set of differential equations.

Materials Modeling in Industry

Modeling and simulation are valuable assets for industry. Industrial interests on these methodologies are several, from a better insight of materials behavior to cost efficiency in materials and process designs. These tools are used to find Process-Structure–Property-Performance (PSPP) relationships in a material system [37]; a key link between composition, process parameters, microstructure features, and final material properties. This enables the forecast of the behavior of materials during production and service, optimizing the manufacturing process, and accelerating the design of new alloys. Modeling outcomes can also guide experimental trials, by scaling down to the elements or features that affect the final results. They are suitable tools to reduce time, cost, and risks associated with the materials design processes, by minimizing expensive or dangerous experiments. A well-documented model additionally formalizes knowledge that could be easily lost over time in order to avoid mistakes and repetition. They are also useful to scale up production and reduce time-to-market through process design.

Model Quality Levels

As with any other new technology, models lead to improved performance and advance understanding but also introduce a great deal of uncertainty and risk in terms of capabilities, limitations, and development trajectory. It is very important to assess the quality and maturity of any technology, including modeling tools, to ensure they are integrated into engineering projects.

The widely used methodology to assess the maturity level is the Technology Readiness Level (TRL) scale developed by NASA in the 1970s [76] to assure safety, and define emergency strategies before commencing missions. The TRL scale comprises nine levels as summarized in Table 1. There is an initial research phase consisting of levels one to four. In this phase the scientific knowledge (observations) is translated into applied research by the formulation of practical concepts (level 1) into an actual technology or applications (level 2). Level 3 concerns the proof-of concept of the technology through analytical and experimental studies. Low-fidelity laboratory-scale validation of the component assembly is performed at level 4. Following this level, it starts a second phase of fidelity tests conducted in relevant ambient conditions and more representative of service conditions. The component assembly is validated in the relevant environment (TRL 5), then a prototype is created and tested in the relevant ambient conditions (TRL 6) and in an operational system (TRL 7). After level 7, the realize phase starts, where the technology is in its final form and a series of final tests and demonstrations are performed (TRL 8), following by adoption and application of the technology (TRL 9).

In case of modeling, the TRL maturity levels can be translated, as summarized in Fig. 6. There are three milestones defining the three phases earlier described. The research phase corresponds to the V & V process that starts with the formulation of a conceptual model (TRL 1) and its translation to a mathematical form (TRL 2). The computational code is implemented and verified (code and solution verification) at the TRL 3 of the chart. This stage also includes the design of the experiment for the validation. The validation process (TRL 4) concludes this phase together with the UQ on the experimental and model data. After V & V, a so-called black-box testing phase starts, where end-users, who have no knowledge of the implementation, test the code. The code is initially tested by few selected end-users, who can be from outside or inside the company (TRL 5). The testing is then enlarged to invited end-users, both internal and external (TRL 6). At the last stage, the testing is open to any end-users who sign up (TRL 7). The fulfillment of the testing phase corresponds to the second milestone. After the black box testing phase, the first version of the software can be realized in the market (TRL 8), and its updated versions are realized over time until it satisfies the application requirements or it becomes obsolete (TRL 9). In recently years, the Air Force Research Laboratory (AFRL) in the United States sponsored a Technical Interchange Meeting aiming to develop strategies for V & V within integrated computational materials engineering, in order to upraise computational tools to more fundamental role in design and decision-making [77]. The principal outcome was the draft of guidelines for software evaluation called Tool Maturity Levels (TML) [78]. The guidelines consider six categories: model basis and definition, complexity and documentation, supporting data, model verification, rules of applicability, uncertainty quantification, and validation. The maturity of a models is assessed using 5 levels. The first level required definition of the model, inputs and outputs, its application, and its flow diagram. The supporting data are identified. The code, verification and uncertainty quantification plans need to be developed. Level two is reached when the sub-models are also defined with their inputs and outputs, with definition of ranges of model inputs and outputs as well as the sequential or interdependent computations. The supporting data is documented and archived and used to verified the model computations. The code verification is also completed. A range of applications is defined with the inputs required. The sensitivity analysis is completed and the results trends are consistent with known data. A validation plan is drawn out with risks assessment. Level three consists of finalization of the user guide, definition of dependence of inputs data from other analytical tools, implementation and documentation of version control. The model is verified for range of applications with definition of the supporting data and limitations for each of the applications. Risks assessment is performed for each application. Validation is carried out for sub-models and selected cases. The user guide is updated with reference to the supporting data, uncertainty quantification and validated cases at level four. The model and its accuracy is validated for a range of cases. In the last level, benchmarking cases are carried out and validated with UQ. This assessment was developed for ICME applications and it is thought to be flexible allowing tailoring for the specific case. A model is assessed with gated process meaning it moves to the next level only if it meets all the TML criteria of the current level.

In terms of technology development from R & D to commercialization, these three milestones correspond to failure points, as commonly referred to in the Technology-to-market flow as valley of death as shown in see Fig. 7. The diagram indicates the involvement level of academia (green area), industry (blue area), investment (red area), and bank loan (purple area), in the Technology-to-market flow. Initially, the maximum involvement is represented by university research with a relatively small amount of support from industry. The first valley of death is the technological one that occurs at TRLs 3 and 4. In the case of computational modeling, it corresponds to the failure of the V & V process. Gated reviews are performed to assess the commercial risk of pursuing further into product development. Evidence needs to be provided that the technology could be successful. At this stage, the modeling tools review processes correspond to proof of concept and V & V. The second block corresponds to the testing procedure. In this case, the university drives this process at the beginning, but industry takes slowly over from TRL 6 onward. During this process, there is the second valley of death: commercialization. Another series of reviews is conducted between level 6 and 7 to assess the economic impact of the technology development. If the review is successful, further investments are made to deploy the commercial software in TRLs 8 and 9. Industry leads over the commercialization of the technology with little-to-no effort from academia. The final failure point, preventing the distribution in the market (e.g., constant improvement, standardization), is the profitability valley of death. This failure is driven by the small revenue at market entry of a new technology. As shown in Fig. 7, loans and/or investments are required to overcome this last valley.

Application Cases and Economic Revenue

There are few examples of successful industrial applications of multi-scale materials modeling within industry in the literature. The Virtual Aluminum Castings (VAC) software package [79] was developed, for example, in 2006 by Ford Motor Company to simulate casting and heat treatment. The framework predicts microstructure and residual stress, and it forecasts virtual performance of the service life of the component. It consists of an integrated multi-scale modeling framework including atomic to component level models. It is the result of a deep understanding of physical phenomena involved through theoretical, experimental and computational studies. It has enabled a 15–25\(\%\) reduction in the development time of a component, such as a cylinder head [79]. More recently, Rolls-Royce has developed precipitation modeling tools in collaboration with the University of Birmingham [80]. This model allows simulation of the precipitation kinetics as a function of their position in the matrix to optimize the heat treatment and component life of the alloys developed [81]. The tool was successfully used to predict a quantitative trend of the manufacturing process, and accelerate the design of new alloys for gas turbine application within five years [81].

In terms of economic revenue, a survey conducted by Goldeck Consulting Ltd in 2015 reports that the adoption of material modeling resulted in improved innovation accomplishment of 80\(\%\) and revenue increase for 30\(\%\) of interviewed companies [82]. The average investment in material modeling was \(\pounds \) 1 M, with an average cost saving due to materials modeling equal to \(\pounds \) 12 M [82]. Continuum Materials models are the most ubiquitous in industry with an estimated 75\(\%\) of the materials software market [83]. The total market size of Computer Aided Engineering (CAE) modeling is estimated to be in a range of \(\$\)5–8bn [83], where the software development for materials applications is 5–10\(\%\) of the market [82]. The discrete modeling (electronic, atomistic and mesoscale) softwares started to be developed in the 1970s concomitantly with the spread of HPC in the life science industries. In the 1990s, HPC was adopted by other industrial sectors such as chemistry and materials engineering. Since then, the market of discrete modeling outside the pharmaceutical field has increased, reaching a value of \(\sim \$\)50 M in 2012 [84].

Company Values

These are valid examples of how materials modeling is a credible tool for industry, in terms of cost and time saving, and technological and scientific advancement. However, like other technologies, a consistent advantage through time can only be achieved if materials modeling is valued through the core of the industrial business. A protocol to monitor the level of materials modeling adoption in industry from a business point of view has been suggested by Goldbeck and Simperler [85]. The method is based on Capability Maturity Model Integration (CMMI) [86] and it assesses the impact of material modeling on four factors: People, Process, Tool and Data. The People factor includes all the relevant personnel within the organization to materials modeling, from the expert modelers to occasional end-user. The Process factor regards not only the modeling workflow itself but also the workflow within the whole organization. The Tool factor comprehends the selection and application of software and hardware within an organization IT infrastructure. The Data factor concerns the storage, handling and management of the information obtained within the organization.

For each of these factors, a five-level scale (see Fig. 8) was set to measure the exploitation of modeling techniques in the company. In the initial stage, the knowledge is restricted to individual people, and the tool choice is subjective to the modeler. At this stage, the workflow is not established and inefficient, and there are insufficient data management policies to reliably record parameters and simulation outputs. The next level is called defined, the knowledge is shared within a larger group of interested subject matter experts (a research group or specialized department). The workflows inside this materials modeling group is consistent but not shared outside the group with the extended organization. The individual tools have been validated internally, but they are not yet integrated into an automated system to share information. Data policies have been established but they are not consistently applied across the organization. At the next step (integrated level), the research strategy is consolidated at the business level, resulting in the integration of policies and objectives for materials modeling. The process workflow is well-documented, assimilated, and straightforward to follow. The selection and application of modeling tools is standardized and integrated on a synthetic level. The data are managed at an organization level through an integrated information architecture defined by uniform data policies. At the managed level, the evaluation performance and objectives of the modeling teams performance is standardized. The modeling process is integrated in the business workflow, and all data and metadata are traceable. The modeling tools acquisition is now centrally managed, and the models are inter-operable across domains and cross-functional across the workflow. The company has data-dedicated personnel, provenance, and security, allowing data sharing along the value chain. The highest level of adoption, the optimized level, requires full integration of the modeling process in the business plan, and the highest level data-infrastructure management where all data are available, prompt and relevant across the entire organization. The modeling team is part of the enterprise level viewpoint, and resources are spent toward continuous improvement of modeling tools and data infrastructure. The tools are fully integrated with semantic data flows.

In 2018, this protocol was used by Goldbeck and Simperler to assess the adoption of materials modeling in industry [85]. They interviewed 120 materials modelers across 16 companies, from pharmaceutical through special chemical and materials fields, to the manufacturing industry [85]. Their study showed that the majority of companies reached the integrated level in regards of people and tool factors, while process and data factors lag behind between defined and integrated levels. Overall the average CMMI level was 3.2 in manufacturing industries and 2.5 in chemical and materials industries [85]. These data show that materials modeling technologies are recognized as valuable tools mainly by specialized personnel. Each modeling group reaches an advanced level of knowledge and standardization. However, this is not translated at the management and business levels. The materials modeling development and evaluation are not considered as part of the business values. The know-how acquired is not immediately traceable, readable, transferable, or retainable. The Goldbeck and Simperler study concluded that, although there remain barriers for their complete endorsement, modeling tools have demonstrated themselves to be technologically invaluable and successful.

Barriers

The report of Goldbeck and Simperler [85] shows that materials modeling is not considered as a core indutry value and barriers to full adoption still exist. In general, the main obstacles to the adoption of materials modeling can be summarized in a lack of communication and governance, high complexity, and difficulties with data management (see summery in Fig. 9). The trust in integrated modeling tools is severely effected by these problems, and in some cases the loss of confidence can completely undermine developments in industry and discourage final users from embracing these technologies.

Various actors are involved in the development and use of these technologies: modelers, software developer and end-user who work as researchers in academia, research technology organization, or R & D intensive industry. The variety of understanding of the technology between these groups can be stark, leading to potentially contrasting goals. Communication between these roles can be complicated due to the different backgrounds, motivations, and terminologies, and it is recognized as one of the main barriers to adoption. Academics, for example, are interested in solving very complicated problems, and looking in depth at the physics and chemistry that govern the phenomena involved. They sometimes underestimate the problems that occur in an industrial setting, or find them unengaging [87]. Their research interests are also not static but they evolve moving from one subject to another. They do not concentrate on one aspect, but they like to keep exploring and pushing the boundaries of their knowledge Moreover, this community tends to be more motivated by exploration and pushing the boundaries of the state of the art, rather than long-term continuous focus on a particular solution or improved user-experience. These aspects may conflict with the industrial point of view. Industry organizations tend to push for simple solutions that are fast and easy to implement. This is clearly at odds with the university experts who tend to push for more complicated solutions. In the academia-industry relationship, these cultural barriers limit the creation of strong collaborations, and the communication can be difficult in both directions. Industry partners sometimes fail on synthesizing and communicating the problem they want to solve. As discussed earlier, defining the problem entity is an important step for the development of modeling tools. With a vast community like materials modeling, where research groups tend to focus on a specific methodology and/or materials application, a well-defined question assists with identifying the appropriate technologies and expertise. At the same time, academics and modelers may struggle to transfer efficiently the technologies to people that are not highly-trained across an industrial organization. Alternatively, end-users frequently apply modeling tools as black boxes, which can lead to improper, potentially dangerous, results. Academics can also over-estimate the scope of applicability of modeling capabilities in more complex settings, reducing the confidence of the industrial partner in case of large errors and/or failure. Strong communication is also needed between modelers and experimentalists. As already said, experiments are essential to provide data for validation of the modeling tools and to provide model parameters. The experiment design is, in fact, a fundamental step during the V & V process, and it requires a mutual understanding of problem system and conceptual model. Modelers need to explain how the model works, and what inputs and outputs are required. Experimentalists need to make clear what the type of experiment can be conducted to validate the model. It is important to know what can be measured, monitored, the limitations of technologies used, and how to quantify the uncertainties. A mutual understanding from the beginning is important for the success of the V & V process and can require the dedication of time and resources to ensure it is is done correctly. Like the academic experts, the experimentalists can also overestimate their in-house capabilities, undermining the V & V process. Modelers may struggle to provide a complete description of their work, impairing the communication of the advantages of these technologies. Often, their work is not recognized, either because it requires specific knowledge, or because of skepticism in the accuracy/ability of the model [85]. The skepticism with regard to modeling tools arises as a result of a lack of awareness or complete description of the quantitative accuracy of a solution for a specific problem. Models can provide, instead, direction and/or reasonable range (output that includes uncertainties) to decision-making direction. Providing realistic and transparent information from the start of the process would assist the integration of materials modeling in industry. In addition,there is an evident gap between modelers and other important figures such as design engineers and business managers who are not be able to recognize the potential benefits gleaned from R & D, product development, process optimizations, and cost effectiveness of materials modeling. In general, outside of the modeling community, modeling is not well understood, defined, or well-established within industrial organizations [85]. This impedes the integration of materials modeling in the vertical value chain of the company process [85]. The materials modeling position is not consolidated or, worse, not accounted for in the business processes, policies, and standardization of the organization. In many cases, materials modelers are only involved in a problem as a last resort, while to fully exploit their potential, modelers should be involved as early as is practical for a given project.

Related to poor communication links, the lack of workflows and standardization in the organization can significantly affect the governance of materials data and information [22]. The first barrier in the workflow is between the university and industry during the TRLs 1–4 as shown in the technology development chart (see Fig. 7). The V & V process and software development are demanding and require skills across multiple disciplines. Academic research groups, for example, alone do not have the capability to deliver user-friendly software. The adequate standard to produce documentation and software, and perform a V & V process that can be easily translated to industry may not be followed by university researchers. In particular, the ability to create a user-friendly code that has been verified across several use-cases is out of scope for most of the academics [88]. Even when modeling tools are successfully developed, there are barriers inside the industrial organization that stop modeling tools from being effectively used. Modeling tools available are not well integrated with other tools in multidisciplinary design and optimization architecture [5]. This makes it difficult to access these technologies, especially for end-users. Developed codes can be archived even if they are successful as they are created to solve a specific problem. The absence of standards also leads to bias in the choice of which tool to be used. The materials modeling field is populated by a vast heterogeneity of codes and software to chose from. As already discussed, the choice of modeling tool to be used is a difficult one, and it needs to be addressed in relation to the problem to be solved. The lack of standardization and policies can lead to an arbitrary choice of the modeler who may favor one technique over another depending on their background. The lack of governance is frequently due to the fact that materials modeling is not well enough established as an asset to business, which is often connected to insufficient ability of modelers of shining a light on the business advantages in terms of spending and time. This affects the recruiting process, making it difficult to attract and retain a valuable workforce.

Complexity is also a major barrier to the adoption of these techniques and it affects mainly Small and Medium Enterprises (SMEs), which do not undertake a dedicated research activity or have access to HPC facilities [85]. Establishing a hardware ecosystem from scratch is neither trivial nor cheap, and it requires a costly process that involves putting in place specialized tools, processes, data management, and a trained workforce. Making materials modeling technologies more accessible to all industries including SMEs is a requirement for their integration across the supply chain. Nonetheless, problems can arise from over-simplification. The naive use of desktop software can, in fact, lead to misleading results if used with the wrong set-up for the simulation (e.g., boundary conditions, mesh size), effecting the reliability of the output. The expertise of end-users required for model development (mathematical methods, physics, materials science, and computing) may not be at an appropriate level to prevent escapes of inaccurate outputs. However, these challenges existed equally in other modeling domains, such as finite element analysis (FEA) and computational fluid dynamics (CFD), and have been overcome and matured for wide-scale industrial adoption and standardization.

Another obstacle to modling is the absence of extensive and reliable materials data. In fact, modeling activity needs data as input parameters, boundary conditions, and output for validations. Data availability needs to be considered in the building of a model and their capital investment should be evaluated early on [87]. The difficulties to gather data both for inputs and outputs leads to technical issues and cost effecting even more accepted methods such FEA and CFD. Another problem that affects data is the presence of different types of format, which makes it difficult in the handling and storage [85]. The variety of data formats is driven by the prevalence of proprietary data formats used in scientific instrumentation and modeling technologies. This variation makes it difficult to extract, establish, and support metadata for the the creation and implementation of a Materials Data Infrastructure (MDI) [89].

Initiatives

Materials modeling is recognized as fundamental for the discovery and development of new materials and processes [90]. Many initiatives have been proposed over the years to tackle the barriers discussed in the previous section [3, 4, 21, 83, 87]. The main areas recognized to be essential to advocate for materials modeling in industry are collaboration, disclosure, education, accessibility, integration and data [3, 4, 21, 85, 87]. Collaboration, disclosure and education directly effect the people factor. The governance is affected by the integration, and complexity by accessibility (see Fig. 10).

Collaboration

In term of collaboration, it has been recognized that cooperative work between academic model developers, industrial stakeholders, and across industrial departments and research modeling groups, is not only beneficial but essential to the development of tools and frameworks ready for industrial applications [87]. Materials modeling does require different skills, involving relatively large research groups (up to 20 or more people). Alternatively, collaboration between smaller groups can achieve the same outcomes. Promotion of collaborative work between modeling groups can help the creation of a framework that can span across length scales and purposes. In order to promote it, cooperation between universities is now a prerequisite to apply for funding. Other activities to support partnerships between universities are the organization of workshops and seminars, possibly extended to a wider group of researchers and industry. These can also help to find new applications for existing techniques, especially for the physics-based models, in other fields [87]. Research centers can have an important role on encouraging collaboration. It has been proposed that a one-day meeting between modeling and non-modeling experts (experimentalists and industrial users) organized by the Engineering and Physical Sciences Research Council (EPSRC) or a center of excellence would be beneficial to bridge this gap [87]. They can play a key role by creating international relationships with universities and organizations outside the UK. Collaboration is promoted by communities such as nanoHUB [91] which aims to gather together students, researchers and instructors working and teaching in the nanotechnology field around the world. The sharing of tools and teaching materials are encouraged in exchange of benefits including wider visibility, automatic data usage, possibility to discover new applications in other fields, the usage of HPC resources, citation tracking, and control of reuse and assets via licensing.

Conflict of Interest

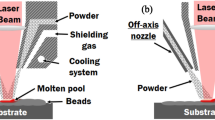

The modeling community needs to put major effort on disclosure and reaching out. Activities such as training, showcases, software benchmarking and consultancy can speed up the adoption of these technologies. Centers of excellence are points of aggregation for academics and industry, therefore, ideally placed to run these activities. At a European level, the European Materials Modelling Council [6] was created in 2019 aiming to overcome the barriers to industrial integration of materials modeling. The EMMC focus is divided into six areas: Model development, interoperability, digitalization, software, impact of industry, and policy. They produce survey studies, whitepapers, roadmaps, and run workshops to identify the barriers and suggest strategies. Another example is the Interdisciplinary Centre for Advanced Materials Simulation (ICAMS), in Germany, which is a research center at the Ruhr-Universität Bochum [92] focusing on the development and application of simulation tools for multi-scale materials modeling [92]. They actively contribute to simulation software development that can be found in their GitHub repositories [93]. The knowledge and know-how was transferred to two new enterprises, one of which offers support for microstructure modeling. They are active in industrial research, and they organize showcases of three of the six project groups every two years, to show the last development and get input from the industrial stakeholders. In France, the French National Centre for Scientific Research (Centre national de la Recherche Scientifique, CNRS) [94] is a state research organization, which coordinates different institutes. Each institute promotes cooperation between disciplines and oversees the research activities of laboratories in the country such as the Institut Jean Lamour (IJL) [95], which focuses on materials science research including the use and development of in-house software. Five companies have been established as spin-outs from this research laboratory, two of which are modeling and simulation focused. The activities of benchmark exercises are also a good way to showcase the state-of-the-art technology and disclose the latest achievement in the community. The Additive Manufacturing Benchmark Test Series (AM-Bench) activities promoted by the American National Institute of Standards and Technology (NIST) [96] is a good example of benchmark exercises for additive manufacturing applications. A first benchmark exercise was run in 2018, the results of which were disclosed in a series of articles and at the conference organized by The Minerals, Metals & Materials Society (TMS), which saw the participation of academics; software companies such as Dassault Systèmes SIMULIA [97] and QuesTek Innovations LLC [98]; research centers such as the Advanced Manufacturing Research Center (AMRC) [99]; and industry such as Rolls-Royce [100] and Siemens [101]. The French national research agency (Agence Nationale de la Recherce, ANR) [102] also promotes benchmark exercises, for example on macrosegregation simulations during solidification run in 2010 [103], which involved different research centers in France including IJL. The aim of the project was to refine the processes of macrosegregation in the final product. At the time of publishing this review, there is no existing research center that specializes in materials modeling, because the decision was to create research centers focusing on specific processes [87]. Nowadays, most of these centers have materials modeling departments as they conduct projects involving modeling and simulations. In terms of SME engagement, there are projects founded by the European Regional Development Fund (EDRF) that aim to facilitate the access to materials modeling technologies for SMEs [104]. Other fundamental actors for materials modeling disclosure are communities such as nanoHUB [91] or the International Association for the Engineering Modeling, Analysis and Simulation Community (NAFEMS) [105]. They offer a vast selection of resources including software that can be run in the cloud, and learning materials such as videos of lectures and courses.

Education

It is clear that industrial adoption of materials modeling requires the formation of a new generation of modelers, engineers, and materials scientists in this discipline. The importance of education in materials modeling was already recognized by Sheriff [87]. He recommended to develop appropriate degrees both at undergraduate and postgraduate levels, which foster an open-minded approach, ability to work in a team, and the clear idea that models are not the final goal but useful tools to accomplish the aims of research, design or monitoring activities. In his final remarks, he indicated the EPSRC as the main actor in promoting and developing materials modeling education. Most undergraduate studies in materials science in the UK university offer some materials modeling course in their curricula. Most of these courses focus on continuum techniques, but with very few on discrete modeling tools. In terms of higher education, two Centers of Doctoral Training (CDT) have been founded by EPSRC, Theory and Simulation of Materials (CDT-TSM) at Oxford [106] and Computational Methods for Materials Science (CDT-CMMS) [107] in Cambridge. Both courses aim to generate materials scientists and researchers in the state-of-the-art of materials modeling, helping them to develop and select the right modeling tools for their research. The state of materials modeling in university teaching in the USA seems similar to the UK, as reported surveys conducted by Thorton et al. [108] and by Enrique et al. [109]. The surveys were conducted nine years apart between 2009 and 2018, proposing the same questions to a select group of American academics [110]. The comparison between answers show an increment of Computational Materials Science and Engineering (CMSE) courses and a clear support for including them in the core curriculum. In nine years, the number of universities offering one or two CMSE undergrad courses almost doubled, and 96\(\%\) of institutions interviewed has at least one CMSE module in their graduate courses while it was 41\(\%\) in 2009. The surveys also show that there is no strong preference for standalone courses or the introduction of specific modules in the existing materials science courses. Some interviewees indicated that barriers stopping further development were the lack of teaching resources such as graphics-based operating system interface (GUI) software, and preparation of the students in mathematics, physics, and computer science fundamentals. In Europe, the teaching includes some complete courses in materials modeling for graduate students [111, 112]. In addition, short training courses and seminars for industry are organized by universities [113], software companies [97] and associations [105]. Online resources are also available such as the nanoHUB catalog of courses [91] or other sources [114]. These are fundamental activities for promoting a wider use of materials modeling tools outside the modeling community.

Accessibility

The complexity characterizing these technologies is also a technical barrier that prevents users from taking advantage continuously and efficiently applying these tools. Most of the people working in industry today do not have a very strong background in modeling, especially in discrete techniques, program languages and operating systems. The EMMC suggested that the community of modeling experts, the software developers, and the funding agencies should focus on transforming a promising tool into a technology that can be easily adopted throughout the industrial stakeholders [3]. The development of GUI-based or browser software is a fundamental process in the industrial distribution of these methodologies. According to the EMMC, the technology transfer and software development do not need to be performed by university, actually small companies and research institutions are better intermediate entities between university and industry [87]. While user friendly software should not be one of the objectives of academic work, more effort should be dedicated to write clear documentation of the code developed, to make the conversion easier for software developers, and help to set the training of users [3]. Different university spin-out companies have created web-based interfaces that simplify the use of techniques such as CALPHAD [115] and MD [3]. The use of an open source web app framework, such as the Django project [116], Flask [117], and Pyramid [118] that allows running remote applications using a web interface should be also investigated.

Integration

Even when the technology is accessible to industry, it is still not widely used. As shown in the surveys of Goldbeck and Simperler [85] materials modeling is not well integrated in the industrial processes or workflows. The authors believe that this is a sign of the fact that materials modeling is not \(de\ facto\) recognized as value in the organization. Work should be done in promoting these technologies, emphasizing the benefits, efficiency and effectiveness. As already mentioned, benchmark activities, showcases, seminars and workshops organized by research centers can help show evidence of the benefits coming from the use of materials modeling. Internally to the organization, activities such as Business Processes analysis and Quantitative Benchmark for Time to Market framework (QBTM) [119] should be performed to show the impact of materials modeling [85]. Materials modeling and simulation need to be part of Business Process Integration (BPI) Paradigm. BPI aim is to connect systems and information efficiently allowing the automation of business processes, integration of systems and services, and the secure sharing of data across numerous applications internally and externally [120]. Belouettar and co-workers [5] have proposed an integrated multi-disciplinary, multi-model and multi-field approach. The tool developed integrates materials modeling with business tools and database in a single open platform, connecting a rich materials modeling layer with industry standard business process models. In terms of modeling governance, the non-government organization, Modelica Association, aims to develop and promote a standard language for modeling, simulation and programming of physical and technical systems and processes [121]. The standards include an object-oriented, equation based language (Modelica Language) to describe cyber-physical systems, zip-file (Functional Mock-Up Interface) container for the description, exchange and storage of simulation input and outputs, a workflow (System Structure and Parameterization) that describes the logical connections between the different models and how data are stored and exchanged, and a communication protocol (Distributed Co-Simulation Protocol) for the integration of models and real-time system into simulation environments. This organization includes members from around the world, from academia, industry, and software developer companies. This project started in 1996, and it has promoted the developing of a series of open source and commercial tools such as simulation environments, developer, and data visualization, managing and storage. In industry, the Modelica language and libraries are used in automotive industries and by plant power providers.

Data