Abstract

For over 100 years, designers of aerospace components have used simple requirement-based material and process specifications. The associated standards, product control documents, and testing data provided a certifiable material definition so as to minimize risk and simplify procurement of materials during the design, manufacture, and operation of engineered systems such as aerospace platforms. These material definition tools have been assembled to ensure that components meet design definitions and design intent, and they must ensure that the material used demonstrates “equivalency” to that used in the design process. Although remarkably effective, such traditional materials definitions are increasingly becoming the limiting challenge for materials, design, and manufacturing engineers supporting modern, model-based engineering. Demands for cost-effective, higher-performance aerospace systems are driving new multi-disciplinary design optimization methods that are not easily supported by traditional representations of materials information. Furthermore, property design values defined from statistical distributions of test results can leave substantial margin or material capability underutilized, depending on component complexity and the application. These historical statistical approaches, based on macroscopic testing, inhibit innovative approaches to enhancing materials definitions for greater performance in design, such as location-specific properties, hybrid materials, and additively manufactured components. Development and adoption of digital and model-based means of representing engineering materials within a design environment is essential to span the widening gap between materials engineering and design. 
We believe that the traditional approach to defining materials by chemistry ranges, manufacturing process ranges, and static mechanical property minima will migrate to model-based material definitions (MBMDs), due to the many benefits that result from this new capability. This paper reviews aspects of the challenges and opportunities of model-based engineering and model-based definitions.

Introduction

For over a century, the procurement of general industrial and aerospace products has relied on materials and process specifications. Specifications typically set a minimum level of requirements based on pragmatic chemistry control and/or mechanical properties, with minimal or subjective microstructural controls. These standards, product control documents, and testing data largely comprise procurement documents that ensure components produced from specified materials meet minimum design intent. Component production is enabled by design methods (with minimum property representation), quality inspection standards, and component testing and qualification procedures, yet all of these depend on basic documents aimed at minimizing programmatic and operational risks by “locking down” materials and processes, and therefore specific component material behavior and variation. Very often this approach is sufficient for the specific combination of design, material, process, and application. However, one consequence of this approach is a set of conservative design-value tables: static representations of a material’s worst-of-the-worst capability, derived by statistical analysis and applicable only to specific requirements for particular gauge ranges. Further, static material property minima apply only to the process ranges that were physically produced and tested; they cannot be extrapolated to account for process variations or changes. It is somewhat ironic that, although it is well established that composition and process produce a specific range of microstructures, and that such microstructures control mechanical properties, microstructure is often neither monitored nor controlled. This lack of focus on microstructure forces the design community into the traditional approach of collapsing natural property variations into a single minimum value. 
While practical, this simplification inhibits application of a material’s full capability and can lead to considerable suboptimization in the design process. It can also inherently limit component design to material conditions for which extensive test data have been generated. This contrasts sharply with other aspects of engineering for which digital methods are widely applicable at the design stage.

Unlike structural materials and processes engineering, nearly all other engineering design has converted to digital methods and model-based optimization. Physics-based methods, such as finite element analysis of structures and computational fluid dynamics, have enabled other engineering disciplines to adopt practices based upon digital representations. These are often referred to as Model-Based Engineering (MBE). Current MBE of components uses advanced optimization methods to ensure that the final component and system efficiently meet customer requirements. Parametric models are used to seek optimal configurations based on the range of objective functions for the parametric search and the features included in the parametric analysis. MBE uses models on an application-specific basis as an integral part of the engineering technical baseline that includes the requirements, analysis, design, implementation, and verification of a capability, system, and/or product throughout the product life cycle [1]. Unlike materials and processes engineering, MBE relies on digital representations, or a model-based definition (MBD), to define a product throughout design, manufacturing, and sustainment. Analogously, model-based materials definitions (MBMDs) are needed to provide parametric materials-based optimization capabilities to engineering for better achieving customer needs. However, the application of MBE in the materials domain will initially be limited to components whose criticality, complexity, or cost justifies the added expense.

In recognition of this shift, the US Department of Defense issued MIL-STD-31000A in 2013 to incorporate digitally-based, 3D Computer Aided Design (CAD) models of components [2]. However, even this revised standard, which supports post-production component procurement (spares and replacement parts), still relies on the traditional representation of materials information through material composition and associated process specifications. Unfortunately, model-based optimization of components is performed today with material properties represented as constants, set at the same minimum values across the entire spatial extent of a component. Refined optimization of components needs to include the ability to design, specify, and certify material requirements locally within a high-performance part (i.e., location-specific design), through which the entire capability of a material can be enabled and utilized.

In response, the nascent field of Integrated Computational Materials Engineering (ICME) and the Materials Genome Initiative (MGI) are moving the materials and manufacturing communities toward model-based materials representations [3, 4]. However, materials engineering to date has lacked the quantitative rigor, concerted community-wide actions, and practical methods of digital representation in an MBE environment. Other challenges include how to ensure design certifications are maintained and how supplier quality systems can adapt as digital approaches emerge. These elements cannot be lost in the endeavor to build materials standards upon digital MBMDs and are essential to speeding the development and deployment of materials while managing risk to system design, manufacture, and sustainment.

Material properties are functions of chemistry and of hierarchical microstructures stemming from processing. Microstructural features (e.g., grain size, precipitate morphology, crystallographic texture) are path-dependent state variables that depend upon the initial state and subsequent processing procedures. As such, the best metrics for material quality should be tied to chemistry and microstructure measurements. However, it is impractical to conduct detailed microstructural evaluations for the frequently occurring need of material lot-release testing. Additionally, not all aspects of the microstructural state are readily amenable to quantification in industrial production, nor are there established practices to manage spatially varying hierarchical microstructural information within engineering design systems. Current practices typically only sample chemistry and control the process, collect easy-to-evaluate mechanical properties (e.g., tensile strength, hardness), and either forgo microstructure metrics or use only limited ones. Said differently, current practice is largely a composition-process-properties paradigm. Although commonplace, this limiting paradigm adds cost, time, and risk to the product development value stream, since full-scale parts must be produced to establish capabilities. Further, the present paradigm does not yield a spatially resolved or model-based digital representation of the full material capability that could enhance design practices and be more easily transferred to other component design efforts.

At the same time, material performance requirements have increased in recent years as aerospace customers and certification agencies demand more reliability, durability, and safety. This has resulted in burdensome supplier qualification exercises and complicated document management systems. Consequently, it is time to consider a paradigm shift toward representing materials knowledge as a series of physics-based models that integrate material chemistry and process-path dependence to represent local microstructure, properties, and performance. Materials and process engineers need to devise a digital, model-based composition-process-structure-properties paradigm (i.e., a model-based materials definition, or MBMD) that offers efficiency and flexibility to design engineering, fully exploits conventional materials and processes, and enables proactive and practical use of digital processes such as additive manufacturing.

The Traditional Process for Representing Materials Information in Design and Manufacture

To better understand the essence and needs for an MBD materials and processes engineering paradigm, here we examine the current paradigm in more detail. Traditional document-based modes of sharing materials information have served both the scientific and engineering communities extraordinarily well. As a consequence, current modes of information generation and flow within materials science and engineering from discovery through development, scale-up, product design and qualification, manufacture and sustainment have changed little over the past decades. This process is depicted in Fig. 1 and highlights the well-defined boundaries between the stages in a material’s lifecycle.

The discovery stage of material development proceeds largely through evolutionary changes to materials that are already known, or through basic research in which new materials systems are explored. These efforts are driven extensively by Edisonian trial-and-error approaches that are time-consuming and costly. Simulation and predictive science tools are commonly used in the materials discovery stage, but they are far from being trusted at production scale, where most of the cost is incurred. Thus, once a new material has been investigated to the point where potentially advantageous property values are observed, it moves to the next stage of preliminary property development for engineering purposes. Typically, a series of sub-scale samples is produced and physically tested, enabling a larger number of property tests to be conducted to study variability and feasibility for a specific end use. These activities are conducted during the discovery and development stages shown in Fig. 1.

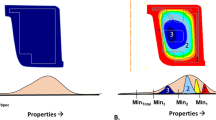

When a production application is identified and an adequate business case exists, a specific component or family of components is usually selected for application. Note that assessing the business case for implementing a given advancement may require considerable time, and the business case may itself evolve over time. Scale-up and production methods for a new material are usually based on knowledge of similar materials, which sets chemistry and process parameter targets and tolerance controls. Large numbers of mechanical property tests, usually on hundreds or thousands of coupons, are then carried out on production-representative component configurations. Figure 2 shows a schematic example of a full-scale component in which mechanical properties are measured throughout the part volume [5]. The color contours and the associated color-coded property distributions depict how material properties inherently vary as a result of component processing.

Fig. 2. Simulation of a forging showing the gradient of mechanical properties and the associated property distributions at specific locations. Traditional material definitions would assume that all locations have the same property, so all test values would be combined and statistically analyzed as one population, resulting in a very low minimum property capability. Analyzing material properties within individual zones results in greatly increased location-specific minimum properties.

If a new or modified material and/or process has been determined to be adequate to meet product requirements, prior supplier experience, supplier control capabilities, or a rigorous statistical process is used to empirically define the boundaries of chemistry and processing conditions in order to minimize material variability for design. Specifications may be issued from that information, either publicly, as in the case of the Aerospace Material Specifications (AMS) published by the Society of Automotive Engineers (SAE), such as AMS4928 or AMS4911 for wrought Ti-6w/o Al-4w/o V (Ti-6-4) forged products and rolled plate, or internally within companies and organizations as proprietary specifications. This provides a Quality System-based aspect to current material definitions. The specification typically contains allowable chemistry ranges, the process method, microstructure descriptors (in selected cases), inspection indication limits, and static surrogate property minima for a limited set of property types that stand in for the entire range of static and dynamic property design capabilities. It is important to note that the specification does not typically provide detailed process parameters such as temperature, strain, strain rate, number of passes, or recrystallization schedule. Rather, suppliers have some latitude to operate within their equipment capabilities and the broad aspects of the specification.
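To make the structure of such a traditional definition concrete, the Python sketch below represents a specification purely as chemistry ranges plus static property minima and checks one material lot against it. The class name `TraditionalSpec`, the element and property keys, and every numerical limit are hypothetical placeholders; they are not values from AMS4928, AMS4911, or any other published specification.

```python
from dataclasses import dataclass


@dataclass
class TraditionalSpec:
    """Hypothetical spec: chemistry ranges plus static property minima, nothing else."""
    chemistry_wt_pct: dict[str, tuple[float, float]]  # element -> (min, max)
    property_minima: dict[str, float]                  # property name -> minimum

    def check_lot(self, chemistry: dict[str, float], properties: dict[str, float]) -> list[str]:
        """Return nonconformances for one material lot; an empty list means it passes."""
        failures = []
        for element, (low, high) in self.chemistry_wt_pct.items():
            value = chemistry.get(element)
            if value is None or not low <= value <= high:
                failures.append(f"chemistry {element}={value}")
        for name, minimum in self.property_minima.items():
            value = properties.get(name)
            if value is None or value < minimum:
                failures.append(f"property {name}={value}")
        return failures


# Placeholder limits for illustration only; NOT taken from AMS4928, AMS4911, or any real spec.
spec = TraditionalSpec(
    chemistry_wt_pct={"Al": (5.0, 7.0), "V": (3.0, 5.0), "O": (0.0, 0.25)},
    property_minima={"UTS_MPa": 850.0, "elongation_pct": 8.0},
)
good_lot = spec.check_lot({"Al": 6.1, "V": 4.0, "O": 0.18}, {"UTS_MPa": 950.0, "elongation_pct": 12.0})
bad_lot = spec.check_lot({"Al": 6.1, "V": 4.0, "O": 0.30}, {"UTS_MPa": 950.0, "elongation_pct": 12.0})
```

Note what this record cannot express: where in a part a property applies, or what microstructure produced it. That is the gap the MBMD concept is meant to close.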

Most specification and design allowable documents establish compositional ranges and minimum static properties, with occasional special requirements such as fatigue or corrosion requirements. However, in an age where digital representation and transfer of information is the norm, efficiently extracting key microstructural and limiting parameters from certified material-lot samples remains formidable. Even if such methods existed, the sheer volume of data would overwhelm human capacity to distill sufficient information for decision support. Therefore, a disciplined approach to data management employing digital, machine-based methods and frameworks is essential to reach MBMD goals, and data management plans are important during both development and production deployment of materials. MBMDs, drawing on well-structured digital data, can and should be used to identify the critical test locations and test types needed to represent the entirety of a component and material capability.

Once material and process specifications have been established, a complete set of material property allowables is developed and captured in company-proprietary internal engineering documents. Within the jet engine industry, statistically based property allowables are developed, whereas within the airframe industry, mean properties are often used in conjunction with design safety factors and design life scatter factors to account for behavior below the mean. Sometimes the allowables are communicated in tabular or design-curve formats within industry-wide manuals, such as the Metallic Materials Properties Development and Standardization (MMPDS) manual or the Composite Materials Handbook, CMH-17 [6, 7]. Formalized design methods (e.g., allowable stress versus temperature, Larson-Miller curves, and Goodman diagrams) are then applied using these statistically determined minimum property capabilities for the new material. Importantly, the design and qualification processes are intentionally conservative and, for metallics, treat materials by continuum mechanics analysis based on expected allowable properties. Thus, in general, components are designed assuming uniform, minimum (“worst-of-the-worst”) properties throughout a component.
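The design methods named above have simple textbook forms. The sketch below shows the common Larson-Miller parameter, P = T(C + log10 t_r), with T in kelvin and t_r in hours (C ≈ 20 is a frequently used default), and the modified Goodman line σa/Se + σm/Su = 1. The function names and input values are illustrative assumptions; industrial design systems implement these methods with their own constants and statistically determined minima.

```python
import math


def larson_miller(temperature_k: float, rupture_hours: float, c: float = 20.0) -> float:
    """Larson-Miller parameter P = T * (C + log10(t_r)); C ~ 20 is a common default."""
    return temperature_k * (c + math.log10(rupture_hours))


def rupture_hours(p: float, temperature_k: float, c: float = 20.0) -> float:
    """Invert P to estimate rupture time (hours) at another temperature."""
    return 10 ** (p / temperature_k - c)


def goodman_allowable_alternating(mean_stress: float, endurance_limit: float,
                                  ultimate_strength: float) -> float:
    """Modified Goodman line: sigma_a / S_e + sigma_m / S_u = 1, solved for sigma_a."""
    return endurance_limit * (1.0 - mean_stress / ultimate_strength)


# Hypothetical inputs (K, h, MPa), not data for any real alloy.
p = larson_miller(800.0, 1000.0)          # 1000 h rupture life at 800 K
t_850 = rupture_hours(p, 850.0)           # same P at a higher temperature -> shorter life
sigma_a = goodman_allowable_alternating(200.0, 500.0, 1000.0)
```

In the traditional workflow described here, each strength input would be a single minimum value applied to the whole component, regardless of location.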

When selecting materials and specimens for property capability testing, one typically samples a wide range of spatial locations within a manufactured component. Differences in mechanical properties between samples obtained from disparate locations within a component volume are typically treated as variability, instead of as normal microstructure-dependent differences. For statistical purposes, this location-specific “scatter” within components is then analyzed as part of the single population used to establish minimum design-property values. This is the case presented in Fig. 2, where all test data are assumed to represent the same property capability as an integral part of the overall definition. In other words, designers have most often treated property variability across a component as aleatory uncertainty (inherent randomness), when it is more appropriately described as epistemic uncertainty (limited by our ability to describe it). Combining location-dependent material behavior into a single population of performance values adds scatter to the population and thus conservatism to the design allowables. Materials engineers are now able to reduce this epistemic uncertainty for location-specific design through predictive modeling. Pragmatic methods such as ‘gauge bracketing’ are often used to subdivide minimum properties for mill products (plates, sheets, bars, rods, etc.) based solely on product-form cross-sectional dimensions; this mitigates the issue but results in a chemistry-process-geometry-property-based material definition.
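The effect of pooling can be shown with a few lines of Python. The strength values below are synthetic numbers invented for illustration, and the lower bound is a deliberately simple mean minus three standard deviations; published allowables such as MMPDS A- and B-basis values use statistical tolerance bounds instead. The `lower_bound` helper and zone names are hypothetical.

```python
from statistics import mean, stdev

# Synthetic strength values (MPa) for three zones of one part; illustrative only.
zones = {
    "A": [900, 905, 910, 895, 900],
    "B": [950, 955, 960, 945, 950],
    "C": [1000, 1005, 1010, 995, 1000],
}


def lower_bound(values, k=3.0):
    """Simple mean - k*s stand-in for a statistical design minimum."""
    return mean(values) - k * stdev(values)


# Location-specific minima: each zone analyzed as its own population.
zone_minima = {name: lower_bound(values) for name, values in zones.items()}

# Traditional practice: every test treated as one population.
pooled = [x for values in zones.values() for x in values]
pooled_minimum = lower_bound(pooled)

for name, value in zone_minima.items():
    print(f"zone {name}: {value:.1f} MPa")
print(f"pooled: {pooled_minimum:.1f} MPa")
```

With these numbers the zone bounds are about 885, 935, and 985 MPa, while the pooled bound is about 824 MPa, roughly 60 MPa below even the weakest zone, because the between-zone differences are counted as random scatter.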

Limitations of the Traditional Requirements-Based Approach

Traditional verification of static material definitions includes material specifications and empirical design curves. Standard test methods are applied to enable pragmatic evaluation of a material to assess its equivalency to the materials used to generate the associated specification. ASTM International has established numerous methods for measuring chemistry, structure, and mechanical properties. These methods largely describe how to test and analyze material for compliance with material specifications. Note, however, that these methods are not yet well developed for obtaining property values by material zone, location, or component feature, especially when the zone or feature is smaller than standard test specimens. This current state is a barrier to better coupling testing with materials models. It becomes particularly troublesome given that many engineering structures are designed and manufactured from materials produced at dimensions smaller than those required for standardized ASTM tests (for example, turbine airfoil wall cross-sections).

Current quality control and material analysis methods provide only a level of comparison to ensure compliance of a material with a specification, which often includes nondestructive inspection requirements. Specifications often ignore microstructure, or place minimal requirements on parameters such as grain size or volume fraction of phases, typically as average values only. Single crystal turbine blades, because of their extreme performance requirements, are an exception to this practice. Almost universally, such parameters are obtained via traditional light microscopy and do not take advantage of the more sophisticated materials characterization capabilities developed over the last few decades. This limits microstructural feature resolution to around 1 micron. Consequently, statistical metrics of microstructural features, whose distribution extremes often control dynamic properties, are not commonly incorporated into industrial specifications and associated quality control requirements. To some degree, this is because single material specifications are used across multiple suppliers, industries, and applications of varying design criticality. Not all of these uses warrant the sophisticated characterization, changes to engineering workflows, and cost impacts that microstructure specification would typically demand. Thus, the status quo for material property and microstructure definitions does not include the physics-based mechanisms that truly drive mechanical property and component behavior. In large part, this is because the microstructural features and heterogeneities that drive material behavior occur at microscopic length scales or are complex, and require characterization techniques that are thought to be too time-consuming, are not readily available, and require specialized skills to apply and interpret.
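Why an average-only microstructure requirement can hide the features that matter is easy to see with synthetic data. The grain sizes below are invented for illustration (95 grains of 10 µm and 5 grains of 40 µm); the sketch simply compares the mean with an upper percentile of the same distribution.

```python
from statistics import mean, quantiles

# Synthetic grain-size measurements (micrometres); illustrative only.
grain_sizes_um = [10.0] * 95 + [40.0] * 5

average = mean(grain_sizes_um)                   # the kind of value a spec might cap
p99 = quantiles(grain_sizes_um, n=100)[98]       # 99th-percentile cut point
fraction_coarse = sum(d > 30.0 for d in grain_sizes_um) / len(grain_sizes_um)

print(f"average {average:.1f} um, 99th percentile {p99:.1f} um, "
      f"{fraction_coarse:.0%} of grains above 30 um")
```

The average (11.5 µm) sits close to the typical grain, while 5% of the grains are four times larger; a requirement written only on the average would not distinguish this material from a uniform 11.5 µm structure.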

Also, present-day specifications typically begin with a composition range and specified properties of the final produced material. Specifications for the process are only progressively refined as needed, so as to give materials producers and component suppliers as much freedom as possible to alter processes for cost control. However, Fig. 2 suggests that properties can vary for cause (physics-based mechanisms) tied to manufactured geometries and processing details, and that they vary spatially in a non-random way. The distribution of properties by location is shown below the forging shape, with each narrow distribution profile representing the behavior of a specific region. Note that there is significant variation in properties within both the forged shape and the final machined part. However, as noted previously, most current design practices sample property domains without regard to location, essentially assuming that all tested variation is random (though it is common to create “gauge breaks” or section-size criteria for regular mill product forms, where process controls are assumed to provide reproducible, spatially distributed properties within a section size). This aggregated sampling of properties can lead to a statistical representation that extends the tails of the aggregated property curve in an overly conservative fashion. If all material tests are assumed to be equivalent and the variations are attributed to random scatter, the result is underutilization of material capability and therefore sub-optimized component design.

Once a material with a traditional static, empirical definition is assigned to a new component design, the component is assumed to have the same set of minimum properties throughout its volume, often regardless of processing path or testing orientation. For some materials and processes, such as rolled sheet, anisotropy is represented by property measurements parallel and transverse to the rolling direction. Only rarely are more sophisticated measures of anisotropy, such as crystallographic texture, included in material definitions and design systems. The “homogeneous and isotropic” assumption also drives the testing and qualification process for new components. Locations deemed most demanding for a given application are often chosen for qualification testing. Test plans for a new component are then formalized and executed, and the equivalency of the tested material to the quality requirements, the specification, and ultimately the design curves is assessed. This method of obtaining materials definitions inherently ties the definitions to a specific application space and demands that they be re-established for every change in application space (component configuration or utilization environment). Traditional material definitions are simply neither flexible nor portable.

Traditional material definitions and the associated mechanical properties derived from coupons serve as surrogates for other material with the same chemistry and structure. For example, coupons from a sub-scale test article may not reproduce the structure present in a larger component made from the same material. This means that the material definition must include specific chemistry and structural feature descriptions that bound the application of the empirical test data and design curves. For materials applied to many component configurations, which result in manufacturing path differences, there is then a need to develop new, individual empirical design systems. In the MBMD approach, by contrast, models that cover the entire application space for the material can be established and applied to a wide range of components.

It is important to understand the further limitations that this traditional approach places on the range of the potential design or application space. Under this practice, the initially sampled scale-up component configurations or processing methods may not represent the design space of a final new component configuration. As such, simply applying qualification testing at selected experience-based locations to represent component challenges may miss potential challenges for the new component. Alternate processing methods that achieve equivalency to the original empirically based definition may also be missed, regardless of the demands the application places on specific locations. Standard test plans may also identify test locations that are of little use in discriminating material behavior, while locations with steep structure and property gradients are overlooked. To ensure conservatism, large numbers of test locations and quantities of tests are required to qualify new component configurations or processing methods used to produce components from a traditionally defined material. The number of tests required can range into the thousands, depending on the size and design criticality of the component. Models are needed to systematically define the test locations of greatest value in determining overall component and material equivalency. Such a formalized infrastructure and workflow has recently been demonstrated for the specification, modeling, and critical testing of bulk residual stresses developed and controlled during heat treatment quenching processes [8].
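One simple way a model could flag the steep-gradient locations that experience-based test plans may overlook is to rank points along a section by the magnitude of the predicted property gradient. The sketch below does this with a central difference on a hypothetical one-dimensional section; the positions, predicted values, and function name are assumptions for illustration, and the workflow of [8] is not reproduced here.

```python
def steepest_gradient_locations(x_mm, predicted, top_k=2):
    """Rank interior points of a 1D section by |central-difference gradient|."""
    scores = []
    for i in range(1, len(x_mm) - 1):
        gradient = (predicted[i + 1] - predicted[i - 1]) / (x_mm[i + 1] - x_mm[i - 1])
        scores.append((abs(gradient), x_mm[i]))
    scores.sort(reverse=True)  # steepest first
    return [x for _, x in scores[:top_k]]


# Hypothetical model-predicted strength (MPa) along a section through a forging.
positions_mm = [0, 10, 20, 30, 40, 50, 60]
predicted_mpa = [950, 948, 946, 900, 860, 858, 857]
candidates = steepest_gradient_locations(positions_mm, predicted_mpa)
print(candidates)
```

Here the screen points to 30 mm and 20 mm, where the predicted strength changes fastest, rather than to the flat regions near either end. A real plan would combine such a screen with stress, criticality, and uncertainty information.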

Employing a Model-Based Approach

Models for Material and Component Design

We know, in fact, that material properties are generally not uniform throughout a manufactured component; rather, they are path-dependent and location-specific. They result from process path-dependent physical mechanisms, such as local recrystallization, grain growth, precipitation and precipitate growth, or other phase transformations. It is proposed that location-specific definitions of material microstructure be applied to provide the chemistry-processing-structure-property relationship information needed for design, and that this information not be lost within an assumption of random scatter from sampling various regions of a part. This means that models for deriving material structure from processing, and for defining properties as a function of material structure, form the backbone of MBMDs. Figure 3 shows an example processing-structure-property-performance map that depicts these relationships and the associated model linkages. The models need to operate within simulation environments that track spatio-temporal variations in materials chemistry as well as location-specific variations in process history.
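A minimal sketch of the processing-to-structure-to-property chain is shown below, assuming two standard textbook relations: an isothermal grain-growth law, d^n - d0^n = k0·t·exp(-Q/RT) (processing to structure), and the Hall-Petch relation, σy = σ0 + k·d^(-1/2) (structure to property). All parameter values and function names are hypothetical and are not calibrated to Ti-6-4 or any other alloy; real MBMDs track many more structural features than a single grain size.

```python
import math

GAS_CONSTANT = 8.314  # J/(mol*K)


def grain_size_um(d0_um, hold_s, temperature_k, k0=1.0e8, activation_j_mol=2.0e5, n=2):
    """Isothermal grain growth, d^n - d0^n = k0 * t * exp(-Q / (R*T)); k0 in um^n/s."""
    growth = k0 * hold_s * math.exp(-activation_j_mol / (GAS_CONSTANT * temperature_k))
    return (d0_um ** n + growth) ** (1.0 / n)


def hall_petch_yield_mpa(d_um, sigma0_mpa=700.0, k_mpa_sqrt_um=300.0):
    """Hall-Petch relation, sigma_y = sigma0 + k / sqrt(d)."""
    return sigma0_mpa + k_mpa_sqrt_um / math.sqrt(d_um)


# Two hypothetical locations in one part: same 1 h hold, different local temperature.
grain_hot = grain_size_um(d0_um=10.0, hold_s=3600.0, temperature_k=1200.0)
grain_cool = grain_size_um(d0_um=10.0, hold_s=3600.0, temperature_k=1100.0)
yield_hot = hall_petch_yield_mpa(grain_hot)
yield_cool = hall_petch_yield_mpa(grain_cool)
print(f"hot: d={grain_hot:.1f} um, sy={yield_hot:.0f} MPa; "
      f"cool: d={grain_cool:.1f} um, sy={yield_cool:.0f} MPa")
```

With these placeholder values the hotter location coarsens to about 28 µm versus about 15 µm and receives a correspondingly lower predicted yield strength: two locations in the same part, with the same nominal chemistry, get different properties because their local process histories differ.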

Toward this end, DARPA sponsored a unique program in the early 2000s targeted at demonstrating the feasibility of decreasing the time needed for material development through the use of computational materials engineering tools and methods. The program, entitled Accelerated Insertion of Materials (AIM), successfully demonstrated the feasibility of using physics-based models to develop and optimize materials and associated components. An overall framework for linking processing-structure-property-performance parameters was established and further demonstrated by many researchers and organizations [9,10,11]. Figure 3 shows an example of an AIM design map established for an advanced steel [9]. This systematic approach to materials design enables the co-development of an MBMD that can be used within design, structural analysis, lifing, manufacturing, and quality control functions. The approach maps out the relationships that need to be represented within materials models or, where specific models do not exist, by measured materials data.

The transition from a static, empirically generated design minimum property to an MBMD will require advanced models and simulations of mechanical properties based on chemistry and spatio-temporal hierarchical materials structure (including heterogeneities or defect structure), together with their parameterization and validation protocols. Because structure is path-dependent, this approach requires accurate simulations that represent the location-specific structure resulting from manufacturing processes for the geometry or geometries being considered for the final component application, as well as the consequences of possible heterogeneities at those locations. Our ability to model and simulate thermomechanical processes has progressed rapidly over the past couple of decades, allowing reasonably high-fidelity prediction of spatially varying mechanical properties for specific well-characterized materials and processes. As an example, Figs. 2 and 4 depict the results of a forging simulation in which the color contours show the gradient of mechanical properties through the forging and final machined components [12, 13].

In Fig. 4, as in Fig. 2, a component’s location-specific properties have been simulated based on path-dependent evolution of structure. These contours of predicted mechanical properties were shown to be accurate and, within their level of uncertainty, can be applied to new component design optimization functions. These examples are for the nominal alloy composition of Ti-6-4, although the MBMD also accounts for critical elements such as oxygen, iron, and hydrogen. The MBMD provides predictive capabilities for this material based on chemistry and microstructure through simulation of manufacturing processing sequences, including forging and heat treatment. Unlike the AMS4928 specification, this MBMD enables prediction of unique location-specific properties. Design for Variation (DFV) methods [14] account for local minimum property variations (from both simulation predictions and measurements) based on uncertainty in chemistry and processing conditions (Fig. 5). This type of MBMD can also help explore alloy chemistry sensitivity and can aid in setting specific chemistry specifications. Figure 6 shows the sensitivity of the mechanical properties of a nominal Ti-6-4 alloy to variations in specific alloying elements. Advanced mechanical properties have been successfully modeled as a function of microstructure, as shown in Fig. 7 [15].
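The idea of deriving a local minimum from uncertainty in chemistry and processing can be illustrated with a generic Monte Carlo propagation. This is not the DFV methodology of [14]; it is a minimal sketch assuming a hypothetical linear surrogate for local strength, normally distributed oxygen content and process-temperature deviation, and the 1st percentile of the simulated local population as the "local minimum".

```python
import random
from statistics import mean, quantiles


def strength_surrogate_mpa(oxygen_wt_pct: float, temperature_offset_k: float) -> float:
    """Hypothetical linear surrogate for local strength; coefficients are placeholders."""
    return 900.0 + 800.0 * (oxygen_wt_pct - 0.15) - 0.5 * temperature_offset_k


rng = random.Random(42)  # fixed seed for reproducibility
samples = [
    strength_surrogate_mpa(
        rng.gauss(0.15, 0.01),   # assumed oxygen variation between heats (wt%)
        rng.gauss(0.0, 10.0),    # assumed local process-temperature deviation (K)
    )
    for _ in range(10_000)
]
local_mean = mean(samples)
local_minimum = quantiles(samples, n=100)[0]  # 1st percentile as the local minimum
print(f"local mean {local_mean:.1f} MPa, local 1st percentile {local_minimum:.1f} MPa")
```

Because the surrogate is linear, the output standard deviation is about sqrt(8² + 5²) ≈ 9.4 MPa, so the 1st percentile lands near 878 MPa. Repeating this at every location of a simulated component would give a spatial map of local minima rather than one global value.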

Modeling tools can enable assessment of mechanical property sensitivities to specific alloy chemistry. In this example for a nominal Ti-6-4 alloy, alloy composition has a significant impact on component properties. Iron and oxygen contents are varied independently, with all other elements and material conditions held at nominal values.
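The one-factor-at-a-time study described above (vary one element, hold everything else at nominal) can be sketched in a few lines. The strength model below is a hypothetical linear placeholder with made-up coefficients, not the Ti-6-4 MBMD or the data behind Fig. 6; in practice `model` would wrap a calibrated chemistry-microstructure-property simulation.

```python
from typing import Callable, Dict, List, Tuple

Inputs = Dict[str, float]


def one_at_a_time_sweep(
    model: Callable[[Inputs], float],
    nominal: Inputs,
    factor: str,
    values: List[float],
) -> List[Tuple[float, float]]:
    """Vary a single input over `values`, holding all others at nominal.

    Returns (input value, model response) pairs.
    """
    results = []
    for value in values:
        inputs = dict(nominal)  # copy so the nominal state is never mutated
        inputs[factor] = value
        results.append((value, model(inputs)))
    return results


# Hypothetical placeholder model: coefficients are illustrative only,
# NOT measured Ti-6-4 sensitivities.
def toy_strength_mpa(x: Inputs) -> float:
    return 800.0 + 100.0 * x["O_wt_pct"] + 50.0 * x["Fe_wt_pct"]


nominal = {"O_wt_pct": 0.15, "Fe_wt_pct": 0.20}
for element in ("O_wt_pct", "Fe_wt_pct"):
    print(element, one_at_a_time_sweep(toy_strength_mpa, nominal, element, [0.10, 0.20, 0.30]))
```

A one-at-a-time sweep cannot reveal interactions between elements; a factorial or Monte Carlo design over several elements at once would be needed for that.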

The use of physics-based material and process models to define and predict the properties of a volume of material within a component design allows a material's capability to be optimized and fully used, and provides direct guidance on critical test locations for validation and qualification, that is, model-guided testing. DFV methods have been selectively applied in proprietary applications to enable engineered selection of test locations that provide the most useful information about capability and equivalency [8]. This approach also minimizes non-value-added testing, in which test results neither critically assess the MBMD nor support further uncertainty quantification of the predicted location-specific component properties.
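One simple way to make test-location selection model-guided is to rank candidate locations by the predicted probability that the local property falls below the design minimum, using the predictive mean and standard deviation supplied by the MBMD. The sketch below assumes normally distributed predictions; it is a generic heuristic, not the proprietary DFV methodology cited above, and the location names and values are hypothetical.

```python
import math
from typing import List, Tuple


def prob_below(mean: float, std: float, minimum: float) -> float:
    """P(property < minimum) for a normal predictive distribution."""
    z = (minimum - mean) / std
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def rank_test_locations(
    predictions: List[Tuple[str, float, float]],  # (location id, predicted mean, predicted std)
    design_minimum: float,
    k: int,
) -> List[str]:
    """Return the k locations most likely to fall below the design minimum."""
    ranked = sorted(
        predictions,
        key=lambda p: prob_below(p[1], p[2], design_minimum),
        reverse=True,
    )
    return [location for location, _, _ in ranked[:k]]


# Hypothetical predictions (MPa) for three zones of a disk-like forging.
predictions = [("bore", 950.0, 20.0), ("web", 900.0, 15.0), ("rim", 920.0, 40.0)]
print(rank_test_locations(predictions, design_minimum=880.0, k=2))
```

Here the rim ranks ahead of the web despite its higher predicted mean because its prediction is less certain. Other criteria, such as largest predictive variance or expected reduction in model uncertainty, are equally reasonable choices.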

Importantly, the models used to describe the path-dependent evolution of microstructure during processing, and the mechanical properties that result from that microstructure, should be mechanism-based [16, 17]. Thus, physics-based models must form the foundation of MBMDs. A mechanistic basis establishes the limits of model applicability and indicates when to transition to other types of models as the operating environments explored in design impose different mechanistic conditions on the materials. For example, a model for athermal strength would be of little value outside the low-temperature range in which the mechanisms it represents actually operate; at higher temperatures, models based on thermally activated creep mechanisms must be employed. This requires a detailed understanding of the operative mechanisms, especially within the context of the model that will represent them. This is where multi-scale modeling comes into play: multi-scale models are needed to describe and establish an understanding of the underlying mechanisms. Some of these may require first-principles modeling [18, 19], while others may be built at the meso- and macro-scale. Once the operative mechanisms are understood to an acceptable level of uncertainty, surrogate models or response surfaces may be employed to support computationally efficient MBE at the component level.
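Once a mechanistic model is trusted over a domain, a response surface can stand in for it during component-level optimization. The sketch below fits a full quadratic surface in two normalized inputs by least squares with NumPy and checks its error on held-out points. `expensive_model` is a hypothetical analytic stand-in for a costly simulation, and the quadratic form is only one of many possible surrogates (Gaussian processes and polynomial chaos expansions are common alternatives).

```python
import numpy as np


def quadratic_features(x: np.ndarray) -> np.ndarray:
    """Full quadratic basis in two inputs: 1, x1, x2, x1^2, x1*x2, x2^2."""
    x1, x2 = x[:, 0], x[:, 1]
    return np.column_stack([np.ones_like(x1), x1, x2, x1**2, x1 * x2, x2**2])


def fit_response_surface(x: np.ndarray, y: np.ndarray) -> np.ndarray:
    """Least-squares coefficients of the quadratic surface."""
    coeffs, *_ = np.linalg.lstsq(quadratic_features(x), y, rcond=None)
    return coeffs


def predict(coeffs: np.ndarray, x: np.ndarray) -> np.ndarray:
    return quadratic_features(x) @ coeffs


# Hypothetical stand-in for an expensive mechanistic simulation, inputs normalized to [-1, 1].
def expensive_model(x: np.ndarray) -> np.ndarray:
    return np.exp(0.3 * x[:, 0]) + 0.5 * x[:, 1] ** 2 - 0.2 * x[:, 0] * x[:, 1]


rng = np.random.default_rng(0)
x_train = rng.uniform(-1.0, 1.0, size=(40, 2))
coeffs = fit_response_surface(x_train, expensive_model(x_train))

# Check the surrogate on held-out points inside the sampled domain.
x_test = rng.uniform(-1.0, 1.0, size=(200, 2))
max_err = np.max(np.abs(predict(coeffs, x_test) - expensive_model(x_test)))
print(f"max surrogate error on held-out points: {max_err:.4f}")
```

The held-out check matters because a surrogate is only meaningful inside the input domain it was sampled on, which mirrors the mechanistic applicability limits discussed above.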

Location-specific control of chemistry and microstructure will ultimately be the future of MBMDs, in which the features that control damage generation and, to the degree possible, ultimate failure of a component are contained within the model representations. Some of these features may be at the macro-scale (macro-texture), meso-scale (grain flow), micro-scale (grain, precipitate, and defect structures), or atomistic scale (grain boundary elemental segregation or compositionally dependent anti-phase boundary (APB) energies) [18–20]. Location-specific design and manufacturing control can be, and is being, linked with probabilistic lifing tools and component application-level predictions [21]. Linking MBMDs with the tools and methods of the component design discipline will maximize the use of material capabilities and enable optimal component designs and performance.

The materials engineering discipline is rapidly advancing toward a physics-based understanding of many materials and metallurgical phenomena, which are in turn being expressed as models that will become the backbone of the MBMD paradigm. Because the materials community has long communicated materials behavior and property capability to other engineering disciplines through empirical testing and reporting, this new approach of providing the design and structural analysis communities with material definitions in the form of models will likely meet initial reluctance and skepticism. To overcome this and enable MBMD integration into other engineering disciplines, the materials community will need to speak the language of verification, validation, and uncertainty quantification of models, computational codes, and output predictions.

Verification and validation (V&V) are critical elements of MBMD and must be woven into the process of developing, communicating, and using models that will be incorporated into materials definitions. Naturally, V&V protocols need to be tailored to the level of system risk that each component and model carries, in order to manage costs within the MBMD framework. Such tailoring demands input from experts in both the materials and design engineering disciplines. Uncertainty quantification is a critical element of V&V; this systematic approach focuses attention on the critical, highly sensitive inputs required for successful application of such models. The methodology for careful verification and validation of software and models has been described previously [22] and will help the broader engineering community understand these models. All models, regardless of sophistication and fidelity, have finite applicability for particular kinds of designs and design attributes, and for each of these, a critical indicator of applicability is the quantified model uncertainty. Thus, the MBMD must contain formal uncertainty quantification methods, and knowing and understanding this at the outset is critical.
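Monte Carlo sampling is one of the simplest formal methods for propagating input uncertainty (for example, chemistry scatter within a specification range) through a property model. The sketch below assumes independent, normally distributed inputs and a hypothetical linear placeholder model; because the model is linear, the output mean (825) and standard deviation (2.5) can be checked analytically. The 1st percentile is shown only as an illustrative lower-tail statistic: statistically based design allowables also attach a confidence level to the percentile estimate, which this sketch omits.

```python
import numpy as np


def propagate_uncertainty(model, means, stds, n_samples=100_000, seed=0):
    """Monte Carlo propagation of independent normal input uncertainty.

    Returns (mean, standard deviation, 1st percentile) of the model output.
    """
    rng = np.random.default_rng(seed)
    samples = rng.normal(loc=means, scale=stds, size=(n_samples, len(means)))
    outputs = model(samples)
    return outputs.mean(), outputs.std(ddof=1), np.percentile(outputs, 1.0)


# Hypothetical linear property model in (O wt%, Fe wt%); coefficients are placeholders.
def toy_model(x: np.ndarray) -> np.ndarray:
    return 800.0 + 100.0 * x[:, 0] + 50.0 * x[:, 1]


mean, std, p01 = propagate_uncertainty(toy_model, means=[0.15, 0.20], stds=[0.02, 0.03])
print(f"mean={mean:.2f}  std={std:.3f}  1st percentile={p01:.2f}")
```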

Qualification of MBMD approaches, and of components designed and manufactured using them, will rely heavily on an understanding of the capabilities of the models that make up the model-based definition. Industry requirements and standards will require that MBMD systems match or improve upon current levels of uncertainty and probabilistic risk. Physics-based understanding of materials and the associated validated models will have an advantage over traditional empirical methods for establishing design minima, and can further support intelligent design of component testing and monitoring processes that focus on the critical tests and locations that most readily establish material equivalency and component acceptability. The US Department of Defense and the Federal Aviation Administration are assessing and supporting the use of model-based engineering tools, as they have for probabilistic lifing methods.

Engineering Interoperability and Materials-Based Engineering Optimization

As MBMDs are established and used, parametric optimization of component designs will include location-specific properties. Through models, this approach directly links component configuration to potential capability of the component and local property capabilities, thus permitting materials capability and true variation to enter the design optimization and MBE. The goal is to obtain local property predictions having quantified uncertainty, and accurate analyses that represent how the component configuration and processing path control material behavior and component performance [23]. As material properties are path dependent, ICME will fully couple manufacturing methods into the design process and enable further advantages to be gained from advancing manufacturing processing methods and controls, which are largely left suboptimal today. MBMD enables complete materials, design, and manufacturing engineering interoperability, as well as materials-based parametric optimization within the paradigm. That is a marked change from the relatively separated engineering domains of today.
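A toy comparison makes the benefit of location-specific properties concrete. With a traditional uniform minimum, the most highly stressed location is checked against the weakest material anywhere in the part; with location-specific allowables, each location is checked against its own predicted capability. All stresses and allowables below are hypothetical, the margin of safety omits the factors of safety a real analysis would apply, and taking the uniform minimum as the smallest local value is a simplification of how statistically based design minima are actually derived.

```python
from typing import Dict


def margins_of_safety(applied: Dict[str, float], allowable: Dict[str, float]) -> Dict[str, float]:
    """Margin of safety MS = allowable / applied - 1 at each location (no extra factors)."""
    return {loc: allowable[loc] / applied[loc] - 1.0 for loc in applied}


# Hypothetical applied stresses and location-specific allowables (MPa).
applied = {"bore": 700.0, "web": 500.0, "rim": 450.0}
local_allowable = {"bore": 900.0, "web": 820.0, "rim": 780.0}

# Traditional definition: one uniform minimum (the weakest location) applies everywhere.
uniform_min = min(local_allowable.values())
uniform_allowable = {loc: uniform_min for loc in applied}

ms_local = margins_of_safety(applied, local_allowable)
ms_uniform = margins_of_safety(applied, uniform_allowable)
print("critical MS, location-specific:", round(min(ms_local.values()), 3))
print("critical MS, uniform minimum:  ", round(min(ms_uniform.values()), 3))
```

In this example the critical (bore) margin more than doubles when local capability is credited, which is the kind of underutilized capability that parametric optimization with MBMDs could exploit.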

Further, aerospace component design and life-assessment (lifing) methods include probabilistic methods, which can readily incorporate MBDs into the current framework. Engineering software tools such as DARWIN can incorporate location-specific material properties and their associated uncertainties to support more refined probabilistic predictions of component and system performance [24].
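As a simplified illustration of how location-specific properties and their uncertainties feed a probabilistic prediction, the sketch below divides a component into zones, computes each zone's failure probability from a stress-strength interference model, and combines the zones as a series system. DARWIN itself is a probabilistic fracture mechanics code, so this is a deliberately simpler stand-in, not its methodology; it assumes independent zones, normally distributed local strength, and hypothetical zone values.

```python
import math
from typing import List, Tuple


def zone_failure_probability(stress: float, strength_mean: float, strength_std: float) -> float:
    """P(strength < stress) for a normally distributed local strength."""
    z = (stress - strength_mean) / strength_std
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def component_failure_probability(zones: List[Tuple[float, float, float]]) -> float:
    """Series system of independent zones: P_f = 1 - prod(1 - p_i)."""
    return 1.0 - math.prod(1.0 - zone_failure_probability(*zone) for zone in zones)


# Hypothetical zones: (applied stress, local strength mean, local strength std), MPa.
zones = [(700.0, 900.0, 60.0), (500.0, 820.0, 50.0), (450.0, 780.0, 80.0)]
print(f"component P_f = {component_failure_probability(zones):.2e}")
```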

Not only can an MBMD specify location-specific properties via simulation, but recent focused projects also support MBD through spatially resolved physical measurements. One example is the US Air Force-sponsored Foundational Engineering Problems (FEP) program, in which project efforts are demonstrating the ability to spatially distribute specific properties throughout a component volume in support of MBMD [8]. In this example, bulk residual stress is the material-based feature being predicted on a location-specific basis, with associated uncertainty quantification and with predictions supported by location-specific measurements.
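When location-specific predictions carry quantified uncertainty, a basic validation check compares each measurement with its prediction in units of the predicted standard deviation. The sketch below computes these standardized residuals and the fraction falling within ±k sigma; for well-calibrated, normally distributed errors, about 95% should fall within ±2 sigma. This is a generic check, not the FEP program's validation protocol, and the residual stress values are hypothetical.

```python
from typing import List, Tuple


def validation_summary(
    pred_mean: List[float], pred_std: List[float], measured: List[float], k: float = 2.0
) -> Tuple[List[float], float]:
    """Standardized residuals z_i = (measured - predicted) / sigma and the fraction with |z| <= k."""
    z = [(m - mu) / s for mu, s, m in zip(pred_mean, pred_std, measured)]
    coverage = sum(abs(zi) <= k for zi in z) / len(z)
    return z, coverage


# Hypothetical residual stress predictions and measurements (MPa) at three locations.
z, coverage = validation_summary([100.0, -50.0, 20.0], [10.0, 10.0, 5.0], [105.0, -80.0, 22.0])
print(z, coverage)
```

A location with a large standardized residual, such as the second one here, is a natural target for further measurement or model refinement.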

Finally, integrating MBMD into the design community has been a challenge in the past. However, recent focused efforts on structural and topology optimization, and on associated design tools targeted at advanced manufacturing methods such as additive manufacturing, have opened the door to highly linked, multi-objective optimization capabilities. These capabilities can readily incorporate materials and manufacturing models and the associated path-dependent structure and property models. Many efforts in additive manufacturing today actually employ MBMD through the design of materials and components for additive manufacturing methods, with model-based tools applied to the optimization of both material and component properties. Whether the material definition includes deposition defect prediction and its impact on mechanical properties, or predictions of the residual stresses built into a component by a specific additive manufacturing process, models are being used to guide material and component design and the control and monitoring of component manufacturing operations. For example, it is possible to predict the formation of various types of void features, which in turn enables optimization of the processing path to eliminate unwanted voids. The same design tools and approaches established for additive manufacturing can and will be applied to conventional and emerging processes with legacy and next-generation materials.

Model-Based Materials Definition in Sustainment Engineering

Sustainment engineering is a critical area of systems engineering and product life-cycle management. Understanding and defining component and system life is critical for all product forms and depends largely on the application space for which the component was designed and the material from which it was produced. Initiatives such as mission life extension and retirement for cause (RFC) aim to expand the application space (time or durability) of fielded components and systems. Several pieces of information are required to arrive at optimal sustainment engineering analyses for operational aircraft. First is the as-manufactured state at the component level in terms of the chemistry-processing-structure-property-performance paradigm. Second is a representation of the time-resolved operational environment experienced by the component; the acronym “TEST” provides a convenient summary of the relevant parameters: Temperature, Environment, Stress, and Time. Third is a description of the current material/component state due to operational use, including inspection indications found during maintenance and the decisions made about them. Fourth is a relevant MBMD for the material, manufacturing process, and application. Just as MBMDs enable spatial resolution and certification of component properties, they will also enable temporal resolution and evaluation of the consequences of deviations from the initial state that develop during service. That is, how will location-specific properties change as a function of mission time and environment? Use of an MBMD will enable identification of critical properties and critical locations within components that require defined periodic inspections, along with mission cycle monitoring, to establish an integrated understanding of local life capability.
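To show how location-specific material definitions and a time-resolved usage record could combine into local life tracking, the sketch below applies the Palmgren-Miner linear damage rule with a power-law (Basquin-form) S-N curve at each location. Miner's rule is swapped in here as a familiar, simple illustration; the paper does not prescribe it. The sketch uses only the Stress and Time (cycles) parts of TEST, ignoring temperature and environment, and the S-N constants, mission history, and 0.5 inspection threshold are all hypothetical.

```python
from typing import Dict, List, Tuple


def cycles_to_failure(stress_amplitude: float, c: float, m: float) -> float:
    """Power-law S-N curve (Basquin form): N_f = C * S^(-m)."""
    return c * stress_amplitude ** (-m)


def miner_damage(
    segments: List[Tuple[float, int]],  # (stress amplitude, applied cycles) per mission segment
    c: float,
    m: float,
) -> float:
    """Palmgren-Miner linear damage sum D = sum(n_i / N_i)."""
    return sum(n / cycles_to_failure(s, c, m) for s, n in segments)


# Hypothetical location-specific S-N constants from an MBMD (not real material data).
locations: Dict[str, Tuple[float, float]] = {"bore": (1.0e12, 3.0), "rim": (5.0e11, 3.0)}

# Hypothetical mission history at each location: (stress amplitude, cycles).
history = {"bore": [(100.0, 1000), (50.0, 4000)], "rim": [(80.0, 2000)]}

for loc, (c, m) in locations.items():
    d = miner_damage(history[loc], c, m)
    print(loc, f"D = {d:.4f}", "inspect" if d > 0.5 else "ok")  # 0.5: hypothetical threshold
```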

In the US Department of Defense, a Technical Data Package (TDP) is often used to capture and convey product detail. Its electronic drawings provide the shape of the component and are annotated with additional metadata “necessary to provide the design, engineering, manufacturing, inspection, packaging and quality assurance provisions information necessary to enable the procurement or manufacture of an item” [25]. The most recent definition of a TDP, in MIL-STD-31000A, provides for the representation of components using 3D digital solid models. However, the TDP definition is built on a traditional, document-based view of materials that treats components as having uniform minimum mechanical properties throughout.

Given a change in how we envision the description of materials, the current TDP structure is insufficient to fully transmit the detailed digital information needed to describe a material for remanufacture or sustainment. For example, industry is beginning to rely in earnest on modeling of material behavior to inform design and sustainment engineering. Lockheed Martin and Alcoa recently demonstrated the ability to model, with the requisite fidelity, the residual stresses created in a large and complex aluminum forging through its various processing steps [26]. The resulting model-based 3D residual stress profiles are integral to the analysis of these components by design, manufacturing, and sustainment engineers. Under the current TDP paradigm, however, this information might be included only optionally, as supplemental material within the documentation, rather than within the core digital definition of the component. Furthermore, under current practice there are no formal frameworks or standards for relating digital models of various types to each other or to the engineered component itself.
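To make the missing "relating digital models to each other and to the component" concrete, the sketch below shows one possible data model: each serialized part carries a geometry reference plus a set of model artifacts, each of which records the upstream models it was derived from, so the full lineage of, for example, a residual stress map can be traced. The schema, class names, and field names are hypothetical illustrations; they are not taken from MIL-STD-31000A or any existing standard.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass(frozen=True)
class ModelArtifact:
    """One digital model tied to a component (hypothetical schema)."""
    model_id: str
    kind: str                            # e.g. "forging_sim", "residual_stress" (illustrative labels)
    version: str
    derived_from: Tuple[str, ...] = ()   # ids of upstream models whose outputs this model consumes


@dataclass
class ComponentDefinition:
    """Digital definition of one serialized part: geometry reference plus linked models."""
    part_number: str
    serial_number: str
    geometry_ref: str                    # pointer to the 3D solid model in the TDP
    models: List[ModelArtifact] = field(default_factory=list)

    def lineage(self, model_id: str) -> List[str]:
        """Return model_id followed by every upstream model it depends on (depth-first)."""
        by_id = {m.model_id: m for m in self.models}
        seen: List[str] = []
        stack = [model_id]
        while stack:
            current = stack.pop()
            if current in seen:
                continue
            seen.append(current)
            stack.extend(by_id[current].derived_from)
        return seen


part = ComponentDefinition(
    part_number="PN-0001", serial_number="SN-42", geometry_ref="cad/pn-0001.step",
    models=[
        ModelArtifact("forge", "forging_sim", "1.0"),
        ModelArtifact("heat_treat", "heat_treat_sim", "1.2", ("forge",)),
        ModelArtifact("rs_map", "residual_stress", "2.0", ("heat_treat",)),
    ],
)
print(part.lineage("rs_map"))
```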

Current structural maintenance practices in the DoD are generally based on a measure of engine operating cycles, meaning maintenance is performed at set intervals largely regardless of how aggressively the aircraft is flown. The emerging concepts of Digital Twin and Digital Thread seek to build model-based engineering tools and a supporting digital infrastructure, respectively, to provide the data and models needed to shift the maintenance paradigm to one based on actual use [27]. These emerging concepts for total system lifecycle management add to the drivers for MBMDs. With access to a model-based description of a material, a part serial number, and digital thread data on aircraft use, sustainment engineers would be able to rapidly and accurately assess the effects of damage or mission profile changes on component health. Additionally, operational systems could serve as flying laboratories, feeding crucial performance data back to materials and design engineers to validate lifing models or provide new insights for improvements [28].

Technical Challenges to Achieving a Model-Based Definition of Materials

Material Volume Elements

Although MBMD can be applied to discrete, specific small volume elements, it is believed to be most practical, and potentially more appropriate, to establish Statistically Equivalent Representative Volume Elements (SERVEs) [29]. SERVEs allow small volume elements to represent entire zones (larger volumes) of components that are deemed to have statistically equivalent material microstructure and response. SERVE methods are grounded in objective, quantitative definitions of materials structure: they develop performance models specifically tied to those structure definitions and couple the responses of that structure to simulations of the SERVE response. Through the use of SERVEs and direct numerical simulation, the properties of a given part zone are tied to the distribution of all microstructural features included in the SERVE, rather than only to specific scalar microstructure descriptors such as average grain size or phase volume fraction. The specific microstructure descriptors linked to the associated failure mechanisms for the specific material and application must be accounted for within each SERVE. The established mechanism-based materials behavior models provide guidance toward the iso-property structure definitions that make up each SERVE for a material.
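A common way to size a representative volume is to grow a sampling window until the statistic of interest stops varying appreciably from window to window. The sketch below applies this idea to a single statistic (phase volume fraction) on a synthetic image of uncorrelated pixels, returning the smallest window whose coefficient of variation across randomly placed windows is below a tolerance. Real SERVE construction [29] uses richer descriptors tied to failure mechanisms (size, shape, orientation, n-point statistics); the image, candidate sizes, and tolerance here are hypothetical choices.

```python
from typing import Optional, Sequence

import numpy as np


def serve_window_size(
    micro: np.ndarray, sizes: Sequence[int], tol: float, n_windows: int = 200, seed: int = 0
) -> Optional[int]:
    """Smallest square window whose phase volume fraction has a coefficient of
    variation below `tol` across randomly placed windows; None if none qualifies."""
    rng = np.random.default_rng(seed)
    ny, nx = micro.shape
    for s in sizes:
        fractions = np.empty(n_windows)
        for k in range(n_windows):
            i = rng.integers(0, ny - s + 1)  # top-left corner, window stays inside the image
            j = rng.integers(0, nx - s + 1)
            fractions[k] = micro[i:i + s, j:j + s].mean()
        cv = fractions.std(ddof=1) / fractions.mean()
        if cv < tol:
            return s
    return None


# Hypothetical synthetic microstructure: uncorrelated pixels, 30% second phase.
micro = np.random.default_rng(1).random((512, 512)) < 0.3
print(serve_window_size(micro, sizes=[4, 8, 16, 32, 64], tol=0.06))
```

For uncorrelated pixels the coefficient of variation falls roughly in proportion to 1/s; real microstructures with spatial correlation (clustering, micro-texture) converge more slowly, which is exactly what a SERVE analysis is meant to capture.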

For SERVE methods and MBMD to succeed within an engineering standard practice or protocol, testing methods and requirements need to be adapted for the specific purposes of model parameterization, validation, and uncertainty quantification [22, 23]. This differs somewhat from today's experimental requirements and protocols. In today's common practice, for example, experiments are often designed to evaluate selected challenging aspects of component designs; within MBMD, by contrast, experiments need to be designed to evaluate selected challenging aspects of models and SERVEs. That requirement changes the experimental plan, the materials and specimen preparation, and the instrumentation used. The payoff of the SERVE approach, however, is that the SERVE (models, structure definitions, and response tests) becomes portable to any component designed within the validated domain of the SERVE. Thus, the material is no longer defined only by its use in a specific application. Rather, the material is defined by its validated model/SERVE framework; hence, it is a model-based definition. Physical property testing of material during initial development provides validation of the model-based definitions and establishes initial uncertainty bounds for simulation-based predictions. Further use of the MBMD and SERVE in successful engineering designs captures quality data from engineered test locations and provides the opportunity to further enhance the MBMD through Bayesian updating, which in turn can further reduce prediction uncertainty [30].
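Bayesian updating can take many forms. The simplest, sketched here under stated assumptions, treats the model's systematic bias at a location as an unknown with a normal prior and updates it from the residuals (measured minus predicted) obtained at engineered test locations, assuming known, independent, normally distributed measurement scatter. The conjugate normal-normal update then has a closed form. The prior, scatter, and residual values are hypothetical.

```python
import math
from typing import List, Tuple


def update_bias(
    prior_mean: float, prior_std: float, residuals: List[float], noise_std: float
) -> Tuple[float, float]:
    """Conjugate normal-normal update of a model bias term.

    residuals: measured minus predicted values at engineered test locations.
    noise_std: known measurement scatter (assumed normal and independent).
    Returns the posterior mean and standard deviation of the bias.
    """
    precision = 1.0 / prior_std**2 + len(residuals) / noise_std**2
    mean = (prior_mean / prior_std**2 + sum(residuals) / noise_std**2) / precision
    return mean, math.sqrt(1.0 / precision)


# Hypothetical: prior bias 0 +/- 20 MPa, three production test residuals with 10 MPa scatter.
post_mean, post_std = update_bias(0.0, 20.0, [12.0, 8.0, 10.0], 10.0)
print(f"bias = {post_mean:.2f} +/- {post_std:.2f} MPa")
```

With these numbers the bias uncertainty shrinks from 20 MPa to about 5.5 MPa after three tests; each further batch of production data can be folded in the same way, using the current posterior as the next prior.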

Quantifying Structure

Within the chemistry-processing-structure-property-performance paradigm, a key barrier remains: the quantitative description of a material's internal structure needed to link processing and properties mathematically through models. However, new quantitative methods for mathematically describing microstructure have recently been developed or are under study, for example by Niezgoda, Kalidindi, and Groeber and Jackson [31,32,33,34]. These methods make it possible to represent microstructural features at all relevant scales, from Guinier-Preston zones to majority phases, grain size, and crystallographic texture. One approach employs n-point correlation functions to describe features ranging from nanometers to millimeters in size, which in turn requires integrating multiple characterization methods. Such a function has been described as [33]:

Microstructure Function:

Influence Function:

In addition, over the past two decades, 3D microstructural characterization has begun to have an impact on model building and predictions of materials performance [35]. These techniques, along with new enabling software tools such as DREAM.3D, are being developed for automated 3D microstructural feature analysis, providing a means to structure and analyze multi-modal, hierarchical, spatial microstructural and property information [34]. These new tools seek to provide the quantitative means for the explicit representation of microstructure and location-specific properties needed for design. Within DREAM.3D, the experimental data from measured microstructures, the tools for processing the microstructure data, the tools for repeatedly and objectively quantifying and analyzing the microstructure, and the tools for representing the microstructure as a SERVE all come together within a hierarchical digital environment. Figure 8 shows examples of the quantitative microstructural analysis that is used regularly to characterize material and to enable linkage to structure-property models in a probabilistic manner. Although these software tools and capabilities are still in their infancy, they are key enablers of the MBMD approach. For full implementation of the MBMD approach, therefore, the materials and structures disciplines will need to work together to understand the tools and their limitations, to develop specifications and standards around them, and to fully manage their use in the engineering design system.

Example of statistically relevant microstructure characterization of a Ti-6-4 alloy with respect to the special characteristics of micro-textured regions (MTRs) that have a mechanistic impact on material properties. Specific features must be quantified to capture the statistics that successfully describe MTRs.
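The n-point statistics mentioned above are commonly computed with fast Fourier transforms. As a minimal sketch, and not a reconstruction of the specific microstructure and influence functions of [33], the periodic two-point autocorrelation S2(r) of a binary phase map can be computed as follows with NumPy. S2(0) equals the phase volume fraction; real, non-periodic data would need padding or a masked formulation, which this sketch omits.

```python
import numpy as np


def two_point_autocorrelation(micro: np.ndarray) -> np.ndarray:
    """Periodic two-point autocorrelation S2(r) of a binary phase-indicator field.

    S2(r) is the probability that both x and x + r lie in the phase of interest.
    The result is fftshift-ed so that r = 0 sits at the array center.
    """
    m = micro.astype(float)
    spectrum = np.fft.fftn(m)
    s2 = np.fft.ifftn(spectrum * np.conj(spectrum)).real / m.size
    return np.fft.fftshift(s2)


# Toy 8x8 periodic microstructure: the top half is the phase of interest.
micro = np.zeros((8, 8))
micro[:4, :] = 1.0
s2 = two_point_autocorrelation(micro)
print(s2[4, 4], s2[0, 4])  # S2 at r = 0 and at a vertical shift of half the period
```

For this banded toy structure, S2 is unchanged by horizontal shifts and drops to zero for a vertical shift of half the period, since the shifted band then lies entirely in the other phase.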

Finally, recent advances in constitutive modeling, nondestructive evaluation technology, and computing power suggest that it should be possible to define and certify materials more directly, by measuring the salient microstructural features (including heterogeneities) that control the properties of interest and simulating their consequences. Such methods could be automated. They would also generate digital materials descriptors, permitting a mathematical description of the material that is ready for constitutive modeling. Models can then be used to define the structure-property testing needed to reach a target level of uncertainty. SERVEs linked to specific microstructural features will drive the level of testing required, based on the distribution of the defined metric and, in particular, its tails.
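When the property-controlling feature is a rare, tail-of-the-distribution event, the question of how many specimens to test can be framed as a detection problem. A minimal sketch, assuming each specimen independently contains the feature with probability p (a quantity a SERVE-based model would be used to estimate): the smallest n satisfying 1 - (1 - p)^n >= C is n = ceil(ln(1 - C) / ln(1 - p)).

```python
import math


def specimens_for_tail_feature(p_feature: float, confidence: float) -> int:
    """Smallest n with P(at least one of n independent specimens contains the feature) >= confidence.

    Solves 1 - (1 - p)^n >= C, i.e. n >= ln(1 - C) / ln(1 - p).
    """
    if not (0.0 < p_feature < 1.0 and 0.0 < confidence < 1.0):
        raise ValueError("p_feature and confidence must lie strictly between 0 and 1")
    return math.ceil(math.log(1.0 - confidence) / math.log(1.0 - p_feature))


print(specimens_for_tail_feature(0.01, 0.95))
```

For a feature present in 1% of specimen volumes, 299 specimens are needed for 95% confidence of sampling it at least once, which is one reason model guidance toward the locations where such features are likely pays off.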

Digital Infrastructure

A model-based engineering approach will require a robust digital infrastructure in which to securely capture, analyze, store, and share data and models throughout a supply chain. For materials and manufacturing, such an infrastructure is still in its embryonic stage. This is not a new need: for the past couple of decades, organizations have struggled even to agree on common means of describing digital materials data [36]. However, the rapid move to a digital economy has added incentive to reinvigorate this necessary work. In this new paradigm, data are ideally readable by both humans and machines to maximize efficiency and information extraction. Human- and machine-readable data rely on well-defined vocabularies, schemas, formats, and ontologies, where ontologies define the relationships between terms and concepts within a topical area. With these definitions in place, data can be presented unambiguously to a human, and a computer can evaluate both complex narrative and structured data to extract new information. Some concepts that are central to data science, such as ontologies, are new to the materials community, and relatively little work has been done to date in this area [37].

Importantly, some of the methods needed for handling complex spatio-temporal materials and manufacturing data are being developed in tools such as DREAM.3D [34]. However, agreed-upon standards for conveying materials information, such as microstructure, defects, and material properties, are still evolving [38,39,40,41,42]. To lower the barriers to internal data collection, analysis, storage, and publishing, e-Collaboration platforms are being recognized as essential components of an organization's infrastructure [43,44,45]. Collaborating and sharing data and models outside an organization can become quite complicated because of the security required to protect proprietary information. However, recent industry ICME programs have shown that this security concern can be overcome with careful design of the software platform and security protocols [46].

Today, material and component properties are measured, collected, and communicated from suppliers to customers by means of certificates of conformance (certs), which document and attest that the component is composed of material equivalent to that used in the design definition. The infrastructure for communicating and capturing cert information is currently inadequate: there is no schema or report format standard. Efforts have been initiated to enable increasingly seamless transfer of such data in digital format without radical changes to data structure and management at suppliers and customers. Data recognition tools that can automate the identification, tagging, and parsing of arbitrary material cert data are being developed and will enable a significant shift in materials data management capabilities, making all measured data available for component and model updating and further capability advancement.
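As a toy illustration of automated cert parsing, the sketch below uses a regular expression to pull "Name (unit): value" lines out of cert text into structured records and ignores everything else. The cert layout is hypothetical; because, as noted above, no report format standard exists, production tools would need templates per supplier or learned extraction rather than a single pattern.

```python
import re
from typing import Dict, Tuple

# Matches lines such as "Tensile Strength (ksi): 142.5" in a hypothetical cert layout.
LINE = re.compile(
    r"^\s*(?P<name>[A-Za-z][A-Za-z0-9 .\-]*?)\s*\((?P<unit>[^)]+)\)\s*[:=]\s*(?P<value>-?\d+(?:\.\d+)?)\s*$"
)


def parse_cert(text: str) -> Dict[str, Tuple[float, str]]:
    """Extract {property name: (value, unit)} from lines that match LINE; ignore the rest."""
    records: Dict[str, Tuple[float, str]] = {}
    for line in text.splitlines():
        match = LINE.match(line)
        if match:
            name = match.group("name").strip().lower()
            records[name] = (float(match.group("value")), match.group("unit").strip())
    return records


sample = """Heat No.: H12345
Tensile Strength (ksi): 142.5
Yield Strength (ksi): 131.0
Elongation (%): 14
Oxygen (wt%): 0.18"""
print(parse_cert(sample))
```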

Living Model-Based Materials Definitions and Continuous Feedback

Once a methodology has been developed to seamlessly capture and share materials data and information throughout the materials life cycle, the significant benefits to the community are easy to see. New models of material behavior could be validated much more readily on broader data sets. Feedback loops could reduce the need to rediscover issues and solutions along the entire process and provide new insight into the validity of assumptions made earlier in the process. Data analytic techniques could allow new material compositions to be discovered more readily, while systems in operation could effectively become field laboratories used to refine component lifing models with complex, long-term data streams [28, 47, 48]. MBMDs are ideal platforms for continuous improvement and for harvesting production and operational data for increased knowledge. The goal of a material definition is to provide the design community with the specific capabilities of a given material along with their specific uncertainties. Reincorporating production data from varied component configurations produced by myriad methods provides an opportunity to develop high-fidelity models based on rich and varied data sets. This continuous life-cycle loop, in which mechanistic models are used to develop materials and production data are used to further reduce the uncertainty of model predictions, provides a framework for a modern multi-scale materials and structures infrastructure that beneficially changes the interactions between the design, materials, and manufacturing disciplines. This material, process, and component design infrastructure will enable a continuous improvement process of Cradle-to-Cradle learning.

Summary and Conclusions

Model-based materials definitions are seen as the next step in the evolution of engineering disciplines where materials and manufacturing technology and capabilities are integrally linked to component and systems design and structural analysis. Use of physics-based materials models to define the location-specific properties within components, based on path-dependent processing methods, will provide a means to include both materials and manufacturing processes into parametric component design processes. Critical elements of this approach will include accurate physics-based models, automated and noninvasive methods to quantify microstructure, methods for defining and managing the spatio-temporal information, linkage of MBMDs to design processes, engineering test program data, production certification reports, and in-service performance data to validate MBMDs. Many of these critical elements are readily available, but some need further development along with means to holistically link each element into an auditable, revision controlled, self-consistent design system. A holistic data and informatics backbone is needed throughout.

Materials science and engineering tools and methods have been changing at increasing rates over the past several decades. Gains in characterization capabilities have led to a greater physics-based understanding of materials phenomena and their associated mechanisms. Computational methods have likewise advanced at unprecedented rates and now allow accurate prediction of path-dependent microstructural state. In parallel, advances in theoretical and applied mechanics have made it possible to accurately predict a wide range of material properties from representative microstructural volume elements. Collectively, it is now possible to predict physical and mechanical properties for near-arbitrary compositions by through-process modeling, from raw form (e.g., ingot or compact) to finished component. Developing and applying these capabilities within an affordable, ubiquitous, and accessible infrastructure remains a challenge as the practice evolves.

Industry is already taking advantage of modeling tools and methods [8]. Step-wise introduction of the larger MBMD system would be useful so that it does not unduly disrupt standard processes and protocols. In ongoing efforts, MBMD is being applied in parallel with conventional methods to help understand the benefits of greater fidelity in material definition and process control. This “soft” use is opening eyes to how parts can be enhanced and to what may not be currently understood about parts defined by conventional methods.

It is the opinion of the authors that the traditional approach to defining a material by chemistry ranges, material process ranges, and static property minima will migrate to MBMDs, due to the many benefits that result from this new capability, including:

- Development and modification of materials for specific design requirements
- Integration of MBDs into parametric design processes, enabling location-specific properties to be incorporated
- Maximizing the use of legacy materials via more efficient tailoring of processing and properties
- Elimination of minimum-property focus in materials development and application
- Enabling probabilistic design and component lifing methods that include location-specific mechanical property capabilities
- Condition-based in-service component assessment and maintenance
- Engineered test plans that decrease the quantity (and associated costs) and increase the value of quality certification tests
- Digital material specifications that are largely mathematical in nature and focused on key microstructural and chemical attributes, instead of sampling test material

While not specifically developed and discussed in this manuscript, the authors also see benefits to applying MBMD in the following areas:

- Improved material quality and reduced rejection rates
- Simpler and more rapid heat lot / batch certifications for shipment
- More rapid facility and product qualification demonstrations
- More rapid field-based service inspections and repair validation

References

National Defense Industrial Association (2011) Final report, Model-based engineering, NDIA M and S, Feb. 2011

Whittenburg M, Whittenburg R (2013) Using MIL-STD-31000A to support better buying power 2.0. Contract Management, October 2013, pp 32–43

Committee on Integrated Computational Materials Engineering (2008) Integrated computational materials engineering: a transformational discipline for improved competitiveness and national security. National Academies Press, Washington, DC

The White House (2011) The materials genome initiative. https://obamawhitehouse.archives.gov/sites/default/files/microsites/ostp/materials_genome_initiative-final.pdf. Accessed 21 March 2017

Furrer D, Liu X, Naik R, Venkatesh V (2016) Model-based materials definitions for design and structural analysis. Paper presented at the Annual Meeting of The Minerals, Metals, and Materials Society, Nashville, TN, 15–18 February 2016

Battelle Memorial Institute (2016) Metallic materials properties development and standardization (MMPDS). https://www.mmpds.org/. Accessed 4 March 2017

Wichita State University (2016) Composite materials handbook, http://www.cmh17.org/. Accessed 24 March 2017

Cernatescu I, Venkatesh V, Glanovsky JL, Landry LH, Green RN, Gynther D, Furrer DU, Turner TJ (2015) Residual stress measurements implementation in model validation process as applied in the United States Air Force foundational engineering problem program on ICME of bulk residual stress in Ni rotors. In: 56th AIAA/ASCE/AHS/ASC structures, structural dynamics, and materials conference, AIAA SciTech Forum, Kissimmee, FL, 5–9 January 2015. https://doi.org/10.2514/6.2015-0387

Olson GB (1997) Computational design of hierarchically structured materials. Science 277:1237–1242. https://doi.org/10.1126/science.277.5330.1237

Reed RC, Tao T, Warnken N (2009) Alloys-by-design: application to nickel-based single crystal superalloys. Acta Mater 57(19):5898–5913. https://doi.org/10.1016/j.actamat.2009.08.018

Allison J, Li M, Wolverton C, Su X (2006) Virtual aluminum castings: an industrial application of ICME. JOM 58(11):28–35. https://doi.org/10.1007/s11837-006-0224-4

Furrer D, Chatterjee A, Shen AG, Semiatin SL, Miller J, Glavicic M, Goetz R, Barker D (2007) Development and application of microstructure and mechanical property models for titanium alloys. In: Niinomi M, Akiyama S, Ikeda M, Hagiwara M, Maruyama K (eds) Ti-2007 science and technology. The Japan Institute of Metals, pp 781–788

Glavicic MG, Venkatesh V (2014) Integrated computational materials engineering of titanium: current capabilities being developed under the metals affordability initiative. JOM 66(7):1310–1320. https://doi.org/10.1007/s11837-014-1013-0

Reinman G, Ayer T, Davan T, Devore M, Finley S, Glanovsky J, Gray L, Hall B, Jones C, Learned A, Mesaros E, Morris R, Pinero S, Russo R, Stearns E, Teicholz M, Teslik-Welz W, Yudichak D (2012) Design for variation. Qual Eng 24(2):317–345. https://doi.org/10.1080/08982112.2012.651973

Adams P (2012) Presented at Aeromat, Charlotte, NC, June 19, 2012

Furrer DU (2011) Application of phase-field modeling to industrial materials and manufacturing processes. Curr Opin Solid State Mater Sci 15(3):134–140. https://doi.org/10.1016/j.cossms.2011.03.001

Sangid MD, Sehitoglu H, Maier HJ, Furrer DU, Glavicic MG, Stillinger J (2012) Role of microstructure in predicting fatigue performance. In: 53rd AIAA/ASME/ASCE/AHS/ASC structures, structural dynamics and materials conference, 23–26 April 2012, Honolulu, Hawaii

Gorbatov OI, Lomaev IL, Gornostyrev YN, Ruban AV, Furrer D, Venkatesh V, Novikov DL, Burlatsky SF (2016) Effect of composition on antiphase boundary energy in Ni3Al based alloys: ab initio calculations. Phys Rev B 93:224106

Woodward C (2011) Ab initio molecular dynamics simulation of molten Ni-based superalloys. AFRL-RX-WP-TP-2011-4370, Air Force Research Laboratory, Wright-Patterson AFB, OH. http://dtic.mil/cgi-bin/GetTRDoc?AD=ADA553357. Accessed 15 May 2017

Rugg D, Furrer D, Brewitt N (2008) Textures in titanium alloys: an industrial perspective on deformation. In: Rollett AD (ed) Ceramic transactions, vol 200: a collection of papers presented at the 15th international conference on texture of materials (ICOTOM 15), Pittsburgh, PA, 1–6 June 2008. The American Ceramic Society, pp 521–533

Enright MP, McFarland J, McClung R, Wu W-T, Shankar R (2013) Probabilistic integration of material process modeling and fracture risk assessment using Gaussian process models. In: 54th AIAA/ASME/ASCE/AHS/ASC structures, structural dynamics, and materials conference, Boston, MA. https://doi.org/10.2514/6.2013-1851

Cowles BA, Backman DG, Dutton RE (2015) Update to recommended best practice for verification and validation of ICME methods and models for aerospace applications. Integrating Mater Manuf Innov 4:2. https://doi.org/10.1186/s40192-014-0030-8

Panchal JH, Kalidindi SR, McDowell DL (2013) Key computational modeling issues in integrated computational materials engineering. Comput Aided Des 45:4–25. https://doi.org/10.1016/j.cad.2012.06.006

Chan KS, Moody J (2016) A hydrogen-induced decohesion model for treating cold dwell fatigue in Ti-based alloys. Metall Mater Trans A 47A:2058–2072. https://doi.org/10.1007/s11661-016-3367-0

Department of Defense (2013) Standard practice, Technical data packages. MIL-STD-31000A, 26 February 2013

Ball DL, James MA, Bucci R, Watton J, DeWald AT, Hill MR, Popelar CF, Bhamidipati V, McClung RC (2015) The impact of forging residual stress on fatigue in aluminum, SciTech 2015, Kissimmee, Florida, 5–9 January 2015

Kobryn P, Tuegel E, Zweber J, Kolonay R (2017) Digital thread and twin for systems engineering: EMD to disposal. In: 55th AIAA aerospace sciences meeting 9–13 January 2017, Grapevine. https://doi.org/10.2514/6.2017-0876

Larsen JM, Jha SK, Szczepanski CJ, Caton MJ, John R, Rosenberger AH, Buchanan DJ, Golden PJ, Jira JR (2013) Reducing uncertainty in fatigue life limits of turbine engine alloys. Int J Fatigue 57:103–112

Ghosh S, Dimiduk DM (eds) (2011) Computational methods for microstructure-property relationships. Springer, Berlin

Kennedy MC, O’Hagan A (2001) Bayesian calibration of computer models. J R Stat Soc Ser B (Stat Methodol) 63(3):425–464. https://doi.org/10.1111/1467-9868.00294

Niezgoda SR, Yabansu YC, Kalidindi SR (2011) Understanding and visualizing microstructure and microstructure variance as a stochastic process. Acta Mater 59:6387–6400

Niezgoda SR, Kanjarla AK, Kalidindi SR (2013) Novel microstructure quantification framework for databasing, visualization, and analysis of microstructure data. Integrating Mater Manuf Innov 2:3. https://doi.org/10.1186/2193-9772-2-3

Kalidindi SR (2016) Hierarchical materials informatics. Butterworth-Heinemann, ISBN: 9780124103948

Groeber MA, Jackson MA (2014) DREAM.3D: a digital representation environment for the analysis of microstructure in 3D. Integrating Mater Manuf Innov 3:5. https://doi.org/10.1186/2193-9772-3-5

Kral M, Spanos G (1999) Three-dimensional analysis of proeutectoid cementite precipitates. Acta Mater 47(2):711–724

Rumble J (2014) E-materials data. ASTM International, Standardization News. http://www.astm.org/standardization-news/perspective/ematerials-data-ma14.html. Accessed 22 March 2017

Cheung K, Hunter J, Drennan J (2009) Matseek: an ontology-based federated search interface for materials scientists. IEEE Intell Syst 24:47–56. https://doi.org/10.1109/MIS.2009.13

Austin T, Bullough C, Gagliardi D, Leal D, Loveday M (2013) Prenormative research into standard messaging formats for engineering materials data. Int J Digit Curation 8(1):5–13. https://doi.org/10.2218/ijdc.v8i1.245

Michel K, Meredig B (2016) Beyond bulk single crystals: a data format for all materials structure–property–processing relationships. MRS Bull 41(8):617–623

Diehl M, Eisenlohr P, Zhang C, Nastola J, Shanthraj P, Roters F (2017) A flexible and efficient output file format for grain scale multiphysics simulations. Integrating Mater Manuf Innov. https://doi.org/10.1007/s40192-017-0084-5

Schmitz G, Prahl U, Farivar H (2017) Scenario for data exchange at the microstructure scale. Integrating Mater Manuf Innov. https://doi.org/10.1007/s40192-017-0092-5

MATerials Innovation Network (2017) Georgia Tech Institute for Materials, Georgia Institute of Technology. https://matin.gatech.edu/. Accessed 15 May 2017

Jacobsen MD, Fourman JR, Porter KM, Wirrig EA, Benedict MD, Foster BJ, Ward CH (2016) Creating an integrated collaborative environment for materials research. Integrating Mater Manuf Innov 5:12. https://doi.org/10.1186/s40192-016-0055-2

Carey NS, Budavári T, Daphalapurkar N, Ramesh KT (2016) Data integration for materials research. Integrating Mater Manuf Innov 5:7. https://doi.org/10.1186/s40192-016-0049-0

Puchala B, Tarcea G, Marquis EA, Hedstrom M, Jagadish HV, Allison JE (2016) The materials commons: a collaboration platform and information repository for the global materials community. JOM 68. https://doi.org/10.1007/s11837-016-1998-7

Metals Affordability Consortium (2017) MAIHub. https://maihub.org/. Accessed 24 January 2017

Kalidindi SR (2015) Data science and cyberinfrastructure: critical enablers for accelerated development of hierarchical materials. Int Mater Rev 60(3):150–168

Kalidindi SR, De Graef M (2015) Materials data science: current status and future outlook. Annu Rev Mater Res 45:171–193. https://doi.org/10.1146/annurev-matsci-070214-020844

Acknowledgements

The authors wish to thank V. Venkatesh (Pratt & Whitney) and P. Kobryn (Air Force Research Laboratory) for valuable conversations that helped inspire this paper.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Furrer, D.U., Dimiduk, D.M., Cotton, J.D. et al. Making the Case for a Model-Based Definition of Engineering Materials. Integr Mater Manuf Innov 6, 249–263 (2017). https://doi.org/10.1007/s40192-017-0102-7
