Abstract

Age-related macular degeneration (AMD) is a leading cause of severe vision loss worldwide, with a global prevalence that is predicted to substantially increase. Identifying early biomarkers indicative of progression risk will improve our ability to assess which patients are at greatest risk of progressing from intermediate AMD (iAMD) to vision-threatening late-stage AMD. This is key to ensuring individualized management and timely intervention before substantial structural damage. Some structural biomarkers suggestive of AMD progression risk are well established, such as changes seen on color fundus photography and more recently optical coherence tomography (drusen volume, pigmentary abnormalities). Emerging biomarkers identified through multimodal imaging, including reticular pseudodrusen, hyperreflective foci, and drusen sub-phenotypes, are being intensively explored as risk factors for progression towards late-stage disease. Other structural biomarkers merit further research, such as ellipsoid zone reflectivity and choriocapillaris flow features. The measures of visual function that best detect change in iAMD and correlate with risk of progression remain under intense investigation, with tests such as dark adaptometry and cone-specific contrast tests being explored. Evidence on blood and plasma markers is preliminary, but there are indications that changes in levels of C-reactive protein and high-density lipoprotein cholesterol may be used to stratify patients and predict risk. With further research, some of these biomarkers may be used to monitor progression. Emerging artificial intelligence methods may help evaluate and validate these biomarkers; however, until we have large and well-curated longitudinal data sets, using artificial intelligence effectively to inform clinical trial design and detect outcomes will remain challenging. 
This is an exciting area of intense research, and further work is needed to establish the most promising biomarkers for disease progression and their use in clinical care and future trials. Ultimately, a multimodal approach may yield the most accurate means of monitoring and predicting future progression towards vision-threatening, late-stage AMD.

Plain Language Summary

Age-related macular degeneration, or AMD, is an eye disease that causes vision loss. Worldwide, the number of people with AMD is increasing. It is difficult for doctors to know who, among those with AMD, will get worse and lose some of their sight, and who will not. Researchers are trying to find early signs that predict whether AMD will get worse and ways to track AMD progression over time. These signs are known as “biomarkers.” They can be structural (seen in the structures inside the eye), functional (a change in how well someone sees), genetic, or proteins found in the blood. Being able to identify people with AMD that are most at risk of losing their vision will help to make sure they get more frequent review so that interventions can be started quickly before vision is lost permanently. Some structural and functional biomarkers are already well known, while others may be useful and are being intensively researched. Changes in the blood markers need much more research to be useful. Researchers are also looking at how to combine data from different biomarkers. This may be a better way to follow worsening of AMD over time compared to using a single biomarker. In the future, we may also be able to use artificial intelligence to help combine all biomarker data. This is an exciting area of research that will be important to help improve the vision outcomes for people with AMD.

Age-related macular degeneration (AMD) is a leading cause of severe irreversible vision loss, with a global prevalence that is predicted to substantially increase.

Identifying early biomarkers indicative of risk for progression from intermediate AMD to vision-threatening late stages is key to ensuring individualized management and timely intervention.

There are already several well-established structural biomarkers, including drusen volume and pigmentary abnormalities, as well as a range of multimodal biomarkers under investigation that provide additional information about risk in the future.

Further work is needed to establish the most promising functional biomarkers indicative of risk for disease progression, as well as other risk factors, and their utility in clinical care and future trials.

Introduction

Age-related macular degeneration (AMD) is a leading cause of severe irreversible vision loss in high-income countries [1,2,3,4]. The global prevalence of AMD is estimated at 170 million people, and this is expected to increase to 288 million people by 2040; in Asia, prevalence is expected to double over this time [5, 6]. Risk factors for AMD include age, genotype, smoking, and other lifestyle factors [7].

AMD is a progressive disease, and the Beckman classification can be used clinically to define disease stage as early, intermediate, or late depending on the worse-affected eye [8, 9]. Early AMD is typically defined as the presence of medium drusen (63–124 µm) without pigmentary abnormalities [8, 9]. Intermediate AMD (iAMD) may be defined as the presence of at least one large druse (125 µm or larger in size) and/or pigmentary abnormalities, or as the presence of medium drusen with pigmentary abnormalities [8, 9]. Finally, late AMD is classified as neovascular AMD (nAMD) and/or geographic atrophy (GA) [8, 9].
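The staging rules above can be expressed as a short decision procedure. The following Python sketch is illustrative only: the `EyeFindings` fields and function names are hypothetical, the thresholds are those quoted in the text, and treating pigmentary abnormalities alone as intermediate follows the wording above rather than a validated grading protocol.

```python
from dataclasses import dataclass

MEDIUM_MIN_UM = 63    # lower bound for medium drusen, as quoted in the text
LARGE_MIN_UM = 125    # lower bound for large drusen, as quoted in the text

# Ordered from least to most severe so that stages can be compared.
STAGES = ["no AMD by these rules", "early", "intermediate", "late"]


@dataclass
class EyeFindings:
    """Hypothetical container for the findings in one eye."""
    largest_druse_um: float          # diameter of the largest druse (0 if none)
    pigmentary_abnormalities: bool
    neovascular_amd: bool = False
    geographic_atrophy: bool = False


def stage_eye(eye: EyeFindings) -> str:
    """Stage a single eye using the simplified rules described in the text."""
    if eye.neovascular_amd or eye.geographic_atrophy:
        return "late"
    if eye.largest_druse_um >= LARGE_MIN_UM or eye.pigmentary_abnormalities:
        return "intermediate"
    if eye.largest_druse_um >= MEDIUM_MIN_UM:
        return "early"
    return "no AMD by these rules"


def stage_person(right: EyeFindings, left: EyeFindings) -> str:
    """Person-level stage is taken from the worse-affected eye."""
    return max(stage_eye(right), stage_eye(left), key=STAGES.index)


if __name__ == "__main__":
    right = EyeFindings(largest_druse_um=90, pigmentary_abnormalities=False)
    left = EyeFindings(largest_druse_um=140, pigmentary_abnormalities=False)
    print(stage_person(right, left))
```

Taking the maximum over an ordered list of stages mirrors the "worse-affected eye" rule; a real grading workflow would need additional inputs (for example, lesion location) that are omitted here.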

A systematic review in 2020 estimated the prevalence of early AMD or iAMD in Europe at 25% in people aged ≥ 60 years [10]. The rate of progression from iAMD to late AMD is estimated at approximately 27% over 5 years [11]; however, data are highly variable and depend on how the earlier stages of disease are defined [12]. Among eyes with iAMD, progression to late-stage disease is to nAMD, GA, or both [13, 14]. Although lifestyle modifications and supplements can be beneficial in reducing the risk of progression from earlier stages of AMD to late AMD, no specifically targeted intervention has been approved to prevent development of iAMD or to slow its progression to late AMD once developed [11, 15]. To help develop interventions that aim to prevent or delay progression, or even cause regression of disease, it is essential to better understand who is at greatest risk of progression from iAMD to late AMD and improve our ability to quantifiably track disease progression over time. This would allow clinicians not only to identify patients at greater risk of progression to late-stage, vision-threatening disease, so that their monitoring and counselling can be individualized, but would also allow priority inclusion or stratification of high-risk groups into future interventional clinical trials. Furthermore, it would provide parameters to monitor over time for the assessment of disease progression and help us to better understand potential mechanisms that contribute to different risk profiles and progression rates.

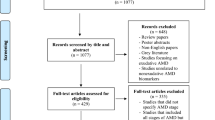

However, the structural biomarkers used as endpoints in current clinical trials of AMD (presence and/or growth of exudation or atrophy [16, 17]) are associated with late stages of the disease when photoreceptor death and loss of best-corrected visual acuity (BCVA) have already occurred. Identification of biomarkers that could act as early-stage surrogate endpoints in clinical trials, prior to substantial visual decline, would be of great clinical utility and increase the feasibility of earlier interventional studies. This will be critical in reducing the growing burden of AMD in the coming decades [5, 6]. In this review, we evaluate potential early structural, functional, genetic, and systemic biomarkers that could be used to assess the risk of progression of iAMD to late vision-threatening stages of disease. Thus, we reviewed the available literature for iAMD biomarkers and their association with progression to late AMD. Structural biomarkers, both conventional and emerging, are reviewed, followed by functional and blood-based parameters as well as genetic biomarkers, selecting only those biomarkers for which there is a minimum body of evidence available (Fig. 1). This article is based on previously conducted studies and does not contain any new studies with human participants or animals performed by any of the authors.

Fig. 1 Biomarkers that may be used to assess the progression of intermediate age-related macular degeneration (AMD). AMD age-related macular degeneration, BCVA best-corrected visual acuity, cRORA complete RPE and outer retinal atrophy, EZ ellipsoid zone, GA geographic atrophy, iRORA incomplete RPE and outer retinal atrophy, LLVA low-luminance visual acuity, nAMD neovascular AMD, nGA nascent GA, OCT optical coherence tomography, PRO patient-reported outcome

Structural Biomarkers

Conventional Drusen

Conventional drusen (hereafter referred to as “drusen”) are sub-retinal pigment epithelial (RPE) deposits of lipids and proteins that vary in size; small drusen are classified as < 63 μm, intermediate as 63–124 μm, and large as ≥ 125 μm [18]. Drusen are the hallmark of AMD, and disease progression is strongly associated with an increasing load of drusen [9, 19,20,21,22,23]. However, drusen may also occur at younger ages, often forming recognizable patterns suggestive of an inherited disease, such as the dominantly inherited drusen phenotypes of Malattia leventinese and Doyne’s honeycomb dystrophy rather than typical age-related deposits [24]. Cuticular drusen are another drusen subtype of round yellow lesions between 50 and 75 µm in diameter that can appear at a relatively young age [25, 26]. In time, genetic analysis may be able to differentiate individuals with different drusen phenotypes from those with typical AMD drusen and may help to uncover their unique etiology; these subtypes may require a different treatment strategy to the more typical conventional drusen phenotypes.

Increasing drusen volume is a risk factor for AMD progression; patients with a drusen volume of ≥ 0.03 mm³ are four times as likely to develop any late AMD as those with a volume of < 0.03 mm³ [27]. Baseline RPE/drusen complex (RPE/DC) thickness (a metric for assessing drusen volume) is significantly correlated with progression to central and non-central GA (both p < 0.001), with the odds of developing central GA increasing by 32% for every 0.001 mm³ increase in baseline RPE/DC thickness [21, 28]. The location of drusen may also affect their impact on AMD progression: current evidence suggests that extramacular drusen are not directly associated with progression from iAMD to late AMD but may lead to greater macular drusen size, while increasing central drusen volume does increase the risk of disease progression [27, 29, 30]. Thus, drusen volume remains a major risk factor for AMD progression [21, 31].
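To make the "32% per 0.001 mm³" figure concrete, the sketch below shows how such a per-step increase in odds would compound over larger differences. It assumes a log-linear (logistic-type) relationship in which each step multiplies the odds by 1.32; that modelling assumption, the function names, and the 5% baseline probability are illustrative and are not taken from the cited studies.

```python
def odds_multiplier(delta_mm3: float,
                    odds_ratio_per_step: float = 1.32,
                    step_mm3: float = 0.001) -> float:
    """Factor by which the odds change for a given difference in baseline
    RPE/DC measurement, assuming each step multiplies the odds equally."""
    steps = delta_mm3 / step_mm3
    return odds_ratio_per_step ** steps


def shift_probability(baseline_probability: float, multiplier: float) -> float:
    """Apply an odds multiplier to a baseline probability."""
    odds = baseline_probability / (1 - baseline_probability)
    new_odds = odds * multiplier
    return new_odds / (1 + new_odds)


if __name__ == "__main__":
    # Hypothetical example: a 0.003 mm³ difference and a 5% baseline risk.
    factor = odds_multiplier(0.003)
    print(f"odds multiplier: {factor:.2f}")
    print(f"illustrative probability: {shift_probability(0.05, factor):.3f}")
```

The point of the sketch is that odds, not probabilities, scale multiplicatively; converting back to a probability always requires a baseline risk, which the text does not provide.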

Other aspects of drusen may increase their utility as a biomarker, such as internal drusen reflectivity. The internal reflectivity of drusen can vary in appearance, from low-reflective cores to conical debris [20]. Veerappan et al. proposed four subtypes for reflective drusen sub-structures: high-reflective cores (H-type), low-reflective cores (L-type), conical debris (C-type), and split drusen (S-type) [20]. Hyporeflective cores have been associated with increased likelihood of progression to late AMD, although some data indicate that they are not an independent risk factor [32,33,34].

Drusen regression is strongly correlated with progression to late AMD and subsequent atrophy [35]. However, it is recognized that drusen can fluctuate, with some regressing completely without subsequent atrophy [36, 37]. Overall, drusen are a well-established and well-studied biomarker; tracking the lifecycle of drusen shows them increasing in volume with increasing disease severity, followed by regression and atrophy [38]. It is therefore difficult to use the tracking of drusen volume alone as a biomarker for disease progression, as drusen increase or decrease depending on their lifecycle stage. Thus, change in drusen volume alone is insufficient for use as a biomarker of disease progression or regression or as an endpoint in early disease intervention studies, but could be complementary in conjunction with other functional or structural biomarkers if considered to be associated with mechanism of action of the intervention.

Reticular Pseudodrusen

Reticular pseudodrusen (RPD), also known as subretinal drusenoid deposits, are distinguished from conventional drusen by their location above the RPE [39,40,41]. With the advent of multimodal imaging, RPD are recognized as being more frequent than previously realized based on clinical examination of color fundus photography (CFP) alone. They are an area of research focus, as they appear to be a critical sub-phenotype in AMD. RPD can occur in eyes with or without other retinal diseases but appear highly prevalent in AMD [41].

RPD are particularly prevalent in eyes with late AMD and are considered to be a risk factor for progression in the fellow eye of those with late AMD [41,42,43,44]. Although not all studies report RPD as an independent risk factor in individuals with non-late AMD, a post hoc analysis of data from the Age-Related Eye Disease Study (AREDS) 2 reported that the presence of RPD carried a significant risk for the development of late AMD, especially GA (p < 0.0001) [45]. Eyes with RPD have also been reported to have more rapid growth of atrophic lesions, with lesions growing towards the RPD [46, 47]. The presence of RPD has been reported to modify the effect of treatment in people with iAMD, suggesting that the RPD phenotype may require different intervention compared with eyes with only drusen [48]. Therefore, determining the presence of RPD assessed by multimodal imaging in patients with AMD is important if we are to fully understand the factors that drive disease progression. Further research that assesses the extent of RPD (e.g., by volume and/or area) could assist in establishing a more quantitative relationship between RPD and progression from iAMD to late AMD, and algorithms are being developed to help with this task [49].

Pigmentary Abnormalities

In addition to drusen volume, pigmentary abnormalities assessed by CFP are the other major traditional risk factor used when assessing AMD severity [23] and the risk for progression to late AMD [50]. Such changes are seen either as regions of hypopigmentation or hyperpigmentation [23]. Pigmentary abnormalities, together with drusen size, make up the factors used in the AREDS classification system for risk prediction [23, 51]. In the AREDS study, the presence of hyperpigmentation and hypopigmentation preceded the onset of GA in 96% and 82% of eyes, respectively, with a mean time to onset of 5 and 2.5 years, respectively [52].

Hyperreflective Foci

Hyperreflective foci (HRF) are defined as lesions seen on optical coherence tomography (OCT) as roundish, hyperreflective lesions in any of the retinal layers. They vary in appearance and location and were originally associated with hyperpigmentation as seen on CFP, calcified drusen, and RPE elevation in AMD [53]. HRF have been generally believed to be migrating RPE cells, but recent work has suggested that many HRF may reflect macrophages that engulf RPE pigment [54, 55]. Not all HRF have detectable hyperpigmentation seen on CFP, suggesting origins other than the RPE [53, 54].

There is some spatial correlation between macular hyperpigmentation as visualized using CFP and hyperreflective foci imaged with OCT, although it is not absolute [56]. The area of HRF in eyes with iAMD correlates with the likelihood of progression to late AMD and development of GA over different periods of time from 1 to 2 years [57, 58]. The topography of HRF may be of specific importance in predicting progression to GA; one retrospective analysis suggested that the concentration of HRF in a given area has a significant impact (p < 0.0001) on local atrophy progression [59]. Intraretinal HRF have also been associated with the development of type 3 macular neovascularization in patients with iAMD and have been in some cases posited to represent early stages of intraretinal neovascularization [60,61,62]. A better understanding of the origins and differences between HRF may well be important when considering predictors of risk.

OCT Signs of Early Atrophy

OCT imaging has allowed us to visualize the very first signs of atrophy, long before they are noted on a CFP or on clinical examination. Incomplete RPE and outer retinal atrophy (iRORA) and complete RPE and outer retinal atrophy (cRORA) are relatively new terms proposed to describe changes seen on OCT as atrophy develops in eyes with AMD [63]. In 2018, the international consensus of the Classification of Atrophy Meeting (CAM) group proposed OCT nomenclature to describe the OCT changes seen [63]. The key structural OCT criteria for cRORA are: a region of signal hypertransmission into the choroid of ≥ 250 μm, colocalized with a zone of attenuation or disruption in the RPE band of ≥ 250 μm in length, and evidence of overlying photoreceptor degeneration. These changes can only be assessed in the absence of an RPE tear [63]. A CAM report in 2020 focused on the defining features of iRORA, which was considered to precede cRORA [64]. iRORA is defined on OCT as a region with the same criteria as cRORA except that the signal hypertransmission into the choroid and zone of RPE attenuation are < 250 μm in length [64]. This terminology was proposed as unifying nomenclature such that researchers could then continue to research these changes in a longitudinal manner and determine the risk associated with their presence [64]. Several studies have now looked at the associated risk of progression in eyes with iRORA to either cRORA or GA [65]. In the sham arm of the LEAD study, which enrolled people with bilateral large drusen, without nascent GA (nGA, see below; [66]), 21% of the cohort had iRORA at baseline; an additional 31% developed iRORA over a 3-year period [67]. Prevalent or incident iRORA was associated with an increased rate of GA development (adjusted hazard ratio [HR] 12.1) [48, 67]. Another study reported the prevalence of iRORA in AMD to be around 16%, similar to that reported from LEAD [68]. 
No reports have, as yet, been published for the prevalence of cRORA in non-late AMD, although the LEAD cohort, which only included eyes with iAMD without nGA, had 3% with cRORA at baseline (personal communication).
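The CAM criteria for cRORA and iRORA described above amount to a small set of threshold rules. The Python sketch below is a simplified illustration under stated assumptions: the `OctLesion` fields and return labels are hypothetical, and a lesion in which only one of the two length measurements reaches 250 μm is treated as iRORA, which is an assumption because the text does not address that mixed case.

```python
from dataclasses import dataclass

CAM_THRESHOLD_UM = 250  # length threshold quoted in the text


@dataclass
class OctLesion:
    """Hypothetical summary of OCT measurements for one lesion."""
    hypertransmission_um: float       # length of choroidal hypertransmission
    rpe_attenuation_um: float         # length of RPE attenuation or disruption
    photoreceptor_degeneration: bool  # overlying photoreceptor degeneration
    rpe_tear: bool = False


def classify_atrophy(lesion: OctLesion) -> str:
    """Label a lesion as cRORA, iRORA, or neither using the quoted criteria."""
    if lesion.rpe_tear:
        # The text states the criteria apply only in the absence of an RPE tear.
        return "not assessable"
    all_signs_present = (
        lesion.hypertransmission_um > 0
        and lesion.rpe_attenuation_um > 0
        and lesion.photoreceptor_degeneration
    )
    if not all_signs_present:
        return "neither"
    if (lesion.hypertransmission_um >= CAM_THRESHOLD_UM
            and lesion.rpe_attenuation_um >= CAM_THRESHOLD_UM):
        return "cRORA"
    # Assumption: anything with all three signs that falls short of the
    # cRORA lengths is labelled iRORA, including mixed cases.
    return "iRORA"


if __name__ == "__main__":
    print(classify_atrophy(OctLesion(300, 280, True)))
    print(classify_atrophy(OctLesion(120, 100, True)))
```

In practice these judgments are made by trained graders on B-scans, so a rule set like this is at most a way to make the definitions explicit, not a substitute for grading.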

“Nascent GA” (nGA) is a term that was proposed in 2014 and suggested as an early surrogate endpoint for studies of iAMD progression; it was defined by the presence of subsidence of the outer plexiform layer (OPL) and inner nuclear layer, and/or a hyporeflective wedge-shaped band within Henle’s fiber layer of the OPL [66]. In one longitudinal study of eyes with iAMD, nGA was shown to be highly predictive of progression to GA (probability of progression at 24 months was 38%; adjusted HR 78.1; p < 0.001), and the development of nGA explained 91% of the variance in time to development of GA [65].

Currently, these early signs of atrophy are not regulatory authority-approved endpoints that can be used in registration studies of potential interventions that aim to slow progression of non-late AMD. However, they can be used to enrich a study population with high-risk iAMD eyes that are more likely to progress and could potentially provide an earlier endpoint for use in early phase clinical trials to determine efficacy of interventions. Continued efforts are required to better define the changes that occur as cell death commences and eyes progress towards vision-threatening lesions. Precise anatomical definitions may require refinement as researchers and reading centers try to implement the current definitions into pragmatic clinical trial risk factors and endpoints [69]. The required OCT signs may also vary depending on the design and aims of a study. For example, a study might enrich a high-risk cohort with iRORA or nGA or could use nGA or cRORA as a surrogate endpoint for GA. Ultimately, linking these anatomical changes to functional correlates will be important to understand the functional implication of these anatomical signs.

OCT Signs of Neovascular Conversion

Nonexudative macular neovascularization (neMNV) has only recently been appreciated as an entity and, when present, signifies a high risk of conversion to exudative MNV [70,71,72]. As such, recognizing OCT signs that suggest its presence is invaluable for appropriate counselling of patients. One such sign is a specific subset of the double-layer sign, characterized by the presence of a shallow, irregular RPE elevation (SIRE) [73]. In a cohort of 233 eyes, all those with neMNV had the SIRE sign, while 92% of eyes without neMNV similarly lacked SIRE. SIRE was found to have a positive predictive value of 25% and negative predictive value of 100% for neMNV [73].
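The gap between a 100% sensitivity and a 25% positive predictive value follows from how rare neMNV is in the cohort. The sketch below applies the standard definitions of predictive values; the sensitivity (100%) and specificity (92%) come from the figures above, whereas the prevalence values are hypothetical inputs. A prevalence of roughly 2.6% is a back-calculation that reproduces the reported 25%, not a number stated in the text.

```python
def predictive_values(sensitivity: float,
                      specificity: float,
                      prevalence: float) -> tuple[float, float]:
    """Return (PPV, NPV) for a test applied at a given disease prevalence."""
    true_pos = sensitivity * prevalence
    false_neg = (1 - sensitivity) * prevalence
    false_pos = (1 - specificity) * (1 - prevalence)
    true_neg = specificity * (1 - prevalence)
    ppv = true_pos / (true_pos + false_pos)
    npv = true_neg / (true_neg + false_neg)
    return ppv, npv


if __name__ == "__main__":
    # Sensitivity and specificity as described in the text for SIRE;
    # the prevalence values are illustrative assumptions.
    for prevalence in (0.026, 0.10, 0.30):
        ppv, npv = predictive_values(1.0, 0.92, prevalence)
        print(f"prevalence={prevalence:.3f}  PPV={ppv:.2f}  NPV={npv:.2f}")
```

Because no eye with neMNV lacked SIRE, the negative predictive value stays at 100% regardless of prevalence, while the positive predictive value rises as neMNV becomes more common in the population tested.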

One ongoing research study (EYE-NEON) is investigating the prevalence and incidence of neMNV and the role of several biomarkers as predictors for conversion to nAMD [72]. Thickening of the subretinal layers and presence of subretinal hyperreflective material (SHRM) are also early signs that may suggest the development of neovascularization and potential exudation [31, 74, 75].

Emerging Structural Biomarkers

Several emerging structural biomarkers, currently less established than those previously discussed, may play an important future role in determining the risk of progression of iAMD. Although they require further investigation and validation, their inclusion in studies may enrich the information available for triaging patients at high risk of progression to late AMD.

The term “ellipsoid zone” was first used in 2014 to describe a hyperreflective band thought to represent the photoreceptor inner segment ellipsoid [76,77,78,79], which is dense with mitochondria and thus central to the health and function of photoreceptors [78]. Changes in the intensity of the ellipsoid zone, as measured indirectly by OCT, may therefore be a biomarker for retinal disease severity and progression [76]. However, ellipsoid zone reflectivity is not directly measurable by OCT but is instead calculated through complex post-processing; therefore, its use may be limited in clinical settings, at least until artificial intelligence (AI) can assist with the analysis. Decreased intensity of ellipsoid zone reflectivity has been reported in one study of eyes with iAMD and in another of eyes with non-neovascular AMD and may indicate early photoreceptor damage [76, 80, 81].

Persistent hypertransmission defects (assessed by human graders using en face swept-source OCT as bright lesions with greatest linear dimension > 250 µm [82]) have also been proposed as an early predictor of GA formation [82, 83]. In one study of iAMD, 96% developed persistent hypertransmission defects of ≥ 250 μm before GA formation [82]. Furthermore, the development and growth of hypertransmission defects can be tracked in individuals with iAMD [83, 84]. The grading of persistent hypertransmission defects was found to be repeatable in one study reporting 98.2% accuracy and 97% agreement between graders over 1177 defects [83].

Choroidal thickness maps can be constructed with enhanced-depth imaging of wide-field swept-source OCT and typically include the layers between Bruch’s membrane and the chorioscleral interface [85]. The choroid is thinner in patients with AMD than in those without retinal pathology [86]. In one study, reduction in the choriocapillaris complex thickness was significantly associated with AMD progression over 5 years of follow-up (p < 0.001): a thickness of < 10.5 µm was associated with a high probability of progression, whereas a thickness of > 10.5 but < 20.5 µm was associated with moderate probability [87]. However, the relationship between choroidal thickness and AMD status is affected by several other factors, such as axial length, age, and possibly the presence of RPD, which complicates the reported associations [86, 88].

With the recent advances in imaging, it is possible to examine the choroid, including the choriocapillaris, without using invasive dye-based tests. OCT angiography (OCTA) has propelled increased interest in studying the blood supply to the outer retina, its characteristics, and their association with AMD. Interpretation of the images and acknowledging the need to deal with artifacts (such as the shadows cast by drusen) are both areas that require considerable expertise to ensure that correct associations are drawn between potential blood flow deficits and AMD [89,90,91]. With these caveats in mind, data from a number of small studies suggest that flow deficits increase as AMD progresses and may be linked to the development of iRORA and cRORA [92,93,94,95,96]. However, the relationship between choriocapillaris alterations and iAMD progression has not been consistently observed in all studies. One study found no significant difference in nonperfused areas between patients with unilateral, bilateral, or no iAMD [97]. It is therefore not yet clear what the temporal association is between flow deficits and the beginning of atrophy and what role, if any, flow deficits may play as risk factors for AMD disease progression.

The precise relationship between these emerging structural risk factors and disease progression requires further research. Additional published data, including the ability to consistently grade across reading centers, will help draw firm conclusions about the relationship of these biomarkers and disease progression.

Functional Markers and Patient-Reported Outcomes

Alongside patient-reported outcomes (PROs), functional vision tests are an important aspect of disease monitoring. Functional tests can be conducted under high-luminance and high-contrast conditions or under reduced-luminance and/or varying contrast conditions. Functional deficits within a cohort, all with the same stage of disease (e.g., iAMD), can vary along a broad spectrum, with many patients being functionally indistinguishable from those without disease. In addition, given the subjective nature of functional tests, their reproducibility both between and within patients is likely to be more variable than grading structural changes. This provides challenges when considering the longitudinal tracking of these parameters and their potential as surrogate endpoints.

Best-corrected visual acuity (BCVA), a high-luminance, high-contrast test commonly used to assess visual function [98], is often normal in people with iAMD. Data from the large-scale MACUSTAR study indicate that the majority of patients with iAMD can be functionally indistinguishable from control populations [99, 100]. However, a few studies have shown that, compared with controls, patients with iAMD can have significant deficits in BCVA (p < 0.05) [101] and that BCVA declines in iAMD patients over 12 months [99, 102]. Unlike in late-stage disease, in which vision is imminently threatened, BCVA is not considered a useful parameter to track progression of iAMD. As such, other functional measures need to be considered.

Low-luminance visual acuity (LLVA) can be measured by placing a neutral density filter in front of either the study eye or an illuminated letter chart and asking the participant to read the chart [103, 104]. Compared with controls, patients with iAMD have significant deficits in LLVA (p < 0.05) [101]. Nevertheless, research examining LLVA along with microperimetry in the same cohort has suggested that LLVA has limited sensitivity for detecting progression to late AMD [105, 106].

Microperimetry is a form of visual field test that examines retinal sensitivity to light in multiple locations across the macula, in conjunction with direct fundus examination, permitting correlation between pathology and function [107]. Furthermore, microperimetry can track a change in function over time [108]. However, microperimetry remains a subjective test, requiring engagement and concentration from the patient as well as a reasonable level of vision to ensure good fixation. Its accuracy is therefore reduced at lower levels of visual acuity, such as when disease advances [108]. Both mesopic and scotopic microperimetry show significant reduction in eyes with iAMD, although, as with BCVA, there is considerable variation among non-late AMD cohorts [109,110,111]. Mesopic microperimetry has a structure-function relationship in eyes with drusen [112], and reduction in mesopic sensitivity has been demonstrated in early AMD and iAMD [113,114,115]. One strategy to improve the ability to show change over time could be to individualize the test grid and so gain information in specific regions identified as being at risk of progression, such as areas showing early OCT signs of atrophy. Although time intensive to perform, scotopic microperimetry may be of particular use in iAMD cohorts [116]. In eyes with earlier stages of AMD, scotopic retinal sensitivity is reduced more than photopic or mesopic sensitivity relative to healthy controls [117, 118]. With reasonable test-retest reliability [111], retinal sensitivity as assessed by microperimetry may be a useful early functional biomarker that can track progression in patients with iAMD.

Patients with non-late AMD will often volunteer that they have challenges with dark adaptation; they have difficulty going from a bright area into one that is dimly lit, often needing to wait some time before they can see again [119, 120]. This can happen when driving into a dark tunnel or underground car park or going indoors after being outside on a bright day. This functional deficit is known as impaired dark adaptation [119] and has been shown to be one of the earliest functional deficits in AMD [121,122,123]. There is much interest in early deficits in dark adaptation in AMD, with prolongation seen in patients with iAMD and decline shown over 24 months as disease progresses [121, 122]. Measures of the dynamics of rod recovery, such as the rod intercept time (RIT), seem most promising as biomarkers [124]; absolute threshold testing is impractical as it can take well beyond a reasonable time to complete the test. Even in normal elderly populations without signs of AMD, those with an abnormal dark adaptation time (mean RIT of 15.1 versus 9.1) were almost twice as likely to develop AMD 3 years later [124]. Dark adaptation deficits also appear to be considerably worse in those with AMD and RPD [121, 123, 125] and may therefore help us to understand the underlying pathology associated with RPD. Dark adaptation may be one of the most promising functional tests in terms of identifying early deficits but is not simple to conduct, can be lengthy, and is not enjoyed by participants, making it difficult to scale up and implement in large trials or in clinical practice.

Contrast sensitivity is impaired in patients with iAMD [126]. Examining mesopic (compared to photopic) contrast sensitivity with the Pelli-Robson chart has been shown to identify functional deficits in iAMD that can be differentiated from normal aging [127]. However, inter-session variability for Pelli-Robson scores is high, which could limit their utility as a biomarker that tracks progression in clinical trials [128]. The cone-specific contrast test (CCT), which was recently employed in a natural history study [121], is an alternative varying-contrast test that presents randomized colored letters that are visible only to L, M, or S cones in decreasing steps of contrast [129]. Compared with controls, patients with iAMD have significant deficits in red cone-specific contrast (p < 0.05) [101, 121]. The CCT is yet to be widely adopted; therefore, limited data are available. Automated assessment of contrast sensitivity has also been examined under low-luminance conditions, with deteriorating performance correlating with disease progression [130]. Although contrast sensitivity is a simple test to perform in the clinic, further work is needed to determine whether it can be used as a biomarker for iAMD progression.

At present, there are limited published data on PROs as a means to track and/or measure iAMD progression, and further formal validation will be needed to assess their utility [131]. Common PRO measures, including the 25-item National Eye Institute Visual Function Questionnaire (NEI-VFQ-25) [132], Functional Reading Independence (FRI) Index [133], and Impact of Vision Impairment Questionnaire (IVI) [134], do not appear sensitive enough to capture changes at early disease stages [135]. The Night Vision Questionnaire (NVQ-10) attempts to capture difficulties experienced by patients in low-light conditions and has been used to assess function in patients with iAMD [135]; nonetheless, its utility in iAMD remains unclear. The MACUSTAR study has developed a new PRO for patients with AMD (the 37-item Vision Impairment in Low Luminance [VILL-37]) [131, 136], which will potentially provide improved metrics.

Although functional testing and identifying the best parameters to measure are challenging, functional measures better reflect the impact of the disease on patients. As such, they are extremely important to include in any interventional trial and are of great interest to regulatory authorities. Using structural and functional biomarkers together as combined endpoints may improve our ability to track and/or predict progression of iAMD. Such an approach has been used in glaucoma, in which optic disc biomarkers are combined with visual field testing [137, 138].

Blood and Plasma Biomarkers

Over the past decade, there has been discussion on whether AMD should be categorized as a systemic inflammatory disease [139, 140]. In support of this contention, alterations in peripheral blood flow have been reported in those with AMD compared with normal control populations, and a number of studies suggest potential associations between changes in protein or lipid levels detectable in the blood and plasma and risk of AMD and its progression [141, 142]. C-reactive protein (CRP) level has been suggested as a possible independent risk factor for progression from iAMD to late AMD, as elevated CRP levels have been associated with late AMD (odds ratio [OR] 3.12; 95% confidence interval [CI] 1.38–7.07) and, less conclusively, with AMD progression (OR 1.90; 95% CI 0.88–4.10, an interval that includes 1) [143,144,145]. Interestingly, a combination of elevated CRP and the CC (Y402H) genotype in the CFH gene resulted in superadditive risks, with an OR of 19.3 (95% CI 2.8–134) for late AMD and 6.8 (95% CI 1.2–38.8) for AMD progression [146]. In another study, CRP was significantly associated with choroidal thinning in patients with iAMD (p = 0.01) [88].
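To illustrate how the odds ratios quoted above can be read, the following minimal Python sketch recovers an approximate standard error, z statistic, and two-sided p value from a reported OR and its 95% CI, and checks whether the interval excludes 1. It assumes the intervals are symmetric on the log scale (a normal approximation); the function name `summarize_odds_ratio` is hypothetical, and the output is a rough reading aid, not a re-analysis of the cited studies.

```python
import math

Z_95 = 1.959964  # two-sided 95% quantile of the standard normal distribution


def summarize_odds_ratio(or_point, ci_low, ci_high):
    """Approximate log-scale summary of an OR reported with a 95% CI.

    Assumes the CI was built as exp(log(OR) +/- Z_95 * SE), which is an
    assumption about how the published interval was derived.
    """
    se = (math.log(ci_high) - math.log(ci_low)) / (2 * Z_95)
    z = math.log(or_point) / se
    # Two-sided p value under the normal approximation: 2 * (1 - Phi(|z|))
    p_approx = math.erfc(abs(z) / math.sqrt(2))
    return {
        "se_log_or": se,
        "z": z,
        "p_approx": p_approx,
        "ci_excludes_one": ci_low > 1 or ci_high < 1,
    }


# Values as quoted in the text for elevated CRP
late_amd = summarize_odds_ratio(3.12, 1.38, 7.07)
progression = summarize_odds_ratio(1.90, 0.88, 4.10)

for name, res in (("late AMD", late_amd), ("progression", progression)):
    print(name, "CI excludes 1:", res["ci_excludes_one"],
          "approx. p = %.3f" % res["p_approx"])
```

Under these assumptions, the interval for late AMD excludes 1, whereas the interval for progression does not, which is why the latter association should be interpreted more cautiously.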

High plasma levels of high-density lipoprotein cholesterol (HDL-C) have been reported to be a risk factor for progression to late AMD [147, 148]. HDL-C levels one standard deviation above the mean have been associated with ORs for AMD of 1.17 in European populations (p < 0.001) and 1.58 in Asian populations (p < 0.001) [147]. Regarding newer findings, one study found that patients who progressed to late AMD (median time to conversion: 25.2 months) could be differentiated from those who did not progress according to levels of lysozyme C, trefoil factor 3, ribonuclease KS6, and SAP3 [149]. Although these biomarkers could indicate the risk of progression for an individual patient, more work is needed to validate the findings and to determine how best to use them to assess risk of progression.

Other markers of chronic inflammation, most notably interleukin (IL)-6, have been associated with late-stage AMD; one systematic review found that although early AMD was not strongly associated with elevated IL-6 levels, late AMD (both GA and nAMD) was associated with significantly elevated IL-6 levels (p = 0.003) [150]. In one small prospective study, elevated plasma levels of both IL-6 and IL-8 were apparent in patients with GA compared with healthy controls; furthermore, plasma levels of IL-6 correlated with GA enlargement rate (R² = 0.23; p = 0.0035) [151]. However, it is not yet clear what happens to IL-6 levels in earlier stages of disease, and as such we do not know whether IL-6 plasma levels could be used as a biomarker for risk of progression to late AMD.

Plasma metabolomics may also provide some insight into the underlying pathophysiology of AMD and its risk of progression. One study has identified eight baseline metabolites that are significantly (p < 0.01) associated with AMD progression at 3 years: N6,N6,N6-trimethyl-L-lysine, phenylalanine, methylsuccinate, n-methyl-hydroxyproline, ribitol, n-palmitoyl-sphingosine, pregnenolone disulfate, and 1-linoleoyl-2-linolenoyl-GPC [152]. The most significant associations with progression were ribitol (p < 0.0002) and pregnenolone disulfate (p < 0.0014) [152]. These are very early results, and further research is needed to validate them.

Genetic Markers

A vast array of genes is associated with the risk of developing AMD; the largest genome-wide association study published to date identified 52 common and rare single nucleotide polymorphisms (SNPs) across 34 loci [153], although these numbers have since increased, with additional studies reporting at least 103 loci and 69 SNPs [154, 155]. Genetic associations have strongly implicated the complement system as playing a central role in the pathophysiology of AMD, with the first identified genetic association with AMD being CFH. Since then, SNPs in other loci in the complement system have been linked to AMD, including CFB, C3, and C2 [156,157,158]. In addition, another strong genetic association has been identified with variants at the ARMS2/HTRA1 locus [158,159,160]. The majority of risk genes identified to date are associated with the complement, lipid metabolism, and extracellular matrix pathways [46, 160]. Adjusting for phenotype and demographic variables, and dependent on methodology, approximately 40–80% of incident AMD can be attributed to genetic factors [161]. How exactly these genes interact with lifestyle risk and aging to contribute to AMD risk, or its progression, remains unclear.

Identifying genetic associations that are specific for AMD disease progression has, to date, not been particularly fruitful. However, one study reported that the TT ARMS2/HTRA1 genotype for rs10490924 increases the risk of late AMD tenfold [159], with another study reporting that people who carry a risk haplotype at ARMS2/HTRA1 progressed to late AMD an average of 9.6 years earlier than those without the risk haplotype [160]. Furthermore, patients with risk variants in both CFH and ARMS2/HTRA1 appear to progress earlier than those with risk variants in CFH alone [158]. Current work is focusing on compiling genetic data into polygenic risk scores for AMD, which may help identify risk of progression as distinct from risk of the disease per se [162, 163]. As our understanding of the genetics underlying AMD deepens, more key pathways will be highlighted that could be targeted with interventions, exemplified by current activity around the complement pathway [164].
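As a general illustration of the polygenic risk score concept mentioned above, the sketch below computes a score in its most common textbook form: a sum of risk-allele counts weighted by per-variant log odds ratios. The function name and the weights are hypothetical placeholders chosen for illustration; they are not published effect sizes, and the scores developed in the cited studies [162, 163] may be constructed differently.

```python
def polygenic_risk_score(allele_counts, log_odds_weights):
    """Weighted sum of risk-allele counts (0, 1, or 2 per variant).

    allele_counts: dict mapping variant label -> number of risk alleles
    log_odds_weights: dict mapping variant label -> per-allele log odds ratio
    """
    missing = set(log_odds_weights) - set(allele_counts)
    if missing:
        raise ValueError("missing genotypes for: %s" % sorted(missing))
    return sum(weight * allele_counts[variant]
               for variant, weight in log_odds_weights.items())


# Hypothetical per-allele weights, for illustration only (NOT published estimates)
weights = {
    "ARMS2/HTRA1 rs10490924": 0.5,
    "CFH Y402H": 0.25,
}

# Hypothetical individual: two risk alleles at the first variant, one at the second
genotype = {
    "ARMS2/HTRA1 rs10490924": 2,
    "CFH Y402H": 1,
}

print(polygenic_risk_score(genotype, weights))
```

In practice, such a score would be computed over many more variants and would need validation against longitudinal outcomes before being used to stratify progression risk.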

Use of Biomarkers for iAMD in Clinical Trials

Some of the biomarkers described above have been included in interventional iAMD clinical trials, although in many cases as secondary or exploratory rather than primary endpoints (Table 1). The primary endpoint of the completed interventional LEAD study (NCT01790802) in patients with high-risk iAMD was disease progression, defined using a multimodal imaging endpoint that comprised nGA as well as the traditional late stages of disease and was assessed using OCT, FAF, and CFP imaging. This was the first study to use combined structural endpoints to make early intervention studies in AMD more feasible [48]. In the LEAD study, change in drusen volume [48, 99], LLVA, and microperimetry mean sensitivity were also included as secondary endpoints [99]. A few other interventional studies have included assessment of drusen (area or volume) as an endpoint for patients with iAMD: a completed Phase I trial examining elamipretide (NCT02848313) [165], a completed pilot study of supplement T7082 (NCT04778436), the ongoing Phase II DELPHI trial examining atorvastatin (NCT04735263), and the ongoing Phase II trial of QA108 granules (NCT05562219); results are not yet available. There is also one ongoing study that includes conversion from iRORA to cRORA as an endpoint to evaluate the progression of iAMD (the REVERS trial, NCT05056025) and one assessing development of new iRORA: a Phase II trial of iptacopan (NCT05230537). A further ongoing Phase Ib trial examining ASP7317 includes changes in the ellipsoid zone as an endpoint for patients with either iAMD or GA (NCT03178149).

Studies examining the natural history of iAMD will provide a wealth of information on biomarkers that could be used to assess progression from iAMD to late AMD, and a number of the biomarkers discussed here are included as endpoints in these observational studies. The Duke FEATURE study (NCT01822873; N = 101) examined longitudinal changes in visual function metrics in patients with iAMD over 24 months, including dark adaptation, CCT red, and microperimetry sensitivity [121]. A slow, non-linear functional decline was reported, with a potential structure-function relationship noted among RPD, hyperreflective foci, and dark adaptation [121]. Endpoints in the MACUSTAR study (NCT03349801; N = 718) included structural, functional, and patient-reported outcomes, such as LLVA and microperimetry, measured at 6-month intervals over ≥ 36 months, with results expected to be reported in the coming years [136]. HONU (NCT05300724; planned N = 400) is an ongoing natural history study that will examine the rate of conversion from iAMD to iAMD with nGA and iRORA and then to GA and cRORA. In addition, the BIRC-01 and BIRC-02 observational studies (NCT04469140; N = 450, NCT03688243; N = 225) include assessment of drusen volume as a secondary endpoint, along with changes in choroidal perfusion parameters. The PINNACLE study (NCT04269304; N = 428) is examining patients with iAMD over the course of 1–3 years using a range of OCT, OCTA, and autofluorescence endpoints and will use machine learning to predict disease progression. The longitudinal ALSTAR2 study (NCT04112667; N = 480) is examining patients with either no disease or early AMD over a 3-year period to assess structure-function relationships in aging and early AMD [166]. Finally, the Immuno AMD Study will examine markers of immunosenescence in blood samples, using proteomics to assess biomarker expression in patients at different stages of AMD [167], although further details are yet to be released.

Role of Artificial Intelligence in the Detection and Quantification of iAMD Biomarkers

Emerging AI methods may be used to automatically detect and quantify iAMD biomarkers. A potential advantage of automated detection and quantification of biomarkers is the ability to identify a large number of biomarkers in a cost-effective, rapid throughput manner. This will allow generation of additional evidence and consensus, expedite screening of certain predefined characteristics of AMD, contribute to building a consensus set of biomarkers for iAMD, and assist in the selection of appropriate patients for clinical trials. A number of published methodology papers in the rapidly developing field of AI and iAMD biomarkers are available as well as papers using an increasing number of algorithms to detect specific biomarkers.

One group has recently developed a deep-learning model to classify the presence of iRORA and cRORA in an OCT B-scan [168]. The model predicted the presence of iRORA and cRORA within the entire OCT volume, with area under the receiver-operating characteristic curve (AUROC) performance that was modest for iRORA and high for cRORA (N = 1117 volumes; iRORA, 0.61; cRORA, 0.83) [168]. The OptinNet deep learning model has been trained to identify “points of interest” in SD-OCT scans of patients with AMD; it classified drusen, RPE, retinal nerve fiber, and choroidal layers as of interest in 337 scans of 62 eyes with AMD [169]. Deep learning has also been employed to detect RPD from OCT scans; agreement with human graders was 0.6, versus 0.68 agreement between two human graders [49].

In addition, a deep-learning system has been developed by the AREDS2 Deep Learning Research Group to classify AMD according to the presence of RPD, GA, and pigmentary abnormalities [170]. Similarly, CFP images from the AREDS study (N = 4139 participants) have been used to build an automated AMD stage classification model (“iPredict-AMD”), which achieved 99.2% accuracy [171]. Multimodal deep learning approaches have also been used to combine OCTA and structural OCT data to predict AMD biomarkers with resulting accuracy of up to 90% [172].

Machine learning has also been used to predict conversion from iAMD to late AMD [31]. In one study, the most critical quantifiable features for progression were outer retinal thickness, hyperreflective foci, and drusen area. For GA specifically, the model had an AUROC performance of 0.80 when differentiating between converting and non-converting eyes over 24 months [31]. A machine-learning approach has also been used to generate a risk-stratified classification for AMD progression in patients with iAMD based on OCT data and demographic variables [173]. In one study that compared predictive models for progression to late AMD based on manual CFP grading and/or automated OCT imaging analysis, the AUROC for each individual model was similar (0.80 and 0.82, respectively) [174]. However, the combined model was not notably superior to either imaging model alone (AUROC of 0.85), suggesting that the AI method used in this study was not significantly better at predicting progression than traditional manual CFP grading [174]. Finally, one deep learning model that is still under development had AUROCs of 0.945 and 0.937 for predicting progression from iAMD to GA in the current and following year, respectively, based on qualitative and quantitative SD-OCT features [175]. AI models are improving rapidly, and the hope is that in time they will provide better prediction of AMD progression to vision-threatening disease than clinician assessment alone is currently able to provide.
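Because AUROC is the main metric quoted for the models above, a minimal sketch of what it measures may be helpful. The AUROC equals the probability that a randomly chosen converting eye receives a higher predicted risk than a randomly chosen non-converting eye, with ties counted as one half. The function name and the toy scores below are hypothetical and are not data from any cited study.

```python
def auroc(scores, labels):
    """Rank-based AUROC for binary labels (1 = converted, 0 = did not convert).

    Computed as the fraction of (positive, negative) pairs in which the
    positive case has the higher score; tied pairs count as 0.5.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical predicted risks for six eyes (illustration only)
predicted_risk = [0.9, 0.8, 0.35, 0.6, 0.2, 0.1]
converted = [1, 1, 1, 0, 0, 0]

print(round(auroc(predicted_risk, converted), 3))
```

An AUROC of 0.5 corresponds to chance-level ranking and 1.0 to perfect separation, so values such as 0.80 to 0.85 indicate useful but imperfect discrimination, and small differences between models may not be meaningful without confidence intervals.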

Challenges of Using AI

If AI models are able to rapidly and accurately identify key biomarkers under investigation in AMD, this could speed up evidence generation and assist in validation of these biomarkers. Such classification methods could expedite screening of patients with AMD with certain predefined criteria. However, much more needs to be achieved before they can be widely implemented. Foremost among issues to be addressed are a lack of standardization in imaging protocols and competing methodologies as well as limited available datasets for testing machine-learning methods. This currently leads to low external validity and reproducibility and inconsistency in reporting metrics. In addition, large imaging datasets often lack the required metadata, such as demographic data or inclusion criteria, for the development of reliable, generalizable models [176].

In 2021, the American Academy of Ophthalmology in partnership with the National Eye Institute produced a set of recommendations to standardize imaging datasets, recommending that machine-readable, discrete data should be provided and that lossless compression of pixel/voxel data be used to encode raw data [177]. In addition to this, in 2022 the Collaborative Community on Ophthalmic Imaging released guidance on the foundational considerations for use of AI for retinal images in ophthalmology, emphasizing the importance of nonmaleficence, autonomy, and equity in the design, validation, and implementation of AI systems [178]. However, until there is implementation of these consensus guidelines on imaging protocols, as well as regulatory approval to be used outside a research setting, using AI effectively in clinical trials and ophthalmology in general will remain challenging.

Conclusions

The investigation of biomarkers to predict and monitor iAMD progression to vision-threatening late-stage disease is an exciting area of intense research. There is a potential to greatly improve our ability to research earlier stages of AMD and improve patient outcomes in a disease that is ever increasing in prevalence. However, further work is needed to establish the most promising biomarkers and their use in clinical care and future trials. Central to the discussion of biomarkers for AMD is the need both for markers that predict and can be used to follow disease progression itself and for markers that might be used as endpoints in interventional clinical trials. Ongoing work to identify and validate biomarkers that indicate risk of disease progression, as well as biomarkers that might act as early surrogate endpoints in clinical trials, will improve our ability to assess, counsel, and track the patients who are at greatest risk of progressing from iAMD to late-stage AMD.

Among the many biomarkers currently being evaluated or developed for use in detecting iAMD progression, structural biomarkers such as drusen volume, pigmentary abnormalities, and early signs of atrophy have the most supporting evidence and are already proving useful as clinical trial endpoints. Some emergent biomarkers merit further investigation and development with the ultimate goal of being able to predict and measure progression in AMD. The inclusion of functional markers will also be critical as we move towards identifying interventions that appear to slow progression of disease and seek regulatory approval. These need to include parameters other than BCVA, with changes in perimetry, dark adaptation, and contrast sensitivity appearing to be the most promising early parameters at present. Retinal sensitivity assessed through microperimetry is considered one of the most reliable biomarkers of topographic retinal function in iAMD but is not currently used in regular clinical practice and requires expertise to administer; though informative, at present it would not alter disease management. More work is needed to establish the precise time periods over which changes in these biomarkers can be accurately and reproducibly measured and to find the most pragmatic approach to measuring functional changes in large clinical trials.

Genetic biomarkers may be useful for stratifying patients into different risk categories. Further work is required to identify serum biomarkers that could be used to predict risk of disease or to detect progression. The idea of combining risk factor development as endpoints in trials is being explored, as is the potential role of AI in supporting these endeavors.

Critically, a continued dialogue with regulatory authorities on the establishment of clinical trial endpoints must be maintained to enable the design of earlier interventional trials so that potential treatments can be tested in a timely and cost-effective manner. In addition to the work on structural and functional biomarkers, the development of better PROs will support future regulatory approval and therefore is pivotal to the future of research in AMD.

Although many unanswered questions remain, new developments in the rapidly progressing fields of imaging, functional testing, AI, genetics, and serum biomarker assessments are likely to yield future opportunities that are currently impossible to predict. A multimodal, multifactorial approach may ultimately yield the most accurate means of monitoring and predicting progression towards vision-threatening, late-stage AMD.

Data Availability

Data sharing is not applicable to this article as no datasets were generated or analyzed during the current study.

References

Wong WL, Su X, Li X, et al. Global prevalence of age-related macular degeneration and disease burden projection for 2020 and 2040: a systematic review and meta-analysis. Lancet Glob Health. 2014;2(2):e106–16.

Lim LS, Mitchell P, Seddon JM, Holz FG, Wong TY. Age-related macular degeneration. Lancet. 2012;379(9827):1728–38.

Ambati J, Atkinson JP, Gelfand BD. Immunology of age-related macular degeneration. Nat Rev Immunol. 2013;13(6):438–51.

Sivaprasad S, Chong NV. The complement system and age-related macular degeneration. Eye. 2006;20(8):867–72.

Pennington KL, DeAngelis MM. Epidemiology of age-related macular degeneration (AMD): associations with cardiovascular disease phenotypes and lipid factors. Eye Vis (Lond). 2016;3:34.

Keenan TDL, Cukras CA, Chew EY. Age-related macular degeneration: epidemiology and clinical aspects. Adv Exp Med Biol. 2021;1256:1–31.

Chakravarthy U, Wong TY, Fletcher A, et al. Clinical risk factors for age-related macular degeneration: a systematic review and meta-analysis. BMC Ophthalmol. 2010;10(1):31.

García-Layana A, Cabrera-López F, García-Arumí J, Arias-Barquet L, Ruiz-Moreno JM. Early and intermediate age-related macular degeneration: update and clinical review. Clin Interv Aging. 2017;12:1579–87.

Ferris FL 3rd, Wilkinson CP, Bird A, et al. Clinical classification of age-related macular degeneration. Ophthalmology. 2013;120(4):844–51.

Li JQ, Welchowski T, Schmid M, Mauschitz MM, Holz FG, Finger RP. Prevalence and incidence of age-related macular degeneration in Europe: a systematic review and meta-analysis. Br J Ophthalmol. 2020;104(8):1077–84.

Age-Related Eye Disease Study Research Group. A randomized, placebo-controlled, clinical trial of high-dose supplementation with vitamins C and E, beta carotene, and zinc for age-related macular degeneration and vision loss: AREDS report no 8. Arch Ophthalmol. 2001;119(10):1417–36.

Flores R, Carneiro Â, Tenreiro S, Seabra MC. Retinal progression biomarkers of early and intermediate age-related macular degeneration. Life. 2022;12(1):36.

Chakravarthy U, Bailey CC, Scanlon PH, et al. Progression from early/intermediate to advanced forms of age-related macular degeneration in a large UK cohort: rates and risk factors. Ophthalmol Retina. 2020;4(7):662–72.

Chew EY, Clemons TE, Agrón E, et al. Ten-year follow-up of age-related macular degeneration in the Age-Related Eye Disease Study: AREDS report no 36. JAMA Ophthalmol. 2014;132(3):272–7.

Krishnadev N, Meleth AD, Chew EY. Nutritional supplements for age-related macular degeneration. Curr Opin Ophthalmol. 2010;21(3):184–9.

Schaal KB, Rosenfeld PJ, Gregori G, Yehoshua Z, Feuer WJ. Anatomic clinical trial endpoints for nonexudative age-related macular degeneration. Ophthalmology. 2016;123(5):1060–79.

Csaky K, Ferris F III, Chew EY, Nair P, Cheetham JK, Duncan JL. Report from the NEI/FDA endpoints workshop on age-related macular degeneration and inherited retinal diseases. Invest Ophthalmol Vis Sci. 2017;58(9):3456–63.

Klein R, Myers CE, Lee KE, et al. Small drusen and age-related macular degeneration: the Beaver Dam Eye Study. J Clin Med. 2015;4(3):425–40.

Brader HS, Ying GS, Martin ER, Maguire MG. Characteristics of incident geographic atrophy in the complications of age-related macular degeneration prevention trial. Ophthalmology. 2013;120(9):1871–9.

Veerappan M, El-Hage-Sleiman AM, Tai V, et al. Optical coherence tomography reflective drusen substructures predict progression to geographic atrophy in age-related macular degeneration. Ophthalmology. 2016;123(12):2554–70.

Waldstein SM, Vogl WD, Bogunovic H, Sadeghipour A, Riedl S, Schmidt-Erfurth U. Characterization of drusen and hyperreflective foci as biomarkers for disease progression in age-related macular degeneration using artificial intelligence in optical coherence tomography. JAMA Ophthalmol. 2020;138(7):740–7.

Thiele S, Nadal J, Pfau M, et al. Prognostic value of intermediate age-related macular degeneration phenotypes for geographic atrophy progression. Br J Ophthalmol. 2021;105(2):239.

Ferris FL, Davis MD, Clemons TE, et al. A simplified severity scale for age-related macular degeneration: AREDS report no 18. Arch Ophthalmol. 2005;123(11):1570–4.

Khan KN, Mahroo OA, Khan RS, et al. Differentiating drusen: drusen and drusen-like appearances associated with ageing, age-related macular degeneration, inherited eye disease and other pathological processes. Prog Retin Eye Res. 2016;53:70–106.

Fragiotta S, Fernández-Avellaneda P, Breazzano MP, Scuderi G. Clinical manifestations of cuticular drusen: current perspectives. Clin Ophthalmol. 2021;15:3877–87.

Sakurada Y, Parikh R, Gal-Or O, et al. Cuticular drusen: risk of geographic atrophy and macular neovascularization. Retina. 2020;40(2):257–65.

Abdelfattah NS, Zhang H, Boyer DS, et al. Drusen volume as a predictor of disease progression in patients with late age-related macular degeneration in the fellow eye. Invest Ophthalmol Vis Sci. 2016;57(4):1839–46.

Folgar FA, Yuan EL, Sevilla MB, et al. Drusen volume and retinal pigment epithelium abnormal thinning volume predict 2-year progression of age-related macular degeneration. Ophthalmology. 2016;123(1):39-50.e1.

Domalpally A, Xing B, Pak JW, et al. Extramacular drusen and progression of age-related macular degeneration: age related eye disease study 2 report 30. Ophthalmol Retina. 2023;7(2):111–7.

Nathoo NA, Or C, Young M, et al. Optical coherence tomography-based measurement of drusen load predicts development of advanced age-related macular degeneration. Am J Ophthalmol. 2014;158(4):757-61.e1.

Schmidt-Erfurth U, Waldstein SM, Klimscha S, et al. Prediction of individual disease conversion in early AMD using artificial intelligence. Invest Ophthalmol Vis Sci. 2018;59(8):3199–208.

Yang S, Gao Z, Qiu H, et al. Low-reflectivity drusen with overlying RPE damage revealed by spectral-domain OCT: hint for the development of age-related macular degeneration. Front Med. 2021;8:706502.

Goh KL, Abbott CJ, Hadoux X, et al. Hyporeflective cores within drusen: association with progression of age-related macular degeneration and impact on visual sensitivity. Ophthalmol Retina. 2022;6(4):284–90.

Nittala MG, Corvi F, Maram J, et al. Risk factors for progression of age-related macular degeneration: population-based Amish eye study. J Clin Med. 2022;11(17):5110.

Amarasekera S, Samanta A, Jhingan M, et al. Optical coherence tomography predictors of progression of non-exudative age-related macular degeneration to advanced atrophic and exudative disease. Graefes Arch Clin Exp Ophthalmol. 2022;260(3):737–46.

Bressler NM, Munoz B, Maguire MG, et al. Five-year incidence and disappearance of drusen and retinal pigment epithelial abnormalities: Waterman study. Arch Ophthalmol. 1995;113(3):301–8.

Zeng R, Garg I, Miller JB. Complete resolution of central soft drusen without geographic atrophy or choroidal neovascularization. J Clin Med. 2022;11(6):1637.

Schlanitz FG, Baumann B, Kundi M, et al. Drusen volume development over time and its relevance to the course of age-related macular degeneration. Br J Ophthalmol. 2017;101(2):198–203.

Zweifel SA, Spaide RF, Curcio CA, Malek G, Imamura Y. Reticular pseudodrusen are subretinal drusenoid deposits. Ophthalmology. 2010;117(2):303-12.e1.

Wightman AJ, Guymer RH. Reticular pseudodrusen: current understanding. Clin Exp Optom. 2019;102(5):455–62.

Wu Z, Fletcher EL, Kumar H, Greferath U, Guymer RH. Reticular pseudodrusen: a critical phenotype in age-related macular degeneration. Prog Retin Eye Res. 2022;88:101017.

Finger RP, Chong E, McGuinness MB, et al. Reticular pseudodrusen and their association with age-related macular degeneration: the Melbourne Collaborative Cohort Study. Ophthalmology. 2016;123(3):599–608.

Finger RP, Wu Z, Luu CD, et al. Reticular pseudodrusen: a risk factor for geographic atrophy in fellow eyes of individuals with unilateral choroidal neovascularization. Ophthalmology. 2014;121(6):1252–6.

Zhou Q, Daniel E, Maguire MG, et al. Pseudodrusen and incidence of late age-related macular degeneration in fellow eyes in the comparison of age-related macular degeneration treatments trials. Ophthalmology. 2016;123(7):1530–40.

Agrón E, Domalpally A, Cukras CA, et al. Reticular pseudodrusen: the third macular risk feature for progression to late age-related macular degeneration: Age-Related Eye Disease Study 2 Report 30. Ophthalmology. 2022;129(10):1107–19.

Agrón E, Domalpally A, Cukras CA, et al. Reticular pseudodrusen status, ARMS2/HTRA1 genotype, and geographic atrophy enlargement: age-related eye disease study 2 report 32. Ophthalmology. 2022. https://doi.org/10.1016/j.ophtha.2022.11.026.

Marsiglia M, Boddu S, Bearelly S, et al. Association between geographic atrophy progression and reticular pseudodrusen in eyes with dry age-related macular degeneration. Invest Ophthalmol Vis Sci. 2013;54(12):7362–9.

Guymer RH, Wu Z, Hodgson LAB, et al. Subthreshold nanosecond laser intervention in age-related macular degeneration: the LEAD randomized controlled clinical trial. Ophthalmology. 2019;126(6):829–38.

Schwartz R, Khalid H, Liakopoulos S, et al. A deep learning framework for the detection and quantification of reticular pseudodrusen and drusen on optical coherence tomography. Transl Vis Sci Technol. 2022;11(12):3.

Wang JJ, Foran S, Smith W, Mitchell P. Risk of age-related macular degeneration in eyes with macular drusen or hyperpigmentation: the Blue Mountains Eye Study Cohort. Arch Ophthalmol. 2003;121(5):658–63.

Davis MD, Gangnon RE, Lee LY, et al. The Age-Related Eye Disease Study severity scale for age-related macular degeneration: AREDS report no 17. Arch Ophthalmol. 2005;123(11):1484–98.

Klein ML, Ferris FL 3rd, Armstrong J, et al. Retinal precursors and the development of geographic atrophy in age-related macular degeneration. Ophthalmology. 2008;115(6):1026–31.

Khanifar AA, Koreishi AF, Izatt JA, Toth CA. Drusen ultrastructure imaging with spectral domain optical coherence tomography in age-related macular degeneration. Ophthalmology. 2008;115(11):1883–90.

Fragiotta S, Abdolrahimzadeh S, Dolz-Marco R, Sakurada Y, Gal-Or O, Scuderi G. Significance of hyperreflective foci as an optical coherence tomography biomarker in retinal diseases: characterization and clinical implications. J Ophthalmol. 2021;2021:6096017.

Augustin S, Lam M, Lavalette S, et al. Melanophages give rise to hyperreflective foci in AMD, a disease-progression marker. J Neuroinflammation. 2023;20(1):28.

Folgar FA, Chow JH, Farsiu S, et al. Spatial correlation between hyperpigmentary changes on color fundus photography and hyperreflective foci on SDOCT in intermediate AMD. Invest Ophthalmol Vis Sci. 2012;53(8):4626–33.

Nassisi M, Fan W, Shi Y, et al. Quantity of intraretinal hyperreflective foci in patients with intermediate age-related macular degeneration correlates with 1-year progression. Invest Ophthalmol Vis Sci. 2018;59(8):3431–9.

Christenbury JG, Folgar FA, O’Connell RV, Chiu SJ, Farsiu S, Toth CA. Progression of intermediate age-related macular degeneration with proliferation and inner retinal migration of hyperreflective foci. Ophthalmology. 2013;120(5):1038–45.

Vogl WD, Riedl S, Mai J, et al. Predicting topographic disease progression and treatment response of pegcetacoplan in geographic atrophy quantified by deep learning. Ophthalmol Retina. 2023;7(1):4–13.

Sacconi R, Sarraf D, Garrity S, et al. Nascent type 3 neovascularization in age-related macular degeneration. Ophthalmol Retina. 2018;2(11):1097–106.

Wakatsuki Y, Trejo Corona S, Marion K, Wykoff CC, Sadda SR. Relevance of intraretinal hyperreflective foci and subretinal drusenoid deposits in type 3 macular neovascularization. Invest Ophthalmol Vis Sci. 2023;64(8):2162.

Kikushima W, Sakurada Y, Sugiyama A, et al. Characteristics of intermediate age-related macular degeneration with hyperreflective foci. Sci Rep. 2022;12(1):18420.

Sadda SR, Guymer R, Holz FG, et al. Consensus definition for atrophy associated with age-related macular degeneration on OCT: classification of atrophy report 3. Ophthalmology. 2018;125(4):537–48.

Guymer RH, Rosenfeld PJ, Curcio CA, et al. Incomplete retinal pigment epithelial and outer retinal atrophy in age-related macular degeneration: classification of atrophy meeting report 4. Ophthalmology. 2020;127(3):394–409.

Wu Z, Luu CD, Hodgson LAB, et al. Prospective longitudinal evaluation of nascent geographic atrophy in age-related macular degeneration. Ophthalmol Retina. 2020;4(6):568–75.

Wu Z, Luu CD, Ayton LN, et al. Optical coherence tomography-defined changes preceding the development of drusen-associated atrophy in age-related macular degeneration. Ophthalmology. 2014;121(12):2415–22.

Wu Z, Goh KL, Hodgson LAB, Guymer RH. Incomplete retinal pigment epithelial and outer retinal atrophy: longitudinal evaluation in age-related macular degeneration. Ophthalmology. 2023;130(2):205–12.

Corradetti G, Corvi F, Nittala MG, et al. Natural history of incomplete retinal pigment epithelial and outer retinal atrophy in age-related macular degeneration. Can J Ophthalmol. 2021;56(5):325–34.

Wu Z, Pfau M, Blodi BA, et al. OCT signs of early atrophy in age-related macular degeneration: interreader agreement: classification of atrophy meetings report 6. Ophthalmol Retina. 2022;6(1):4–14.

Laiginhas R, Yang J, Rosenfeld PJ, Falcão M. Nonexudative macular neovascularization: a systematic review of prevalence, natural history, and recent insights from OCT angiography. Ophthalmol Retina. 2020;4(7):651–61.

de Oliveira Dias JR, Zhang Q, Garcia JMB, et al. Natural history of subclinical neovascularization in nonexudative age-related macular degeneration using swept-source OCT angiography. Ophthalmology. 2018;125(2):255–66.

Thottarath S, Chandra S, Gurudas S, et al. Study protocol on prevalence of non-exudative macular neovascularisation and its contribution to prediction of exudation in fellow eyes with unilateral exudative AMD (EYE-NEON). Eye (Lond). 2023. https://doi.org/10.1038/s41433-023-02460-9.

Narita C, Wu Z, Rosenfeld PJ, et al. Structural OCT signs suggestive of subclinical nonexudative macular neovascularization in eyes with large drusen. Ophthalmology. 2020;127(5):637–47.

Guo Y, Wu J, Zheng X, Yin C, Wu Z. The first-year variation in central retinal thickness predicts legal blindness in patients with neovascular age-related macular degeneration. Ophthalmic Res. 2022;66(1):406–12.

Willoughby AS, Ying GS, Toth CA, et al. Subretinal hyperreflective material in the comparison of age-related macular degeneration treatments trials. Ophthalmology. 2015;122(9):1846–53.e5.

Gin TJ, Wu Z, Chew SKH, Guymer RH, Luu CD. Quantitative analysis of the ellipsoid zone intensity in phenotypic variations of intermediate age-related macular degeneration. Invest Ophthalmol Vis Sci. 2017;58(4):2079–86.

Staurenghi G, Sadda S, Chakravarthy U, Spaide RF. Proposed lexicon for anatomic landmarks in normal posterior segment spectral-domain optical coherence tomography: the IN OCT consensus. Ophthalmology. 2014;121(8):1572–8.

Tao LW, Wu Z, Guymer RH, Luu CD. Ellipsoid zone on optical coherence tomography: a review. Clin Exp Ophthalmol. 2016;44(5):422–30.

Borrelli E, Sacconi R, Zuccaro B, et al. Photoreceptor alteration in intermediate age-related macular degeneration. Sci Rep. 2020;10(1):21036.

Toprak I, Yaylalı V, Yildirim C. Early deterioration in ellipsoid zone in eyes with non-neovascular age-related macular degeneration. Int Ophthalmol. 2017;37(4):801–6.

Thiele S, Wu Z, Isselmann B, Pfau M, Guymer RH, Luu CD. Natural history of the relative ellipsoid zone reflectivity in age-related macular degeneration. Ophthalmol Retina. 2022;6(12):1165–72.

Laiginhas R, Shi Y, Shen M, et al. Persistent hypertransmission defects detected on en face swept source optical computed tomography images predict the formation of geographic atrophy in age-related macular degeneration. Am J Ophthalmol. 2022;237:58–70.

Liu J, Laiginhas R, Corvi F, et al. Diagnosing persistent hypertransmission defects on en face OCT imaging of age-related macular degeneration. Ophthalmol Retina. 2022;6(5):387–97.

Liu J, Shen M, Laiginhas R, et al. Onset and progression of persistent choroidal hypertransmission defects in intermediate age-related macular degeneration: a novel clinical trial endpoint. Am J Ophthalmol. 2023;254:11–22.

Hirano M, Muraoka Y, Kogo T, et al. Analysis of widefield choroidal thickness maps of healthy eyes using swept source optical coherence tomography. Sci Rep. 2023;13(1):11904.

Capuano V, Souied EH, Miere A, Jung C, Costanzo E, Querques G. Choroidal maps in non-exudative age-related macular degeneration. Br J Ophthalmol. 2016;100(5):677.

Amato A, Arrigo A, Borghesan F, et al. Baseline Sattler layer-choriocapillaris complex thickness cutoffs associated with age-related macular degeneration progression. Retina. 2022;42(9):1683–92.

Chen RC, Palestine AG, Lynch AM, et al. Increased systemic C-reactive protein is associated with choroidal thinning in intermediate age-related macular degeneration. Transl Vis Sci Technol. 2021;10(12):7.

Hou KK, Au A, Kashani AH, Freund KB, Sadda SR, Sarraf D. Pseudoflow with OCT angiography in eyes with hard exudates and macular drusen. Transl Vis Sci Technol. 2019;8(3):50.

Boyer DS, Schmidt-Erfurth U, van Lookeren Campagne M, Henry EC, Brittain C. The pathophysiology of geographic atrophy secondary to age-related macular degeneration and the complement pathway as a therapeutic target. Retina. 2017;37(5):819–35.

Camino A, Guo Y, You Q, et al. Detecting and measuring areas of choriocapillaris low perfusion in intermediate, non-neovascular age-related macular degeneration. Neurophotonics. 2019;6(4):041108.

Tiosano L, Corradetti G, Sadda SR. Progression of choriocapillaris flow deficits in clinically stable intermediate age-related macular degeneration. Eye (Lond). 2021;35(11):2991–8.

Corradetti G, Tiosano L, Nassisi M, et al. Scotopic microperimetric sensitivity and inner choroid flow deficits as predictors of progression to nascent geographic atrophy. Br J Ophthalmol. 2021;105(11):1584–90.

Corvi F, Corradetti G, Tiosano L, McLaughlin JA, Lee TK, Sadda SR. Topography of choriocapillaris flow deficit predicts development of neovascularization or atrophy in age-related macular degeneration. Graefes Arch Clin Exp Ophthalmol. 2021;259(10):2887–95.

Corvi F, Tiosano L, Corradetti G, et al. Choriocapillaris flow deficits as a risk factor for progression of age-related macular degeneration. Retina. 2021;41(4):686–93.

Nassisi M, Tepelus T, Nittala MG, Sadda SR. Choriocapillaris flow impairment predicts the development and enlargement of drusen. Graefes Arch Clin Exp Ophthalmol. 2019;257(10):2079–85.

Borrelli E, Uji A, Sarraf D, Sadda SR. Alterations in the choriocapillaris in intermediate age-related macular degeneration. Invest Ophthalmol Vis Sci. 2017;58(11):4792–8.

Scarinci F, Varano M, Parravano M. Retinal sensitivity loss correlates with deep capillary plexus impairment in diabetic macular ischemia. J Ophthalmol. 2019;2019:7589841.

Wu Z, Luu CD, Hodgson LAB, et al. Secondary and exploratory outcomes of the subthreshold nanosecond laser intervention randomized trial in age-related macular degeneration: a LEAD study report. Ophthalmol Retina. 2019;3(12):1026–34.

Dunbar HMP, Behning C, Abdirahman A, et al. Repeatability and discriminatory power of chart-based visual function tests in individuals with age-related macular degeneration: a MACUSTAR study report. JAMA Ophthalmol. 2022;140(8):780–9.

Chandramohan A, Stinnett SS, Petrowski JT, et al. Visual function measures in early and intermediate age-related macular degeneration. Retina. 2016;36(5):1021–31.

Hsu ST, Thompson AC, Stinnett SS, et al. Longitudinal study of visual function in dry age-related macular degeneration at 12 months. Ophthalmol Retina. 2019;3(8):637–48.

Do DV, Nguyen QD, Mostafa SH, Bressler SB. Evaluation of visual acuity measurements with standard luminance compared with low luminance in eyes with geographic atrophy from age-related macular degeneration. Invest Ophthalmol Vis Sci. 2015;56:2821.

Brodnar Z, Shields AR, Dobrota S, Do DV. Low luminance deficits in retinal disease. Invest Ophthalmol Vis Sci. 2018;59:3424.

Wu Z, Luu CD, Hodgson LAB, et al. Using microperimetry and low-luminance visual acuity to detect the onset of late age-related macular degeneration: a LEAD study report. Retina. 2021;41(5):1094–101.

Wu Z, Luu CD, Hodgson LA, et al. Examining the added value of microperimetry and low luminance deficit for predicting progression in age-related macular degeneration. Br J Ophthalmol. 2021;105(5):711–5.

Laishram M, Srikanth K, Rajalakshmi AR, Nagarajan S, Ezhumalai G. Microperimetry: a new tool for assessing retinal sensitivity in macular diseases. J Clin Diagn Res. 2017;11(7):08–11.

Yang Y, Dunbar H. Clinical perspectives and trends: microperimetry as a trial endpoint in retinal disease. Ophthalmologica. 2021;244(5):418–50.

Dinc UA, Yenerel M, Gorgun E, Oncel M. Assessment of macular function by microperimetry in intermediate age-related macular degeneration. Eur J Ophthalmol. 2008;18(4):595–600.

Vujosevic S, Pucci P, Casciano M, et al. Long-term longitudinal modifications in mesopic microperimetry in early and intermediate age-related macular degeneration. Graefes Arch Clin Exp Ophthalmol. 2017;255(2):301–9.

Welker SG, Pfau M, Heinemann M, Schmitz-Valckenberg S, Holz FG, Finger RP. Retest reliability of mesopic and dark-adapted microperimetry in patients with intermediate age-related macular degeneration and age-matched controls. Invest Ophthalmol Vis Sci. 2018;59(4):152–9.

Montesano G, Ometto G, Higgins BE, et al. Structure-function analysis in macular drusen with mesopic and scotopic microperimetry. Transl Vis Sci Technol. 2020;9(13):43.

Tepelus TC, Hariri AH, Al-Sheikh M, Sadda SR. Correlation between mesopic retinal sensitivity and optical coherence tomographic metrics of the outer retina in patients with non-atrophic dry age-related macular degeneration. Ophthalmic Surg Lasers Imaging Retina. 2017;48(4):312–8.

Steinberg JS, Saßmannshausen M, Pfau M, et al. Evaluation of two systems for fundus-controlled scotopic and mesopic perimetry in eye with age-related macular degeneration. Transl Vis Sci Technol. 2017;6(4):7.

Iwama D, Tsujikawa A, Ojima Y, et al. Relationship between retinal sensitivity and morphologic changes in eyes with confluent soft drusen. Clin Exp Ophthalmol. 2010;38(5):483–8.

Fragiotta S, Costanzo E, Viggiano P, et al. Functional correlates of outer retina remodeling in intermediate age-related macular degeneration using microperimetry. Invest Ophthalmol Vis Sci. 2022;63(3):16.

Nebbioso M, Barbato A, Pescosolido N. Scotopic microperimetry in the early diagnosis of age-related macular degeneration: preliminary study. Biomed Res Int. 2014;2014:671529.

Steinberg JS, Fitzke FW, Fimmers R, Fleckenstein M, Holz FG, Schmitz-Valckenberg S. Scotopic and photopic microperimetry in patients with reticular drusen and age-related macular degeneration. JAMA Ophthalmol. 2015;133(6):690–7.

Reuter T. Fifty years of dark adaptation 1961–2011. Vision Res. 2011;51(21):2243–62.

Hecht S, Haig C, Chase AM. The influence of light adaptation on subsequent dark adaptation of the eye. J Gen Physiol. 1937;20(6):831–50.

Lad EM, Fang V, Tessier M, et al. Longitudinal evaluation of visual function impairments in early and intermediate age-related macular degeneration patients. Ophthalmol Sci. 2022;2(3):100173.

Luu CD, Tan R, Caruso E, Fletcher EL, Lamb TD, Guymer RH. Topographic rod recovery profiles after a prolonged dark adaptation in subjects with reticular pseudodrusen. Ophthalmol Retina. 2018;2(12):1206–17.

Tan R, Guymer RH, Luu CD. Subretinal drusenoid deposits and the loss of rod function in intermediate age-related macular degeneration. Invest Ophthalmol Vis Sci. 2018;59(10):4154–61.

Owsley C, McGwin G Jr, Clark ME, et al. Delayed rod-mediated dark adaptation is a functional biomarker for incident early age-related macular degeneration. Ophthalmology. 2016;123(2):344–51.

Tan RS, Guymer RH, Aung KZ, Caruso E, Luu CD. Longitudinal assessment of rod function in intermediate age-related macular degeneration with and without reticular pseudodrusen. Invest Ophthalmol Vis Sci. 2019;60(5):1511–8.

Ridder WH, Comer G, Oquindo C, Yoshinaga P, Engles M, Burke J. Contrast sensitivity in early to intermediate age-related macular degeneration (AMD). Curr Eye Res. 2022;47(2):287–96.

Maynard ML, Zele AJ, Feigl B. Mesopic Pelli-Robson contrast sensitivity and MP-1 microperimetry in healthy ageing and age-related macular degeneration. Acta Ophthalmol. 2016;94(8):e772–8.

Patel PJ, Chen FK, Rubin GS, Tufail A. Intersession repeatability of contrast sensitivity scores in age-related macular degeneration. Invest Ophthalmol Vis Sci. 2009;50(6):2621–5.

Rabin J, Gooch J, Ivan D. Rapid quantification of color vision: the cone contrast test. Invest Ophthalmol Vis Sci. 2011;52(2):816–20.

Ou WC, Lesmes LA, Christie AH, Denlar RA, Csaky KG. Normal and low-luminance automated quantitative contrast sensitivity assessment in eyes with age-related macular degeneration. Am J Ophthalmol. 2021;226:148–55.

Finger RP, Schmitz-Valckenberg S, Schmid M, et al. MACUSTAR: development and clinical validation of functional, structural, and patient-reported endpoints in intermediate age-related macular degeneration. Ophthalmologica. 2019;241(2):61–72.

Mangione CM, Lee PP, Gutierrez PR, Spritzer K, Berry S, Hays RD. Development of the 25-item National Eye Institute Visual Function Questionnaire. Arch Ophthalmol. 2001;119(7):1050–8.

Kimel M, Leidy NK, Tschosik E, et al. Functional Reading Independence (FRI) index: a new patient-reported outcome measure for patients with geographic atrophy. Invest Ophthalmol Vis Sci. 2016;57(14):6298–304.

Goldstein JE, Fenwick E, Finger RP, et al. Calibrating the Impact of Vision Impairment (IVI): creation of a sample-independent visual function measure for patient-centered outcomes research. Transl Vis Sci Technol. 2018;7(6):38.

McGuinness MB, Finger RP, Wu Z, et al. Properties of the impact of vision impairment and night vision questionnaires among people with intermediate age-related macular degeneration. Transl Vis Sci Technol. 2019;8(5):3.

Terheyden JH, Holz FG, Schmitz-Valckenberg S, et al. Clinical study protocol for a low-interventional study in intermediate age-related macular degeneration developing novel clinical endpoints for interventional clinical trials with a regulatory and patient access intention-MACUSTAR. Trials. 2020;21(1):659.