Abstract

We present detailed derivations of the Schwinger parametrization and the Feynman parametrization, together with their elementary applications. Although the parametrizations are essential to computing the loop integrals arising in relativistic quantum field theory, their detailed derivations are not presented in standard textbooks. Beginning with an integral representation of unity, we derive the Schwinger parametrization by taking multiple partial derivatives and utilizing the analyticity of the gamma function. The Feynman parametrization is derived by partial-fraction decomposition and a change of variables that introduces an additional delta function. Through extensive use of the analyticity of complex functions, we show the equivalence of the two parametrizations. As applications, we consider the combinatorial factor arising in the Feynman-parameter integral and the multivariate beta function. The combinatorial factor corresponds to an elementary integral embedded in the time-ordered product of the Dyson series in time-dependent perturbation theory. We believe that the derivation presented here is a good pedagogical example through which students can deepen their understanding of complex variables and practice using the Dirac delta function in coordinate transformations.

1 Introduction

Understanding nature with a minimal number of guiding principles is one of the goals of science. Among the innumerable theories in science, quantum electrodynamics [1] is the most accurately tested physical theory ever constructed. The eight-digit agreement between theory and experiment for the anomalous magnetic dipole moment of the electron, first computed by Schwinger [2], and for the Rydberg constant (see Aoyama et al. [3] for a review) relies on only a single parameter, the fine-structure constant \(\alpha\). This unprecedented success of quantum field theory was made possible by the development of a rigorous quantitative approximation scheme called perturbation theory, which expands a physical observable in a power series in the small parameter \(\alpha ={\text{e}}^2/(4\pi \varepsilon _0 \hbar c)\). Here, the coupling constant e is the elementary electric charge representing the strength of the electron–photon interaction, \(\varepsilon _0\) is the vacuum permittivity, \(\hbar\) is the reduced Planck constant, and c is the speed of light in vacuum.

In the perturbative expansion of a physical amplitude involving relativistic particles, an external state, which is directly observable, is on its mass shell: the squared four-momentum \(p^2\) satisfies \(p^2=(E/c)^2-{\varvec{p}}^2=m^2c^2\), where E and \({\varvec{p}}\) are the energy and three-momentum of the particle of rest mass m. In that amplitude, a virtual state of a field may also arise as a propagator. A virtual state is off its mass shell: \(p^2=(E/c)^2-{\varvec{p}}^2\ne m^2c^2\). The wave propagation of the virtual state is described by the Feynman propagator [4], which is Green’s function [5] of the equation of motion for the field. The Feynman propagator can be compactly expressed as a Fourier transform in momentum space, that is, as a linear combination of free-field waves with the multiplicative factor \(i/(d+i\varepsilon )\), where \(d=p^2-m^2c^2\). Note that we have suppressed the numerator factor that depends on the spin of the particle. Here, \(+i\varepsilon\) is a purely imaginary number with an infinitesimally small positive \(\varepsilon \rightarrow 0^+\). This additional piece in the propagator denominator enforces the causality boundary condition of Green’s function, which is required by Huygens’ principle of wave propagation for a relativistic particle. A formal description of the propagator theory can be found, for example, in chapter 6 of Bjorken and Drell [6].

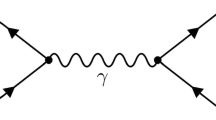

The Feynman diagram is a convenient representation of the perturbative series of an amplitude involving quantum fields. As the order in the perturbative parameter increases, the number of vertices and the number of propagators increase simultaneously. Each vertex carries a suppression factor of the coupling such as e. With the external particles kept fixed, this leads to a new topology of Feynman diagram that contains a loop. As an example, we consider the magnetic dipole moment of the electron. At leading order in \(\alpha\), the Dirac equation [7, 8], which is the relativistic equation of motion for the electron, gives the gyromagnetic ratio of the electron as exactly \(g=2\). (See, for example, p 13 of Bjorken and Drell [6].) The anomalous magnetic moment \(a_e=(g-2)/2\) represents the relative shift from 2, which must come from the perturbative corrections. At next-to-leading order in \(\alpha\), the Feynman diagram acquires a triangular loop called the vertex correction, as shown in Fig. 1.

This is the leading contribution to \(a_e=(g-2)/2\). The amplitude involves a loop integral over the loop momentum \(k^{\mu}\), every component of which is free to vary from \(-\infty\) to \(\infty\). The integrand contains three propagators, giving a factor of the form \(1/(a_1 a_2 a_3)\), where \(a_1\) and \(a_2\) are the propagator denominators for the electron lines adjacent to the electron–photon vertex and \(a_3\) is the denominator of the propagator of the photon exchanged between the initial and final electron states. Readers are referred to chapter 17 of Schwartz [9] or chapter 6 of Peskin and Schroeder [10] for further reading.

In principle, any loop integral can first be evaluated by integrating over the energy component \(k^0\) of the loop momentum \(k^{\mu}\) using the calculus of residues, which derives from the Cauchy integral theorem for an analytic function [11,12,13]. One is then left with an integral over three-dimensional Euclidean space. Although the integral must be a Lorentz-covariant quantity, the Euclidean integral is naturally expressed in terms of non-covariant quantities. Furthermore, as the number of denominator factors increases, the number of terms contributing to the residue grows rapidly. Thus, this method is not practical. A convenient way to avoid such a messy computation is the Feynman parametrization, which combines all of the denominator factors in the integrand of a loop integral into a single power. An explicit form can be found, for example, in equation (6.42) on p 190 of Peskin and Schroeder [10]. The method is an intricate application of the partial-fraction decomposition of a rational function in combination with integration and differentiation techniques. If the Schwinger parametrization [14] is used as well, the derivation of the Feynman parametrization becomes particularly straightforward.

In this paper, we focus on the derivation of the Schwinger parametrization and the Feynman parametrization rather than on applying them to compute loop integrals. The fundamental form of the Schwinger parametrization is nothing but the Laplace transform of the Heaviside step function, which can be analytically continued to the Fourier-transform representation. The Fourier-transform representation is more useful in physics because the real part of a propagator denominator can vanish, which is not allowed in the Laplace-transform representation. The more general form of the Schwinger parametrization can be derived by taking multiple partial derivatives and utilizing the analyticity of the gamma function. An elementary derivation of the Feynman parametrization involves only the algebra of partial-fraction decomposition and elementary calculus, with an additional application of the Dirac delta function for changing variables. A closer look at the derivation in terms of the Schwinger parametrization shows that it makes extensive use of the analytic properties of complex functions. In addition, the combinatorial factor arising in the integration over the Feynman parameters is an elementary integral embedded in the time-ordered product of the Dyson series in time-dependent perturbation theory. Furthermore, the multivariate beta function can be derived as a byproduct of the parametrization.

The formulation is closely related to the analytic continuation of quantum-mechanical amplitudes. Thus, the target readers of this paper are mainly upper-level undergraduate physics majors who have studied quantum mechanics. Graduate students in particle physics may also benefit from studying the derivation in detail, because the Feynman parametrization is usually introduced only briefly, without a detailed proof, in an appendix of a quantum field theory textbook. We believe that the derivation presented in this paper is pedagogically useful in that students can practice, within a single problem, various mathematical tools widely employed in physics.

This paper is organized as follows. In Sect. 2, we investigate the analytic structure of the Feynman propagator and the generic form of loop integrals. In Sect. 3, we review the analytic properties of the Schwinger parametrization that stems from the Laplace transform of the Heaviside step function and derive the most general form of the Schwinger parametrization. Section 4 contains the derivation of the Feynman parametrization with partial-fraction decomposition and the derivation of its general form applicable to denominators with arbitrary powers. A set of elementary applications of the Schwinger parametrization and the Feynman parametrization is illustrated in Sect. 5. The applications include a Feynman-parameter integral involving the combinatorial factor embedded in the time-ordering operation of time-dependent perturbation theory and a derivation of the multivariate beta function. The conclusion follows in Sect. 6. An explicit evaluation of the contour integral for the Feynman propagator is given in Appendix A to demonstrate the causality boundary condition for Green’s function that corresponds to the relativistic propagator. The details of the derivation of the general form of the Feynman parametrization from the Schwinger parametrization are provided in Appendix B.

2 Analytic structure of loop integrals

In this section, we review the analytic properties of the propagator–denominator factors appearing in a loop integral that emerges in the perturbative series of an amplitude in relativistic quantum field theory. Because the propagator–denominator factor is independent of the spin, we simplify our review by considering a scalar field \(\phi (x)\) representing a spin-0 particle of rest mass m.

2.1 Feynman propagator

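The relativistic equation of motion for the scalar field \(\phi (x)\) of rest mass m is the Klein–Gordon equation [see equation (8) of Gordon [15]]:

\[
\left( \partial ^2+m^2\right) \phi (x)=0, \tag{1}
\]

where we have taken the natural unit system in which the speed of light \(c=1\) and the reduced Planck constant \(\hbar =1\). Here, the operator \(\partial ^2\) is defined by

\[
\partial ^2\equiv \partial _{\mu }\partial ^{\mu }=\frac{\partial ^2}{\partial t^2}-{\varvec{\nabla }}^2.
\]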

Note that \(x^{\mu} =(x^0,x^1,x^2,x^3)=( t,{\varvec{x}})\) is the space-time coordinate defined in the Minkowski space. The general solution \(\phi _{0}(x)\) for Eq. (1) is given by

where p and x are the four-momentum and the space-time coordinate of the field, respectively, and \(\kappa _i\) are arbitrary constants that are determined by initial conditions. If there is an interaction of \(\phi (x)\) with a current J(x), then the equation is not given as a homogeneous equation like Eq. (1) but given as a nonhomogeneous equation

In that case, a particular solution of \(\phi _{\text{P}}(x)\) can be obtained by convolving with Green’s function \(\Delta _{\text{F}}(x-y)\) as

where Green’s function \(\Delta _{\text{F}}(x-y)\) satisfies the following equation

Here, \(\delta ^{(4)}(x-y)\) is the four-dimensional Dirac delta function. Such a partial differential equation for Green’s function can be solved conveniently by performing the Fourier transform [16,17,18] into the momentum space. The Fourier-transform representations of \(\phi (x)\) and \(\delta ^{(4)}(x-y)\) are expanded in linear combinations of the free-particle wavefunction \(\phi _p(x)\equiv {\text{e}}^{-ip\cdot x}\) in the configuration space, where \(p^{\mu} =(p^0,{\varvec{p}})\) is the four-momentum and \(p\cdot x=p^0x^0-{\varvec{p}}\cdot {\varvec{x}}\),

Here, each of the four components of the momentum \(p^{\mu}\) is integrated over the interval \((-\infty ,\infty )\) and the oscillating factor \({\text{e}}^{-ip\cdot (x-y)}= \phi _p(x)\phi _p^\star (y)\) behaves as the projection operator that projects out the free-particle state of three-momentum \({\varvec{p}}\). \({\tilde{\phi }}(p)\) is the momentum-space wavefunction.

The Feynman propagator \(i\Delta _{\text{F}}(x-y)\) is nothing but Green’s function up to a complex phase as

\[
i\Delta _{\text{F}}(x-y)=\int \frac{{\text{d}}^4p}{(2\pi )^4}\,i{\tilde{\Delta }}_{\text{F}}(p)\,{\text{e}}^{-ip\cdot (x-y)}, \tag{7}
\]

where \(p^{\mu}\) is the four-momentum of the propagating scalar field and the integration for every component is over \((-\infty ,\infty )\). Here, the factor \(i{\tilde{\Delta }}_{\text{F}}(p)\) is called the momentum-space representation of the propagator with four-momentum \(p^{\mu}\) that can be obtained by substituting the expression (7) for that in Eq. (5) as

\[
i{\tilde{\Delta }}_{\text{F}}(p)=\frac{i}{p^2-m^2+i\varepsilon }. \tag{8}
\]

Here, \(\varepsilon\) is an infinitesimally small positive number that is introduced to avoid the singularity at \(p^2=m^2\). The choice of the sign \(+i\varepsilon\) corresponds to the causality boundary condition for Green’s function that can be shown by carrying out the integration of Green’s function over \(p^{0}\) as

where \(p^{0}=E_{{\varvec{p}}}=\sqrt{{\varvec{p}}^2+m^2}>0\). The derivation of the result in Eq. (9) is presented in Appendix A. Such a use of \(+i\varepsilon\) to impose a causality boundary condition also can be found in the integral representation of the Heaviside step function, which is well discussed in reference [19]. More detailed explanations on the expression in Eqs. (7) and (8) can be found, for example, on pp 186–188 of Bjorken and Drell [6] and in equation (7.69) of Schwartz [9].

The Fourier-transform representation of the Feynman propagator \(i\Delta _{\text{F}}(x-y)\) in the position space given in Eq. (7) represents the propagation of the scalar field from a space-time coordinate \(y^{\mu}\) to \(x^{\mu}\) expanded in a linear combination of free-particle waves \({\text{e}}^{-ip\cdot (x-y)}\).

The expression in Eq. (7) is a consequence of the Wick theorem [20], which provides a quick way to rewrite a time-ordered product of field operators in perturbation theory and is explained well in Box 7.3 on p 102 of Schwartz [9]. The Wick theorem respects the causality boundary condition of Green’s function, which underlies the two equivalent formalisms for describing the time evolution of a quantum system: the Feynman propagator [4, 21] and the Dyson series expansion for the S matrix [22, 23].

Under the Lorenz condition \(\partial _{\mu} A^{\mu} =0\), Maxwell’s equation \(\partial _{\mu }F^{\mu \nu }=0\) in free space reduces to \(\partial _{\mu} \partial ^{\mu} A^{\nu} =0\). Hence, every component of the four-vector potential \(A^{\nu}\) satisfies the Klein–Gordon equation (1) in the massless limit, \(m\rightarrow 0\). As a result, the retarded and advanced Green’s functions of classical electrodynamics satisfying the Lorenz gauge condition are closely related to the positive-energy contribution [the \(\theta (x^{0}-y^{0})\) term] and the negative-energy contribution [the \(\theta (y^{0}-x^{0})\) term], respectively, in the massless limit of the Feynman propagator in Eq. (7), except that the pole structure in Eq. (8) should be modified with the standard prescription in classical electrodynamics as

Readers are referred to Section 12.11 of Jackson [24] for more details including the contour shown in Figure 12.7 of that reference.

2.2 Loop integrals

The perturbative expansion of the momentum-space representation of the amplitude for a process involving N external particles with four-momenta \(p_1,\ldots ,p_N\) is expressed as a series in powers of a perturbative coupling. In quantum electrodynamics, the fine-structure constant \(\alpha = {\text{e}}^2/(4\pi )\) is the perturbative expansion parameter. At leading order in \(\alpha\), the scattering amplitude has propagators whose momenta are completely fixed as linear combinations of the four-momenta of the external particles. At next-to-leading order in \(\alpha\), the corresponding Feynman diagram acquires a loop whose loop momentum \(k^{\mu}\) ranges from \(-\infty\) to \(\infty\) in each of its four components. Suppose that the loop involves n \((\le N)\) propagators. As is given in ’t Hooft and Veltman [25], the next-to-leading-order amplitude contains a loop integral as a multiplicative factor whose generic form is

Here, \(\alpha _i=1\) for an ordinary case but we may allow \(\alpha _i\) to be any positive integer since such a power may arise in a specific effective field theory. The integral (12) is over the loop momentum \(k^{\mu}\) whose four components are integrated over \((-\infty ,\infty )\) independently. In fact, we should also consider the numerator that contains the loop momentum. However, we have not specified it explicitly in Eq. (12). One can employ the tensor-integral reduction to remove such a factor in the numerator. The standard method of tensor-integral reduction is the Passarino–Veltman reduction given in Passarino and Veltman [26]. An elementary treatment of the tensor-integral reduction can be found in Ee et al. [27].

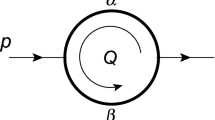

Fig. 2. A Feynman diagram of a loop involving n propagators, corresponding to the integral in Eq. (12). The bold line and the wavy line represent a fermion of momentum \(q_i\) and a photon of momentum \(p_i\), respectively.

There are n vertices and n propagators in the loop described by the integral in Eq. (12). We employ the convention that every external leg carries momentum flowing into its vertex, as shown in Fig. 2. Energy–momentum conservation requires that the sum of the external momenta coming into the loop be equal to the sum of the external momenta going out of the loop. With this all-incoming convention, the sum of all of the external momenta therefore vanishes:

The first propagator has the momentum \(k+p_1\), where k is the loop momentum and \(p_1\) is the momentum of the external particle that couples to the first vertex. The second propagator carries the momentum \(k+p_1+p_2\), where \(p_2\) is the momentum of the external particle that couples to the second vertex. In this manner, the ith propagator has the momentum \(k+p_1+\cdots +p_{i}\). As a result, the real part \(d_i\) of the denominator \(d_i+i\varepsilon\) of the ith Feynman propagator of mass \(m_i\) in Eq. (12) can be defined as

\[
d_i\equiv \left( k+p_1+\cdots +p_i\right) ^2-m_i^2. \tag{14}
\]

Substituting the momenta in Eq. (14) into the loop integral (12), we find that

The \(k^0\) integral can be evaluated by making use of the calculus of residues. The remaining integral over \({\varvec{k}}\) is then defined in three-dimensional Euclidean space. The integral in Eq. (15) must evidently be a Lorentz-covariant quantity. However, the Euclidean integral is naturally expressed in terms of non-covariant quantities, and the number of terms contributing to the residue grows rapidly as the number of denominator factors increases. Thus, this method is not practical. The parametrizations of Schwinger and of Feynman were developed to combine the propagator–denominator factors into a single power and to allow one to compute the four-dimensional loop integral in a convenient way, at the cost of an additional multiple integral over a set of new parameters. Graduate students in particle physics are referred to three books [28,29,30] by Smirnov that contain comprehensive guides to advanced loop-integral techniques.

3 Schwinger parametrization

In this section, we present a detailed derivation of the Schwinger parametrization. We first show that the Schwinger parametrization for the Feynman propagator of a scalar field is equivalent to the Laplace transform [31, 32] of the Heaviside step function. By investigating the analytic properties of the transform, we obtain stringent conditions for its applicability, particularly those involving the \(+i\varepsilon\) term in the propagator denominator, and derive the Fourier-transform version of the parametrization. After that, we derive the most general form of the Schwinger parametrization, applicable to multiple denominators with arbitrary positive powers.

3.1 Representations of the unity

We begin with two integral representations of unity

\[
\begin{aligned}
1&=\int _0^\infty {\text{d}}s\,{\text{e}}^{-s}\\
&=\int _0^\infty {\text{d}}z\,\delta (z-z_0),
\end{aligned} \tag{16}
\]

where the former representation is in terms of a convergent definite integral of an exponential function and the latter is expressed as an integral of the Dirac delta function [33]. As a distribution [34], the Dirac delta function \(\delta (x)\) is defined only through integration:

\[
\int _{-\infty }^{\infty }{\text{d}}x\,\delta (x)f(x)=f(0) \tag{17}
\]

for any continuous integrable function f(x). According to Eq. (17), \(\delta (x)\) must vanish except at \(x=0\), where it is singular. We have restricted the integration limits for the Dirac delta function in Eq. (16) to be identical to those of the first integral so that the two relations are consistent with each other. Thus, \(z_0\) in the second line of Eq. (16) must be a positive number. Multiplying by representations of unity involving the Dirac delta function is quite useful in changing variables. Pedagogical examples of applying the Dirac delta function to purely algebraic computations can be found in Ee et al. [35], which gives an alternative proof of Cramer’s rule for the inverse matrix, and in Kim et al. [36], which gives an algebraic derivation of the Jacobian for a multi-variable integral.

3.2 Schwinger parametrization of Feynman propagator

If we introduce a positive dimensionless number a as an auxiliary scaling parameter in the integrand, then the first identity in Eq. (16) acquires an additional degree of freedom. The number a can be extended to any complex number provided that the real part \({\mathfrak{Re}}[a]\) is positive to guarantee convergence:

\[
\frac{1}{a}=\int _0^\infty {\text{d}}s\,{\text{e}}^{-as},\qquad {\mathfrak{Re}}[a]>0. \tag{18}
\]

The expression in Eq. (18) has an intrinsic singularity at \(a=0\). This identity can also be understood as the bilateral Laplace transform [31, 32] of the Heaviside step function \(\theta (t)\), which is defined by

\[
\theta (t)={\left\{ \begin{array}{ll} 1, &{} t>0,\\ 0, &{} t<0. \end{array}\right. } \tag{19}
\]

While the parametrization in Eq. (18) is valid for any complex number with positive real part, it is not suitable for parametrizing the momentum-space representation of the Feynman propagator:

\[
i{\tilde{\Delta }}_{\text{F}}(p)=\frac{i}{p^2-m^2+i\varepsilon }, \tag{20}
\]

where p and m are the four-momentum and mass of the propagating field. Here, we have suppressed the numerator factor that depends on the spin of the corresponding particle. The \(\varepsilon\) is an infinitesimal positive number and \(p^2-m^2\) is real. The condition

\[
{\mathfrak{Re}}[p^2-m^2+i\varepsilon ]=p^2-m^2>0
\]

required in Eq. (18) is not guaranteed because the sign of the real part \(p^2-m^2\) can vary depending on the explicit values for the four-momentum components. Only the sign of the imaginary part is positive definite.
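To see concretely why the sign of the real part matters, the elementary exponential integral can be evaluated with a finite upper limit. The following is a short sketch in which the cutoff \(\Lambda\) and the decomposition \(a=u+iv\) with real u and v are notation introduced here for illustration only:

```latex
% Sketch: convergence of the Laplace-type integral, with a = u + i v (u, v real)
% and a finite cutoff \Lambda (both are illustrative notation, not from the text).
\[
\int_0^{\Lambda} {\text{d}}s\, {\text{e}}^{-as}
  = \frac{1-{\text{e}}^{-a\Lambda}}{a},
\qquad
\left| {\text{e}}^{-a\Lambda} \right| = {\text{e}}^{-u\Lambda}.
\]
% The boundary term decays as \Lambda \to \infty only if u > 0, leaving 1/a.
% For a = p^2 - m^2 + i\varepsilon one has u = p^2 - m^2, which may be zero or negative.
```

For \(u\le 0\) the boundary term does not decay, so the limit \(\Lambda \rightarrow \infty\) does not exist; this is the situation that the \(+i\varepsilon\) term alone cannot cure in the Laplace-transform form.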

Julian Schwinger generalized the integral (18) to reparametrize the Feynman propagator in Eq. (20), in equation (2.24) of Schwinger [14], as

\[
\frac{i}{a}=\int _0^\infty {\text{d}}s\,{\text{e}}^{ias},\qquad {\mathfrak{Im}}[a]>0. \tag{21}
\]

This corresponds to the Fourier transform [17, 18] of the Heaviside step function that can be derived from the bilateral Laplace transform (18) by replacing the parameter a with \(-ia\). The requirement of the convergence \({\mathfrak{Re}}[a]>0\) in Eq. (18) is correspondingly modified as \({\mathfrak{Im}}[a]>0\) in the Fourier-transform version in Eq. (21). We call the integration variable s a Schwinger parameter. The relation (21) is applicable to the Feynman propagator in Eq. (20) because

\[
{\mathfrak{Im}}[p^2-m^2+i\varepsilon ]=\varepsilon >0
\]

regardless of the value of the real part \(p^2-m^2\). Advanced reviews of the Schwinger parametrization that cover a matrix or a differential operator \({\mathcal{O}}\) can be found, for example, in Peres [37], González and Schmidt [38], or [39].
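The role of \(+i\varepsilon\) in the Fourier-transform version can be checked by direct integration. A minimal sketch, assuming that the propagator denominator is written as \(a=d+i\varepsilon\) with real d and that \(\Lambda\) is a finite cutoff (both introduced here only for illustration):

```latex
% Sketch: the Fourier-type integral with a = d + i\varepsilon, d real, \varepsilon > 0.
\[
\int_0^{\Lambda} {\text{d}}s\, {\text{e}}^{ias}
  = \frac{i}{a}\left( 1-{\text{e}}^{ia\Lambda} \right),
\qquad
\left| {\text{e}}^{ia\Lambda} \right| = {\text{e}}^{-\varepsilon \Lambda}.
\]
% The boundary term is damped by \varepsilon for any real d, including d = 0 and d < 0,
% so the limit \Lambda \to \infty gives i/a = i/(d + i\varepsilon).
```

The damping comes entirely from the imaginary part of a, which is why the sign of \(d=p^2-m^2\) is irrelevant in this form.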

3.3 Parametrization of a denominator with exponent α ≥ 1

In the previous subsection, we considered the parametrization of a denominator raised to a single power. In general, the power of a denominator may be any positive real number. Let us first consider the case in which the power \(\alpha\) of a denominator factor a is a positive integer. In that case, the parametrization can be derived from Eq. (18) by taking multiple partial derivatives with respect to a on both sides of the equation:

\[
\frac{1}{a^\alpha }=\frac{(-1)^{\alpha -1}}{(\alpha -1)!}\frac{\partial ^{\alpha -1}}{\partial a^{\alpha -1}}\frac{1}{a}=\frac{1}{(\alpha -1)!}\int _0^\infty {\text{d}}s\,s^{\alpha -1}{\text{e}}^{-as}. \tag{22}
\]

The parametrization in Eq. (22) can be transformed into Euler’s definition of the gamma function by multiplying both sides of the equation by \(a^\alpha (\alpha -1)!\) and changing variables \(as\rightarrow t\) in the integral as

\[
\Gamma (\alpha )=(\alpha -1)!=\int _0^\infty {\text{d}}t\,t^{\alpha -1}{\text{e}}^{-t}. \tag{23}
\]

Euler’s definition of the gamma function in Eq. (23) is valid even for a complex number \(\alpha\) with \({\mathfrak{Re}}[\alpha ]>0\) because the gamma function is analytic in the region \({\mathfrak{Re}}[\alpha ]>0\). In other words, the Schwinger parametrization can be applied for any complex numbers a and \(\alpha\) satisfying \({\mathfrak{Re}}[a]>0\) and \({\mathfrak{Re}}[\alpha ]>0\) as

\[
\frac{1}{a^\alpha }=\frac{1}{\Gamma (\alpha )}\int _0^\infty {\text{d}}s\,s^{\alpha -1}{\text{e}}^{-as}. \tag{24}
\]

The Fourier-transform version of the parametrization corresponding to the expression (24) can be obtained by replacing a with \(-ia\):

\[
\frac{1}{a^\alpha }=\frac{{\text{e}}^{-i\pi \alpha /2}}{\Gamma (\alpha )}\int _0^\infty {\text{d}}s\,s^{\alpha -1}{\text{e}}^{ias},\qquad {\mathfrak{Im}}[a]>0. \tag{25}
\]

3.4 Product of a sequence

In this subsection, we consider the process of combining multiple denominator factors into a single power. For each denominator factor \(a_j=d_j+i\varepsilon\), we need a single independent Schwinger parameter \(s_j\) to perform a single Fourier transform in Eq. (25). We may think of the product of a finite sequence \((a_1^{\alpha _1},\ldots ,a_n^{\alpha _n})\) satisfying \({\mathfrak{Im}}[a_j]=\varepsilon >0\) for all \(j=1\) through n. By applying the identity in Eq. (25) for \(a_j\) from \(j=1\) through n, we can parametrize the product of these factors as

\[
\prod _{j=1}^{n}\frac{1}{a_j^{\alpha _j}}=\frac{{\text{e}}^{-i\pi (\alpha _1+\cdots +\alpha _n)/2}}{\prod _{j=1}^{n}\Gamma (\alpha _j)}\int _{[0,\infty )}{\text{d}}^n{\varvec{s}}\,\left( \prod _{j=1}^{n}s_j^{\alpha _j-1}\right) {\text{e}}^{i{\varvec{a}}\cdot {\varvec{s}}}, \tag{26}
\]

where a bold-italic letter \({\varvec{X}}\) denotes an n-dimensional Euclidean vector whose components are \(X_i\) for \(i=1\) through n and \({\varvec{X}}\cdot {\varvec{Y}}\) is the scalar product of \({\varvec{X}}\) and \({\varvec{Y}}\):

\[
{\varvec{X}}\cdot {\varvec{Y}}=\sum _{i=1}^{n}X_iY_i. \tag{27}
\]

Here, the symbol \(\int _{[0,\infty )} {\text{d}}^n{\varvec{s}}\) in Eq. (26) represents the definite multidimensional integral over the Schwinger parameters \(s_j\)’s as

\[
\int _{[0,\infty )}{\text{d}}^n{\varvec{s}}\equiv \int _0^\infty {\text{d}}s_1\int _0^\infty {\text{d}}s_2\cdots \int _0^\infty {\text{d}}s_n. \tag{28}
\]

Readers who want to study the practical use of the parametrization (26) are recommended to refer to the contents involving the alpha representation given in references [28,29,30].
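As a minimal check of the product rule, consider two denominators with unit powers. The sketch below assumes only the single-denominator relation \(i/a=\int _0^\infty {\text{d}}s\,{\text{e}}^{ias}\) for \({\mathfrak{Im}}[a]>0\), which follows from the replacement \(a\rightarrow -ia\) described in Sect. 3.2:

```latex
% Sketch: n = 2, \alpha_1 = \alpha_2 = 1, with Im[a_1] > 0 and Im[a_2] > 0.
% The double integral factorizes because the exponent is a sum.
\[
\int_0^\infty {\text{d}}s_1 \int_0^\infty {\text{d}}s_2\,
  {\text{e}}^{i(a_1 s_1 + a_2 s_2)}
  = \frac{i}{a_1}\,\frac{i}{a_2}
  = -\frac{1}{a_1 a_2}
\quad\Longrightarrow\quad
\frac{1}{a_1 a_2}
  = (-i)^2 \int_0^\infty {\text{d}}s_1 \int_0^\infty {\text{d}}s_2\,
    {\text{e}}^{i(a_1 s_1 + a_2 s_2)}.
\]
```

Each additional denominator with unit power contributes one more factor of \(-i\) and one more Schwinger parameter in the same way.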

4 Feynman parametrization

In this section, we derive the Feynman parametrization with an arbitrary number of denominator factors and with arbitrary powers in two independent ways: by partial-fraction decomposition and by a change of variables in the Schwinger parametrization. In both derivations, we change variables by introducing additional delta functions, as is done in references [35, 36]. The derived parametrization formula coincides with the elementary form of the Feynman parametrization that can be found, for example, in equation (A.39) on p 806 of Peskin and Schroeder [10].

4.1 Partial-fraction decomposition

Feynman parametrization was first introduced in volume 76 of Physical Review in 1949, in equations (14a) and (15a) of Feynman [4] and equation (2.82) of Schwinger [40]. As Feynman wrote ‘suggested by some work of Schwinger’s involving Gaussian integrals’ right after equation (14a) in reference [4], Schwinger introduced the parametrization ahead of Feynman. These early computations involving Feynman parametrization exploited the partial-fraction decomposition,

\[
\frac{1}{ab}=\frac{1}{b-a}\left( \frac{1}{a}-\frac{1}{b}\right) , \tag{29}
\]

which is an elementary algebraic method of breaking a rational function apart. One can think of the right-hand side of Eq. (29) as the result of a definite integral:

\[
\frac{1}{ab}=\frac{1}{b-a}\int _a^b\frac{{\text{d}}t}{t^2}. \tag{30}
\]

Note that the integral representation in Eq. (30) is valid only when 0 does not lie on the integration path. Changing variables with

\[
t=xa+(1-x)b,
\]

where x runs from 0 to 1, we have \({\text{d}}t=(a-b){\text{d}}x\) and obtain the Feynman parametrization of the rational function 1/(ab):

\[
\frac{1}{ab}=\int _0^1\frac{{\text{d}}x}{\left[ xa+(1-x)b\right] ^2}=\int _0^1{\text{d}}x_1\int _0^1{\text{d}}x_2\,\frac{\delta (1-x_1-x_2)}{(x_1a_1+x_2a_2)^2}, \tag{31}
\]

where \(a_1=a\), \(a_2=b\), and \(x_1=x\). In principle, a and b can be any non-vanishing numbers or functions provided that 0 does not lie on the line segment connecting a and b in the complex plane.
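The two-denominator formula can be verified by carrying out the parameter integral directly. A short check, assuming \(a\ne b\) and that the segment between a and b avoids the origin:

```latex
% Sketch: direct evaluation of the Feynman-parameter integral for 1/(ab).
% Uses d/dx [x a + (1-x) b]^{-1} = -(a-b) [x a + (1-x) b]^{-2}.
\[
\int_0^1 \frac{{\text{d}}x}{\left[ xa+(1-x)b \right]^2}
  = \left[ -\frac{1}{a-b}\,\frac{1}{xa+(1-x)b} \right]_{x=0}^{x=1}
  = -\frac{1}{a-b}\left( \frac{1}{a}-\frac{1}{b} \right)
  = \frac{1}{ab}.
\]
% For a = b the integrand is the constant 1/a^2, so the result 1/a^2 is immediate.
```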

As the denominator factors coming from Feynman propagators, a and b are of the form

\[
a=d_1+i\varepsilon ,\qquad b=d_2+i\varepsilon . \tag{32}
\]

\(d_1={\mathfrak{Re}}(a)\) and \(d_2={\mathfrak{Re}}(b)\) may vanish, as we have stated earlier. However, the tiny imaginary part \(+i\varepsilon\) ensures that neither a nor b is equal to 0. Moreover, the combined denominator in Eq. (31) never vanishes because both \(d_1\) and \(d_2\) are real and the imaginary part \({\mathfrak{Im}}[xa + (1-x)b]=\varepsilon\) remains positive definite,

We continue to construct the identity for more denominator factors. By making use of the expression in Eq. (31), we combine the first two denominator factors in \(1/(a_1a_2a_3)\):

where we have made use of the identity \({\partial }(1/{\mathcalligra {a}})/{\partial {\mathcalligra {a}}}=-1/{\mathcalligra {a}}^2\). We combine the denominator factors in Eq. (34) by making use of the formula (31) once again:

The variables of the multiple integral in Eq. (35) are not appropriate for the Feynman parametrization because the sum of the parameters is not equal to 1.

A convenient way to change the variables into the Feynman parameters for the three denominator factors is to insert the unity. A set of Feynman parameters can be \(y_1=yx_1\), \(y_2=yx_2\), and \(y_3=1-y\) whose sum is 1. This unity is the product of the integrals over a new set of Feynman parameters whose integrands are all Dirac delta functions:

By multiplying the integrand in Eq. (35) by the unity in Eq. (36) and integrating over \(x_1\), \(x_2\), and y, as is shown in [35, 36], we obtain

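where we have made use of the property of the Dirac delta function

\[
\delta \left( f(\xi )\right) =\sum _j\frac{\delta (\xi -\xi _j)}{\left| f'(\xi _j)\right| }. \tag{38}
\]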

Here, \(\xi _j\)’s are simple zeros of \(f(\xi )\).

In this way, we can combine an arbitrary number of denominator factors. By inspection, we find that the overall factor 2 and the denominator power 3 in Eq. (37) are correlated. As the number of factors increases by unity, the denominator power also increases by unity. Thus, the denominator power is n and the overall factor is \((n-1)!=\Gamma [n]\) when we combine n denominator factors. Mathematical induction can be applied to verify that for any n, the propagator denominators can be combined as

\[
\frac{1}{a_1a_2\cdots a_n}=\Gamma (n)\int _0^1{\text{d}}x_1\cdots \int _0^1{\text{d}}x_n\,\frac{\delta \left( 1-\sum _{i=1}^{n}x_i\right) }{\left( \sum _{i=1}^{n}x_ia_i\right) ^n}. \tag{39}
\]

Note that the parametrization in Eq. (39) holds only if \(\sum _{i=1}^{n}x_i a_i\ne 0\) for all \(x_i\). In our case, that condition is always satisfied because \({\mathfrak{Im}}(a_i)>0\).
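A quick consistency check of the overall factor \((n-1)!\) is to set all denominators equal, \(a_1=\cdots =a_n=a\) (a special case chosen here for illustration). On the support of the delta function the combined denominator becomes \(a^n\), and the remaining parameter integral is the volume of the standard simplex:

```latex
% Sketch: equal denominators a_1 = ... = a_n = a, so that \sum_i x_i a_i = a
% whenever \sum_i x_i = 1.
\[
\int_0^1 {\text{d}}x_1 \cdots \int_0^1 {\text{d}}x_n\,
  \delta\!\left( 1-\sum_{i=1}^{n} x_i \right)
  = \frac{1}{(n-1)!}
\quad\Longrightarrow\quad
\Gamma(n)\cdot\frac{1}{a^n}\cdot\frac{1}{(n-1)!} = \frac{1}{a^n}.
\]
% Explicit case n = 3: integrating out x_3 leaves the triangle x_1 + x_2 <= 1,
\[
\int_0^1 {\text{d}}x_1 \int_0^{1-x_1} {\text{d}}x_2 = \frac{1}{2},
\]
% which cancels the overall factor 2 = \Gamma(3).
```

The factor \(\Gamma (n)\) is therefore exactly what is needed to compensate the simplex volume, which is the combinatorial factor referred to in the applications.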

4.2 General rule

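In a way similar to that illustrated in Sect. 3.3, we derive a more general form of the Feynman parametrization by taking the partial derivatives of the expression in Eq. (39) with respect to \(a_i\)’s as

\[
\frac{1}{\prod _{i=1}^{n}a_i^{\alpha _i}}=\frac{\Gamma \left( \sum _{i=1}^{n}\alpha _i\right) }{\prod _{i=1}^{n}\Gamma (\alpha _i)}\int _0^1{\text{d}}x_1\cdots \int _0^1{\text{d}}x_n\,\delta \left( 1-\sum _{i=1}^{n}x_i\right) \frac{\prod _{i=1}^{n}x_i^{\alpha _i-1}}{\left( \sum _{i=1}^{n}x_ia_i\right) ^{\sum _{i=1}^{n}\alpha _i}}. \tag{40}
\]

Here, \({\mathfrak{Im}}(a_i)>0\) and \(\alpha _i\) are positive integers. The form of the Feynman parametrization in Eq. (40) is consistent with equation (6.42) on p 190 of Peskin and Schroeder [10]. We will show that the parametrization in Eq. (40) is the most general form of the Feynman parametrization applicable to any complex numbers \(\alpha _i\) satisfying \({\mathfrak{Re}}(\alpha _i)>0\).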
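As an illustration of the derivative step, differentiating the two-denominator formula \(1/(ab)=\int _0^1{\text{d}}x\,[xa+(1-x)b]^{-2}\) once with respect to a gives the case \(\alpha _1=2\), \(\alpha _2=1\). A short sketch:

```latex
% Sketch: raising the power of the first denominator by one derivative in a.
\[
\frac{1}{a^2 b}
  = -\frac{\partial}{\partial a}\,\frac{1}{ab}
  = -\frac{\partial}{\partial a}\int_0^1 \frac{{\text{d}}x}{\left[ xa+(1-x)b \right]^2}
  = \int_0^1 {\text{d}}x\, \frac{2x}{\left[ xa+(1-x)b \right]^3}.
\]
% Comparison with the general rule: the prefactor is
% \Gamma(3)/[\Gamma(2)\Gamma(1)] = 2, the numerator is x^{\alpha_1 - 1} = x,
% and the denominator power is \alpha_1 + \alpha_2 = 3.
```

Each further derivative with respect to a given \(a_i\) brings down one more factor of \(x_i\) and raises the denominator power by one, which is the origin of the factors \(x_i^{\alpha _i-1}\).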

4.3 Derivation of Feynman parametrization from Schwinger’s

The product of a sequence \(1/\prod _{i} a_i^{\alpha _i}\) can be expressed by the Schwinger parametrization given in Eq. (26) as

where \({\varvec{a}}=(a_1,a_2,\ldots , a_n)\), \({\varvec{s}}=(s_1,s_2,\ldots ,s_n)\), and

Note that the parametrization is valid only if \({\mathfrak{Im}}[a_j]>0\) and \({\mathfrak{Re}}[\alpha _j]>0\) for all \(j\in \{1,\ldots ,n\}\). The n independent parameters \(s_j\) in Eq. (41) can be scaled with a single dimensionless parameter s as

We define an n-dimensional Euclidean vector \({\varvec{x}}\) representing the coordinates of \({\varvec{s}}\) in units of s as

According to Eqs. (42) and (43), every element \(x_j\) ranges from 0 to 1 and the sum of the components of \({\varvec{x}}\) must be 1:

Following the way of changing variables with the Dirac delta function described in references [35, 36], one can implement the requirement (42) into Eq. (26) by multiplying the following integral representation of the unity,

Multiplying the integrand on the right-hand side of Eq. (41) by the unity in Eq. (45) and changing the variables as presented in Eq. (44), we find that

where we have substituted \(N\equiv \sum _{j}\alpha _j\) and \({\varvec{a}}={\varvec{d}}+i\varepsilon (1,\ldots ,1)\). Here, the symbol \(\int _{[0,1]} {\text{d}}^n {\varvec{x}}\) represents

In the second line of Eq. (46), we have pulled out the factor of s from the argument of the Dirac delta function as 1/s by making use of the identity given in Eq. (38). We notice that the integral over s in Eq. (46) is convergent because of the infinitesimally positive real parameter \(\varepsilon\) that comes from the tiny imaginary part of the denominator of the Feynman propagator. Were it not for this \(\varepsilon \rightarrow 0^+\), the s integral would have been divergent.
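The role of \(\varepsilon\) in the convergence of the s integral can be illustrated numerically. The sketch below is our own and rests on stated assumptions: it takes an integrand of the form \(s^{N-1}e^{-s(\varepsilon -iD)}\), where D stands in for \({\varvec{d}}\cdot {\varvec{x}}\), uses a finite \(\varepsilon =0.5\) rather than an infinitesimal one, and compares the result with \(\Gamma [N]/(\varepsilon -iD)^N\), the closed form that the contour argument in the remainder of this section establishes. The function name, cutoffs, and step counts are hypothetical choices.

```python
import cmath
import math

def s_integral(N, z, s_max=80.0, steps=80_000):
    """Midpoint estimate of  int_0^s_max ds s**(N-1) * exp(-s*z)  for complex z."""
    h = s_max / steps
    total = 0j
    for k in range(steps):
        s = (k + 0.5) * h
        total += s ** (N - 1) * cmath.exp(-s * z)
    return total * h

N = 3
D = 2.0  # stands in for the real quantity d . x (illustrative value)

# With eps > 0 the integrand is exponentially damped and the integral converges.
z = 0.5 - 1j * D
damped_numeric = s_integral(N, z)
damped_closed_form = math.gamma(N) / z ** N

# With eps = 0 the integrand only oscillates; the truncated integral keeps
# growing with the cutoff instead of settling to a limit.
undamped_40 = s_integral(N, -1j * D, s_max=40.0)
undamped_80 = s_integral(N, -1j * D, s_max=80.0)

print(damped_numeric, damped_closed_form)
print(abs(undamped_40), abs(undamped_80))
```

In the undamped case the modulus of the truncated integral grows roughly like the square of the cutoff for \(N=3\), which is the divergence that the \(\varepsilon \rightarrow 0^+\) prescription removes.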

In order to pull out the complex factor \(\varepsilon -i{\varvec{d}}\cdot {\varvec{x}}\) from the exponential function in Eq. (46), we consider the following complex integral

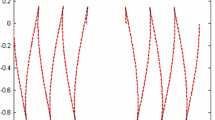

where the contour C shown in Fig. 3 is defined by

Here, we restrict \(\phi _0\) to the region \(\left( -\pi /2,\pi /2\right)\) to make the arctangent uniquely defined. Note that \(|\phi _0|<\pi /2\) because \(\varepsilon \ne 0\) and \({\varvec{d}}\cdot {\varvec{x}}\in (-\infty ,\infty )\).

A diagram of the contour \(C=C_{0}\cup C_{\phi }\cup C_{R}\) given in Eq. (49)

For each contour in Eq. (49), we define

Because the integrand of I in Eq. (48) is analytic inside the closed contour C, I vanishes according to the Cauchy integral theorem:

As is shown in Appendix B,

Substituting 0 for \(I_{\phi }\) in Eq. (51), we get

where \(\Gamma [N]\) is the gamma function defined in Eq. (23). By substituting the result in Eq. (52) for the integral in Eq. (46), we finally obtain the general form of the Feynman parameterization

Note that the expression in Eq. (53) holds for any \(a_j\) and \(\alpha _j\) satisfying \({\mathfrak{Im}}[a_j]>0\) and \({\mathfrak{Re}}[\alpha _j]>0\). Because Eq. (40) has the same form as Eq. (53), Eq. (40) also holds for any complex numbers \(\alpha _i\) satisfying \({\mathfrak{Re}}(\alpha _i)>0\).

As we stated earlier in Sect. 4.2, the derivation of the parametrization in Eq. (40) based on partial derivatives is defined only for positive integers \(\alpha _i\)’s because non-integer powers cannot be generated by partial derivatives in a usual way. Therefore, our derivation of the master formula (53) corresponds to the analytic continuation of the Feynman parametrization in Eq. (40) from positive integers \(\alpha _i\)’s to any complex \(\alpha _i\)’s satisfying \({\mathfrak{Re}}(\alpha _i)>0\).
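The extension to non-integer powers can be tested numerically for two factors. The following sketch is an illustration under stated assumptions, not the authors' procedure: it uses real non-integer powers \(\alpha =1.5\) and \(\beta =2.5\) (the standard-library gamma function accepts real arguments only), hypothetical sample values of a and b in the upper half-plane, principal branches for all complex powers, and a simple midpoint rule.

```python
import math

def feynman_general_two(a, b, alpha, beta, steps=200_000):
    """Midpoint estimate of the two-factor Feynman parametrization with powers.

    Gamma(alpha+beta) / (Gamma(alpha) Gamma(beta))
        * int_0^1 dx x**(alpha-1) * (1-x)**(beta-1) / (x*a + (1-x)*b)**(alpha+beta)
    """
    h = 1.0 / steps
    total = 0j
    for k in range(steps):
        x = (k + 0.5) * h
        weight = x ** (alpha - 1.0) * (1.0 - x) ** (beta - 1.0)
        total += weight / (x * a + (1.0 - x) * b) ** (alpha + beta)
    prefactor = math.gamma(alpha + beta) / (math.gamma(alpha) * math.gamma(beta))
    return prefactor * total * h

# Hypothetical sample values: Im(a), Im(b) > 0 and real non-integer powers.
a, b = 1.0 + 1.0j, -0.5 + 1.5j
alpha, beta = 1.5, 2.5

general_numeric = feynman_general_two(a, b, alpha, beta)
general_exact = 1.0 / (a ** alpha * b ** beta)  # principal branches of the powers

print(general_numeric, general_exact)
```

Partial derivatives with respect to a and b could never generate the half-integer powers used here, so agreement in this case probes the analytically continued formula rather than the integer-power one.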

5 Elementary applications

In this section, we consider elementary applications of our results. The master relation (53) for the product of a sequence contains an integral over the Feynman parameters \(x_i\), whose sum is 1. If there is no other \(x_i\) dependence in the integrand, then the integral over the Feynman parameters reduces to a trivial integral involving a combinatorial factor. This is an elementary integral arising in the Dyson series expansion of the time-dependent perturbation theory. In Sect. 5.1, we demonstrate that this integral is expressed as a combinatorial factor \(1/(n-1)!\) if the sequence is the identity sequence of n elements. The derivation of the multivariate beta function from the master relation (53) is presented in Sect. 5.2.

5.1 Combinatorial factor in Feynman-parameter integral

Let us consider the master relation (53) for the product of a sequence with a trivial case of the identity sequence \({\varvec{a}}=(1,\ldots ,1)\) of n elements with unit powers \(\alpha _i=1\) for all i. In that case, the left-hand side of Eq. (53) becomes \(1^n=1\). By substituting the sequence \({\varvec{a}}=(1,\ldots ,1)\) into the integral on the right-hand side of Eq. (53), we have

Therefore, the master relation for that trivial case results in the following integral table

We first check the integral table (55) by computing the integral with an elementary brute-force method, without using any advanced techniques. The \(x_n\) integral in Eq. (55) is trivial because of the Dirac delta function. After the \(x_n\) integration, the lower limit of every remaining integral can be fixed to 0. However, the sum of the remaining \(n-1\) Feynman parameters cannot exceed 1 because the Dirac delta function fixes \(x_n=1-x_1-\cdots -x_{n-1}\ge 0\). The upper limit of the \(x_1\) integral can be fixed to 1, at which all of the remaining parameters vanish. Then the upper limit of the \(x_2\) integral is fixed to \(1-x_1\). In a similar manner, the upper limit of the \(x_i\) integral is fixed as \(1-x_1-\cdots -x_{i-1}\) for all \(i=1\) through \(n-1\). As a result, the integral in Eq. (55) can be evaluated as

Because the integrand is 1, the \(x_{n-1}\) integral is trivial

The integration over \(x_{n-2}\) can be carried out as

where

A recursive evaluation of the integrals leads to

which confirms that the integral table (55) is valid.
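The recursive evaluation can be mirrored with exact rational arithmetic. The sketch below is our own illustration, with hypothetical function names: it represents the result of the inner integrals as a polynomial in t, the upper limit \(1-x_1-\cdots -x_{i-1}\) available to the current variable, and integrates outward one variable at a time.

```python
import math
from fractions import Fraction

def integrate_from_zero(poly):
    """Antiderivative vanishing at 0 of a polynomial given as [c0, c1, c2, ...]."""
    return [Fraction(0)] + [c / (k + 1) for k, c in enumerate(poly)]

def simplex_integral(n):
    """Exact value of the (n-1)-fold nested integral with unit integrand.

    Integrating x_i from 0 to t a function of (t - x_i) is the same as
    integrating that function of u = t - x_i from 0 to t, so each nested
    integration is one antiderivative of the current polynomial in t.
    """
    poly = [Fraction(1)]      # the integrand is 1
    for _ in range(n - 1):    # innermost variable first, then outward
        poly = integrate_from_zero(poly)
    return sum(poly)          # evaluate at t = 1, the upper limit of the x_1 integral

for n in range(1, 7):
    print(n, simplex_integral(n), Fraction(1, math.factorial(n - 1)))
```

After k integrations the polynomial is \(t^k/k!\), so the value at \(t=1\) for \(k=n-1\) is \(1/(n-1)!\), in agreement with the integral table.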

An alternative expression of the integral table (55) can be derived by performing a change of variables. If we change variables in Eq. (56) as

then the integral becomes

where \(J\left[ \frac{\partial (x_1,x_2,\ldots ,x_{n-1})}{\partial (u_1,u_2,\ldots ,u_{n-1})}\right]\) is the Jacobian for \(n-1\) variables. The Jacobian matrix of that transformation always takes the form of an upper triangular matrix

due to the following identity

The Jacobian can be evaluated straightforwardly because the determinant of an upper triangular matrix is the product of its diagonal components:

By inserting the Jacobian in Eq. (64) into the integral in Eq. (61), we obtain an alternative expression of the integral table (55)

Additionally, let us perform another change of variables in Eq. (65) as

The transformation yields

Then we can express the integral (67) by making use of the Heaviside step function \(\theta (t)\) defined in Eq. (19) as

which can be computed by substituting \(a_i=1\) and \(\alpha _i=1\) in the Feynman parameterization (40). Remarkably, the integral (68) appears in various fields of physics. The time-ordered product appearing in the Dyson series representation for the Schwinger–Dyson equation for the time-dependent perturbation theory [22, 23, 41, 42] indeed has the same integral in Eq. (68). Equation (32) of Dyson [22] and equation (4) of Dyson [23] are the earliest examples. The path-ordered exponential of quantum field theory has the same mathematical structure. Readers are referred to p 85 of Peskin and Schroeder [10] for more detail.

It is worthwhile to mention that the integral (55) can be computed straightforwardly by a combinatorial argument. First of all, the \((n-1)\)-fold multiple integral is unity if we set the limits of every \(x_i\) integral as \(x_i\in [0,1]\). All of the variables \(x_i\) can be treated as distinct because any configuration in which two or more variables are equal has vanishing integral measure and does not contribute to the integral. Thus, there are \((n-1)!\) permutations in ordering the \((n-1)\) distinct numbers. The product of the Heaviside step functions in the last line of Eq. (68) selects only one of these orderings, which reflects the fact that only a fraction \(1/(n-1)!\) of unity contributes to the integral.
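The combinatorial argument lends itself to a simple Monte Carlo illustration. The following is a rough sketch under stated assumptions: points are drawn uniformly in the unit cube, the decreasing ordering is picked arbitrarily as the one selected by the step functions (any fixed ordering gives the same fraction), and the function name, sample size, and seed are hypothetical choices.

```python
import math
import random

def ordered_fraction(dim, samples=200_000, seed=0):
    """Fraction of uniform points in [0,1]^dim whose coordinates are in decreasing order."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(samples):
        point = [rng.random() for _ in range(dim)]
        if all(point[i] >= point[i + 1] for i in range(dim - 1)):
            hits += 1
    return hits / samples

dim = 3  # corresponds to n - 1 = 3, that is, n = 4 Feynman parameters
estimate = ordered_fraction(dim)
expected = 1.0 / math.factorial(dim)

print(estimate, expected)
```

The estimate fluctuates around \(1/3!\) with a statistical error of order \(10^{-3}\) for this sample size.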

5.2 Multivariate beta function

Let us consider a more general case of the master relation (53) for the product of a sequence with an identity sequence \({\varvec{a}}=(1,\ldots ,1)\) of n elements with non-trivial \(\alpha _i\):

where \(N=\sum _{i} \alpha _{i}\). In that case, we obtain the following integral table

which is the multivariate beta function. When \(n=2\), the integral becomes the beta function

When \(n=3\), the integral is identical to the one appearing in the parametrization of the surface integral on a triangle in the barycentric coordinate system as is shown in references [43, 44]

For example, the integral table given in equation (26) in Ref. [43] corresponding to the surface integral over a triangle in the barycentric coordinate is nothing but the case \(n=3\) and \((\alpha _1,\alpha _2,\alpha _3)=(2,1,1)\) of the integral table (70)

for \(i=a,b,c\). The integral table given in appendix A in Ref. [43] can also be derived from the integral table in Eq. (70) by applying the binomial expansion

Here, the integral over \(\kappa _i\) in the second line is the case in which \(n=3\) and \((\alpha _1,\alpha _2,\alpha _3)=(p-j+1,q-k+1,1)\).
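The \(n=3\) case of the multivariate beta function can be verified numerically. The sketch below is our own illustration under stated assumptions: it takes the integral table to have the form \(\int {\text{d}}^3{\varvec{x}}\,\delta (1-\sum _i x_i)\prod _i x_i^{\alpha _i-1}=\prod _i\Gamma [\alpha _i]/\Gamma [N]\), and the function names and midpoint quadrature are hypothetical choices.

```python
import math

def simplex_moment(alphas, steps=400):
    """Midpoint estimate of  int d^3x delta(1 - x1 - x2 - x3) * prod_i x_i**(alpha_i - 1).

    The delta function removes x3. The triangle x1 + x2 <= 1 is mapped onto the
    unit square through x1 = u, x2 = (1 - u) * v, whose Jacobian is (1 - u).
    """
    a1, a2, a3 = alphas
    h = 1.0 / steps
    total = 0.0
    for i in range(steps):
        u = (i + 0.5) * h
        for j in range(steps):
            v = (j + 0.5) * h
            x1 = u
            x2 = (1.0 - u) * v
            x3 = (1.0 - u) * (1.0 - v)  # equals 1 - x1 - x2
            total += (1.0 - u) * x1 ** (a1 - 1) * x2 ** (a2 - 1) * x3 ** (a3 - 1)
    return total * h * h

def multivariate_beta(alphas):
    """Product of Gamma(alpha_i) divided by Gamma of the sum of the alpha_i."""
    numerator = 1.0
    for alpha in alphas:
        numerator *= math.gamma(alpha)
    return numerator / math.gamma(sum(alphas))

triangle_numeric = simplex_moment((2, 1, 1))   # the case quoted for the triangle integral
triangle_exact = multivariate_beta((2, 1, 1))
other_numeric = simplex_moment((3, 2, 1))
other_exact = multivariate_beta((3, 2, 1))

print(triangle_numeric, triangle_exact)
print(other_numeric, other_exact)
```

For \((\alpha _1,\alpha _2,\alpha _3)=(2,1,1)\) the closed form is \(\Gamma [2]\Gamma [1]\Gamma [1]/\Gamma [4]=1/6\).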

6 Conclusion

We have investigated in detail the analytic properties hidden in the derivation of the Schwinger and Feynman parametrizations. Although they were originally developed for combining the Feynman propagator factors in loop integrals in perturbative quantum field theory, the formulation is pedagogically useful far beyond the specific applications to quantum field theoretical computations. The extensive employment of the analyticity of a complex function in the derivation provides a wide variety of exemplary problems with which students can deepen their understanding of complex variables.

The tiny imaginary part \(+i\varepsilon\) appearing in the Feynman propagator plays a crucial role in restricting the formulation of the amplitude so that it respects Huygens’ principle for the propagating waves of fields. This is not a mere ad hoc prescription but a rigorous use of a mathematical identity based on the Cauchy integral theorem. The significance of the presence of the infinitesimally small positive number has been investigated through our evaluations of contour integrals. Mathematically, the parameter provides a stringent condition under which our analytic expression converges to a physical value.

An application of the n-dimensional Fourier transform of multiple Heaviside step functions has led to the Schwinger parametrization of the product of numbers. By multiplying by a unity that is parametrized by a convolution of the Dirac delta function, we have demonstrated a straightforward way of changing variables, which is the gateway to the Feynman parametrization. Such a living example of the Dirac delta function in coordinate transformation need not be known exclusively to particle physicists: undergraduate students can also enjoy the tool in various practical computations.

We have made use of the master formula of the Schwinger parametrization of the product of a sequence to compute elementary expressions that are pedagogically useful. We have shown that the combinatorial factor is closely related to the time-ordered product by a brute-force evaluation of an n-dimensional integral over Feynman parameters whose sum is unity. The multivariate beta function derived from the parametrization is particularly useful in carrying out a surface integral in the barycentric coordinate system. In conclusion, we have demonstrated through an explicit derivation of the Schwinger and Feynman parametrizations that the formulation contains a rich spectrum of analytic techniques.

References

S. Schweber, QED and the Men Who Made It: Dyson, Feynman, Schwinger and Tomonaga (Princeton University Press, Princeton, 1994)

J. Schwinger, On quantum-electrodynamics and the magnetic moment of the electron. Phys. Rev. 73, 416 (1948). https://doi.org/10.1103/PhysRev.73.416

T. Aoyama, T. Kinoshita, M. Nio, Theory of the anomalous magnetic moment of the electron. Atoms 7, 28 (2019). https://doi.org/10.3390/atoms7010028

R.P. Feynman, Space-time approach to quantum electrodynamics. Phys. Rev. 76, 769 (1949). https://doi.org/10.1103/PhysRev.76.769

G.F. Roach, Green’s Functions, 2nd edn. (Cambridge University Press, Cambridge, 1982)

J.D. Bjorken, S.D. Drell, Relativistic Quantum Mechanics (McGraw-Hill, New York, 1964)

P.A.M. Dirac, The quantum theory of the electron. Proc. R. Soc. Lond. A 117, 610 (1928). https://doi.org/10.1098/rspa.1928.0023

P.A.M. Dirac, The quantum theory of the electron. Part II. Proc. R. Soc. Lond. A 118, 351 (1928). https://doi.org/10.1098/rspa.1928.0056

M.D. Schwartz, Quantum Field Theory and the Standard Model (Cambridge University Press, Cambridge, 2014), pp.128–130

M.E. Peskin, D.V. Schroeder, An Introduction to Quantum Field Theory (CRC Press, Boca Raton, 2018)

J.W. Brown, R.V. Churchill, Complex Variables and Applications, 8th edn. (McGraw-Hill Higher Education, Boston, 2009)

S. Ponnusamy, H. Silverman, Complex Variables with Applications (Birkhäuser, Boston, 2006)

R.E. Greene, S.G. Krantz, Function Theory of One Complex Variable, 3rd edn. (American Mathematical Society, Providence, 2006)

J. Schwinger, On gauge invariance and vacuum polarization. Phys. Rev. 82, 664 (1951). https://doi.org/10.1103/PhysRev.82.664

W. Gordon, Der Comptoneffekt nach der Schrödingerschen Theorie. Z. Phys. 40, 117 (1926). https://doi.org/10.1007/BF01390840

J.B. Fourier, Théorie analytique de la chaleur (Firmin Didot, père et fils, Paris, 1822). [J.B. Fourier, The Analytical Theory of Heat, translated from French (1878) to English by A. Freeman (Cambridge University Press, Cambridge, 2009)]

H.S. Carslaw, Introduction to the Theory of Fourier’s Series and Integrals, 2nd edn. (Macmillan, London, 1921)

B.G. Osgood, Lectures on the Fourier Transform and Its Applications (American Mathematical Society, Providence, 2019)

U.-R. Kim, S. Cho, W. Han, J. Lee, Investigation of infinitely rapidly oscillating distributions. Eur. J. Phys. 42, 065807 (2021). https://doi.org/10.1088/1361-6404/ac25d1

G.C. Wick, The evaluation of the collision matrix. Phys. Rev. 80, 268 (1950). https://doi.org/10.1103/PhysRev.80.268

R.P. Feynman, The theory of positrons. Phys. Rev. 76, 749 (1949). https://doi.org/10.1103/PhysRev.76.749

F.J. Dyson, The radiation theories of Tomonaga, Schwinger, and Feynman. Phys. Rev. 75, 486 (1949). https://doi.org/10.1103/PhysRev.75.486

F.J. Dyson, The \(S\) matrix in quantum electrodynamics. Phys. Rev. 75, 1736 (1949). https://doi.org/10.1103/PhysRev.75.1736

J.D. Jackson, Classical Electrodynamics, 3rd edn. (Wiley, New York, 1998)

G. ’t Hooft, M. Veltman, Scalar one-loop integrals. Nucl. Phys. B 153, 365 (1979). https://doi.org/10.1016/0550-3213(79)90605-9

G. Passarino, M. Veltman, One-loop corrections for \(e^+e^-\) annihilation into \(\mu ^+\mu ^-\) in the Weinberg model. Nucl. Phys. B 160, 151 (1979). https://doi.org/10.1016/0550-3213(79)90234-7

J.-H. Ee, D.-W. Jung, U.-R. Kim, J. Lee, Combinatorics in tensor-integral reduction. Eur. J. Phys. 38, 025801 (2017). https://doi.org/10.1088/1361-6404/aa54ce

V.A. Smirnov, Evaluating Feynman Integrals (Springer, Heidelberg, 2004)

V.A. Smirnov, Feynman Integral Calculus (Springer, Heidelberg, 2006)

V.A. Smirnov, Analytic Tools for Feynman Integrals (Springer, Heidelberg, 2012)

P.-S. Laplace, Théorie analytique des probabilités (Ve. Courcier, Paris, 1812). [Translated by A.I. Dale, Philosophical Essay on Probabilities (Springer, New York, 1995)]

J.L. Schiff, The Laplace Transform Theory and Applications (Springer, Heidelberg, 1991)

P.A.M. Dirac, The Principles of Quantum Mechanics, 1st edn. (Clarendon Press, Oxford, 1930)

I. Halperin, L. Schwartz, Introduction to the Theory of Distributions (University of Toronto Press, Toronto, 1952)

J.-H. Ee, C. Yu, J. Lee, Proof of Cramer’s rule with Dirac delta function. Eur. J. Phys. 41, 065002 (2020). https://doi.org/10.1088/1361-6404/abdca9

D. Kim, J.-H. Ee, C. Yu, J. Lee, Derivation of Jacobian formula with Dirac delta function. Eur. J. Phys. 42, 035006 (2021). https://doi.org/10.1088/1361-6404/abdca9

A. Peres, On Schwinger’s parametrization of Feynman graphs. Il Nuovo Cimento 38, 270 (1965). https://doi.org/10.1007/BF02750455

I. González, I. Schmidt, Recursive method to obtain the parametric representation of a generic Feynman diagram. Phys. Rev. D 72, 106006 (2005). https://doi.org/10.1103/PhysRevD.72.106006

L.C. Albuquerque, C. Farina, S. Rabello, Schwinger’s method and the computation of determinants. Am. J. Phys. 66, 524 (1998). https://doi.org/10.1119/1.18894

J. Schwinger, Quantum electrodynamics. III. The electromagnetic properties of the electron—radiative corrections to scattering. Phys. Rev. 76, 790 (1949). https://doi.org/10.1103/PhysRev.76.790

J. Schwinger, On Green’s functions of quantized fields. 1. Proc. Natl. Acad. Sci. 37, 452 (1951). https://doi.org/10.1073/pnas.37.7.452

J. Schwinger, On Green’s functions of quantized fields. 2. Proc. Natl. Acad. Sci. 37, 455 (1951). https://doi.org/10.1073/pnas.37.7.455

U.-R. Kim, D.-W. Jung, C. Yu, W. Han, J. Lee, Inertia tensor of a triangle in barycentric coordinates. J. Korean Phys. Soc. 79, 589 (2021). https://doi.org/10.1007/s40042-021-00255-3

U.-R. Kim, W. Han, D.-W. Jung, J. Lee, C. Yu, Electrostatic potential of a uniformly charged triangle in barycentric coordinates. Eur. J. Phys. 42, 4 (2021). https://doi.org/10.1088/1361-6404/abf89e

Acknowledgements

As members of the Korea Pragmatist Organization for Physics Education (KPOP\({\fancyscript{E}}\)), the authors thank the remaining members of KPOP\({\fancyscript{E}}\) for useful discussions. This work is supported in part by the National Research Foundation of Korea (NRF) grant funded by the Korea government (MSIT) under Contract no. NRF-2020R1A2C3009918. The work is also supported in part by the National Research Foundation of Korea (NRF) under the BK21 FOUR program at Korea University, Initiative for science frontiers on upcoming challenges.

Jungil Lee: Director of the Korea Pragmatist Organization for Physics Education (KPOPE).

Appendices

Appendix A: Feynman propagator

In this appendix, we provide the details of the integration of the Feynman propagator given in Eq. (7) over \(p^0\) to obtain the result in Eq. (9). The Feynman propagator for a scalar field of four-momentum \(p^{\mu }\) given in Eq. (7) is

where the integration for every component is over \((-\infty ,\infty )\) and \(\varepsilon\) is an infinitesimally small positive real number. We first factorize the \(p^{0}\)-dependent contribution in the integral as

where

Here, we have used the identity

In order to evaluate the integration of the propagator over \(p^0\), we introduce the following complex integrals over z

where the contours \(C_{0}\), \(C_{+}\), and \(C_{-}\) shown in Fig. 4 are defined by

If \(x^0>y^0\), then the \(p_0\) integral in Eq. (75) can be evaluated by the following complex integral \(I_{x^0>y^0}\) along the closed contour \(C_{x^0>y^0}\equiv C_0 \cup C_-\):

As is shown in Fig. 4, the closed contour \(C_{x^0>y^0}\) encloses the simple pole at \(z=\sqrt{m^2+{\varvec{p}}^2}-i\varepsilon\) in the lower half-plane. By applying Cauchy’s residue theorem, we compute \(I_{x^0>y^0}\) in Eq. (79) as

Here, the negative sign in the second equality originates from the orientation of the contour \(C_{x^0>y^0}\) that is clockwise. An upper bound of \(|I_{-}|\) can be found as

where

Here, we have made use of the inequality

In the limit \(R\rightarrow \infty\), the denominator of the upper bound in Eq. (84) can be expanded in powers of 1/R as

which implies that

Consequently, Eq. (80) reduces to

By substituting the result in Eq. (86) for the \(p^0\) integral in Eq. (75), we obtain the expression of the propagator for \(x^0>y^0\) as

where \(E_{{\varvec{p}}}\equiv \sqrt{m^2+{\varvec{p}}^2}\).

In a similar manner, we introduce the complex integral \(I_{x^0<y^0}\) along the closed contour \(C_{x^0<y^0}\equiv C_0 \cup C_+\) to evaluate the \(p_0\) integral in Eq. (75) for \(x^0<y^0\) as

As is shown in Fig. 4, the closed contour \(C_{x^0<y^0}\) encloses the simple pole at \(z=-\sqrt{m^2+{\varvec{p}}^2}+i\varepsilon\) in the upper half-plane. According to Cauchy’s residue theorem, \(I_{x^0<y^0}\) in Eq. (88) can be computed as

As we did in Eq. (84), we evaluate an upper bound of \(|I_{+}|\)

where

In the limit \(R\rightarrow \infty\), we have

Hence,

and Eq. (89) becomes

By substituting the result in Eq. (94) for the \(p^0\) integral in Eq. (75), we obtain the expression of the propagator for \(x^0<y^0\) as

Finally, we obtain the expression in Eq. (9) by combining the results in Eqs. (87) and (95),

Here, the zeroth component of the four-momentum \(p^{\mu }\) is now fixed as \(p^{0}=E_{{\varvec{p}}}=\sqrt{{\varvec{p}}^2+m^2}\).
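The outcome of the two contour evaluations can be cross-checked by direct numerical integration along the real \(p^0\) axis. The sketch below is our own and rests on stated assumptions: it takes the \(p^0\) integrand in the convention of Peskin and Schroeder [10], \(i\,e^{-ip^0 t}/[(p^0)^2-E_{{\varvec{p}}}^2+i\varepsilon ]\) with \(t=x^0-y^0\), keeps \(\varepsilon\) finite so that the poles sit at \(\pm \sqrt{E_{{\varvec{p}}}^2-i\varepsilon }\), and truncates the integral at a large cutoff. The function names and numerical parameters are hypothetical.

```python
import cmath
import math

def p0_integral(t, energy, eps, cutoff=200.0, steps=80_000):
    """Midpoint estimate of  int dp0/(2 pi)  i * exp(-i p0 t) / (p0**2 - energy**2 + i eps)."""
    h = 2.0 * cutoff / steps
    total = 0j
    for k in range(steps):
        p0 = -cutoff + (k + 0.5) * h
        total += 1j * cmath.exp(-1j * p0 * t) / (p0 * p0 - energy * energy + 1j * eps)
    return total * h / (2.0 * math.pi)

def residue_result(t, energy, eps):
    """Residue-theorem value: exp(-i omega |t|) / (2 omega), omega = sqrt(energy**2 - i eps)."""
    omega = cmath.sqrt(energy * energy - 1j * eps)  # Re(omega) > 0 and Im(omega) < 0
    return cmath.exp(-1j * omega * abs(t)) / (2.0 * omega)

energy, eps = 1.0, 0.5  # illustrative values; eps is finite here, not infinitesimal

later_numeric = p0_integral(1.0, energy, eps)      # x^0 > y^0: contour closed below
later_exact = residue_result(1.0, energy, eps)
earlier_numeric = p0_integral(-1.5, energy, eps)   # x^0 < y^0: contour closed above
earlier_exact = residue_result(-1.5, energy, eps)

print(later_numeric, later_exact)
print(earlier_numeric, earlier_exact)
```

As \(\varepsilon \rightarrow 0^+\), \(\omega\) tends to \(E_{{\varvec{p}}}\) and the closed form tends to \(e^{-iE_{{\varvec{p}}}|x^0-y^0|}/(2E_{{\varvec{p}}})\), the factor that multiplies the spatial plane wave for either time ordering.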

Appendix B: Proof of \(I_{\phi }=0\) defined in Eq. (50)

In this appendix, we compute the integral \(I_{\phi }\) defined in Eq. (50):

In order to evaluate an upper bound of \(|I_{\phi }|\), we first factorize the integrand as

where

Then, an upper bound of \(|I_{\phi }|\) can be determined as

In deriving the second inequality in Eq. (100), we have used the following inequality

Note that the inequality in Eq. (101) also holds for negative \(\phi _0\) when \(\phi \in [\phi _0,0]\). Because \(|\phi _{0}|<\frac{\pi }{2}\), the R-dependent part of the upper bound in Eq. (100) always vanishes

Consequently,

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Kim, UR., Cho, S. & Lee, J. The art of Schwinger and Feynman parametrizations. J. Korean Phys. Soc. 82, 1023–1039 (2023). https://doi.org/10.1007/s40042-023-00764-3
