Abstract

We consider the normal mode problem of a vibrating string loaded with n identical, equally spaced beads, which involves an eigenvalue problem. Unlike the conventional approach, which solves the difference equation for the components of the eigenvector, we modify the eigenvalue equation by introducing matrix-valued Lagrange undetermined multipliers, which regularize the secular equation and make the eigenvalue equation non-singular. The eigenvector can then be obtained by multiplying the regularized eigenvalue equation by the inverse of the regularized matrix. We find that, in the limit where the constraint equation is imposed, this inverse matrix multiplied by the determinant of the regularized matrix is nothing but the adjugate of the original matrix in the secular determinant. The components of the adjugate matrix can be represented in simple factorized forms, and one can read off the eigenvector directly from the adjugate matrix. We expect this new method to be applicable to other eigenvalue problems involving more general tridiagonal matrices that appear in classical mechanics or quantum physics.


1 Introduction

The vibration of a loaded string is one of the most advanced problems in the classical mechanics curriculum that involve an eigenvalue problem, and it requires various mathematical techniques to attack. It is a gateway to quantum mechanics, because its discrete eigenvalues have a mathematical structure analogous to that of the quantum mechanical infinite potential well. The problem is also a gateway to classical and quantum field theories, because the continuum limit \(d\rightarrow 0\) and \(n\rightarrow \infty \) with \(d(n+1)=L\) fixed leads to the concept of fields. Here, n is the number of beads, d is the equal spacing, and L is the length of the string.

In classical mechanics courses, the vibrating string is introduced after the coupled harmonic oscillator [1]. While the mathematical structures of the two problems are essentially the same, ordinary textbooks approach them rather differently. For a lower dimensional problem like the coupled oscillator, one first finds an eigenvalue as a solution of the secular equation \(\mathscr {D}et[\mathbb {A}-\lambda \mathbb {1}]=0\) and then solves the eigenvalue equation \((\mathbb {A}-\lambda \mathbb {1})\boldsymbol{\psi }^{(\lambda )}=\mathbb {0}\) by Gaussian elimination to find the relations among the components of the eigenvector. Here, \(\mathbb {A}\) is an \(n\times n\) square matrix determined by the system, \(\lambda \) is an eigenvalue, and \(\boldsymbol{\psi }^{(\lambda )}\) is the corresponding eigenvector. This approach is not adequate for a string loaded with n beads, because computations with an \(n\times n\) matrix for a large or arbitrary integer n are too awkward. Thus, the method of difference equations is employed instead. In fact, the difference equation for the components of each eigenvector is the same as that for the secular determinant \(\mathscr {D}et[\mathbb {A}-\lambda \mathbb {1}]\), except for the boundary conditions [2, 3]. The three-term form of the difference equation stems from the fact that \(\mathbb {A}\) is a tridiagonal matrix, because each bead interacts only with its nearest neighbors on the left and right. The equation can therefore be solved in a manner similar to that used to solve a second-order linear ordinary differential equation [4]. This conventional approach determines every component of the eigenvector from the boundary conditions that the end points are fixed, and these boundary conditions simultaneously determine the eigenvalues without solving the secular equation \(\mathscr {D}et[\mathbb {A}-\lambda \mathbb {1}]=0\). In contrast, the eigenvalues of a lower dimensional problem like the coupled harmonic oscillator are usually found by solving the secular equation first.

In this paper, we develop a single strategy to solve these two essentially identical problems. By employing the Lagrange-undetermined-multiplier approach recently developed in Ref. [5], we modify the eigenvalue equation into \((\mathbb {A}-\lambda \mathbb {1}+\alpha \mathbb {1})\boldsymbol{\psi }^{(\lambda )}=\alpha \mathbb {c}\), where \(\alpha \) is a free parameter that regularizes the original matrix \(\mathbb {A}-\lambda \mathbb {1}\) in the secular determinant, so that the regularized matrix \(\mathbb {A}-\lambda \mathbb {1}+\alpha \mathbb {1}\) is no longer singular. The identity matrix \(\mathbb {1}\) and an arbitrary column vector \(\mathbb {c}\) multiplied by \(\alpha \) are matrix-valued Lagrange undetermined multipliers. The substitution of the constraint equation \(\alpha =0\) is delayed until we have solved the modified equation for the eigenvector \(\boldsymbol{\psi }^{(\lambda )}\). The regularized eigenvalue equation is then solvable by multiplying it by the inverse matrix \((\mathbb {A}-\lambda \mathbb {1}+\alpha \mathbb {1})^{-1}\). We shall find that the regularized secular determinant \(\mathscr {D}_n(\lambda ,\alpha )\equiv \mathscr {D}et[\mathbb {A}-\lambda \mathbb {1}+\alpha \mathbb {1}]\) approaches a linear function of \(\alpha \) in the limit \(\alpha \rightarrow 0\) as long as the system has no degenerate eigenstates, which is the case if every bead is displaced along a single axis. As a result, the eigenvector corresponding to the eigenvalue \(\lambda =\lambda _{i}\) can be expressed as \(\boldsymbol{\psi }^{(\lambda _i)}=\lim _{\alpha \rightarrow 0}\mathscr {D}_n(\lambda _i,\alpha )(\mathbb {A}-\lambda _i\mathbb {1}+\alpha \mathbb {1})^{-1}\mathbb {c}\). We shall find that the matrix in front of \(\mathbb {c}\) is indeed the adjugate \({\text {adj}}(\mathbb {A}-\lambda _{i} \mathbb {1})\) of the original matrix, and this adjugate is always well defined even when \(\mathbb {A}-\lambda _{i} \mathbb {1}\) is singular. Remarkably, every column and every row of the adjugate matrix \({\text {adj}}(\mathbb {A}-\lambda _{i} \mathbb {1})\) is parallel to the eigenvector \(\boldsymbol{\psi }^{(\lambda _i)}\) and to its transpose \(\boldsymbol{\psi }^{(\lambda _i)\text {T}}\), respectively. To our best knowledge, this result is new.

This paper is organized as follows: in Sect. 2, we review the conventional approach to finding the normal modes of a vibrating string. In Sect. 3, we present an alternative approach employing matrix-valued Lagrange undetermined multipliers to solve the problem given in Sect. 2. Conclusions are given in Sect. 4, and rigorous derivations of the determinants and of the inverse matrix appearing in the regularized eigenvalue problem are given in the appendices.

2 Conventional approach

In this section, we review the conventional approach to find the normal modes of a loaded string that can be found in textbooks such as Refs. [1,2,3] on classical mechanics. Consider a vibrating string of length \(L=(n+1)d\) and tension \(\tau \) loaded with n identical beads of mass m with equal spacing d. Here, we restrict ourselves to the simplest case in which only the transverse motion along a single axis is allowed and the deviation from the equilibrium position of the kth bead at the longitudinal coordinate \(x_k=kd\) is denoted by \(q_k\) for \(k=1\) through n, as shown in Fig. 1.

Fig. 1 A vibrating string of length \(L = (n + 1)d\) fixed at both ends with tension \(\tau \) and loaded with n identical beads of mass m with equal spacing d. \(q_k\) represents the transverse displacement from the equilibrium position of the kth bead at the longitudinal position \(x_k=k d\) for \(k=1\) through n.

We require both ends \(q_0\) and \(q_{n+1}\) to be fixed: \(q_0=q_{n+1}=0\). In the continuum limit \(q_{k}\rightarrow q(x)\), where q(x) and x are the continuum counterparts of \(q_{k}\) and the longitudinal coordinate \(x_k\) for the kth bead, respectively, the difference equation collapses into a second-order ordinary differential equation for q(x), and the above requirement becomes the Dirichlet boundary condition \(q(0)=q(L)=0\).

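The kinetic energy T of the system of particles is given by

$$\begin{aligned} T=\frac{1}{2}\dot{\mathbb {q}}^{\text {T}}\mathbb {M}\dot{\mathbb {q}}, \end{aligned}\tag{1}$$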

where \(\mathbb {M}=m\mathbb {1}\) is the mass matrix, \(\mathbb {1}=(\delta _{ij})\) is the \(n\times n\) identity matrix, and \(\mathbb {q}=(q_1\,q_2\,\ldots \,q_n)^{\text {T}}\). Here, \(\delta _{ij}\) is the Kronecker delta. The potential energy of the string segment between the kth and the \((k+1)\)th beads is \(\frac{1}{2}\tau (q_{k+1}-q_k)^2/d\) when the gravitational potential energy is neglected. This approximation is valid in the limit \(|q_{k+1}-q_k|\ll d\) for all k from \(k=0\) through n. Thus, the potential energy of the string is

$$\begin{aligned} U=\frac{\tau }{2d}\sum _{k=0}^{n}(q_{k+1}-q_k)^2=\frac{\tau }{2d}\,\mathbb {q}^{\text {T}}\mathbb {A}\,\mathbb {q}, \end{aligned}\tag{2}$$

where \(\mathbb {A}=(A_{ij})\) and, for i, \(j=1, \ldots \), n, the ij element of the matrix is given by

$$\begin{aligned} A_{ij}=2\delta _{ij}-\delta _{i,j+1}-\delta _{i+1,j}. \end{aligned}\tag{3}$$

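\(\mathbb {A}\) is manifestly real and symmetric. It is a band matrix whose main diagonal elements are all equal to 2 and whose elements on the first diagonals below and above the main diagonal are all equal to \(-1\). The explicit form of \(\mathbb {A}\) is

$$\begin{aligned} \mathbb {A}=\begin{pmatrix} 2&{}-1&{}0&{}\cdots &{}0\\ -1&{}2&{}-1&{}\ddots &{}\vdots \\ 0&{}-1&{}2&{}\ddots &{}0\\ \vdots &{}\ddots &{}\ddots &{}\ddots &{}-1\\ 0&{}\cdots &{}0&{}-1&{}2 \end{pmatrix}. \end{aligned}\tag{4}$$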

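The Lagrangian of the system is \(L=T-U\), and the corresponding Euler–Lagrange equations are given by

$$\begin{aligned} \mathbb {M}\ddot{\mathbb {q}}+\frac{\tau }{d}\mathbb {A}\,\mathbb {q}=\mathbb {0}, \end{aligned}\tag{5}$$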

where \(\mathbb {0}=(0\,\ldots \,0)^{\text {T}}\) is the null column vector. The equation of motion is a system of linear difference equations. The standard approach to solving such a system of equations can be found, for example, in Refs. [3, 4]. Assuming the separation of variables, one can introduce a trial solution in which the space and time dependences are completely factored. The normal mode with the normal frequency \(\omega \) is then substituted as a trial solution as

$$\begin{aligned} \mathbb {q}(t)=\boldsymbol{\psi }\,{\text {e}}^{i\omega t}, \end{aligned}\tag{6}$$

where the eigenvector \(\boldsymbol{\psi }\) for the normal mode with frequency \(\omega \) is independent of time. Then, we end up with the eigenvalue equation

$$\begin{aligned} \frac{\tau }{d}\mathbb {A}\boldsymbol{\psi }=m\omega ^2\boldsymbol{\psi }. \end{aligned}\tag{7}$$

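We rescale the equation into

$$\begin{aligned} (\mathbb {A}-\lambda \mathbb {1})\boldsymbol{\psi }^{(\lambda )}=\mathbb {0} \end{aligned}\tag{8}$$

by introducing the dimensionless eigenvalue \(\lambda \) as

$$\begin{aligned} \lambda =\frac{md\omega ^2}{\tau }. \end{aligned}\tag{9}$$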

Note that we have introduced a superscript \((\lambda )\) in \(\boldsymbol{\psi }^{(\lambda )}\) to indicate that the eigenvector is for the normal mode corresponding to the dimensionless eigenvalue \(\lambda \). The eigenvalue equation in Eq. (8) is an indeterminate linear equation, because \(\mathbb {A}-\lambda \mathbb {1}\) is singular: \(\mathscr {D}et[\mathbb {A}-\lambda \mathbb {1}]=0\). If \(\mathbb {A}-\lambda \mathbb {1}\) were not singular, \(\mathscr {D}et[\mathbb {A}-\lambda \mathbb {1}]\ne 0\), then \((\mathbb {A}-\lambda \mathbb {1})^{-1}\) would exist and \(\boldsymbol{\psi }^{(\lambda )}=(\mathbb {A}-\lambda \mathbb {1})^{-1}\mathbb {0}=\mathbb {0}\), and this trivial solution is not an eigenvector. The eigenvalue equation (8) reduces to the following difference equation for the components \(\psi _r^{(\lambda )}\) of the eigenvector \(\boldsymbol{\psi }^{(\lambda )}\):

$$\begin{aligned} -\psi _{r-1}^{(\lambda )}+(2-\lambda )\psi _r^{(\lambda )}-\psi _{r+1}^{(\lambda )}=0, \end{aligned}\tag{10}$$

where r runs from 1 through n. Because \(q_0=q_{n+1}=0\) at any time t, we have set \(\psi _0^{(\lambda )}=\psi _{n+1}^{(\lambda )}=0\).
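As a quick consistency check of the matrix form, here is a minimal NumPy sketch (NumPy is our choice, and `loaded_string_matrix` is a hypothetical helper name, not from the paper). It builds \(\mathbb {A}\) for a small n, confirms that \(\mathbb {q}^{\text {T}}\mathbb {A}\mathbb {q}\) reproduces the sum of squared stretches \(\sum _{k=0}^{n}(q_{k+1}-q_k)^2\) with \(q_0=q_{n+1}=0\), and confirms that the rth component of \((\mathbb {A}-\lambda \mathbb {1})\boldsymbol{\psi }\) is the three-term combination \(-\psi _{r-1}+(2-\lambda )\psi _r-\psi _{r+1}\) appearing in the difference equation (10).

```python
import numpy as np


def loaded_string_matrix(n):
    """Hypothetical helper: A with 2 on the main diagonal and -1 on the first off-diagonals."""
    return 2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)


n = 6
A = loaded_string_matrix(n)
rng = np.random.default_rng(0)

# Potential energy check: sum_{k=0}^{n} (q_{k+1} - q_k)^2 with fixed ends equals q^T A q.
q = rng.standard_normal(n)
q_padded = np.concatenate(([0.0], q, [0.0]))        # q_0 = q_{n+1} = 0
stretch_sum = np.sum(np.diff(q_padded) ** 2)
quadratic_form = q @ A @ q
print(np.isclose(stretch_sum, quadratic_form))      # True

# Row r of (A - lam 1) psi is -psi_{r-1} + (2 - lam) psi_r - psi_{r+1} with psi_0 = psi_{n+1} = 0.
lam = 0.7
psi = rng.standard_normal(n)
psi_padded = np.concatenate(([0.0], psi, [0.0]))
three_term = -psi_padded[:-2] + (2.0 - lam) * psi_padded[1:-1] - psi_padded[2:]
print(np.allclose((A - lam * np.eye(n)) @ psi, three_term))  # True
```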

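The standard method to solve the difference equation (10) can be found, for example, in Ref. [4]: one can substitute the trial solution \(\psi _r^{(\lambda )}=\kappa ^r\) with \(\kappa \ne 0\) into Eq. (10) to find that

$$\begin{aligned} \kappa ^2-(2-\lambda )\kappa +1=0. \end{aligned}\tag{11}$$

The solutions for the parameter \(\kappa \) are

$$\begin{aligned} \kappa _\pm =\frac{2-\lambda \pm \sqrt{\lambda (\lambda -4)}}{2}. \end{aligned}\tag{12}$$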

Then, the solution can be expressed as a linear combination \(\psi _r^{(\lambda )}=a_1\kappa _+^r+a_2\kappa _-^r\), where \(a_1\) and \(a_2\) are constants. As is shown in Ref. [4], the difference equation has only the trivial solution \(\psi _r^{(\lambda )}=0\) if \(\kappa \) is real: \(\lambda \ge 4\) or \(\lambda \le 0.\)
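Before going through the three cases, the character of the two roots can be checked numerically. In this NumPy sketch (`kappa_roots` is an illustrative helper, not from the paper), the roots of the quadratic obtained by substituting \(\psi _r^{(\lambda )}=\kappa ^r\) into Eq. (10) come out real with product 1 for \(\lambda \) outside \([0,4]\), and as a complex-conjugate pair on the unit circle with real part \(1-\lambda /2\) for \(0<\lambda <4\).

```python
import numpy as np


def kappa_roots(lam):
    """Roots of kappa**2 - (2 - lam) * kappa + 1 = 0 (illustrative helper)."""
    return np.roots([1.0, -(2.0 - lam), 1.0])


# Outside [0, 4]: two real roots whose product is 1 (one inside, one outside the unit circle).
outside = {lam: kappa_roots(lam) for lam in (-1.0, 5.0)}
# Inside (0, 4): a complex-conjugate pair on the unit circle, kappa = exp(+-i theta).
inside = {lam: kappa_roots(lam) for lam in (0.5, 2.0, 3.5)}

for lam, k in outside.items():
    print(lam, np.isreal(k).all(), np.prod(k).real)
for lam, k in inside.items():
    # Re(kappa) = cos(theta) should equal 1 - lam / 2.
    print(lam, np.abs(k), k[0].real, 1.0 - lam / 2.0)
```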

(i) If \(\lambda > 4\) or \(\lambda < 0\), then \(\kappa _+\) and \(\kappa _-\) are real and distinct. In addition, the boundary conditions \(\psi ^{(\lambda )}_0=\psi _{n+1}^{(\lambda )}=0\) require that

$$\begin{aligned} \begin{pmatrix} 1&{}1\\ \kappa _+^{n+1}&{} \kappa _-^{n+1} \end{pmatrix} \begin{pmatrix} a_1\\ a_2\end{pmatrix} = \begin{pmatrix} 0\\ 0\end{pmatrix} . \end{aligned}\tag{13}$$

Because \(\kappa _+\kappa _-=1\) and the two roots are real and distinct, they have the same sign and \(|\kappa _+|\ne |\kappa _-|\), so the determinant \(\kappa _-^{n+1}-\kappa _+^{n+1}\) of the coefficient matrix does not vanish. Hence, \(a_1=a_2=0\), and the solution is trivial: \(\psi _r^{(\lambda )}=0\). Thus, any \(\lambda \) in the region \(\lambda <0\) or \(\lambda >4\) is not an eigenvalue.

(ii) If \(\lambda =0\) or 4, then the two roots coincide, \(\kappa _+=\kappa _-\equiv \kappa \), with \(\kappa =1\) for \(\lambda =0\) and \(\kappa =-1\) for \(\lambda =4\), and the general solution is \(\psi _r^{(\lambda )}=(b_1+b_2 r)\kappa ^r\). The boundary conditions \(\psi _0^{(\lambda )}=\psi _{n+1}^{(\lambda )}=0\) require \(b_1=0\) and \(b_2(n+1)\kappa ^{n+1}=0\), so that \(\psi _r^{(\lambda )}=0\) for any r. Because this is a trivial solution, neither \(\lambda =0\) nor \(\lambda =4\) is an eigenvalue.

(iii) If \(0< \lambda <4\), then the roots of Eq. (12) are complex conjugates of unit modulus. Substituting the trial solution

$$\begin{aligned} \psi _r^{(\lambda )}=d_1\,{\text {e}}^{+ir\theta }+d_2\,{\text {e}}^{-ir\theta } \end{aligned}\tag{14}$$

into the difference equation (10), written as

$$\begin{aligned} \psi _{r+1}^{(\lambda )}+\psi _{r-1}^{(\lambda )}=(2-\lambda )\psi _r^{(\lambda )}, \end{aligned}\tag{15}$$

we find that

$$\begin{aligned} \cos \theta = 1-\frac{\lambda }{2}. \end{aligned}\tag{16}$$

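By imposing the boundary conditions \(\psi _0^{(\lambda )}=\psi _{n+1}^{(\lambda )}=0\), we can determine \(d_1\) and \(d_2\). The condition \(\psi _0^{(\lambda )}=0\) requires that \(d_2=-d_1\):

$$\begin{aligned} \psi _r^{(\lambda )}=d_1\left( {\text {e}}^{+ir\theta }-{\text {e}}^{-ir\theta }\right) =2i\,d_1\sin (r\theta ). \end{aligned}\tag{17}$$

The condition \(\psi _{n+1}^{(\lambda )}=0\) requires that

$$\begin{aligned} \sin [(n+1)\theta ]=0. \end{aligned}\tag{18}$$

Thus, because \(0<\theta <\pi \) for \(0<\lambda <4\), the angle \(\theta \) is determined as

$$\begin{aligned} \theta =\theta _s\equiv \frac{s\pi }{n+1},\quad s=1,2,\ldots ,n. \end{aligned}\tag{19}$$

As a result, we find the n eigenvectors and the corresponding eigenvalues as

$$\begin{aligned} \boldsymbol{\psi }^{(s)}=\left( \psi _1^{(s)}\,\psi _2^{(s)}\,\ldots \,\psi _n^{(s)}\right) ^{\text {T}}, \end{aligned}\tag{20}$$

$$\begin{aligned} \psi _r^{(s)}=\sqrt{\frac{2}{n+1}}\sin \frac{rs\pi }{n+1}, \end{aligned}\tag{21}$$

$$\begin{aligned} \lambda _s=2(1-\cos \theta _s)=4\sin ^2\frac{s\pi }{2(n+1)}. \end{aligned}\tag{22}$$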

Here, the superscript (s) stands for \((\lambda _s)\) and \(\lambda _s\) is a discrete eigenvalue \(\lambda \) that depends on the integer s. From Eq. (22), manifestly, no degeneracy occurs among any of the n eigenstates. The dimensionless eigenvalue \(\lambda _s\) is monotonically increasing as s increases from 1 to n. The dimensionless eigenvalue \(\lambda _s\) has the minimum value \(\lambda _1=4\sin ^2[\frac{1}{2}\pi /(n+1)]\) at \(s=1\) and has the maximum value \(\lambda _n=4\sin ^2[\frac{1}{2}n\pi /(n+1)]\) at \(s=n\). In the continuum limit, they approach the limits \(\lambda _1\rightarrow 0\) and \(\lambda _n\rightarrow 4\).
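The closed-form eigenpairs can be compared against a numerical diagonalization. The sketch below assumes NumPy and the normalization \(\psi _r^{(s)}=\sqrt{2/(n+1)}\sin [rs\pi /(n+1)]\), which makes each eigenvector a unit vector; because numerical eigenvectors are fixed only up to an overall sign, it compares absolute overlaps rather than the vectors themselves.

```python
import numpy as np

n = 8
A = 2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)

s = np.arange(1, n + 1)                          # mode index s = 1, ..., n
r = np.arange(1, n + 1)                          # bead index r = 1, ..., n
lam_analytic = 4.0 * np.sin(s * np.pi / (2 * (n + 1))) ** 2
# Column s - 1 holds psi^{(s)}_r = sqrt(2/(n+1)) sin(r s pi / (n+1)) (assumed normalization).
psi_analytic = np.sqrt(2.0 / (n + 1)) * np.sin(np.outer(r, s) * np.pi / (n + 1))

lam_numeric, vecs = np.linalg.eigh(A)            # eigenvalues in ascending order
print(np.all(np.diff(lam_analytic) > 0))         # strictly increasing, hence no degeneracy: True
print(np.allclose(lam_analytic, lam_numeric))    # True
# Numerical eigenvectors are fixed only up to an overall sign, so compare |overlaps|.
print(np.allclose(np.abs(np.sum(psi_analytic * vecs, axis=0)), 1.0))   # True
print(np.allclose(A @ psi_analytic, psi_analytic * lam_analytic))      # True
```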

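The normalization of the eigenvector is set to be

$$\begin{aligned} \boldsymbol{\psi }^{(s)\text {T}}\boldsymbol{\psi }^{(s)}=\sum _{r=1}^{n}\left[ \psi _r^{(s)}\right] ^2=1, \end{aligned}\tag{23}$$

and one can check the orthonormality of the eigenvectors by making use of the trigonometric identity [6] \(\sum _{r=1}^{n}\sin \frac{rs\pi }{n+1}\sin \frac{rs'\pi }{n+1}=\frac{n+1}{2}\delta _{ss'}\) for \(s,s'=1,\ldots ,n\):

$$\begin{aligned} \boldsymbol{\psi }^{(s)\text {T}}\boldsymbol{\psi }^{(s')}=\delta _{ss'}. \end{aligned}\tag{24}$$

Hence, the solution for \(\mathbb {q}\) can be expressed as a linear combination of the eigenvectors

$$\begin{aligned} \mathbb {q}(t)=\sum _{s=1}^{n}\eta _s(t)\,\boldsymbol{\psi }^{(s)}, \end{aligned}\tag{25}$$

where every eigenvector is independent of time and all of the time dependence is contained in the normal coordinates \(\eta _s(t)\) satisfying the equation of motion

$$\begin{aligned} \ddot{\eta }_s+\omega _s^2\eta _s=0,\quad \omega _s=\sqrt{\frac{\tau \lambda _s}{md}}=2\sqrt{\frac{\tau }{md}}\sin \frac{s\pi }{2(n+1)}. \end{aligned}\tag{26}$$

The orthonormal relation in Eq. (24) can be used to project out the normal coordinate

$$\begin{aligned} \eta _s(t)=\boldsymbol{\psi }^{(s)\text {T}}\mathbb {q}(t). \end{aligned}\tag{27}$$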

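If the initial condition of the string is given by \(\mathbb {q}(0)\) and its time derivative \(\dot{\mathbb {q}}(0)\), then the corresponding initial conditions for the normal coordinates are determined as \(\eta _s(0)=\boldsymbol{\psi }^{(s)\text {T}}\mathbb {q}(0)\) and \(\dot{\eta }_s(0)=\boldsymbol{\psi }^{(s)\text {T}}\dot{\mathbb {q}}(0)\).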
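As an illustration of the projection onto normal coordinates, the following sketch evolves a plucked string mode by mode. The values of m, \(\tau \), d, n and the triangular initial profile are arbitrary assumptions chosen for the example, the eigenvectors use the unit normalization \(\sqrt{2/(n+1)}\sin [rs\pi /(n+1)]\), and \(\omega _s\) follows from the rescaling \(\lambda _s=md\omega _s^2/\tau \). The code checks that the reconstructed \(\mathbb {q}(t)\) satisfies \(m\ddot{\mathbb {q}}+(\tau /d)\mathbb {A}\mathbb {q}=\mathbb {0}\) at a sample time.

```python
import numpy as np

# Hypothetical parameters (not from the paper): bead mass, tension, spacing, number of beads.
m, tau, d, n = 0.01, 5.0, 0.1, 5
A = 2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)
s = np.arange(1, n + 1)
r = np.arange(1, n + 1)
lam = 4.0 * np.sin(s * np.pi / (2 * (n + 1))) ** 2
omega = np.sqrt(tau * lam / (m * d))                   # from lambda = m d omega^2 / tau
Psi = np.sqrt(2.0 / (n + 1)) * np.sin(np.outer(r, s) * np.pi / (n + 1))   # columns: psi^{(s)}

print(np.allclose(Psi.T @ Psi, np.eye(n)))             # orthonormality: True

# Initial conditions: a triangular "plucked" profile released from rest.
q0 = 1e-3 * np.minimum(r, n + 1 - r)
v0 = np.zeros(n)
eta0 = Psi.T @ q0                                      # eta_s(0) = psi^{(s)T} q(0)
etadot0 = Psi.T @ v0                                   # d eta_s / dt at t = 0


def eta_of_t(t):
    """Each normal coordinate oscillates independently at its own omega_s."""
    return eta0 * np.cos(omega * t) + etadot0 / omega * np.sin(omega * t)


def q_of_t(t):
    return Psi @ eta_of_t(t)


def qddot_of_t(t):
    return Psi @ (-omega**2 * eta_of_t(t))


t = 0.37
residual = m * qddot_of_t(t) + (tau / d) * (A @ q_of_t(t))   # M q'' + (tau/d) A q with M = m 1
print(np.allclose(q_of_t(0.0), q0), np.allclose(residual, 0.0, atol=1e-10))  # True True
```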

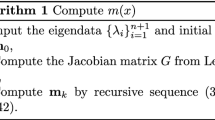

3 Lagrange-multiplier approach

In this section, we adopt the Lagrange-undetermined-multiplier approach developed in Ref. [5] instead of the conventional one described in the previous section. By introducing a parameter \(\alpha \) that eventually vanishes, we modify the indeterminate linear equation in Eq. (8) as

$$\begin{aligned} (\mathbb {A}-\lambda \mathbb {1}+\alpha \mathbb {1})\boldsymbol{\psi }^{(\lambda )}=\alpha \mathbb {c}, \end{aligned}\tag{29}$$

where \(\mathbb {c}\) is an arbitrary complex column vector

$$\begin{aligned} \mathbb {c}=(c_1\,c_2\,\ldots \,c_n)^{\text {T}}. \end{aligned}\tag{30}$$

The modification involves the matrix-valued Lagrange undetermined multipliers \(\mathbb {1}\) and \(\mathbb {c}\) multiplied by the parameter \(\alpha \), and in this case, the constraint equation is

$$\begin{aligned} \alpha =0. \end{aligned}\tag{31}$$

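The role of the matrix-valued multipliers is basically the same as that of a conventional Lagrange multiplier, which is a number. Once the constraint (31) is imposed, this trick does not alter the equation at all. However, as long as we do not impose the condition \(\alpha =0\), the modification in Eq. (29) allows one to solve the linear equation by multiplying both sides of Eq. (29) by \((\mathbb {A}-\lambda \mathbb {1}+\alpha \mathbb {1})^{-1}\). While \((\mathbb {A}-\lambda \mathbb {1})^{-1}\) does not exist, \((\mathbb {A}-\lambda \mathbb {1}+\alpha \mathbb {1})^{-1}\) exists for any sufficiently small \(\alpha \ne 0\): the eigenvalues of \(\mathbb {A}-\lambda \mathbb {1}+\alpha \mathbb {1}\) are \(\lambda _j-\lambda +\alpha \), where \(\lambda _j\) are the eigenvalues of \(\mathbb {A}\), so \(\mathscr {D}et[\mathbb {A}-\lambda \mathbb {1}+\alpha \mathbb {1}]\) vanishes only if \(\alpha =\lambda -\lambda _j\) for some j. Then, the eigenvector for a given eigenvalue \(\lambda \) is simply determined as [5]

$$\begin{aligned} \boldsymbol{\psi }^{(\lambda )}=\lim _{\alpha \rightarrow 0}\alpha (\mathbb {A}-\lambda \mathbb {1}+\alpha \mathbb {1})^{-1}\mathbb {c} \end{aligned}\tag{32}$$

for an arbitrary vector \(\mathbb {c}\) yielding a non-vanishing result.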
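The regularized solve can be tried directly. In this NumPy sketch, the vector c and the mode index are arbitrary choices, and `numpy.linalg.solve` computes \(\alpha (\mathbb {A}-\lambda _i\mathbb {1}+\alpha \mathbb {1})^{-1}\mathbb {c}\) without forming the inverse explicitly. As \(\alpha \) decreases, the relative residual of the original singular equation shrinks roughly in proportion to \(\alpha \), and the result lines up with the eigenvector returned by `numpy.linalg.eigh`.

```python
import numpy as np

n, i = 6, 2                                      # i: zero-based index of the chosen eigenvalue
A = 2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)
lam_i = np.linalg.eigvalsh(A)[i]                 # eigenvalues in ascending order
c = np.array([0.3, -1.2, 0.8, 2.0, -0.5, 1.1])   # an arbitrary column vector c
I = np.eye(n)

results = []
for alpha in (1e-2, 1e-4, 1e-6):
    # alpha (A - lam_i 1 + alpha 1)^{-1} c via a linear solve
    psi = alpha * np.linalg.solve(A - lam_i * I + alpha * I, c)
    # Residual of the original, singular equation (A - lam_i 1) psi = 0, relative to |psi|
    residual = np.linalg.norm((A - lam_i * I) @ psi) / np.linalg.norm(psi)
    results.append((alpha, psi, residual))
    print(f"alpha={alpha:.0e}  |psi|={np.linalg.norm(psi):.6f}  relative residual={residual:.1e}")
```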

In order to investigate the structure of Eq. (32), we define the \(\alpha \)-dependent determinant

$$\begin{aligned} \mathscr {D}_n(\lambda ,\alpha )\equiv \mathscr {D}et[\mathbb {A}-\lambda \mathbb {1}+\alpha \mathbb {1}], \end{aligned}\tag{33}$$

where the subscript n of \(\mathscr {D}_n(\lambda ,\alpha )\) indicates that the matrix \(\mathbb {A}-\lambda \mathbb {1}+\alpha \mathbb {1}\) is an \(n\times n\) square matrix. Clearly, \(\mathscr {D}_n (\lambda ,0)=\mathscr {D}_n (\lambda )\equiv \mathscr {D}et[\mathbb {A}-\lambda \mathbb {1}]\), which vanishes when \(\lambda \) is an eigenvalue. Both \(\mathscr {D}_n (\lambda )\) and \(\mathscr {D}_n (\lambda ,\alpha )\) can be derived from the recursion relation among the determinants of the matrices of various dimensions. Details of the derivation are provided in Appendix A. From Eq. (A14), the explicit form of the \(\alpha \)-dependent determinant is given by

$$\begin{aligned} \mathscr {D}_n(\lambda ,\alpha )=\frac{\sin [(n+1)\theta _\alpha ]}{\sin \theta _\alpha },\quad \cos \theta _\alpha \equiv 1-\frac{\lambda -\alpha }{2}. \end{aligned}\tag{34}$$

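The secular equation \(\mathscr {D}_n(\lambda ,0)=0\) determines the eigenvalue \(\lambda \). We shall find in Eq. (44) that no degenerate normal frequency exists. Thus, at a given eigenvalue \(\lambda =\lambda _i\), the determinant factorizes as

$$\begin{aligned} \mathscr {D}_n(\lambda _i,\alpha )=\prod _{j=1}^{n}(\alpha +\lambda _j-\lambda _i)=\alpha \prod _{j\ne i}(\alpha +\lambda _j-\lambda _i). \end{aligned}\tag{35}$$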

In the limit \(\alpha \rightarrow 0\), the product \(\prod _{j\ne i} (\alpha +\lambda _j-\lambda _i)\) on the right side collapses into a non-vanishing number independent of \(\alpha \), because every eigenvalue is distinct. Thus, \(\lim _{\alpha \rightarrow 0}\mathscr {D}_n(\lambda _i,\alpha )/\alpha =\prod _{j\ne i} (\lambda _j-\lambda _i)\ne 0\). The generalization of the method including degeneracies is presented in Ref. [5].
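A quick numerical check of this limit, as a sketch assuming NumPy: for the loaded-string matrix, \(\mathscr {D}_n(\lambda _i,\alpha )/\alpha \) computed with `numpy.linalg.det` approaches \(\prod _{j\ne i}(\lambda _j-\lambda _i)\) as \(\alpha \) decreases, with a deviation of order \(\alpha \).

```python
import numpy as np

n, i = 5, 1
A = 2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)
lam = np.linalg.eigvalsh(A)
lam_i = lam[i]
target = np.prod(np.delete(lam, i) - lam_i)        # prod_{j != i} (lambda_j - lambda_i)

ratios = []
for alpha in (1e-2, 1e-4, 1e-6):
    D = np.linalg.det(A - lam_i * np.eye(n) + alpha * np.eye(n))   # D_n(lambda_i, alpha)
    ratios.append(D / alpha)
    print(f"alpha={alpha:.0e}  D_n/alpha={D / alpha:.8f}  target={target:.8f}")
```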

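Hence, the eigenvector \(\boldsymbol{\psi }^{(\lambda _i)}\) corresponding to a given eigenvalue \(\lambda _i\) can be factorized as the product of two ‘finite’ quantities

$$\begin{aligned} \boldsymbol{\psi }^{(\lambda _i)}=\left[ \lim _{\alpha \rightarrow 0}\frac{\alpha }{\mathscr {D}_n(\lambda _i,\alpha )}\right] \left[ \lim _{\alpha \rightarrow 0}\mathscr {D}_n(\lambda _i,\alpha )(\mathbb {A}-\lambda _i\mathbb {1}+\alpha \mathbb {1})^{-1}\right] \mathbb {c}. \end{aligned}\tag{36}$$

Because \(\mathbb {c}\) is arbitrary, \(\mathbb {c}\) can absorb the first factor by redefinition: \([\lim _{\alpha \rightarrow 0}\alpha /\mathscr {D}_n(\lambda _i,\alpha )]\mathbb {c} \rightarrow \mathbb {c}\). Then, without loss of generality, the eigenvector can be written in the compact form

$$\begin{aligned} \boldsymbol{\psi }^{(\lambda _i)}=\lim _{\alpha \rightarrow 0}\mathscr {D}_n(\lambda _i,\alpha )(\mathbb {A}-\lambda _i\mathbb {1}+\alpha \mathbb {1})^{-1}\mathbb {c}, \end{aligned}\tag{37}$$

which is essentially identical to Eq. (32). However, Eq. (37) is more practical than Eq. (32), because the factor \(1/\mathscr {D}_n(\lambda _i,\alpha )\) coming from the inverse matrix \((\mathbb {A}-\lambda _i\mathbb {1}+\alpha \mathbb {1})^{-1}\) cancels against the prefactor \(\mathscr {D}_n(\lambda _i,\alpha )\) in Eq. (37), so that the limit can be taken element by element.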

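The matrix multiplied by the arbitrary column vector \(\mathbb {c}\) in Eq. (36) is proportional to the projection operator that projects an arbitrary vector onto the eigenvector for the eigenvalue \(\lambda _i\), and it is nothing but the adjugate of the matrix \(\mathbb {A}-\lambda _i \mathbb {1}\):

$$\begin{aligned} \lim _{\alpha \rightarrow 0}\mathscr {D}_n(\lambda _i,\alpha )(\mathbb {A}-\lambda _i\mathbb {1}+\alpha \mathbb {1})^{-1}={\text {adj}}(\mathbb {A}-\lambda _i \mathbb {1}). \end{aligned}\tag{38}$$

Note that this identity holds for any eigenvalue problem without degeneracy.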
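The adjugate identity and its projector structure can be checked numerically. The cofactor-based `adjugate` helper below is our own brute-force illustration, suitable only for small matrices. The sketch compares \(\mathscr {D}_n(\lambda _i,\alpha )(\mathbb {A}-\lambda _i\mathbb {1}+\alpha \mathbb {1})^{-1}\) at a small \(\alpha \) with \({\text {adj}}(\mathbb {A}-\lambda _i\mathbb {1})\), and then checks that the adjugate equals \(\prod _{j\ne i}(\lambda _j-\lambda _i)\) times the projector \(\boldsymbol{\psi }\boldsymbol{\psi }^{\text {T}}\) built from the unit eigenvector, that is, a multiple of the projector rather than the projector itself.

```python
import numpy as np


def adjugate(M):
    """Adjugate as the transpose of the cofactor matrix (brute force, small matrices only)."""
    n = M.shape[0]
    C = np.empty((n, n))
    for i in range(n):
        for j in range(n):
            minor = np.delete(np.delete(M, i, axis=0), j, axis=1)
            C[i, j] = (-1) ** (i + j) * np.linalg.det(minor)
    return C.T


n, i = 5, 3
A = 2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)
lam, vecs = np.linalg.eigh(A)
B = A - lam[i] * np.eye(n)                           # singular: det(B) = 0

alpha = 1e-7
M = B + alpha * np.eye(n)
regularized = np.linalg.det(M) * np.linalg.inv(M)    # D_n(lambda_i, alpha) (B + alpha 1)^{-1}
adjB = adjugate(B)
print(np.allclose(regularized, adjB, atol=1e-4))     # True

# adj(B) is a multiple of the projector psi psi^T, not the projector itself.
psi = vecs[:, i]
scale = np.prod(np.delete(lam, i) - lam[i])          # prod_{j != i} (lambda_j - lambda_i)
print(np.allclose(adjB, scale * np.outer(psi, psi)))  # True
```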

If we substitute the explicit matrix elements in Eq. (3) for the loaded string system, then we find that every element of the adjugate can be factorized into the product of two factors of the form \(\mathscr {D}_k\equiv \mathscr {D}et[\mathbb {A}_{[k\times k]}-\lambda \mathbb {1}_{[k\times k]}]\):

$$\begin{aligned} \left[ {\text {adj}}(\mathbb {A}-\lambda \mathbb {1})\right] _{ij}=\mathscr {D}_{\min (i,j)-1}\,\mathscr {D}_{n-\max (i,j)}. \end{aligned}\tag{39}$$

Here, \(\mathbb {A}_{[k\times k]}\) and \(\mathbb {1}_{[k\times k]}\) are \(k\times k\) square matrices for \(k\le n\) that are analogous to \(\mathbb {A}\) and \(\mathbb {1}\), \(\mathscr {D}_0\equiv 1\), and \(\mathscr {D}_n\equiv \mathscr {D}_n(\lambda )\). A rigorous proof of the identity in Eq. (39) is presented in Appendix B.
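The factorization can be tested against a brute-force cofactor computation. In this sketch, `minors_D` generates \(\mathscr {D}_0,\ldots ,\mathscr {D}_n\) from the three-term recursion \(\mathscr {D}_k=(2-\lambda )\mathscr {D}_{k-1}-\mathscr {D}_{k-2}\) with \(\mathscr {D}_0=1\) and \(\mathscr {D}_1=2-\lambda \) (obtained by expanding the tridiagonal determinant along its last row), and `adjugate_factorized` assembles \(\mathscr {D}_{\min (i,j)-1}\mathscr {D}_{n-\max (i,j)}\); both helper names are ours. The identity is checked for a generic \(\lambda \) and at an eigenvalue.

```python
import numpy as np


def adjugate(M):
    """Adjugate as the transpose of the cofactor matrix (brute force, small matrices only)."""
    n = M.shape[0]
    C = np.empty((n, n))
    for i in range(n):
        for j in range(n):
            minor = np.delete(np.delete(M, i, axis=0), j, axis=1)
            C[i, j] = (-1) ** (i + j) * np.linalg.det(minor)
    return C.T


def minors_D(lam, n):
    """D_0, ..., D_n for A - lam 1 from D_k = (2 - lam) D_{k-1} - D_{k-2}, D_0 = 1, D_1 = 2 - lam."""
    D = np.empty(n + 1)
    D[0], D[1] = 1.0, 2.0 - lam
    for k in range(2, n + 1):
        D[k] = (2.0 - lam) * D[k - 1] - D[k - 2]
    return D


def adjugate_factorized(lam, n):
    """Assemble adj_ij = D_{min(i,j)-1} D_{n-max(i,j)} with 1-based i, j."""
    D = minors_D(lam, n)
    idx = np.arange(1, n + 1)
    return D[np.minimum.outer(idx, idx) - 1] * D[n - np.maximum.outer(idx, idx)]


n = 6
A = 2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)
lams = (0.3, 4.0 * np.sin(2 * np.pi / (2 * (n + 1))) ** 2)   # a generic value and lambda_2
for lam in lams:
    same = np.allclose(adjugate(A - lam * np.eye(n)), adjugate_factorized(lam, n))
    print(f"lambda={lam:.4f}  factorized adjugate matches cofactors: {same}")
```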

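The matrix representation of \({\text {adj}}(\mathbb {A}-\lambda \mathbb {1})\) for the loaded string system that we consider is explicitly given as

$$\begin{aligned} {\text {adj}}(\mathbb {A}-\lambda \mathbb {1})=\begin{pmatrix} \mathscr {D}_0\mathscr {D}_{n-1}&{}\mathscr {D}_0\mathscr {D}_{n-2}&{}\mathscr {D}_0\mathscr {D}_{n-3}&{}\cdots &{}\mathscr {D}_0\mathscr {D}_0\\ \mathscr {D}_0\mathscr {D}_{n-2}&{}\mathscr {D}_1\mathscr {D}_{n-2}&{}\mathscr {D}_1\mathscr {D}_{n-3}&{}\cdots &{}\mathscr {D}_1\mathscr {D}_0\\ \mathscr {D}_0\mathscr {D}_{n-3}&{}\mathscr {D}_1\mathscr {D}_{n-3}&{}\mathscr {D}_2\mathscr {D}_{n-3}&{}\cdots &{}\mathscr {D}_2\mathscr {D}_0\\ \vdots &{}\vdots &{}\vdots &{}\ddots &{}\vdots \\ \mathscr {D}_0\mathscr {D}_0&{}\mathscr {D}_1\mathscr {D}_0&{}\mathscr {D}_2\mathscr {D}_0&{}\cdots &{}\mathscr {D}_{n-1}\mathscr {D}_0 \end{pmatrix}, \end{aligned}\tag{40}$$

and the jth column of this matrix is

$$\begin{aligned} \left( \mathscr {D}_0\mathscr {D}_{n-j},\ \mathscr {D}_1\mathscr {D}_{n-j},\ \ldots ,\ \mathscr {D}_{j-1}\mathscr {D}_{n-j},\ \mathscr {D}_{j-1}\mathscr {D}_{n-j-1},\ \ldots ,\ \mathscr {D}_{j-1}\mathscr {D}_0\right) ^{\text {T}}. \end{aligned}\tag{41}$$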

Here, the index of the front factor in each element increases from 0 to \(j-1\) by a single unit as the row index increases and the index reaches \(j-1\) at the jth row and is fixed after that. The index of the next factor in each element remains fixed as \(n-j\) until the row index reaches j, and after that, it starts to decrease from the \((j+1)\)th row and reaches 0 at the bottom.

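According to Eq. (A14), an explicit form of \(\mathscr {D}_n\) is given by

$$\begin{aligned} \mathscr {D}_n=\frac{\sin [(n+1)\theta ]}{\sin \theta },\quad \cos \theta =1-\frac{\lambda }{2}. \end{aligned}\tag{42}$$

If \(\lambda \) is an eigenvalue, then \(\mathscr {D}_n=0\). Thus, the angle \(\theta \) for the eigenvalue \(\lambda _s\) is determined as

$$\begin{aligned} \theta _s=\frac{s\pi }{n+1},\quad s=1,2,\ldots ,n. \end{aligned}\tag{43}$$

Therefore, the eigenvalues are obtained as

$$\begin{aligned} \lambda _s=2(1-\cos \theta _s)=4\sin ^2\frac{s\pi }{2(n+1)}, \end{aligned}\tag{44}$$

which are all distinct. While \(\mathscr {D}_n\big |_{\lambda =\lambda _s}\) always vanishes, the lower-order determinants are

$$\begin{aligned} \mathscr {D}_k\big |_{\lambda =\lambda _s}=\frac{\sin [(k+1)\theta _s]}{\sin \theta _s},\quad k<n, \end{aligned}\tag{45}$$

which vanish only if \((k+1)s\) is a multiple of \(n+1\). In particular, \(\mathscr {D}_0=1\) and \(\mathscr {D}_{n-1}\big |_{\lambda =\lambda _s}=(-1)^{s+1}\) never vanish.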

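From here on, we adopt the convention \(\mathscr {D}_k\equiv \mathscr {D}_k\big |_{\lambda =\lambda _s}\). Then, using the trigonometric relation

$$\begin{aligned} \sin [(n-k)\theta _s]=\sin [s\pi -(k+1)\theta _s]=(-1)^{s+1}\sin [(k+1)\theta _s], \end{aligned}\tag{46}$$

we deduce a useful relation between \(\mathscr {D}_k\) and \(\mathscr {D}_{n-k-1}\):

$$\begin{aligned} \mathscr {D}_{n-k-1}=(-1)^{s+1}\mathscr {D}_k. \end{aligned}\tag{47}$$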

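With the help of the identity in Eq. (47), the jth column of the adjugate can be simplified as

$$\begin{aligned} \left[ {\text {adj}}(\mathbb {A}-\lambda _s \mathbb {1})\right] _{\text {col}\,j}=(-1)^{s+1}\mathscr {D}_{j-1}\left( \mathscr {D}_0,\ \mathscr {D}_1,\ \ldots ,\ \mathscr {D}_{n-1}\right) ^{\text {T}}. \end{aligned}\tag{48}$$

This indicates that every column of \({\text {adj}}(\mathbb {A}-\lambda _s \mathbb {1})\) is proportional to a single vector \((\mathscr {D}_0,\ldots ,\mathscr {D}_{n-1})^{\text {T}}\). Employing the normalized column vector \(\boldsymbol{\psi }^{(s)}\) given in Eqs. (20) and (21), which satisfies

$$\begin{aligned} \mathscr {D}_{r-1}=\frac{\sin (r\theta _s)}{\sin \theta _s}=\sqrt{\frac{n+1}{2}}\,\frac{\psi _r^{(s)}}{\sin \theta _s}, \end{aligned}\tag{49}$$

we find that the jth column of the adjugate can be written in the form

$$\begin{aligned} \left[ {\text {adj}}(\mathbb {A}-\lambda _s \mathbb {1})\right] _{\text {col}\,j}=N_j^{(s)}\boldsymbol{\psi }^{(s)}, \end{aligned}\tag{50}$$

where

$$\begin{aligned} N_j^{(s)}=\frac{(-1)^{s+1}(n+1)}{2\sin ^2\theta _s}\,\psi _j^{(s)}. \end{aligned}\tag{51}$$

As a result, every column of \({\text {adj}}(\mathbb {A}-\lambda _s \mathbb {1})\) is parallel to the eigenvector

$$\begin{aligned} \boldsymbol{\psi }^{(s)}=\sqrt{\frac{2}{n+1}}\left( \sin \theta _s,\ \sin 2\theta _s,\ \ldots ,\ \sin n\theta _s\right) ^{\text {T}}. \end{aligned}\tag{52}$$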

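Note that for an arbitrary column vector \(\mathbb {c}\) in Eq. (30), one can easily check that \({\text {adj}}(\mathbb {A}-\lambda _s \mathbb {1})\,\mathbb {c}\) is indeed the eigenvector for the eigenvalue \(\lambda _s\) in Eq. (44):

$$\begin{aligned} {\text {adj}}(\mathbb {A}-\lambda _s \mathbb {1})\,\mathbb {c}=\frac{(-1)^{s+1}(n+1)}{2\sin ^2\theta _s}\left[ \boldsymbol{\psi }^{(s)}\otimes \boldsymbol{\psi }^{(s)\text {T}}\right] \mathbb {c}=\frac{(-1)^{s+1}(n+1)}{2\sin ^2\theta _s}\left( \sum _{r=1}^{n}\psi _r^{(s)}c_r\right) \boldsymbol{\psi }^{(s)}, \end{aligned}\tag{53}$$

where \(c_r\) is the rth element of the column vector \(\mathbb {c}\) and the symbol \(\otimes \) represents the direct product. In conclusion, we can read off the eigenvector directly from any nonvanishing column (or row) of the adjugate, which is represented in a simple form in Eqs. (41) and (50). As a result, we can construct the completeness relation for any eigenvalue equation:

$$\begin{aligned} \sum _{s=1}^{n}\boldsymbol{\psi }^{(s)}\otimes \boldsymbol{\psi }^{(s)\text {T}}=\sum _{s=1}^{n}a_s\,{\text {adj}}(\mathbb {A}-\lambda _s \mathbb {1})=\mathbb {1}. \end{aligned}\tag{54}$$

We can make an educated guess that the coefficient \(a_s\) of \({\text {adj}}(\mathbb {A}-\lambda _s \mathbb {1})\) in the summation in Eq. (54) depends on \(\mathbb {A}\); for the loaded string system, its explicit value is \(a_s= 2\sin ^2\frac{s\pi }{n+1}/[(-1)^{s+1}(n+1)]\).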
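The chain of results at \(\lambda =\lambda _s\) can be verified in one go. The sketch below (NumPy; helper names are ours) checks the closed form \(\mathscr {D}_k=\sin [(k+1)\theta _s]/\sin \theta _s\), the reflection relation \(\mathscr {D}_{n-k-1}=(-1)^{s+1}\mathscr {D}_k\), the rank-one column structure, and the completeness sum with the quoted \(a_s\). It also compares \(a_s\) with \(1/\prod _{j\ne s}(\lambda _j-\lambda _s)\), which is what one would expect for a symmetric matrix from the factorized determinant; that cross-check is our addition, not a statement from the text. Finally, it shows that an individual \(\mathscr {D}_k\) with \(k<n\) can vanish at an eigenvalue (n = 3, s = 2), in which case the corresponding column of the adjugate is zero while the first and last columns are not.

```python
import numpy as np


def minors_D(lam, n):
    """D_0, ..., D_n for A - lam 1 via D_k = (2 - lam) D_{k-1} - D_{k-2} (illustrative helper)."""
    D = np.empty(n + 1)
    D[0], D[1] = 1.0, 2.0 - lam
    for k in range(2, n + 1):
        D[k] = (2.0 - lam) * D[k - 1] - D[k - 2]
    return D


def adjugate_factorized(lam, n):
    """adj_ij = D_{min(i,j)-1} D_{n-max(i,j)}, the factorization quoted in the text."""
    D = minors_D(lam, n)
    idx = np.arange(1, n + 1)
    return D[np.minimum.outer(idx, idx) - 1] * D[n - np.maximum.outer(idx, idx)]


n = 7
thetas = np.arange(1, n + 1) * np.pi / (n + 1)      # theta_s, s = 1, ..., n
lams = 2.0 - 2.0 * np.cos(thetas)                    # lambda_s = 4 sin^2(theta_s / 2)
k = np.arange(n)                                     # k = 0, ..., n - 1

total = np.zeros((n, n))
for s, (th, lam) in enumerate(zip(thetas, lams), start=1):
    D = minors_D(lam, n)
    sign = (-1) ** (s + 1)
    assert np.allclose(D, np.sin((np.arange(n + 1) + 1) * th) / np.sin(th))  # closed form
    assert np.allclose(D[n - 1 - k], sign * D[k])                              # D_{n-k-1} = (-1)^{s+1} D_k
    adj = adjugate_factorized(lam, n)
    assert np.allclose(adj, sign * np.outer(D[:n], D[:n]))                     # rank-one column structure
    a_s = 2.0 * np.sin(th) ** 2 / (sign * (n + 1))                             # coefficient quoted in the text
    assert np.isclose(a_s, 1.0 / np.prod(np.delete(lams, s - 1) - lam))        # our cross-check
    total += a_s * adj

print(np.allclose(total, np.eye(n)))                 # completeness: True

# An individual D_k (k < n) can vanish at an eigenvalue: n = 3, s = 2 gives lambda = 2 and D_1 = 0,
# so the second column of the adjugate is zero, while the first and last columns are not.
adj3 = adjugate_factorized(2.0, 3)
print(adj3)
```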

4 Conclusions

We have considered the normal mode problem of a vibrating string loaded with n identical, equally spaced beads, which involves the eigenvalue problem \((\mathbb {A}-\lambda \mathbb {1})\boldsymbol{\psi }^{(\lambda )}=\mathbb {0}\) given in Eq. (8). The conventional approach to this problem, which can easily be found in most textbooks on classical mechanics, is to solve the difference equation for the components \(\psi _r^{(\lambda )}\) of the eigenvector \(\boldsymbol{\psi }^{(\lambda )}\). This difference equation is the same as that for the secular determinant \(\mathscr {D}_n\equiv \mathscr {D}et[\mathbb {A}-\lambda \mathbb {1}]\), except that the boundary conditions are different: \(\psi _0^{(\lambda )}=\psi _{n+1}^{(\lambda )}=0\), while \(\mathscr {D}_0=1\).

Unlike the conventional approach, we have modified the eigenvalue equation as \((\mathbb {A}-\lambda \mathbb {1}+\alpha \mathbb {1})\boldsymbol{\psi }^{(\lambda )}=\alpha \mathbb {c}\) in Eq. (29), where \(\alpha \) eventually vanishes at the end of the calculation through the constraint equation \(\alpha =0\). The identity matrix \(\mathbb {1}\) and an arbitrary column vector \(\mathbb {c}\) multiplied by \(\alpha \) behave as matrix-valued Lagrange undetermined multipliers. While the matrix \(\mathbb {A}-\lambda \mathbb {1}\) of the original eigenvalue equation is singular, the regularized one \(\mathbb {A}-\lambda \mathbb {1}+\alpha \mathbb {1}\) is not. Thus, we can solve the regularized linear equation for the eigenvector \(\boldsymbol{\psi }^{(\lambda )}\) by multiplying the equation by the inverse matrix \((\mathbb {A}-\lambda \mathbb {1}+\alpha \mathbb {1})^{-1}\).

The string vibration with the displacement along only a single axis is non-degenerate. Thus, at \(\lambda =\lambda _i\), the regularized secular determinant \(\mathscr {D}_n(\lambda _i,\alpha )\) is a polynomial in \(\alpha \) whose lowest-order term is linear in \(\alpha \). By making use of this limiting behavior as \(\alpha \rightarrow 0\), we have shown that the eigenvector can be expressed as \(\boldsymbol{\psi }^{(\lambda _i)}=\lim _{\alpha \rightarrow 0}\mathscr {D}_n(\lambda _i,\alpha )(\mathbb {A}-\lambda _i\mathbb {1}+\alpha \mathbb {1})^{-1}\mathbb {c}\) in Eq. (37). The matrix in front of \(\mathbb {c}\) remains well defined in the limit \(\alpha \rightarrow 0\), even though \(\mathbb {A}-\lambda _i\mathbb {1}+\alpha \mathbb {1}\) becomes singular. We have indeed shown in Eq. (38) that the matrix \(\lim _{\alpha \rightarrow 0} \mathscr {D}_n(\lambda _i,\alpha ) (\mathbb {A}-\lambda _i\mathbb {1}+\alpha \mathbb {1})^{-1}\) is the adjugate \({\text {adj}}(\mathbb {A}-\lambda _i \mathbb {1})\) of the original matrix.

Furthermore, we have shown that every column and every row of the adjugate matrix \({\text {adj}}(\mathbb {A}-\lambda _i \mathbb {1})\) are parallel to the eigenvector \(\boldsymbol{\psi }^{(\lambda _i)}\) and to its transpose \(\boldsymbol{\psi }^{(\lambda _i)\text {T}}\), respectively, which is apparent in Eq. (53). As a result, the completeness relation \(\sum _{s}\boldsymbol{\psi }^{(s)}\otimes \boldsymbol{\psi }^{(s)\text {T}}=\mathbb {1}\) can be expanded as a linear combination of the adjugates \({\text {adj}}(\mathbb {A}-\lambda _s \mathbb {1})\) summed over the eigenvalues \(\lambda _s\), as shown in Eq. (54). Detailed mathematical proofs of the essential identities used to derive these results are given in the appendices. Our derivation shows that the regularization of the eigenvalue equation with matrix-valued Lagrange undetermined multipliers, first introduced in Ref. [5], is quite useful in obtaining every eigenvector of an eigenvalue problem. To our best knowledge, the approach we have presented for reading off the eigenvector of any eigenvalue problem from the adjugate matrix is new, as are the factorization formula in Eq. (39) and the completeness relation in Eq. (54) derived from the adjugate formula in Eq. (38).

While we have restricted ourselves to the special case \(\psi _0=\psi _{n+1}=0\), which corresponds to the Dirichlet boundary condition \(\psi (0)=\psi (L)=0\) in the continuum limit, the method developed in this paper can, in principle, be applied to any boundary conditions. One can consider, for example, a more general Dirichlet boundary condition in which \(\psi _0\) and/or \(\psi _{n+1}\) are non-vanishing constants, or even the case analogous to the Neumann boundary condition in which \(\psi '(0)\) and \(\psi '(L)\) are constants in the continuum limit. Slightly more complicated situations, such as a loaded string with a few concentrated masses [7, 8], a loaded string with viscous damping [9], or a hanging string with a tip mass [10, 11], can also be considered. Applying our method to these more general cases and comparing the solutions with those in the continuum limit would be instructive. Other boundary conditions require a corresponding modification of the matrix \(\mathbb {A}\), in particular, of its first and last rows and columns. One can then obtain the corresponding eigenvectors from the adjugate of \(\mathbb {A}-\lambda \mathbb {1}\) built with the modified \(\mathbb {A}\), but the complete form of the eigenvectors is expected to depend on the boundary condition.
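As a sketch of how the adjugate readout could carry over to another boundary condition, we take, purely as an illustrative assumption, a Neumann-like right end in which the last string segment is removed, so that only the bottom-right element of \(\mathbb {A}\) changes from 2 to 1. The eigenvalues are obtained numerically here, and each eigenvector is read off as the largest-norm column of \({\text {adj}}(\mathbb {A}-\lambda \mathbb {1})\); the helper names are hypothetical.

```python
import numpy as np


def adjugate(M):
    """Adjugate as the transpose of the cofactor matrix (brute force, small matrices only)."""
    n = M.shape[0]
    C = np.empty((n, n))
    for i in range(n):
        for j in range(n):
            minor = np.delete(np.delete(M, i, axis=0), j, axis=1)
            C[i, j] = (-1) ** (i + j) * np.linalg.det(minor)
    return C.T


def eigvecs_from_adjugate(A, lams):
    """Hypothetical helper: unit eigenvectors read off the largest column of adj(A - lam 1)."""
    n = A.shape[0]
    cols = []
    for lam in lams:
        adj = adjugate(A - lam * np.eye(n))
        col = adj[:, np.argmax(np.linalg.norm(adj, axis=0))]
        cols.append(col / np.linalg.norm(col))
    return np.column_stack(cols)


n = 6
A_free = 2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)
A_free[-1, -1] = 1.0        # assumed Neumann-like right end: last string segment removed
lams = np.linalg.eigvalsh(A_free)
V = eigvecs_from_adjugate(A_free, lams)
print(np.allclose(A_free @ V, V * lams))   # True
```

Picking the largest-norm column avoids the columns that can vanish at an eigenvalue, as happens for the fixed-end string.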

More generally, the method developed in this paper can be applied to any eigenvalue problem involving a tridiagonal matrix. As an example, one can consider the eigenvalue problem of the angular momentum matrix \(J_x\), which is tridiagonal if we choose \(J_z\) to be diagonal as usual. In this case, the elements immediately above and below the main diagonal are nonvanishing only within the block of fixed angular momentum J. Unlike those of \(\mathbb {A}\), they are not constant even within that block, because they depend on the magnetic quantum number. We conjecture that the adjugate expression for the eigenvectors in Eq. (38) will be useful for this eigenvalue problem and that the complete form of the eigenvectors can be determined from the adjugate. We expect the method to be applicable to other eigenvalue problems involving more general forms of the tridiagonal matrix.
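The conjecture above can be explored with a minimal sketch. This is our illustration, not a result of this paper. The code builds \(J_x\) in units of \(\hbar \) in the \(|j,m\rangle \) basis, using the standard matrix elements \(\langle j,m\pm 1|J_x|j,m\rangle =\tfrac{1}{2}\sqrt{(j\mp m)(j\pm m+1)}\). For each eigenvalue \(m'\in \{-j,\dots ,j\}\), it reads an eigenvector off the largest column of \({\text {adj}}(J_x-m'\mathbb {1})\). The adjugate is computed by brute-force cofactors, because no factorization formula analogous to Eq. (39) is assumed here. All function names are hypothetical.

```python
import math


def jx_matrix(j):
    """J_x in units of hbar, in the basis |j, m> ordered m = j, j-1, ..., -j."""
    dim = int(round(2 * j)) + 1
    ms = [j - k for k in range(dim)]
    jx = [[0.0] * dim for _ in range(dim)]
    for c, m in enumerate(ms):
        if c > 0:  # <j, m+1| J_x |j, m> = (1/2) sqrt((j - m)(j + m + 1))
            jx[c - 1][c] = 0.5 * math.sqrt((j - m) * (j + m + 1))
        if c < dim - 1:  # <j, m-1| J_x |j, m> = (1/2) sqrt((j + m)(j - m + 1))
            jx[c + 1][c] = 0.5 * math.sqrt((j + m) * (j - m + 1))
    return jx


def det(m):
    """Determinant by Gaussian elimination with partial pivoting (floating point)."""
    a = [row[:] for row in m]
    n = len(a)
    result = 1.0
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        if a[pivot][col] == 0.0:
            return 0.0
        if pivot != col:
            a[col], a[pivot] = a[pivot], a[col]
            result = -result
        result *= a[col][col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= factor * a[col][c]
    return result


def adjugate(m):
    """adj(m)_{ij} = (-1)^{i+j} det(m without row j and column i)."""
    n = len(m)

    def minor(row, col):
        return [[x for k, x in enumerate(r) if k != col]
                for ri, r in enumerate(m) if ri != row]

    return [[(-1) ** (i + j) * det(minor(j, i)) for j in range(n)] for i in range(n)]


def eigenvector_from_adjugate(matrix, lam):
    """Largest-norm column of adj(matrix - lam*1), normalized; lam must be an eigenvalue."""
    n = len(matrix)
    shifted = [[matrix[r][c] - (lam if r == c else 0.0) for c in range(n)] for r in range(n)]
    adj = adjugate(shifted)
    columns = [[adj[r][c] for r in range(n)] for c in range(n)]
    best = max(columns, key=lambda v: sum(x * x for x in v))
    norm = math.sqrt(sum(x * x for x in best))
    return [x / norm for x in best]
```

For \(j=1\) and eigenvalue 1, this gives the eigenvector \((1,\sqrt{2},1)/2\) up to sign. `jx_matrix(1.5)` has off-diagonal elements \(\sqrt{3}/2\) and 1 within the same block, which illustrates that these elements are not constant.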

References

[1] See, for example, J.B. Marion, Classical Dynamics of Particles and Systems, 2nd edn. (Academic Press, Cambridge, 1970)

[2] A.L. Fetter, J.D. Walecka, Theoretical Mechanics of Particles and Continua (McGraw-Hill, New York, 1980), Chapter 24

[3] R.A. Matzner, L.C. Shepley, Classical Mechanics (Prentice-Hall, New Jersey, 1991), pp. 232–239

[4] P.D. Ritger, N.J. Rose, Differential Equations with Applications (McGraw-Hill, New York, 1968), pp. 367–372

[5] W. Han, D.-W. Jung, J. Lee, C. Yu, J. Korean Phys. Soc. 78, 1018 (2021)

[6] G.B. Arfken, H.J. Weber, Mathematical Methods for Physicists, 7th edn. (Elsevier, Amsterdam, 2012)

[7] F.C. Santos, Y.A. Coutinho, L. Ribeiro-Pinto, A.C. Tort, Eur. J. Phys. 27, 1037 (2006)

[8] B.J. Gómez, C.E. Repetto, C.R. Stia, R. Welti, Eur. J. Phys. 28, 961 (2007)

[9] N. Gauthier, Eur. J. Phys. 29, N21 (2008)

[10] Y. Verbin, Eur. J. Phys. 36, 015005 (2015)

[11] J.S. Deschaine, B.H. Suits, Eur. J. Phys. 29, 1211 (2008)

Acknowledgements

As members of the Korea Pragmatist Organization for Physics Education (KPOP\(\mathscr {E}\)), the authors thank the other members of KPOP\(\mathscr {E}\) for useful discussions. This work is supported in part by the National Research Foundation of Korea (NRF) under the BK21 FOUR program at Korea University, Initiative for science frontiers on upcoming challenges. The work of JL is supported in part by grants funded by the Ministry of Science and ICT under Contract no. NRF-2020R1A2C3009918. The work of DWJ and CY is supported in part by the Basic Science Research Program through the NRF funded by the Ministry of Education (nos. 2018R1D1A1B07047812 for DWJ and 2020R1I1A1A01073770 for CY). All authors contributed equally to this work.

Additional information

Jungil Lee: Director of the Korea Pragmatist Organization for Physics Education (KPOP\(\mathscr {E}\)).

Appendices

Appendix A: Evaluation of \(\mathscr {D}_n\)

In this appendix, we derive the expression for \(\mathscr {D}_n\equiv \mathscr {D}_n(\lambda )\equiv \mathscr {D}et[\mathbb {A}-\lambda \mathbb {1}]\), where \(\mathbb {1}\) is the \(n\times n\) identity matrix and the matrix elements of the \(n\times n\) square matrix \(\mathbb {A}\) are defined in Eq. (3). The first three entries of the secular determinant are

We define

The secular determinant \(\mathscr {D}_n\) is a polynomial in \(\lambda \) of degree n that vanishes when \(\lambda \) is an eigenvalue satisfying the eigenvalue equation in Eq. (8). Because \(\mathscr {D}_n\) and \(\mathscr {D}_{n'}\) with \(n'\ne n\) are different polynomials, an eigenvalue \(\lambda \) satisfying \(\mathscr {D}_n=0\) is in general not a root of \(\mathscr {D}_{n'}\). If we leave \(\lambda \) as a free variable rather than fixing it to an eigenvalue, expanding the determinant along the first row gives the recurrence relation \(\mathscr {D}_n=(2-\lambda )\mathscr {D}_{n-1}-\mathscr {D}_{n-2}\) [2, 3]. This recurrence relation is a linear second-order difference equation

We observe that Eq. (A5) is equivalent to the difference equation [Eq. (10)] for \(\psi _r^{(\lambda )}\), except for the boundary conditions. While \(\psi _0^{(\lambda )}=0\), we have \(\mathscr {D}_0=1\). This value can be determined by substituting \(\mathscr {D}_1\) in Eq. (A1) and \(\mathscr {D}_2\) in Eq. (A2) into the linear difference equation (A5) for \(n=2\): \(\mathscr {D}_0=(2-\lambda )\mathscr {D}_1-\mathscr {D}_2=(2-\lambda )^2-[(2-\lambda )^2-1]=1\).
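The recurrence relation and the value \(\mathscr {D}_0=1\) can be checked in exact rational arithmetic. The sketch below assumes, consistently with the recurrence, that \(\mathbb {A}\) has 2 on the main diagonal and \(-1\) on the two neighboring diagonals. It compares a direct Gaussian-elimination determinant of \(\mathbb {A}-\lambda \mathbb {1}\) with the value produced by \(\mathscr {D}_n=(2-\lambda )\mathscr {D}_{n-1}-\mathscr {D}_{n-2}\). The function names are hypothetical.

```python
from fractions import Fraction


def det(m):
    """Exact determinant by Gaussian elimination over the rationals."""
    a = [[Fraction(x) for x in row] for row in m]
    n = len(a)
    result = Fraction(1)
    for col in range(n):
        pivot = next((r for r in range(col, n) if a[r][col] != 0), None)
        if pivot is None:
            return Fraction(0)
        if pivot != col:
            a[col], a[pivot] = a[pivot], a[col]
            result = -result
        result *= a[col][col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= factor * a[col][c]
    return result


def secular_det_direct(n, lam):
    """D_n = Det[A - lam*1], assuming A has 2 on the diagonal and -1 beside it."""
    return det([[2 - lam if i == j else (-1 if abs(i - j) == 1 else 0)
                 for j in range(n)] for i in range(n)])


def secular_det_recurrence(n, lam):
    """D_n from D_k = (2 - lam) D_{k-1} - D_{k-2}, with D_0 = 1 and D_1 = 2 - lam."""
    prev, cur = Fraction(1), Fraction(2) - lam  # D_0, D_1
    if n == 0:
        return prev
    for _ in range(n - 1):
        prev, cur = cur, (2 - lam) * cur - prev
    return cur
```

At \(\lambda =0\), for instance, the recurrence gives \(\mathscr {D}_n=n+1\). At \(\lambda =4\), it gives \((-1)^n(n+1)\).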

Substituting the trial solution

into the difference equation (A5) with the replacement \(n\rightarrow n+1\),

we find that

The first few entries of the secular determinant \(\mathscr {D}_n\) can then be expressed as

By imposing, for example, the first two constraints \(\mathscr {D}_0=1\) and \(\mathscr {D}_1=2\cos \theta \), we can determine \(d_1\) and \(d_2\) as

Substituting these values into Eq. (A6), we find that

The result in Eq. (A14) agrees with the known expression given, for example, in Eq. (24.33) of Ref. [2] and in Eq. (14.57) of Ref. [3]. As stated earlier in this appendix, the secular determinant in Eq. (A14) is a degree-n polynomial in \(\lambda \), and \(\mathscr {D}_n=0\) if \(\lambda \) is an eigenvalue satisfying the eigenvalue equation in Eq. (8). In that case, \(\mathscr {D}_{n-1}\ne 0\). If both \(\mathscr {D}_n\) and \(\mathscr {D}_{n-1}\) vanished, the recurrence relation \(\mathscr {D}_{k-2}=(2-\lambda )\mathscr {D}_{k-1}-\mathscr {D}_{k}\) would force \(\mathscr {D}_{n-2}=\cdots =\mathscr {D}_0=0\), contradicting \(\mathscr {D}_0=1\). The lower-order determinants \(\mathscr {D}_k\) with \(k<n-1\), however, can vanish at an eigenvalue of the n-bead string. For example, \(\lambda =2\) is an eigenvalue for \(n=3\), and there \(\mathscr {D}_1=2-\lambda =0\). As Eq. (A14) shows, the eigenvalue \(\lambda \) must lie in the range \(0<\lambda <4\) for an eigenvector to exist. With the parametrization \(2-\lambda =2\cos \theta \) of the secular determinant, the parameter \(\theta \) then lies in the range \(0<\theta <\pi \), because \(-1<\cos \theta <1\). This is consistent with the solution for \(\theta \) given in Eq. (19).
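The closed form can be compared numerically with the recurrence. The sketch below assumes that Eq. (A14) is the standard result \(\mathscr {D}_n=\sin [(n+1)\theta ]/\sin \theta \) with \(2-\lambda =2\cos \theta \), which satisfies \(\mathscr {D}_0=1\) and \(\mathscr {D}_1=2\cos \theta \). The code also evaluates \(\mathscr {D}_{n-1}\) at the roots \(\theta _s=s\pi /(n+1)\), where the closed form gives \(\mathscr {D}_{n-1}=(-1)^{s+1}\). Finally, it shows that a lower-order determinant can vanish: \(\mathscr {D}_1=0\) at \(\lambda =2\), which is an eigenvalue for \(n=3\). The function names are hypothetical.

```python
import math


def secular_det(n, lam):
    """D_n(lam) from D_k = (2 - lam) D_{k-1} - D_{k-2}, D_0 = 1, D_1 = 2 - lam."""
    prev, cur = 1.0, 2.0 - lam  # D_0, D_1
    if n == 0:
        return prev
    for _ in range(n - 1):
        prev, cur = cur, (2.0 - lam) * cur - prev
    return cur


def closed_form(n, theta):
    """Assumed form of Eq. (A14): D_n = sin((n + 1) theta) / sin(theta), 2 - lam = 2 cos(theta)."""
    return math.sin((n + 1) * theta) / math.sin(theta)


def eigenvalues(n):
    """Roots of D_n: lam_s = 2 - 2 cos(s pi / (n + 1)) for s = 1, ..., n."""
    return [2.0 - 2.0 * math.cos(s * math.pi / (n + 1)) for s in range(1, n + 1)]
```

All roots returned by `eigenvalues(n)` lie strictly inside \((0,4)\), consistent with the range stated above.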

Appendix B: Proof of the factorization formula in Eq. (39)

In this appendix, we prove the factorization formula in Eq. (39)

where \(\mathbb {1}\) is the \(n\times n\) identity matrix, the matrix elements of the \(n\times n\) square matrix \(\mathbb {A}\) are defined in Eq. (3), and \(\mathscr {D}_k\equiv \mathscr {D}et[\mathbb {A}_{[k\times k]}-\lambda \mathbb {1}_{[k\times k]}]\). Here, \(\mathbb {A}_{[k\times k]}\) and \(\mathbb {1}_{[k\times k]}\) are the \(k\times k\) counterparts of \(\mathbb {A}\) and \(\mathbb {1}\), respectively, for \(k\le n\). As stated in Appendix A, the secular determinant \(\mathscr {D}_n\) is a degree-n polynomial in \(\lambda \), and \(\mathscr {D}_n=0\) if \(\lambda \) is an eigenvalue satisfying the eigenvalue equation in Eq. (8). In that case, \(\mathscr {D}_0=1\) and \(\mathscr {D}_{n-1}\ne 0\), because if \(\mathscr {D}_{n-1}\) also vanished, the recurrence relation for \(\mathscr {D}_k\) would force \(\mathscr {D}_0=0\). Thus, the right side of Eq. (B1) does not vanish identically, even though \(\mathscr {D}_n=0\). The adjugate of the \(n\times n\) square matrix \(\mathbb {A}-\lambda \mathbb {1}\) is the transpose of the cofactor matrix \(\mathbb {C}\)

Here, \(\mathbb {M}_{ji}\) is the \((n-1)\times (n-1)\) submatrix obtained from \(\mathbb {A}-\lambda \mathbb {1}\) by removing the jth row and the ith column, and \(\mathscr {M}_{ji}\equiv \mathscr {D}et[\mathbb {M}_{ji}]\) is the (j, i) minor of \(\mathbb {A}-\lambda \mathbb {1}\).
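The definition of the adjugate as the transposed cofactor matrix can be coded directly. The sketch below computes \({\text {adj}}(\mathbb {A}-\lambda \mathbb {1})\) in exact rational arithmetic and checks the identity \((\mathbb {A}-\lambda \mathbb {1})\,{\text {adj}}(\mathbb {A}-\lambda \mathbb {1})=\mathscr {D}_n\mathbb {1}\). As before, it assumes that \(\mathbb {A}\) has 2 on the main diagonal and \(-1\) beside it. At an eigenvalue, the right side vanishes, so every nonzero column of the adjugate is an eigenvector. For \(n=5\), \(\lambda =1\) is an exact rational eigenvalue (\(\theta =\pi /3\)). The helper names are hypothetical.

```python
from fractions import Fraction


def det(m):
    """Exact determinant by Gaussian elimination over the rationals."""
    a = [[Fraction(x) for x in row] for row in m]
    n = len(a)
    result = Fraction(1)
    for col in range(n):
        pivot = next((r for r in range(col, n) if a[r][col] != 0), None)
        if pivot is None:
            return Fraction(0)
        if pivot != col:
            a[col], a[pivot] = a[pivot], a[col]
            result = -result
        result *= a[col][col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= factor * a[col][c]
    return result


def minor_matrix(m, row, col):
    """Submatrix of m with the given row and column removed."""
    return [[x for k, x in enumerate(r) if k != col] for ri, r in enumerate(m) if ri != row]


def adjugate(m):
    """adj(m)_{ij} = C_{ji} = (-1)^{i+j} det(M_{ji}): the transposed cofactor matrix."""
    n = len(m)
    return [[(-1) ** (i + j) * det(minor_matrix(m, j, i)) for j in range(n)] for i in range(n)]


def shifted_string_matrix(n, lam):
    """A - lam*1, assuming A has 2 on the diagonal and -1 beside it."""
    return [[2 - lam if i == j else (-1 if abs(i - j) == 1 else 0)
             for j in range(n)] for i in range(n)]


def matmul(a, b):
    """Plain matrix product of two lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]
```

For \(n=5\) and \(\lambda =1\), the first column of the adjugate is \((-1,-1,0,1,1)\), which is proportional to the normal mode \(\sin (j\pi /3)\), \(j=1,\dots ,5\).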

When \(i \ge j\), the \((n-1)\times (n-1)\) submatrix \(\mathbb {M}_{ji}\) is given by

where \(d\equiv 2-\lambda \) and all omitted elements vanish. Elementary row operations, which leave the determinant unchanged, can always bring the submatrix \(\mathbb {M}_{ji}\) into upper triangular form, whose determinant is just the product of its diagonal elements. Hence, we conclude that

When \(i\le j\), we perform similar operations and find that

Elementary column operations, which again leave the determinant unchanged, can always bring this submatrix \(\mathbb {M}_{ji}\) into lower triangular form, whose determinant is again the product of its diagonal elements. Hence, we conclude that

Substituting the results in Eqs. (B4) and (B6) into Eq. (B2), we find that the (i, j) element of the adjugate is

This completes the proof of the factorization formula in Eq. (39).
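The factorization formula can be tested against brute-force cofactors. The sketch below assumes that, for the matrix \(\mathbb {A}\) with 2 on the main diagonal and \(-1\) beside it, Eq. (39) reads \([{\text {adj}}(\mathbb {A}-\lambda \mathbb {1})]_{ij}=\mathscr {D}_{\min (i,j)-1}\,\mathscr {D}_{n-\max (i,j)}\). The form written in Eq. (39) may differ in notation. The code then reads the normal mode off the first column at \(\lambda _s=2-2\cos \theta _s\), \(\theta _s=s\pi /(n+1)\), and compares it with \((-1)^{s+1}\sin (i\theta _s)/\sin \theta _s\). The function names are hypothetical.

```python
import math
from fractions import Fraction


def det(m):
    """Exact determinant by Gaussian elimination over the rationals."""
    a = [[Fraction(x) for x in row] for row in m]
    n = len(a)
    result = Fraction(1)
    for col in range(n):
        pivot = next((r for r in range(col, n) if a[r][col] != 0), None)
        if pivot is None:
            return Fraction(0)
        if pivot != col:
            a[col], a[pivot] = a[pivot], a[col]
            result = -result
        result *= a[col][col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= factor * a[col][c]
    return result


def adjugate(m):
    """Brute force: adj(m)_{ij} = (-1)^{i+j} det(m without row j and column i)."""
    n = len(m)

    def minor(row, col):
        return [[x for k, x in enumerate(r) if k != col]
                for ri, r in enumerate(m) if ri != row]

    return [[(-1) ** (i + j) * det(minor(j, i)) for j in range(n)] for i in range(n)]


def shifted_string_matrix(n, lam):
    """A - lam*1, assuming A has 2 on the diagonal and -1 beside it."""
    return [[2 - lam if i == j else (-1 if abs(i - j) == 1 else 0)
             for j in range(n)] for i in range(n)]


def secular_dets(n, lam):
    """[D_0, D_1, ..., D_n] from the recurrence D_k = (2 - lam) D_{k-1} - D_{k-2}."""
    dets = [1, 2 - lam]
    while len(dets) < n + 1:
        dets.append((2 - lam) * dets[-1] - dets[-2])
    return dets[: n + 1]


def factorized_adjugate(n, lam):
    """Assumed form of Eq. (39): adj_{ij} = D_{min(i,j)-1} D_{n-max(i,j)}, 1-based i, j."""
    d = secular_dets(n, lam)
    return [[d[min(i, j) - 1] * d[n - max(i, j)] for j in range(1, n + 1)]
            for i in range(1, n + 1)]


def mode_from_first_column(n, s):
    """Column 1 of the factorized adjugate at lam_s = 2 - 2 cos(s pi / (n + 1))."""
    lam = 2.0 - 2.0 * math.cos(s * math.pi / (n + 1))
    return [row[0] for row in factorized_adjugate(n, lam)]
```

Because \(\mathscr {D}_{n-i}(\theta _s)=(-1)^{s+1}\sin (i\theta _s)/\sin \theta _s\), the first column reproduces the familiar mode shape \(\sin [is\pi /(n+1)]\) up to a constant factor.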

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Jung, DW., Han, W., Kim, UR. et al. Finding normal modes of a loaded string with the method of Lagrange multipliers. J. Korean Phys. Soc. 79, 1079–1088 (2021). https://doi.org/10.1007/s40042-021-00314-9

DOI: https://doi.org/10.1007/s40042-021-00314-9