Abstract

In Mitsui (Sci Eng Rev Doshisha Univ Jpn 51(3):181–190, 2010) the author introduced a new class of discrete variable methods known as look-ahead linear multistep methods (LALMMs) which consist of a pair of predictor-corrector (PC), including a function value at one more step beyond the present step for the numerical solution of ordinary differential equations. Two-step family of Look-Ahead linear multistep methods of fourth-order pair were derived and shown to be A(\(\theta \))-stable in Mitsui and Yakubu (Sci Eng Rev Doshisha Univ Jpn 52(3):181–188, 2011). The derived integration methods are of low orders and unfortunately cannot cope with stiff systems of ordinary differential equations. In this paper we extend the concept adopted in Mitsui and Yakubu (Sci Eng Rev Doshisha Univ Jpn 52(3):181–188, 2011) to construct second-derivative of high-order accuracy methods with off-step points which behave essentially like one-step methods. The resulting integration methods are A-stable, convergent, with large regions of absolute stability, suitable for stiff systems of ordinary differential equations. Numerical comparisons of the new methods have been made and enormous gains in efficiency are achieved.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

We present second-derivative of high-order accuracy methods for the numerical integration of stiff systems of initial-value problems (IVPs) in ordinary differential equations (ODEs) given by

on the finite interval [a, b]. Supposing f is continuous and satisfies \(\left| {f(x,y)-f(x,\bar{y})} \right| \le L\left| {y-\bar{y}} \right| \) on \([a,\,b]\times (-\infty ,\,\infty )\) guarantees the existence of a unique solution y(x)\(\in \)C\(^{1}\)[a, b]. We shall assume that y has continuous derivatives on [a, b] of any order required. Of the many numerical integration methods available for the solution of (1.1) most of them have specific advantages so that they are chosen for special types of problems. Several of them have a general applicability that has made them popular in a wide variety of problems. There exists, however, a class of equations which present considerable difficulty in their solution and for which there does not seem to be a simple and accurate method available for their solution, since most of the methods are of low orders and are not A-stable, hence they are not suitable for such differential equations. In this paper we introduce second-derivative of high-order accuracy methods with off-step points designed for the numerical integration of such systems of initial value problems. Although several authors have studied methods with second derivative terms, for example, see [7, 16, 21, 22, 26–28]. Further, some of the methods considered for the solution of (1.1) were derived on the basis that the required function evaluations are to be done only at the grid points (discrete points) which is typically of discrete variable methods [18] (Euler method, Runge-Kutta methods, Picard method, etc.). However, in the years between 1960 and 1970 many authors introduced some off-grid points in their methods with the hope of generalizing the two traditional numerical integration methods (Runge-Kutta methods and linear multistep methods) as a result of the barrier theorems of Dahlquist [11], for example see [3, 4, 13, 14]. In this report, we consider methods that are A-stable suitable for generating the solution of stiff initial value problems at both grid and off-grid points simultaneously within the interval of integration. These methods are derived by introducing one extra intermediate off-grid point in between the usual grid points and include some of the collocation points as interpolation points.

The motivation The motivation for the study of the block second-derivative of high-order accuracy methods is that construction of A-stable multi-derivative methods of higher orders are possible, which are suitable for solving stiff systems. Secondly, block methods generally preserve the traditional advantage of one-step methods of being self-starting and of permitting easy change of step length during integration, see [19]. Thirdly, their advantage over Runge-Kutta methods lies in the fact that they are very inexpensive in terms of number of function evaluations per step. The effectiveness of this class of methods for the treatment of ODEs is shown on the basis of their attractive properties and the efficient technique to deal with a wide range of stiff test problems.

Definition 1.1

A numerical method is said to be A-stable if its region of absolute stability contains the whole of the complex left hand-half plane (Dahlquist [11]). Alternatively, a numerical method is called A-stable if all the solution of (1.1) tend to zero as \(n\rightarrow \infty \), when the method is applied with fixed positive h to any differential equation of the form dy/dx =\(\lambda \)y, where \(\lambda \) is a complex constant with negative real part.

Definition 1.2

Let \(Y_m \)and \(F_m \) be defined by \(Y_m =( {y_n ,y_{n+1} ,\ldots ,y_{n+r-1} })^T,F_m =( {f_n ,f_{n+1} ,\ldots ,f_{n+r-1} })^T.\)

Then a general k-block, r-point block method is a matrix of finite difference equation of the form

where all the A\(_{i}\)’s and B\(_{i}\)’s are properly chosen \(r\times r\) matrix coefficients and m = 0,1,2,..., represents the block number, n = mr is the first step number of the m\(^\mathrm{th}\) block and r is the proposed block size [10].

Assumption 1.1

In the ODEs (1.1), the function f belongs to \(C^1\)-class, and therefore, satisfies the Lipschitz condition with the constant L. That is, if the estimation

holds, L is called the Lipschitz constant.

Definition 1.3

A solution y(x) of (1.1) is said to be stable if given any \(\in >0\) there is \(\delta >0 \) such that any other solution \(\hat{y}(x)\) of (1.1) satisfies

also satisfies

for all \(x>a\).

The solution y(x) is asymptotically stable if in addition to (1.3b) \(\left| {y(x)-} \right. \left. {\hat{y}(x)} \right| \rightarrow 0\) as \(x\rightarrow \infty .\)

2 Derivation principle of the second-derivative of high-order methods

In this section, our objective is to describe the derivation principle of the second-derivative high-order accuracy methods with off-step points for the direct integration of stiff systems of initial value problems of the form (1.1). In order to obtain such highly stable methods we seek an approximate solution to the exact solution of (1.1) by the interpolant of the form

which is twice continuously differentiable function of y(x). We set the sum p = r+s+t where r denotes the number of interpolation points used and \(s >\) 0, \(t >\) 0 are distinct collocation points. Interpolating y(x) in (2.1) at the points {\(x_{n+j} \)}, and collocating \({y}'(x)\) and \({y}''(x)\) at the points {\(c_{n+j} \)} we have the following system of equations:

From Eqs. (2.2)–(2.4) we obtain the continuous multistep collocation method of the form:

where

Here \(\phi _j (x)\), \(\psi _j (x)\) and \(\omega _j (x)\) are parameters of the method which are to be determined. They are assumed polynomials of the form

The numerical constant coefficients \(\phi _{j,i+1} \),\(\psi _{j,i+1} \) and \(\omega _{j,i+1}\) in (2.6) are to be determined. They are selected so that accurate approximations of well behaved problems are obtained efficiently. Actual evaluations of the constant coefficients \(\phi _{j,i+1} \), \(\psi _{j,i+1} \) and \(\omega _{j,i+1} \) are carried out with a computer algebra system, for example Maple software package.

In the second-derivative methods, we see that, in addition to the computation of the f-values of the usual standard multistep methods, the modified methods involve computing g-values, where g is defined in (2.5). According to [8] the second-derivative methods can be practical if the costs of evaluating \(\varvec{g}\) are comparable to those in evaluating \(\varvec{f}\), and can even be more efficient than the standard methods if the number of function evaluations is fewer.

3 An eighth order second-derivative of high-order accuracy method

The parameters of the first second-derivative method can now be obtain by considering the multistep collocation method (2.5), set \(\xi =(x-x_n )\) and introduce the intermediate off-step points in between the usual grid points which are respectively denoted by, u = \(\frac{1}{2}\) and v = \(\frac{3}{2}\). These points are carefully chosen to guarantee the zero stability of the block second-derivative of high-order accuracy method see [12]. Thus, expanding (2.5) we have the following continuous scheme:

where

Evaluating the continuous scheme y(x) in (3.1) at the points \(x=x_{n+u} \) and \(x_{n+v}\) we obtain the first and third members in the block (3.2). Differentiating the continuous scheme in (3.1) once and evaluate at the points, \(x=x_{n+u} \),\(x_{n+v} \) again, then solve simultaneously for the values of \(y_{n+1} \) and \(y_{n+2} \) to complete the first block Second-Derivative of high-order accuracy method, consisting of four members in a block as follows:

3.1 An eleventh order second-derivative of high-order accuracy method

For the 2\(^{nd}\) second-derivative high-order accuracy method, we similarly introduce three intermediate off-step points in between the usual grid points and denote them by u = \(\frac{1}{2}\), v = \(\frac{3}{2}\) and w =\(\frac{5}{2}\). Again, these points are carefully chosen to guarantee the zero stability of the block second-derivative high order method, see [12]. Hence expanding (2.5) we obtain the continuous scheme of the form,

where

Evaluating the continuous scheme (3.3) and its first derivative as done in (3.1) at the points {\(x=x_{n+u} \),\(x_{n+v} \) and \(x_{n+w} \)} where {u =\(\frac{1}{2}\), v =\(\frac{3}{2}\) and w = \(\frac{5}{2}\)} we obtain the block method (3.4) as follows:

4 Analysis of the second-derivative of high-order accuracy methods

4.1 Order, consistency, zero-stability and convergence of the SDH methods

With the multistep collocation method (2.5) we associate the linear difference operator \(\ell \) defined by

where y(x) is an arbitrary function, continuously differentiable on [a, b]. Following Lambert [19] and Fatunla [12], we can write the terms in (4.1) as a Taylor series expansion about the point x to obtain the expression,

where the constant coefficients \(C_p \), p = 0,1,2,... are given as follows:

According to Lambert [19], the multistep collocation method (2.5) has order p if

Therefore, \(C_{p+1} \) is the error constant and \(C_{p+1} h^{p+1}y^{(p+1)}(x_n )\) is the principal local truncation error at the point \(x_n \) [22]. Hence, from our calculation the order and error constants for the constructed methods are presented in Table 1. It is clear from the Table that the block second-derivative high-order method (3.2) is of uniform accurate order eight. The members of the block second-derivative high-order method (3.4) are of uniformly accurate order eleven. The members of this block method have smaller error constants and hence more accurate than those members of the block method (3.2).

Definition 4.1

(Consistency) The block second-derivative high-order methods (3.2) and (3.4) are said to be consistent if the order of the individual member is greater or equal to one, that is if \(p\ge 1.\)

-

(i)

\(\rho (1)=0\) and

-

(ii)

\({\rho }'(1)=\sigma (1)\), where \(\rho (z)\) and \(\sigma (z)\) are respectively the 1\(^\mathrm{st}\) and 2\(^\mathrm{nd}\) characteristic polynomials.

From Table 1 we can attest that the members of the block second-derivative high-order accuracy methods are consistent.

In what follows, the block second-derivative high-order methods (3.2) and (3.4) can generally be rearranged and written as a matrix finite difference equation of the form in (1.2) as follows:

where

and the matrices \(A^{(1)},A^{(0)},B^{(1)},B^{(0)},C\) and D are matrices whose entries are given by the coefficients of the block second-derivative high-order methods (3.2) and (3.4).

Definition 4.2

(Zero-stability) The block second-derivative high-order methods (3.2) and (3.4) are said to be zero-stable if the roots

satisfies \(\left| {\lambda _j } \right| \le 1,\,\,\,j=1,\ldots ,k \) and for those roots with\(\left| {\lambda _j } \right| =1\), the multiplicity does not exceed two, Lambert [19, 20]

Definition 4.3

(Convergence) The necessary and sufficient conditions of the block second-derivative high-order methods (3.2) and (3.4) to be convergent are that they must be consistent and zero-stable Dahlquist [11]. Hence from definitions (4.1) and (4.2) the second-derivative high-order methods are convergent.

5 Regions of absolute stability of the second-derivative of high-order methods

To study the stability properties of the block second-derivative high-order methods we reformulate (3.2) and (3.4) as general linear methods see Burrage and Butcher [2]. Hence, we use the notation introduced by Butcher [6] which a general linear method is represented by a partitioned (s+r)\(\times \) (s+r) matrix, (containing A, U, B, V),

where

and \(e=[1,\cdots ,1]^T\in R^m\) .

Hence (5.1a) takes the form

where r denotes quantities as output from each step and input to the next step and s denotes stage values used in the computation of the step \(y_1 \),\(y_2 \),...,\(y_s \). The coefficients of these matrices indicate the relationship between the various numerical quantities that arise in the computation of stability regions. The elements of the matrices A, U, B and V are substituted into the stability matrix. In the sense of [9] we apply (5.1) to the linear test equation \({y}'=\lambda y,x\ge 0\) and \(\lambda \in C\) but for the second-derivative high-order method we use \({y}''=\lambda ^2y,\) which leads to the recurrence relation \(y^{\left[ {n+1} \right] }=M(z)y^{\left[ n \right] }\), n = 1,2,...,N-1, \(z=\lambda h\), where the stability matrix M(z) is defined by

We also define the stability polynomial \(p(\eta ,z)\) by the relation

and the absolute stability region \(\mathfrak {R}\) of the method is given by

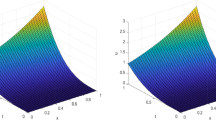

To compute the region of absolute stability we substitute the elements of the matrices A, U, B and V into the stability function (5.2) and finally into the stability polynomial of the methods, which is plotted to produce the required graphs of the absolute stability regions of the methods as shown in Fig. 1.

6 Numerical experiments

In this section, the performance of the second-derivative high-order accuracy methods is examined on five tests of initial value problems, particularly stiff systems. The results obtained are compared side by side in Tables. We also show the efficiency curves for some of the problem considered and compared with the ode code of Matlab. In order to provide a direct comparison, the tests were carried out with a fixed step length, using Matlab. In the computation we use nfe to denote the number of function evaluations.

Example 1

The first test problem is a well-known classical system. It is a mildly stiff problem composed of two first order equations,

and the exact solution is given by the sum of two decaying exponential components

The stiffness ratio is 1:1000. We solve the problem in the interval [0, 40] and the computed results are shown side by side in Table 2.

Example 2

Stiff nonlinear problem (The Kaps problem)

Consider the stiff system of two dimensional Kaps problem with corresponding initial conditions:

The exact solution is

In Table 3, the computed solutions of the problem using the new methods on the interval of [0, 10] are shown side by side. From this example, it is clearly confirmed that the second-derivative high-order accuracy methods are appropriate for stiff problems.

Example 3

The third example is a highly stiff system (see [5])

The eigenvalues of the Jacobian of the system are approximately \(\lambda _{1}\)= \(-\)1.000000000562500\(\times \)10\(^{6}\), and \(\lambda _{2}\) = \(-\)0.07443749995813. We solve the problem in the range [0, 40] and the computed results are shown in Table 4.

Example 4

Chemistry Problem suggested by Gear [15],

This problem was integrated using the newly constructed methods within the range [0, 10] and the efficiency curves obtained are compared with the ode code of Matlab in Fig. 2.

Example 5

Another chemistry problem considered by Robertson [25].

Many authors believed that this problem is a fairly hard test problem for stiff ordinary differential equation integrators [1, 5, 17, 29], so it is vital to demonstrate the efficiency of the new algorithms on this problem.

Here we applied the newly constructed methods and the results obtained are shown in Table 5 and for fair comparison see [5] page 426.

7 Concluding remarks

What we know is that very little literature exist on the second-derivative type of methods which utilize second derivative. It is for this reason that we subjected the newly derived methods to detailed implementations using different systems of first order initial value problems in ordinary differential equations. The results from the new high-order methods are very promising for stiff systems therefore encouraging further investigation of the new methods is necessary.

References

Bond, J.E., Cash, J.R.: A block method for the numerical integration of stiff systems of ordinary differential equations. BIT Numer. Math. 19, 429–447 (1979)

Burrage, K., Butcher, J.C.: Non-linear stability for a general class of differential equation methods. BIT 20, 185–203 (1980)

Butcher, J.C.: Modified multistep method for the numerical integration of ordinary differential equations. J. Assoc. Comput. Mach. 12(1), 124–135 (1965)

Butcher, J.C.: A multistep generalization of Runge-Kutta methods with four or five stages. J. Assoc. Comput. Mach. 14(1), 84–99 (1967)

Butcher, J.C., Hojjati, G.: Second derivative methods with Runge-Kutta stability. Numer. Algorithms 40, 415–429 (2005)

Butcher, J.C.: Numerical Methods for Ordinary Differential Equations, Second edn, Wiley (2008)

Cash, J.R.: High order methods for the numerical integration of ordinary differential equations. Numer. Math. 30, 385–409 (1978)

Chan, R.P.K., Tsai, A.Y.J.: On explicit two-derivative Runge-Kutta methods. Numer Algorithm. 53, 171–194 (2010)

Chollom, J.P., Jackiewicz, Z.: Construction of two step Runge-Kutta (TSRK) methods with large regions of absolute stability. J. Comput. Appl. Maths. 157, 125–137 (2003)

Chu, M.T., Hamilton, H.:Parallel solution of ordinary differential equations by multi-block methods. SIAM J. Sci. Stat. Comp. 8, 342–353 (1987)

Dahlquist, G.: A special stability problem for linear multistep methods. BIT Numer. Math. 3, 27–43 (1963)

Fatunla, S.O.: Block methods for second order ODEs. Int. J. Comput. Math. 41, 55–63 (1991)

Gragg, W.B., Stetter, H.J.: Generalized multistep predictor-corrector methods, \(J\). ACM . 11, 188–209 (1964)

Gear, C.W.: Hybrid multistep method for initial value in ordinary differential equations. J. SIAM Numer. Anal. B. 2, 69–86 (1965)

Gear, C. W.: DIFSUB for solution of ordinary differential equations. Comm. ACM. 14, 185–190 (1971)

Gupta, G.K.: Implementing second–derivative multistep methods using the Nordsieck polynomial representation. J. Math. Comput. 32(141), 13–18 (1978)

Hairer, E., Wanner, G.: Solving Ordinary Differential Equations II: Stiff and Differential-Algebraic Problems. Second Revised Edition, Springer-Series in Computational Mathematics 14, 75–77 (1996)

Henrici, P.: Discrete variable methods in ordinary differential equations. Wiley, New York (1962)

Lambert, J.D.: Computational methods in ordinary differential equations. Wiley, New York (1973)

Lambert, J.D.: Numerical methods for ordinary differential system. Wiley, New York (1991)

Mitsui, T.: Runge-Kutta type integration formulas including the evaluation of the second derivative, Part I. Publ. RIMS Kyoto Univ. Japan 18, 325–364 (1982)

Mitsui, T.: A modified version of Urabe’s implicit single-step method. J. Comp. Appl. Math. 20, 325–332 (1987)

Mitsui, T.: Look-Ahead linear multistep methods for ordinary differential equations, -Introduction of the Method-. Sci. Eng. Rev. Doshisha Univ. Jpn 51(3), 181–190 (2010)

Mitsui, T., Yakubu, D.G.: Two-step family of Look-Ahead linear multistep methods for ODEs. Sci. Eng. Rev. Doshisha Univ. Jpn 52(3), 181–188 (2011)

Robertson, H. H.: The solution of a set of reaction rate equations. In: Numerical Analysis an introduction, pp. 178–182. Academic Press, London (1966)

Shintani, H.: On one-step methods utilizing the second derivative. Hiroshima Math. J. 1, 349–372 (1971)

Shintani, H.: On explicit one-step methods utilizing the second derivative. Hiroshima Math. J. 2, 353–368 (1972)

Urabe, M.: An implicit one-step method of high-order accuracy for the numerical integration of ordinary differential equations. J. Numer. Math. 15, 151–164 (1970)

Vigo-Aguiar, J., Ramos, H.: A family of A-stable Runge-Kutta collocation methods of higher order for initial value problems. IMA J. Numer. Anal. 27(4), 798–817 (2007)

Acknowledgments

This work was supported by Tertiary Education Trust Fund (TETF) Ref. No. TETF/DAST&D.D/6.13/NOM-CA/BAS&BNAS. Therefore the authors gratefully acknowledged the financial support of the TETF. We wish to record our thanks to the referee for his/her constructive suggestions and comments that have led to a number of improvements to this paper.

Author information

Authors and Affiliations

Corresponding author

Additional information

Dedicated to Professor Taketom (Tom) Mitsui on his 70th Birthday.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Yakubu, D.G., Markus, S. Second derivative of high-order accuracy methods for the numerical integration of stiff initial value problems. Afr. Mat. 27, 963–977 (2016). https://doi.org/10.1007/s13370-015-0389-5

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s13370-015-0389-5