Abstract

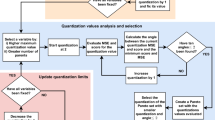

Computer-driven sampling methodology has been widely used in various application scenarios, theoretical models and data preprocessing. However, these powerful methods either rely on explicit probability models or adopt data-level generation rules, which makes them difficult to apply in realistic settings where prior knowledge of the distribution is missing. Inspired by density estimation methods and quantization techniques, this paper proposes a method based on quantized modeling of the data density, named the probability quantization model (PQM). The method quantizes a complex density estimation model into discrete probability blocks to approximate the cumulative distribution, and then performs backtracking sampling based on roulette wheel selection and local linear regression. This avoids the prior distribution knowledge and cumbersome modeling constraints that existing stochastic sampling methods require. Moreover, because it is based on modeling the data probability, the method is hardly influenced by individual sample features, which avoids over-fitting and yields better data diversity than over-sampling methods. Experimental results show the advantages of the proposed method over various computer-driven sampling methods in terms of accuracy, generalization performance and data diversity.
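The abstract compresses the sampling pipeline into a single sentence. The following is a minimal Python sketch of the general idea, not the authors' implementation, under these assumptions: one-dimensional data, a NumPy histogram standing in for the density estimation model, equal-width probability blocks, and plain linear interpolation of the cumulative distribution inside each block in place of the paper's local linear regression step. The function names `quantize_density`, `roulette_select` and `sample_from_blocks` are hypothetical.

```python
import numpy as np


def quantize_density(data, n_blocks=20):
    """Quantize a 1-D empirical density into equal-width probability blocks.

    Hypothetical helper: a histogram stands in for the density model.
    Returns the block edges and the probability mass of each block.
    """
    counts, edges = np.histogram(data, bins=n_blocks)
    probs = counts / counts.sum()  # each block's share of the total mass
    return edges, probs


def roulette_select(probs, rng, size):
    """Roulette wheel selection: pick block indices with probability probs."""
    cdf = np.cumsum(probs)  # discrete cumulative distribution over blocks
    cdf[-1] = 1.0  # guard against floating-point round-off in the last entry
    spins = rng.random(size)  # uniform spins in [0, 1)
    # Index of the first block whose cumulative mass exceeds each spin;
    # zero-probability blocks occupy an empty interval and are never chosen.
    return np.searchsorted(cdf, spins, side="right")


def sample_from_blocks(edges, probs, size, seed=None):
    """Draw new samples: choose a block, then place the value inside it.

    Inside a block the CDF is assumed linear (uniform density), which is a
    simplification of the paper's local linear regression step.
    """
    rng = np.random.default_rng(seed)
    idx = roulette_select(probs, rng, size)
    left, right = edges[idx], edges[idx + 1]
    u = rng.random(size)  # position along the linear within-block CDF
    return left + u * (right - left)
```

For example, `edges, probs = quantize_density(data, n_blocks=30)` followed by `sample_from_blocks(edges, probs, size=1000, seed=1)` draws 1000 new values that stay within the range of `data`. In this sketch, `n_blocks` controls a trade-off: more blocks follow the data density more closely, but each block's probability estimate rests on fewer samples.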

Acknowledgements

The authors would like to thank the anonymous reviewers for their comments, which greatly helped improve the quality of the paper. This research has been supported by the Educational Commission of Guangdong Province, China (No. 2020ZDZX3093).

Cite this article

Fang, B., Wang, J., Dong, Z. et al. Probability Quantization Model for Sample-to-Sample Stochastic Sampling. Arab J Sci Eng 47, 10865–10886 (2022). https://doi.org/10.1007/s13369-022-06932-0

DOI: https://doi.org/10.1007/s13369-022-06932-0