Abstract

The Inguinal Hernia Application (IHAPP) is designed to overcome current limitations of regular follow-up after inguinal hernia surgery. It has two goals: minimizing unnecessary healthcare consumption by supplying patient information, and facilitating registration of patient-reported outcome measures (PROMs) by offering simple questionnaires. In this study we evaluated the usability and validity of the app. Patients (≥18 years) scheduled for elective hernia repair were assessed for eligibility. Feasibility of the app was evaluated by measuring patient satisfaction with its use. Validity (internal consistency and convergent validity) was tested by comparing answers in the app to the scores of the standardized EuraHS-Quality of Life instrument. Furthermore, test–retest reliability was analyzed by correlating scores obtained at 6 weeks with those obtained at 44 days (6 weeks and 2 days). During a 3-month period, a total of 100 patients were included. Median age was 56 years and 98% were male. Most respondents (68%) valued the application as a supplementary tool to their treatment. The pre-operative information was reported as useful by 77% and the app was regarded as user-friendly by 71%. Patient adherence was mediocre: 47% completed all questionnaires during follow-up. Internal consistency of the app was considered excellent (α > 0.90) and convergent validity was significant (p = 0.01). The same applies to test–retest reliability (p = 0.01). Our results demonstrate that the IHAPP is a useful tool for reliable data registration and serves as a patient information platform. However, further improvements are necessary to increase patient compliance in recording PROMs.

Introduction

Follow-up after inguinal hernia (IH) repair serves several purposes, such as clinical evaluation of the postoperative course, patient satisfaction, research objectives, and data registration for internal quality control and benchmarking [1]. In general, regular follow-up occurs once, at a predefined moment, by means of a telephone or outpatient consult. However, there is no consensus about the timing or method of follow-up. A recent study concluded that regular follow-up provides no clinical benefit since most patients report postoperative complications on their own initiative, regardless of timing [1]. Moreover, morbidity rates following IH surgery are low. In our high-volume hernia clinic, the rates of recurrence and of moderate pain one year postoperatively are both 1% [2,3,4]. Follow-up at a fixed time-point, even if the consult is very brief, does, however, improve patient satisfaction, regardless of whether the patient has complaints [1].

The quality of IH surgery can be determined, among other factors, by recurrence, pain, return to daily activities, complication rates and cost-effectiveness [5]. Especially important are those factors that are best reported by patients themselves. These patient-reported outcome measures (PROMs) need to be registered at multiple follow-up timepoints, as proper benchmarking cannot be achieved with a single follow-up consult. However, using multiple follow-up consults merely for quality assessment is wasteful, time-consuming and expensive. A different type of follow-up is required for this kind of quality control. In several existing hernia registries, the personal identification number (PIN) of a citizen can be used to follow patient movements across different care providers [6]. This allows for automated detection of whether patients were operated for a recurrent IH, for example. Nonetheless, these automated registries still lack PROMs. In The Netherlands specifically, reporting of outcomes for follow-up remains challenging due to lack of registration via PIN. This results in very heterogeneous registration, often lacking PROMs, or not registering at all [7]. Additionally, PROMs are reported differently nationwide and uniformity is lacking, even in research settings [8, 9]. Therefore, current methods of follow-up are inefficient for both patients and healthcare personnel, increasing the financial burden of hernia care whilst not providing the desired level of quality control and benchmarking.

The use of mobile health (mHealth) technology for follow-up has the potential to overcome these challenges because it is portable, uniform and easy to use [10]. In The Netherlands, the rate of smartphone ownership is high, approximately 89% in 2022 [11]. This level of accessibility theoretically facilitates the use of mHealth. In addition, mobile app follow-up care has been shown to be suitable for low-risk postoperative ambulatory patients [12]. In this context, the Inguinal Hernia App (IHAPP) was created. It has two main goals: to minimize healthcare consumption by expanding patient education and to facilitate the registration of PROMs for internal quality control and research purposes. It consists of three domains: (1) a time-dependent clinical care pathway explaining a “normal” pre- and postoperative course; (2) questionnaires for data registration; and (3) a library with additional background information about IH treatment. The primary outcome of our study is the feasibility of using the IHAPP as a patient education and data registration tool. Secondary outcomes are reliability and validity in comparison to standardized questionnaires and scales.

Methods

Design and sample size

A single-center prospective cohort study was performed in a high-volume hernia clinic in The Netherlands. In this clinic, approximately 1500 procedures are performed annually. Patients were included from May 2021 up to and including July 2021. An empirically chosen sample of 100 patients was regarded sufficient to evaluate our primary outcome.

Patient selection

All patients scheduled for IH repair (either Lichtenstein or totally extraperitoneal procedure, TEP) were assessed for eligibility. Inclusion criteria were age between 18 and 80 years, ownership of a device compatible with the IHAPP (iPhone or Android devices), and sufficient understanding of the Dutch language. No further exclusion criteria were applied. The study protocol was reviewed and approved by the local ethical review board (W20.008). Written informed consent was obtained from all patients.

Peri-operative course

Patients followed the standard peri-operative protocol for IH surgery in this hospital.

The IHAPP

The IHAPP was developed by Synappz using the Clinicards platform [13]. In this digital, web-based portal, patient data were self-reported and collected prospectively. The application was not designed for treatment or diagnostic purposes, although it could theoretically be used to monitor patients actively. Therefore, participants were informed that their treating physician would neither have access to nor act upon the answers that they gave to any of the questionnaires. Because patients were not actively monitored through the IHAPP, they were explicitly instructed to contact their physician in case of any doubt concerning complications during the postoperative course.

The app protects privacy-sensitive medical information by using anonymized data, and the software complies with Dutch privacy laws and regulations, such as the AVG (Algemene Verordening Gegevensbescherming, which is the Dutch implementation of the General Data Protection Regulation, GDPR). The medical application is Conformité Européenne (CE)-certified and has obtained all mandatory European certificates (ISO 27001, NEN 7510).

Questionnaires

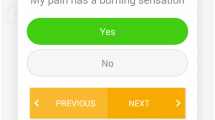

Upon downloading the app, patients answered a short demographic survey (e.g. age, gender, employment, smoking habit, etc.) in order to complete their profile. Short questionnaires were entered by patients at different timepoints: pre-operatively, 2 weeks and 6 weeks postoperatively. To evaluate test–retest reliability an additional questionnaire was incorporated at 44 days postoperative (6 weeks and 2 days). Patients were kept engaged and motivated to answer by posing dynamic questions, based on previous answers, using an adaptive question algorithm. To adequately register uniform PROMs the following standardized questionnaires and scales formed the foundation of the app:

(1) The European Registry for Abdominal Wall Hernias Quality of Life (EuraHS-QoL) instrument and adjusted questions of the Short-Form Inguinal Pain Questionnaire (SF-IPQ) to estimate pain and limitations [14, 15];

(2) The Numeric Rating Scale (NRS) to quantify resumption of daily activities (e.g. work, sport, etc.) [7].

Each questionnaire was available only during a fixed timeslot of two to three days. This prevented retrospective data entry, which could introduce bias. For example, the pre-operative questionnaire could not be entered in retrospect. Push notifications were used to remind patients of open questionnaires, but only if explicit consent to do so was given during app installation. Because not every patient could be reached in this way, additional manual reminders were sent by e-mail when necessary. As a last resort, patients who had still not answered were contacted by telephone by one of the investigators.
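The fixed-timeslot rule can be illustrated with a minimal Python sketch. It is not the IHAPP implementation: the `SCHEDULE` offsets for the postoperative questionnaires follow the timepoints named in the text, while the pre-operative offset, the two-day `WINDOW_DAYS` value and the function name `is_open` are assumptions made for illustration only.

```python
from datetime import date, timedelta

# Day (relative to surgery) on which each questionnaire opens.
# The postoperative offsets follow the text; the pre-operative
# offset of -7 days is a hypothetical placeholder.
SCHEDULE = {
    "pre-operative": -7,
    "2 weeks": 14,
    "6 weeks": 42,
    "retest": 44,  # 6 weeks and 2 days
}

# Assumed window length; the text only states "two to three days".
WINDOW_DAYS = 2


def is_open(name: str, surgery_date: date, today: date) -> bool:
    """Return True if the questionnaire can be answered today."""
    opens = surgery_date + timedelta(days=SCHEDULE[name])
    closes = opens + timedelta(days=WINDOW_DAYS)
    # Outside [opens, closes) the questionnaire is locked, so answers
    # cannot be entered in retrospect.
    return opens <= today < closes


surgery = date(2021, 6, 1)
print(is_open("6 weeks", surgery, date(2021, 7, 13)))  # day 42
print(is_open("6 weeks", surgery, date(2021, 7, 15)))  # day 44
```

With these assumed values the 6-week questionnaire closes exactly when the retest questionnaire opens, so a patient never sees both at once.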

Patient satisfaction was measured by means of a questionnaire which was newly formulated for the purpose of our study, utilizing a 5- and 6-point Likert scale and posing open and nominal questions.

Clinical care pathway

The IHAPP also informed patients of the clinical care pathway. Patients followed different digital care paths for Lichtenstein and TEP repair. The pathway was further personalized by time-dependent release of information, which was shown only when relevant to the patient. For example, when approaching the date of surgery, patients were supplied with specific pre-operative information. The care pathway was displayed as a chronological timeline. Postoperatively, the pathway informed patients on what to expect from a normal postoperative course. Common complications not requiring intervention, such as hematoma, seroma and early postoperative pain, were mentioned. Furthermore, practical instructions were provided, for instance when to remove the adhesive plasters, resume taking showers, return to work or sport, or when it is safe to drive or cycle again. This information was accompanied by informational videos or relevant research articles.
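A time-dependent, procedure-specific release of information could be modelled roughly as follows. This is a sketch under stated assumptions, not the Clinicards data model: the `PathwayItem` class, the item titles, the release days and the `visible_items` function are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PathwayItem:
    title: str
    release_day: int       # days relative to surgery (negative = before)
    procedures: frozenset  # repair types the item applies to


# Hypothetical content; titles and release days are illustrative only.
ITEMS = [
    PathwayItem("Preparing for surgery", -3, frozenset({"TEP", "Lichtenstein"})),
    PathwayItem("Removing the adhesive plasters", 2, frozenset({"TEP"})),
    PathwayItem("Returning to work or sport", 7, frozenset({"TEP", "Lichtenstein"})),
]


def visible_items(procedure: str, days_since_surgery: int) -> list[str]:
    """Titles released so far for this procedure, in chronological order."""
    released = [
        item for item in ITEMS
        if procedure in item.procedures and item.release_day <= days_since_surgery
    ]
    return [item.title for item in sorted(released, key=lambda i: i.release_day)]


print(visible_items("Lichtenstein", 3))
print(visible_items("TEP", 7))
```

Filtering on both the procedure and the elapsed time keeps the timeline short: a patient only sees items that apply to the repair received and that have already become relevant.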

Statistical analysis

All statistical analyses were performed using SPSS statistical software, version 23 (IBM Corp., Armonk, NY, USA). Descriptive statistics were applied to demographic and baseline data. Missing data were excluded listwise. Cronbach’s alpha was used to assess the internal consistency reliability of the EuraHS-QoL and IHAPP. A value ≥ 0.80 was considered an indication of good internal consistency and a value ≥ 0.90 was considered excellent. Convergent validity was calculated using Spearman correlation coefficients for the pain subscales of the EuraHS-QoL and corresponding NRS scores in the IHAPP. Test–retest reliability was studied by correlating scores obtained at 6 weeks and 44 days (6 weeks and 2 days) using Spearman correlation coefficients.
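For readers who want to reproduce these measures outside SPSS, the two statistics can be sketched in plain Python. This is an illustrative reimplementation, not the analysis code used in the study; the function names and the toy scores are assumptions. Cronbach’s alpha is computed as k/(k − 1) × (1 − sum of item variances / variance of total scores), and the Spearman coefficient as the Pearson correlation of average ranks.

```python
from statistics import variance


def cronbach_alpha(items):
    """items: one list of scores per item, all in the same respondent order."""
    k = len(items)
    totals = [sum(scores) for scores in zip(*items)]  # total score per respondent
    item_variance_sum = sum(variance(scores) for scores in items)
    return k / (k - 1) * (1 - item_variance_sum / variance(totals))


def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for idx in order[i:j + 1]:
            ranks[idx] = mean_rank
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman correlation: Pearson correlation of the average ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sum((a - mx) ** 2 for a in rx) ** 0.5
    sy = sum((b - my) ** 2 for b in ry) ** 0.5
    return cov / (sx * sy)


# Toy data (hypothetical): three pain items scored 0-10 by five respondents,
# and paired scores from a paper instrument and an app.
pain_items = [[2, 4, 6, 8, 3], [3, 4, 7, 8, 2], [2, 5, 6, 9, 3]]
paper = [1, 3, 5, 7, 9]
app = [2, 3, 6, 6, 10]
print(round(cronbach_alpha(pain_items), 2))
print(round(spearman_rho(paper, app), 2))
```

Significance testing (the p-values reported in the text) is left out of this sketch; a statistics package would normally supply it.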

Results

A total of 290 patients were assessed for eligibility. After initial screening, 126 patients were included. After completion of the follow-up period, patients who had completed ≤1 questionnaire were excluded from analyses (Fig. 1). As a result of COVID-19, the number of operations was reduced throughout the trial, resulting in an unstable surgery schedule with many last-minute changes. Therefore, the “missing” group makes up the majority of the excluded patients. Other reasons for not participating can be found in Fig. 1. After inclusion, one of our participants presented at the outpatient clinic for single-visit hernia surgery with a small asymptomatic IH. After the possibility of a wait-and-see policy was explained, the patient decided to cancel the operation. Four patients requested withdrawal before completion of the follow-up period. Technical issues when using the app for the first time were a frequent reason for withdrawal. One 79-year-old woman desired access to the app but did not wish to participate in the trial. During follow-up, 21 patients were lost: eight had answered only one questionnaire in total, even after repeated reminders, and 13 never activated the app. For the eight patients who answered one questionnaire, most reasons are unknown (n = 6), but one patient reported COVID-19 infection and another mentioned being on holiday as an explanation. Reasons for never activating the app were not receiving push notifications (n = 1), being annoyed by its use (n = 1), not responding to our e-mails (n = 2), difficulty understanding content due to language (n = 1), technical difficulties when using the app (n = 3), wrong e-mail address (n = 1) and unknown reasons (n = 4).

Finally, a group of 100 patients was available for complete analysis. The median age was 56 years (interquartile range (IQR) 40-64) with an average Body Mass Index (BMI) of 25.2 kg/m². Patients were predominantly male (98%). The majority of patients received TEP repair (78%). Ten patients were treated for a recurrent hernia. Complete baseline characteristics are summarized in Table 1.

App feasibility

The evaluation questionnaire was answered by 96 of 100 patients (Tables 2 and 3). The complete questionnaire is shown in Table 4.

Usability

The app’s usability was assessed with three questions: whether the app was user-friendly, whether its content was clear, and whether it was perceived as adding value to the treatment. Approximately 71% regarded the application as user-friendly. For 3% of patients the content of the app was not clear enough and 16% expressed a neutral opinion. The majority of participants (68%) valued the application as an additional tool to their treatment.

Patient information

The information provided in the clinical care pathway was predominantly experienced as ‘very useful’. No subjects answered negatively (‘not useful at all’/‘not useful’) when asked about the pre-operative information, and only two patients did not read this information. The proportion of patients who found the information in the pathway useful declined to 71% when asked again at 6 weeks postoperatively. The articles in the library were accessed by the majority of patients; only 16% did not read any. Patients were able to comment freely as well. Nearly all comments mentioned a lack of detailed information concerning the resumption of daily activities. Patients wanted specific guidelines about which activity they could perform at which time point. Other comments concerned a ‘foreign body’ feeling of the mesh and symptoms specific to individual cases.

Questionnaires

The questions included in the questionnaires were rated as (very) easy (88%) and (very) clear (83%). Reasons for not answering questions were forgetting (n = 6), vacation (n = 3) or unspecified reasons (n = 7). Eight out of ten participants were certain they had answered all questionnaires. However, our data show that less than half of all patients (n = 45) answered all four questionnaires. Forty patients completed three questionnaires and 15 patients completed two questionnaires. In total, 84 participants responded to the pre-operative questionnaire, 93 patients responded to each of the 2-week and 6-week postoperative questionnaires and 59 patients responded to the 44-day postoperative survey. Feedback regarding the questionnaires mainly mentioned technical problems with the app, for example not being able to open a questionnaire, or reminders that kept being sent even after all questions had been completed. Some patients requested an option to give more detailed context when entering pain scores.
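The adherence figures above reduce to simple proportions of the 100 analyzed patients. The short Python sketch below recomputes them from the counts reported in this section; the variable names are illustrative, and the per-questionnaire count of 93 is assumed to apply to both the 2-week and the 6-week questionnaire.

```python
N_ANALYZED = 100

# Number of patients by number of questionnaires completed (from the text).
completed = {4: 45, 3: 40, 2: 15}

# Number of respondents per questionnaire (from the text).
responses = {"pre-operative": 84, "2 weeks": 93, "6 weeks": 93, "44 days": 59}

# Every analyzed patient falls in exactly one completion category.
assert sum(completed.values()) == N_ANALYZED

full_adherence = completed[4] / N_ANALYZED
response_rate = {name: n / N_ANALYZED for name, n in responses.items()}

print(f"all four questionnaires: {full_adherence:.0%}")
for name, rate in response_rate.items():
    print(f"{name}: {rate:.0%}")
```

The per-questionnaire rates are much higher than the share of patients who completed everything, largely because the 44-day retest questionnaire was answered least often.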

Reliability and validity

Cronbach’s alpha was 0.96 (n = 72) pre-operatively. Therefore, internal consistency reliability was considered excellent (α > 0.9). Cronbach’s alpha was also excellent at 6 weeks postoperatively (α = 0.97, n = 69).

Convergent validity scores were calculated for pre-operative pain in rest (n = 73, R = 0.720), pain during activities (n = 72, R = 0.896) and pain of the past week (n = 73, R = 0.769). All correlations were positive and significant (p = 0.01). The same was true for answers about limitations during in-house (n = 70, R = 0.758) and outside activities (n = 77, R = 0.866). The differences in the number of cases arise because some patients forgot to answer all questions on the paper version of the EuraHS-QoL, whereas it was impossible to skip questions in the app. The same variables were also assessed at 6 weeks postoperatively. There was a positive correlation for all variables (n = 69). The correlations for pain in rest (R = 0.656), pain during activities (R = 0.795) and pain of the past week (R = 0.669) were all significant (p = 0.01). The same applies to limitations during in-house (R = 0.602, p = 0.01) and outside activities (R = 0.644, p = 0.01).

For test–retest reliability, the scores on all subscales (pain in rest, pain during activities, pain of the past week, pain during heavy labor, limitations during in-house and outside activities) were positively correlated (n = 59, p = 0.01). The different locations of pain (n = 21) showed a positive correlation between 6 weeks and 44 days (6 weeks and 2 days), except for the answer “other located pain” (p = 0.064). For the variables returning fully to daily functioning and working, as well as the corresponding number of days, the number of cases was too small to allow a meaningful comparison (due to missing data).

Discussion

Present findings suggest that the IHAPP is primarily an effective tool for patient education. Additionally, it can be considered a valid and reliable platform for collecting PROMs when compared to the standardized EuraHS-QoL instrument. However, due to a lack of compliance, the IHAPP is not yet a feasible tool for systematic registration of PROMs after IH surgery.

When usability of the application was evaluated, at least two-thirds of all patients reported positive attitudes towards the utilization of the app. The majority of patients experienced the clinical care pathway as useful and informative.

The questionnaires’ fixed timeslot of two to three days prevented retrospective data entry to avoid recall bias. Although most participants were confident they answered every question (n = 77, 80%), data show that in reality, only 45 participants completed all four assessments. This discrepancy displays the general lack of adherence, which limits the potential of mHealth for the purpose of PROMs registration by means of this app.

Existing literature on the feasibility of using smartphone applications for registration of PROMs in surgical patients is sparse. Nevertheless, one comparable report can be found. In 2020, van Hout et al. proposed the use of a smartphone app for perioperative monitoring of IH surgery. Comparable advantages, such as less recall and response bias, were suggested [16]. Their use of an adaptive question algorithm makes comparison between subjects difficult: because every patient receives a different number and type of questions, the standardized aspect of the questionnaires disappears. Another significant difference was an alarm notification system, which sent alerts when an adverse event was suspected based on self-reported scores. We deliberately did not integrate this option in our application. Patients were clearly instructed to report suspected adverse events to their physician instead. The recently published pilot study of van Hout et al. observed high patient satisfaction (92.8%), which is also true for our study. In contrast to the IHAPP, they posed single questions multiple times a day, and patients were not reminded to answer at all [17]. For the purpose of determining validity and reliability, we were forced to send additional reminders. Moreover, we believe it is ethically debatable whether a patient should be unnecessarily burdened with questionnaires if an uncomplicated postoperative course is experienced. Therefore, we chose to restrict questionnaires to a limited number of timepoints. In future versions of the application a follow-up of two years could be incorporated, with extra questionnaires at 3 months, 6 months, 1 year and 2 years postoperatively.

Reasons for non-adherence and discontinuation are relevant outcomes when assessing the feasibility of applications for PROMs collection. Van Hout et al. showed that adherence was 91.7% after fourteen days and 69% after three months postoperatively (n = 229). Yet after one year, only 28.8% completed follow-up, indicating poor long-term adherence [17]. Specific reasons for low compliance were not investigated, but suggestions include “successful treatment” and a high peak load of questions. Colls et al. found strong patient adherence (median adherence 79%) with a smartphone app for daily PROMs in rheumatoid arthritis, and observed a comparable decreasing trend over a 6-month period. There was a correlation between increased compliance and older age (≥ 65 years) as well as better disease control [18]. In the present study, factors influencing adherence were not specifically identified, as patients were positive they had answered all the questionnaires. However, in reality only 47% answered all four. Examining our questionnaires separately, a fairly high level of adherence can be noted: 84% pre-operatively, 93% 2 weeks postoperatively and 93% 6 weeks postoperatively. Patients received push notifications through the app and they were reminded by e-mail and eventually telephone when they did not respond to questionnaires. Without these e-mail and telephone reminders, adherence would probably have been lower. Bauer et al. reported that after 8 weeks, only 35% of patients sustained use of a smartphone app for transmitting PROMs daily [19]. Compliance in the present study was higher, and this is likely explained by the lower volume of questionnaires. Four questionnaires were sent over a 6-week period, compared to daily questionnaires for eight weeks. Retaining patient engagement remains the most challenging part of these studies. Most patients will experience few symptoms after IH surgery and they may feel as though reporting their (lack of) complaints is not worth their time. A review by Nielsen et al. suggests similar reasons for non-adherence [20]. Additionally, they suggest that technical issues reported by more than half of its included participants (n = 51) caused dropout, which was similar in our study population [20]. Better understanding of patients’ reasons for discontinuation is important for future research.

Studies using mHealth as an additional tool in surgery for patient education and information provision are widely available. Timmers et al. showed that complementing patient education using a mobile application improved surgical outcomes in terms of level of pain, quality of life and patient satisfaction [21]. Another feasibility trial tested an application to monitor recovery after robot-assisted radical prostatectomy. It showed similar outcomes regarding patient education and patient satisfaction [22]. These studies provided exercises to enhance recovery, which is something that patients in the present study specifically suggested as an improvement. Another difference is the use of day-to-day time intervals for releasing information, which could result in better outcomes compared to our study. A systematic review by Lu et al. illustrates how application-based interventions have the potential to increase patient adherence to protocols and reduce healthcare costs whilst maintaining patient satisfaction [23]. These findings strengthen our theory that an app helps to minimize healthcare consumption.

A randomized controlled trial by Armstrong et al. demonstrated that their mobile application averted in-person visits during the first 30 days after ambulatory breast reconstruction. Follow-up care using this app affected neither complication rates nor satisfaction scores. Visits were replaced by interactive options, such as uploading photographs of the surgical site, monitoring pain with a visual analogue scale (VAS) and measuring quality of recovery with a questionnaire [12]. An option to share images, as in that study, or to chat directly with a surgeon, for example, was not included in the IHAPP. Although patient engagement is greater when these interactive options are available, they are likely to increase workload and healthcare costs and as such would contradict the goals of the present study [19].

Limitations

Our clinic operated at reduced surgical capacity due to the COVID-19 pandemic during this study. This resulted in a very unstable surgical schedule with many last-minute changes and cancellations, which in turn led to the majority (n = 72) of exclusions. If not for this, the potential number of patient inclusions could have been significantly greater and inclusion targets could have been achieved much faster.

It is arguable whether the present study population is representative of a normal patient group, because the ratio of Lichtenstein to TEP repairs was 1 to 4. Typically, Lichtenstein repairs account for 5–10% of all the IH repairs in our hernia clinic. The same applies to the gender imbalance: generally 5% of the IH population is female, but only 2% of our group was. A possible explanation is the COVID-19 pandemic, which resulted in increased waiting times, ‘catch-up care’ and a different male:female ratio.

The backend of the IHAPP was not yet connected to the Electronic Patient Record (EPR), which limits storing and accessing data. Therefore, the treating physician could not see or act upon the data entered by patients. Such integration would be highly recommended for a future application.

Test–retest reliability was assessed by sending patients the same questionnaire twice within an interval of two days. Many patients misinterpreted this part of the app, thinking they had already completed this questionnaire, and thus did not complete it despite explicit instructions. Removing this test–retest assessment would positively influence adherence. Another way of determining test–retest reliability would need to be conceived.

Patients were actively reminded to complete questionnaires by the investigators. This likely had a significant influence on adherence. For future pilots it would be interesting to study patient compliance for the IHAPP without any interference by an investigator, thus relying only on the existing reminder mechanisms, in order to get a real-world impression of patient adherence.

Conclusions

The IHAPP is an innovative tool, especially as a patient information platform. Registering patient data by using this app is possible in a reliable and valid manner. However, adherence is quite low. Improvements should target increasing adherence by designing surveys to be more enticing to the patient or allowing more interaction from within the app. Increasing compatibility of the app with the EPR of the hospital will be a significant improvement. Future studies should focus on reduction of unnecessary postoperative consultations, by improving the provided information regarding the postoperative course. We assume that optimization of preoperative education will result in improved patient outcomes and that fixed follow-up will no longer be necessary.

Data availability

The datasets generated and analysed during the current study are available from the corresponding author on reasonable request.

Change history

28 March 2023

A Correction to this paper has been published: https://doi.org/10.1007/s13304-023-01494-8

References

1. Bakker WJ, van Hessen CV, Clevers GJ, Verleisdonk EJMM, Burgmans JPJ (2020) Value and patient appreciation of follow-up after endoscopic totally extraperitoneal (TEP) inguinal hernia repair. Hernia 24:1033–1040. https://doi.org/10.1007/s10029-020-02220-8

2. Roos MM, Bakker WJ, Schouten N, Voorbrood CEH, Clevers GJ, Verleisdonk EJMM, Davids PHP, Burgmans JPJ (2018) Higher recurrence rate after endoscopic totally extraperitoneal (TEP) inguinal hernia repair with ultrapro lightweight mesh: 5-year results of a randomized controlled trial (TULP-trial). Ann Surg 268:241–246. https://doi.org/10.1097/SLA.0000000000002649

3. Burgmans JPJ, Voorbrood CE, Simmermacher RK, Schouten N, Smakman N, Clevers GJ, Davids PHP, Verleisdonk EJMM, Hamaker ME, Lange JF, van Dalen T (2016) Long-term Results of a randomized double-blinded prospective trial of a lightweight (ultrapro) versus a heavyweight mesh (prolene) in laparoscopic total extraperitoneal inguinal hernia repair (TULP-trial). Ann Surg 263:862–866. https://doi.org/10.1097/SLA.0000000000001579

4. Burgmans JPJ, Schouten N, Clevers GJ, Verleisdonk EJMM, Davids PHP, Voorbrood CE, Simmermacher RK, van Dalen T (2015) Pain after totally extraperitoneal (TEP) hernia repair might fade out within a year. Hernia 19:579–585. https://doi.org/10.1007/s10029-015-1384-3

5. Bay-Nielsen M, Kehlet H, Strand L, Malmstrom J, Andersen FH, Wara P, Juul P, Callesen T, Danish Hernia Database C (2001) Quality assessment of 26,304 herniorrhaphies in Denmark: a prospective nationwide study. Lancet 358:1124–1128. https://doi.org/10.1016/S0140-6736(01)06251-1

6. Lunde AS, Lundeborg S, Lettenstrom GS, Thygesen L, Huebner J (1980) The person-number systems of Sweden, Norway, Denmark, and Israel. Vital Health Stat 2:1–59

7. Molegraaf M, Lange J, Wijsmuller A (2017) Uniformity of chronic pain assessment after inguinal hernia repair: a critical review of the literature. Eur Surg Res 58:1–19. https://doi.org/10.1159/000448706

8. Gwaltney CJ, Shields AL, Shiffman S (2008) Equivalence of electronic and paper-and-pencil administration of patient-reported outcome measures: a meta-analytic review. Value Health 11:322–333. https://doi.org/10.1111/j.1524-4733.2007.00231.x

9. Kyle-Leinhase I, Kockerling F, Jorgensen LN, Montgomery A, Gillion JF, Rodriguez JAP, Hope W, Muysoms F (2018) Comparison of hernia registries: the CORE project. Hernia 22:561–575. https://doi.org/10.1007/s10029-017-1724-6

10. Waller A, Forshaw K, Carey M, Robinson S, Kerridge R, Proietto A, Sanson-Fisher R (2015) Optimizing patient preparation and surgical experience using eHealth technology. JMIR Med Inform 3:e29. https://doi.org/10.2196/medinform.4286

11. Central Bureau of Statistics CBS (2022) Statline: Internet; access, use and personal characteristics. https://opendata.cbs.nl/statline/#/CBS/nl/dataset/84888NED/table?ts=1667055882001. Accessed 29 Oct 2022

12. Armstrong KA, Coyte PC, Brown M, Beber B, Semple JL (2017) Effect of home monitoring via mobile app on the number of in-person visits following ambulatory surgery: a randomized clinical trial. JAMA Surg 152:622–627. https://doi.org/10.1001/jamasurg.2017.0111

13. Synappz (2022) Clinicards portal. https://clinicards.info/. Accessed 10 May 2022

14. Muysoms FE, Vanlander A, Ceulemans R, Kyle-Leinhase I, Michiels M, Jacobs I, Pletinckx P, Berrevoet F (2016) A prospective, multicenter, observational study on quality of life after laparoscopic inguinal hernia repair with ProGrip laparoscopic, self-fixating mesh according to the European Registry for Abdominal Wall Hernias Quality of Life Instrument. Surgery 160:1344–1357. https://doi.org/10.1016/j.surg.2016.04.026

15. Franneby U, Gunnarsson U, Andersson M, Heuman R, Nordin P, Nyren O, Sandblom G (2008) Validation of an inguinal pain questionnaire for assessment of chronic pain after groin hernia repair. Br J Surg 95:488–493. https://doi.org/10.1002/bjs.6014

16. van Hout L, Bokkerink WJV, Ibelings MS, Vriens P (2020) Perioperative monitoring of inguinal hernia patients with a smartphone application. Hernia 24:179–185. https://doi.org/10.1007/s10029-019-02053-0

17. van Hout L, Bokkerink WJV, Vriens P (2022) Clinical feasibility of the Q1.6 inguinal hernia application: a prospective cohort study. Hernia. https://doi.org/10.1007/s10029-022-02646-2

18. Colls J, Lee YC, Xu C, Corrigan C, Lu F, Marquez-Grap G, Murray M, Suh DH, Solomon DH (2021) Patient adherence with a smartphone app for patient-reported outcomes in rheumatoid arthritis. Rheumatology (Oxford) 60:108–112. https://doi.org/10.1093/rheumatology/keaa202

19. Bauer AM, Iles-Shih M, Ghomi RH, Rue T, Grover T, Kincler N, Miller M, Katon WJ (2018) Acceptability of mHealth augmentation of collaborative care: a mixed methods pilot study. Gen Hosp Psychiatry 51:22–29. https://doi.org/10.1016/j.genhosppsych.2017.11.010

20. Nielsen AS, Kidholm K, Kayser L (2020) Patients’ reasons for non-use of digital patient-reported outcome concepts: a scoping review. Health Inform J 26:2811–2833. https://doi.org/10.1177/1460458220942649

21. Timmers T, Janssen L, van der Weegen W, Das D, Marijnissen WJ, Hannink G, van der Zwaard BC, Plat A, Thomassen B, Swen JW, Kool RB, Lambers Heerspink FO (2019) The Effect of an app for day-to-day postoperative care education on patients with total knee replacement: randomized controlled trial. JMIR Mhealth Uhealth 7:e15323. https://doi.org/10.2196/15323

22. Belarmino A, Walsh R, Alshak M, Patel N, Wu R, Hu JC (2019) Feasibility of a mobile health application to monitor recovery and patient-reported outcomes after robot-assisted radical prostatectomy. Eur Urol Oncol 2:425–428. https://doi.org/10.1016/j.euo.2018.08.016

23. Lu K, Marino NE, Russell D, Singareddy A, Zhang D, Hardi A, Kaar S, Puri V (2018) Use of short message service and smartphone applications in the management of surgical patients: a systematic review. Telemed J E Health 24:406–414. https://doi.org/10.1089/tmj.2017.0123

Acknowledgements

The authors thank Floor Davids, Niels Bax and Wouter Bakker for their efforts and contributions in the development of the IHAPP.

Funding

No funding was received for conducting this study.

Author information

Contributions

All authors contributed to the study conception and design. Material preparation, data collection and analysis were performed by RRM. The first draft of the manuscript was written by RRM and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Conflict of interest

Drs. Richtje R. Meuzelaar, drs. Floris P.J. den Hartog, dr. Egbert-Jan M.M. Verleisdonk, dr. Anandi H.W. Schiphorst and dr. Josephina P.J. Burgmans have no conflicts of interest or financial ties to disclose.

Ethical approval

This is an observational study. The local Research Ethics Committee (MEC-U) has confirmed approval of the study protocol (W20.008).

Informed consent

Informed consent was obtained from all individual participants included in the study.

Research involving human participants and/or animals

All procedures performed involving human participants were in accordance with the ethical standards of the institutional research committee and with the 1964 Helsinki Declaration and its later amendments.

Additional information

The original article has been updated due to textual errors.

About this article

Cite this article

Meuzelaar, R.R., den Hartog, F.P.J., Verleisdonk, E.J.M.M. et al. Feasibility of a smartphone application for inguinal hernia care: a prospective pilot study. Updates Surg 75, 1001–1009 (2023). https://doi.org/10.1007/s13304-023-01455-1

DOI: https://doi.org/10.1007/s13304-023-01455-1