Abstract

Several studies have explored the risk of graft dysfunction after liver transplantation (LT) in recent years. Conversely, risk factors for graft discard before or at procurement have been poorly investigated. The study aimed to identify a score to predict the risk of liver-related graft discard before transplantation. Secondary aims were to test the score for prediction of biopsy-related negative features and post-LT early graft loss. A total of 4,207 donors evaluated during the period January 2004–December 2018 were retrospectively analyzed. The group was split into a training set (n = 3,156; 75.0%) and a validation set (n = 1,051; 25.0%). The Donor Rejected Organ Pre-transplantation (DROP) Score was proposed: − 2.68 + (2.14 if Regional Share) + (0.03*age) + (0.04*weight) − (0.03*height) + (0.29 if diabetes) + (1.65 if anti-HCV-positive) + (0.27 if anti-HBV core-positive) − (0.69 if hypotension) + (0.09*creatinine) + (0.38*log10AST) + (0.34*log10ALT) + (0.06*total bilirubin). At validation, the DROP Score showed the best AUCs for the prediction of liver-related graft discard (0.82; p < 0.001) and macrovesicular steatosis ≥ 30% (0.71; p < 0.001). Patients exceeding the DROP 90th centile had the worst post-LT results (3-month graft survival: 82.8%; log-rank p = 0.024). The DROP score represents a valuable tool to predict the risk of liver function-related graft discard, steatosis, and early post-LT graft survival rates. Studies focused on the validation of this score in other geographical settings are required.

Introduction

Liver transplantation (LT) is the best therapeutic strategy for managing more than 50 pathologies causing end-stage liver disease [1]. One of the main goals of transplant physicians is to maximize the pool of available liver grafts to increase the number of transplants and reduce the number of LT candidates dying on the waiting list [2].

Therefore, the current focus is on identifying predictive criteria to guide the safe use of liver grafts [3], since inappropriate graft selection might generate fatal consequences for the recipient [4].

In recent years, many studies have focused on the risk of early graft dysfunction after transplantation [5,6,7,8,9], while little interest has been shown in developing prognosticators of poor organ quality that are available before procurement [3].

This study aimed at identifying and validating a score to predict the risk of liver-related graft discard from donors after brain death (DBD). The secondary aim was to test the score for prediction of biopsy-related features and graft loss at 3 months after transplantation.

Materials and methods

Patients

We performed a retrospective analysis of 4,372 DBDs evaluated for liver graft donation from January 2004 to December 2018. Four Italian centers joined the project: University of Pisa, Italy (n = 2,694), and the three University Centers of Rome (n = 1,678). Only DBDs offered for a primary transplant were included. DBDs with missing clinical information (n = 17) and livers used for secondary (n = 118) or ABO-incompatible transplants (n = 30) were excluded from analysis, so that the final sample numbered 4,207 cases.

This group was randomly split, using a random number generator, into a Training Set of 3,156 donors (75.0%) and a Validation Set of 1,051 donors (25.0%). A flowchart of the selection process is shown in Supplementary Fig. 1.
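The random split described above can be illustrated with a minimal sketch. The paper does not report the software routine or seed used for randomization, so the function name `split_donors`, the seed, and the integer donor identifiers are assumptions made only for illustration.

```python
import random

def split_donors(donor_ids, n_train, seed=0):
    """Shuffle donor identifiers and split them into training and validation sets."""
    rng = random.Random(seed)   # seed is an assumption; the paper does not report one
    shuffled = list(donor_ids)
    rng.shuffle(shuffled)
    return shuffled[:n_train], shuffled[n_train:]

donor_ids = range(4207)         # hypothetical identifiers for the 4,207 donors
training, validation = split_donors(donor_ids, n_train=3156)
print(len(training), len(validation))   # 3156 1051
```

Fixing the training size at 3,156 rather than rounding 75% of 4,207 reproduces the set sizes reported in the text.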

The Italian national organ procurement and allocation system

In Italy, liver donors are allocated on a regional basis except for urgencies (i.e., fulminant hepatic failures), pediatric recipients, and patients with a model for end-stage liver disease score ≥ 29. If a local center declines a graft before or during procurement surgery, it is offered at the national level via the Italian National Center for Transplantation Office. Decline criteria vary across centers, and liver graft biopsy is left to the discretion of the surgical procurement team. With the intent to avoid center-related biases, only donors that were declined both locally and nationally were considered in the present study.

Definitions

We categorized the causes of graft discarding in two groups, namely liver-related versus liver-unrelated. Liver-related reasons for discard included any of the following: pre-procurement liver blood tests and/or imaging; gross anatomy; procurement histology, and poor perfusion. Liver-unrelated reasons for graft discard were donor tumors, donor infections, and pre-procurement donor cardiac arrest.

A liver graft biopsy was performed on demand, depending on surgical evaluation at procurement. The biopsy was taken before organ procurement. Biopsy review was not centralized, but performed by the different Pathology services on a rota basis.

Donor hypotension was defined as any episode of mean arterial pressure < 60 mm Hg for more than 1 h during the intensive care unit (ICU) stay.

The vasoactive-inotropic score was calculated according to the formula:

dopamine (mcg/Kg/min) + dobutamine (mcg/Kg/min) + vasopressin (U/Kg/min × 10,000) + noradrenaline (mcg/Kg/min × 100) + adrenaline (mcg/Kg/min × 100).
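As a worked illustration of the formula above, the following sketch computes the score for a hypothetical donor. The function and parameter names are assumptions; the weights are those stated in the formula, with doses in mcg/kg/min except vasopressin, which is in U/kg/min.

```python
def vasoactive_inotropic_score(dopamine=0.0, dobutamine=0.0, vasopressin=0.0,
                               noradrenaline=0.0, adrenaline=0.0):
    """Vasoactive-inotropic score as written in the text.

    Doses are in mcg/kg/min, except vasopressin, which is in U/kg/min.
    """
    return (dopamine
            + dobutamine
            + 10_000 * vasopressin
            + 100 * noradrenaline
            + 100 * adrenaline)

# Hypothetical donor on dopamine 5 mcg/kg/min and noradrenaline 0.25 mcg/kg/min
example_vis = vasoactive_inotropic_score(dopamine=5.0, noradrenaline=0.25)
print(example_vis)   # 30.0
```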

Statistical analysis

Continuous variables were reported as medians and inter-quartile ranges (IQR). Dummy variables were reported as numbers and percentages. We used the maximum likelihood estimation method for managing missing data [10]. For model construction, missing data were always < 5%. Mann–Whitney U test and Fisher's exact test were used to compare continuous and categorical variables, respectively.

A competing-risk analysis using a cause-specific logistic regression model was constructed to identify the risk factors for liver-related graft discard. The competing event (i.e., non-liver-related graft discard) was censored in the model. The analysis was performed on the Training Set data. Thirty-one variables were initially tested in a univariable model. All the covariates with a p value < 0.20 were used for the multivariable model. Odds ratios (OR) and 95% confidence intervals (95%CI) were reported.

The model's accuracy was assessed through c-statistic analysis, with the intent to evaluate its ability to predict a liver-related discarded graft. In the Training Set, validation was eventually performed using a bootstrap approach based on 100 generated samples deriving from the original set.

Areas under the curve (AUCs) and 95%CIs were reported. The model's accuracy was compared in both sets with previous scores, namely the Discard Risk Index (DSRI) [3], the donor body mass index (BMI), and the donor age. The validation in the Validation Set tested sensitivity, specificity, and diagnostic odds ratio (DOR) at different thresholds of the identified score. Validation sub-analyses were done to test the score for predicting macrovesicular steatosis (MaS) > 30%, fibrosis, and necrosis in donors with available liver graft histology. The Akaike information criterion (AIC) was calculated for the different scores; the lowest AIC value was associated with the best discriminatory ability for the given score [11].
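The accuracy measures named above can be sketched in plain Python. The study computed them in SPSS, so the code below is only an illustration on made-up data: the function names, the toy scores, and the threshold are assumptions. The AUC is obtained as the proportion of discarded/non-discarded pairs in which the discarded graft has the higher score (ties count one half), and the DOR as (TP × TN)/(FP × FN).

```python
def auc(scores, labels):
    """c-statistic: probability that a positive case outranks a negative one."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

def threshold_metrics(scores, labels, threshold):
    """Sensitivity, specificity and diagnostic odds ratio at a given cut-off."""
    tp = sum(1 for s, y in zip(scores, labels) if y == 1 and s >= threshold)
    fn = sum(1 for s, y in zip(scores, labels) if y == 1 and s < threshold)
    fp = sum(1 for s, y in zip(scores, labels) if y == 0 and s >= threshold)
    tn = sum(1 for s, y in zip(scores, labels) if y == 0 and s < threshold)
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    dor = (tp * tn) / (fp * fn) if fp and fn else float("inf")
    return sensitivity, specificity, dor

# Made-up scores; label 1 = liver-related discard, 0 = graft used
toy_scores = [-1.5, -0.9, -0.2, 0.4, 0.8, 1.1, 1.6, 2.0]
toy_labels = [0, 0, 0, 1, 0, 1, 1, 1]

toy_auc = auc(toy_scores, toy_labels)
toy_sens, toy_spec, toy_dor = threshold_metrics(toy_scores, toy_labels, threshold=0.5)
print(toy_auc, toy_sens, toy_spec, toy_dor)   # 0.9375 0.75 0.75 9.0
```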

Survival probabilities were estimated using the Kaplan–Meier method. Survival rates were compared using the log-rank test. A p value < 0.05 was considered statistically significant. We used the SPSS statistical package version 24.0 (SPSS Inc., Chicago, IL, USA).
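For readers unfamiliar with the Kaplan–Meier method, a minimal product-limit sketch follows. It is not the SPSS routine used in the study; the function name and the follow-up data are hypothetical and serve only to show how censored observations leave the risk set without lowering the survival estimate.

```python
def kaplan_meier(times, events):
    """Return [(time, survival)] at each distinct time with at least one event.

    times: follow-up in days; events: 1 = graft loss, 0 = censored.
    """
    at_risk = len(times)
    survival = 1.0
    curve = []
    for t in sorted(set(times)):
        losses = sum(1 for ti, e in zip(times, events) if ti == t and e == 1)
        leaving = sum(1 for ti in times if ti == t)   # events plus censored at t
        if losses:
            survival *= 1 - losses / at_risk
            curve.append((t, survival))
        at_risk -= leaving
    return curve

# Hypothetical follow-up of eight grafts, censored at 90 days
followup_days = [10, 20, 20, 45, 90, 90, 90, 90]
graft_lost = [1, 1, 0, 1, 0, 0, 0, 0]
km_curve = kaplan_meier(followup_days, graft_lost)
for day, surv in km_curve:
    print(day, round(surv, 3))
```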

Results

The characteristics of the entire population, Training and Validation Sets are reported in Table 1. Overall, 2,642/4,207 (62.8%) grafts were considered eligible for LT and 1565 (37.2%) were discarded. Liver-related issues were the reason for graft discard in 1254 cases (29.8%) versus liver-unrelated in 311 (7.4%). In the liver-related group, the most common reasons for declining a graft were: poor histology (n = 660; 15.7%); pre-procurement liver function tests and/or imaging (n = 310; 7.4%); poor macroscopic aspect of the organ at surgery (n = 216; 5.1%); and poor perfusion during procurement (n = 68; 1.6%).

Among the liver-unrelated causes of discard, the most frequent were tumors (n = 111; 2.6%), bacterial infections (n = 66; 1.6%), and pre-procurement cardiac arrest (n = 17; 0.4%).

Fig. 1 reports the rates of discarded and used grafts throughout the study period, together with the rates of liver-related versus liver-unrelated causes of discard and the median donor age.

As reported in Table 1, the median donor age of the entire donor population was 66 years (IQR: 51–76). Anti-hepatitis B virus (HBV) core-positive and anti-HCV-positive donors were reported in 694 (16.5%) and 140 (3.3%) cases, respectively. Donor death was due to cerebro-vascular accident in 2991 cases (71.1%); trauma in 889 (21.1%); anoxia in 206 (4.9%), and other causes in 113 (2.7%). The most frequent donor co-morbidities were arterial hypertension in 2083 cases (49.5%); cardiac disease of any origin in 1154 (27.4%); dyslipidemia in 596 (14.2%), and diabetes mellitus type-II (DM2) in 520 (12.4%). AST and ALT median peak values were 38 (IQR: 24–71) and 29 IU/L (IQR: 18–58), respectively. The median total bilirubin peak was 0.8 mg/dL (IQR: 0.5–1.1). Liver graft histology was obtained in 1975 (46.9%) donors.

Risk factors for liver-related graft discard

Table 2 illustrates the results of the uni- and multivariable logistic regression analyses for risk factors of liver-related graft discard. At multivariable analysis, the strongest risk factors were regional share (OR = 8.46; p < 0.001); anti-HCV-positive donor (OR = 5.19; p < 0.001); weight (OR = 1.04; p < 0.001), and age (OR = 1.03; p < 0.001). Anti-HBV core-positive donor (OR = 1.31; p = 0.03) and DM2 (OR = 1.33; p = 0.04) were also risk factors. Conversely, previous hypotension episodes (OR = 0.50; p < 0.001) and donor height (OR = 0.97; p < 0.001) showed a protective effect against liver-related graft discard.

Peak values of serum creatinine (OR = 1.10; p = 0.055), AST (OR = 1.46; p = 0.057), total bilirubin (OR = 1.06; p = 0.059), and ALT (OR = 1.40; p = 0.07) fell just short of statistical significance.

Using the beta-coefficients derived from the multivariable model, we constructed the following score:

Donor Rejected Organ Pre-transplantation (DROP) Score = − 2.68 + (2.14 if Regional Share) + (0.03*age years) + (0.04*weight kg) − (0.03*height cm) + (0.29 if DM2) + (1.65 if anti-HCV positive) + (0.27 if anti-HBV core positive) − (0.69 if hypotension episode) + (0.09*serum creatinine peak) + (0.38*log10 AST peak) + (0.34*log10 ALT peak) + (0.06*total bilirubin peak).
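The DROP formula can be transcribed directly into a small function, sketched below. The coefficients are those reported in the formula; the function and parameter names are assumptions, as are the laboratory units (AST and ALT in IU/L and bilirubin in mg/dL follow the Results, while mg/dL for creatinine is assumed because the text does not state it). The example donor is hypothetical.

```python
import math

def drop_score(regional_share, age_years, weight_kg, height_cm, dm2,
               anti_hcv_positive, anti_hbc_positive, hypotension,
               creatinine_peak, ast_peak, alt_peak, bilirubin_peak):
    """DROP score; yes/no items are passed as True/False (or 1/0)."""
    return (-2.68
            + 2.14 * regional_share
            + 0.03 * age_years
            + 0.04 * weight_kg
            - 0.03 * height_cm
            + 0.29 * dm2
            + 1.65 * anti_hcv_positive
            + 0.27 * anti_hbc_positive
            - 0.69 * hypotension
            + 0.09 * creatinine_peak          # assumed mg/dL
            + 0.38 * math.log10(ast_peak)     # IU/L
            + 0.34 * math.log10(alt_peak)     # IU/L
            + 0.06 * bilirubin_peak)          # mg/dL

# Hypothetical regional donor with values close to the cohort medians
example_drop = drop_score(regional_share=True, age_years=66, weight_kg=75,
                          height_cm=170, dm2=False, anti_hcv_positive=False,
                          anti_hbc_positive=False, hypotension=False,
                          creatinine_peak=1.0, ast_peak=38, alt_peak=29,
                          bilirubin_peak=0.8)
print(round(example_drop, 2))   # 0.58
```

Because AST and ALT enter through a base-10 logarithm, both values must be strictly positive.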

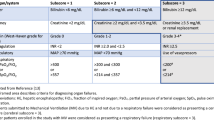

Validation for the risk of liver-related graft discard

The DROP Score was tested in both the Training and Validation Sets for prediction of the risk of liver-related graft discard. DROP showed a higher AUC (0.83 and 0.82; p < 0.001) than the other tested scores in both validation processes. For instance, DSRI AUC was 0.66–0.68, while donor BMI and donor age AUCs were 0.62 and 0.59–0.61, respectively (Table 3). DROP also showed the smallest AIC values. In the Training Set validation process, DROP AIC was 2,877.87 versus 3,727.60 for DSRI. In the Validation Set validation process, its AIC was 976.62 versus 1,253.56.

After stratification of DROP scores in deciles, different thresholds were investigated. A value corresponding to the 50th centile was identified as a low DROP value. A value corresponding to the 90th centile (hence, high DROP) showed the best DOR (33.48) with a sensitivity of 26.4% and a specificity of 97.1% (Table 3). Supplementary Fig. 2 illustrates the percentage of DBDs with low versus high DROP scores throughout the study period.

Validation for the risk of MaS, fibrosis, and necrosis

The DROP was tested in both the Training and Validation Sets to predict macrovesicular steatosis (MaS) > 30% and any degree of fibrosis and necrosis. In both sets, DROP showed better AUC and AIC values than the other tested scores (Table 4). Supplementary Fig. 3 shows a direct correlation between higher DROP scores and the severity of histology-proven graft lesions.

Post-transplant graft loss evaluation

The recipients of the Training and Validation Sets (n = 2,642) were stratified in four sub-classes according to the following thresholds of DROP: <50th centile (<− 0.79); 50th–75th centile (− 0.79 to 0.66); 75th–90th centile (0.66–1.32); and >90th centile (>1.32). At survival analyses, patients above the 90th centile of DROP showed worse 3-month graft survival than those below the 50th centile (82.8 vs. 91.3%; log-rank p = 0.024) (Fig. 2). Supplementary Fig. 4 reports a sub-analysis in which the transplanted patients were dichotomized into two time periods: 2004–2010 and 2011–2018. Interestingly, the differences among the DROP classes disappeared in the more recent period.
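The four DROP classes can be assigned with a short helper, sketched here. The cut-offs are those reported in the text; the function name, the class labels, and the handling of scores falling exactly on a cut-off are assumptions, since the text does not specify which class a boundary value belongs to.

```python
def drop_class(score):
    """Assign a DROP score to one of the four centile classes used in the text."""
    if score < -0.79:
        return "<50th centile"
    if score <= 0.66:          # boundary handling is an assumption
        return "50th-75th centile"
    if score <= 1.32:          # boundary handling is an assumption
        return "75th-90th centile"
    return ">90th centile"

example_classes = [drop_class(s) for s in (-1.20, 0.58, 1.00, 1.75)]
print(example_classes)
```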

Discussion

The present study illustrates a new score for the prediction of the risk of DBD liver-related graft discard. To the best of our knowledge, DROP is the first score developed with this specific aim. Several scores reported previously in the international literature have focused more on donor-specific features [3], or on a combination of donor- and transplant-related variables [5,6,7,8,9, 12, 13]. Among them, the Donor Risk Index (DRI) has been internationally recognized as a valuable tool for liver graft selection [12]. However, broad implementation of DRI is limited by its sensitivity to the geographical setting where it was derived [14], and by its inclusion of variables (i.e., cold ischemia time [CIT]) that can be obtained only at transplantation [15]. A recent European-derived score, namely the Euro-Transplant-DRI (ET-DRI), recalibrated the DRI score coefficients according to the European epidemiology, but this score also includes CIT and can be obtained only after transplantation [13].

A score focusing on the risk of liver graft discarding—namely, the discard risk index (DSRI)—has recently been generated from a large donor population (n = 72,297) and based entirely on pre-transplant variables [3]. However, DSRI includes procured donors only (i.e., those undergoing procurement surgery) and excludes discarded donors as per pre-procurement imaging and/or blood test results [3]. A further limitation to DSRI might be that no distinction between liver-related and unrelated causes of discard has been made [3].

We developed the DROP score to address the above limitations. Created on an extensive, interregional experience (n = 3,156 DBDs) and validated on a Validation Set of 1,051 donors, DROP was entirely based on variables available at the time of donor reporting and included not only donors discarded after intra-operative evaluation but also those declined before procurement as per their clinical chart data. Since graft discard criteria may vary greatly across transplant centers due to local experience and waitlist dynamics, the current study included only liver grafts that were declined at a national level. However, this might not have entirely offset the bias of initial graft discard on the eventual decline by other centers, as highlighted in the international literature [16, 17]. Moreover, the study spans 15 years (2004–2018), and specific time-dependent biases (i.e., increasing experience with extended criteria donors or the introduction of ex situ machine perfusion) might have changed transplant centers' policies [18].

Furthermore, the primary aim of DROP was the prediction of liver function-related discard. Thus, we reduced the impact of biases related to donors discarded for other causes like tumors, bacterial infections, or organizational issues.

The DROP score was built on 12 variables. In some cases, the reason why the selected variables were significant was conceptually logical. For example, donor age, donor weight, and history of DM2 might portend more severe MaS. In agreement with this finding, previous reports have confirmed their role in predicting a higher risk of graft loss [19] and biliary complications [20]. Donor height might be another surrogate of graft quality and play a role inverse to that of donor weight: in other words, the taller the donor, the lower the risk of graft discard. This observation is consistent with the results observed in the DRI and ET-DRI studies [12, 13].

Some variables included in the model are historical. Until the introduction of direct-acting antivirals, donor HCV-positive status was a strong surrogate of underlying liver disease, fibrosis, and inflammation [21]. Several studies have reported the use of HCV-positive grafts, mainly from RNA-negative donors [22, 23]. The weight of this variable will likely disappear in the coming years. Similarly, anti-HBV core positivity might be a surrogate of poor graft quality. Previous studies highlighted a negative impact of donor anti-HBc positivity on post-transplant survival [24, 25]. Again, the role of anti-HBc positivity is anticipated to decline in the coming years, requiring recalibration of the score.

Higher peak values of AST, ALT, and total bilirubin might portend more severe ischemia–reperfusion injury or graft necrosis, or be the result of donor hemodynamic instability. Likewise, serum creatinine is sensitive to hemodynamics, fluid, and electrolyte balance and might be strictly correlated with liver graft quality. The role of all these variables on transplant outcome has already been substantiated to a considerable extent [3, 12, 13].

The role of other variables included in the DROP is less clear. For example, regional sharing turned out to be a risk factor compared with extra-regional sharing. This finding seems somewhat contradictory to other scores like DRI, where the greater the distance, the higher the risk of poor organ quality [12]. However, DROP and DRI have been developed with different aims: DRI focuses on the risk of poor post-transplant survival [12], and donors procured far from the transplant center have longer CIT and poorer results [26]. Conversely, DROP was developed to investigate the risk of liver-related graft discard. The negative role of regional sharing on liver graft decline might be due to similar evaluation criteria across regional centers, while extra-regional donors are usually accepted for priority patients (i.e., national urgencies). In other words, centers are more willing to accept regional donors on the basis of chart data and then decline the livers according to biopsy or gross appearance. At the same time, a more accurate selection takes place when an extra-regional donor is offered, with the intent to avoid unnecessary travel, higher costs, and loss of human resources.

A paradoxical result of the score is the protective role of donor hypotension episodes. Three possible explanations might account for this result. First, donors with previous hypotension episodes might require more accurate hemodynamic control during the agonic phase with resulting improved organ perfusion [27]. Second, donors with prolonged hypotension episodes typically show generalized organ failure and are excluded from the donation, while donors with hypotension episodes that are still considered for donation are intensively managed. Finally, a preconditioning role of hypotension cannot be excluded in these donors, thus minimizing the impact of ischemia–reperfusion injury [28].

A relevant aspect of the score was its ability to predict the results of the graft histology. For example, the AUC for the diagnosis of MaS ≥ 30% was 0.71 in the Validation Set. In other terms, the score identified seven out of ten donors with MaS < 30% (true negative) or ≥ 30% (true positive). The AIC was also the best among the different tested scores. AIC estimates the relative amount of information lost by a given model: the smaller the AIC value, the smaller the loss of information and the better the quality of the model. It is fascinating to note that a mathematical score that can be obtained with data available at the time of donor reporting can predict the risk of MaS with diagnostic performance similar to that of gross evaluation by expert procurement surgeons or radiological examinations performable only during procurement [29, 30].
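The AIC comparison described above can be made concrete with the standard definition AIC = 2k − 2 ln L, where k is the number of estimated parameters and L the likelihood of the model. The sketch below applies it to binary outcomes with predicted probabilities; how SPSS computed the AIC values reported in the study is not stated, so the function names and the toy data are assumptions.

```python
import math

def log_likelihood(outcomes, probabilities):
    """Bernoulli log-likelihood of observed 0/1 outcomes given predicted probabilities."""
    return sum(math.log(p) if y == 1 else math.log(1 - p)
               for y, p in zip(outcomes, probabilities))

def aic(outcomes, probabilities, n_parameters):
    """Akaike information criterion: 2k - 2 ln L (smaller is better)."""
    return 2 * n_parameters - 2 * log_likelihood(outcomes, probabilities)

# Toy data: 1 = MaS >= 30%, 0 = MaS < 30%
toy_outcomes = [1, 0, 1, 0]
informative = [0.9, 0.1, 0.8, 0.2]     # predictions that track the outcomes
uninformative = [0.5, 0.5, 0.5, 0.5]   # predictions that carry no information

aic_informative = aic(toy_outcomes, informative, n_parameters=1)
aic_uninformative = aic(toy_outcomes, uninformative, n_parameters=1)
print(round(aic_informative, 2), round(aic_uninformative, 2))
```

With the same number of parameters, the model whose predictions track the outcomes more closely has the smaller AIC.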

The practical use of this score should have several beneficial effects, mainly by enabling more appropriate donor–recipient matching. In Italy, in fact, the transplant centers are not strictly bound to a MELD-based allocation system, so that in a percentage of cases marginal offers are allocated to fitter recipients and vice versa [31]. The effect of this improved allocation process can already be inferred from the results of Supplementary Fig. 4, in which acceptable 3-month results were observed in recent years even when grafts with a high DROP value were transplanted.

Further improvement of the donor–recipient match, enabled by the early identification during the donation process of grafts with a relevant risk of discard, should make it possible to allocate them only to specific sub-groups of recipients who may benefit from receiving even more marginal grafts (i.e., advanced HCC, colorectal metastases).

The study presents some limitations. First, it is based on retrospective and multicenter data. Nevertheless, these biases are shared by all the studies focusing on this topic [3, 12, 13]. Unfortunately, the retrospective nature of the study limited our ability to collect all the required information about important issues like the results of the pre-donation imaging. Future studies aimed at recalibrating the score should add these parameters. Second, the decision to accept an organ is often dependent on specific prerogatives of the center. To overcome this limit, we tried to minimize center-specific biases by including only organs discarded on a national basis.

Third, the liver biopsies performed before organ procurement were not evaluated by the same pathologists, and an interrater variability assessment for macrovesicular steatosis should be considered. Unfortunately, a centralized review of the biopsies was not possible due to the retrospective nature of the study.

Lastly, we are not able to assert whether the two Italian regions considered in the present study had a different rate of graft discard compared with the national mean value. This might mainly affect the role of the variable “regional sharing” as a risk factor for liver-related graft discard. Further studies involving more centers are required to clarify this aspect.

In conclusion, the DROP score might be a useful tool to predict the risk of liver-related graft discard. The score is also able to predict several histological variables like steatosis, fibrosis, and necrosis. More studies aimed at investigating this score in other geographical settings are required.

Change history

21 July 2022

Missing Open Access funding information has been added in the Funding Note.

Abbreviations

- AIC: Akaike information criterion
- AUC: Area under the curve
- BMI: Body mass index
- CI: Confidence intervals
- CIT: Cold ischemia time
- DBD: Donor after brain death
- DM2: Diabetes mellitus type-II
- DOR: Diagnostic odds ratio
- DRI: Donor Risk Index
- DROP: Donor rejected organ pre-transplantation
- DSRI: Discard Risk Index
- ET-DRI: Euro-transplant-DRI
- HBV: Hepatitis B virus
- HCV: Hepatitis C virus
- IQR: Interquartile ranges
- LT: Liver transplantation
- MaS: Macrovesicular steatosis
- OR: Odds ratio

References

Adam R, Karam V, Delvart V et al (2012) Evolution of indications and results of liver transplantation in Europe. A report from the European Liver Transplant Registry (ELTR). J Hepatol 57(3):675–688

Feng S, Lai JC (2014) Expanded criteria donors. Clin Liver Dis 18(3):633–649

Rana A, Sigireddi RR, Halazun KJ et al (2018) Predicting liver allograft discard: the Discard Risk Index. Transplantation 102:1520–1529

Pagano D, Barbàra M, Seidita A et al (2020) Impact of extended-criteria donor liver grafts on benchmark metrics of clinical outcome after liver transplantation: a single center experience. Transplant Proc 52:1588–1592

Olthoff KM, Kulik L, Samstein B et al (2010) Validation of a current definition of early allograft dysfunction in liver transplant recipients and analysis of risk factors. Liver Transpl 16:943–949

Rana A, Hardy MA, Halazun KJ et al (2008) Survival outcomes following liver transplantation (SOFT) score: a novel method to predict patient survival following liver transplantation. Am J Transplant 8:2537–2546

Avolio AW, Cillo U, Salizzoni M et al (2011) Balancing donor and recipient risk factors in liver transplantation: the value of D-MELD with particular reference to HCV recipients. Am J Transplant 11:2724–2736

Avolio AW, Franco A, Schlegel A et al (2020) How to identify patients with the need for early liver re-transplant? Development and validation of a comprehensive model to predict Early Allograft Failure. JAMA Surg 155:e204095

Schlegel A, Kalisvaart M, Scalera I et al (2018) The UK DCD Risk Score: a new proposal to define futility in donation-after-circulatory-death liver transplantation. J Hepatol 68:456–464

Wagner B, Smith TS (2010) Missing data analyses. J Am Coll Surg 211:435

Stone M (1998) Akaike’s criteria. In: Armitage P, Colton T (eds) Encyclopedia of Biostatistics. Wiley, Chichester, pp 123–124

Feng S, Goodrich NP, Bragg-Gresham JL et al (2006) Characteristics associated with liver graft failure: the concept of a donor risk index. Am J Transplant 6:783–790

Braat AE, Blok JJ, Putter H et al (2012) The Eurotransplant donor risk index in liver transplantation: ET-DRI. Am J Transplant 12:2789–2796

Flores A, Asrani SK (2017) The donor risk index: a decade of experience. Liver Transpl 23:1216–1225

Ghinolfi D, Lai Q, De Simone P (2018) Donor diabetes and prolonged cold ischemia time increase the risk of graft failure after liver transplant: should we need a redefinition of the donor risk index? Dig Liver Dis 50:100–101

Halazun KJ, Quillin RC, Rosenblatt R et al (2017) Expanding the margins: high volume utilization of marginal liver grafts among >2000 liver transplants at a single institution. Ann Surg 266:441–449

Marcon F, Schlegel A, Bartlett DC et al (2018) Utilization of declined liver grafts yields comparable transplant outcomes and previous decline should not be a deterrent to graft use. Transplantation 102:e211–e218

Ghinolfi D, Lai Q, Dondossola D et al (2020) Machine perfusions in liver transplantation: The evidence-based position paper of the Italian society of organ and tissue transplantation. Liver Transpl 26:1298–1315

Ghinolfi D, Lai Q, Pezzati D, De Simone P, Rreka E, Filipponi F (2018) Use of elderly donors in liver transplantation: a paired-match analysis at a single center. Ann Surg 268:325–331

Ghinolfi D, De Simone P, Lai Q et al (2016) Risk analysis of ischemic-type biliary lesions after liver transplant using octogenarian donors. Liver Transpl 22:588–598

Prakash K, Ramirez-Sanchez C, Ramirez SI et al (2020) Post-transplant survey to assess patient experiences with donor-derived HCV infection. Transpl Infect Dis 22:e13402

Cotter TG, Aronsohn A, Reddy KG, Charlton M (2021) Liver transplantation of HCV-viremic donors into HCV-negative recipients in the USA: increasing frequency with profound geographic variation. Transplantation 105:1285–1290

Cotter TG, Paul S, Sandıkçı B et al (2019) Increasing utilization and excellent initial outcomes following liver transplant of hepatitis C virus (HCV)-viremic donors into HCV-negative recipients: outcomes following liver transplant of HCV-viremic donors. Hepatology 69:2381–2395

Lai Q, Molinaro A, Spoletini G et al (2011) Impact of anti-hepatitis B core-positive donors in liver transplantation: a survival analysis. Transplant Proc 43:274–276

Angelico M, Nardi A, Marianelli T et al (2013) Hepatitis B-core antibody positive donors in liver transplantation and their impact on graft survival: evidence from the Liver Match cohort study. J Hepatol 58:715–723

Totsuka E, Fung JJ, Lee MC et al (2002) Influence of cold ischemia time and graft transport distance on postoperative outcome in human liver transplantation. Surg Today 32:792–799

Plurad DS, Bricker S, Neville A, Bongard F, Putnam B (2012) Arginine vasopressin significantly increases the rate of successful organ procurement in potential donors. Am J Surg 204:856–860

Robertson FP, Magill LJ, Wright GP, Fuller B, Davidson BR (2016) A systematic review and meta-analysis of donor ischaemic preconditioning in liver transplantation. Transpl Int 29:1147–1154

Yersiz H, Lee C, Kaldas FM et al (2013) Assessment of hepatic steatosis by transplant surgeon and expert pathologist: a prospective, double-blind evaluation of 201 donor livers. Liver Transpl 19:437–449

Golse N, Cosse C, Allard MA et al (2019) Evaluation of a micro-spectrometer for the real-time assessment of liver graft with mild-to-moderate macrosteatosis: a proof of concept study. J Hepatol 70:423–430

Cillo U, Burra P, Mazzaferro V et al (2015) A Multistep, Consensus-Based Approach to Organ Allocation in Liver Transplantation: Toward a “Blended Principle Model.” Am J Transplant 15:2552–2561

Funding

Open access funding provided by Alma Mater Studiorum - Università di Bologna within the CRUI-CARE Agreement.

Author information

Contributions

QL and PDS contributed to conception and design of the study; QL, DG, AWA, TMM, GM, FM, FF, MP, ZLL, RA, AF, and CQ contributed to acquisition of data; QL and PDS analyzed and interpreted the data; QL drafted the article; AWA, TMM, GT, SA, MR, and PDS critically revised the manuscript; and all authors approved the final version.

Ethics declarations

Conflict of interest

The authors have no conflict of interest to declare.

Ethical approval

This is a retrospective study which has been conducted in accordance with the ethical standards laid down in the 1964 Helsinki Declaration.

Research involving human participants and/or animals

A study-specific approval was obtained from the local ethical committee of Sapienza University of Rome Policlinico Umberto I of Rome (leading center of the study).

Informed consent

The authors obtained informed consent at the time of transplantation from all the participants of the study for the treatment of their clinical data.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Lai, Q., Ghinolfi, D., Avolio, A.W. et al. Proposal and validation of a liver graft discard score for liver transplantation from deceased donors: a multicenter Italian study. Updates Surg 74, 491–500 (2022). https://doi.org/10.1007/s13304-022-01262-0

DOI: https://doi.org/10.1007/s13304-022-01262-0