Abstract

Manual contouring of organs at risk (OAR) is time-consuming and subject to inter-observer variability. AI-based auto-contouring has been proposed as a solution to these problems, provided it can produce clinically acceptable results. This study investigated the performance of multiple AI-based auto-contouring systems in segmenting different OARs. Auto-contouring was performed with seven AI-based segmentation systems (Radiotherapy AI, Limbus AI versions 1.5 and 1.6, Therapanacea, MIM, Siemens AI-Rad Companion and RadFormation) on a total of 42 clinical cases covering various anatomical sites. The volumetric and surface Dice similarity coefficients and the maximum Hausdorff distance (HD) between the expert’s contours and the automated contours were calculated to evaluate performance. Radiotherapy AI showed better performance than the other software for most structures tested in head and neck and brain cases. No single software showed overall superior performance in lung, breast, pelvis and abdomen cases. Each tested AI system produced contours of organs at risk comparable to the expert’s contours, which could potentially be used clinically. Reduced performance of the AI systems was found for small and complex anatomical structures, showing that it is still essential to review each AI-generated contour before clinical use. This study has also demonstrated a method of comparing contouring software options which could be replicated in clinics or used for ongoing quality assurance of purchased systems.

Introduction

To create a patient-specific radiotherapy plan, radiation oncologists (ROs) manually contour the tumour or target region and the organs at risk (OARs) on the patient’s computed tomography (CT) or magnetic resonance (MR) images. Accurate contours are essential, as inaccurate contours can affect the outcome of treatment. Manual contouring is time-consuming, and the time it takes varies with the professional’s ability and knowledge; completing the contours for one patient can take several hours [4]. Previous studies found that manual contouring can take up to 3 h in head and neck intensity-modulated radiotherapy (IMRT) planning [9].

Lengthy contouring can also cause noticeable delays in treatment, which may lead to poorer treatment outcomes [4]. A previous study found that increased waiting time for radiotherapy can increase the risk of local recurrence, which can translate into decreased overall survival in some clinical situations [6].

Additionally, contouring suffers from large inter- and intra-observer variability [9, 12, 17, 20]. A considerable mean volume variation of about 50% has been found in parotid gland delineation [9]. A study of inter-observer and inter-institutional variability in target and OAR contouring for breast radiotherapy planning found that the overlap between manually contoured structures could be as low as 10%, and that the volumes of manually contoured structures varied with standard deviations of up to 60% [17]. Inter-observer variation has also been found in radiotherapy planning for other anatomical sites, such as cervical cancer [12] and oral cavity cancer [20], and has been shown to have a dosimetric impact in radiation therapy planning [17].

Auto-segmentation has the potential to replace manual contouring. Auto-contouring techniques have developed according to the algorithms’ capability to use prior knowledge. Early auto-contouring techniques had no or minimal capability to use prior knowledge because of limited computing power and the limited availability of prior segmentation data. These were low-level segmentation approaches such as intensity thresholding, region growing and heuristic edge detection [4]. As computing power increased and much more prior knowledge became available, auto-contouring advanced rapidly, giving rise to atlas-based auto-contouring and deep-learning auto-segmentation, which differ in the amount of prior knowledge they use.
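To make the low-level approaches above concrete, the following Python sketch applies intensity thresholding and 4-connected region growing to a toy 2D image. The function names, the intensity window and the toy image are illustrative assumptions; the sketch does not reproduce any system evaluated in this study.

```python
from collections import deque

import numpy as np


def threshold_segment(image, lower, upper):
    """Intensity thresholding: keep every pixel whose value lies in [lower, upper]."""
    image = np.asarray(image)
    return (image >= lower) & (image <= upper)


def region_grow(image, seed, lower, upper):
    """Grow a 4-connected region from `seed`, accepting neighbours whose value lies in [lower, upper]."""
    image = np.asarray(image)
    mask = np.zeros(image.shape, dtype=bool)
    if not lower <= image[seed] <= upper:
        return mask  # the seed itself lies outside the intensity window
    mask[seed] = True
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            inside = 0 <= nr < image.shape[0] and 0 <= nc < image.shape[1]
            if inside and not mask[nr, nc] and lower <= image[nr, nc] <= upper:
                mask[nr, nc] = True
                queue.append((nr, nc))
    return mask


# Hypothetical toy image with two disconnected 2 x 2 blobs of intensity 40.
img = np.zeros((5, 5))
img[0:2, 0:2] = 40
img[3:5, 3:5] = 40
print(threshold_segment(img, 20, 60).sum(), region_grow(img, (0, 0), 20, 60).sum())  # 8 4
```

Thresholding selects every pixel in the window, including the second, disconnected blob, whereas region growing from a seed in the top-left blob returns only that blob. Neither method uses prior anatomical knowledge, which is the limitation described above.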

Deep-learning auto-segmentation is a machine-learning technique in which the algorithm learns, through training, to calculate the final contour. It uses multi-layer neural networks called convolutional neural networks (CNNs) [4, 31]. A large set of pre-contoured data, referred to as training data, is passed through the CNN to train the algorithm, and its parameters are optimised with the backpropagation algorithm so that it produces optimised contours of the target structures [16, 31]. The type and performance of deep-learning-based auto-segmentation depend on the network architecture used, such as U-Net [24], V-Net (a 3D version of U-Net) [4] or ResNet [14], and on the quality and quantity of the training data set [2, 31]. More advanced architectures such as the vision transformer (ViT) have since been introduced [28], and other studies have shown that ViT outperformed CNNs when both networks were trained on larger datasets [11].

Many studies have compared the OAR delineation accuracy of in-house AI-based and atlas-based auto-contouring systems in different cancer types, such as head and neck [5], breast [8] and liver [1]. Although these studies demonstrated better OAR contouring performance and efficiency for AI-based than for atlas-based auto-contouring, developing and implementing an in-house AI-based auto-contouring system can be complex, owing to challenges such as the expertise required to develop and implement the code and the difficulty of collecting a large “training” set [26].

In this study, we compared the OAR delineation performance of seven AI-based auto-contouring systems, all commercially available except Radiotherapy AI: Radiotherapy AI (Radiotherapy AI, Sydney, Australia), two versions of Limbus Contour (Limbus AI Inc, Regina, SK, Canada), Therapanacea ART-plan Annotate (Therapanacea, Paris, France), MIM Contour Protégé AI (MIM, Cleveland, USA), Siemens AI-Rad Companion Organs RT (Siemens Healthineers, Erlangen, Germany) and RadFormation AutoContour (RadFormation, New York, USA).

Method

Clinical dataset

A total of 42 clinical cases (10 head and neck (HN), 10 brain (B), 10 pelvis (PLV), 4 breast (BT), 4 lung (L) and 4 abdomen (ABO) cases) treated at Chris O’Brien Lifehouse between 2019 and 2021 were selected for this study. The patient scans were selected consecutively from the clinical scans for each relevant body site. The computed tomography (CT) images were acquired with a Canon Aquilion LB CT scanner, using scan parameters that varied with the patient and the anatomical site scanned, as listed in Table 1. Twenty-three organs at risk were delineated by a single expert for each corresponding case, including brain (number of samples, n = 10), brainstem (n = 19), left eye (n = 12), right eye (n = 12), spinal cord (n = 19), oesophagus (n = 12), optic chiasm (n = 11), left optic nerve (n = 11), right optic nerve (n = 11), left parotid gland (n = 10), right parotid gland (n = 9), left submandibular gland (n = 5), right submandibular gland (n = 4), bladder (n = 10), left femoral head (n = 10), right femoral head (n = 10), heart (n = 7), liver (n = 6), left kidney (n = 5), right kidney (n = 5), left lung (n = 9), right lung (n = 9), rectum (n = 10) and stomach (n = 4). The manual OAR contours in each case were used as the reference contours against which the automated contours from the AI systems were compared.

AI-based auto-contouring systems

Seven AI-based segmentation systems were used to delineate the same OARs contoured in each case: Limbus Contour versions 1.5 and 1.6, MIM Contour Protégé AI version 1.1.1, Radformation AutoContour version 2.0.19, Radiotherapy AI version RTAI lifehouse-v0.2.0, Siemens AI-Rad Companion Organs RT (AIRC) version VA31A and Therapanacea ART-plan Annotate version 1.10.1. The AI systems use different network architectures for their models. Limbus Contour [22] and MIM Contour Protégé AI [29] both use CNNs based on the U-Net architecture, Radformation AutoContour [18] uses a CNN based on the V-Net architecture, Siemens AI-Rad Companion Organs RT [15] uses a deep image-to-image network (DI2IN), and Radiotherapy AI uses an adapted 3D U-Net. The authors were unable to identify the network used by Therapanacea ART-plan Annotate.

Radiotherapy AI used clinical data from Chris O’Brien Lifehouse as the training data set for its model. The training data set and the data set used for this study were mutually exclusive. Radiotherapy AI is in the development stage and is not commercially available yet.

Quantitative evaluation method

The volumetric Dice Similarity Coefficient (DSC), surface Dice Similarity Coefficient (sDSC) and maximum Hausdorff Distance (HD) between the manual segmentation and each AI-based auto-contouring system’s segmentation were calculated to quantitatively evaluate the performance of each system in OAR delineation [25]. The DSC, sDSC and HD were calculated using a Python script with PlatiPy version 0.4.0 [7]. The volumetric DSC measures the overlap between two contoured volumes and is defined as:

\[ \mathrm{DSC} = \frac{2|A \cap B|}{|A| + |B|} \]

where A is the volume of the manual contour and B is the volume of the contour delineated by an AI system. The DSC ranges from 0, indicating no overlap between the two contours, to 1, indicating complete overlap.
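A minimal NumPy sketch of the volumetric DSC follows, assuming both contours have already been rasterised to binary masks on the same voxel grid (the rasterisation step, which PlatiPy handled in this study, is not shown). Voxel counts stand in for volumes because the voxel volume cancels in the ratio. The function name and the empty-mask convention are illustrative assumptions, not PlatiPy's API.

```python
import numpy as np


def dice(manual, auto):
    """Volumetric DSC = 2|A ∩ B| / (|A| + |B|) for two binary masks on the same grid."""
    a = np.asarray(manual, dtype=bool)
    b = np.asarray(auto, dtype=bool)
    if a.shape != b.shape:
        raise ValueError("masks must share the same voxel grid")
    total = np.count_nonzero(a) + np.count_nonzero(b)
    if total == 0:
        return 1.0  # assumed convention: two empty masks agree perfectly
    return 2.0 * np.count_nonzero(a & b) / total


# Two 20-voxel cubes, the AI cube shifted by one voxel along the first axis.
manual = np.zeros((30, 30, 30), dtype=bool)
manual[5:25, 5:25, 5:25] = True
auto = np.roll(manual, 1, axis=0)
print(dice(manual, auto))  # 2 * 7600 / 16000 = 0.95
```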

The surface Dice Similarity Coefficient (sDSC) is a more recent metric for assessing segmentation performance, introduced by Nikolov et al. [21]. It measures the overlap between the two contour surfaces at a defined tolerance \(\tau\) and is defined as:

\[ \mathrm{sDSC}^{(\tau)} = \frac{|S_A \cap B_B^{(\tau)}| + |S_B \cap B_A^{(\tau)}|}{|S_A| + |S_B|} \]

where \(S_A\) and \(S_B\) are the surfaces of the manual contour (A) and the AI contour (B), and \(B_A^{(\tau)}\) and \(B_B^{(\tau)}\) are the border regions of A and B, respectively, i.e. the regions within distance \(\tau\) of each surface. In radiotherapy, OARs are contoured slice by slice, and segmentation performance is assessed by the fraction of the contour surface that needs to be edited. sDSC has therefore been suggested as more suitable than volumetric DSC, because volumetric DSC weights all non-overlapping regions equally, regardless of their distance from the surface, and is biased towards OARs with large volumes [21]. Another study showed that sDSC is a better indicator than DSC and HD of the editing time needed and the time saved by using auto-contouring systems [27]. The tolerance \(\tau\) should be set to the clinically acceptable variation, determined by measuring inter-observer variation in contouring [21]. In this study, a \(\tau\) of 0 mm was used to evaluate the absolute difference between the manual and AI contours. Additional sDSC calculations were performed with \(\tau\) values of 1, 2 and 3 mm, because Rhee et al. found that sDSC with tolerances of 1, 2 and 3 mm was the most accurate of the similarity metrics compared for detecting contour errors [23].
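The sDSC definition can be sketched with SciPy's morphology and distance-transform routines. This is a simplified, voxel-counting approximation under stated assumptions: a surface is taken as the mask voxels removed by one binary erosion, every surface voxel counts equally, and a border region is every voxel within \(\tau\) of that surface. Nikolov et al. [21] weight surface elements by their area, and PlatiPy's implementation may differ, so values from this sketch are not interchangeable with those reported in this study. The function names are hypothetical.

```python
import numpy as np
from scipy import ndimage


def surface_voxels(mask):
    """Boundary voxels: voxels of the mask removed by a single binary erosion."""
    mask = np.asarray(mask, dtype=bool)
    return mask & ~ndimage.binary_erosion(mask)


def surface_dice(manual, auto, tau_mm, spacing=(1.0, 1.0, 1.0)):
    """Voxel-count approximation of sDSC at tolerance tau_mm (spacing in mm per axis)."""
    s_a = surface_voxels(manual)
    s_b = surface_voxels(auto)
    n_a, n_b = np.count_nonzero(s_a), np.count_nonzero(s_b)
    if n_a == 0 or n_b == 0:
        raise ValueError("both contours must be non-empty")
    # Distance (mm) from every voxel to the nearest surface voxel of each contour.
    dist_to_b = ndimage.distance_transform_edt(~s_b, sampling=spacing)
    dist_to_a = ndimage.distance_transform_edt(~s_a, sampling=spacing)
    # |S_A ∩ B_B^(tau)|: manual surface voxels within tau of the AI surface, and vice versa.
    a_in_border_b = np.count_nonzero(dist_to_b[s_a] <= tau_mm)
    b_in_border_a = np.count_nonzero(dist_to_a[s_b] <= tau_mm)
    return (a_in_border_b + b_in_border_a) / (n_a + n_b)


manual = np.zeros((30, 30, 30), dtype=bool)
manual[5:25, 5:25, 5:25] = True
auto = np.roll(manual, 1, axis=0)  # one-voxel (1 mm) shift
print(surface_dice(manual, auto, tau_mm=0.0))  # ≈ 0.666 (volumetric DSC for this pair is 0.95)
print(surface_dice(manual, auto, tau_mm=1.0))  # 1.0
```

The one-voxel shift illustrates the volume bias discussed above: the large overlapping interior keeps the volumetric DSC at 0.95, whereas at \(\tau = 0\) only about two thirds of the surface voxels coincide exactly, and a 1 mm tolerance absorbs the shift completely.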

The maximum Hausdorff Distance (HD) between two contoured volumes is the greatest distance from a point in one contour to the closest point in the other contour:

\[ \mathrm{HD}(A,B) = \max\{h(A,B),\, h(B,A)\} \]

where \(h(A,B)\) is the directed Hausdorff distance from A to B, expressed as:

\[ h(A,B) = \max_{a \in A} \min_{b \in B} \|a - b\| \]

and \(\|a-b\|\) is the Euclidean distance between point a in A and point b in B. An HD of zero indicates that the two contours coincide; larger HD values indicate greater differences between the contours’ shapes.
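The two equations translate directly into NumPy. The sketch below is a brute-force illustration under the assumption that each contour is given as a set of points in millimetres (for example, voxel indices scaled by the voxel spacing); the helper names are hypothetical and the code is not PlatiPy's implementation.

```python
import numpy as np


def directed_hausdorff(a_pts, b_pts):
    """h(A, B): the largest distance from a point of A to its closest point of B."""
    a = np.asarray(a_pts, dtype=float)
    b = np.asarray(b_pts, dtype=float)
    # Pairwise Euclidean distances ||a - b||, shape (len(a), len(b)).
    d = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=-1)
    return float(d.min(axis=1).max())


def hausdorff(a_pts, b_pts):
    """Symmetric HD(A, B) = max(h(A, B), h(B, A))."""
    return max(directed_hausdorff(a_pts, b_pts), directed_hausdorff(b_pts, a_pts))


def mask_to_points(mask, spacing):
    """Indices of the True voxels scaled to millimetres (the image origin is ignored)."""
    return np.argwhere(mask) * np.asarray(spacing, dtype=float)


A = [[0, 0, 0], [1, 0, 0]]
B = [[0, 0, 0], [4, 0, 0]]
print(directed_hausdorff(A, B), directed_hausdorff(B, A), hausdorff(A, B))  # 1.0 3.0 3.0
```

The toy example shows why both directions are needed: every point of A is within 1 mm of B, but one point of B is 3 mm from A. The pairwise distance matrix grows with the product of the point counts, so for full-size contours a KD-tree query or SciPy's `scipy.spatial.distance.directed_hausdorff` is more economical.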

To ensure a valid comparison, whenever the number of available data sets differed between systems, the systems were compared in separate groups so that each mean DSC, sDSC and HD was calculated over an equal number of data points. For instance, 19 cases were selected for spinal cord segmentation, but RTAI data were unavailable for 9 of these 19 cases because, at the time of the study, the RTAI model was designed exclusively for head and neck cases.
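The grouping rule can be sketched as follows: for each comparison group, keep only the cases for which every system in the group produced a contour, then average each system's metric over those cases. The dictionary layout, case identifiers and numbers below are hypothetical and serve only to illustrate the rule; they are not study data.

```python
from statistics import mean


def common_case_means(scores, systems):
    """Mean metric per system over only those cases that every listed system contoured.

    scores maps system -> {case_id: metric value}; a missing case is simply absent.
    """
    shared = set.intersection(*(set(scores[s]) for s in systems))
    if not shared:
        raise ValueError("no case is available for all selected systems")
    cases = sorted(shared)
    return cases, {s: mean(scores[s][c] for c in cases) for s in systems}


# Hypothetical spinal cord DSCs (illustrative numbers, not study data).
scores = {
    "RTAI": {"HN1": 0.80, "HN2": 0.84},
    "Lim1.6": {"HN1": 0.78, "HN2": 0.82, "L1": 0.60},
    "TH": {"HN1": 0.79, "HN2": 0.81, "L1": 0.64},
}
print(common_case_means(scores, ["RTAI", "Lim1.6", "TH"]))  # head and neck cases only
print(common_case_means(scores, ["Lim1.6", "TH"]))          # also includes case L1
```

Restricting each group to shared cases means that a system with limited anatomical coverage is compared with the others only on the cases it can contour, while a second group without it can use every available case.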

Statistical analysis

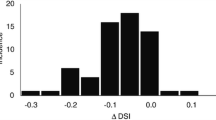

The statistical significance of differences in DSC, sDSC and HD between the tested AI-based systems was assessed with one of three tests, chosen according to the properties of the compared data sets: (1) Student’s t-test, (2) Welch’s t-test or (3) the Wilcoxon signed-rank test, with a p-value less than 0.05 indicating significance [26]. The tests were automated using an in-house Python script combined with published Python packages. Box plots of each data set were created to check for outliers, and histograms were created to visually inspect the distribution of the data. The Shapiro-Wilk test and Q-Q plots were used to assess the normality of each sample. When both data sets were assumed to be normally distributed, an F-test was used to determine whether their variances were equal: Student’s t-test was used when the variances were equal and Welch’s t-test when they were unequal. The Wilcoxon signed-rank test was used when neither data set was normally distributed, when a normally distributed data set was compared with one that was not, and when either or both data sets contained outliers [13]. The detailed results of the statistical tests are given in Supplementary data A (DSC), B (HD) and C (sDSC).
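The decision rule above can be sketched with SciPy. This is not the authors' in-house script, and several details are assumptions not stated in the text: outliers are flagged with the common 1.5 × IQR box-plot rule, normality is judged by the Shapiro-Wilk p-value alone (the Q-Q plot inspection is not automated), Student's and Welch's tests are applied as independent-sample tests via `scipy.stats.ttest_ind`, and the Wilcoxon signed-rank test is applied to the paired per-case values via `scipy.stats.wilcoxon`. The helper names and `ALPHA` are hypothetical.

```python
import numpy as np
from scipy import stats

ALPHA = 0.05  # significance level stated in the text


def has_outliers(x):
    """Box-plot rule (assumed): any point more than 1.5 IQR beyond the quartiles."""
    x = np.asarray(x, dtype=float)
    q1, q3 = np.percentile(x, [25, 75])
    iqr = q3 - q1
    return bool(np.any((x < q1 - 1.5 * iqr) | (x > q3 + 1.5 * iqr)))


def is_normal(x):
    """Shapiro-Wilk test: normality is not rejected when p > ALPHA."""
    _, p = stats.shapiro(x)
    return p > ALPHA


def equal_variances(x, y):
    """Two-sided F-test on the ratio of sample variances."""
    f_stat = np.var(x, ddof=1) / np.var(y, ddof=1)
    dfx, dfy = len(x) - 1, len(y) - 1
    p = 2 * min(stats.f.cdf(f_stat, dfx, dfy), stats.f.sf(f_stat, dfx, dfy))
    return p > ALPHA


def compare(x, y):
    """Pick a test following the rule in the text; return (test name, p-value)."""
    if has_outliers(x) or has_outliers(y) or not (is_normal(x) and is_normal(y)):
        return "wilcoxon", stats.wilcoxon(x, y).pvalue  # paired, per-case values
    if equal_variances(x, y):
        return "student", stats.ttest_ind(x, y, equal_var=True).pvalue
    return "welch", stats.ttest_ind(x, y, equal_var=False).pvalue
```

Checking outliers before normality mirrors the text, where any outlier sends the comparison to the non-parametric test regardless of the Shapiro-Wilk result.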

Results

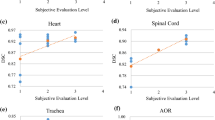

The performance of each AI-based auto-contouring system in contouring twenty-three different organs at risk across various clinical cases (head and neck, brain, lung, breast, pelvis and abdomen) was quantitatively evaluated by calculating the DSC, HD and sDSC between the contours of each tested organ delineated manually by the expert (Manual) and automatically by each system: Radiotherapy AI (RTAI), Limbus AI version 1.5 (Lim1.5) and version 1.6 (Lim1.6), Therapanacea (TH), MIM (MIM), Siemens AIRC (SAIRC) and RadFormation (RF). Higher DSC and sDSC values and lower HD values indicate better agreement with the Manual contours. The mean, standard deviation, range and maximum absolute difference of the DSC for each OAR in head and neck and brain cases are given in Table 2, and the corresponding values for lung, breast, pelvis and abdomen cases in Table 3. The same statistics for the maximum HD are given in Table 4 (head and neck and brain) and Table 5 (lung, breast, pelvis and abdomen), and for the surface DSC in Table 6 (head and neck and brain) and Table 7 (lung, breast, pelvis and abdomen). The highest mean DSC and sDSC values and the lowest HD value for each case are highlighted in bold. The distribution of the individual data for each OAR was tabulated and shown in scatter and box plots, with the corresponding statistical results in Supplementary data A (DSC), B (HD) and C (sDSC). Box plots of the data for the individual AI systems for all considered OARs are shown in Supplementary data 1 (DSC), 2 (HD) and 3 to 6 (sDSC with different \(\tau\) values).

Discussion

In this study, seven AI-based auto-contouring systems were tested to assess their performance in contouring organs at risk across different clinical cases. In general, sDSC values were considerably smaller than volumetric DSC values, especially for OARs with large volumes, as reported in previous studies [10, 21, 27].

In head and neck and brain cases, the contours delineated by each AI system showed good agreement with the reference contours for most OARs. The DSCs for the brain, brainstem, left and right eyes, left and right parotid glands and left and right submandibular glands from the tested AI systems were comparable to those reported by Doolan et al. [10] and Liu et al. [19]. For the same OARs, this study found slightly lower sDSC values and slightly higher HD values than previously reported [10].

The AI systems showed reduced and inconsistent performance in contouring small and complex structures such as the optic structures and the oesophagus, which are more difficult to visualise on CT than on MR images. Reduced and inconsistent performance of auto-contouring systems for small and complex structures has been reported in other studies: Liu et al. [19] reported low DSC values for the optic chiasm and wide variation in DSC values for the left and right optic nerves across multiple previous studies. Similarly, the reduced and inconsistent performance found in this study for the oesophagus agrees with previously reported DSC, sDSC and HD values for this structure [10].

In head and neck and brain cases, the Radiotherapy AI software showed the best performance of all tested systems for most structures. The closer agreement between the Radiotherapy AI contours and the manual contours may be because the Radiotherapy AI model was trained on our clinic’s contours and therefore produced contours similar to those used in our clinic. This demonstrates an advantage of in-house AI systems, or AI systems trained on clinic-specific data: they produce contours more similar to those currently used in that clinic. On the other hand, such training could perpetuate incorrect contouring, does not provide a review of current contouring practice, and would not lead to standardisation of contours across radiation therapy centres. However, the maximum differences in both DSC and HD values across all tested systems were very small, so in most test cases the shapes of the contours delineated by the AI systems were comparable to each other.

A low DSC for the spinal cord was found across all tested AI systems in this study, whereas previously reported DSC values for the spinal cord were considerably higher [10, 19]. This large disagreement arises because the manual contours cover only the part of the spinal cord that lies in the treatment field, whereas the AI systems contour the entire spinal cord visible in the image, as shown in Fig. 1.
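The size of this effect can be estimated under a simplifying assumption: suppose the manual contour \(A\) lies entirely inside the AI contour \(B\), i.e. the two agree within the treatment field and the AI contour simply extends beyond it, and let \(f = |A|/|B|\) be the fraction of the AI volume covered by the manual contour. Then \(|A \cap B| = |A|\) and

\[ \mathrm{DSC} = \frac{2|A|}{|A| + |B|} = \frac{2f}{1 + f} \]

For an illustrative \(f = 1/3\) (roughly, a manual contour spanning one third of the cord length segmented by the AI system, assuming a uniform cross-section), \(\mathrm{DSC} = (2/3)/(4/3) = 0.5\), even though agreement within the treated region is perfect. The same expression holds with the roles of the two contours swapped when the AI contour is the smaller one.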

No single AI-based system showed overall superior performance in lung, breast, pelvis and abdomen cases. Again, the very small maximum differences in both DSC and HD values across all tested systems indicate that the shapes of the contours delineated by each AI system are comparable to each other.

The DSCs for the bladder, left and right lungs, heart, left and right kidneys, liver, rectum and stomach from the tested AI systems were comparable to those of previous studies [1, 10]. This study found slightly lower sDSC values for the bladder, heart, left and right lungs and liver than previously reported [10], and slightly higher HD values for the same set of OARs [10]. Performance for the rectum was slightly lower than previously reported in terms of DSC, sDSC and HD [10].

For both the left and right femoral heads, DSC and sDSC were comparable to, and HD slightly higher than, previously reported values [10]. RadFormation produced lower DSC values and higher HD values than the other tested AI systems for both femoral heads. These low DSC values, high HD values and the large deviation from the other systems’ average DSC were due to a difference in RadFormation’s contouring convention: it delineated the femoral head only, whereas the other systems and the manual reference contours included a small portion of the femoral neck, as shown in Fig. 2.

There were several limitations in this study. Firstly, some of the tested AI systems’ models were limited: at the time of the study, the Radiotherapy AI model was only available for head and neck and brain regions, and the MIM model only contoured structures in male pelvis cases. Shortly after the analysis was performed, most AI systems updated their models to improve contouring quality and added further structures. Given the rapid development of the field, it was not feasible to evaluate the latest version of every tested system, so readers should note that this study reflects only the specific versions stated in the Method section. This implies that clinics, whether planning to implement an AI system or already using one, need a workflow or tool to assess the AI system’s performance, which will be crucial for keeping pace with rapid advances in this field. Secondly, the sample size may have been insufficient to provide adequate power for the statistical tests [30]. The sample size for some OARs was very small, with only four or five reference contours for the right submandibular gland and the stomach. Consequently, statistical tests for data sets with fewer than five samples were disregarded and are denoted as ***** in Supplementary data A, B and C. Thirdly, in a few cases, some systems were unable to produce a particular contour for every patient; for instance, Radiotherapy AI produced an incomplete contour of the left optic nerve in case HN10, delineating it on only a single CT slice. Fourthly, the manual reference contours were delineated by a single expert; cross-validated contours would have ensured the accuracy of the reference data. Lastly, Baroudi et al. [3] argued that for automated contours to be clinically accepted, AI systems need to be evaluated in multiple domains: quantitative evaluation of the automated contours using geometric metrics; qualitative evaluation by end users using Likert scales and Turing tests; dosimetric evaluation, assessing the impact on OAR and target doses when automated contours are used in planning; and assessment of the improvement in clinical workflow efficiency when the AI system is used. This study conducted only a quantitative evaluation of the automated contours. As one of its main intentions was to provide a starting point or guidance for other clinics considering implementing an AI system in their clinical workflow, the other forms of evaluation are planned as future work.

Conclusion

The study investigated the performance of multiple AI-based auto-contouring systems through quantitative comparisons. Each tested AI system produced contours of organs at risk comparable to the expert’s contours, which implies that these contours could potentially be used clinically after expert assessment and quality assurance (QA) of the system. This study has demonstrated a method of comparing contouring software options which could be replicated in clinics or used for ongoing quality assurance of purchased systems. Statistically significant differences in performance between AI systems were found in various cases, but the absolute differences were small, indicating that the performance of all tested AI systems was comparable. Reduced performance of the AI systems was found for small and complex anatomical structures, showing that it is still essential to review each AI-generated contour before clinical use.

Supplementary information

There are nine supplementary files containing all the result sets collected during the study.

Supplementary files 1 and 2 contain box plots of all DSC (Supplementary data 1_DSC Box plot) and HD (Supplementary data 2_HD Box plot) data for each tested AI-based contouring system. Supplementary files 3 to 6 contain box plots of all sDSC data for each tested AI-based contouring system, with different \(\tau\) values applied (0 to 3 mm).

Supplementary file 7 (Supplementary data A_DSC) contains all result data for each tested organ at risk obtained with the method described in this study. Each tab, named after the organ at risk tested, contains:

- The table of calculated Dice similarity coefficients
- The scatter plot and box plot of the data
- Histogram, Q-Q plot and table of Shapiro-Wilk test results
- The table of statistical test results

Supplementary file 8 (Supplementary data B_HD) contains all result data for each tested organ at risk obtained with the method described in this study. Each tab, named after the organ at risk tested, contains:

- The table of calculated maximum Hausdorff distances
- The scatter plot and box plot of the data
- Histogram, Q-Q plot and table of Shapiro-Wilk test results
- The table of statistical test results

Supplementary file 9 (Supplementary data C_sDSC) contains all result data for each tested organ at risk obtained with the method described in this study. Each tab, named after the organ at risk tested, contains:

- The table of calculated surface Dice similarity coefficients with different \(\tau\) values applied (0 to 3 mm)
- The scatter plot and box plot of the data
- Histogram, Q-Q plot and table of Shapiro-Wilk test results
- The table of statistical test results

Data availability

The patient data that support the findings of this study are available on request from the corresponding author but will be subject to ethics and hospital approval. The data are not publicly available due to privacy restrictions. All calculated values are available on request.

References

Ahn SH, Yeo AU, Kim KH et al (2019) Comparative clinical evaluation of atlas and deep-learning-based auto-segmentation of organ structures in liver cancer. Radiat Oncol 14(1):213. https://doi.org/10.1186/s13014-019-1392-z

AlBadawy EA, Saha A, Mazurowski MA (2018) Deep learning for segmentation of brain tumors: impact of cross-institutional training and testing. Med Phys 45(3):1150–1158. https://doi.org/10.1002/mp.12752

Baroudi H, Brock KK, Cao W et al (2023) Automated contouring and planning in radiation therapy: What is ‘clinically acceptable’? Diagnostics. https://doi.org/10.3390/diagnostics13040667

Cardenas CE, Yang J, Anderson BM et al (2019) Advances in auto-segmentation. Semin Radiat Oncol 29(3):185–197. https://doi.org/10.1016/j.semradonc.2019.02.001

Chen W, Li Y, Dyer BA et al (2020) Deep learning vs. atlas-based models for fast auto-segmentation of the masticatory muscles on head and neck CT images. Radiat Oncol 15(1):176. https://doi.org/10.1186/s13014-020-01617-0

Chen Z, King W, Pearcey R et al (2008) The relationship between waiting time for radiotherapy and clinical outcomes: a systematic review of the literature. Radiother Oncol 87(1):3–16. https://doi.org/10.1016/j.radonc.2007.11.016

Chlap P, Finnegan R (2021) PlatiPy (processing library and analysis toolkit for medical imaging in Python). https://pypi.org/project/platipy/

Choi MS, Choi BS, Chung SY et al (2020) Clinical evaluation of atlas- and deep learning-based automatic segmentation of multiple organs and clinical target volumes for breast cancer. Radiother Oncol 153:139–145. https://www.sciencedirect.com/science/article/pii/S0167814020308203

Daisne JF, Blumhofer A (2013) Atlas-based automatic segmentation of head and neck organs at risk and nodal target volumes: a clinical validation. Radiat Oncol 8:154. https://doi.org/10.1186/1748-717X-8-154

Doolan PJ, Charalambous S, Roussakis Y et al (2023) A clinical evaluation of the performance of five commercial artificial intelligence contouring systems for radiotherapy. Front Oncol. https://doi.org/10.3389/fonc.2023.1213068

Dosovitskiy A, Beyer L, Kolesnikov A et al (2020) An image is worth 16x16 words: transformers for image recognition at scale. arXiv:2010.11929

Eminowicz G, McCormack M (2015) Variability of clinical target volume delineation for definitive radiotherapy in cervix cancer. Radiother Oncol 117(3):542–547. https://doi.org/10.1016/j.radonc.2015.10.007

Fay MP, Proschan MA (2010) Wilcoxon-Mann-Whitney or t-test? on assumptions for hypothesis tests and multiple interpretations of decision rules. Stat Surv 4:1–39. https://doi.org/10.1214/09-SS051

He K, Zhang X, Ren S et al (2015) Deep residual learning for image recognition. https://doi.org/10.48550/arXiv.1512.03385

Siemens Healthineers (2021) Customer experience AI-Rad Companion Organs RT: the power of automated contouring at CCGM Montpellier [white paper]. Siemens Healthcare GmbH, Erlangen. https://pep.siemens-info.com/en-us/whitepaper-ai-rad-companion-organs-rt-the-power-of-automated-contouring-at-ccgm-montpellier/view

LeCun Y, Bengio Y, Hinton G (2015) Deep learning. Nature 521(7553):436–444. https://doi.org/10.1038/nature14539

Li XA, Tai A, Arthur DW et al (2009) Variability of target and normal structure delineation for breast cancer radiotherapy: an RTOG multi-institutional and multiobserver study. Int J Radiat Oncol Biol Phys 73(3):944–951. https://doi.org/10.1016/j.ijrobp.2008.10.034

Liu C, Tierney K, Blackwell T (2024) AutoContour: state-of-the-art deep-learning auto-segmentation [white paper]. Radformation, New York, NY. https://21696034.fs1.hubspotusercontent-na1.net/hubfs/21696034/AutoContour%20White%20Paper.pdf

Liu P, Sun Y, Zhao X et al (2023) Deep learning algorithm performance in contouring head and neck organs at risk: a systematic review and single-arm meta-analysis. BioMed Eng OnLine 22(1):104. https://doi.org/10.1186/s12938-023-01159-y

Ng SP, Dyer BA, Kalpathy-Cramer J et al (2018) A prospective in silico analysis of interdisciplinary and interobserver spatial variability in post-operative target delineation of high-risk oral cavity cancers: Does physician specialty matter? Clin Transl Radiat Oncol 12:40–46. https://doi.org/10.1016/j.ctro.2018.07.006

Nikolov S, Blackwell S, Zverovitch A et al (2021) Clinically applicable segmentation of head and neck anatomy for radiotherapy: deep learning algorithm development and validation study. J Med Internet Res 23(7):e26151. https://doi.org/10.2196/26151

Radici L, Ferrario S, Borca V et al (2022) Implementation of a commercial deep learning-based auto segmentation software in radiotherapy: evaluation of effectiveness and impact on workflow. Life 12:2088. https://doi.org/10.3390/life12122088

Rhee DJ, Akinfenwa CPA, Rigaud B et al (2022) Automatic contouring QA method using a deep learning-based autocontouring system. J Appl Clin Med Phys 23(8):e13647. https://doi.org/10.1002/acm2.13647

Ronneberger O, Fischer P, Brox T (2015) U-Net: convolutional networks for biomedical image segmentation. arXiv:1505.04597. https://doi.org/10.48550/arXiv.1505.04597

Taha AA, Hanbury A (2015) Metrics for evaluating 3D medical image segmentation: analysis, selection, and tool. BMC Med Imaging 15(1):29. https://doi.org/10.1186/s12880-015-0068-x

Urago Y, Okamoto H, Kaneda T et al (2021) Evaluation of auto-segmentation accuracy of cloud-based artificial intelligence and atlas-based models. Radiat Oncol 16(1):175. https://doi.org/10.1186/s13014-021-01896-1

Vaassen F, Hazelaar C, Vaniqui A et al (2020) Evaluation of measures for assessing time-saving of automatic organ-at-risk segmentation in radiotherapy. Phys Imaging Radiat Oncol 13:1–6. https://doi.org/10.1016/j.phro.2019.12.001

Vaswani A, Shazeer N, Parmar N et al (2023) Attention is all you need. arXiv:1706.03762

Wan H (2024) Automated contouring using neural networks [white paper]. MIM Software Inc., Cleveland, OH. https://5300642.fs1.hubspotusercontent-na1.net/hubfs/5300642/TD650-Contour_ProtegeAI_White_Paper-20211208.pdf

Whitley E, Ball J (2002) Statistics review 6: nonparametric methods. Crit Care 6(6):509. https://doi.org/10.1186/cc1820

Zabel WJ, Conway JL, Gladwish A et al (2021) Clinical evaluation of deep learning and atlas-based auto-contouring of bladder and rectum for prostate radiation therapy. Pract Radiat Oncol 11(1):e80–e89. https://doi.org/10.1016/j.prro.2020.05.013

Funding

Open Access funding enabled and organized by CAUL and its Member Institutions. The authors declare that no funds or grants were received during the preparation of this manuscript.

Author information

Contributions

All authors contributed to the study conception and design. ECM supervised the study and the preparation of the manuscript. SB mentored YWK in software development during data collection and analysis. YWK performed material preparation, data collection and analysis under the supervision and guidance of ECM and SB. The first draft of the manuscript was written by YWK, and all authors reviewed and commented on draft versions of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Conflict of interest

Simon Biggs is the founder of Radiotherapy AI. The authors have no other conflicts of interest to disclose.

Ethics Approval

This is a retrospective study. It was approved by the Radiation Oncology Research Committee at Chris O’Brien Lifehouse to run under an existing ethics committee approval.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Electronic supplementary material is available with the online version of this article.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Kim, Y.W., Biggs, S. & Claridge Mackonis, E. Investigation on performance of multiple AI-based auto-contouring systems in organs at risks (OARs) delineation. Phys Eng Sci Med 47, 1123–1140 (2024). https://doi.org/10.1007/s13246-024-01434-9

DOI: https://doi.org/10.1007/s13246-024-01434-9