Abstract

To follow the reverse engineering (RE) approach in analysing an economic complex system is to infer how its underlying mechanism works. The main factors that condition the difficulty of RE are the number of variable components in the system and, most importantly, the interdependence of components on one another and nonlinear dynamics. All those aspects characterize the economic complex systems within which economic agents make their choices. Economic complex systems are suited to RE, which could be used to understand, predict and model the dynamics of the complex systems that make it possible to define and control the economic environment. With the RE approach, economic data could be used to peek into the internal workings of the economic complex system, providing information about its underlying nonlinear dynamics. This paper aims to deepen the understanding of this approach and to highlight the potential of tools and methodologies based on it for treating economic complex systems. An overview of the literature on RE is presented, focusing on its definition and on the state of the art of the research; we then consider two potential tools that could put the methodological principles of RE into practice, recurrence analysis and the agent-based model (ABM), and highlight their advantages and disadvantages for economic analysis.


Introduction

For many years, the development and analysis of models, and experimentation with them, have been tools of science and of applied systems in economics. To model means to develop a model as a representative of a system, and this makes sense only if the produced model and its dynamics correspond sufficiently to the original system. Simple models that are expressed as mathematical formulae may be analysed with analytical tools.

However, for most systems such a model would be too abstract to express the relevant aspects of structure and dynamics. There is a variety of modelling paradigms, each with advantages and constraints, adopted for different types of systems or modelling objectives. A basic distinction can be made between macro and micro models. A macro model describes the original system in terms of variables whose values express particular states of the modelled system. The complete model describes the original system as one entity. The representation language used is often that of mathematics: differential equations or difference equations. A micro model, on the other hand, represents different components, entities or units separately. The overall system status and dynamics are produced by the entities (Benso et al., 2014). For a long time, the scientific method has been based on the consideration that natural phenomena are linear and understanding them is possible only by decomposing them into simple processes that can be dealt with by deterministic theories. The reductionist approach of linear systems has been challenged by the discovery of complex system theories focused on “interaction among parts of the systems and not so much on the characteristics of the parts themselves”. In this framework, the behaviour of the single parts does not explain the behaviour of the whole.

These theories also change (Kirby, 2007) the way economic systems are considered and analysed. In this view, economic agents are the elementary component units of a system, each capable of yielding a given response to a given solicitation, and the agents’ local interactions give rise to different configurations. A few of these interactions can be forecast when each agent can perform only a limited set of interactions. In most cases, however, when larger numbers of agents act and interact in different ways, we see complex and new emergent behaviours from these interactions of aggregate agents, which produce significantly greater results than we would expect.

These aggregate agents group together with other aggregate agents, creating increasingly larger CAS (complex adaptive systems) with fuller sets of emergent behaviours and interactions.

Economic agents cannot achieve perfect knowledge of the global effects of their actions; they are not able to analyse costs and benefits of knowledge. A complex system is constituted at a lower level by various and numerous elements whose interactions are governed by specific rules of interaction. Thus, the dynamics of a system is simply the sequence of the results of interactions. The states on which the rules act must be formed as relatively stable ones, but they also have inner degrees of freedom allowing them to undergo a transition only when dynamical conditions are met. In fact, the dynamics of a social system consists of the succession of the system’s states in time generated by the different rules of interaction. Therefore, looking for local interactions and their specific rules, we can detect the formation of rules at a higher level in order to learn the dynamics of system global behaviour resulting from the local interactions. Revealing the mechanisms underlying system behaviour is usually a long and difficult endeavour.Footnote 1 Almost all studies about complex systems using an approach based on constructivismFootnote 2 have shown how complex behaviours emerge from simple rules, but very few studies have been carried out considering rule formation from dynamic behaviours.

Some authors (Barzel et al., 2016; Wanga Lai & Grebogie, 2016) have considered the application of a bottom-up approach to complex systems. Using the bottom-up method (Buraschi et al., 2013), macro-behaviours are explained as “emergent” by the interactions among lower-level elements; in order to explain observed processes, the specific data and facts are the starting point for formulating a model.

Reverse engineering (RE) characterized by a bottom-up approach is a useful methodology with which to analyse this kind of dynamical system behaviour. “Reverse engineering is a process where an engineered artefact (such as a car, a jet engine, or a software program) is deconstructed in a way that reveals its innermost details, such as its design and architecture. This is similar to scientific research that studies natural phenomena, with the difference that no one commonly refers to scientific research as reverse engineering, simply because no one knows for sure whether or not nature was ever engineered” (Eldad, 2005).

There are many differences between RE applied to artificial (engineering) and natural and human systems (biology and economics). The RE of products can therefore be very successful by decomposing the system into a system-subsystem-component hierarchy with well-defined interactions between elements on different levels, and with well-defined functions of each of the elements with respect to the whole system. In manmade machines, parts, chosen to have efficient functions, are combined to work as designed so that no serious emergent phenomena rise (Davis & Alken, 2000).

The definition of rules for these complex systems from the individual behaviour of agents is called reverse socio-engineering where some artificial models built in biology have been applied, taking into account the difference between biological and human systems (Dautenhahn, 2000). The idea of redesigning using a reverse engineering framework to study a complex economic system has existed for over 10 years.

With existing protocols and tools designed on the basis of RE, for example, the utility functions of agents are determined implicitly and can be extracted through reverse engineering. Other papers consider various control tools as distributed algorithms for solving system utility maximization problems. Building on the knowledge gained from reverse engineering, our research idea is to improve the knowledge and analysis of complex economic systems by proposing an advanced framework of reverse engineering tools and methodologies. Inspired by recent reverse engineering research, this work extends both to the study of the control of chaotic systems based on a reverse engineering framework, which acts as a tool to bridge the gap between applications and existing theoretical results on the optimization of chaos control, and to the study of the agent-based model (ABM) based on a micro-foundation macroeconomic approach.

Our research questions are as follows: could the RE process help us to extract information from system behaviour, to build models that simulate real economic systems, and to provide valid tools for taking decisions on the basis of the models built? Since the system is analysed to identify its components and their interrelationships, so as to create a representation of the system, could the internal mechanisms of a system, program or data structures be modified without changing its functionality? Could we recapture the top-level specification by analysing the product in order to recover all aspects of the original specification purely by studying the product or data?

The paper is structured as follows: in “Introduction”, we explain the aim of the paper, which is to consider the reverse engineering approach for economics on the basis of the features of economic complex systems. In “The Reverse Engineering Approach to Discover the Dynamics of Economic Complex Systems”, we present an example of models for complex system dynamics built by developing an RE method to recover a class of complex systems. In “Reverse Engineering Framework for Controlling Chaos: the Recurrence Analysis”, we stress the inadequacy of the top-down and bottom-up approaches and suggest a new approach, chaotic itinerancy (Kaneko & Tsuda, 1994), for integrating them. For applying an RE approach to economics, we propose two kinds of tools. The first, presented in the same section, is recurrence analysis, chosen for its ability to work with few data and to detect chaos and CI (Itoh & Kimoto, 1997). The second, presented in “Reverse Engineering of Multi-Agent System: ABM Simulation”, is the agent-based model founded on the double auction in the micro-foundation macroeconomic approach. The final section offers some concluding considerations. In the following, we also discuss and compare agent-based simulations with other modelling and simulation techniques. Such a comparison is important as a means of arguing for the adoption of an agent-based approach, but also for identifying where nothing is gained by using agent-based simulation.

The Reverse Engineering Approach to Discover the Dynamics of Economic Complex Systems

The absence of accurate microscopic models of their interaction makes us unable to predict and manage the behaviour in social, biological, and technological complex systems. The interaction between their topology and their dynamics drives the observed behaviour rules of complex systems prompting us to develop reliable methodologies for the construction of dynamic models for complex systems. The difference with physical systems, where the interactions are described in terms of a set of fundamental rules, is translated for most complex systems in the condition that such rules remain to be discovered.

Until now, in the case of linear economic systems, the only tools available for formalizing and describing complex economic problems have been those that employ a relatively small number of sufficiently complex mathematical operations. This has many consequences: economic models are bound to equations; super-rational representative agents must be assumed; and only global rationality is allowed. Because only a small number of sufficiently complex mathematical operations is used, the agents must have perfect knowledge and complete information, on the basis of which they must be able to make all the complex calculations implied by mathematical analysis (Barzel et al., 2016, p. 2).

According to Eldad Eilam and Elliot J. Chikofsky, reverse engineering “is the process of discovering the functional principles of a device, object, or system through analysis of its structure, function, and operation” (Tranouez et al., 2005). Complex systems are suited to reverse engineering, which can be used to understand, predict and model the dynamics of the complex systems that make it possible to define and control the economic environment.

Starting from the awareness that designing a system and reverse engineering it are two opposite things whose complexity can be different by orders of magnitude, our aim is to understand if the methodological approach followed to reverse engineer complex economic systems can be driven by the observed system’s complexity, by considering mathematical terms. Answering this question is fundamental, because it could have important consequences in terms of how research is planned.

If the system is complex rather than linear, building the higher-level model by merging the local observations might not be possible, because the superposition property no longer holds. These complex, nonlinear systems consist of many different and autonomous components linked together through many interconnections, which make them interrelated and interdependent. Their features emerge from the interaction of their components and cannot be predicted from the features of each individual component alone. Because of these characteristics, complex and dynamic systems cannot be described by a single rule, and the failure of the abstraction process might be caused by the methodology itself, which is unable to show some of the system’s important functional features. In bottom-up approaches, relationships between variables are deduced from local observations. In top-down approaches, a high-level hypothesis is based on the specification, but not on the details, of any first-level subsystems. A top-down approach is essentially the breaking down of a system to gain insight into its compositional sub-systems: it starts with the big picture and breaks it down into smaller segments. A bottom-up approach is the piecing together of systems to give rise to more complex systems, thus making the original systems sub-systems of the emergent system. Bottom-up processing is a type of information processing based on incoming data from the environment to form a perception.

In this situation, a bottom-up approach has strong limitations, although it can offer insights into the system behaviour. The mistake is to consider these insights sufficient to produce a higher-level model, when in fact some important system dynamics are lost because the complexity itself masks the basic mechanics. The top-down approach has its limitations too: it works on inferences and abstractions, the conclusions reached are often unable to explain the overall mechanics, and they rest on computational assumptions that might be incorrect.

For a long time, researchers assumed that the top-down and bottom-up approaches could be used independently, finding a compromise. This proved true in the reverse engineering of linear systems, but it showed strong limitations for complex systems (Benso et al., 2014).

Both approaches have their advantages and their disadvantages. The top-down approach has the advantage of having all the necessary knowledge about a problem: the rules of a process are established “a priori”. The bottom-up approach has the advantage of being able to model a lower level of systems. Each method fails where the other excels, and it is this trait of the two approaches that is the root of the debate. In general, top-down approaches tend to be abstract, symbolic and computational and are best suited to our high-level cognition; bottom-up approaches tend to be concrete.

Barzel et al. (2016) gave an example of models for complex system dynamics by developing an RE method to recover a class of complex systems described by ordinary differential equations. The microscopic dynamics of a complex system was inferred by observing its response to external perturbations.

The result is a dynamic model that can predict the system’s behaviour, and the method can be used to construct the most general class of nonlinear pairwise dynamics that reproduce the observed behaviour.

Starting from the assumption of minimal a priori knowledge about the system, they developed a methodology to construct dynamic models directly from empirical observations, with the aim of formalizing these observations into a mechanistic model that, by capturing the interactions between the system’s elements, can provide the system’s equation of motion. They consider a complex system with N elements, starting from the assumption that ordinary differential equations drive the activities $x_i(t)$ ($i = 1, \dots, N$) of those elements:

$$\frac{dx_i}{dt} = M_0\big(x_i(t)\big) + \sum_{j=1}^{N} A_{ij}\, M_1\big(x_i(t)\big)\, M_2\big(x_j(t)\big) \tag{1}$$

where $M_0(x_i(t))$ captures the self-dynamics of each element, the adjacency matrix $A_{ij}$ defines the interacting elements, and $M_1(x_i(t))\,M_2(x_j(t))$ describes the interaction between elements i and j, a form appropriate for many social, biological and technological systems. When the system dynamics is uniquely characterized by three independent functions, the system’s model is:

$$m = \big(M_0(x),\, M_1(x),\, M_2(x)\big) \tag{2}$$

which represents a point in the model space M. Other authors (Wanga et al., 2016), starting from this model, highlight that the goal was to develop a general method to infer the subspace M(X) while relying on minimal a priori knowledge of the structures of $M_0(x)$, $M_1(x)$ and $M_2(x)$. The solution is to use the system’s response to external perturbations, in the same way as techniques used in biological experiments, such as genetic perturbation, an approach that is suitable for social complex systems as well.

The link between the main terms of m and the observed system response was determined, allowing the formulation of X into a dynamical equation. Unlike traditional RE, the approach does not give a specific model m but provides the boundaries of M(X).

The hard goal was to identify the coefficients $A_n$, $B_n$ and $C_n$ in order to discover the functional form (3), which can be achieved by taking advantage of the system’s response to external perturbations. The determination of the parameters gives only the main terms of $M_0(x)$, $M_1(x)$ and $M_2(x)$; additional terms can be used to model complex systems better. This model (Wanga et al., 2016, p. 55) is called a minimal model because it captures the essential features of the underlying system without any additional constraint; the subspace given by the reconstruction method is robust to parameter selection, and data consistent with observations can arise from the models within the subspace.
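The perturbation idea can be made concrete with a minimal sketch in Python. It integrates dynamics of the pairwise form described above and measures how each element's steady state shifts when a constant push is applied to one element. The specific functions `m0`, `m1` and `m2`, the four-element ring network and the forcing strength are assumptions chosen purely for illustration; they are not the functions or data used by Barzel et al., and the sketch does not perform the inference step itself.

```python
# Hypothetical illustration: m0, m1, m2 and the ring network are assumptions
# made for this example, not the model inferred in the cited work.

def m0(x):
    """Self-dynamics of one element: linear decay."""
    return -x

def m1(x):
    """Factor through which element i receives its neighbours' influence."""
    return 1.0

def m2(x):
    """Saturating influence exerted by a neighbouring element j."""
    return x / (1.0 + x)

def derivative(x, adjacency, forcing):
    """dx_i/dt = M0(x_i) + sum_j A_ij M1(x_i) M2(x_j) + forcing_i."""
    n = len(x)
    return [
        m0(x[i])
        + m1(x[i]) * sum(adjacency[i][j] * m2(x[j]) for j in range(n))
        + forcing[i]
        for i in range(n)
    ]

def steady_state(adjacency, forcing, x0=0.5, dt=0.01, steps=5000):
    """Explicit Euler integration for a fixed horizon, assumed long enough to settle."""
    x = [x0] * len(adjacency)
    for _ in range(steps):
        dx = derivative(x, adjacency, forcing)
        x = [xi + dt * dxi for xi, dxi in zip(x, dx)]
    return x

def response_to_perturbation(adjacency, source, strength=0.1):
    """Shift of every element's steady state when `source` is pushed constantly."""
    n = len(adjacency)
    baseline = steady_state(adjacency, [0.0] * n)
    forcing = [strength if i == source else 0.0 for i in range(n)]
    perturbed = steady_state(adjacency, forcing)
    return [p - b for p, b in zip(perturbed, baseline)]

# Four elements on a ring: each one interacts with its two neighbours.
RING = [
    [0, 1, 0, 1],
    [1, 0, 1, 0],
    [0, 1, 0, 1],
    [1, 0, 1, 0],
]

if __name__ == "__main__":
    for i, shift in enumerate(response_to_perturbation(RING, source=0)):
        print(f"element {i}: steady-state shift {shift:+.4f}")
```

In this toy setting the shift is largest at the perturbed element and decays with network distance from it. Response patterns of this kind are the sort of observation from which the terms of the model would be inferred.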

Reverse Engineering Framework for Controlling Chaos: The Recurrence Analysis

Nonlinear dynamics also concern chaotic complex systems (Haxholdt et al., 2003). The study of controlling chaos (Ott et al., 1990) in nonlinear dynamic systems starts from the idea that chaos, while signifying random or irregular behaviour, possesses an intrinsically sensitive dependence on initial conditions that can be exploited for controlling the system using only small perturbations. This feature, in combination with the fact that a chaotic system possesses an infinite set of unstable periodic orbits, each leading to different system performance, implies that a chaotic system can be stabilized about some desired state with optimal performance (Wanga et al., 2016), although it is of course difficult to implement effective control tools and methodologies. Inspired by recent RE research, this work extends to the study of the control of chaotic systems based on a reverse engineering framework, which could act as a tool to bridge the gap between applications and existing theoretical results on the optimization of chaos control.

In the study of chaotic systems, the interest of researchers is focused on low-dimensional systems. The usual “position” is that high-dimensional systems are outside the realms of chaos studies (Kozma, 2001). The limited interest in high-dimensional systems is caused by the difficulty of describing these systems using existing chaos methods.
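Sensitive dependence on initial conditions can be illustrated with a short Python sketch. The logistic map is used here only as a standard textbook example of a chaotic system; it is an assumption of this illustration and not a model proposed in the cited works. Two trajectories that start a tiny distance apart are iterated side by side and the gap between them is recorded.

```python
def logistic(x, r):
    """One step of the logistic map x -> r * x * (1 - x)."""
    return r * x * (1.0 - x)

def gap_between_trajectories(x0, delta, steps, r):
    """Distance, step by step, between trajectories started at x0 and x0 + delta."""
    a, b = x0, x0 + delta
    gaps = []
    for _ in range(steps):
        a, b = logistic(a, r), logistic(b, r)
        gaps.append(abs(a - b))
    return gaps

if __name__ == "__main__":
    chaotic = gap_between_trajectories(0.2, 1e-10, 60, r=4.0)   # chaotic regime
    regular = gap_between_trajectories(0.2, 1e-10, 60, r=2.5)   # stable fixed point
    print("largest gap, r = 4.0:", max(chaotic))
    print("final gap,   r = 2.5:", regular[-1])
```

In the chaotic regime a difference far below any realistic measurement precision grows to the size of the attractor within a few dozen steps, whereas in the regular regime it dies out. This amplification is why small, well-timed perturbations can in principle steer a chaotic system.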

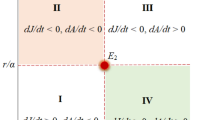

Generally, in low-dimensional systems dynamic behaviour is classified into four categories: a steady state, a periodic state, a quasi-periodic state and a chaotic state represented by a fixed point attractor, a limit cycle, a torus and a strange attractor, respectively. Of course, chaos could exist also in high-dimensional systems, whose complex behaviour is not always described by these kinds of attractors.

Applied to complex systems, neither top-down nor bottom-up strategies are effective if they work separately (Senge, 1990; Stacey, 1992). The more top-down and bottom-up forces are coordinated, the more likely complex systems will move toward greater effectiveness. Integration of bottom-up and top-down approaches seems not to be sufficient. The possible appearance of more complex behaviour, dynamical multiple equilibria and dynamical chaos suggests using, both as a methodology and as a tool, the new approach of chaotic itinerancy. As a tool, it allows the discovery and analysis of a more general chaotic behaviour based on an itinerant orbit over attractor ruins, which could otherwise appear random.

As a methodology, it implies that the passage from a position on the itinerant orbit to a different one—a different attractor characterized by weak instability—is caused by an endogenous modification of individual behaviours. Of course, this implies that the behavioural rules are changing. We are not able to understand this if we do not observe the global orbit.

Consequently, we must allow an endogenous change in the behavioural rules of individuals. But, of course, this change is imposed top-down. So, again, we must manipulate the rules in the bottom-up system. This continual need for information from the upper level consequently modifies the lower-level mechanism, and vice versa, and implies more than a mixing together of the two approaches. It requires that the two methodologies be completely integrated in the RE system.

The present state of the literature highlights that the basic tools that could be used for implementing each single methodology are either not sufficient for our analysis or impossible to apply to an economic system. In fact, the tools able to deal with chaos are generally not “efficient” in the case of real economic systems, because they require more data than are usually available in economics. Some authors (Ikeda et al., 1989; Kaneko, 1994; Tsuda et al., 2001; Tsuda, 2013) have proposed chaotic itinerancy (CI) as a mechanism that can replace the views of the top-down and the bottom-up approaches and detect transition dynamics in high-dimensional systems. However, recurrence analysis seems able to overcome this data availability question. This tool has also been used to detect chaotic itinerancy in time series. Therefore, we propose to use this tool for dealing with CI.

The chaotic itinerancy approach adopts the view that the top level cannot be represented by a few degrees of freedom and that the relationships between elements at a lower level can often change dynamically. So, CI allows us to consider the continuous changes that manifest at a macroscopic level caused by change at a micro level and, vice versa, the changes at micro level provoked by change at macro level.

What specifically is chaotic itinerancy? It was defined as “a concept used to refer to a dynamical behaviour in which typical orbits visit a sequence of regions of the phase space called ‘quasi attractors’ or ‘attractor ruins’ in some irregular way. Informally speaking, during this itinerancy, the orbits visit a neighbourhood of a quasi-attractor (attractor ruin) with a relatively regular and stable motion, for relatively long times, and then the trajectory jumps to another quasi attractor of the system after a relatively small chaotic transient” (Tsuda, 2013, p. 1). In an itinerant process,Footnote 3 the system moves among attractor ruins, which are destabilized attractors. Consequently, an attractor of the destabilized system is formed by a collection of attractor ruins and itinerant orbits connecting attractor ruins. This new type of attractor is called an itinerant attractor.

In this itinerancy, the visit near some attractors is associated with the appearance of some macroscopic aspect of the system, like the emergence of a perception or a memory, while the chaotic iterations from a quasi-attractor to another are associated with an intelligent (history dependent with trial and errors) search for the next thought or perception.

Furthermore, transitory dynamics demands a new concept of attractor, because the transition should be associated with the instability of such an attractor itself. The concept of chaotic itinerancy expresses the chaotic transitions between “quasi-attractors”. The trajectories behave as if the attractors still exist, in the sense that a positive measure of orbits is attracted to a neighbourhood of an original attractor. However, such an attracted area is not asymptotically stable. It should be noted that this idea of quasi-attractor is similar to the attractor concept proposed by Milnor (1985). However, if a Milnor attractor exists, the trajectories converge to a Milnor attractor unless it has a riddled basin, and then cannot leave from a neighbourhood of an attractor without additional perturbations. Thus, we used the term “attractor ruin” (Tsuda, 2013), which includes the region of a quasi-attractor and indicates the states that appear soon after the destabilization of the attractor.

In economics, there have been numerous studies, both theoretical and empirical, concerning the detection of complex and/or chaotic behaviours. Empirical studies in particular have encountered many problems, and their results have tended to be inconclusive, due to the lack of appropriate testing methods through transition (Gilmore, 1993). Standard techniques, such as spectral analysis or the autocorrelation function, cannot distinguish whether a time series was generated by a deterministic or a stochastic mechanism, and more complex tools are likewise unable to give reliable outcomes.

The correlation dimension test, a metric approach developed by Grassberger and Procaccia (1983a, b), has been widely used in natural sciences, generally in conjunction with related procedures such as the calculation of the Lyapunov exponent, but its application to economic data has been problematic. The implementation of these algorithms comes with specific requirements: extensive amounts of data, which are not always available in experimental settings; stationarity of the data under investigation; and particular distributional behaviours, whereas many time series are nonlinear or non-Gaussian.
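As a hedged illustration of what a Lyapunov exponent measures, the sketch below estimates it for the logistic map, a standard textbook system used here purely as an assumption. The estimate is the long-run average of the logarithm of the local stretching rate, and a positive value indicates chaos. Note that this calculation relies on knowing the map and its derivative exactly. With empirical data neither is known and both must be estimated from the observations, which is one reason such procedures demand long, clean series.

```python
import math

def lyapunov_logistic(r, x0=0.3, transient=500, samples=5000):
    """Average of log|f'(x)| along an orbit of f(x) = r * x * (1 - x)."""
    x = x0
    for _ in range(transient):          # discard the initial transient
        x = r * x * (1.0 - x)
    total = 0.0
    for _ in range(samples):
        slope = abs(r * (1.0 - 2.0 * x))            # |f'(x)| at the current point
        total += math.log(max(slope, 1e-300))       # guard against log(0)
        x = r * x * (1.0 - x)
    return total / samples

if __name__ == "__main__":
    print("r = 4.0:", lyapunov_logistic(4.0))   # chaotic regime, positive exponent
    print("r = 2.5:", lyapunov_logistic(2.5))   # stable fixed point, negative exponent
```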

Therefore, the application of metric approaches to relatively small, noisy data sets, which are common in economics, is of dubious validity.Footnote 4 To avoid these difficulties in the metric approach, a new method for detecting deterministic chaos, called the topological approach, has been developed (Mindlin et al., 1990; Mindlin & Gilmore, 1992). The topological method has several important advantages over the metric one: it is applicable to relatively small data sets, typical in economics and finance; it is robust against noise; since the topological analysis maintains the time ordering of the data, it is able to provide additional information about the underlying system generating chaotic behaviour; and falsification is possible, as the reconstruction of the strange attractor can be verified.

The topological method of recurrence analysis can represent a useful methodology to detect non-stationarityFootnote 5 and chaotic behaviours in time series (Zbilut et al., 2000; Iwanski, 1998). At first, this approach was used to show recurring patterns and non-stationarity in time series (Cao & Cai, 2012); later, recurrence analysis was applied to the study of chaotic systems because recurring patterns are among the most important features of these systems. This methodology can reveal correlations in the data that cannot be detected in the original time series. It does not require any assumptions on the stationarity of time series, or assumptions regarding the underlying equations of motion and distributional behaviours.
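A minimal Python sketch of the mechanics of recurrence analysis follows. It embeds a scalar series with time delays, marks pairs of embedded states that lie within a chosen radius of each other (the recurrence matrix), and computes two common summary measures: the recurrence rate and the share of recurrent points lying on diagonal lines (determinism). The embedding dimension, delay, radius and the two test series are assumptions chosen for illustration. In applied work these parameters must be selected for the data at hand, and the function names are hypothetical rather than those of any particular package.

```python
import math
import random

def embed(series, dim, delay):
    """Time-delay embedding: vector i is (s[i], s[i+delay], ..., s[i+(dim-1)*delay])."""
    n = len(series) - (dim - 1) * delay
    return [[series[i + k * delay] for k in range(dim)] for i in range(n)]

def distance(u, v):
    """Euclidean distance between two embedded states."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))

def recurrence_matrix(series, dim=2, delay=1, radius=0.1):
    """Entry (i, j) is 1 when embedded states i and j are within `radius`."""
    vectors = embed(series, dim, delay)
    n = len(vectors)
    return [[1 if distance(vectors[i], vectors[j]) <= radius else 0
             for j in range(n)] for i in range(n)]

def recurrence_rate(matrix):
    """Share of recurrent pairs, excluding the trivial main diagonal."""
    n = len(matrix)
    hits = sum(matrix[i][j] for i in range(n) for j in range(n) if i != j)
    return hits / (n * (n - 1))

def determinism(matrix, min_line=2):
    """Share of recurrent points (upper triangle) on diagonal lines of length >= min_line."""
    n = len(matrix)
    in_lines = 0
    total = 0
    for offset in range(1, n):
        run = 0
        for i in range(n - offset):
            if matrix[i][i + offset]:
                run += 1
                total += 1
            else:
                if run >= min_line:
                    in_lines += run
                run = 0
        if run >= min_line:              # close a line that reaches the border
            in_lines += run
    return in_lines / total if total else 0.0

if __name__ == "__main__":
    periodic = [math.sin(2 * math.pi * t / 20) for t in range(200)]
    rng = random.Random(0)
    noise = [rng.uniform(-1.0, 1.0) for _ in range(200)]
    for name, series in (("periodic", periodic), ("noise", noise)):
        rp = recurrence_matrix(series, dim=2, delay=5, radius=0.1)
        print(name, "RR =", round(recurrence_rate(rp), 4),
              "DET =", round(determinism(rp), 4))
```

A deterministic periodic signal produces long unbroken diagonals and therefore a determinism close to one, while uncorrelated noise produces mostly isolated recurrent points. Chaotic series typically fall between the two, with many short diagonal segments.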

Recurrence analysis is particularly suitable for investigating economic time series, which are characterized by noise and lack of data and are the output of high-dimensional systems (Trulla et al., 1996). It seems especially useful in cases of modest data availability and, compared with classical approaches to analysing chaotic data, for its ability to detect bifurcations.

The basic feature of recurrence analysis is to show recurring patterns in time series, while CI is a phenomenon in which system trajectories persist for a long time in certain areas of phase space and move promptly among them. In both approaches, we are interested in detecting a return rate: of recurring states for RA, and of the orbit to the attractor for CI. The similarity between recurrence and chaotic itinerancy is therefore of interest. As suggested by Itoh and Kimoto (1997), it is possible to analyse the patterns that appear recurrently and persist for a long time in an itinerant process by recurrence analysis combined with several other plots, such as trajectory plots, local power spectra, and wavelet plots. The goal of this application is to prove the existence of preferred transition routes. This is possible if the system is not characterized by spatial symmetry; otherwise, no preferred transition routes can be observed. The combined use of the two approaches seems to be very useful for analysing many complex phenomena, such as economic ones.

Reverse Engineering of Multi-Agent System: ABM Simulation

In many fields, agent-based modelling competes with equation-based approaches in the sense that both approaches build a model that simulates the system but differ in the way the model is constructed and the form it takes. In agent-based modelling (ABM), the model is based on a set of agents that capture the behaviours of the various individuals that make up the system, and the result is an emulation of these behaviours. In equation-based modelling (EBM), the model is a set of equations and execution consists of evaluating them. For these reasons, the term “simulation” applies to both methods, which are nevertheless distinguished as emulation (based on agents) and evaluation (based on equations). Understanding the relative capabilities of these two approaches is of great ethical and practical interest to system modellers and simulators (Van Dyke Parunak et al., 1998).
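The distinction between evaluation and emulation can be sketched with a deliberately simple Python example: the spread of a new technology through a population of firms. This scenario, its parameters and all function names are assumptions made for illustration and are not taken from Van Dyke Parunak et al. The equation-based version evaluates a logistic difference equation for the aggregate number of adopters; the agent-based version emulates each firm, which adopts with some probability after meeting a randomly chosen firm that has already adopted.

```python
import random

def equation_based(population, initial, beta, periods):
    """Evaluate n[t+1] = n[t] + beta * n[t] * (1 - n[t] / population)."""
    n = float(initial)
    path = [n]
    for _ in range(periods):
        n = n + beta * n * (1.0 - n / population)
        path.append(n)
    return path

def agent_based(population, initial, beta, periods, seed=0):
    """Emulate individual firms: each non-adopter meets one random firm per period."""
    rng = random.Random(seed)
    adopted = [i < initial for i in range(population)]
    path = [sum(adopted)]
    for _ in range(periods):
        snapshot = list(adopted)        # decisions use the state at the start of the period
        for i in range(population):
            if not snapshot[i]:
                partner = rng.randrange(population)
                if snapshot[partner] and rng.random() < beta:
                    adopted[i] = True
        path.append(sum(adopted))
    return path

if __name__ == "__main__":
    ebm = equation_based(population=200, initial=5, beta=0.5, periods=60)
    abm = agent_based(population=200, initial=5, beta=0.5, periods=60)
    for t in (0, 5, 10, 20, 60):
        print(f"t={t:2d}  EBM adopters={ebm[t]:7.2f}  ABM adopters={abm[t]:3d}")
```

Both versions describe the same S-shaped diffusion, but only the agent-based one retains the identity of each firm, so heterogeneous rules, local networks or learning could be added to individual agents without rewriting an aggregate equation. That flexibility comes at the cost of stochastic output, which must be examined over many runs.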

Economic complex systems are multi-agent system, a case of exclusive use of agent modelling techniques (Axtell, 2000). For this kind of systems, writing down equations is useless and the only way available is to explore such processes systematically through agent-based computational models. A multi-agent system is a system composed by interaction “agents”, and an agent-based model uses a conceptualization of the original or reference system as a multi-agent system as basis for its model. The impressive versatility and potential of agent-based modelling in developing an understanding of industrial and labour dynamics are shown. The main attraction of agent-based models is that the actors-firms, workers and networks-which are the objects of study in the “real world”, can be represented directly in the model. This one-to-one correspondence between model agents and economic actors provides greater clarity and more opportunities for analysis than many alternative modelling approaches. However, the advantages of agent-based modelling have to be tempered by disadvantages and as yet unsolved methodological problems.

Agents can be seen as active, autonomous entities that live in an environment in which they have possibly restricted perception and local manipulation capabilities for reaching their own goals. The second major element of an agent-based simulation model is the environmental model, which forms the frame for the agents. Special attention must be paid to the interactions between the agents. The main examples of real-world multi-agent systems are human or animal societies; therefore, the major application area of agent-based simulation is social science. Meanwhile, agent-based software, whose core structure is based on the conceptualization of a multi-agent system, is hoped to provide the next major abstraction for software engineering as a natural continuation of object-oriented software (Jennings & Wooldridge, 2000). More than the results, what matters is the ability to explain the observed phenomena, and to decide whether or not to use an ABM we must first analyse the characteristics of economic systems. A range of techniques exists to develop, analyse and maintain feature models, and RE techniques can be used to support their extraction (Junges & Klügl, 2012).

Four aspects are relevant for capturing the notion of multi-agent systems and agent-based software: the agents, the multi-agent system they form, their interactions, and the environment in which they are situated (Gilbert & Troitzsch, 2005).

Recall the definition of a complex adaptive system (CAS) (Hadzikadic & Avdakovic, 2017): a system composed of a large number of agents that interact with each other in a non-trivial manner and yield emergent behaviours. Each of these agents operates with a set of simple rules as its internal model of the global system and produces outcomes using simple rules that are part of this model (Sayama, 2015).

The internal model refers to the mechanisms used by an agent to respond to a given stimulus and to learn new rules through interaction with its surrounding agents. Analytical approaches work well with “goal-seeking systems”, since it is possible to divide these systems into their small component parts, analyse the function of those parts, and then try to explain the behaviour of the aggregate system in terms of the interactions among them (Gharajedaghi, 2011). In many cases, these interactions can be described using systems of equations, and a mathematical model of the system can be produced.

In complex systems, the vast number and variety of possible interactions among agents often make analytical methods impossible or impractical to use. Attempting to determine systems of equations capable of describing their dynamics and capturing all of the possible reactions produces an intractable or unsolvable system. Agent-based models (ABMs) are computational models that enable us to understand how different combinations of large numbers of agents produce global outcomes through their local interactions.

An ABM is a representation of the constituent agents of a system with a mechanism to enable agents to interact through information exchange with the environment as well as other agents. These agents act according to rules that attempt to approximately replicate the properties and behaviours of the actual components in the real world.
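The ingredients just listed (agents, an environment, rules, and interaction) can be made concrete with a very small sketch. The Python code below is only an illustration under stated assumptions: the `Trader` class, the fixed-transfer rule, and every parameter value are hypothetical and are not taken from any model discussed in this paper.

```python
import random
from dataclasses import dataclass


@dataclass
class Trader:
    """Hypothetical agent: holds wealth and follows one simple local rule."""
    wealth: float

    def step(self, partner: "Trader", environment: dict) -> None:
        # Rule: hand a fixed amount to the partner met this round, if affordable.
        amount = environment["transfer"]
        if self.wealth >= amount:
            self.wealth -= amount
            partner.wealth += amount


def run(n_agents: int = 100, n_steps: int = 1000, seed: int = 0) -> list:
    """Run the toy model and return the sorted final wealth of all agents."""
    rng = random.Random(seed)
    environment = {"transfer": 1.0}  # shared state that every agent can read
    agents = [Trader(wealth=10.0) for _ in range(n_agents)]
    for _ in range(n_steps):
        giver, receiver = rng.sample(agents, 2)  # random pairwise interaction
        giver.step(receiver, environment)
    return sorted(a.wealth for a in agents)


wealth = run()
print("poorest:", wealth[0], "richest:", wealth[-1], "total:", sum(wealth))
```

Total wealth is conserved by construction, while its distribution across agents is an aggregate outcome that is nowhere written into the individual rule.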

The results of these models are sensitive to initial conditions and may differ from run to run, in accordance with the inherent randomness of the phenomena that they try to reproduce. Statistical or mathematical methods may be used to analyse the outputs of ABMs and to verify how accurately they reproduce the corresponding real-world system.

Because of these features of complex systems, however, it is in many cases very difficult to formalize mathematical models that represent the same variety of nonlinear emergent outcomes, and ABMs are one of a handful of tools useful for exploring the emergent behaviour of such systems.

Interestingly, because ABM generates emergent phenomena from the bottom up, it raises the question of what constitutes an explanation of, and a reason for, such a phenomenon. Research is moving towards a new way of dealing with socio-economic phenomena, not from a traditional modelling perspective but from the perspective of reverse engineering the entire analysis process.

The first question of all is as follows: what are the main advantages of agent-based computational modelling over conventional mathematical theorizing?

Compared with traditional simulation approaches, agent-based simulation is a modelling and simulation approach that provides many advantages. The general approach imposes few constraints: there are no restrictions on the possible design, and there is no need for homogeneous populations, homogeneous space, or strictly local, uniform interactions. The modeller is free to include in the model everything that is necessary; heterogeneous populations with variable structures can be included, in addition to complex and rich spatial representations. Agent-based modelling can represent low-level dynamics and behaviour in a dynamic environmental context. All these considerations explain why agent-based simulation makes it possible to treat problems that could not be treated appropriately before. Examples of such systems are emergent, self-organizing phenomena and complex organizational models (Junges & Klügl, 2012).

First of all, it is easy to limit agent rationality in agent-based computational models. Second, even if one wishes to use completely rational agents, it is a trivial matter to make agents heterogeneous in agent-based models. Third, since the model is “solved” merely by executing it, the result is an entire dynamical history of the process under study. That is, one need not focus exclusively on the equilibria, should they exist, for the dynamics are an inescapable part of running the agent model. Finally, in most social processes, either physical space or social networks matter, and both are straightforward to represent in an agent-based model.

On the other hand, the main disadvantage is that a single run does not provide any information on the robustness of the results (Axtell, 2000). It is difficult to infer for which parameter changes a given result no longer holds. We cannot use simple differentiation, the implicit function theorem, comparative statics, and so on. Therefore, the only way to treat this problem is through multiple runs, systematically varying initial conditions or parameters in order to assess the robustness of the results.
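The multiple-run procedure can be sketched as follows. The word-of-mouth model, the function names `final_adopter_share` and `sweep`, and all parameter values are hypothetical choices made for this illustration only; the point is the pattern of repeating runs over many random seeds for each parameter value and summarising the spread.

```python
import random
import statistics


def final_adopter_share(p_adopt, n_agents=200, n_steps=2000, seed=0):
    """One run of a hypothetical word-of-mouth model; returns the adopter share."""
    rng = random.Random(seed)
    adopted = [False] * n_agents
    adopted[0] = True  # a single initial adopter
    for _ in range(n_steps):
        i, j = rng.sample(range(n_agents), 2)  # random meeting of two agents
        # If exactly one of the two has adopted, the other may adopt as well.
        if adopted[i] != adopted[j] and rng.random() < p_adopt:
            adopted[i] = adopted[j] = True
    return sum(adopted) / n_agents


def sweep(p_values, n_runs=30):
    """Repeat the run over many seeds for each parameter value."""
    results = {}
    for p in p_values:
        shares = [final_adopter_share(p, seed=s) for s in range(n_runs)]
        results[p] = (statistics.mean(shares), statistics.stdev(shares))
    return results


for p, (mean, sd) in sweep([0.1, 0.3, 0.9]).items():
    print(f"p_adopt={p}: mean share={mean:.3f}, sd across runs={sd:.3f}")
```

The standard deviation across seeds gives a first indication of how far a conclusion drawn from one run can be trusted, and comparing the means across parameter values plays the role that comparative statics plays in an analytical model.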

As noted above, agent-based modelling (ABM) competes with equation-based modelling (EBM). The question is methodologically important because the choice of technology should be driven by its adequacy for the modelling task.

The main differences between ABM and standard economic theory concern the importance of network structure in forming agents’ values and behaviours, the reliance on averages and the assumption of agent homogeneity, and the connection between micro behaviours and macroeconomic aspects.

According to standard economic theory, what agents do depends on their values and available information. Nevertheless, standard theory typically ignores where values and information come from. It treats agents’ values and information as exogenous and autonomous. In reality, agents learn from each other, and their values may be influenced by values and actions of individuals that pertain to the same network. These processes of learning and influencing happen through the social interaction networks in which agents are embedded, and they may have important economic consequences (Arthur et al., 1997).

Unlike the standard approach, ABM directly connects micro behaviours and macroeconomic aspects. Agents interact directly with one another, and social macrostructure emerges from these interactions. How, then, does ABM relate to traditional simulation techniques?

In most application fields of agent-based simulation, modelling and simulation were acknowledged as useful tools before the introduction of the agent paradigm. In all these fields, successful macro and micro simulations were developed using, among other techniques, partial differential equations and macroscopic statistical models based on global equilibrium approaches, while models such as discrete choice models are run as individual-level simulations. Thus, we must argue why multi-agent simulation offers more, in addition to comparing agent-based simulations with macro-level equation-based simulations (Klügl & Bazzan, 2012; see footnote 6).

Consequently, the two approaches differ in the form of the model and how it is executed, in the fundamental relationships among entities that they model, and in the level at which they focus their attention.

Entities that they model: both approaches recognize two kinds of entities, individuals and observables, each with a time dimension.

Individuals: These are bounded, active regions of the domain (active in the sense of having behaviours). The boundaries that set individuals apart may be physical (as with people) or more abstract (organizational boundaries, such as those of a business firm or an institution).

Observables: These are measurable characteristics of interest whose values change over time. They may be associated either with separate individuals (e.g., the income of an individual) or with the collection of individuals as a whole (e.g., the income of an area).

An ABM can explore the dynamics of a network while maintaining a given set of conditions for as long as desired, which permits the collection of statistically relevant time series data. Though artificial, this environment allows us to explore the dynamic nature of the network and can lead to important insights of great practical significance.

An EBM does not show many of the effects that we observe in the ABM and in real supply networks, including the memory effect of backlogged orders, transition effects, or the amplification of flow variation.

Relationships on which they focus attention: EBM begins with a set of equations that express (direct) relationships among observables and produce the evolution of the observables over time (see footnote 7). Observables are related to one another by equations. ABM begins with behaviours through which individuals interact with one another. These behaviours may involve multiple individuals directly or indirectly through a shared resource. The modeller considers the behaviour of each individual and takes account of the levels of observables, but these are only the result of the modelling and simulation activity (Mihm et al., 2003). Direct relationships among the observables are not an input of the process.

The level at which the model focuses: EBM tends to make extensive use of system-level observables (top-down approach). ABM tends to define agent behaviours in terms of observables accessible to the individual agent (bottom-up approach). In this latter case, the evolution of system-level observables does emerge from the agent-based model, but the modeller does not use these observables explicitly to drive the model’s dynamics, as happens in equation-based modelling.
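The contrast between the two starting points can be illustrated on a single toy process. In the sketch below, which is an assumption-laden illustration and not a model from the cited literature, the observable is the share of adopters of some product. The function `ebm_path` iterates a logistic difference equation written directly on that share, whereas `abm_path` specifies only what each individual does and measures the share afterwards. All names and parameter values are hypothetical.

```python
import random


def ebm_path(beta, x0, n_steps):
    """EBM: iterate an equation that links the system-level observable to itself."""
    path = [x0]
    for _ in range(n_steps):
        x = path[-1]
        path.append(x + beta * x * (1.0 - x))
    return path


def abm_path(beta, x0, n_steps, n_agents=500, seed=0):
    """ABM: only individual behaviour is specified; the share is measured afterwards."""
    rng = random.Random(seed)
    n_initial = max(1, round(x0 * n_agents))
    adopted = [i < n_initial for i in range(n_agents)]
    path = [sum(adopted) / n_agents]
    for _ in range(n_steps):
        new_state = adopted[:]  # synchronous update of all agents
        for i in range(n_agents):
            if not adopted[i]:
                partner = rng.randrange(n_agents)  # meet one random agent
                if adopted[partner] and rng.random() < beta:
                    new_state[i] = True
        adopted = new_state
        path.append(sum(adopted) / n_agents)
    return path


ebm = ebm_path(beta=0.3, x0=0.02, n_steps=40)
abm = abm_path(beta=0.3, x0=0.02, n_steps=40)
for t in (0, 10, 20, 40):
    print(f"t={t:2d}  EBM share={ebm[t]:.3f}  ABM share={abm[t]:.3f}")
```

Under random mixing the expected one-step change in the agent-based version matches the equation, so the two paths should look broadly similar; the ABM additionally produces run-to-run fluctuations, and its meeting rule could be replaced by a network or a spatial rule without rewriting any aggregate equation.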

Of course, the two approaches can be combined (Fishwick, 1995); behavioural decisions (Borgonovo & Smith, 2011) may be driven by the evaluation of equations over particular observables. In fact, while agents can embody arbitrary computational processes, some equation-based systems are also computationally complete.

Summing up, several aspects are hard to represent and to treat with standard modelling approaches. Agent-based simulation in particular provides appropriate tools for handling such systems and therefore makes it possible to apply simulation to research questions that could not be answered before.

The agent-based approach promises to be advantageous in the following cases:

Individual behaviour is nonlinear, and discontinuities in individual behaviour are difficult to describe with differential equations. Moreover, individual behaviour may show path dependence, memory, non-Markovian behaviour, or temporal correlations, including learning and adaptation. The same holds for systems whose dynamics arise from flexible and local interaction, for example when social models are coupled with environmental dynamics and heterogeneous space, since the interactions of agents are heterogeneous and can produce network effects. In this situation, aggregate flow equations usually assume global homogeneous mixing, but the topology of the interaction network can lead to deviations from the expected aggregate behaviour.

Multi-level systems require the ability to observe more than one level. Especially when there is no explicit connection between the different levels, this means that emergent phenomena are to be analysed.

In this kind of system, decision making happens at different levels of aggregation, micro and macro (individual vs aggregate), and feedback loops influence the individual as well as the aggregate levels.

We speak of systems that include adaptive and interaction processes at the individual level or, through evolutionary processes, at aggregate levels. Analysing the transient dynamics of these systems means that the object of interest is not the stationary equilibrium but the phenomena and behaviour that drive them. This is why averages do not work: aggregate differential equations tend to smooth out fluctuations, whereas ABM does not. It is important to highlight this aspect because, under certain conditions, fluctuations can be amplified: the system is linearly stable but unstable to larger perturbations (Junges & Klügl, 2012).
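The last remark, that a system can be linearly stable and yet unstable to larger perturbations, can be illustrated with a one-dimensional toy system. The equation dx/dt = -x + x^3 is chosen here purely as an assumption for illustration and does not come from the cited work: its equilibrium x = 0 is linearly stable, but any perturbation larger than 1 in absolute value grows without bound. The function name `simulate` and its parameters are likewise hypothetical.

```python
def simulate(x0, dt=0.01, n_steps=2000, escape=10.0):
    """Euler integration of dx/dt = -x + x**3, an illustrative system chosen here."""
    x = x0
    for _ in range(n_steps):
        x += dt * (-x + x**3)
        if abs(x) > escape:  # the trajectory has left the basin of x = 0
            return x
    return x


small = simulate(0.5)  # small perturbation: the trajectory decays towards 0
large = simulate(1.2)  # larger perturbation: the trajectory is amplified
print(f"start 0.5 -> {small:.2e}   start 1.2 -> {large:.2f}")
```

An aggregate description linearised around the equilibrium would report stability in both cases, which is why fluctuations of individual size can matter for the aggregate outcome.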

Conclusions

What are the challenges of RE? In theory, RE would be a function that acts on a dynamic system and produces a static model as its output. One of the big challenges is that the dynamic system has to be observed in very fine detail.

Other challenges involve connecting the complex systems with the expected high-level understanding, which again is a difficult task.

Reverse engineering can help us infer, understand and analyse the mechanisms of complex systems. In this sense, modelling is a systematic way to efficiently encapsulate our current knowledge of these systems. However, the value of models can (and should) go beyond their explanatory value: they can be used to make predictions and also to suggest new questions and hypotheses that can be tested (Villaverde & Banga, 2014).

Gaining a deep understanding at the system level is hard, because system optimization can happen despite non-optimality for a given function at the sub-system level.

The characteristics of complex economic systems imply that RE should start from the assumption that more attention must be paid to individual behaviours and to their interconnections and interactions. Hence, the limits of a pure bottom-up approach, for which integration with the top-down approach has been suggested, are stronger in economics.

However, integrating the bottom-up and top-down approaches does not seem to be sufficient. The possible appearance of more complex behaviours, dynamical multiple equilibria, and dynamical chaos suggests using the new approach of chaotic itinerancy, both as a methodology and as a tool. As a tool, it allows the discovery and analysis of a more general chaotic behaviour, based on itinerant orbits over attractor ruins, that could otherwise appear to be random.

The present state of the literature highlights that the basic tools that could be used to implement each single methodology are either insufficient for our analysis or impossible to apply to an economic system. In fact, the tools able to deal with chaos are generally not “efficient” in the case of real economic systems, because they require more data than are usually available in economics. However, recurrence analysis seems able to overcome this data availability problem. This tool has also been used to detect chaotic itinerancy (CI) in time series. Therefore, we propose to use it for dealing with CI.

ABM simulation tools are centred on spatial rather than economic interrelations. They do not permit a direct integration between the behaviour of microeconomic agents and macroeconomic observables. In particular, the microeconomic behaviour considered does not always respect economic constraints (such as the budget constraint). Too often, each agent is relegated to a single market action, such as buying or selling. As a result, the possibility that a consumer agent will buy goods only if he is able to sell his labour is not considered. Therefore, even if these models present strong methodological differences from traditional EBM economic modelling, they are not able to take into account one of the specific causes of economic complexity: rationing and the interrelations between supply and demand.

These two proposed tools might be considered the basis for the future integration between the top-down and bottom-up methodologies that we are pursuing. The next important step will be the preparation of an integrated shell able to evaluate the chaotic itinerancy of a simulated system and compare it with the corresponding dynamics of a real reference system.

As outlined above, one of the more important outcomes of the effort to study and model complex economic dynamics has been a shift of methods as well as of tools.

The characterization of economic systems as complex systems, and the necessity to consider not only how complex behaviours emerge from simple rules (top-down approach) but also how the rules arise from dynamic behaviours (bottom-up approach), call for new methodologies and tools.

The research perspectives from different areas examined in this work clearly show overlaps and converging ideas, and different insights can be extracted from them. In our opinion, the first inference is that modelling should start with the questions associated with the intended use. These questions will also help us choose the level of description that needs to be selected. Our research must continue in this direction and develop theories and tools that have important implications for the identification and reverse engineering of complex systems.

Notes

It is actually impossible in theory to determine exactly what the hidden mechanism is without opening the box since there are always many different mechanisms with identical behaviour. Quite apart from this, analysis is more difficult than invention in the sense in which, generally, induction takes more time to perform than deduction: in induction, one has to search for the way, whereas in deduction one follows a straightforward path (Kaneko & Tsuda, 1994).

“Constructivism [...] is the thesis that knowledge cannot be a passive reflection of reality, but is more of an active construction by agent” (Kaneko & Tsuda, 1994).

“[...] A transition through chaotic itinerancy is topologically quite different from a transition resulting from noise in multi-attractor systems. [...] In the latter, which has been dealt with in previous studies, the external noise is necessary to obtain the transitions. On the other hand, in the former, the entire phase space is decomposed into several subspace, and in each subspace the system is stable, as characterised by Lyapunov exponents within each subspace, but in a direction normal to a subspace the system is unstable as characterised by the “normal” Lyapunov exponents. Since for each subspace the normal Lyapunov exponent is positive, the set representing an asymptotic state of the dynamics restricted to each subspace is unstable, and thus it is not an attractor in the conventional sense. It is, however, a Milnor attractor.” (Tsuda, 2001, p. 29). See also Tsuda (2013).

For the problem associated with metric approaches implementation, see Gilmore (1993).

“[…] system properties that cannot be observed using other linear and non linear approaches and is specially useful for analysis of non stationarity systems with high dimensional and /or noisy dynamics” (Holyst et al. 2000).

Klügl, Franziska & Bazzan, Ana (2012). Agent-Based Modeling and Simulation. AI Magazine. 33. 29–40. 10.1609/aimag.v33i3.2425.

The equations may be algebraic, ordinary differential equations (ODEs), as used in system dynamics to capture variation over time, or partial differential equations (PDEs), which capture variation over time and space. The modeller may recognize that these relationships result from the interlocking behaviours of the individuals, but those behaviours have no explicit representation in EBM.

References

Arthur, W. B., Durlauf, S. N., & Lane D. (1997). The economy as an evolving complex System II, Proceedings Volume XXVII – Santa Fe Institute; Perseus Book Publishing, Reading, Massachusetts.

Axtell, R. (2000). Why agents? On the varied motivations for agent computing in social sciences - Center on Social and Economic Dynamics Working Paper No. 17 November, web: http://www.brook.edu/es/dynamics.

Barzel, B., Liu, Y. Y., & Barabási, A. L. (2016). Constructing minimal models for complex system dynamics. Nature Communications, 6, 1–8. https://doi.org/10.1038/ncomms8186

Benso, A., Di Carlo, S., Politano, G., Savino, A., & Bucci, E. (2014). Alice in “Bio-Land”: Engineering challenges in the world of life sciences, in IT Professional, vol. 16, no. 4, pp. 38–47, July-Aug.

Borgonovo, E., & Smith, C. L. (2011). A study of interactions in the risk assessment of complex engineering systems: An application to space PSA. Operations Research, 59(6).

Buraschi, A., Trojani, F., & Vedolin, A. (2013). Economic uncertainty, disagreement, and credit markets. Management Science, 60(5).

Cai, M., & Cao, H. (2012). Bifurcations of periodic orbits in Duffing equation with periodic damping and external excitations. Nonlinear Dynamics, 70(1), 453–462.

Davis, K. H., & Alken, P. H. (2000). Data reverse engineering: A historical survey. Proceedings Seventh Working Conference on Reverse Engineering, Brisbane, Queensland, Australia, 2000, 70–78.

Dautenhahn, K. (2000). Reverse engineering of societies -A biological perspective, in Edmonds, B. and Dautenhahn, K. Proceedings of the AISB'00 Symposium on Starting from Society - The Application of Social Analogies to Computational Systems.

Eldad, E. (2005). Reversing: secrets of reverse engineering. John Wiley & Sons. ISBN 978–0–7645–7481–8.

Fishwick, P. A. (1995). Simulation model design and execution: Building digital worlds. Englewood Cliffs.

Gharajedaghi, J. (2011). Systems thinking: managing chaos and complexity: A platform for designing business architecture. Elsevier.

Gilbert, N., & Troitzsch, K. (2005). Simulation for the social scientist. Open University Press.

Gilmore, C. G. (1993). A new test for chaos. Journal of Economic Behavior & Organization, 22, 209–237.

Grassberger, P., & Procaccia, I. (1983a). Characterization of strange attractors. Physical Review Letters, 50, 346–349.

Grassberger, P., & Procaccia, I. (1983b). Measuring the strangeness of strange attractors. Physica D: Nonlinear Phenomena, 9, 189–208.

Hadzikadic, M., Avdakovic, S. (Eds) (2017). Advanced technologies, systems, and applications, lecture notes, networks and systems 3. Springer International Publishing. https://doi.org/10.1007/978-3-319-47295-9_1

Haxholdt, C., Larsen, E. R., & van Ackere, A. (2003). Mode locking and chaos in a deterministic queueing model with feedback. Management Science, 48(8).

Hołyst, J. A., Kacperski, K., & Schweitzer, F. (2000). Phase transitions in social impact models of opinion formation. Physica A, 285, 199–210.

Ikeda, K., Otsuka, K., & Matsumoto, K. (1989). Maxwell-Bloch turbulence. Progress of Theoretical Physics Supplement, 99, 295–324.

Itoh, M., & Kimoto, M. (1997). Chaotic itinerancy with preferred transition routes appearing in an atmospheric model. Physica D: Nonlinear Phenomena, 109, 274–292.

Iwanski, J. S., & Bradley, E. (1998). Recurrence plots of experimental data: To embed or not to embed?, Chaos, 8, 861–871. http://www.cs.colorado.edu/users/lizb/papers/iwanski-chaos98.pdf

Jennings, N.R., & Wooldridge, M. (2000). Agent-Oriented Software Engineering. Artificial Intelligence, 117, 277–296.

Junges, R., & Klügl, F. (2012). How to design agent-based simulation models using agent learning. Proceedings of the Winter Simulation Conference, 1–10. https://ieeexplore.ieee.org/document/6465017

Kaneko, K., & Tsuda, I. (1994). Constructive complexity and artificial reality: An introduction. Physica D, 75, 1–10.

Kirby, M. W. (2007). Paradigm change in operations research: Thirty years of debate. Operations Research, 55(1).

Kozma, R. (2001). Fragmented attractor boundaries in the KIII model of sensory information processing -- A potential evidence Cantor encoding in cognitive processes. Behavioral and Brain Sciences, 24(5), October, pp. 820–821.

Klügl, F., & Bazzan, A. (2012). Agent-based modeling and simulation. AI Magazine, 33, 29–40. https://doi.org/10.1609/aimag.v33i3.2425

Mihm, J., Loch C., & Huchzermeier, A. (2003). Problem–solving oscillations in complex engineering projects. Management Science, 49(6).

Milnor, J. (1985). On the concept of attractor. Communications in Mathematical Physics, 99, 177–195.

Mindlin, G. B., Hou, X. J., Solari, H. G., Gilmore, R., & Tufillaro, N. B. (1990). Classification of strange attractors by integers. Physical Review Letters, 64, 2350–2353.

Mindlin, G. B., & Gilmore, R. (1992). Topological analysis and synthesis on chaotic time series. Physica D: Nonlinear Phenomena, 58, 229–242.

Ott, E., Grebogi, C., & Yorke, J. A. (1990). Controlling chaos. Physical Review Letters, 64(11), 1196–1199.

Sayama, H. (2015). Introduction to the modeling and analysis of complex systems. Open SUNY Textbooks.

Senge, P. (1990). The fifth discipline. Doubleday.

Stacey, R. (1992). Managing the unknowable. Jossey-Bass.

Tranouez, P., Prevost, G., Bertelle, C., & Olivier, D. (2005). Simulation of a compartmental multiscale model of predator-prey interactions. Applications and Algorithms.

Trulla, L. L., Giuliani, A., Zbilut, J. P., & Webber Jr, C. L. (1996). Recurrence quantification analysis of the logistic equation with transients. Physics Letters A, 223(4), 255–260.

Tsuda, I. (2001). Towards an interpretation of dynamic neural activity in terms of chaotic dynamical systems. Behavioral and Brain Sciences, 24(5), 575–628.

Tsuda, I. (2013). Chaotic itinerancy. Scholarpedia, 8(1), 4459. http://www.scholarpedia.org/article/Chaotic_itinerancy

Wang, W. X., Lai, Y. C., & Grebogi, C. (2016). Data based identification and prediction of nonlinear and complex dynamical systems. Physics Reports, 644, 1–76.

Van Dyke Parunak H., Savit R., & Riolo, R.L. (1998). Agent-based modeling vs. equation-based modeling: A case study and users’ guide. In: Sichman J.S., Conte R., Gilbert N. (eds) Multi-agent systems and agent-based simulation. MABS 1998. Lecture Notes in Computer Science, vol 1534. Springer, Berlin, Heidelberg. https://doi.org/10.1007/10692956_2

Villaverde, A. F., & Banga, J. R. (2014). Reverse engineering and identification in systems biology: strategies, perspectives and challenges. Journal of the Royal Society, Interface, 11, 20130505.

Zbilut, J. P., Giuliani, A., & Webber Jr, C. L. (2000). Recurrence quantification analysis as an empirical test to distinguish relatively short deterministic versus random number series. Physics Letters A, 267, 174–178.

Funding

Open access funding provided by Università degli Studi di Salerno within the CRUI-CARE Agreement.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Faggini, M., Bruno, B. & Parziale, A. Toward Reverse Engineering to Economic Analysis: An Overview of Tools and Methodology. J Knowl Econ 13, 1414–1432 (2022). https://doi.org/10.1007/s13132-021-00770-5

DOI: https://doi.org/10.1007/s13132-021-00770-5