Abstract

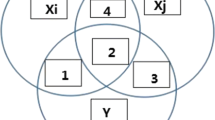

Feature selection achieves dimensionality reduction by selecting a subset of effective features from the original feature set. However, most conventional methods do not accurately describe the various correlations between features, or how those correlations change dynamically during the selection process. This leads to an incomplete definition of the evaluation function and degrades classification accuracy. In this study, a dynamic feature selection method combining standard deviation and interaction information (DFS-SDII) is proposed. In DFS-SDII, conditional mutual information is introduced to measure changes in the importance of the selected features for classification. Interaction information is then used to measure the synergy between the candidate and selected features. In addition, when candidate features receive the same score, standard deviation is used to select the candidate with higher importance to the class. To evaluate the performance of DFS-SDII, it is compared with nine state-of-the-art feature selection methods on 16 benchmark data sets in terms of classification accuracy and F-measure. The experimental results show that the proposed method outperforms the compared methods in feature selection and achieves higher classification accuracy.
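The two information-theoretic quantities named above can be illustrated with a minimal sketch for discrete data. It uses the standard textbook definitions and assumes the sign convention in which positive interaction information indicates synergy. It does not reproduce the DFS-SDII evaluation function or the standard deviation tie-break, whose exact forms are not given in the abstract. All function names are hypothetical.

```python
from collections import Counter
from math import log2


def entropy(*columns):
    """Joint Shannon entropy (in bits) of one or more discrete columns."""
    joint = list(zip(*columns))
    n = len(joint)
    return -sum((c / n) * log2(c / n) for c in Counter(joint).values())


def mutual_information(x, y):
    """I(X;Y) = H(X) + H(Y) - H(X,Y)."""
    return entropy(x) + entropy(y) - entropy(x, y)


def conditional_mutual_information(x, y, z):
    """I(X;Y|Z) = H(X,Z) + H(Y,Z) - H(X,Y,Z) - H(Z)."""
    return entropy(x, z) + entropy(y, z) - entropy(x, y, z) - entropy(z)


def interaction_information(x, y, z):
    """I(X;Y;Z) = I(X;Y|Z) - I(X;Y).

    Assumed sign convention: positive means synergy, negative means redundancy.
    """
    return conditional_mutual_information(x, y, z) - mutual_information(x, y)


# Toy data (illustrative only): the class is the XOR of two binary features.
candidate = [0, 0, 1, 1]
selected = [0, 1, 0, 1]
label = [a ^ b for a, b in zip(candidate, selected)]

# Alone, the candidate carries no information about the class ...
relevance = mutual_information(candidate, label)
# ... but given the selected feature it determines the class completely.
conditional_relevance = conditional_mutual_information(candidate, label, selected)
synergy = interaction_information(candidate, selected, label)
print(relevance, conditional_relevance, synergy)
```

In this XOR example the candidate looks irrelevant when scored on its own, yet the interaction term is positive, which is the kind of synergy that a criterion based only on pairwise mutual information would miss.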

Acknowledgements

This work was supported by the National Key Research and Development Program of China (No. 2021YFF0704101, No. 2020YFC2003502), the National Natural Science Foundation of China (No. 62276038, No. 61876201), the Natural Science Foundation of Chongqing (cstc2019jcyj-cxttX0002, cstc2021ycjh-bgzxm0013), the Key Cooperation Project of Chongqing Municipal Education Commission (HZ2021008), and the Doctoral Talent Training Program of Chongqing University of Posts and Telecommunications (No. BYJS202109).

About this article

Cite this article

Wu, P., Zhang, Q., Wang, G. et al. Dynamic feature selection combining standard deviation and interaction information. Int. J. Mach. Learn. & Cyber. 14, 1407–1426 (2023). https://doi.org/10.1007/s13042-022-01706-4

DOI: https://doi.org/10.1007/s13042-022-01706-4