Abstract

Concept Mining is one of the main challenges both in Cognitive Computing and in Machine Learning. The ongoing improvement of solutions to address this issue raises the need to analyze whether the consistency of the learning process is preserved. This paper addresses a particular problem, namely, how the concept mining capability changes when the hypothesis class is reconsidered. The issue is raised from the point of view of the so-called Three-Way Decision (3WD) paradigm, which provides a sound framework to reconsider decision-making processes, including those assisted by Machine Learning. Thus, the paper aims to analyze the influence of 3WD techniques on the Concept Learning Process itself. For this purpose, we introduce new versions of the Vapnik-Chervonenkis dimension. Likewise, to illustrate how the formal approach can be instantiated in a particular model, the case of concept learning in (Fuzzy) Formal Concept Analysis is considered.


1 Introduction

Concept mining deals with the extraction of concepts from artifacts such as data, traces of behavior, or collections of unstructured data, among others. In a broad sense, the task should be understood as a pattern recognition problem on source data. Its resolution requires Artificial Intelligence and Statistics techniques (such as data mining, text mining, and variants of statistical learning), but also input from other scientific fields such as Cognitive Computing or Psychology.

When faced with this task, the researcher must bear in mind that the notion of concept is itself subject to various interpretations, some of them tailored to the nature of a particular problem. The notion is not limited to concept extraction based on linguistic patterns (e.g., using WordNet [44]). One can also address the problem of extracting concepts with slightly more formalized semantics, for instance with Formal Concept Analysis (see a survey of related issues in [39]), or even at the level of Semantic Web technologies [17].

There are several issues related to Concept Mining, such as the granularity of the conceptual structure obtained, the richness of the concept repertoire, and the treatment of uncertainty. The latter affects the extensionality of the extracted concepts or, equivalently, the problem of deciding concept membership. Decision-making in Concept Mining may be essentially different from other decision-making tasks, since resolving the uncertainty would, in practice, solve the problem itself, namely the concept specification. For example, treating concept mining by means of fuzzy methods (e.g., [42]) allows the problem to be posed in such a way that general-purpose solutions for uncertainty processing can be applied. It is in this respect that Concept Mining relates to approaches for shaping decision regions within the data space, such as the so-called Three-Way Decisions (3WD) research paradigm.

The 3WD paradigm has emerged as a framework to address the challenges related to decision-making processes [22, 32, 61, 63, 66]. The basic idea underlying 3WD is that the domain of a decision-making problem is intrinsically partitioned into two regions. The first one, the decided data, comprises those inputs for which the decision problem has been solved; it is in turn split into the set of inputs for which the answer is positive and the set of inputs with negative output. The second region (the boundary region) comprises all data for which the decision to be made is, for the moment, unknown. The analysis of this partition precedes the second problem to address: the design of strategies for the three resulting regions (see Fig. 3).

The 3WD paradigm aims to bridge ideas between different approaches, ranging from well-established fields such as Granular Computing [65] to human problem-solving skills [62, 64]. Solutions based on 3WD techniques capture different ways of understanding both the decision process and the (ontological) nature of the positive, negative, and boundary (undecided, uncertain) sets. Among other approaches, these include techniques from Rough Set Theory [66], assessment of thresholds and determination of decisions [49], interval-based evaluations [36], and extensions of Formal Concept Analysis [47, 66, 68, 71]. 3WD is inspiring new ways of managing decision-making that broaden the horizon of its applicability [5].

Focusing on a topic closer to that of the paper, namely the consistency of Concept Mining from datasets, there are several 3WD applications in Data Science and related fields (e.g., [40, 58]). These cover topics such as foundations [5, 30, 62], the enrichment of Machine Learning (ML) processes [54], applications in the presence of uncertainty or absence of data/information [1], semantic tagging and sentiment analysis [24, 69, 70], and incremental concept learning [68].

1.1 Related work on 3WD foundations of Concept Learning in FCA

Roughly speaking, efforts focused on formalizing concept learning processes within the 3WD paradigm can be classified into two categories: those that design the formalism from 3WD principles, such as [23, 29], and those that exploit the similarities with 3WD of other frameworks in which these processes can be formalized, such as Formal Concept Analysis or Concept Graphs [19, 27].

The first type of approach is rooted in 3WD principles. An example is the notion of Three-Way Cognitive Concept Learning, introduced through the so-called three-way cognitive operator [29]. This approach is framed within a multi-granularity context: it starts with a partition of the attributes according to the different ways of deciding data. The cited paper details the basic requirements for such an operator (an axiomatic approach), specified by means of a pair of mappings relating, in both directions, the three-way decisions and the sets of the attribute partition. From the axioms, a concept under such mappings is defined as a pair \((\langle X, Y \rangle , B)\), formed by a 3WD decision (X and Y being the positive and the negative region, resp.) and a subset B of the attribute partition, satisfying two closure conditions. The first states that \(\langle X, Y \rangle\) is the most effective decision for the multi-decision set represented by B. The second states that B contains all decision problems for which \(\langle X, Y \rangle\), or another less effective 3WD decision, is a solution. Two particularities of the theory developed in that work are that the decision thresholds for each function are set as initial parameters (thus fixing in advance the positive and negative sets for 3WD reasoning), and that only non-contradictory three-way decisions are considered (which is natural for learning in a multi-decision context). The framework is also useful to formalize the dynamics and evolution of the 3WD decision (depending on the variations of the information), as well as to establish, from a fusion viewpoint, how 3WD cognitive concept learning should proceed [23]. The idea guiding learning in the latter is to find the best approach to the decision problem across the multiple granularities [23, 29].

Concerning the second approach mentioned above (FCA and related frameworks as the source of Concept Learning formalization), the two cited works [19, 27] develop concept learning approaches in FCA. These can be seen as extensions of Kuznetsov's foundational work [26]. The idea can be read in 3WD terms, since the attribute to be learned classifies the objects of G (the object set in FCA) as positive \(G_+\), negative \(G_-\), and undetermined \(G_{\tau }\) (the so-called boundary region in 3WD). The partition of the object set induces three formal contexts that, combined, allow one to define the version space from which the hypothesis (the consistent classifier) is selected, as well as to characterize different types of sound classifiers. The approach in [27] further generalizes the idea to conceptual graphs, which are not necessarily endowed with a lattice structure, although they are partially ordered by a specialization relationship that gives rise to a concept lattice on sets of graphs.
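To make the version-space idea more concrete, here is a minimal Python sketch, assuming the JSM-style definition commonly used in Kuznetsov's framework: a positive hypothesis is an intent of the positive context (the attributes shared by some non-empty group of positive examples) that is not contained in the description of any negative example. The toy examples, attribute names, and helper functions are hypothetical; undetermined examples from \(G_{\tau }\) would then be classified by checking which hypotheses their descriptions contain.

```python
from itertools import combinations

# Hypothetical toy data: each example is described by its set of attributes.
positive = {"g1": {"a", "b", "c"}, "g2": {"a", "b", "d"}, "g3": {"b", "c", "e"}}
negative = {"h1": {"a", "b", "d"}, "h2": {"c", "d", "e"}}


def positive_intents(examples):
    """Intents of the positive context: attributes shared by each non-empty group of examples."""
    descriptions = list(examples.values())
    intents = set()
    for k in range(1, len(descriptions) + 1):
        for group in combinations(descriptions, k):
            intents.add(frozenset(set.intersection(*group)))
    return intents


def positive_hypotheses(pos, neg):
    """Positive intents that are not contained in the description of any negative example."""
    return {h for h in positive_intents(pos)
            if not any(h <= frozenset(d) for d in neg.values())}


def minimal(hypotheses):
    """Hypotheses with no proper sub-hypothesis (the most general ones)."""
    return {h for h in hypotheses if not any(o < h for o in hypotheses)}


hyps = positive_hypotheses(positive, negative)
print(sorted(sorted(h) for h in hyps))           # [['a', 'b', 'c'], ['b', 'c'], ['b', 'c', 'e']]
print(sorted(sorted(h) for h in minimal(hyps)))  # [['b', 'c']]
```

Intents such as {a, b} are discarded because the negative example h1 also has them; only descriptions that separate the positive examples from all negative ones survive.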

Both approaches share the aim of establishing an appropriate framework in which to specify and solve the concept learning problem. Such a framework can, and should, be complemented with two other types of studies: on the one hand, a study of the computational complexity of the problem; on the other hand, a study of how to decide the consistency of the learning processes designed on the constructed hypothesis spaces (a Statistical Learning issue). In the present case, the latter means studying the consistency of methods based on Empirical Risk Minimization (ERM) that use the new hypothesis classes.

Concerning the first issue, the complexity was characterized by Kuznetsov in [28], who presents the main complexity results in (FCA-based) Concept Learning. Counting the (minimal) hypotheses in FCA-based learning is proved to be #P-complete, and the decision problem itself (the existence of a positive hypothesis of bounded size) is proved to be NP-complete.

The second study would focus on the convergence of the learning procedures; for example, whether the empirical risk of an ERM method converges to the true risk as the sample size grows. In particular, we are concerned with the preservation of ERM consistency when the hypothesis class itself is enhanced (e.g., by providing more effective 3WD decisions or by refining classifiers). To better frame this problem, let us focus for a moment on such enrichment.

1.2 Enhancing the hypothesis class by means of 3WD

This paper concerns some of the foundational issues arising when reconsidering Machine Learning (ML) models under 3WD premises. Specifically, how the Learning Process is influenced by the transformation of \({\mathcal {F}}\), the class of functions on the data space U that the ML model can use to learn (the hypothesis class). To properly raise the problem, the elements involved in the issue are sketched here.

Let \(\overline{{\mathcal {F}}}^{3WD}\) be some extension of \({\mathcal {F}}\) obtained by a particular (previously designed) 3WD-based enhancing method. Such an extension will be called a 3WD closure. The selected 3WD closure should have some desirable features: for instance, it should be easy to implement (from \({\mathcal {F}}\)) and should (at least partially) solve the noncommitment problem. Replacing \({{\mathcal {F}}}\) by \({\overline{{\mathcal {F}}}^{3WD}}\) will have an impact on the Learning Process and, consequently, the decision-making procedure based on that process may change. This fact suggests addressing two issues.

The first one is foundational in nature, namely, what consequences the extension of \({\mathcal {F}}\) to \(\overline{{\mathcal {F}}}^{3WD}\) would have, that is, how such an extension would affect the performance of the model. To study this issue, we focus on the Vapnik-Chervonenkis (VC) dimension, denoted in this paper by \(\dim _{VC}(.)\), which is useful for studying both PAC learning and learning processes based on Empirical Risk Minimization (ERM).

The second concerns the usefulness of the first study, that is, how the theoretical framework developed in the first part of the paper applies to a particular ML model. We have selected as a case study (Fuzzy) Formal Concept Analysis ((F)FCA), considered as a Knowledge Discovery tool for concept mining. The application of (F)FCA to Concept Learning is an active research topic in both Cognitive Computing and Machine Learning [15, 30, 37, 39, 68]. Its soundness is based on its natural relationship with the traditional notion of concept. The classical view on concepts, the classical theory, holds that concepts possess a definitional structure; in other words, a concept can be defined by specifying (a set of) its properties. In fact, according to the International Standard ISO 704, a concept is a unit of thought constituted of two parts: its extent and its intent. That is, besides the definitional structure formed by its properties (the intent), a concept comprises the set of elements satisfying them (the extent). This definition matches the notion of formal concept in FCA.

In order to familiarize the reader with the basics of FCA, let us consider a simple but illustrative example using FFCA for Concept Learning.

Concept lattices associated with the formal contexts of Fig. 1. The left concept lattice corresponds to the formal context \({\mathbb {K}}_1\) with threshold 1

Example 1

Consider the dataset from Fig. 1 and its study through FFCA, using the fuzzy formal context \({\mathbb {K}}_1 = (G, M, I)\) grounded on the dataset, with attributes \(M=\{\)young, very young, tall, very tall, Female, Male\(\}\). The last two attributes are crisp, while the first four are fuzzy, with membership functions (resp.):

(see Fig. 1, right). In FFCA, it is usual to take a common threshold for the attributes to induce crisp concepts. For example, one can select 1 as threshold to obtain a (crisp) formal context. However, with a single common threshold, concepts may not be convenient for characterizing some sets. If different thresholds for the same attribute are selected, one can obtain the formal context \({\mathbb {K}}_2\) shown in Fig. 1 (bottom). The associated concept lattices are shown in Fig. 2.

Note that, in this case, any subset of \(P=\{Peter, John, Mary\}\) can be characterized in \({\mathbb {K}}_2\) by concepts with crisp attributes; i.e., for all \(A\subseteq P\) there exists a concept \(C=(X, Y)\) of \({\mathbb {K}}_2\) such that \(P\cap X = A\). That is (restricted to P itself):

- \(\{Peter,John, Mary\}\equiv \) all persons in P (universal set)
- \(\{Peter,John\}\equiv \) male persons
- \(\{Peter, Mary \}\equiv \) very young persons
- \(\{John,Mary\}\equiv \) tall persons
- \(\{John\}\equiv \) tall male persons
- \(\{Mary\}\equiv \) very tall female persons
- \(\{Peter \}\equiv \) very young male persons
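The characterization above can be checked mechanically. The following Python sketch uses a hypothetical helper, `missing_subsets`, that takes the traces \(P\cap X\) listed above and reports which subsets of P they do not produce. The seven listed traces cover every non-empty subset of P, so only the empty set, which the condition "for all \(A\subseteq P\)" also requires, has to come from a concept that is not listed.

```python
from itertools import combinations

P = frozenset({"Peter", "John", "Mary"})

# Traces P ∩ X listed in Example 1 (one per concept description).
listed_traces = [
    {"Peter", "John", "Mary"},
    {"Peter", "John"},
    {"Peter", "Mary"},
    {"John", "Mary"},
    {"John"},
    {"Mary"},
    {"Peter"},
]


def missing_subsets(base, traces):
    """Subsets of `base` that are not obtained as base ∩ X for any listed X."""
    found = {base & frozenset(t) for t in traces}
    return [frozenset(c)
            for k in range(len(base) + 1)
            for c in combinations(sorted(base), k)
            if frozenset(c) not in found]


print(missing_subsets(P, listed_traces))  # [frozenset()]
```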

The set P thus has the maximum size of a subset with maximum semantic differentiation (technically, it is a largest set shattered by the concepts of \({\mathbb {K}}_2\)). To obtain a similar differentiation for all the individuals, \(2^4\) concepts would be needed, which is not possible with \({\mathbb {K}}_2\). However, the number of fuzzy attributes can be increased by taking other modifications of the original attributes. A question that arises is whether the full object set could be shattered by enlarging the attribute set with new (crisp) modifiers of the fuzzy attributes. This issue becomes relevant when working with potentially infinite datasets and hypothesis classes.

As the example shows, the step from the dataset to FFCA can be considered natural, and refining the class of membership functions may be necessary for proper concept mining. It should be kept in mind that increasing the number of attributes amounts to increasing the dimensionality (the number of variables) of the dataset, which may bring more complexity. Therefore, new issues can arise (similar to the curse of dimensionality [7]). To address them, the VC dimension of a formal context \({\mathbb {K}}\) will be introduced in a natural way (Sect. 6): namely, as the VC dimension of the class formed by (the extents of) the concepts of \({\mathbb {K}}\).
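As a rough illustration of this definition in the crisp case, the sketch below enumerates the concept extents of a small, hypothetical formal context and computes the VC dimension of that class by brute force. It relies on the fact that every extent \(B'\) is an intersection of attribute extents (the empty intersection being G). The context and the function names are assumptions made for the example, and brute force is only viable for tiny contexts.

```python
from itertools import combinations

# Hypothetical crisp formal context (G, M, I).
G = {1, 2, 3, 4}
M = {"a", "b", "c", "d"}
I = {(1, "a"), (1, "b"), (2, "a"), (2, "c"), (3, "b"), (3, "c"), (4, "d")}


def concept_extents(G, M, I):
    """All extents B' (B ⊆ M): the attribute extents closed under intersection."""
    extents = {frozenset(G)}  # B = ∅ gives B' = G
    for m in sorted(M):
        m_extent = frozenset(g for g in G if (g, m) in I)
        extents |= {e & m_extent for e in extents}
    return extents


def vc_dimension(universe, sets):
    """Largest k such that some k-subset of `universe` is shattered by `sets`."""
    points = sorted(universe)
    d = 0
    for k in range(1, len(points) + 1):
        if any(len({frozenset(A) & s for s in sets}) == 2 ** k
               for A in combinations(points, k)):
            d = k
        else:
            break  # subsets of shattered sets are shattered, so no larger k works
    return d


extents = concept_extents(G, M, I)
print(len(extents), vc_dimension(G, extents))  # 9 3
```

Here {1, 2, 3} is shattered by the concept extents, whereas shattering all four objects would require 16 extents and the context only has 9.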

Generalizing the framework sketched in the previous example, we can see that any hypothesis class \({\mathcal {F}}\) on a data space G naturally induces a formal context

In general, \(\dim _{VC}({\mathcal {F}})<\infty \) does not imply that \(\dim _{VC}({\mathbb {K}}[{\mathcal {F}}])<\infty \) (although the converse is true). The aim in the case at hand is to extend the hypothesis class to \(\overline{{\mathcal {F}}}^{3WD}\) (built by some method) before constructing the formal context. Thus, the same issue arises: whether \(\dim _{VC}({\mathbb {K}}[\overline{{\mathcal {F}}}^{3WD}])<\infty \). Furthermore, if this holds, a new question arises: whether it is possible to restrict ourselves to finitely generated (f.g.) concepts, that is, concepts that can be characterized by a finite set of attributes. Requiring only f.g. concepts is a natural condition, since it facilitates user acceptance of the discovered concepts by presenting simpler characterizations (see e.g. [4]).

Summing up, Table 1 briefly describes both the hypothesis classes and the different versions of the VC dimension used throughout the paper, both in the abstract setting and in the case of FFCA. The first block contains the general notation; the term \(\overline{{\mathcal {F}}}^{3WD}\) denotes a generic 3WD closure obtained by some approach. The second block lists the different types of hypothesis classes considered in the paper: both those induced by the attributes of the formal context (the first two) and those built from subsets of the concept lattice of the formal context. The third block enumerates the four VC dimensions studied in the paper.

Several results related to the different VC dimensions are presented throughout the paper. Additionally, many examples show how extending the hypothesis class modifies the VC dimension, which in some examples even becomes infinite (hence losing ERM consistency). Among other results, it is verified that the VC and DVC dimensions agree for any hypothesis class (Prop. 3). Within FFCA, the DVC and SVC dimensions agree on formal contexts, even in some cases in which a finite hypothesis class is extended to an infinite one. Concerning the extension of the attribute set, it is shown that the extension obtained by the so-called contractions (roughly speaking, those obtained by varying the thresholds for decision-making) preserves the finiteness of the SVC dimension (Corollary 8). Moreover, the different dimensions are studied on finitely generated hypothesis classes, showing that the SVC dimension is preserved in interesting cases (Th. 2).

1.3 Structure of the paper

The paper aims to address the above issues from a 3WD-inspired theoretical framework. The next section recalls the main elements of the 3WD paradigm, Machine Learning, and (fuzzy) Formal Concept Analysis needed throughout the paper. Section 3 is devoted to framing learning within the 3WD trisecting-and-acting framework. In Sect. 4, the extension of the hypothesis class by means of some 3WD technique is formalized in functional terms. In Sect. 5, some variants of the VC dimension are introduced, which facilitate the analysis of the new ML models. The second part of the paper starts with Sect. 6, which instantiates the above ideas for (F)FCA, considered as a model for Concept Mining; the main results on the VC dimension are shown for this case. The analysis continues in Sect. 7, where the so-called DVC dimension for FFCA is studied, together with several variants related to finitely generated concepts. In Sect. 8 it is proven that a particular type of 3WD closure, focused on refining indecision regions for functions of the hypothesis class, preserves PAC learnability. The paper ends with some final considerations and future work (Sect. 9).

2 Background

The cardinality of the set A will be denoted by |A|, its power set by \({\mathcal P}(A)\), and its characteristic function by \(C_A\). Throughout the paper, U will denote a data space. The class of evaluations on U is defined as the function class

$$\begin{aligned}U^{[0,1]} := \{ f \ : \ f: U \rightarrow [0,1]\}\end{aligned}$$

Likewise, the class of binary functions \(U^{\{0,1\}}\) is defined analogously.

2.1 Learning and VC dimension

Any ML model considered here has an associated hypothesis class \({\mathcal {F}}\), in which the ML procedure searches for the solution to the learning problem of a set A (i.e., the decision problem of membership in A), under an unknown probability measure P(.).

There is a risk function \(Q:{\mathbb {R}}^2\times {\mathcal {F}} \rightarrow {\mathbb {R}}\) to estimate the discrepancy between the decision value, y, for the input x and the result given by the function chosen as solution, f(x). By default, \(Q(x,y,f)=|y-f(x)|\) is selected.

Definition 1

Under the above conditions, the (functional) risk of f is

When A is fixed, any reference to the set will be omitted in the notation, e.g. by writing Q(x, f) instead of \(Q(x,C_A(x),f)\).

The purpose of the ML-based method will be then to minimize the risk, by finding \(f_0\in {\mathcal {F}}\) such that

The learning process uses sample data, namely sets S of independent, identically distributed random observations. The solution proposed from S will also be a function of \({\mathcal {F}}\). Learning is driven by the goal of minimizing the empirical risk associated with the sample data.

Definition 2

The empirical risk of f for the finite sample S is defined as:

A process is said to be an Empirical Risk Minimizer (ERM) (relative to Q) if it uses the empirical risk as an estimate of the soundness of the solution, in the following sense: for a sample \(S_n\) of size n, the ERM-based process returns a function \(f_{n}\) that minimizes the empirical risk for \(S_n\).
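A minimal sketch of an ERM process, assuming the usual form of the empirical risk (the sample average of \(Q(x,y,f)=|y-f(x)|\)) and a hypothetical finite hypothesis class of threshold classifiers \(f_t(x)=1\) iff \(x\ge t\). The sample and the grid of thresholds are invented for the illustration.

```python
def empirical_risk(f, sample):
    """Average of Q(x, y, f) = |y - f(x)| over the labeled sample."""
    return sum(abs(y - f(x)) for x, y in sample) / len(sample)


def make_threshold(t):
    """Hypothetical hypothesis f_t(x) = 1 if x >= t, else 0."""
    return lambda x: 1 if x >= t else 0


def erm(hypotheses, sample):
    """Return the (t, f_t) pair with minimal empirical risk (the first one on ties)."""
    return min(hypotheses, key=lambda h: empirical_risk(h[1], sample))


# Sample S_n of (x, C_A(x)) pairs; the point (0.7, 0) plays the role of noise.
sample = [(0.1, 0), (0.2, 0), (0.3, 1), (0.4, 1), (0.6, 1), (0.9, 1), (0.7, 0)]
hypotheses = [(t, make_threshold(t)) for t in (0.0, 0.25, 0.5, 0.75, 1.0)]

t_n, f_n = erm(hypotheses, sample)
print(t_n, empirical_risk(f_n, sample))  # 0.25 0.14285714285714285
```

Because the class is finite, ERM reduces to an exhaustive search; for infinite classes the same principle applies, but the minimization must be carried out by other means.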

Definition 3

The ERM is consistent if the following two sequences converge in probability to the value sought:

2.2 Vapnik-Chervonenkis dimension

A key measure for studying the consistency of ERM is the so-called Vapnik-Chervonenkis dimension (VC dimension) [52] (see also [10, 51]). Under certain conditions, a hypothesis class with a finite VC dimension guarantees the consistency of the (ERM-based) learning process. The VC dimension is also useful for other Data Science challenges such as Differential Privacy ([72], p. 64).

Definition 4

Let \(A\subseteq U\) and let \({{\mathcal {B}}}\subseteq {\mathcal {P}} (U) \) be a set class.

- The trace of A in \({\mathcal {B}}\) is the class \(A \cap {{\mathcal {B}}} = \{A \cap B : B \in {{\mathcal {B}}}\}\).
- A set A is shattered by \({{\mathcal {B}}}\) if \( A \cap {{\mathcal {B}}} = {\mathcal {P}} (A)\).
- The Vapnik-Chervonenkis dimension (hereafter VC dimension) of \({\mathcal {B}}\), \(\dim _{VC}({{\mathcal {B}}})\), is the largest cardinality of a set shattered by \({\mathcal {B}}\) (it can be infinite).
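For finite set classes, Definition 4 can be checked directly. The following Python sketch (function names are illustrative) computes traces, tests shattering, and finds the VC dimension by brute force. As a hypothetical example it uses the discrete intervals of \(U=\{1,\dots ,5\}\), whose VC dimension is 2: no interval contains the two outer points of a three-point set without also containing the middle one.

```python
from itertools import combinations


def trace(A, B):
    """Trace of A in the set class B: {A ∩ S : S in B}."""
    A = frozenset(A)
    return {A & frozenset(S) for S in B}


def is_shattered(A, B):
    """A is shattered by B iff its trace (a subset of P(A)) has 2^|A| elements."""
    return len(trace(A, B)) == 2 ** len(frozenset(A))


def vc_dimension(U, B):
    """Largest cardinality of a subset of the finite set U shattered by B."""
    d = 0
    for k in range(1, len(U) + 1):
        if any(is_shattered(A, B) for A in combinations(sorted(U), k)):
            d = k
        else:
            break  # every subset of a shattered set is shattered
    return d


U = set(range(1, 6))
intervals = [set(range(i, j + 1)) for i in U for j in U if i <= j] + [set()]
print(vc_dimension(U, intervals))  # 2
```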

The notation \(\dim _{VC}(U,{\mathcal {B}})\) will be used when the aim is to make U explicit. The reader can find several illustrative examples of computing VC dimension in classical literature, as in [10, 35].

In this paper we work with pairs of the form \((U,{\mathcal {F}})\), where \({\mathcal {F}}\) is a hypothesis class on U. The VC dimension can also be defined for a real-valued hypothesis class \({\mathcal {F}}\). Given \(A\subseteq U\), A is said to be learned by means of \({\mathcal {F}}\) if there exist \(f\in {\mathcal {F}}\) and \(\beta \in \mathbb R\) such that

That is, one works with the set class

The VC dimension of \((U,{\mathcal {F}})\) is defined as \(\dim _{VC}(U,{\mathcal {F}}):=\dim _{VC}(U,{\mathcal {B}}_{{\mathcal {F}}})\). Without loss of generality, one can work only with set classes \({\mathcal {B}}_{{\mathcal {F}}}\) defined as \(\{pos(f) \ : \ f\in \mathcal F\}\), where \(pos(f)=\{ x \in U \ : \ f(x)>0\}\).

A key result in Statistical Learning states that, for any hypothesis class with finite VC dimension, a consistent learner induces a PAC learning algorithm, provided the training set is large enough [10, 31, 35] (see Thm. 1 below). In the case of convergence, the VC dimension is useful to bound the error of the ML-based algorithm [10, 51]. It is used to find a bound, independent of the underlying distribution P, on the sample size needed to select a hypothesis with arbitrarily small error and arbitrarily high probability, no matter which set we are trying to learn. We refer the reader to [9, 10] or [51] for technical details. Therefore, the VC dimension is a useful tool to analyze ERM-based ML models. To refer to this result throughout the paper, it is stated here in the following general terms:

Theorem 1

The following conditions are equivalent:

1. \({\mathcal {F}}\) is PAC-learnable.
2. \(\dim _{VC}({\mathcal {F}})<\infty \).
3. ERM is consistent.

The researcher can compare different ML models by their VC dimensions, taking into account that the larger the VC dimension, the larger the data sample required. Additionally, hypothesis classes with excessive VC dimension should be avoided, since they may overfit [51], that is, they may focus on irrelevant features of the input dataset. Therefore, the aim is to work with a hypothesis class of low VC dimension.

The following function is useful to estimate how the number of subsets learned by \({\mathcal {F}}\) grows with the size of the sample set.

Definition 5

Consider \((U,{\mathcal {F}})\), where \({\mathcal {F}}\) is a hypothesis class on U.

1. Let \(X\subseteq U\). Define \(|X|_{{\mathcal {F}}} := |\{ Y \subseteq X \ : \ Y \text{ is } \text{ learned } \text{ by } {\mathcal {F}}\}|\).
2. \(s_{(U,{\mathcal {F}})} (n) := \max \{ |X|_{{\mathcal {F}}} \ : \ X \subseteq U \text{ and } |X|=n \} \).

Therefore \(0\le |X|_{{\mathcal {F}}} \le 2^{|X|}\), the upper bound being reached when X is shattered. It is interesting to note that, although \(s_{(U,{\mathcal {F}})} (n) \le 2^n\), its growth is polynomially bounded when the VC dimension is finite.
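To illustrate Definition 5, the sketch below computes \(|X|_{{\mathcal {F}}}\) and \(s(n)\) by brute force for a hypothetical class of discrete intervals on \(U=\{1,\dots ,8\}\). It reads "Y is learned by \({\mathcal {F}}\)" on the sample X as "\(Y=X\cap B\) for some B in the induced set class", which is an assumption made for the example. For intervals, \(s(n)=n(n+1)/2+1\), which falls below \(2^n\) from n = 3 on.

```python
from itertools import combinations


def learned_count(X, B):
    """|X|_F: number of distinct subsets of X of the form X ∩ S, S in B."""
    X = frozenset(X)
    return len({X & frozenset(S) for S in B})


def growth(U, B, n):
    """s_(U,F)(n): maximum of |X|_F over all X ⊆ U with |X| = n."""
    return max(learned_count(X, B) for X in combinations(sorted(U), n))


U = set(range(1, 9))
intervals = [set(range(i, j + 1)) for i in U for j in U if i <= j] + [set()]
for n in range(1, 6):
    print(n, growth(U, intervals, n), 2 ** n)
# prints:
# 1 2 2
# 2 4 4
# 3 7 8
# 4 11 16
# 5 16 32
```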

Lemma 1

[46] [Sauer-Shelah-Perles] Suppose \(\dim _{VC}({{\mathcal {F}}})= d < \infty \). Then

$$\begin{aligned}s_{{\mathcal {F}}}(n) \le \sum _{i=0}^{d} \binom{n}{i}\end{aligned}$$

In particular, if \(n>d+1\) then \(s_{{\mathcal {F}}}(n) \le (e\cdot n/d)^d\).
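The bound of Lemma 1 is easy to evaluate numerically. The sketch below assumes the standard form of the Sauer-Shelah-Perles bound, \(\sum _{i=0}^{d}\binom{n}{i}\), and compares it with \((e\cdot n/d)^d\) and with \(2^n\). For d = 2 the bound equals \(n(n+1)/2+1\), i.e., it is attained by discrete intervals on a line.

```python
from math import comb, e


def sauer_bound(n, d):
    """Sauer-Shelah-Perles bound on s_F(n) when dim_VC(F) = d."""
    return sum(comb(n, i) for i in range(d + 1))


def exp_bound(n, d):
    """The simpler polynomial bound (e * n / d) ** d."""
    return (e * n / d) ** d


for n in (5, 10, 20):
    # Polynomial growth of both bounds versus the exponential 2^n.
    print(n, sauer_bound(n, 2), round(exp_bound(n, 2), 1), 2 ** n)
```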

The question arising here is how the VC dimension changes when some processing is applied, either (1) to the data before applying the ML process, or (2) to the values returned by the classifier function. The following result concerns the first case (roughly speaking, data pre-processing).

Lemma 2

[6] Let \(f: U' \rightarrow U\) and let \({\mathcal {F}}\) be a hypothesis class on U. Consider \((U', {\mathcal {F}} \circ f)\), where \({\mathcal {F}}\circ f := \{ g\circ f \ : \ g\in {\mathcal {F}}\}\). Then \(\displaystyle s_{(U', \mathcal F\circ f)}(n) \le s_{(U,{\mathcal {F}})}(n)\), and equality holds if f is surjective. In particular,

$$\begin{aligned}\dim _{VC}(U', {\mathcal {F}}\circ f) \le \dim _{VC}(U,{\mathcal {F}})\end{aligned}$$

and equality holds if f is surjective.
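The following Python sketch illustrates Lemma 2 on a hypothetical example. The labelings that \({\mathcal {F}}\circ f\) produces on points of \(U'\) are exactly those that \({\mathcal {F}}\) produces on their images, so a map f that merges points can only reduce the number of patterns. Here \({\mathcal {F}}\) is the class of indicator functions of discrete intervals on \(U=\{1,\dots ,5\}\), and f is an invented map that is neither injective nor surjective.

```python
def labelings(points, hypotheses):
    """Distinct label vectors produced on `points` by a class of binary functions."""
    return {tuple(h(x) for x in points) for h in hypotheses}


def compose(F, f):
    """The class F ∘ f = {g ∘ f : g in F}."""
    return [lambda x, g=g: g(f(x)) for g in F]


U = range(1, 6)
# Indicators of the discrete intervals [i, j] of U (default arguments freeze i and j).
F = [lambda u, i=i, j=j: int(i <= u <= j) for i in U for j in U if i <= j]

# Hypothetical pre-processing f: U' = {"a", "b", "c"} -> U that merges "a" and "b".
f = {"a": 2, "b": 2, "c": 4}.get

patterns = labelings(["a", "b", "c"], compose(F, f))
print(len(patterns), len(labelings([1, 3, 5], F)))  # 4 7
```

The three points of \(U'\) receive only 4 patterns, below \(s_{(U,{\mathcal {F}})}(3)=7\), as the lemma predicts.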

2.3 Three-way decision modeling through evaluation

Among the various 3WD frameworks put forward by the research community [63], only those based on evaluations are considered here. Given an evaluation \(f \in U^{[0,1]}\), the elements of U are classified as accepted, rejected, or unknown (identifying 0 as false and 1 as true) by means of the decision regions associated with f:

$$\begin{aligned}POS_{f} = f^{-1}(1), \qquad NEG_{f} = f^{-1}(0), \qquad BND_{f} = f^{-1}((0,1))\end{aligned}$$

respectively (see [63] for more details). Since the three sets form a partition of U, the 3WD decision can be specified by the pair \((X_f,Y_f):=(POS_{f},NEG_{f})\).

The class of all 3WD decisions on U is partially ordered as follows. \((X_1,Y_1)\) is said to be more effective than \((X_2,Y_2)\) [29], written \((X_2,Y_2)\preceq (X_1,Y_1)\) (or \((X_2,Y_2)\) is decision consistent with \((X_1,Y_1)\)), if \(X_2\subseteq X_1\) and \(Y_2\subseteq Y_1\).

Trisecting-and-acting model (extracted from [64])

The general 3WD framework considers two main tasks (see Fig. 3): trisecting and acting [64]. The first splits the data space into the three regions, whilst the second applies specific strategies to each region. In the context of the Learning Problem, the second task has to resolve the decision on the uncertainty region.

Other decision regions can be obtained by taking thresholds on the evaluations. The idea is to consider as decided those data whose uncertain value is close to a decision value. There are several ways to formalize this idea (including the use of fuzzy logic). For the examples in this paper, the following regions will be considered, given \( \varepsilon \) and \(\delta \) with \(0\le \delta + \varepsilon \le 1\):

- \(POS^{ \varepsilon ,\delta }_{f} = \{ u \in U \ : \ 1- \varepsilon \le f(u)\le 1\} = f^{-1}([1-\varepsilon ,1])\)
- \(NEG^{ \varepsilon ,\delta }_{f} = \{ u \in U \ : \ 0 \le f(u) \le \delta \} = f^{-1} ([0,\delta ])\)
- \(BND^{ \varepsilon ,\delta }_{f} = \{ u \in U \ : \ \delta< f(u)<1- \varepsilon \} = f^{-1}((\delta ,1-\varepsilon ))\)

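Note that this notation extends the previous one (which corresponds to \( \varepsilon =\delta =0\)) and produces more effective 3WD decisions, that is,

$$\begin{aligned}POS_{f}\subseteq POS^{ \varepsilon ,\delta }_{f} \quad \text{ and } \quad NEG_{f}\subseteq NEG^{ \varepsilon ,\delta }_{f}\end{aligned}$$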
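As an illustration, the Python sketch below computes the regions \(POS^{\varepsilon ,\delta }_f\), \(NEG^{\varepsilon ,\delta }_f\), and \(BND^{\varepsilon ,\delta }_f\) of a hypothetical evaluation on a five-element data space. It also checks that taking \(\varepsilon ,\delta >0\) only enlarges the positive and negative regions obtained for \(\varepsilon =\delta =0\). The evaluation values are invented for the example.

```python
def regions(f, U, eps=0.0, delta=0.0):
    """(POS, NEG, BND) of the evaluation f on U for thresholds eps and delta."""
    if not 0 <= delta + eps <= 1:
        raise ValueError("thresholds must satisfy 0 <= delta + eps <= 1")
    pos = {u for u in U if 1 - eps <= f(u) <= 1}
    neg = {u for u in U if 0 <= f(u) <= delta}
    bnd = {u for u in U if delta < f(u) < 1 - eps}
    return pos, neg, bnd


# Hypothetical evaluation f: U -> [0, 1].
values = {"u1": 1.0, "u2": 0.95, "u3": 0.5, "u4": 0.08, "u5": 0.0}
U, f = list(values), values.get

pos0, neg0, bnd0 = regions(f, U)            # eps = delta = 0
pos1, neg1, bnd1 = regions(f, U, 0.1, 0.1)  # eps = delta = 0.1
print(sorted(pos0), sorted(neg0), sorted(bnd0))  # ['u1'] ['u5'] ['u2', 'u3', 'u4']
print(sorted(pos1), sorted(neg1), sorted(bnd1))  # ['u1', 'u2'] ['u4', 'u5'] ['u3']
```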

2.4 (Fuzzy) formal concept analysis

The information used in Formal Concept Analysis (FCA) is organized in the so-called formal context, a triple \({\mathbb {K}} = (G,M,I)\), where G is a set of objects, M is a non-empty set of attributes, and \(I \subseteq G \times M\). For example, Fig. 4 (left) shows a formal context describing fish (objects) living in different aquatic ecosystems (attributes). Attributes can be considered as Boolean functions \(m:G \rightarrow \{ 0,1\}\), defined by \(m(g)=1\) iff \((g,m)\in I\).

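A formal context induces a pair of operators, called derivation operators. Given \(A \subseteq G\) and \(B \subseteq M\), they are defined by

$$\begin{aligned}A' = \{ m \in M \ : \ (g,m)\in I \text{ for all } g \in A\}, \qquad B' = \{ g \in G \ : \ (g,m)\in I \text{ for all } m \in B\}\end{aligned}$$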

FCA [20] mathematizes the philosophical understanding of a concept as a unit of thought, comprising its extent and its intent. The extent covers all objects belonging to the concept, and the intent comprises all common attributes valid for all objects under consideration:

Definition 6

A formal concept is a pair \(C=(A,B)\) of object and attribute sets (called extent, \(ext(C)=A\), and intent, \(int(C)=B\), of C) verifying that \(A'=B\) and \(B'=A\).
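The derivation operators and Definition 6 translate directly into code. The following Python sketch uses a small, hypothetical fish context (it is not the context of Fig. 4) and enumerates all formal concepts as the pairs \((A'', A')\) for \(A\subseteq G\). In this toy context, the bottom concept groups the only object having all three attributes.

```python
from itertools import combinations

# Hypothetical crisp context: objects G, attributes M, incidence I ⊆ G × M.
G = {"salmon", "trout", "cod"}
M = {"freshwater", "saltwater", "migratory"}
I = {("salmon", "freshwater"), ("salmon", "saltwater"), ("salmon", "migratory"),
     ("trout", "freshwater"), ("cod", "saltwater")}


def up(A):
    """A': attributes shared by all objects in A."""
    return frozenset(m for m in M if all((g, m) in I for g in A))


def down(B):
    """B': objects having all attributes in B."""
    return frozenset(g for g in G if all((g, m) in I for m in B))


def is_concept(A, B):
    """(A, B) is a formal concept iff A' = B and B' = A."""
    return up(A) == frozenset(B) and down(B) == frozenset(A)


def concepts():
    """All concepts, generated as (A'', A') for every subset A of G."""
    found = set()
    for k in range(len(G) + 1):
        for A in combinations(sorted(G), k):
            B = up(A)
            found.add((down(B), B))
    return found


for extent, intent in sorted(concepts(), key=lambda c: (len(c[0]), sorted(c[0]))):
    print(sorted(extent), sorted(intent))
# ['salmon'] ['freshwater', 'migratory', 'saltwater']
# ['cod', 'salmon'] ['saltwater']
# ['salmon', 'trout'] ['freshwater']
# ['cod', 'salmon', 'trout'] []
```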

The set of concepts of \({\mathbb {K}}\) is endowed with a lattice structure by means of the subconcept relationship \(\le \). The lattice, denoted by \({\mathfrak {B}} ({\mathbb {K}})\), is complete [20]. The Hasse diagram of the concept lattice associated with the formal context of Fig. 4 (left) is shown in Fig. 4 (right). In this representation, each node is a concept, and its intent (extent, resp.) is formed by the attributes (objects, resp.) labeling the nodes along the paths to the top (bottom, resp.) concept. For example, the bottom concept

is a concept that could be interpreted as euryhaline fish. Note that there is no term within the attribute set that denotes this concept, so it is something new. This is an example of how FCA can be used as a concept mining tool.

2.4.1 Fuzzy formal concept analysis

There is an extensive bibliography on extending FCA to deal with vagueness by means of Fuzzy Logics [38, 48], which has become a subfield of its own, the so-called Fuzzy FCA (FFCA). There are general proposals of what a fuzzy formal context/concept should be [11, 34], as well as others for specific applications (e.g., [41, 50, 57, 67]). Although more general approaches exist, the one selected here relies on inducing crisp sets by selecting thresholds for fuzzy relations; more specifically, for the attributes, considered as fuzzy predicates.

Definition 7

A fuzzy formal context (f.f.c.) is a triple \(K=(G, M,I)\), where I is a fuzzy relation on \(G \times M\) (with membership function \(\mu _I\)).

Example 1 already shows an f.f.c. The fuzzy relation I induces a fuzzy membership function for each attribute \(m\in M\), defined by

$$\begin{aligned}\mu _m(g):=\mu _I(g,m)\end{aligned}$$

which turns the attribute into a fuzzy predicate.

There exist several ways of defining concepts in FFCA [50, 67]. The formalization selected here is similar to, for example, that of [8], but makes the attributes fuzzy instead of the object sets.

Definition 8

Let \({\mathbb {K}}=(G, M, I)\) be a fuzzy formal context and \(t\in [0,1]\) a threshold.

- The fuzzy derivation operator \('\) on objects is

$$\begin{aligned}A'=\left\{ m \in M \ : \ \forall g \in A \ \mu _{I}(g, m) \ge t\right\} \end{aligned}$$

and, on attributes, it is \(B'=\left\{ g \in G \mid \forall m \in B: \mu _{I}(g, m) \ge t\right\} \).
- A fuzzy formal concept C is a pair \((A, \varphi (B))\) such that \(A'=B\) and \(B'=A\), where \(\varphi (B)\) is the fuzzy predicate with membership function

$$\begin{aligned}\mu _{\varphi (B)}(g):=\min _{m \in B} \mu _m(g)\end{aligned}$$

We simply write \(\mu _B\) instead of \(\mu _{\varphi (B)}\). In this way, a structure analogous to the concept lattice of classical formal contexts is obtained.
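The threshold-based operators of Definition 8 can be sketched as follows. This is a minimal illustration on a hypothetical three-object context (the degrees in `MU` are invented, not those of Example 1, and the helper names are ours). With a fixed threshold t, the two operators are just the crisp derivation operators of the thresholded relation \(\mu _I(g,m)\ge t\), so every pair \((B', B'')\) is a concept; `mu_phi` evaluates the fuzzy predicate \(\varphi (B)\) as a minimum.

```python
from itertools import combinations

# Hypothetical fuzzy formal context: MU[g][m] is the membership degree mu_I(g, m).
MU = {
    "g1": {"m1": 1.0, "m2": 0.3, "m3": 0.8},
    "g2": {"m1": 0.6, "m2": 0.9, "m3": 0.7},
    "g3": {"m1": 0.2, "m2": 1.0, "m3": 0.5},
}
OBJECTS = sorted(MU)
ATTRIBUTES = sorted({m for row in MU.values() for m in row})


def derive_objects(A, t):
    """A': attributes that every object of A has with degree >= t."""
    return frozenset(m for m in ATTRIBUTES if all(MU[g][m] >= t for g in A))


def derive_attributes(B, t):
    """B': objects that have every attribute of B with degree >= t."""
    return frozenset(g for g in OBJECTS if all(MU[g][m] >= t for m in B))


def fuzzy_concepts(t):
    """All pairs (A, B) with A' = B and B' = A, obtained by closing attribute subsets."""
    concepts = set()
    for k in range(len(ATTRIBUTES) + 1):
        for B in combinations(ATTRIBUTES, k):
            A = derive_attributes(B, t)
            concepts.add((A, derive_objects(A, t)))
    return concepts


def mu_phi(B, g):
    """Membership of g in the fuzzy predicate phi(B): the minimum over m in B.
    For an empty B we return 1.0, the usual convention for an empty minimum."""
    return min((MU[g][m] for m in B), default=1.0)
```

For instance, with t = 0.5 the pair ({g2, g3}, {m2, m3}) is a concept, and g3 belongs to \(\varphi (\{m_2,m_3\})\) with degree 0.5.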

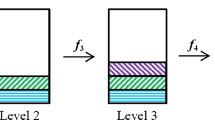

3 On 3WD and learning

As discussed in the introduction, we consider extensions of \({\mathcal {F}}\) obtained by some 3WD method, so that \(\overline{{\mathcal {F}}}^{3WD}\subseteq U^{[0,1]}\). The trisecting-and-acting model can thus be reformulated as follows (Fig. 5): the new class \(\overline{{\mathcal {F}}}^{3WD}\) refines the boundary region in the trisecting task, and the decision-making task has to be refined accordingly.

In functional terms, the overall result of refining the Trisecting-and-Acting model sketched in Fig. 3 should be an answer function, that is, a decision function (with values in \(\{0,1\}\)) that extends the decision regions of the initial function.

Definition 9

-

The class of answer functions, \(\mathcal {ANS}\), is the class of functions \(U^{\{0,1\}}\).

-

Given \(f: U\rightarrow [0,1]\), an answer for f is a function \(g\in \mathcal {ANS}\) such that \(POS_{f}\subseteq POS_g\) and \(NEG_{f}\subseteq NEG_g\).

In general, the step from \({\mathcal {F}}\) to \(\overline{{\mathcal {F}}}^{3WD}\) may not preserve decision regions.

Definition 10

Let \({\mathcal {F}}\) be a hypothesis class.

-

An operator \(\varTheta : {\mathcal {F}} \rightarrow \overline{{\mathcal {F}}}^{3WD}\) is answer preserving if

$$\begin{aligned}POS_f \subseteq POS_{\varTheta (f)} \text{ and } NEG_f \subseteq NEG_{\varTheta (f)} \text{ for } \text{ any } f \in {\mathcal {F}}\end{aligned}$$

-

Let \({\mathcal {G}}\) be a class of functions \([0,1]\rightarrow [0,1]\). We say that \({\mathcal {G}}\) uniformly preserves the decisions of \({\mathcal {F}}\) if \(POS_{f}\subseteq POS_{g\circ f} \ \text{ and } \ NEG_{f}\subseteq NEG_{g\circ f}\) for any \(g\in {\mathcal {G}}\) and \(f\in {\mathcal {F}}\).

Note that 3WD-based decision techniques produce an answer function from f, which can be regarded as a post-processing of the output of f. An example of an answer arising from a 3WD technique is the functional version of the well-known Closed World Assumption (CWA) from Nonmonotonic Reasoning in AI (cf. [21]).

Definition 11

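Let \(f:U\rightarrow [0,1]\). The closed answer induced by f is the function

$$\begin{aligned}f_{cwa}:=cwa\circ f\end{aligned}$$

where cwa is defined by

$$\begin{aligned}cwa(x):={\left\{ \begin{array}{ll} 1 & \text{ if } x=1\\ 0 & \text{ otherwise } \end{array}\right. }\end{aligned}$$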

Thus, \((X_f,Y_f)\preceq (X_{f_{cwa}},Y_{f_{cwa}})\).
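A minimal sketch of the closed answer on a finite universe follows. It assumes, consistently with the later use of \(I_{cwa}\) in Sect. 6, that \(POS_f=f^{-1}(1)\), \(NEG_f=f^{-1}(0)\), \(BND_f=f^{-1}((0,1))\), and that cwa sends 1 to 1 and every other degree to 0. The helper names and the evaluation function are hypothetical; `is_answer_for` checks the condition of Definition 9.

```python
def regions(f, universe):
    """Return (POS_f, NEG_f, BND_f), assuming POS = f^-1(1) and NEG = f^-1(0)."""
    pos = {x for x in universe if f(x) == 1.0}
    neg = {x for x in universe if f(x) == 0.0}
    return pos, neg, set(universe) - pos - neg


def cwa(value):
    """Closed World Assumption on degrees: only full membership counts as true."""
    return 1.0 if value == 1.0 else 0.0


def closed_answer(f):
    """f_cwa = cwa o f, a {0, 1}-valued answer function."""
    return lambda x: cwa(f(x))


def is_answer_for(g, f, universe):
    """Definition 9: g is {0,1}-valued and extends both decision regions of f."""
    pos_f, neg_f, _ = regions(f, universe)
    pos_g, neg_g, bnd_g = regions(g, universe)
    return not bnd_g and pos_f <= pos_g and neg_f <= neg_g


# Hypothetical evaluation function on U = {0, ..., 4}.
U = range(5)
degrees = {0: 0.0, 1: 0.3, 2: 0.7, 3: 1.0, 4: 1.0}
f = degrees.get
```

Here the boundary region {1, 2} of f is absorbed into the negative region of \(f_{cwa}\), so \(f_{cwa}\) is an answer for f, while f itself is not (it still has undecided points).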

Since any \(f\in {\mathcal {F}}\) induces a default answer \(f_{cwa}\), a VC dimension can be assigned by default to any evaluation class.

Definition 12

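Let \({\mathcal {F}}\subseteq U^{[0,1]}\). The VC dimension of \(\mathcal F\) is defined as

$$\begin{aligned}\dim _{VC}({\mathcal {F}}):=\dim _{VC}(\{ f_{cwa} \ : \ f\in {\mathcal {F}}\})\end{aligned}$$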

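In functional terms, the description of a 3WD-based improvement of the decision-making process (sketched in Fig. 5) consists of the design of two operators,

$$\begin{aligned}{\mathcal {F}}\xrightarrow {\ \varTheta _1\ }\overline{{\mathcal {F}}}^{3WD}\xrightarrow {\ \varTheta _2\ }\mathcal {ANS}\end{aligned}$$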

The first operator embeds \({\mathcal {F}}\) into \(\overline{\mathcal F}^{3WD}\) in order to provide more learning power (possibly it is just the inclusion). The second one is an operator on \(\overline{{\mathcal {F}}}^{3WD}\) that produces answers: \(\varTheta _2\) aims to solve the indecision problem for any \(f\in \overline{{\mathcal {F}}}^{3WD}\) and reflects the modification of strategy II (shown in Fig. 5). The composition \(\varTheta _2\circ \varTheta _1\) may be answer preserving.

4 Extending the hypothesis class

At this point, we must consider how extending the hypothesis class affects learning consistency. By Thm. 1, it suffices to study how the VC dimension changes. In particular, we are interested in the following question, expressed using the following notation:

(equivalently, whether the 3WD technique preserves PAC learnability). Of course, the answer depends on the particular choice of 3WD closure.

Example 2

(taken from [55]) Suppose \({\mathcal {F}}\) is a finite set of linearly independent real-valued functions on U, and let \(\overline{{\mathcal {F}}}^{3WD}\) be the \(\mathbb R\)-vector space generated by \({\mathcal {F}}\). Then

Thus, in this case, \(\overline{{\mathcal {F}}}^{3WD}\) preserves PAC learnability.

To illustrate the results, throughout the paper we use a particular 3WD closure, which we call contraction. Nevertheless, the study can be carried out for any \(\overline{{\mathcal {F}}}^{3WD}\).

4.1 Extending the hypothesis class by contraction

The 3WD closure introduced in this section focuses on refining \(BND_f\).

Definition 13

Given \(0 \le \varepsilon +\delta < 1\), the \(\varepsilon \)-\(\delta \) contraction function is

The class of contraction functions is denoted by \(\mathcal {CON}\). This class is suitable for modifying the trisecting task.

Proposition 1

\(\mathcal {CON}\) uniformly preserves the decisions for any hypothesis class.

A particular case of \(\overline{{\mathcal {F}}}^{3WD}\) using \(\mathcal {CON}\) is defined as follows:

Definition 14

Let \(0 \le \varepsilon +\delta < 1\).

-

The \(\varepsilon \)-\(\delta \) contraction of f is

$$\begin{aligned}f^{\varepsilon ,\delta } := c_{\varepsilon ,\delta }\circ f\end{aligned}$$ (if \(\delta =0\), we write \(f^{\varepsilon } \)).

-

The 3WD closure by contraction of \({\mathcal {F}}\) is

$$\begin{aligned}{\mathcal {F}}^c := \mathcal {CON} \circ {\mathcal {F}} =\{ f^{ \varepsilon ,\delta } \ : f \in {\mathcal {F}} \text{ and } 0\le \varepsilon +\delta <1 \}\end{aligned}$$

Transforming a function \(f \in {\mathcal {F}}\) by contraction is a relatively simple (computable) operation that extends the decision regions (see Fig. 6). In fuzzy logic terms, the definition of the contraction, \(f^{\varepsilon ,\delta }=c_{\varepsilon ,\delta }\circ f\), shows that contraction functions can be interpreted as (restrictive) external modifiers. Implementing them within an ML model is expected to be feasible. Moreover, the empirical risk of \(f^{\varepsilon ,\delta }\) can easily be re-estimated.
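The formula of \(c_{\varepsilon ,\delta }\) is not reproduced in this text, so the following sketch fixes one concrete form as an assumption: \(c_{\varepsilon ,\delta }(x)=1\) for \(x\ge 1-\varepsilon \) (matching the identity \(f^{-1}([1-\varepsilon ,1])=(f^{\varepsilon })^{-1}(\{1\})\) of Definition 16), \(c_{\varepsilon ,\delta }(x)=0\) for \(x\le \delta \) (by symmetry), and a linear rescaling in between. Only the first two branches affect the decision regions. On a grid of degrees, the code checks the decision preservation of Proposition 1 and the shrinking of the boundary region; all names are hypothetical.

```python
def contraction(eps, delta):
    """Hypothetical epsilon-delta contraction c_{eps,delta}: [0, 1] -> [0, 1]."""
    if not (0 <= eps and 0 <= delta and eps + delta < 1):
        raise ValueError("need 0 <= eps, 0 <= delta and eps + delta < 1")

    def c(x):
        if x >= 1 - eps:
            return 1.0  # pushed into the positive region
        if x <= delta:
            return 0.0  # pushed into the negative region
        return (x - delta) / (1 - eps - delta)  # assumed linear rescaling in between

    return c


def regions(values):
    """Split a list of degrees into (POS, NEG, BND) index sets."""
    pos = {i for i, v in enumerate(values) if v == 1.0}
    neg = {i for i, v in enumerate(values) if v == 0.0}
    return pos, neg, set(range(len(values))) - pos - neg


degrees = [i / 20 for i in range(21)]  # 0.0, 0.05, ..., 1.0
c = contraction(0.25, 0.125)
pos, neg, bnd = regions(degrees)
pos_c, neg_c, bnd_c = regions([c(v) for v in degrees])
```

With \(\varepsilon =0.25\) and \(\delta =0.125\), the degrees \(\ge 0.75\) become positive and those \(\le 0.125\) negative, while \(c_{0,0}\) is the identity, in line with \({\mathcal {F}}^c_0={\mathcal {F}}\).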

By using 3WD contractions, the acceptance and rejection regions are expanded while the boundary region shrinks. This produces a more effective 3WD decision,

Through 3WD, the learning problem is transformed into the problem of finding \(f,\varepsilon _0, \delta _0\) such that:

where R(A, f) is the risk associated with the function f. This is equivalent to addressing the classical problem of learning under ERM using functions from \({\mathcal {F}}^c\) instead of \({\mathcal {F}}\), with the possibility of obtaining a lower risk.
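To make the reformulated ERM problem concrete, here is a toy sketch with \(\delta =0\). It assumes that the risk of a fuzzy hypothesis is the empirical 0-1 loss of its closed answer \((f^{\varepsilon })_{cwa}\), and it searches a finite grid of \(\varepsilon \) values; the sample, the hypothesis `f` and the grid are all hypothetical. Because \(\varepsilon =0\) belongs to the grid, the best risk over the contractions can never exceed the risk of f itself.

```python
# Hypothetical labelled sample (x, y) with y in {0, 1}.
SAMPLE = [(0.1, 0), (0.3, 0), (0.45, 1), (0.6, 1), (0.8, 1), (0.95, 1)]


def f(x):
    """Hypothetical fuzzy hypothesis: a clipped linear ramp on [0.2, 0.8]."""
    return min(1.0, max(0.0, (x - 0.2) / 0.6))


def closed_contraction(f, eps):
    """(f^eps)_cwa with delta = 0: answer 1 exactly when f(x) >= 1 - eps."""
    return lambda x: 1 if f(x) >= 1 - eps else 0


def empirical_risk(h, sample):
    """Average 0-1 loss |y - h(x)| over the sample."""
    return sum(abs(y - h(x)) for x, y in sample) / len(sample)


grid = [k / 20 for k in range(20)]  # eps in {0, 0.05, ..., 0.95}
risks = [(empirical_risk(closed_contraction(f, e), SAMPLE), e) for e in grid]
best_risk, best_eps = min(risks)
```

On this sample, f itself misclassifies the two points with intermediate degrees (risk 2/6), whereas a contraction with \(\varepsilon \) around 0.6 classifies the whole sample correctly.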

In some cases, the learning problems for the two classes, \({\mathcal {F}}\) and \( \overline{{\mathcal {F}}}^{3WD}\), are equivalent, since both classes can learn the same sets. However, this need not hold in general (as will be shown when analyzing the case of FCA).

The key issue when extending \({\mathcal {F}}\) is whether the consistency of the ERM process is preserved, or, equivalently, whether \(\dim _{VC} (\varTheta _2[{\mathcal {F}}^c])<\infty \) for some \(\varTheta _2\).

5 Introducing 3WD VC dimension

To address the problem of preserving PAC learnability, this section introduces a reformulation of the VC dimension. Recall that, although the study is carried out for \({\mathcal {F}}^c\), any 3WD closure \(\overline{{\mathcal {F}}}^{3WD}\) would support a similar one. To simplify the notation, we take \(\delta =0\) (definitions and results are analogous in the general case). Thus, we work with the extension of the hypothesis class \({\mathcal {F}}\) to

$$\begin{aligned}{\mathcal {F}}^c_{\gamma } := \{ f^{\varepsilon } \ : \ f \in {\mathcal {F}} \text{ and } 0\le \varepsilon \le \gamma \}\end{aligned}$$

(Note that \({\mathcal {F}}^c_0={\mathcal {F}}\).)

Definition 15

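The 3WD VC dimension of level \(\gamma \) is

$$\begin{aligned}\dim _{3VC}({\mathcal {F}},\gamma ):=\dim _{VC}({\mathcal {F}}^c_{\gamma })\end{aligned}$$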

Recall that, by the default assignment of Definition 12, \(\dim _{VC}({\mathcal {F}}^c_{\gamma })= \dim _{VC}(\{ f_{cwa} \ : \ f \in {\mathcal {F}}^c_{\gamma }\})\). In terms of the classes of sets associated with the functions, the definition reads as follows.

Definition 16

Let \({\mathcal {F}}\) be a hypothesis class on U, \(A\subseteq U\) and \(\varepsilon \in [0,1]\).

-

1.

The \(\varepsilon \)-cut of A by \(f\in {\mathcal {F}}\) is \(A\cap f^{-1}([1-\varepsilon ,1])= A\cap (f^{\varepsilon })^{-1}(\{1\})\).

-

2.

A is 3WD-shattered by \({\mathcal {F}}^c_{\gamma }\) if \(A \cap \{ f^{-1}(\{1\}) : \ f\in {\mathcal {F}}^c_{\gamma }\}={\mathcal {P}} (A)\).

Proposition 2

The 3WD Vapnik-Chervonenkis dimension, \(\dim _{3VC}({\mathcal {F}}, \gamma )\), is the maximum size of a set 3WD-shattered by \({\mathcal {F}}^c_{\gamma }\) (it may be infinite).
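For a finite hypothesis class on a finite universe, \(\dim _{3VC}({\mathcal {F}},\gamma )\) can be computed by brute force from the \(\varepsilon \)-cuts of Definition 16. The sketch below relies on one observation: the cut of A by f only changes when \(\varepsilon \) crosses a value \(1-f(x)\) with \(x\in A\), so it suffices to test \(\varepsilon =0\) and those breakpoints lying in \([0,\gamma ]\). The class, universe and degrees in the check are hypothetical, and the helper names are ours.

```python
from itertools import combinations


def cut(f, A, eps):
    """epsilon-cut of A by f: the x in A with f(x) >= 1 - eps (tested as 1 - f(x) <= eps)."""
    return frozenset(x for x in A if 1 - f(x) <= eps)


def levels(F, A, gamma):
    """eps = 0 plus every breakpoint 1 - f(x), x in A, lying in [0, gamma]."""
    return {0.0} | {1 - f(x) for f in F for x in A if 0 <= 1 - f(x) <= gamma}


def is_3wd_shattered(F, A, gamma):
    """A is 3WD-shattered if the cuts f^eps, eps in [0, gamma], realise every subset of A."""
    A = frozenset(A)
    traces = {cut(f, A, e) for f in F for e in levels(F, A, gamma)}
    return len(traces) == 2 ** len(A)


def dim_3vc(F, U, gamma):
    """Largest k such that some k-subset of U is 3WD-shattered by F^c_gamma."""
    d = 0
    for k in range(1, len(U) + 1):
        if not any(is_3wd_shattered(F, A, gamma) for A in combinations(U, k)):
            break  # if no k-set is shattered, no larger set can be
        d = k
    return d


# Hypothetical one-function class on U = {a, b}.
U = ["a", "b"]
F = [{"a": 1.0, "b": 0.5}.get]
```

Since \({\mathcal {F}}^c_0={\mathcal {F}}\), `dim_3vc(F, U, 0.0)` is the default VC dimension of \({\mathcal {F}}\) (here 0), while level \(\gamma =0.6\) already shatters {b}, illustrating \(\dim _{3VC}({\mathcal {F}},\gamma )\ge \dim _{VC}({\mathcal {F}})\).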

As already mentioned, for some 3WD closures it may happen that \(\dim _{3VC}({\mathcal {F}}, \gamma )=\dim _{VC}({\mathcal {F}})\), or even that \({{\mathcal {B}}}_{{\mathcal {F}}^c_{\gamma }}={{\mathcal {B}}}_{{\mathcal {F}}}\). The following example illustrates this.

Example 3

(extension by contraction preserving the VC dimension). Consider the class FuzzyCirc of membership functions associated with circles in the plane centered at (0, 0).

where

It is not difficult to check that the VC dimension of this class is 1. Given \(0\le \varepsilon \le \gamma \), the contraction \(f^{\varepsilon }_{\lambda }\) is:

Thus

for \(\lambda '= \sqrt{(2\lambda )^2\cdot \varepsilon + \lambda ^2\cdot (1-\varepsilon )}\). Therefore \(\mathcal B_{FuzzyCirc} ={\mathcal {B}}_{FuzzyCirc^c_{\gamma }}\), hence

Bearing in mind that \(\dim _{3VC}({\mathcal {F}},\gamma ) \ge \dim _{VC}({\mathcal {F}})\), and that both take values in \({\mathbb {N}}\cup \{\infty \}\), the following cases are possible:

-

1.

\(\dim _{3VC}({\mathcal {F}}, \gamma )=\dim _{VC}({\mathcal {F}})\) for all \(\gamma \). In this case, if \(\dim _{VC}({\mathcal {F}})<\infty \), then \({\mathcal {F}}^c_{\gamma }\) preserves PAC learnability.

-

2.

\(\dim _{3VC}({\mathcal {F}},\gamma )= \infty \) and \(\dim _{VC}({\mathcal {F}})<\infty \). The new class \(\mathcal F^c_{\gamma }\) has more shattering capacity than the original one. By Thm. 1, a convergent learning method based on empirical risk minimization is not available for such \(\gamma \).

-

3.

In the case of finite and distinct dimensions, PAC learnability is preserved.

-

\(\dim _{3VC}({\mathcal {F}}, \gamma ) \ne \dim _{VC}({\mathcal {F}})\) but \(\lim _{\gamma \rightarrow 0}\dim _{3VC}({\mathcal {F}}, \gamma )=\dim _{VC}({\mathcal {F}})\). Since the dimensions are natural numbers, both coincide for every sufficiently small \(\gamma \) (so, for \(\gamma \) small enough, we are reduced to case (1)).

-

\(\lim _{\gamma \rightarrow 0}\dim _{3VC}({\mathcal {F}}, \gamma )\ne \dim _{VC}({\mathcal {F}})\). In this case, for all sufficiently small \(\gamma \), the dimension remains constant and greater than \(\dim _{VC}({\mathcal {F}})\). Therefore, contractions of \({\mathcal {F}}\) can be used to work with the regions \(BND_{f^{\varepsilon }}\) in order to shatter larger sets. However, the bounds on the empirical error could be greater than those of the original hypothesis class.

-

Despite increasing the VC dimension (which may be a problem), case (3) could be of interest when working with discrete data. It could even be interesting to study the maximum value of \(\dim _{3VC}({\mathcal {F}},\gamma )\) (e.g., for tasks such as Categorization). This would imply a better ability to characterize datasets (learning) from their available attributes/features. On the downside, however, the new concepts may not be easily interpretable. This issue will be revisited for a particular case in the second part of the paper.

5.1 Differential VC dimension

Replacing \({\mathcal {F}}\) by \(\overline{{\mathcal {F}}}^{3WD}\) can be harmful for the efficiency of the ML model, because it can increase both the VC dimension (which may even become infinite) and the dimension of the dataset itself. However, ML procedures usually work on finite subclasses of \(\overline{{\mathcal {F}}}^{3WD}\). This suggests studying a version of the VC dimension for finite extensions.

Definition 17

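The differential VC dimension of \(\overline{{\mathcal {F}}}^{3WD}\) is

$$\begin{aligned}\dim _{DVC}(\overline{{\mathcal {F}}}^{3WD}):=\sup _{{\mathcal {F}}_0 \subseteq \overline{{\mathcal {F}}}^{3WD},\ |{\mathcal {F}}_0|<\infty }\dim _{VC}({\mathcal {F}}_0)\end{aligned}$$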

The following result guarantees that it is possible to work with finite extensions for any hypothesis class.

Proposition 3

\(\dim _{DVC} ({\mathcal {F}})= \dim _{VC}({\mathcal {F}})\)

Proof

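Clearly, \(\dim _{DVC}({\mathcal {F}})\le \dim _{VC}({\mathcal {F}})\), since a subclass of \({\mathcal {F}}\) can only shatter sets that \({\mathcal {F}}\) shatters. Consider now a finite set \(A\subseteq U\) shattered by \({\mathcal {F}}\). Choosing, for each \(B\subseteq A\), a function of \({\mathcal {F}}\) whose trace on A is B, we obtain a finite \({\mathcal {F}}_0 \subseteq {\mathcal {F}}\) (with at most \(2^{|A|}\) elements) such that \(A\cap {\mathcal {B}}_{{\mathcal {F}}_0 } = {\mathcal {P}} (A)\). Therefore

$$\begin{aligned}|A|\le \dim _{VC}({\mathcal {F}}_0)\le \dim _{DVC}({\mathcal {F}})\end{aligned}$$

hence \(\dim _{VC}({\mathcal {F}})\le \dim _{DVC}({\mathcal {F}})\). \(\square \)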

The relationship between \(\dim _{VC}({\mathcal {F}})\) and \(\dim _{VC}(\overline{{\mathcal {F}}}^{3WD})\) for a given 3WD closure remains to be studied.

So far, a general analysis of the enhancement of \({\mathcal {F}}\) to \(\overline{{\mathcal {F}}}^{3WD}\) has been developed. The following sections instantiate this general framework for the case of FFCA.

6 The semantic VC dimension

This section studies how, in FCA, the extension/transformation of the attribute set influences the ability of a formal context to approximate a set by means of concepts. FCA provides a learning model: a formal context \({\mathbb {K}} =(G, M, I)\) induces a hypothesis class \(ext({\mathbb {K}})\) composed of the extents of its concepts, i.e., of the elements of \({\mathfrak {B}} ({\mathbb {K}})\) [26, 28].

The concepts (actually, their characteristic functions) can be extended (or transformed) to another class of functions through some 3WD method, yielding new contexts of the type \((G,{\mathcal {F}}, I_{{\mathcal {F}}})\), as defined in the introduction. First, let us examine the crisp case of FCA. The so-called semantic VC dimension is the direct translation of the VC dimension to FCA.

Definition 18

Let \({\mathbb {K}} = (G,M,I)\) be a formal context and \(O\subseteq G\).

-

1.

\(O\subseteq G\) is learned by \({\mathbb {K}}\) if O is the extent of a concept.

-

2.

\({\mathbb {K}}\) shatters O if

$$\begin{aligned} O \cap \{ ext(C) \ : \ C \in {\mathfrak {B}} ({\mathbb {K}})\} = {\mathcal {P}} (O)\end{aligned}$$

-

3.

The semantic VC dimension of \({\mathbb {K}}\) (also called SVC dimension, denoted by \(\dim _{VC}^s({\mathbb {K}})\)) is the maximum size of an object set shattered by \({\mathbb {K}}\).

Since \(|{\mathfrak {B}} ({\mathbb {K}})|\le 2^{|M|}\) and \(2^{|O|}\) concepts are required to shatter an object set O, it follows that \(\dim _{VC}^s({\mathbb {K}})\le |M|\). In functional terms, the hypothesis class would be

Example 4

Considering \({\mathbb {K}}\) from Fig. 4, we have \(\dim ^s_{VC}({\mathbb {K}})\ge 2\), because

and it cannot be 3, since that would require \(|{\mathfrak {B}} ({\mathbb {K}})|\ge 8\).
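The semantic VC dimension of a finite crisp context can be computed by brute force: list the extents \(B'\) for all attribute sets \(B\subseteq M\), then look for the largest object set whose traces give all its subsets. The context in this sketch is hypothetical (it is not the one in Fig. 4) and attains the bound \(\dim _{VC}^s({\mathbb {K}})\le |M|\); the function names are ours.

```python
from itertools import combinations

# Hypothetical crisp context (not the one of Fig. 4): object -> set of attributes.
CONTEXT = {
    "g1": {"a", "b"},
    "g2": {"b", "c"},
    "g3": {"a", "c"},
    "g4": set(),
}


def extents(context):
    """All extents B' for B ranging over the subsets of the attribute set M."""
    attributes = sorted(set().union(*context.values()))
    return {
        frozenset(g for g, attrs in context.items() if set(B) <= attrs)
        for k in range(len(attributes) + 1)
        for B in combinations(attributes, k)
    }


def shatters(exts, O):
    """Definition 18(2): every subset of O is the trace on O of some extent."""
    O = frozenset(O)
    return len({O & E for E in exts}) == 2 ** len(O)


def semantic_vc_dimension(context):
    """Size of the largest object set shattered by the context."""
    exts, objects, d = extents(context), sorted(context), 0
    for k in range(1, len(objects) + 1):
        if not any(shatters(exts, O) for O in combinations(objects, k)):
            break  # if no k-set is shattered, no larger set can be
        d = k
    return d
```

The eight extents shatter {g1, g2, g3}, so the dimension reaches |M| = 3; g4 can never be separated from the other objects, because the only extent containing it is G.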

6.1 Related notions

The dimension defined above is global and exogenous in nature. That is, all the elements of the lattice \({\mathfrak {B}} ({\mathbb {K}})\) can be used (global) to shatter any set of the data space, which is not restricted to concept extents (exogenous). This feature contrasts with another VC dimension for lattices introduced in [12].

In [12], Cambie et al. introduce a VC-type dimension that is partial, in the sense that it is computed for subsets F of a ranked lattice \(\langle L, \le \rangle \) instead of for the full lattice. Additionally, it is endogenous in nature, that is, it concerns those elements of the lattice itself that F shatters by means of the meet operation. Formally, \(F\subset L\) is said to shatter an element \(c\in L\) if and only if

From this notion of shattering, the corresponding VC dimension follows naturally: \(\dim _{VC}^*(F)\) is the maximum rank of the elements shattered by F. A lattice is called an SSP lattice [12] if the following version of the Sauer-Shelah-Perles Lemma holds for any F

Whether SSP lattices are exactly the relatively complemented ones (those that do not have any 3-element interval) is an open problem.

For finite concept lattices, one can use the height as the rank function: the height of an element is the supremum of the lengths of all chains joining the least element of the lattice with it. In this case, any concept C whose extent is shattered in the sense of our definition induces the lattice of all subsets of ext(C). Therefore the height (used as rank) of such a concept C is |ext(C)| and, consequently, both shattering notions coincide on concept extents.

The so-called contranominal scale provides a bridge between the semantic dimension and other studies on concept lattices. Given a set A, its contranominal scale is the context \(N^c(A)=(A,A,\ne )\). If A is shattered, then the formal context \({\mathbb {K}}_A =(A,M,I_{\restriction A\times M})\) is isomorphic to \(N^c(A)\) ([3], Lemma 29). If, in addition, A is the extent of a concept, then \({\mathfrak {B}} ({\mathbb {K}}_A)\) is also a sublattice of \({\mathfrak {B}} ({\mathbb {K}})\). The semantic dimension would then be

In this way, the following result, due to Albano (Th. 3 in [2]), follows from the bound on the size of the concept lattice:

In a later work [3], Albano and Chornomaz complement the results of [2] by studying B(k)-free contexts, that is, contexts whose concept lattice does not admit an (order) embedding of B(k), the Boolean lattice with k atoms. In our terms, these are the contexts with \(\dim _{VC}^s({\mathbb {K}}) < k\). In this case:

Moreover, this bound is sharp (Sect. 4 in [3]).
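The role of the contranominal scale can be checked directly on small sets: in \(N^c(A)=(A,A,\ne )\) we have \(B'=A\setminus B\) for every \(B\subseteq A\), so every subset of A is an extent, the lattice has \(2^{|A|}\) concepts and A is shattered. A minimal brute-force sketch (the helper names are ours):

```python
from itertools import combinations


def contranominal_extents(A):
    """Extents of the contranominal scale (A, A, !=), computed as B' for every B."""
    A = list(A)
    extents = set()
    for k in range(len(A) + 1):
        for B in combinations(A, k):
            # g has attribute m iff g != m, hence B' = A minus B
            extents.add(frozenset(g for g in A if all(g != m for m in B)))
    return extents


def shattered(extents, O):
    """O is shattered if the traces of the extents on O give all of P(O)."""
    O = frozenset(O)
    return len({O & E for E in extents}) == 2 ** len(O)
```

For example, `contranominal_extents(range(4))` returns all 16 subsets of {0, 1, 2, 3}.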

6.2 Semantic dimension and Learning consistency in FCA

The semantic VC dimension makes it possible to analyze features of FCA as a model for ML. Note that using FCA may involve working with complex ML models.

Example 5

(Formal context with \(\dim _{VC}(M)<\infty \) but \(\dim _{VC}^s({\mathbb {K}})=\infty \)). Let \({\mathbb {K}}_C = ({\mathbb {R}}^2, {\mathcal {H}} , \in )\) where \(\mathcal H\) is the set of half-planes in \({\mathbb {R}}^2\), and let Convex be the class of plane closed convex sets.

It is straightforward to see that \(C=(X,Y) \in {\mathfrak {B}}(\mathbb K_C)\) if and only if X is a closed convex set, \(Y=\{ h \in \mathcal H \ : \ X\subseteq h\} \) and \(X=\bigcap Y\). Therefore, the extents of \({\mathfrak {B}} ({\mathbb {K}}_C)\) are exactly the closed convex sets in the plane, i.e., the class Convex. Thus,

although \(\dim _{VC}({\mathcal {H}})=3\).
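The infinite semantic dimension of \({\mathbb {K}}_C\) can be made tangible with a standard construction, sketched here under our own choices: n points on the unit circle and, for each subset S, one closed half-plane \(\{x:\langle x,p\rangle \le c_p\}\) per excluded point p, with \(c_p\) halfway between \(\max _{q\in S}\langle q,p\rangle \) and \(\langle p,p\rangle =1\). The intersection of these finitely many half-planes (a convex polygon, possibly unbounded) is the extent \(Y_0'\) of a concept and picks out exactly S, so the n points are shattered for every n tested. The function names are hypothetical.

```python
import math
from itertools import combinations


def points_on_circle(n):
    """n distinct points in convex position (on the unit circle)."""
    return [(math.cos(2 * math.pi * k / n), math.sin(2 * math.pi * k / n)) for k in range(n)]


def dot(u, v):
    return u[0] * v[0] + u[1] * v[1]


def halfplanes_for(subset, excluded):
    """One closed half-plane {x : <x, p> <= c_p} per excluded point p."""
    planes = []
    for p in excluded:
        inner = max((dot(q, p) for q in subset), default=0.0)
        planes.append((p, (inner + 1.0) / 2))  # c_p lies strictly between the subset and p
    return planes


def shattered_by_polygons(points):
    """Check that every subset is cut out by a finite intersection of half-planes."""
    for k in range(len(points) + 1):
        for subset in combinations(points, k):
            excluded = [p for p in points if p not in subset]
            planes = halfplanes_for(subset, excluded)
            picked = {q for q in points if all(dot(q, p) <= c for p, c in planes)}
            if picked != set(subset):
                return False
    return True
```

Because each witness uses finitely many half-planes, the same construction also exhibits finitely generated concepts.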

Example 6

(formal context with finite VC dimension distinct from the VC dimension of the original hypothesis class). Let \({\mathbb {K}}_R = ({\mathbb {R}}^2 , {\mathcal {F}}, \in )\) where

Then \(\dim _{VC}({\mathcal {F}}) =2\), but \(\dim _{VC}^s({\mathbb {K}}_R)=4\), because the concept extents are the axis-parallel rectangles and the (infinite) axis-parallel bands.
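For Example 6, a finite point set is shattered by closed axis-parallel rectangles exactly when, for every subset S, the bounding box of S contains no other point of the set. The sketch below applies this criterion (helper names are ours) to a "diamond" of four points, which is shattered, and to the same diamond plus its centre, which is not; it checks these two configurations only and does not by itself prove that no five-point set is shattered.

```python
from itertools import combinations


def bounding_box_trace(points, subset):
    """Points inside the smallest closed axis-parallel rectangle containing subset."""
    if not subset:
        return set()  # the empty trace comes from a rectangle containing no point
    xs = [p[0] for p in subset]
    ys = [p[1] for p in subset]
    return {p for p in points
            if min(xs) <= p[0] <= max(xs) and min(ys) <= p[1] <= max(ys)}


def shattered_by_rectangles(points):
    """A set is shattered iff each subset equals the trace of its own bounding box."""
    return all(bounding_box_trace(points, S) == set(S)
               for k in range(len(points) + 1)
               for S in combinations(points, k))


DIAMOND = [(0, 1), (1, 0), (0, -1), (-1, 0)]
```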

As shown above, finite class approximations can also be used for the semantic VC dimension.

Corollary 1

\(\dim _{DVC}({\mathbb {K}})= \dim _{VC}^s({\mathbb {K}})\)

Proof

Apply Prop. 3 to the class \({\mathcal {F}} =M\). \(\square \)

Definition 19

Let \({\mathbb {K}}= (G,M,I)\) be a formal context and \(X\subseteq G\). The semantic granularity is

and

Roughly speaking, the following result states that, for formal contexts with finite SVC dimension, one should not expect many subsets of large concepts to be semantically characterized.

Corollary 2

Let \(C \in {\mathfrak {B}} ({\mathbb {K}})\). If \(\dim ^s_{VC}({\mathbb {K}}) = d < \infty \) then

Proof

Let \(C=(X,Y) \in {\mathfrak {B}} ({\mathbb {K}})\).

Since C is a concept, every set in the trace \(ext(C)\cap {\mathfrak {B}} ({\mathbb {K}})\) is already the extent of a concept: if \(A=ext(C)\cap ext(D)\) for some \(D \in {\mathfrak {B}} ({\mathbb {K}})\), then \(A=ext(C\wedge D)\). Therefore

\(\square \)
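The proof shows that the sets \(ext(C)\cap ext(D)\) are the traces of the extents on ext(C). A standard way to bound how many there can be is the Sauer-Shelah lemma: a class of VC dimension d has at most \(\sum _{i=0}^{d}\binom{n}{i}\) distinct traces on an n-element set. We do not claim this is the exact form of the bound in Corollary 2; the sketch only checks the Sauer-Shelah bound, and the closure of traces used in the proof, for every concept of a hypothetical "chain" context of semantic dimension 1.

```python
from itertools import combinations
from math import comb

# Hypothetical "chain" context: object g in 1..6 has attribute "m<k>" iff g >= k.
CONTEXT = {g: {f"m{k}" for k in range(1, g + 1)} for g in range(1, 7)}


def extents(context):
    """All extents B' for B ranging over the subsets of M."""
    attributes = sorted(set().union(*context.values()))
    return {frozenset(g for g, attrs in context.items() if set(B) <= attrs)
            for k in range(len(attributes) + 1)
            for B in combinations(attributes, k)}


def semantic_vc_dimension(exts, objects):
    """Largest size of an object set whose traces give all of its subsets."""
    d = 0
    for k in range(1, len(objects) + 1):
        if not any(len({frozenset(O) & E for E in exts}) == 2 ** k
                   for O in combinations(objects, k)):
            break  # if no k-set is shattered, no larger set can be
        d = k
    return d


def sauer_shelah(n, d):
    """Sauer-Shelah bound on the number of traces of a VC-dimension-d class on n points."""
    return sum(comb(n, i) for i in range(d + 1))


EXTS = extents(CONTEXT)
D = semantic_vc_dimension(EXTS, sorted(CONTEXT))
```

For the top concept (extent of size 6) there are 6 distinct traces, against a Sauer-Shelah bound of 7.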

By Thm. 1, the following consequence holds; it is of interest for infinite formal contexts.

Corollary 3

Any formal context with finite SVC dimension is PAC learnable.

Moreover, by Lemma 2, the SVC dimension does not increase under certain kinds of pre-processing:

Corollary 4

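Let \({\mathbb {K}}=(G,M,I)\) and \(f:G' \rightarrow G\). Let \({\mathbb {K}}_f =(G',M,I_f)\), where

$$\begin{aligned}g'\, I_f\, m \Longleftrightarrow f(g')\, I\, m \quad \text{ for } \text{ all } (g',m)\in G'\times M\end{aligned}$$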

Then \(\dim ^s_{VC} ({\mathbb {K}}_f )\le \dim ^s_{VC} ({\mathbb {K}})\).
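Corollary 4 can be sanity-checked on a small example. The sketch assumes the natural reading of the pulled-back relation, \(g'\, I_f\, m\) iff \(f(g')\, I\, m\), and uses a hypothetical context and map (all names are ours). Objects with the same image under f have the same attributes, so no extent separates them, and duplicating objects cannot create new shattered sets.

```python
from itertools import combinations


def extents(context):
    """All extents B' for B ranging over the subsets of M."""
    attributes = sorted(set().union(*context.values()))
    return {frozenset(g for g, attrs in context.items() if set(B) <= attrs)
            for k in range(len(attributes) + 1)
            for B in combinations(attributes, k)}


def semantic_vc_dimension(context):
    """Brute-force SVC dimension of a finite crisp context."""
    exts, objects, d = extents(context), sorted(context), 0
    for k in range(1, len(objects) + 1):
        if not any(len({frozenset(O) & E for E in exts}) == 2 ** k
                   for O in combinations(objects, k)):
            break  # if no k-set is shattered, no larger set can be
        d = k
    return d


def pull_back(context, f, new_objects):
    """K_f: each g' gets exactly the attributes of f(g') in K (assumed reading of I_f)."""
    return {h: set(context[f(h)]) for h in new_objects}


# Hypothetical context K and pre-processing map f: G' -> G.
K = {"g1": {"a", "b"}, "g2": {"b", "c"}, "g3": {"a", "c"}, "g4": set()}
f = {"h1": "g1", "h2": "g1", "h3": "g2", "h4": "g3", "h5": "g4"}.get
K_f = pull_back(K, f, ["h1", "h2", "h3", "h4", "h5"])
```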

The new notion of dimension introduced below results from applying the 3WD paradigm, as introduced in Sect. 5, to fuzzy formal contexts.

A fuzzy f.c. \({\mathbb {K}} =(G,M,I)\) has a default semantic dimension, associated with the operator cwa,

$$\begin{aligned}\dim _{VC}^s({\mathbb {K}}):=\dim _{VC}^s((G,M,I_{cwa}))\end{aligned}$$

where \(gI_{cwa}m \Longleftrightarrow \mu _I(g,m)=1\) (i.e., \(cwa(\mu _{m}(g))=1\)). The following example will be revisited later.

Example 7

Let \({\mathbb {K}}_{circ} =({\mathbb {R}}^2,{\mathbb {R}}^2,I)\) where

Thus

Therefore \(I_{cwa}\) is the identity relationship on \({\mathbb {R}}^2\), “=”. It is not difficult to check that \(\dim _{VC}^s((\mathbb R^2,{\mathbb {R}}^2,=))=1\).

6.3 Semantic differential dimension

The Differential VC dimension (DVC dimension, Subsect. 5.1) can be instantiated for fuzzy formal contexts once the extension \({\mathcal {F}}^c\) has been built. The hypothesis class associated with \({\mathbb {K}}\) is composed of the membership functions of its fuzzy attributes,

$$\begin{aligned}{\mathcal {F}} [{\mathbb {K}}]:=\{ \mu _m \ : \ m\in M\}\end{aligned}$$

Therefore, we actually work with its contraction, \(M_{\gamma }^c := {\mathcal {F}} [{\mathbb {K}}]_{\gamma }^{c}\). That is,

Definition 20

Let \(m\in M\) and \(\varepsilon < 1\). The crisp predicate defined by \(\varepsilon \)-contraction, \(m^{\varepsilon }\), is the attribute defined by

That is,

Remark 1

In this way, the FCA derivation operator on attributes can be made compatible with the idea introduced in the first part of the paper:

Definition 21

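Given \({\mathcal {F}} \subseteq M^c_{\gamma }\), the crisp formal context induced by \({\mathcal {F}}\) is the formal context

$$\begin{aligned}{\mathbb {K}}[{\mathcal {F}}]:=(G,{\mathcal {F}},I_{{\mathcal {F}}})\end{aligned}$$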

where \((g,m^{\varepsilon })\in I_{{\mathcal {F}}} \Longleftrightarrow m^{\varepsilon }(g)=1\) for all \((g,m^{\varepsilon })\in G\times {\mathcal {F}}\).

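In this way, for all \(A\subseteq G\) and \(B \subseteq {\mathcal {F}}\),

$$\begin{aligned}A'=\{ m^{\varepsilon }\in {\mathcal {F}} \ : \ 1-\varepsilon \le \mu _m (g) \text{ for } \text{ all } g\in A\} \end{aligned}$$

and \( B' = \{ g \in G \ : \ 1- \varepsilon \le \mu _m (g) \text{ for } \text{ all } m^{ \varepsilon }\in B\} \).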
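The construction of \({\mathbb {K}}[{\mathcal {F}}]\) is easy to mechanize: each chosen pair \((m,\varepsilon )\) becomes a crisp attribute \(m^{\varepsilon }\) held by the objects with \(\mu _m(g)\ge 1-\varepsilon \). In the following sketch the degrees and the family are hypothetical and the function names are ours; the family may contain several levels of the same fuzzy attribute, as discussed next.

```python
# Hypothetical fuzzy degrees MU[g][m].
MU = {
    "g1": {"m1": 1.0, "m2": 0.7},
    "g2": {"m1": 0.8, "m2": 1.0},
    "g3": {"m1": 0.5, "m2": 0.9},
}


def induced_context(mu, family):
    """K[F]: attributes are pairs (m, eps); g I (m, eps) iff mu_m(g) >= 1 - eps."""
    return {g: {(m, eps) for (m, eps) in family if row[m] >= 1 - eps}
            for g, row in mu.items()}


def derive_objects(context, A, family):
    """A': crisp attributes m^eps shared by all objects in A."""
    return {a for a in family if all(a in context[g] for g in A)}


def derive_attributes(context, B):
    """B': objects having every crisp attribute m^eps in B."""
    return {g for g, attrs in context.items() if set(B) <= attrs}


# Two levels (eps = 0 and eps = 0.25) for each fuzzy attribute.
family = [("m1", 0.0), ("m1", 0.25), ("m2", 0.0), ("m2", 0.25)]
K_F = induced_context(MU, family)
```

For instance, \(m_1^{0.25}\) is held by g1 and g2 (degrees at least 0.75), while \(m_1^{0}\) is held by g1 only.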

Two issues should now be addressed. On the one hand, as already noted, if the attributes are regarded as data dimensions, the step from \({\mathcal {F}}\) to \({\mathcal {F}}^c\) involves an increase in dimensionality. On the other hand, recall that the contractions \(c_{\varepsilon ,\delta }\) apply to the outputs of the attributes. That is, we must work with

(in terms of fuzzy logic, these could be, for example, external modifiers of the fuzzy predicates, as illustrated in Ex. 1). Thus, the bound on the VC dimension given in Lemma 2 does not apply and, as a consequence, considering the new class \(\overline{{\mathcal {F}}}^{3WD}\) may increase the VC dimension.

Example 8

Consider, for example, the context \({\mathbb {K}}\) from Fig. 7. To transform \({\mathbb {K}}\) into a classical formal context, the indecision of each attribute m must be resolved for every object g with \(0<m(g)<1\), that is, for the objects in the region \(BND_{m}\), by selecting crisp attributes \(m^{\varepsilon }\).

More than one crisp predicate can be chosen for the same fuzzy attribute (e.g., as a consequence of using several external modifiers). Thus, the SVC dimension can exceed |M|. For example, if only one defuzzification of each attribute is taken (as with \({\mathbb {K}}_1\)), the three-object set cannot be shattered, since \(\dim _{VC}^s({\mathbb {K}}_1 )\le 2\). However, by making multiple defuzzifications (that is, multiple decisions about the region \(BND_{\mu _{m}}\)), this bound can be surpassed (see the formal context \({\mathbb {K}}_2\)).

In general, it may happen that \(\dim ^s_{VC}({\mathbb {K}} [\mathcal F])=\infty \). To avoid this, we consider the FFCA version of the DVC dimension (based on finite classes).

Definition 22

Let \({\mathbb {K}}= (G,M,I)\) be a fuzzy formal context and \(0 \le \gamma <1\).

-

1.

A 3WD defuzzification of \({\mathbb {K}}\) of level \(\gamma \) is a set \({\mathcal {F}} \subseteq M^c_{\gamma }\).

-

2.

The differential semantic VC dimension (DVC) of \({\mathbb {K}}\) of level \(\gamma \) is

$$\begin{aligned}\displaystyle \dim _{DVC}({\mathbb {K}},\gamma ):= \sup _{{\mathcal {F}} \subseteq M^c_{\gamma }, |{\mathcal {F}}|<\infty } \dim _{VC}^s({\mathbb {K}}[{\mathcal {F}}])\end{aligned}$$

Example 9

(Formal context \({\mathbb {K}}\) with \(\dim _{DVC}({\mathbb {K}},\gamma ) \ne \dim _{VC}^s({\mathbb {K}})\)). Fig. 7 shows two defuzzifications of a fuzzy formal context \({\mathbb {K}}\). The first one uses the set \(\{m_1^{0.2},m_2^{0.2},m_3^{0.15}\}\); the resulting formal context has SVC dimension 2. However, for \({\mathbb {K}}_2\) it holds that

(G itself is shattered), so \(\dim _{DVC}({\mathbb {K}},0.4)=3\).
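A brute-force sketch of the phenomenon behind Definition 22 and Example 9, on hypothetical degrees (not those of Fig. 7): three objects and three fuzzy attributes with cyclically arranged degrees. Taking every attribute at level \(\varepsilon =0\) yields a crisp context of SVC dimension 2, while adding the copies at level \(\varepsilon =0.15\) yields a finite defuzzification that shatters G. Hence \(\dim _{DVC}({\mathbb {K}},\gamma )=3\) for every \(\gamma \ge 0.15\), since the dimension cannot exceed |G| = 3. The function names are ours.

```python
from itertools import combinations

# Hypothetical fuzzy degrees MU[g][m], arranged cyclically.
MU = {
    "g1": {"m1": 1.0, "m2": 0.7, "m3": 0.9},
    "g2": {"m1": 0.9, "m2": 1.0, "m3": 0.7},
    "g3": {"m1": 0.7, "m2": 0.9, "m3": 1.0},
}


def induced_context(mu, family):
    """g has crisp attribute (m, eps) iff mu_m(g) >= 1 - eps."""
    return {g: {(m, e) for (m, e) in family if row[m] >= 1 - e} for g, row in mu.items()}


def svc(context, family):
    """Semantic VC dimension of the finite crisp context K[family], by brute force."""
    exts = {frozenset(g for g, attrs in context.items() if set(B) <= attrs)
            for k in range(len(family) + 1) for B in combinations(family, k)}
    objects, d = sorted(context), 0
    for k in range(1, len(objects) + 1):
        if not any(len({frozenset(O) & E for E in exts}) == 2 ** k
                   for O in combinations(objects, k)):
            break  # if no k-set is shattered, no larger set can be
        d = k
    return d


level_0 = [(m, 0.0) for m in ("m1", "m2", "m3")]
two_levels = level_0 + [(m, 0.15) for m in ("m1", "m2", "m3")]
```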

Example 10

(extension of an infinite formal context with a finite attribute set that preserves the finiteness of the VC dimension). Consider the fuzzy formal context \({\mathbb {K}} = ( G,M,I)\), where \(G=\{g_n \ : \ n\in {\mathbb {N}}\}\), \(M=\{m_1,m_2\}\) and I is defined by

Consider the hypothesis class \({\mathcal {F}}\subseteq M^c_{1}\) defined by

where

Thus the incidence relation of the formal context \({\mathbb {K}} [{\mathcal {F}}]\) can be expressed as follows (see Fig. 8):

In view of the above, \((X,Y)\in {\mathfrak {B}}({\mathbb {K}} [{\mathcal {F}}])\) if and only if

-

\(X=\{ g_n \ : \ k_0 \le n \le k_1 \}\), where \(k_0=\sup \{k \ : \ m_1^{\varepsilon (1,k)}\in Y \}\) and \(k_1= \inf \{k \ : \ m_2^{\varepsilon (1,k)}\in Y \}\); and

-

\(Y = \{ m_1^{\varepsilon (1,1)}, \dots , m_1^{\varepsilon (1,k_0)} \} \cup \{ m_2^{\varepsilon (1,k_1)}, \dots \} \)

Reasoning as with the intervals in \({\mathbb {R}}\), it is easy to see that \(\dim _{VC}^s({\mathbb {K}} [{\mathcal {F}}])=2\).
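The interval argument can be checked by brute force on a finite prefix \(\{g_1,\dots ,g_N\}\) of G, using as extents the "intervals" \(\{g_n : k_0\le n\le k_1\}\) described above (objects are represented by their indices; names are ours). Two objects can always be shattered, but no three can, because any interval containing the outer two also contains the middle one.

```python
from itertools import combinations

N = 8  # size of the finite prefix g_1, ..., g_N used in this sketch


def interval_extents(n):
    """All extents {g_k : k0 <= k <= k1} with 1 <= k0 <= k1 <= n, as index sets."""
    return {frozenset(range(k0, k1 + 1))
            for k0 in range(1, n + 1) for k1 in range(k0, n + 1)}


def vc_dimension(extents, points):
    """Largest size of a point set whose traces give all of its subsets."""
    d = 0
    for k in range(1, len(points) + 1):
        if not any(len({frozenset(O) & E for E in extents}) == 2 ** k
                   for O in combinations(points, k)):
            break  # if no k-set is shattered, no larger set can be
        d = k
    return d
```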

Fig. 8 The formal context \({\mathbb {K}} [{\mathcal {F}}]\) from Example 10

The above example raises the following issue: for a formal context with an infinite object set, the VC dimension of some defuzzification might be infinite even if M is finite. Sect. 8 shows that this cannot happen when \(M_{\gamma }^c\) is used. Moreover, finite approximations can be used:

Proposition 4

\(\dim _{DVC}({\mathbb {K}}, \gamma ) = \dim _{VC}^s({\mathbb {K}} [M^c_{\gamma }])\)

Proof

Apply Thm. 3. \(\square \)

A straightforward consequence of the proposition is that PAC learnable classes can be obtained from the concepts of finite subcontexts induced by finite subclasses of \(M^c_{\gamma }\).

Example 11

(Fuzzy formal context with DVC dimension different from its default VC dimension) In Example 7 it was shown that \(\dim _{VC}^s(\mathbb K_{circ})=1\). Consider \(\varepsilon > 0\). Then

Thus

Therefore, \(M^c_{\gamma }\) defines the class of circles (with radius bounded by \(\gamma /(1-\gamma )\)). Then \(\dim _{VC}(M^c_{\gamma })=3\) and \(\dim _{VC}^s({\mathbb {K}}[M^c_{\gamma }])=\infty \) (see Example 12).

Corollary 5

Suppose that \(\dim _{VC}^s({\mathbb {K}}[M^{c}_{\gamma }])<+\infty \). Then the class

is a PAC class.

Suppose that \(\dim _{VC}^s({\mathbb {K}})<+\infty \). Regarding the relationship between \(\dim _{VC}^s({\mathbb {K}})\) and \(\dim _{DVC}({\mathbb {K}},\gamma )\), by Prop. 4 the possible cases are:

-

\(\dim _{VC}^s({\mathbb {K}})=\dim _{DVC}({\mathbb {K}},\gamma )\). Then using functions from \(M_{\gamma }^c\) provides no additional shattering capacity, and the new context preserves PAC learnability.

-

\(\dim _{VC}^s({\mathbb {K}})<\dim _{DVC}({\mathbb {K}},\gamma ) < +\infty \). Then there exists a finite family \(\{m_i^{\varepsilon _j}\}_{i,j}\) such that

$$\begin{aligned}\dim ^s_{VC}({\mathbb {K}}[\{m_i^{\varepsilon _j}\}_{i,j}])=\dim _{DVC}({\mathbb {K}},\gamma )\end{aligned}$$ which provides an extension of \({\mathbb {K}}\) with maximum VC dimension that preserves PAC learnability. This kind of formal context could be interesting for concept learning: a new (finite) set of functions can be added while preserving PAC learnability.

-

Finally, it may happen that \(\dim _{DVC}(\mathbb K,\gamma ) =+\infty \). In this case, the new contexts are not useful for PAC learning, and the set of new predicates would have to be refined. We will see that this cannot happen if M is finite.

The analogue of Lemma 1, which shows that any finite subclass of \({\mathcal {F}}\) can be used, is the following:

Corollary 6

Suppose \(\dim _{DVC}({\mathbb {K}}, \gamma )<+\infty \). Then for any finite \({\mathcal {F}}\subseteq M^c_{\gamma }\)

Proof

Let \(d=\dim _{DVC}({\mathbb {K}}, \gamma )<+\infty \). Note that by Prop. 3 we have

Let \({\mathcal {F}}_0\subseteq M^c_{\gamma }\) be finite with \(\dim ^s_{VC}({\mathbb {K}} [{\mathcal {F}}_0])=d\). Note that, in general, if \({\mathcal {F}}\subseteq {\mathcal {F}}'\), then \(s_{({\mathbb {K}}[{\mathcal {F}}], {\mathcal {F}})}(n) \le s_{({\mathbb {K}}[{\mathcal {F}}'], {\mathcal {F}}')} (n)\). We have:

\(\square \)

7 Learning with finitely generated concepts

As with FCA, using FFCA as a concept learning model has its drawbacks. For example, it may involve concepts that cannot be specified by a finite set of attributes. This section examines this issue.

In the case of a formal context with finite VC dimension, Thm. 1 ensures convergence: if, for each sample \(S_n\), a function \(f_{D_n}\) minimizing the empirical risk is selected, then

which, in the case of FCA and the loss \(Q(x,y,f)=|y-f(x)|\), can be rewritten as:

Hence, in the sample, the difference between A and the chosen concept is close in probability to 0.

For FFCA, the defuzzification of the class \({\mathcal {F}}^c_{\gamma }\) brings the learning problem back to FCA, with the peculiarity that a potentially infinite attribute set must be handled. This may be a difficulty, since natural conceptualization processes often characterize concepts by finite attribute sets. Therefore, it is necessary to study ERM consistency using only finitely generated concepts, in the following sense:

Definition 23

Let \({\mathbb {K}} = (G,M,I)\) be a formal context.

-

\(C=(X,Y) \in {\mathfrak {B}} ({\mathbb {K}})\) is a finitely generated concept if there exists a finite \(Y_0\subseteq M\) such that \(Y_0''=Y\).

-

\({\mathbb {K}}\) is finitely generated if any concept of \(\mathbb K\) is finitely generated.

-

\({\mathfrak {B}}_f ({\mathbb {K}})\) is the sub-lattice of \({\mathfrak {B}} ({\mathbb {K}})\) whose elements are finitely generated concepts.

7.1 Some examples

Example 12

(fuzzy formal context with infinite VC dimension, generated by a class of finite VC dimension but with non-f.g. concepts) Consider the class

where the functions \(f_{\mathbf{c}, r} : {\mathbb {R}}^2 \rightarrow {\mathbb {R}}\) are fuzzy membership functions for circles in \(\mathbb R^2\), defined by

We define the fuzzy formal context

(where \(\mu _I(p,f)=f(p)\)).

It holds that \(\dim _{VC}({\mathcal {F}})=3\), whereas \(\dim ^s_{VC}({\mathbb {K}}_O)=\infty \). To see the latter, note, for example, that a segment \({\overline{AB}}\) is the extent of a concept, since

The same holds for any convex polygon. Thus these concepts are not f.g. in \({\mathbb {K}}_O\), although they are f.g. in other formal contexts such as \({\mathbb {K}}_C\) (Example 5).

Example 13

The extents of \({\mathfrak {B}}_f ({\mathbb {K}}_C)\), where \({\mathbb {K}}_C\) is the formal context from Example 5, are the convex polygons. Therefore, \(\dim _{VC}({\mathfrak {B}}_f ({\mathbb {K}}_C))=\infty \) as well.

Example 14

(Formal context with finite semantic VC dimension that is not finitely generated) Let

It can be verified that \(\dim _{VC}^s(\mathbb K_r) =2\), since every concept of \({\mathbb {K}}_r\) is an angular region and the class of angular regions has VC dimension 2. Consider the following concept of \({\mathfrak {B}} ({\mathbb {K}}_r)\):

It is easy to check that \(C\in {\mathfrak {B}} ({\mathbb {K}}_r) \setminus {\mathfrak {B}}_f ({\mathbb {K}}_r)\).

Example 15

(Formal context that is not f.g. but satisfies \(\dim _{VC}({\mathfrak {B}}_f ({\mathbb {K}}))=\dim _{VC}^s({\mathbb {K}})\)). Let \({\mathbb {K}}_{\ge } = ( {\mathbb {R}}, {\mathbb {R}}, \ge )\). Note that any \(m\in {\mathbb {R}}\), considered as an attribute, satisfies

With this in mind, it is straightforward to see that

Therefore

However, the concept \(C=(\emptyset , {\mathbb {R}})\) is not f.g.
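A short derivation of the facts behind Example 15, under the reading that \(g\,I\,m\) holds iff \(g\ge m\) (this reading of the notation \(({\mathbb R},{\mathbb R},\ge )\) is our assumption). Every nonempty finite generator yields an intent bounded above, and the empty generator yields the empty intent, so no finite \(Y_0\) satisfies \(Y_0''={\mathbb R}\):

```latex
\begin{aligned}
&\{m\}' = \{g\in \mathbb{R} : g\ge m\} = [m,+\infty), \qquad \{m\}'' = (-\infty, m],\\
&Y_0' = [\max Y_0, +\infty), \qquad Y_0'' = (-\infty, \max Y_0] \ne \mathbb{R}
  \quad \text{for finite } \emptyset \ne Y_0 \subseteq \mathbb{R},\\
&\emptyset' = \mathbb{R}, \qquad \mathbb{R}' = \emptyset \ne \mathbb{R}
  \quad \text{(no object } g \text{ satisfies } g \ge m \text{ for every } m).
\end{aligned}
```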

7.2 Preserving PAC learnability working with f.g. concepts

Since conceptualization processes usually work with concepts characterized by a finite number of attributes (that is, f.g. concepts), we must study whether ERM consistency can be achieved using only f.g. concepts as the hypothesis class.

Note that any concept is approximable by f.g. concepts in the following sense: for any \(D \in {\mathfrak {B}} ({\mathbb {K}})\)

since \( D =\bigwedge \{ ( \{ m \}',\{ m \}'') \ : \ m\in int(D) \} \). Note that this fact does not imply a finite VC dimension: for the formal context \({\mathbb {K}}_C\) from Ex. 5, \(\dim _{VC}^s({\mathbb {K}}_C) =\dim _{VC}({\mathfrak {B}}_f ({\mathbb {K}}_C))=\infty \).

However, it is not necessarily true that every concept is finitely approximable by an enumerable sequence of f.g. concepts. That is, it is not true in general that every \(D\in {\mathfrak {B}} ({\mathbb {K}})\) can be characterized as

for some sequence \(\{ C_{n}\}_n\) of f.g. concepts. This property would allow replacing the concepts involved in ERM by f.g. concepts while preserving the convergence required in Def. 3.

Example 16

(Formal context with a concept not approximable by an enumerable sequence of f.g. concepts). Consider the contranominal scale on \({\mathbb {R}}\), \({\mathbb {K}}_{\ne } = ({\mathbb {R}}, {\mathbb {R}}, \ne )\). Then \(\dim _{VC}^s(\mathbb K_{\ne })=\infty \), since the concept set is

Since \(Y'={\mathbb {R}}\setminus Y\), and \(Y_1'' \ne Y_2 ''\) if \(Y_1\ne Y_2\), it is easy to see that

Therefore, any sequence \(\{ C_{n}\}_n \subseteq \mathfrak B_f({\mathbb {K}}_{\ne })\) satisfies

Thus, the concept \((\emptyset ,{\mathbb {R}})\) cannot be approximated by an enumerable sequence of f.g. concepts.
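A sketch of the argument behind Example 16, assuming \(g\,I\,m\) iff \(g\ne m\) in the contranominal scale. Every subset of \({\mathbb R}\) is an intent (so every finite set is shattered), the f.g. concepts are exactly those with finite intent, and the meet of countably many f.g. concepts \(C_n=({\mathbb R}\setminus Y_n, Y_n)\) has nonempty extent, because a countable union of finite sets cannot cover the uncountable set \({\mathbb R}\):

```latex
\begin{aligned}
&Y' = \{g\in\mathbb{R} : g\ne m \ \forall m\in Y\} = \mathbb{R}\setminus Y,
  \qquad Y'' = Y \quad (Y\subseteq \mathbb{R}),\\
&\mathfrak{B}(\mathbb{K}_{\ne}) = \{(\mathbb{R}\setminus Y, Y) : Y\subseteq\mathbb{R}\},
  \qquad \mathfrak{B}_f(\mathbb{K}_{\ne}) = \{(\mathbb{R}\setminus Y, Y) : Y \text{ finite}\},\\
&ext\Big(\bigwedge_n C_n\Big) = \bigcap_n (\mathbb{R}\setminus Y_n)
  = \mathbb{R}\setminus \bigcup_n Y_n \ne \emptyset .
\end{aligned}
```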

The former example seems to suggest that f.g. concepts may fail to converge to the infimum of the empirical risk, for example when the infimum is attained only by concepts that are not approximable by such an enumerable sequence. To prove that this does not occur, the strategy has to be reformulated: instead of working with the limit of the empirical risk, we work directly with the lattices. The following theorem guarantees ERM consistency using f.g. concepts; the proof checks that the (finite) VC dimension is preserved.

Theorem 2

Let \({\mathbb {K}}\) be a formal context. Then
\[\dim ^s_{VC} ({\mathbb {K}}) = \dim _{VC}({\mathfrak {B}}_f ({\mathbb {K}})).\]

Proof

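Since every f.g. concept is a concept, \(\dim _{VC}({\mathfrak {B}}_f ({\mathbb {K}})) \le \dim ^s_{VC} ({\mathbb {K}})\), so it only remains to prove that \(\dim ^s_{VC} ({\mathbb {K}}) \le \dim _{VC}({\mathfrak {B}}_f ({\mathbb {K}}))\).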

Let A be a finite set shattered by \({\mathfrak {B}}({\mathbb {K}})\). To prove that it is also shattered by \({\mathfrak {B}}_f ({\mathbb {K}})\), it suffices to show that for every \(D\in {\mathfrak {B}}({\mathbb {K}})\) there exists \(C\in {\mathfrak {B}}_f({\mathbb {K}})\) such that
\[A\cap ext(C) = A\cap ext(D).\]

Since D is finitely approximable, let \(C_0\in \mathfrak B_f({\mathbb {K}})\) be such that \(D\le C_0\).

For the same reason, for each \(z\in (A\cap ext(C_0)) \setminus (A \cap ext(D))\) there exists \(C_z \in {\mathfrak {B}}_f({\mathbb {K}})\) such that \(D\le C_z\) and \(z\notin ext(C_z)\). Then

We need to check that the latter concept is f.g.

Since \(|(A\cap ext(C_0)) \setminus (A\cap ext(D))|<\infty \) (A is finite), and

then such concept is f.g.

Therefore, any set shattered by \({\mathbb {K}}\) is also shattered by \({\mathfrak {B}}_f({\mathbb {K}})\). Thus, \(\dim ^s_{VC} ({\mathbb {K}}) \le \dim _{VC}({\mathfrak {B}}_f ({\mathbb {K}}))\). \(\square \)
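On a finite context, the construction in the proof of Theorem 2 can be made concrete. The Python sketch below is one possible instance, assuming \(C_0\) is taken as the top concept \((\emptyset ',\emptyset '')\) and each \(C_z\) as a single-attribute concept \((\{m_z\}',\{m_z\}'')\) with \(m_z\in int(D)\); since \(z\notin ext(D)=int(D)'\), such an \(m_z\) always exists, and \(D\le C_z\) because \(m_z\in int(D)\). The toy context and the function names are hypothetical.

```python
from itertools import combinations

# Hypothetical toy formal context K = (G, M, I).
G = frozenset({1, 2, 3, 4})
M = frozenset({"a", "b", "c"})
I = frozenset({(1, "a"), (1, "b"), (2, "a"), (3, "b"), (3, "c"), (4, "c")})


def attrs_of(X):
    """X': attributes shared by every object in X."""
    return frozenset(m for m in M if all((g, m) in I for g in X))


def objs_of(Y):
    """Y': objects having every attribute in Y."""
    return frozenset(g for g in G if all((g, m) in I for m in Y))


def all_intents():
    """Every intent of K, obtained by closing each attribute subset."""
    return {attrs_of(objs_of(Y)) for k in range(len(M) + 1)
            for Y in combinations(sorted(M), k)}


def fg_witness(A, D_intent):
    """Finite Y0 with A & Y0' == A & ext(D), following the proof of Theorem 2.

    C_0 is the top concept (generated by the empty set); for each object z of A
    outside ext(D) we add one attribute m_z of int(D) that z lacks, so the
    single-attribute concept ({m_z}', {m_z}'') lies above D and excludes z.
    """
    D_extent = objs_of(D_intent)
    Y0 = set()
    for z in sorted(A - D_extent):
        # z is not in ext(D) = int(D)', so some m in int(D) is missing from z
        Y0.add(next(m for m in sorted(D_intent) if (z, m) not in I))
    return frozenset(Y0)


A = frozenset({2, 4})
for D_intent in sorted(all_intents(), key=sorted):
    Y0 = fg_witness(A, D_intent)
    print(sorted(D_intent), sorted(Y0), sorted(A & objs_of(Y0)))
```

In this instance at most one attribute is picked per excluded object, so the generator never has more than |A| attributes.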

Finally, combining the above results, we can restrict ourselves to f.g. concepts of the 3WD extension \({\mathbb {K}} [M^c_{\gamma }]\). In formal terms:

Corollary 7

\(\dim _{DVC} ({\mathbb {K}},\gamma ) = \dim _{VC} ({\mathfrak {B}}_f({\mathbb {K}} [M^c_{\gamma }]))\)

Proof

It holds that:

\(\square \)

Example 17

A consequence of the former result is that, for the formal context \({\mathbb {K}}_O\) from Ex. 12

That is, the same dimension would be obtained for the ERM method using only finite intersections of circles.

7.3 Bounding the number of attributes in f.g. concepts

Some examples have shown that \({\mathfrak {B}}_f({\mathbb {K}}[\mathcal F])\) can have infinite VC dimension even when \(\dim _{VC}(\mathcal F)\) is finite. For these cases, it is worth considering other hypothesis classes that no longer come from sublattices. For the sake of completeness, the following notes describe the class of concepts generated by attribute sets whose size is bounded by a constant.

Definition 24

Let \(k\in {\mathbb {N}}\). The hypothesis class \({\mathfrak {B}}_{\le k} ({\mathbb {K}})\) is the class of all concepts \((X,Y)\) of \({\mathbb {K}}\) that are generated by an attribute set of size at most k, that is, such that \(Y=Y_0''\) for some \(Y_0\subseteq M\) with \(|Y_0|\le k\).

The following example shows a case in which these classes preserve the finiteness of the VC dimension.

Example 18

(formal context with finite VC dimension for bounded f.g. concepts) Ex. 5 started with the hypothesis class \({\mathcal {H}}\), the set of half-planes in \({\mathbb {R}}^2\). Then \(\dim _{VC}({\mathcal {H}})=3\) whilst \(\dim _{VC}^s({\mathbb {K}}_C)=+\infty \). It is straightforward to see that

since the extents of the concepts generated by at most three half-planes are half-planes, angular regions, bands and triangles. In general,

as the extents are the (possibly unbounded) convex polygonal regions with at most k sides.

The following result translates to formal contexts the fact that finite Boolean combinations of functions from a hypothesis class with finite VC dimension also have finite VC dimension [14]. It shows that, when the formal context is PAC learnable, there exists a \(k_0\) such that \(\mathfrak B_{\le k_0}({\mathbb {K}})\) is not only PAC learnable but also attains the same error bounds as the original class: the values \(\dim _{VC}({\mathfrak {B}}_{\le k}({\mathbb {K}}))\) form a nondecreasing sequence of integers, so a finite supremum is reached at some \(k_0\).

Proposition 5

\(\dim _{VC}^s({\mathbb {K}})=\sup _k \dim _{VC}({\mathfrak {B}}_{\le k}({\mathbb {K}}))\)

Proof

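Since \({\mathfrak {B}}_f ({\mathbb {K}}) = \bigcup _k {\mathfrak {B}}_{\le k} ({\mathbb {K}})\) and these classes are nested (\({\mathfrak {B}}_{\le k}({\mathbb {K}}) \subseteq {\mathfrak {B}}_{\le k+1}({\mathbb {K}})\)), any finite set shattered by \({\mathfrak {B}}_f({\mathbb {K}})\) is already shattered by some \({\mathfrak {B}}_{\le k}({\mathbb {K}})\), because only finitely many concepts are needed to shatter it. Hence
\[\dim _{VC}({\mathfrak {B}}_f ({\mathbb {K}})) = \sup _k \dim _{VC}({\mathfrak {B}}_{\le k}({\mathbb {K}})).\]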

By Thm. 2, \(\dim _{VC}^s({\mathbb {K}}) =\dim _{VC} (\mathfrak B_f ({\mathbb {K}})) \) hence the result is proved. \(\square \)
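Proposition 5 can be checked by brute force on a small hypothetical context: compute \(\dim _{VC}({\mathfrak {B}}_{\le k}({\mathbb {K}}))\) for each k and compare the supremum with the VC dimension of all extents. The context and the helper names below are illustrative assumptions, and brute force is only feasible for tiny G and M.

```python
from itertools import combinations

# Hypothetical toy formal context K = (G, M, I).
G = frozenset({1, 2, 3, 4})
M = frozenset({"a", "b", "c"})
I = frozenset({(1, "a"), (1, "b"), (2, "a"), (3, "b"), (3, "c"), (4, "c")})


def objs_of(Y):
    """Y': objects having every attribute in Y."""
    return frozenset(g for g in G if all((g, m) in I for m in Y))


def bounded_extents(k):
    """Extents of B_{<=k}(K): the sets Y0' with |Y0| <= k."""
    return {objs_of(Y0) for r in range(k + 1) for Y0 in combinations(sorted(M), r)}


def vc_dim(family):
    """Brute-force VC dimension of a family of subsets of G."""
    best = 0
    for r in range(1, len(G) + 1):
        if not any(len({frozenset(A) & S for S in family}) == 2 ** r
                   for A in combinations(sorted(G), r)):
            break  # shattering is hereditary: no larger set can be shattered
        best = r
    return best


dims = [vc_dim(bounded_extents(k)) for k in range(len(M) + 1)]
print(dims)                              # [0, 2, 2, 2]
print(vc_dim(bounded_extents(len(M))))   # 2: every intent Y is generated by Y itself
```

For this toy context a single attribute already reaches the full VC dimension, since \({\mathfrak {B}}_{\le 1}\) shatters the object set \(\{2,4\}\).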

Example 19

(Continuing Example 14) \(\dim _{VC}^s({\mathbb {K}}_r) = \dim _{VC} ({\mathfrak {B}}_{\le 2}(\mathbb K_r)) \).

8 On 3WD closures preserving PAC learnability

Several examples above have shown how the VC dimension changes when \({\mathcal {F}}\) is extended to \(\overline{{\mathcal {F}}}^{3WD}\). This section presents a sufficient condition for preserving the finiteness of the VC dimension, which applies in particular to \({\mathcal {F}}^c\). The following result will be used.

Theorem 3

[55] Let \({\mathcal {C}} =\left\{ \bigcap _{i=1}^n C_i \ : \ C_i \in \mathcal C_i , i = 1,2,\dots , n\right\} \), where each \({\mathcal {C}}_i\) is a collection of subsets of U that is linearly ordered by inclusion. Then \(\dim _{VC}({\mathcal {C}})\le n+1\).
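Theorem 3 can be illustrated, though not proved, numerically. The sketch below uses hypothetical chains made of the prefixes of a few orderings of a six-element universe U, computes the VC dimension of the class of n-fold intersections by brute force, and compares it with the bound n+1. With one chain the prefixes behave like thresholds, and with a chain and its reverse the intersections are exactly the intervals of U.

```python
from itertools import combinations, product


def prefix_chain(order):
    """A chain under inclusion: all prefixes of an ordering of U (from the empty set to U)."""
    return [frozenset(order[:j]) for j in range(len(order) + 1)]


def intersections(chains):
    """The class {C_1 & ... & C_n : C_i in chains[i]} of Theorem 3."""
    return {picks[0].intersection(*picks[1:]) for picks in product(*chains)}


def vc_dim(universe, family):
    """Brute-force VC dimension of a family of subsets of a finite universe."""
    best = 0
    for r in range(1, len(universe) + 1):
        if not any(len({frozenset(A) & S for S in family}) == 2 ** r
                   for A in combinations(universe, r)):
            break  # shattering is hereditary: no larger set can be shattered
        best = r
    return best


U = list(range(6))
# Hypothetical orderings; the second is the reverse of the first.
orders = [list(range(6)), list(range(5, -1, -1)), [0, 2, 4, 1, 3, 5]]
for n in range(1, len(orders) + 1):
    d = vc_dim(U, intersections([prefix_chain(o) for o in orders[:n]]))
    print(n, d, d <= n + 1)
```

For the first two cases the values are 1 and 2, below the bound n+1; this matches the role of the linearly ordered classes \({\mathcal {C}}_m\) in the proof of Corollary 8.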

The following corollary shows that, for formal contexts with a finite attribute set, any extension by contraction has finite VC dimension.

Corollary 8

Let \({\mathbb {K}}=(G,M,I)\) be a formal context. If M is finite, then

Proof

Suppose that \(M=\{m_1,\dots , m_n\}\). Note that any \(C\in {\mathfrak {B}} ({\mathbb {K}} [M^c_1])\) can be expressed as

where \(Y_k := int(C) \cap \{m_k\}^c_{\gamma }\). On the one hand, since

then

On the other hand, \(Y_k' =\{ m^{\varepsilon _k}\}'\) holds by taking

(since if \(\varepsilon <\varepsilon '\), then \(\{m^{\varepsilon } \}' \subseteq \{ m^{\varepsilon '}\}'\)). Therefore

For each \(m\in M\) the class \({\mathcal {C}}_m\) formed by the extents of the concept set

is linearly ordered under \(\subseteq \). Thus the assumptions of Thm. 3 are satisfied, hence

\(\square \)

Fig. 9 Extension of \({\mathbb {K}}=(G,M,I)\) according to the hypotheses of Thm. 4. To build \({\mathbb {K}}[\bigcup _{m\in M} {\mathcal {F}}_m]\), each \(m\in M\) is expanded to a linearly ordered class \(\mathcal F_m\). \(\varPhi \) is an immersion, defined as \(\varPhi ((X,Y)):=(Y',Y'')\).

The above proof can be adapted to any 3WD closure obtained by extending the positive sets of each attribute as follows (see Fig. 9):

Theorem 4

Let \({\mathbb {K}} =(G,M,I)\) with \(|M|<\infty \). Suppose that the 3WD-closure has the structure

where, for each \(m\in M\), the class set \(\{ POS_{f} \ : \ f\in {\mathcal {F}}_m\}\) is linearly ordered by inclusion. Then

Proof

To adapt the above proof, let

where \(f_{{\mathcal {G}}}(x):= \min _{g\in {\mathcal {G}}}g(x)\). Note that for any \({\mathcal {G}} \subseteq {\mathcal {F}}\)

and that \({{\mathcal {F}}_m}^*\) is linearly ordered. Reasoning as in the proof of Corollary 8, \(\dim _{VC}({\mathbb {K}}[{\mathcal {F}}^*]) \le |M| + 1\). Since \(\dim _{VC}({\mathbb {K}}[{\mathcal {F}}]) \le \dim _{VC}(\mathbb K[{\mathcal {F}}^*])\), the result follows. \(\square \)

9 Conclusions, related and future work

This paper formalizes and analyzes the impact of the 3WD paradigm on ML models through the study of (variants of) the VC dimension. The study has been carried out at two levels. The first and more general one concerns the enhancement of the hypothesis class by means of some 3WD method. The underlying idea is that any 3WD technique that reduces the boundary regions of the hypotheses affects the VC dimension, whose finiteness is essential to preserve ERM consistency.

The second level is a case study that instantiates the general analysis in (F)FCA, understood as a model for concept mining (or categorization) from data. This starting idea has already been considered in other works (e.g., [19, 26, 30]). In the present approach, the hypothesis class is the class of definable sets (the extents of concepts). Restricting attention to definable sets is not a new idea either (e.g., o-minimality and VC dimension in [13]). In this work, we show how to extend the description language (i.e., the attribute set) using a particular 3WD closure, \(M^c_{\gamma }\); however, the analysis can also be carried out for other choices.

Other multi-source research also aims to improve the quality of information (which in turn enriches learning) in order to enhance decision procedures, for example for agents [59], for multi-source information systems [45], and for combinations of both in multi-agent settings [36, 49]. We believe that the approach developed here can enhance such works, as well as others such as [30], in which Li et al. analyze how to learn one exact or two approximate cognitive concepts from a given object set or attribute set. Our proposal can also be seen as complementary to that of [67], where the authors seek to minimize the use of attributes by associating with each attribute an estimate of its significance.

Our formalization can also help to enrich other approaches, for example those addressing the granularity of selection/updating [30, 33, 53] and those addressing the management of data dynamics [33]. The analysis of both SVC and DVC dimensions for different hypothesis classes might be useful to analyze other (incremental) concept learning approaches [23, 29, 60, 68]. Considering more effective 3WD decisions for shattering complements, to some extent, the cited works; for example, it allows the use of 3WD closures that involve managing contradictory 3WD decisions (that is, there may exist \((X_1,Y_1)\), \((X_2,Y_2)\) with \(X_1\cap Y_2\ne \emptyset \) or \(X_2\cap Y_1\ne \emptyset \)). This extension could be useful for decision reconsideration. Moreover, since our goal is to estimate the VC dimension, we use different (variable) thresholds to build 3WD decisions. This distinguishes our approach from the (multi-decision) 3WD approaches above, in which the thresholds are fixed beforehand to build the 3WD cognitive operators [23, 29].