Abstract

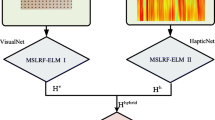

The material properties of an object’s surface are critical for robotic manipulation and for interaction with the surrounding environment. Tactile sensing can provide rich information about the material characteristics of an object’s surface, so it is important to convey and interpret this tactile information for users during interaction. In this paper, we propose a visual-tactile cross-modal retrieval framework that conveys tactile information about surface materials for perceptual estimation. Specifically, we use the tactile information of a new, unknown surface material to retrieve perceptually similar surfaces from an available set of visual surface samples. For this framework, we develop a deep cross-modal correlation learning method that combines the high-level nonlinear representation of a deep extreme learning machine with the class-paired correlation learning of cluster canonical correlation analysis. Experimental results on a publicly available dataset validate the effectiveness of the proposed framework and method.
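The abstract names the two components of the method but not their configuration, so the following is only a minimal NumPy sketch of how such a pipeline could fit together, under stated assumptions. The deep extreme learning machine is modeled here as stacked ELM autoencoders (ML-ELM style, tanh activation, ridge parameter `C`, and without the orthogonalization of random weights that ELM autoencoders often use). Cluster canonical correlation analysis follows the formulation of Rasiwasia et al. (2014), in which every tactile and visual sample sharing a class label counts as a correlated pair. Retrieval ranks visual samples by cosine similarity to a tactile query in the shared canonical space. The names `DeepELM`, `cluster_cca`, `retrieve`, `make_data` and `demo`, the layer sizes, `C`, `reg`, and the synthetic data are hypothetical illustrations, not the authors' implementation, features, or dataset.

```python
# Hypothetical sketch, not the authors' code: layer sizes, C, reg and data are illustrative.
import numpy as np


class DeepELM:
    """Stacked ELM autoencoders (ML-ELM style) used as a feature extractor."""

    def __init__(self, hidden=(32, 16), C=1.0, seed=0):
        self.hidden, self.C = hidden, C
        self.rng = np.random.default_rng(seed)
        self.betas = []

    def _ae_layer(self, X, n_hidden):
        # Random, untrained input weights (scaled to limit tanh saturation).
        W = self.rng.standard_normal((X.shape[1], n_hidden)) / np.sqrt(X.shape[1])
        b = self.rng.standard_normal(n_hidden)
        H = np.tanh(X @ W + b)
        # Closed-form ridge solution for output weights beta so that H @ beta ≈ X.
        return np.linalg.solve(H.T @ H + np.eye(n_hidden) / self.C, H.T @ X)

    def fit(self, X):
        self.betas, H = [], X
        for n_hidden in self.hidden:
            beta = self._ae_layer(H, n_hidden)
            self.betas.append(beta)
            H = np.tanh(H @ beta.T)  # beta^T maps this layer's input to the next representation
        return self

    def transform(self, X):
        H = X
        for beta in self.betas:
            H = np.tanh(H @ beta.T)
        return H


def _inv_sqrt(S):
    # Inverse matrix square root of a symmetric positive-definite matrix.
    vals, vecs = np.linalg.eigh(S)
    return vecs @ np.diag(vals ** -0.5) @ vecs.T


def cluster_cca(X, lx, Y, ly, k, reg=1e-3):
    """Cluster CCA: every same-class (x, y) pair is treated as a correspondence."""
    classes = np.intersect1d(lx, ly)
    wx, wy = np.zeros(len(X)), np.zeros(len(Y))
    for c in classes:
        wx[lx == c] = np.sum(ly == c)  # x_i appears in |Y_c| pairs
        wy[ly == c] = np.sum(lx == c)  # y_j appears in |X_c| pairs
    M = wx.sum()  # total number of same-class pairs
    mx, my = wx @ X / M, wy @ Y / M  # means over the pair set
    Xc, Yc = X - mx, Y - my
    Sxx = (Xc * wx[:, None]).T @ Xc / M + reg * np.eye(X.shape[1])
    Syy = (Yc * wy[:, None]).T @ Yc / M + reg * np.eye(Y.shape[1])
    # Sum over all same-class pairs of x_i y_j^T, computed via per-class sums.
    Sxy = sum(np.outer(Xc[lx == c].sum(0), Yc[ly == c].sum(0)) for c in classes) / M
    Wx, Wy = _inv_sqrt(Sxx), _inv_sqrt(Syy)
    U, s, Vt = np.linalg.svd(Wx @ Sxy @ Wy)
    return Wx @ U[:, :k], Wy @ Vt.T[:, :k], mx, my, s[:k]


def retrieve(q, g, top=5):
    """Rank gallery rows g for each query row q by cosine similarity."""
    q = q / np.linalg.norm(q, axis=1, keepdims=True)
    g = g / np.linalg.norm(g, axis=1, keepdims=True)
    return np.argsort(-(q @ g.T), axis=1)[:, :top]


def make_data(rng, centers, per_class, noise=0.3):
    """Synthetic class-clustered features (illustrative only)."""
    labels = np.repeat(np.arange(len(centers)), per_class)
    X = centers[labels] + noise * rng.standard_normal((len(labels), centers.shape[1]))
    return X, labels


def demo(seed=1):
    rng = np.random.default_rng(seed)
    ct, cv = rng.standard_normal((3, 10)), rng.standard_normal((3, 12))
    Xt, lt = make_data(rng, ct, 30)  # tactile training samples
    Xv, lv = make_data(rng, cv, 30)  # visual gallery, not paired one-to-one with Xt
    Qt, lq = make_data(rng, ct, 10)  # held-out tactile queries
    et, ev = DeepELM(seed=2).fit(Xt), DeepELM(seed=3).fit(Xv)
    A, B, mx, my, corr = cluster_cca(et.transform(Xt), lt, ev.transform(Xv), lv, k=2)
    ranks = retrieve((et.transform(Qt) - mx) @ A, (ev.transform(Xv) - my) @ B)
    return corr, np.mean(lv[ranks[:, 0]] == lq)  # canonical corrs, precision@1


corr, p_at_1 = demo()
print("canonical correlations:", corr.round(3), "precision@1:", p_at_1)
```

Because cluster CCA needs only class labels, the tactile and visual training samples in this sketch are not paired one-to-one, which is what class-paired correlation learning allows. The demo assumes the query's material class is represented in the visual gallery, and its printed precision@1 reflects synthetic clusters only, not performance on real tactile or visual data.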



Acknowledgements

This work is supported in part by the National Natural Science Foundation of China under Grant U1613212, in part by the Key Project of the Natural Science Foundation of Hebei Province under Grant No. E2017202035, and in part by the Joint Doctoral Training Foundation of HEBUT (2017GN0006).



About this article

Cite this article

Zheng, W., Liu, H., Wang, B. et al. Cross-modal learning for material perception using deep extreme learning machine. Int. J. Mach. Learn. & Cyber. 11, 813–823 (2020). https://doi.org/10.1007/s13042-019-00962-1


DOI: https://doi.org/10.1007/s13042-019-00962-1