Abstract

The electronic enhancement of human senses becomes possible when pervasive computers interact unnoticeably with humans in ubiquitous computing. Designing computer user interfaces to “disappear” forces interaction with humans to follow a content-driven rather than a menu-driven approach, which meets the emerging requirement for a huge number of non-technical users to interface intuitively with billions of computers in the Internet of Things. Learning to use particular applications in ubiquitous computing is either too slow or sometimes impossible, so user interfaces must be natural enough to support intuitive human behaviours. Although humans from different racial, cultural and ethnic backgrounds share the same physiological sensory system, their perception of the same external stimuli can differ. A novel taxonomy of disappearing user interfaces (DUIs) that stimulate human senses and capture human responses is proposed, and applications of DUIs are reviewed. The use of DUIs with sensor and data fusion to simulate the sixth sense is explored. The enhancement of human senses through DUIs and context awareness is discussed as groundwork for smarter wearable devices that interface with human emotional memories.

1 Introduction

The growing importance of human computer interaction (HCI) results from the widespread deployment of computers that connect with humans. Early computer users were computer specialists and programmers, at a time when a single mainframe occupied a whole room and the major design goals were processing power and processing speed. Usability became a major issue when personal computers prevailed in the late 1970s, since non-specialist personnel with no prior computer science knowledge joined the user group (Shackel 1997). Ubiquitous Computing, proposed by Weiser (1991) in “The Computer for the 21st Century”, envisioned future computers living invisibly alongside humans, which widened the range of user types once again. With the popularity of the Internet in recent decades, the concept of the Internet of Things (IoT) has further boosted ubiquitous computing applications by connecting almost every electronic device to the Internet. The result is an unprecedented demand for better HCI technology to meet the needs of a huge number of non-technical users interacting with billions of network-connected computers.

Human computer interaction is a multidisciplinary technology that focuses heavily on human-centric user interface (UI) design. Extensive research in the natural sciences not only provides highly functional UIs through tangible and intangible media; smart algorithms based on computational intelligence also help predict human behaviours through activity recognition, so that tailor-made responses can be delivered to individuals. The humanisation of HCI is further enhanced by affective computing, which enables computers to understand human emotions (Sunghyun et al. 2015; Weerasinghe et al. 2014), and by computational humour studies, which provide a more relaxing interaction environment (Nijholt 2014; Nijholt et al. 2006). Collaboration between researchers from the applied and social sciences is the core enabler for successful multicultural and multi-ethnic HCI design; however, seamless integration does not always occur. Dourish and Bell (2011) reviewed the current gaps in HCI research for Ubiquitous Computing from an ethnographic perspective and concluded that social and cultural considerations will enhance future HCI design through socio-technical practice. A novel way to connect multidisciplinary researchers is as important as basic research, but it is not evident in the current HCI field.

Natural user interface (NUI) is an emerging trend in HCI in which humans interact naturally with computers instead of using conventional methods such as a CLI (command line interface) or a GUI (graphical user interface). Despite their prevalence in both academic research and commercial applications (Bhowmik 2013), NUIs have been criticised as “artificial naturality”, and many types of NUI, especially gesture-based interactions, do not represent natural human behaviours (Malizia and Bellucci 2012; Norman 2010; Norman and Nielsen 2010). This paper reviews the usefulness of NUIs from a different angle by considering only invisible, or “disappearing”, natural interfaces in Ubiquitous Computing environments.

Disappearing UI (DUI) is a logical extension of natural HCI, and the term “disappearing” in this paper refers to tangible and intangible UIs that exist and work well but are not noticed by humans. DUIs are classified into three main categories: (i) the human body as a DUI, (ii) edible and implantable DUIs, and (iii) wearable DUIs. Individual HCI technologies and algorithms have been researched with favourable results, but they normally target particular functional areas and user groups. This paper proposes a novel taxonomy to classify and compare the different types of state-of-the-art DUIs, together with their advantages and disadvantages in their intended areas of application. Researchers and industry practitioners can use this classification as a guideline to select and combine different DUIs into a holistic natural UI for a given group of users in ubiquitous computing. For example, car drivers could be alerted through a speech interface when abnormal behaviour discovered through visual posture detection shows that they are not paying attention to the road, and elderly people with Alzheimer’s disease may require constant monitoring of their activities with non-invasive and non-intrusive sensors to detect their whereabouts and predict their behaviours.

An interesting DUI application is the enhancement of human senses. Since Aristotle first classified the five basic senses, researchers have persistently proposed and demonstrated the existence of additional human sense organs. Physiological comparison of the same sensory type in humans and animals reveals that some animal sensory systems are more sensitive; some animals can even sense seismic waves before earthquakes happen (Yamauchi et al. 2014), so UABs (unusual animal behaviours) could serve as a natural disaster sense for human beings. The spiritual Sixth Sense is a controversial subject that many attempts have failed to prove, yet abnormal behaviours such as clairvoyance, clairaudience and remote viewing continue to be reported without logical explanation. Collecting local and/or remote sensory data through a combination of various DUIs may be one way to simulate these paranormal sixth sense activities using ubiquitous sensors and scientific computational intelligence algorithms (see Table 2). Although they lack a formal definition, super senses are generally understood as superior to the normal human sensory system and, as noted above, many animal sensory organs are more sensitive than their human counterparts. The following discussion treats a super sense as an enhancement of the extended human senses based on DUI connectivity to the IoT and the associated Ubiquitous Computing technologies.

This paper proposes a novel taxonomy of DUI technologies based on a literature survey of related HCI topics and research. Section 2 explains the classification criteria for DUI technologies with reference to the human input and output systems. Section 3 discusses applications of DUIs: simulating the Sixth Sense, enhancing human senses and enabling Super Senses. Finally, Sect. 4 concludes the paper with directions for further research.

2 Disappearing user interfaces

Natural user interfaces provide an opportunity to enhance user experience through natural interaction with computers. Gesture-based detection and voice and facial expression recognition are typical NUI technologies, promoted as better than traditional HCI methods such as CLIs and GUIs, which have been claimed to be hard to learn and hard to use for computer-illiterate people. Presumably, natural human behaviour is the only requirement for using an NUI effectively, and no training should be needed because natural interaction ought to be intuitive. Macaranas et al. (2015) reviewed three major issues that undermine the intuitiveness of NUIs: (i) no common standard; (ii) unexpected user experiences; and (iii) lack of affordance. However, intuition is subjective and varies with culture and users’ past experiences, so a global standard may be impossible to define (Malizia and Bellucci 2012; Lim 2012).

DUI is a natural HCI approach that focuses on interaction with the contents instead of the interfaces (Lim 2012). Ubiquitous Computing together with the concept of the IoT drives the merging of the digital and physical worlds, where information and digital contents are the products communicated in Ubiquitous Computing networks through machine-to-machine (M2M) and human-to-machine (H2M) interactions (Holler et al. 2014). Humans receive contents through their sensory systems and, at the same time, provide contents mainly through muscular movements and the nervous system. DUIs convey contents between humans and machines in a natural way through tangible or intangible artefacts, with touch or touchless interfaces, outside or inside human bodies and, most importantly, without being noticed by the target persons, so that interaction is based on intuition. DUI is multi-modal in nature: it senses human behaviours naturally through different disappeared UIs and creates the contents for exchange. For example, a content representing “Peter is going home from his office” is created and delivered to Peter’s home server once the combined spatial and temporal information from the DUIs around Peter matches pre-set rules and past experience. Peter’s home server decodes the content and prepares services to welcome Peter, such as warming the house, heating the water and preparing dinner.
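The Peter example can be made concrete with a small rule-matching sketch. Everything in it is hypothetical and not taken from the cited work: the `Context` fields, the `Rule` class, the `going_home` event name and the `SERVICES` table are illustrative assumptions, and learning from past experience is left out so that only the pre-set rules are shown.

```python
from dataclasses import dataclass
from datetime import time


@dataclass
class Context:
    """Hypothetical fused reading from the DUIs around one person."""
    user: str
    location: str        # e.g. from a wearable or phone positioning DUI
    heading_to: str      # coarse destination inferred from recent movement
    clock: time          # current time of day


@dataclass
class Rule:
    """A pre-set rule: emit `event` when location, heading and time all agree."""
    event: str
    location: str
    heading_to: str
    earliest: time
    latest: time

    def matches(self, ctx: Context) -> bool:
        return (ctx.location == self.location
                and ctx.heading_to == self.heading_to
                and self.earliest <= ctx.clock <= self.latest)


def create_contents(ctx, rules):
    """DUI side: turn the fused context into contents for the home server."""
    return [{"user": ctx.user, "event": r.event} for r in rules if r.matches(ctx)]


# Home-server side: which services each content triggers (illustrative only).
SERVICES = {"going_home": ["warm the house", "heat the water", "prepare dinner"]}


def decode_contents(contents):
    """Home server: map received contents to the services to prepare."""
    return [s for c in contents for s in SERVICES.get(c["event"], [])]


rules = [Rule("going_home", location="office", heading_to="home",
              earliest=time(17, 0), latest=time(20, 0))]
evening = Context("Peter", "office", "home", time(18, 15))
print(decode_contents(create_contents(evening, rules)))
# ['warm the house', 'heat the water', 'prepare dinner']
```

With the clock set to 10:00 no rule matches, so no content is created and the home server prepares nothing; a fuller system would also weight rules by past behaviour.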

DUI may become the de facto standard for future miniaturised digital artefacts by removing the need for a built-in tangible interface. Moore’s law has driven the silicon world for five decades, shrinking silicon-based electronics until recently, when the benefit of miniaturisation began to diminish because of the uneconomically high cost of lithography at the nanoscale (Mack 2015; Huang 2015). New packaging methods such as 3D stacking will continue the miniaturisation process once the associated problems, such as high heat density and low manufacturing yield, are solved (Das and Markovich 2010; Kyungsu et al. 2015; Qiang et al. 2012). Nanotechnology, especially molecular electronics, has a good chance of taking over the driving seat of the miniaturisation journey, despite the controversy and unfulfilled promises of its ups and downs over the last 40 years (Choi and Mody 2009; Kelly and Mody 2015). Recent research shows promising results in single-molecule transistors, DNA computing and nanotube devices, which let followers of molecular electronics see the light at the end of a long, dark tunnel (Boruah and Dutta 2015; Strickland 2016). Micro-electromechanical system (MEMS) technology complements the miniaturisation paradigm by shrinking electronic control and mechanical structures together, which suits DUIs with mechanical sensors or actuators. Miniaturised electronics and MEMS help hide electronic devices on or inside human bodies, where wearable, implantable and edible electronic artefacts can interact invisibly with humans and communicate with one another over a body area network (BAN) or over the Internet through built-in networking facilities.

2.1 DUIs for human inputs

DUIs may interact with human inputs mainly through the five basic senses: vision, hearing, touch, smell and taste. Dedicated organs sense the world outside the human body, where sensory transduction produces corresponding electrical signals that activate the brain for perception (Henshaw 2012). The whole “stimulus-sensation-perception” process is dynamic and is affected by various factors such as the properties of the stimulus, the physical environment, the condition of the body and age. Sensory adaptation adjusts sensitivity according to recent perceptual experience (Webster 2012; Roseboom et al. 2015). Sensory changes occur when the sensory system is impaired, when body weight changes (Skrandies and Zschieschang 2015) or, most commonly, when sensitivity declines with ageing (Wiesmeier et al. 2015; Humes 2015). Multisensory integration, which acts as a fusion of sensory data, increases the accuracy of perception, but this benefit is normally restricted to adults, since the development of sensory fusion in humans only starts in the pre-teen years (Nardini et al. 2010). Figure 1 shows a typical human sensory system: perception in the brain results from decoding sensation signals, which are activated by stimuli from the outside world through the basic sensory organs, while human outputs, discussed in Sect. 2.2, are the responses to this perception.
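Multisensory integration is often modelled as maximum-likelihood cue combination: each sense supplies an estimate and its variance, and each estimate is weighted by its reliability (inverse variance). The sketch below assumes independent Gaussian noise on each cue; it illustrates the general model rather than a method from the works cited here, and the vision and touch numbers are invented.

```python
def fuse_cues(cues):
    """Inverse-variance (maximum-likelihood) fusion of independent Gaussian cues.

    cues: list of (estimate, variance) pairs, e.g. one per sense.
    Returns the fused (estimate, variance).
    """
    weights = [1.0 / var for _, var in cues]
    total = sum(weights)
    estimate = sum(w * est for w, (est, _) in zip(weights, cues)) / total
    return estimate, 1.0 / total


# Hypothetical size judgements (cm) from vision and touch with equal reliability.
vision, touch = (10.0, 4.0), (12.0, 4.0)
print(fuse_cues([vision, touch]))  # (11.0, 2.0): half the variance of either cue
```

Under this model the fused variance is never larger than the smallest input variance, which is one formal reading of fusion "increasing the accuracy of perception".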

2.1.1 DUIs for basic human senses: vision

Vision has been the dominant interface between humans and computers since the GUI paradigm prevailed, with computer-generated graphics displayed on desktop monitors using CRTs (cathode ray tubes) or flat panel displays (e.g. LCD, plasma, OLED, quantum dot). Although desktop monitors remain among the most useful interfacing devices for formal computing, they do not normally fall into the DUI category, since they are far from disappearing for ordinary users in the ubiquitous computing world. Exceptions arise when monitors, especially flat panel displays, are embedded into everyday objects or furniture such as mirrors or window glass (Jae Seok et al. 2014; Zhen and Blackwell 2013).

Projection display is a good candidate DUI for human vision, since computer-generated images can be projected onto flat surfaces such as tabletops or concrete walls in indoor or outdoor environments. Combined with sensing of body-part movements, an interactive projection interface can be provided on fixed surfaces whenever needed, using single or multiple projectors (Yamamoto et al. 2015; Fleury et al. 2015; Duy-Quoc and Majumder 2015). A wearable projector enables images to be projected onto any surface wherever the wearer goes, including any object in front of the viewer or even the wearer’s own body (Harrison and Faste 2014). Mid-air or free-space display technology projects images without a physical “surface”; various media have been proposed to establish a non-turbulent airflow of particle clouds in free space as a projection screen that viewers can walk through (Rakkolainen et al. 2015).

For 3D image projection, viewers wearing passive or active shutter glasses can perceive image depth when stereoscopic technology is implemented in the projection system. However, research has shown that prolonged exposure to image switching can cause visual discomfort or even visual and mental fatigue (Amin et al. 2015), so wearing such special eye-glasses is not considered a DUI. Autostereoscopy provides glasses-free 3D viewing using multiple projectors, but the complicated alignment of the parallax barrier, lenticular lens or diffuser mechanism on the projection screen is still a big challenge for commercial applications (Hyoung et al. 2013; Hong et al. 2011). A true 3D image can be shown in free space using volumetric display technologies. Mid-air laser 3D holograms, composed of visible ionised air molecules as pixels, are produced by laser-induced plasma scanning displays (Ishikawa and Saito 2008). Recent research on laser holograms using femtosecond laser pulses enables a safer composition that users can touch with bare hands for real-time interaction, but the required instrumentation and setup are still confined to laboratories. An interesting volumetric 3D projection display uses an assembly of fog-producing elements, with 3D images projected onto selected particle clouds above the corresponding fog-producing elements (Miu-Ling et al. 2015). Wearable eye-glasses are a good alternative DUI for human visual input: their see-through nature allows augmented data to be superimposed on the physical environment in real time (Brusie et al. 2015).

Electronic cotton paves a new path for embedding electronic circuits in textiles (Savage 2012), and fabric displays can be made to show simple images on clothing (Zysset et al. 2012).

2.1.2 DUIs for basic human senses: hearing

Hearing is another popular human input interface, in which computer-generated sound in the audio frequency range is delivered through a medium to the human auditory system. A sonic interface is a good DUI for interfacing naturally with humans in open space; the critical requirement is delivering high-quality sound, which can normally be achieved through frequency response equalisation of loudspeakers and/or room acoustics (Cecchi et al. 2015).

Privacy is one of the major concerns in sonic UIs, and directional sound technologies can change the omnipresent nature of ordinary sound broadcast in open space. A parametric speaker, consisting of an array of ultrasonic transducers, can transmit a narrow sound beam to the location of the receivers by modulating a large-amplitude ultrasonic carrier with an audio signal and using phase delays to steer the beam. The non-linear air medium then self-demodulates the carrier and recovers the audio for the receiver(s) along the broadcast path. Distortion of the recovered audio and the directivity are big challenges for this method, although recent research has improved the frequency response by enhancing the transducers and by pre-processing the audio signal before modulation (Akahori et al. 2014; Woon-Seng et al. 2011). Modulated ultrasonic carriers can also be self-demodulated through the human body, which further enhances privacy, since only the person(s) in contact with the ultrasonic vibration can hear the original audio (Sung-Eun et al. 2014; Kim et al. 2013). Personal Sound Zone is an active research topic that tries to control the ambient sound pressure and establish multiple zones in a room, where the intended sound source dominates the sound field inside each zone, so that people in that zone are not disturbed by sound sources outside it. The need for speaker arrays with large numbers of directional speakers, together with dynamic acoustic reverberation, will be obstacles to bringing the idea into general public use (Betlehem et al. 2015).
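The two ingredients of a parametric speaker described above, modulation of an ultrasonic carrier and delays for steering, can be sketched in a few lines. The 40 kHz carrier, 0.8 modulation depth, 343 m/s speed of sound and array geometry are assumptions chosen for illustration, the function names are hypothetical, and the nonlinear self-demodulation in air is not simulated.

```python
import math


def am_modulate(audio, fs, carrier_hz=40_000.0, depth=0.8):
    """Double-sideband AM: audio samples in [-1, 1] ride on an ultrasonic carrier.

    Plain DSB-AM like this distorts after self-demodulation in air; the
    pre-processing described in the cited work is not reproduced here.
    """
    return [(1.0 + depth * a) * math.sin(2 * math.pi * carrier_hz * n / fs)
            for n, a in enumerate(audio)]


def steering_delays(num_elements, spacing_m, angle_deg, c=343.0):
    """Per-element delays (s) that tilt a uniform linear array's beam by angle_deg."""
    theta = math.radians(angle_deg)
    raw = [n * spacing_m * math.sin(theta) / c for n in range(num_elements)]
    first = min(raw)
    return [d - first for d in raw]  # shift so every delay is non-negative


fs = 192_000  # sample rate comfortably above twice the carrier frequency
tone = [math.sin(2 * math.pi * 1_000 * n / fs) for n in range(fs // 100)]  # 10 ms at 1 kHz
drive = am_modulate(tone, fs)
delays = steering_delays(num_elements=8, spacing_m=0.01, angle_deg=20)
```

Each transducer would be driven with `drive` shifted by its entry in `delays`; a negative angle simply reverses the order in which the elements fire.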

Computer-generated sound for interfacing with humans can be classified into speech and non-speech audio. Natural language processing (NLP) allows humans to communicate with computers by speech in their own language, and recent research in computational linguistics, supported by Big Data and Cloud Computing, enables more human-like computer-generated speech through machine learning and text-to-speech (TTS) technologies (Hirschberg and Manning 2015). TTS algorithms with modified prosodic features can even produce computer-generated speech with emotions appropriate to the context (Yadav and Rao 2015). Auditory display based on sonification converts data into non-speech sound, such as alerts and warning signals, status and progress indications, or sound for data exploration, so that the content of the data initiates human perception through hearing (Thomas et al. 2011). There is evidence that auditory feedback based on interactive sonification helps synchronise human output (Degara et al. 2015).
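Parameter-mapping sonification, one common form of auditory display, can be sketched by mapping each data value to a pitch. The linear mapping onto 220 to 880 Hz, the 0.1 s note length and the function names are illustrative assumptions rather than details from the cited work; a logarithmic (musical) pitch scale would be an equally reasonable choice.

```python
import math


def pitch_map(values, low_hz=220.0, high_hz=880.0):
    """Parameter-mapping sonification: scale each value linearly onto a pitch range."""
    lo, hi = min(values), max(values)
    span = (hi - lo) or 1.0  # constant data maps every value to low_hz
    return [low_hz + (v - lo) / span * (high_hz - low_hz) for v in values]


def render_tones(freqs, fs=8_000, note_s=0.1):
    """Render each pitch as a short sine tone and concatenate the samples."""
    n = round(fs * note_s)
    samples = []
    for f in freqs:
        samples.extend(math.sin(2 * math.pi * f * i / fs) for i in range(n))
    return samples


readings = [0, 5, 10]            # hypothetical sensor readings
freqs = pitch_map(readings)      # [220.0, 550.0, 880.0]
audio = render_tones(freqs)      # 0.3 s of samples, ready for a sound device
```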

2.1.3 DUIs for basic human senses: touch

The sense of touch enables private communication with computers through the human skin. Three types of receptors in the skin, for pressure and touch (mechanoreception), heat and cold (thermoreception), and pain (nociception), sense the outside world through mechanical, thermal, chemical or electrical stimuli (Kortum 2008). Haptic technology has become popular as mobile computing devices such as cell phones and tablet computers use touch-sensitive screens as their major input, while Internet connectivity further enables remote touch sensing for tele-operation in the medical field, so that operators can remotely feel the patient and the equipment in real time (Gallo et al. 2015).

Contactless touch interfaces, which are good DUI candidates, make tactile sensation in free space possible through compressed air, ultrasound or laser technology. Air-jet tactile stimulation controls compressed air blown through focused nozzles, but it commonly suffers from limited travelling distance and low spatial resolution. Replacing direct airflow with air vortices improves on this, although the mechanism for generating air vortices may be more complex (Arafsha et al. 2015; Sodhi et al. 2013). An airborne ultrasonic tactile display is an alternative for free-air tactile stimulation, in which the skin feels the pressure of radiated ultrasound waves. Spatial accuracy is further improved, but power consumption is relatively high (Iwamoto et al. 2008; Inoue et al. 2015). The laser-induced thermoelastic effect also produces free-air haptics, but precise control of laser pulse timing and energy is the most critical factor in using the technology safely (Jun et al. 2015; Hojin et al. 2015).
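Airborne ultrasonic tactile displays typically focus a phased array of transducers on a point, so that the waves arrive in phase and the radiation pressure is concentrated there. A minimal sketch of the focusing delays follows, assuming a hypothetical 3 x 3 array with 1 cm pitch, a 40 kHz carrier and a speed of sound of 343 m/s; it ignores transducer directivity and the low-frequency amplitude modulation that practical devices typically add so the skin can feel the focus.

```python
import math


def focus_delays(transducers, focal_point, c=343.0):
    """Emission delays (s) so waves from every transducer reach focal_point together.

    The farthest transducer fires first (zero delay); nearer ones wait.
    """
    dists = [math.dist(t, focal_point) for t in transducers]
    farthest = max(dists)
    return [(farthest - d) / c for d in dists]


def focus_phases(transducers, focal_point, freq_hz=40_000.0, c=343.0):
    """The same delays expressed as carrier phase lags in [0, 2*pi)."""
    return [(2 * math.pi * freq_hz * t) % (2 * math.pi)
            for t in focus_delays(transducers, focal_point, c)]


# Hypothetical 3 x 3 array in the z = 0 plane, focused 20 cm above its centre.
array = [(x * 0.01, y * 0.01, 0.0) for y in (-1, 0, 1) for x in (-1, 0, 1)]
target = (0.0, 0.0, 0.2)
delays = focus_delays(array, target)
phases = focus_phases(array, target)
```

The centre element, being nearest to the focus, waits longest, while the four corners fire first.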

Wearable touch interfaces can also be considered DUIs if they are not noticeable once attached to the body, especially when miniaturisation based on MEMS and new materials enables complete interface modules to be hidden in everyday things that humans carry around (Ishizuka and Miki 2015; Ig Mo et al. 2008). Jackets or vests with embedded sensors and actuators for vibrotactile, pressure and thermal stimulation are DUIs that provide a sense of touch to the body. Affective tactile perception can also be achieved through different combinations of stimuli embedded in electro-mechanical clothing; typical examples are hugging (Teh et al. 2008; Samani et al. 2013), bristling (Furukawa et al. 2010) and warm social touch (Pfab and Willemse 2015).

Electrostatic discharge through the human body can also generate haptic feedback if the discharge power is properly controlled within safety limits. Mujibiya (2015) proposed a boost-up electrostatic charger installed in a grounded shoe, which produces a tactile sensation when the wearer touches anything grounded and so closes the circuit.

2.1.4 DUIs for basic human senses: smell

Human smell, or olfaction, is a chemical sense and is less popular for HCI than the three basic senses discussed above. Molecules with molecular weights of approximately 30 to 300, vaporised in free air, enter the nose through the left and right nostrils during breathing, bind to the olfactory receptors and activate the perception of smell in the brain. The small spatial separation between the two nostrils allows odours to be localised, much as spatial differences between the ears and between the eyes are used to detect the direction of a sound source and to perceive image depth respectively. Although decades of research have proposed different odour classifications and estimated some 350 receptor proteins in the human olfactory epithelium, the primary odours are still unknown, so digital reproduction of odour is the biggest challenge for computer olfactory interfaces (Kortum 2008; Kaeppler and Mueller 2013). A systematic approach to selecting and combining chemical substances for odour reproduction was reviewed by Nakamoto and Murakami (2009) and Nakamoto and Nihei (2013). They used an “odour approximation” technique to choose and blend a limited number of odour components based on their mass spectra in order to record and reproduce some target odours. This is not a generic approach, but it may suit specific application areas.
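The cited papers' exact algorithm is not reproduced here, but the idea of approximating a target odour by blending a few components can be posed as a non-negative least squares problem: find non-negative ratios whose weighted sum of component mass spectra best matches the target spectrum. The sketch below solves it with simple projected gradient descent; the 4-peak spectra are invented, the function name is hypothetical, and choosing which limited subset of components to use is not shown.

```python
def odour_blend(spectra, target, iters=5_000):
    """Non-negative least squares by projected gradient descent.

    spectra: mass spectrum of each available odour component (equal-length lists).
    target:  mass spectrum of the odour to approximate.
    Returns one non-negative blending ratio per component.
    """
    k, m = len(spectra), len(target)
    # A step of 1 / ||A||_F^2 never exceeds 1 / L (L = largest eigenvalue of A^T A),
    # which is small enough for the projected iteration to converge.
    step = 1.0 / sum(v * v for s in spectra for v in s)
    x = [0.0] * k
    for _ in range(iters):
        approx = [sum(x[j] * spectra[j][i] for j in range(k)) for i in range(m)]
        resid = [approx[i] - target[i] for i in range(m)]
        grad = [sum(spectra[j][i] * resid[i] for i in range(m)) for j in range(k)]
        x = [max(0.0, x[j] - step * grad[j]) for j in range(k)]  # project onto x >= 0
    return x


# Hypothetical 4-peak spectra for three odour components.
components = [[1.0, 0.0, 0.0, 1.0],
              [0.0, 1.0, 0.0, 1.0],
              [0.0, 0.0, 1.0, 0.0]]
target = [0.5, 0.0, 0.2, 0.5]
ratios = odour_blend(components, target)  # close to [0.5, 0.0, 0.2]
```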

Smell stimuli can be presented by diffusion into open air, but the vaporisation and spread of odour molecules are relatively slow compared with the propagation of mechanical waves in sound and electromagnetic waves in vision, so odour vapour is normally emitted continuously. However, long exposure to the same stimulus causes olfactory adaptation, which reduces sensitivity. Controlling the emission timing and synchronising it with human breathing patterns may help eliminate the adaptation problem, but the sensing area then becomes personal, and the required detection of human breathing increases the complexity of the system (Kadowaki et al. 2007; Sato et al. 2009). Installing scent emission facilities on existing display systems enables multisensory integration of vision and smell: pores in a projection screen can emit vaporised smell stimuli synchronised with the dynamic video content, although the sensing distance is short.

A scent projector using air vortices can deliver scented air over longer distances in controlled directions, and double vortex rings from two scent projectors can even simulate the direction of movement of the scented air (Matsukura et al. 2013; Yanagida et al. 2013). A wearable scent diffuser can also personalise smell stimulation, using micro-pumps to deliver liquid odourants that are atomised by a SAW (surface acoustic wave) device and vaporised into the air surrounding the wearer (Ariyakul and Nakamoto 2014; Hashimoto and Nakamoto 2015).

The human sense of smell may also act as an interface for dreaming. Research has found that an olfactory stimulus can enhance fear extinction during slow-wave sleep when the same olfactory context is re-presented to people who underwent contextual fear conditioning while awake (Hauner et al. 2013; Braun and Cheok 2014).

2.1.5 DUIs for basic human senses: taste

Humans sense five basic tastes using taste receptors distributed on the dorsal surface of the tongue: sweet, bitter, sour, salty and umami. The perception of taste (gustation), however, relies not only on the chemical sense of the tongue but also on the senses of sound, smell and touch (Kortum 2008). Narumi et al. (2011) demonstrated a pseudo-gustatory system that influences taste perception through multisensory integration of visual and olfactory cues, in which plain cookies could taste like chocolate when a chocolate image and smell were presented to the test subjects.

The tongue, the sense organ for taste, is widely used as a computer interface because of its haptic sensitivity, for example to control mouse movements, operate smartphones or wheelchairs, or even convert tactile sensing on the tongue into vision (Footnote 1). However, there are very few computer-taste interfaces; one comes from Ranasinghe et al. (2012), who proposed a tongue-mounted interface that uses a combination of electrical and thermal signals to stimulate different tastes. The same authors later reported an improvement that combines smell stimuli for better flavour perception (Ranasinghe et al. 2015).

2.1.6 Extra human senses

Since Aristotle first proposed the classification of the five basic human senses more than two thousand years ago, research in anatomy, physiology and psychophysics has steadily identified additional human sense organs, such as the vestibular, muscle and temperature senses (Wade 2003). Humans do have more than five senses, and they all follow the same “stimulus-sensation-perception” process, with the brain as the final decision maker after interpreting the outside world from the stimuli. Although the nature of the stimulus differs for each type of sense organ, the resulting perception can be the same. Sensory substitution is an emerging research area demonstrating that brain plasticity enables the human brain to use a particular type of sensor, or combination of sensors, to arrive at the same perception after a training period (Bermejo and Arias 2015). Examples in previous sections include haptic sensors replacing vision sensors so that blind people can see the world, and sonification enabling humans to perceive the content of an analysis without looking at the raw data. DUIs should therefore interface more effectively with human inputs by delivering content instead of raw data, and the more we know about the human brain, the better we will understand how to interface with humans. Numerous research institutions around the world are already investing considerable effort in exploring the human brain (Footnotes 2, 3 and 4), so computer-generated stimuli for human inputs will become more effective and precise in the future.

2.2 DUIs for human outputs

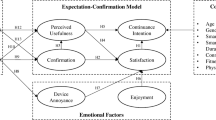

DUIs capture human outputs by monitoring human body activities: body-part movements, body sound generation, body temperature variations, body odour emissions and dynamic physiological parameters. The disappearing nature of DUIs avoids step-by-step, manually driven interaction and focuses instead on interfacing with the contents, which are the actions generally derived from human perception (Lim 2012). DUIs for human outputs are normally based on a “data capture, feature extraction, classification and prediction” process, the approach generally adopted in Computational Intelligence; a typical block diagram is depicted in Fig. 2.
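The four stages of this process can be illustrated end to end with a deliberately small sketch. The accelerometer values and activity labels are invented, the choice of features (window mean and standard deviation) is an assumption, and a nearest-centroid classifier stands in for the HMM, Bayesian network or ANN classifiers discussed in the next paragraph.

```python
import math
import statistics


def capture(stream, window):
    """Data capture: cut a 1-D sensor stream into non-overlapping windows."""
    return [stream[i:i + window] for i in range(0, len(stream) - window + 1, window)]


def features(window):
    """Feature extraction: mean and (population) standard deviation of a window."""
    return (statistics.fmean(window), statistics.pstdev(window))


def train(labelled):
    """Training: one centroid per activity label in feature space."""
    groups = {}
    for label, window in labelled:
        groups.setdefault(label, []).append(features(window))
    return {label: tuple(statistics.fmean(f[i] for f in feats) for i in range(2))
            for label, feats in groups.items()}


def classify(window, centroids):
    """Classification/prediction: the label whose centroid is nearest."""
    f = features(window)
    return min(centroids, key=lambda label: math.dist(f, centroids[label]))


# Hypothetical accelerometer magnitudes (in g) from a wearable DUI.
centroids = train([("rest", [1.0, 1.0, 1.0, 1.0]),
                   ("walking", [0.6, 1.4, 0.7, 1.3])])
stream = [1.0, 1.02, 0.98, 1.0, 0.5, 1.5, 0.5, 1.5]
labels = [classify(w, centroids) for w in capture(stream, 4)]  # ['rest', 'walking']
```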

The following subsections concentrate mostly on the DUI front end, i.e. the capture of human activities, and review data and content capturing technologies according to the nature of the activities. The corresponding feature extraction is application specific and depends on the method used for activity detection (e.g. the same sound clips captured from a human body can be used for voice commands through a speech recognition algorithm, or for medical diagnosis through sound sensing techniques), so it is mostly omitted in this paper. Classification and prediction normally adopt probabilistic methods such as HMMs (hidden Markov models), Bayesian networks or ANNs (artificial neural networks) to choose the most probable result from the extracted features and past history, and supervised or unsupervised training is generally used to increase prediction accuracy. Four basic types of human output are discussed in the following subsections, together with the different capturing methods and technologies. A summary of human outputs and the corresponding capturing techniques is depicted in Fig. 3, and the corresponding cross references are listed in Table 1.
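As an example of the HMM approach, the Viterbi algorithm recovers the most probable sequence of hidden activities from a sequence of observed sensor symbols. The two activities, the "still"/"moving" observation symbols and all probabilities below are invented for illustration; in practice they would be learned from training data (for example with the Baum-Welch algorithm), which is not shown.

```python
import math


def viterbi(observations, states, start_p, trans_p, emit_p):
    """Most probable hidden state sequence of an HMM (Viterbi, in log space).

    All probabilities must be strictly positive because logs are taken.
    """
    score = [{s: math.log(start_p[s]) + math.log(emit_p[s][observations[0]])
              for s in states}]
    back = [{}]
    for t in range(1, len(observations)):
        score.append({})
        back.append({})
        for s in states:
            best_prev = max(states, key=lambda p: score[t - 1][p] + math.log(trans_p[p][s]))
            score[t][s] = (score[t - 1][best_prev] + math.log(trans_p[best_prev][s])
                           + math.log(emit_p[s][observations[t]]))
            back[t][s] = best_prev
    state = max(score[-1], key=score[-1].get)  # best final state
    path = [state]
    for t in range(len(observations) - 1, 0, -1):  # follow back-pointers
        state = back[t][state]
        path.append(state)
    return path[::-1]


states = ("sitting", "walking")
start_p = {"sitting": 0.6, "walking": 0.4}
trans_p = {"sitting": {"sitting": 0.8, "walking": 0.2},
           "walking": {"sitting": 0.3, "walking": 0.7}}
emit_p = {"sitting": {"still": 0.9, "moving": 0.1},
          "walking": {"still": 0.2, "moving": 0.8}}
obs = ["still", "still", "moving", "moving", "still"]
path = viterbi(obs, states, start_p, trans_p, emit_p)
# ['sitting', 'sitting', 'walking', 'walking', 'sitting']
```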

2.2.1 DUIs for human outputs: body parts movement

Body-part movements are the major human outputs and normally include (i) body postures, (ii) limb movements, (iii) locomotion, (iv) hand and finger gestures, (v) facial expressions, (vi) eye gaze, and (vii) body micro-motions. All of these actions are achieved by muscle movements activated by the brain, and capturing them normally involves spatial and temporal tracking of the target body parts.

Image capture with regular visible-spectrum cameras detects the target body parts in fine detail, which is useful for recognising facial expressions or tracking eye gaze. Facial expression is thought to be linked with human emotion and is usually composed of subtle movements of the facial muscles. Human behaviours, as well as sex, age or ethnicity, can be detected by analysing features in spontaneous facial images whose resolution is high enough for landmarks to be clearly labelled on the face (Mavadati et al. 2013; Tasli et al. 2015). Tracking mouth shapes and lip movements provides visual speech recognition, which aids vocal communication in noisy environments (Tasaka and Hamada 2012). Eye-gaze tracking allows humans to communicate with computers by tracking the pupil position, or the pupil and corneal reflection, in visual images of the eyes (Bazrafkan et al. 2015). Ambient lighting strongly affects image clarity, which restricts these applications.

Depth cameras and thermal cameras improve privacy by capturing only the contours of target body parts, and they are less affected by ambient lighting. One application is the analysis of human gait sequences captured during locomotion. Analysis of gait sequences can identify individuals among multiple dwellers in an ambient space, and the trajectory of each person's centre of mass, obtained through feature extraction from the depth images, can also be used to detect human falls (Dubois and Charpillet 2014; Dubois and Bresciani 2015). Human posture detection can also use depth image sequences, extracting a skeleton model for further examination of body part movements. Kondyli et al. (2015) analysed the postures of different drivers based on skeleton models to compare driving attitudes. PIR (passive infrared) technology provides a simpler method of detecting human bodies based on body heat, but its detection resolution and accuracy are relatively low.
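To illustrate how a centre-of-mass trajectory can flag a fall, the sketch below uses a simple threshold heuristic: a fall is reported when the height of the centre of mass drops sharply within a short time and ends near the floor. This is not the method of Dubois and Charpillet; the thresholds (`max_duration_s`, `min_drop_m`, `floor_level_m`) and the synthetic trajectories are hypothetical.

```python
def detect_fall(heights, fps, max_duration_s=1.0, min_drop_m=0.5, floor_level_m=0.4):
    """Flag a fall when the centre-of-mass height (m) drops by at least min_drop_m
    within max_duration_s and ends at or below floor_level_m.
    All thresholds are illustrative, not calibrated values."""
    window = max(1, int(max_duration_s * fps))
    for end in range(1, len(heights)):
        start = max(0, end - window)
        recent_peak = max(heights[start:end])
        if recent_peak - heights[end] >= min_drop_m and heights[end] <= floor_level_m:
            return True
    return False

fps = 30
standing = [1.0] * 30                                            # 1 s standing still
fall = standing + [1.0 - 0.075 * i for i in range(1, 11)]        # 1.0 m -> 0.25 m in ~0.33 s
slow_lie = standing + [1.0 - 0.7 * i / 150 for i in range(1, 151)]  # 1.0 m -> 0.3 m in 5 s
print(detect_fall(fall, fps), detect_fall(slow_lie, fps))  # -> True False
```

The speed criterion is what separates a fall from deliberately lying down; a real system would also check how long the person stays near the floor afterwards.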

Leveraging the RF (radio frequency) signals surrounding the human body can also detect body part movements. Active and passive Doppler radar technologies are commonly adopted to track body parts. Active Doppler radar normally requires customised hardware using signals such as UWB (ultra-wideband), ultrasound, or mm-waves to detect moving objects via the Doppler effect. The detection accuracy and resolution of active Doppler radar are high, but it requires dedicated transmitters and receivers, and the required frequencies may interfere with existing RF systems. The ability of radar to penetrate walls allows minor human movements to be detected from a distance: Qiu et al. (2015) reviewed how active Doppler radar can be applied from outside a building to detect micro-motions, such as respiration and heartbeat, of the people living inside. Silent speech interface is an emerging research area in which ultrasound-based Doppler sensing allows speech to be detected by decoding lip and mouth movements (Srinivasan et al. 2010). Passive Doppler radar uses RF signals already existing in the environment as transmission signals and detects variations in the received signal caused by reflections from human body parts, so the detection system set-up is comparatively simple and interference is minimal. The widespread use of WiFi at home enables passive Doppler radar for hand gesture detection using the WiFi frequency bands (i.e. 2.4 and 5 GHz) as radar frequencies (Bo et al. 2014). To further simplify the hardware requirements, Abdelnasser et al. (2015) demonstrated a gesture detection algorithm that uses the variation in the RSSI (received signal strength indicator) of the WiFi signal caused by human body parts, so no extra hardware is needed, although the detection distance was much shorter.
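A quick calculation shows why Doppler-based gesture sensing needs fine frequency resolution at WiFi frequencies. For a reflector moving radially at speed v towards a co-located transmitter and receiver, the two-way Doppler shift is f_d = 2 v f_c / c. The sketch assumes this monostatic geometry (passive WiFi radar is bistatic, so the real shift also depends on the transmitter-target-receiver geometry) and a hand speed of 0.5 m/s chosen for illustration.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s
SPEED_OF_SOUND_AIR = 343.0      # m/s, approximately, at 20 degrees C

def doppler_shift_hz(radial_speed, carrier_hz, wave_speed=SPEED_OF_LIGHT):
    """Two-way Doppler shift of a reflector moving at radial_speed (m/s) towards a
    co-located transmitter/receiver: f_d = 2 * v * f_c / c (valid for v << c)."""
    return 2.0 * radial_speed * carrier_hz / wave_speed

hand_speed = 0.5  # m/s, an assumed gesture speed
for label, f_c, c in [("WiFi 2.4 GHz", 2.4e9, SPEED_OF_LIGHT),
                      ("WiFi 5 GHz", 5.0e9, SPEED_OF_LIGHT),
                      ("Ultrasound 40 kHz", 40e3, SPEED_OF_SOUND_AIR)]:
    print(f"{label}: {doppler_shift_hz(hand_speed, f_c, c):.1f} Hz")
```

The shifts come out at roughly 8 Hz and 17 Hz for the two WiFi bands, tiny compared with the carrier frequencies, whereas 40 kHz ultrasound yields roughly 117 Hz because sound travels so much more slowly than radio waves.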

Wearable sensors enable accurate detection of the wearer's motions through a combination of traditional sensing elements such as accelerometers, gyroscopes, magnetometers, and barometers. Recognition of walking, running, cycling, or driving is achievable with reasonable accuracy (Elhoushi et al. 2014). With MEMS technology, wearable sensors become logical DUIs once they are well hidden on the human body, e.g. embedded in smart-watches or clothing.
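As a minimal sketch of motion mode recognition from a wearable accelerometer, the code below computes the standard deviation of the acceleration magnitude over a window and applies two thresholds. Using the magnitude makes the feature independent of how the device is oriented on the body. The thresholds and the synthetic signals are hypothetical, and this is far simpler than the classifier of Elhoushi et al.

```python
import math
import statistics

def magnitude(sample):
    """Euclidean norm of one 3-axis accelerometer sample (m/s^2)."""
    x, y, z = sample
    return math.sqrt(x * x + y * y + z * z)

def classify_window(samples, still_max=0.3, walk_max=3.0):
    """Label a window by the spread of acceleration magnitude.
    The thresholds (m/s^2) are illustrative, not calibrated values."""
    spread = statistics.pstdev([magnitude(s) for s in samples])
    if spread <= still_max:
        return "still"
    return "walking" if spread <= walk_max else "running"

def synthetic_window(amplitude, step_hz=2.0, fs=50, seconds=1.0):
    """Vertical acceleration: gravity plus a sinusoidal bounce at the step rate."""
    n = int(fs * seconds)
    return [(0.0, 0.0, 9.81 + amplitude * math.sin(2 * math.pi * step_hz * i / fs))
            for i in range(n)]

for amp in (0.0, 2.0, 6.0):
    print(amp, classify_window(synthetic_window(amp)))  # still, walking, running
```

A practical recogniser would add further features (e.g. dominant frequency, gyroscope and barometer data) and a trained classifier, but the windowing and orientation-independent feature are the common starting point.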

2.2.2 DUIs for human outputs: body sounds

Body sound is another common human output and can be classified as “speech” and “non-speech”. Humans generate speech by pushing air from the lungs through the vocal folds, while non-speech sound is produced by various internal and external parts of the human body, e.g. through body part movements, heartbeat, breathing, drinking or swallowing.

Capturing ambient sounds normally uses a microphone to convert sound waves into electrical signals. A single microphone converts sound accurately only when the speaker is within an effective pick-up distance under high SNR (signal-to-noise ratio) conditions, which limits its use as a DUI. Distributed microphone networks capture ambient sound without fixed pick-up spots, allowing speakers to move freely within open spaces in single- or multi-room environments. Data fusion of distributed sound signals from different areas can then enhance automatic speech recognition by localising speakers and reducing background noise (Trawicki et al. 2012; Giannoulis et al. 2015). A microphone array further enhances captured sound quality by arranging multiple microphones in a grid to expand the coverage, and it enables the estimation of the speaker's face orientation (Soda et al. 2013; Ishi et al. 2015).
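Speaker localisation with a microphone pair is commonly based on the time difference of arrival (TDOA). The sketch below estimates the delay by brute-force cross-correlation and converts it to a far-field direction of arrival, θ = arcsin(c τ / d). The sampling rate, microphone spacing and test signal are assumptions for illustration; real arrays typically use more robust estimators such as GCC-PHAT.

```python
import math
import random

def estimate_delay(sig_a, sig_b, max_lag):
    """Lag (samples) maximising the cross-correlation; positive means sig_b lags sig_a,
    i.e. the sound reached microphone A first."""
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = sum(sig_a[i] * sig_b[i + lag]
                    for i in range(len(sig_a)) if 0 <= i + lag < len(sig_b))
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag

def direction_of_arrival_deg(lag, fs, mic_spacing_m, speed_of_sound=343.0):
    """Angle from broadside for a far-field source and a two-microphone pair."""
    ratio = lag / fs * speed_of_sound / mic_spacing_m
    ratio = max(-1.0, min(1.0, ratio))  # clamp rounding overshoot before asin
    return math.degrees(math.asin(ratio))

fs, spacing = 48_000, 0.1                       # assumed 48 kHz sampling, 10 cm spacing
max_lag = math.ceil(spacing / 343.0 * fs)       # largest physically possible delay
rng = random.Random(1)
source = [rng.uniform(-1.0, 1.0) for _ in range(400)]  # broadband test signal
mic_a = source
mic_b = [0.0] * 5 + source[:-5]                 # same signal arriving 5 samples later
lag = estimate_delay(mic_a, mic_b, max_lag)
print(lag, round(direction_of_arrival_deg(lag, fs, spacing), 1))
```

Limiting the search to `max_lag` reflects the physical constraint that the delay cannot exceed the acoustic travel time across the array.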

Miniaturised on-body sound capture devices enable the detection of both speech and non-speech body sounds. Skin-attached piezoelectric sensors, such as throat or non-audible murmur (NAM) microphones, convert tissue vibrations into recognisable speech, which falls into the emerging research field of “silent speech interface” (Turan and Erzin 2016; Toda et al. 2012). Similar sensors attached to different parts of the human body allow the detection of various internal body activities that translate into human output actions, for example dietary monitoring through eating sounds, lung condition monitoring through breathing sounds, and emotion monitoring through laughing and yawning sounds (Rahman et al. 2015). Miniaturisation (e.g. electronic tattoos) and a continuous supply of operating power (e.g. wireless power) are key factors in making this interface a successful DUI for detecting human sound output (Alberth 2013; Mandal et al. 2009).
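Before on-body sounds such as swallowing or laughing can be classified, the continuous recording is usually segmented into candidate events. The sketch below assumes a simple short-time energy threshold over non-overlapping frames; it is a generic segmentation step, not the method of Rahman et al., and the frame length, threshold and synthetic recording are hypothetical.

```python
def frame_energies(signal, frame_len):
    """Mean energy of consecutive, non-overlapping frames."""
    return [sum(x * x for x in signal[i:i + frame_len]) / frame_len
            for i in range(0, len(signal) - frame_len + 1, frame_len)]

def detect_events(signal, frame_len, threshold):
    """Return (first_frame, last_frame) pairs for runs of frames above threshold."""
    energies = frame_energies(signal, frame_len)
    events, start = [], None
    for k, e in enumerate(energies):
        if e > threshold and start is None:
            start = k                      # event onset
        elif e <= threshold and start is not None:
            events.append((start, k - 1))  # event offset
            start = None
    if start is not None:                  # event still active at the end
        events.append((start, len(energies) - 1))
    return events

# Synthetic recording: low-level noise floor with one loud burst (e.g. a swallow).
recording = [0.01] * 800 + [0.5, -0.5] * 200 + [0.01] * 800
print(detect_events(recording, frame_len=100, threshold=0.01))  # -> [(8, 11)]
```

Each detected segment would then be passed to feature extraction and a classifier that distinguishes, for example, eating from breathing sounds.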

2.2.3 DUIs for human outputs: body temperature

Living human bodies generate heat through metabolism, muscle contraction, food intake, and non-shivering thermogenesis (neonates only), whereas body heat is lost through radiation, convection, conduction and evaporation (Campbell 2011). Humans are homeothermic, so they try to maintain a constant body temperature through physiological adjustments, but body temperature still varies dynamically with the condition of the body (e.g. health, emotions, environment). Human body temperature is often captured through remote sensing with an IR (infrared) camera or through direct on-body thermal sensing.

Thermal radiation from human bodies consists primarily of IR radiation: according to Wien's displacement law, which treats the human body as a black-body radiator, the peak wavelength of the emitted EM (electromagnetic) radiation falls in the IR region of the spectrum and is thus invisible to human eyes. Thermal maps of human bodies are therefore captured by IR cameras, whose data can also be used to detect body part movements, as mentioned in Sect. 2.2.1. Recently, IR images have also enabled facial recognition in dark environments, where thermal images of human faces are matched against normal facial images in a database (Hu et al. 2015). Many airports remotely capture facial signatures, which reflect body conditions through fluctuations in skin temperature, for mass screening of passengers for infection quarantine, e.g. to prevent the spread of SARS (severe acute respiratory syndrome) (Nakayama et al. 2015).
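Wien's displacement law, λ_max = b / T with b ≈ 2.898 × 10⁻³ m·K, makes the claim above concrete. The short calculation below assumes a core temperature of 37 °C and, for comparison, an approximate skin-surface temperature of 33 °C; both give peak wavelengths of roughly 9.3 to 9.5 µm, in the long-wave IR band and far beyond the visible limit of about 0.7 µm.

```python
WIEN_B = 2.897771955e-3  # Wien's displacement constant, m*K

def peak_wavelength_um(temperature_c):
    """Peak black-body emission wavelength in micrometres for a temperature in deg C."""
    return WIEN_B / (temperature_c + 273.15) * 1e6

for label, t_c in [("core body, 37 C", 37.0), ("skin surface, ~33 C (assumed)", 33.0)]:
    print(f"{label}: {peak_wavelength_um(t_c):.2f} um")
```

This is why thermal cameras for human sensing are typically built around long-wave IR detectors sensitive in roughly the 8 to 14 µm range.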

The personal micro-climate results from body heat convection: a layer of warm air surrounds the human body, and the rising air forms a human thermal plume above the head, whose rising velocity increases with the temperature difference between the human body and the surrounding air. The thermal plume can therefore be used to detect the comfort level of the human body and so control the ambient air-conditioning (Voelker et al. 2014). Craven et al. (2014) demonstrated the use of Schlieren imaging to capture the human thermal plume in a chemical trace detection application that monitors the air flow around a walking suspect at a security gate to detect illegal chemicals.

Wearable thermal sensors capture body temperature through direct skin contact. Temperature sensing is a mature technology, and miniaturisation has become the major design criterion for attaching unnoticeable sensors to human bodies; for example, Vaz et al. (2010) proposed a low-profile RFID tag for on-body temperature sensing with a long-range wireless connection. A bolder attempt uses a swallowable electronic capsule housing a self-powered temperature sensor; however, technical and psychological concerns remain big challenges (Zhexiang et al. 2014).

2.2.4 DUIs for human outputs: body odour

Body odour results from the emission of various VOCs (volatile organic compounds) from the human body, which are normally carried to the outside world by the warm-air current of the personal micro-climate (see Sect. 2.2.3). Research has found that some VOCs reflect certain diseases that change the human metabolic condition, so the detection of VOC signatures can be applied to health care monitoring (Li 2009; Shirasu and Touhara 2011).

Ambient capture of human body odour is achieved through air circulation in an open space: VOCs are collected from the circulated air and detected by gas detection systems. Chemical sensors are used to detect pre-defined VOCs, whereas gas chromatography (GC) together with mass spectrometry is applicable to generic chemical analysis of VOCs based on mixture separation techniques. Minglei et al. (2014) proposed a GC system for biometrics using body odour as a personal identifier, and Fonollosa et al. (2014) used chemical sensors for activity recognition, estimating the activities of multiple dwellers in a living space by classifying the captured VOCs.
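Activity recognition from a gas sensor array can be framed as classifying the array's response pattern. The sketch below assumes a nearest-centroid classifier on responses normalised to unit length, so that the decision depends on the pattern across sensors rather than on overall concentration. It is not the method of Fonollosa et al., and the four-sensor readings and activity labels are invented for illustration.

```python
import math

def normalise(vec):
    """Scale a sensor-array response to unit length (pattern, not concentration)."""
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else list(vec)

def train_centroids(labelled):
    """Mean normalised response per activity label."""
    centroids = {}
    for label, samples in labelled.items():
        normed = [normalise(s) for s in samples]
        centroids[label] = [sum(col) / len(normed) for col in zip(*normed)]
    return centroids

def classify_odour(sample, centroids):
    """Return the label whose centroid is closest (Euclidean) to the normalised sample."""
    s = normalise(sample)
    return min(centroids, key=lambda label: math.dist(s, centroids[label]))

# Hypothetical responses of a 4-sensor array during two household activities.
training = {
    "cooking":  [[0.9, 0.2, 0.1, 0.4], [0.8, 0.3, 0.1, 0.5]],
    "cleaning": [[0.1, 0.9, 0.6, 0.1], [0.2, 0.8, 0.7, 0.1]],
}
centroids = train_centroids(training)
print(classify_odour([1.6, 0.5, 0.2, 0.9], centroids))     # stronger "cooking" pattern
print(classify_odour([0.15, 0.4, 0.33, 0.05], centroids))  # weaker "cleaning" pattern
```

Normalisation lets the same activity be recognised whether the dweller is close to or far from the sensors, which mainly changes the absolute response level.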

Personal body odour detection is achieved by wearable detectors such as the eNose (electronic nose), which normally consists of chemical sensors whose electrical characteristics change once their coatings of chemically sensitive materials (e.g. metal oxides, carbon nanotubes) come into contact with VOCs (Kea-Tiong et al. 2011). Embedding the sensors in clothing is a good way to keep effective personal VOC detection unnoticeable (Seesaard et al. 2014).

2.2.5 DUIs for human outputs: physiological parameters

Physiological parameters are mostly presented as biosignals for monitoring human body functions; for example, the primary vital signs (body temperature, blood pressure, heart rate and breathing rate) can be captured indirectly by the DUIs mentioned in the previous sections. An accurate measurement of all biosignals, however, requires direct biomedical examination through common methods such as electroencephalography (EEG), electrocardiography (ECG), electromyography (EMG), mechanomyography (MMG), electrooculography (EOG) and galvanic skin response (GSR). Measurement of physiological responses is essential for recognising human emotions, which activate or deactivate the human autonomic nervous system (Kreibig 2010). Active research in psychophysiology has proposed effective measurement methods to identify different feelings (e.g. anger, sadness, fear, happiness) under the umbrella of affective computing (Picard 1997). However, a discussion of these physiological measurements is too broad to be included in this paper.
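As one concrete example of deriving a vital sign from a biosignal, heart rate can be computed from the intervals between R-peaks in an ECG trace: HR = 60 / mean RR interval (s). The sketch below assumes a clean signal in which R-peaks are local maxima above a fixed threshold, separated by a refractory period; clinical detectors (e.g. Pan-Tompkins) add filtering and adaptive thresholds. The synthetic spike train and parameter values are hypothetical.

```python
def detect_r_peaks(ecg, fs, threshold, refractory_s=0.25):
    """Indices of local maxima above threshold that are at least refractory_s apart."""
    min_gap = int(refractory_s * fs)
    peaks = []
    for i in range(1, len(ecg) - 1):
        if ecg[i] > threshold and ecg[i] >= ecg[i - 1] and ecg[i] > ecg[i + 1]:
            if not peaks or i - peaks[-1] >= min_gap:
                peaks.append(i)
    return peaks

def heart_rate_bpm(peaks, fs):
    """Mean heart rate from successive R-R intervals."""
    rr = [(b - a) / fs for a, b in zip(peaks, peaks[1:])]
    return 60.0 / (sum(rr) / len(rr))

fs = 250                                                      # assumed sampling rate (Hz)
ecg = [1.0 if i % 200 == 100 else 0.05 for i in range(2000)]  # a spike every 0.8 s
peaks = detect_r_peaks(ecg, fs, threshold=0.5)
print(len(peaks), round(heart_rate_bpm(peaks, fs), 1))        # -> 10 75.0
```

The same R-R interval series also underlies heart rate variability measures, which are often used in the affective computing work mentioned above.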

3 Sixth sense and super senses

Humans do possess more than five senses according to numerous studies, as briefly discussed in Sect. 2.1.6, but there is no formal classification ranking any of them as the sixth human sense. One reason could be that the general public has long used “Sixth Sense” to refer to paranormal phenomena. Parapsychology has been an active research topic for decades, exploring paranormal activities and psychic abilities in human beings. Many institutions and organisations around the world working under the umbrella of parapsychology or extrasensory perception (ESP) continue to publish research papers and empirical reports with controversial results. This paper does not comment on or criticise any area of parapsychology; however, the application of DUIs to enable ordinary human beings to perform what may be seen as paranormal activities or psychic abilities is of interest here. Extrasensory perception is the ability to use “extra” human senses to perceive things that are not normally perceivable by ordinary people, such as sensing someone far away or talking to a dead person. Recent experiments report the fusion of sensors based on Ubiquitous Computing that gives humans extra senses by delivering data from surrounding sensors to them as they walk by (Dublon and Paradiso 2014). Mistry et al. (2009) also proposed “SixthSense”, a wearable gestural interface device that merges an on-body computer and on-body sensors with cloud computing for augmented reality applications, enabling individuals to collect data from ubiquitous computing devices through hand gesture recognition.

Ubiquitous computing, IoT and Big Data through cloud computing already provide immediate tools for data fusion and sensor fusion that allow humans to interact with the world easily. For example, humans can check the road traffic five blocks away together with the estimated time to get there, see a real-time image of a street in New York from London and locate a particular face, or obtain a 10-day weather forecast with high accuracy through computational intelligence and past history. Accessing all these complicated technologies naturally through DUIs can seamlessly merge humans with the fusion of data and sensors, and ultimately DUIs can turn on the so-called Sixth Sense through simulation. Table 2 shows some examples of applying DUIs to enable ordinary humans to gain common psychic abilities that are generally described as the Sixth Sense.

Supersenses or Super Senses, similar to the Sixth Sense, are also treated as supernatural behaviours by the general public, and the term “Super Senses” is sometimes associated with animal senses that are superior to human senses in sensitivity and functionality (Note 5). In this work, super sense refers to the extension of human senses that enhances temporal, spatial, and, most importantly, contextual senses. IoT is shaping the Internet into a global nervous system (Notes 6, 7); thus the growing number of ubiquitous sensors, based on context awareness technology, enables local and remote contextual sensing anywhere in the world wherever an Internet connection is available. This huge IoT nervous system then becomes the sensory extension of each connected individual.

Context awareness technology in ubiquitous computing not only makes mobile computing location sensitive but also enables mobile devices to be smart. Dey et al. (2001) defined context as “any information that can be used to characterize the situation of entities” and laid the groundwork for smart mobile computing devices that can be accurately aware of, and astutely react to, the corresponding context information. A collection of past and present contexts provides cues for making immediate decisions (da Rosa et al. 2015) and for predicting future actions (da Rosa et al. 2016).

Emotion promotes behavioural and physiological responses by activating the autonomic nervous system (Kreibig 2010), and at the same time the experience, or emotional memory, is encoded onto neural circuits in the brain through the limbic system (LaBar and Cabeza 2006). The famous “Little Albert Experiment” shows that once a particular emotional memory is consolidated in the brain, the emotional response becomes a conditioned emotional response (CER), which can be recalled by conditioned stimuli (CS) (Watson and Rayner 2000). The CS is the context of the emotional experience, which is usually triggered by an unconditioned stimulus (US). For example, (1) the sound of a dog barking is the CS and the dog bite is the US in the emotional experience of “being bitten by a dog”; (2) cycling and bad weather together are the CS and falling to the ground is the US in a road accident on a rainy day. Emotional context awareness (ECA) makes it possible to discover or “sense” the CS through recorded contexts with emotional tagging (Alam et al. 2011), and it can be applied to predict emotions based on machine learning and specific context prediction algorithms.

Context conditioning is an emerging research area based on CER and CS/US, and researchers in this field normally focus on applications that detect or extinguish anxiety or fear (Kastner et al. 2016; Cagniard and Murphy 2013; Kerkhof et al. 2011). Research on emotion prediction normally investigates feature extraction and classification methods for contexts that stimulate emotion using machine learning algorithms (Ringeval et al. 2015; Fairhurst et al. 2015), or focuses on prediction algorithms under controlled contextual stimuli. However, research on sensing the CS of emotional memories is scarce, although sensing and extracting ground-truth contextual datasets for training the prediction function is equally important. ECA together with context prediction is a super sense that enables mobile devices in ubiquitous computing to “have” emotions, allowing humanised interaction with users; e.g. a device could advise the user to do a session of physical exercise when he/she is currently sad and near a gymnasium, since 80 % of past emotional contexts show that a “workout” is followed by a happy emotional state.
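The gymnasium example above can be sketched as a frequency estimate over emotion-tagged context records. The code assumes a hypothetical record type `ContextRecord` holding an activity and the emotion tagged after it, estimates P(happy | workout) from history, and issues a suggestion only when the user is sad, a gymnasium is nearby, and the estimate reaches an assumed 0.8 threshold. It is an illustration of the idea, not a context prediction algorithm from the cited works.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ContextRecord:
    activity: str       # recorded context, e.g. "workout"
    emotion_after: str  # emotion tag recorded after the activity

def p_emotion_after(history, activity, emotion):
    """Fraction of past records of `activity` that were followed by `emotion`."""
    relevant = [r for r in history if r.activity == activity]
    if not relevant:
        return 0.0
    return sum(r.emotion_after == emotion for r in relevant) / len(relevant)

def suggest(history, current_emotion, nearby_places, min_confidence=0.8):
    """Suggest a workout when the user is sad, a gymnasium is nearby, and past
    emotion-tagged contexts show workouts were usually followed by happiness."""
    if current_emotion == "sad" and "gymnasium" in nearby_places:
        p = p_emotion_after(history, "workout", "happy")
        if p >= min_confidence:
            return f"Suggest a workout: {p:.0%} of past workouts were followed by happiness"
    return None

# Hypothetical emotion-tagged history.
history = ([ContextRecord("workout", "happy")] * 4
           + [ContextRecord("workout", "neutral")]
           + [ContextRecord("commute", "sad")] * 3)
print(suggest(history, "sad", {"gymnasium", "cafe"}))
```

A deployed system would learn such associations from far richer contexts (location, time, weather, companions) and would need to handle sparse or biased emotional tags.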

4 Conclusions and future work

The number of users and the number of computers in the Ubiquitous Computing world have reached an unprecedented scale, and the associated interactions between multiple users and multiple computers at the same time have become a profound issue requiring a novel HCI solution. A menu-driven HCI approach requiring operational training will be ineffective for non-technical users, so NUIs have been promoted as the future interface for Ubiquitous Computing, where users interact with computers based on intuition. Some NUIs, especially gesture NUIs, are criticised for their artificial naturality, since they replace tangible interface devices such as the mouse and keyboard with gestures in a menu-driven approach. DUI, being unnoticeable by nature, is a logical provision for natural HCI that interacts intuitively with non-technical users without training. DUIs can pave a new path for NUIs to become more natural by hiding the interface from users and aiming to interact with them directly through contents based on natural human inputs and outputs. The application of DUIs not only provides intuitive natural human interaction but also extends human senses through context awareness technology. Understanding human inputs in detail, from stimulus to sensation to perception, and human outputs, from the brain to body responses, is necessary research for successful DUIs in Ubiquitous Computing. Research has shown that some human responses are not controlled by the conscious mind, such as pulse rate, breathing rate, body temperature, or even certain facial and gestural expressions; these responses are classified as behavioural and physiological responses promoted by human emotions. Emotional memory is first encoded onto the neural circuits through an emotional event stimulated by conditioned and unconditioned stimuli. This paper lays the groundwork for ongoing research on discovering the “conditioned contextual stimuli” for “conditioned emotional responses” using super senses.

Notes

References

Abdelnasser H, Youssef M, Harras KA (2015) WiGest: a ubiquitous WiFi-based gesture recognition system. arXiv:1501.04301

Akahori H, Furuhashi H, Shimizu M (2014) Direction control of a parametric speaker. In: Ultrasonics symposium (IUS), IEEE International, pp 2470–2473. doi:10.1109/ULTSYM.2014.0616

Alam KM, Rahman MA, Saddik AE, Gueaieb W (2011) Adding emotional tag to augment context-awareness in social network services. In: Instrumentation and measurement technology conference (I2MTC). IEEE, pp 1–6. doi:10.1109/IMTC.2011.5944225

Alberth W (2013) Coupling an electronic skin tattoo to a mobile communication device. United States Patent Application No. US 2013/0294617 A1

Amin HU, Malik AS, Mumtaz W, Badruddin N, Kamel N (2015) Evaluation of passive polarized stereoscopic 3D display for visual and mental fatigues. In: Engineering in medicine and biology society (EMBC), 37th annual international conference of the IEEE, pp 7590–7593. doi:10.1109/EMBC.2015.7320149

Arafsha F, Zhang L, Dong H, Saddik AE (2015) Contactless haptic feedback: state of the art. In: Haptic, audio and visual environments and games (HAVE), IEEE International Symposium on, pp 1–6. doi:10.1109/HAVE.2015.7359447

Ariyakul Y, Nakamoto T (2014) Improvement of miniaturized olfactory display using electroosmotic pumps and SAW device. In: TENCON IEEE Region 10 Conference, pp 1–5. doi:10.1109/TENCON.2014.7022484

Bazrafkan S, Kar A, Costache C (2015) Eye gaze for consumer electronics: controlling and commanding intelligent systems. Consum Electron Mag IEEE 4(4):65–71. doi:10.1109/MCE.2015.2464852

Bermejo F, Arias C (2015) Sensory substitution: an approach to the experimental study of perception/Sustitución sensorial: un abordaje para el estudio experimental de la percepción. Estudios de Psicología 36(2):240–265. doi:10.1080/02109395.2015.1026118

Betlehem T, Wen Z, Poletti MA, Abhayapala TD (2015) Personal Sound Zones: Delivering interface-free audio to multiple listeners. Signal Process Mag IEEE 32(2):81–91. doi:10.1109/MSP.2014.2360707

Bhowmik AK (2013) 39.2: invited paper: natural and intuitive user interfaces: technologies and applications. In: SID symposium digest of technical papers, vol 44, no 1, pp 544–546. doi:10.1002/j.2168-0159.2013.tb06266.x

Bo T, Woodbridge K, Chetty K (2014) A real-time high resolution passive WiFi Doppler-radar and its applications. In: Radar conference (Radar), international, pp 1–6. doi:10.1109/RADAR.2014.7060359

Boruah K, Dutta JC (2015) Twenty years of DNA computing: From complex combinatorial problems to the Boolean circuits. In: Electronic design, computer networks and automated verification (EDCAV), international conference, pp 52–57. doi:10.1109/EDCAV.2015.7060538

Braun MH, Cheok AD (2014) Towards an olfactory computer-dream interface. In: Proceedings of the 11th conference on advances in computer entertainment technology, ACM, pp 1–3. doi:10.1145/2663806.2663874

Brusie T, Fijal T, Keller A, Lauff C, Barker K, Schwinck J, Calland JF, Guerlain S (2015) Usability evaluation of two smart glass systems. In: Systems and information engineering design symposium (SIEDS), pp 336–341. doi:10.1109/SIEDS.2015.7117000

Cagniard B, Murphy NP (2013) Affective taste responses in the presence of reward- and aversion-conditioned stimuli and their relationship to psychomotor sensitization and place conditioning. Behav Brain Res 236:289–294. doi:10.1016/j.bbr.2012.08.021

Campbell I (2011) Body temperature and its regulation. Anaesth Intensive Care Med 12(6):240–244. doi:10.1016/j.mpaic.2011.03.002

Cecchi S, Romoli L, Gasparini M, Carini A, Bettarelli F (2015) An adaptive multichannel identification system for room response equalization. In: Electronics, computers and artificial intelligence (ECAI), 7th international conference, pp AF:17–21. doi:10.1109/ECAI.2015.7301215

Choi H, Mody CC (2009) The long history of molecular electronics: microelectronics origins of nanotechnology. Soc Stud Sci 39(1):11–50. doi:10.1177/0306312708097288

Craven BA, Hargather MJ, Volpe JA, Frymire SP, Settles GS (2014) Design of a high-throughput chemical trace detection portal that samples the aerodynamic wake of a walking person. IEEE Sens J 14(6):1852–1866. doi:10.1109/JSEN.2014.2304538

da Rosa JH, Barbosa JLV, Kich M, Brito L (2015) A multi-temporal context-aware system for competences management. Int J Artif Intell Educ 25(4):455–492. doi:10.1007/s40593-015-0047-y

da Rosa JH, Barbosa JLV, Ribeiro GD (2016) ORACON: an adaptive model for context prediction. Expert Syst Appl 45:56–70. doi:10.1016/j.eswa.2015.09.016

Das RN, Markovich VR (2010) Nanomaterials for electronic packaging: toward extreme miniaturization [Nanopackaging]. Nanotechnol Mag IEEE 4(4):18–26. doi:10.1109/MNANO.2010.938653

Degara N, Hunt A, Hermann T (2015) Interactive sonification [Guest editors’ introduction]. MultiMed IEEE 22(1):20–23. doi:10.1109/MMUL.2015.8

Dey AK, Abowd GD, Salber D (2001) A conceptual framework and a toolkit for supporting the rapid prototyping of context-aware applications. Hum Comput Interact 16(2–4):97–166. doi:10.1207/S15327051HCI16234_02

Dourish P, Bell G (2011) Divining a digital future: mess and mythology in ubiquitous computing. MIT Press, Cambridge, MA

Dublon G, Paradiso JA (2014) Extra Sensory Perception. Sci Am 311(1):36–41. doi:10.1038/scientificamerican0714-36

Dubois A, Bresciani JP (2015) Person identification from gait analysis with a depth camera at home. In: Engineering in medicine and biology society (EMBC), 37th annual international conference of the IEEE, pp 4999–5002. doi:10.1109/EMBC.2015.7319514

Dubois A, Charpillet F (2014) A gait analysis method based on a depth camera for fall prevention. In: Engineering in medicine and biology society (EMBC), 36th annual international conference of the IEEE, pp 4515–4518. doi:10.1109/EMBC.2014.6944627

Duy-Quoc L, Majumder A (2015) Interactive display conglomeration on the wall. In: Everyday virtual reality (WEVR), IEEE 1st Workshop, pp 5–9. doi:10.1109/WEVR.2015.7151687

Elhoushi M, Georgy J, Korenberg M, Noureldin A (2014) Robust motion mode recognition for portable navigation independent on device usage. In: Position, location and navigation symposium—PLANS IEEE/ION, pp 158–163. doi:10.1109/PLANS.2014.6851370

Fairhurst M, Erbilek M, Li C (2015) Study of automatic prediction of emotion from handwriting samples. IET Biom 4(2):90–97. doi:10.1049/iet-bmt.2014.0097

Fleury C, Ferey N, Vezien JM, Bourdot P (2015) Remote collaboration across heterogeneous large interactive spaces. In: Collaborative virtual environments (3DCVE), IEEE second VR international workshop, pp 9–10. doi:10.1109/3DCVE.2015.7153591

Fonollosa J, Rodriguez-Lujan I, Shevade AV, Homer ML, Ryan MA, Huerta R (2014) Human activity monitoring using gas sensor arrays. Sens Actuators B Chem 199:398–402. doi:10.1016/j.snb.2014.03.102

Furukawa M, Uema Y, Sugimoto M, Inami M (2010) Fur interface with bristling effect induced by vibration. In: Proceedings of the 1st augmented human international conference, ACM, pp 1–6. doi:10.1145/1785455.1785472

Gallo S, Son C, Lee HJ, Bleuler H, Cho IJ (2015) A flexible multimodal tactile display for delivering shape and material information. Sens Actuators A Phys 236:180–189. doi:10.1016/j.sna.2015.10.048

Giannoulis P, Brutti A, Matassoni M, Abad A, Katsamanis A, Matos M, Potamianos G, Maragos P (2015) Multi-room speech activity detection using a distributed microphone network in domestic environments. In: Signal processing conference (EUSIPCO), 23rd European, pp 1271–1275. doi:10.1109/EUSIPCO.2015.7362588

Harrison C, Faste H (2014) Implications of location and touch for on-body projected interfaces. In: Proceedings of the 2014 conference on designing interactive systems, ACM, pp 543–552. doi:10.1145/2598510.2598587

Hashimoto K, Nakamoto T (2015) Stabilization of SAW atomizer for a wearable olfactory display. In: Ultrasonics symposium (IUS), IEEE International, pp 1–4. doi:10.1109/ULTSYM.2015.0355

Hauner KK, Howard JD, Zelano C, Gottfried JA (2013) Stimulus-specific enhancement of fear extinction during slow-wave sleep. Nat Neurosci 16(11):1553–1555. doi:10.1038/nn.3527

Henshaw JM (2012) A tour of the senses: how your brain interprets the world. JHU Press, Baltimore, MD

Hirschberg J, Manning CD (2015) Advances in natural language processing. Science 349(6245):261–266. doi:10.1126/science.aaa8685

Hojin L, Ji-Sun K, Seungmoon C, Jae-Hoon J, Jong-Rak P, Kim AH, Han-Byeol O, Hyung-Sik K, Soon-Cheol C (2015) Mid-air tactile stimulation using laser-induced thermoelastic effects: The first study for indirect radiation. In: World haptics conference (WHC), IEEE, pp 374–380. doi:10.1109/WHC.2015.7177741

Holler J, Tsiatsis V, Mulligan C, Avesand S, Karnouskos S, Boyle D (2014) From machine-to-machine to the internet of things: introduction to a new age of intelligence. Academic Press, Waltham, MA

Hong J, Kim Y, Choi HJ, Hahn J, Park JH, Kim H, Min SW, Chen N, Lee B (2011) Three-dimensional display technologies of recent interest: principles, status, and issues [Invited]. Appl Opt 50(34):H87–H115. doi:10.1364/AO.50.000H87

Hu S, Choi J, Chan AL, Schwartz WR (2015) Thermal-to-visible face recognition using partial least squares. J Opt Soc Am A 32(3):431–442. doi:10.1364/JOSAA.32.000431

Huang A (2015) Moore’s law is dying (and that could be good). Spectr IEEE 52(4):43–47. doi:10.1109/MSPEC.2015.7065418

Humes LE (2015) Age-related changes in cognitive and sensory processing: focus on middle-aged adults. Am J Audiol 24(2):94–97. doi:10.1044/2015_AJA-14-0063

Hyoung L, Young-Sub S, Sung-Kyu K, Kwang-Hoon S (2013) Projection type multi-view 3D display system with controllable crosstalk in a varying observing distance. In: Information optics (WIO), 12th workshop, pp 1–4. doi:10.1109/WIO.2013.6601263

Ig Mo K, Kwangmok J, Ja Choon K, Nam JD, Young Kwan L, Hyouk Ryeol C (2008) Development of soft-actuator-based wearable tactile display. Robot IEEE Trans 24(3):549–558. doi:10.1109/TRO.2008.921561

Inoue S, Makino Y, Shinoda H (2015) Active touch perception produced by airborne ultrasonic haptic hologram. In: World haptics conference (WHC), IEEE, pp 362–367. doi:10.1109/WHC.2015.7177739

Ishi CT, Even J, Hagita N (2015) Speech activity detection and face orientation estimation using multiple microphone arrays and human position information. In: Intelligent robots and systems (IROS), IEEE/RSJ international conference, pp 5574–5579. doi:10.1109/IROS.2015.7354167

Ishikawa H, Saito H (2008) Point cloud representation of 3D shape for laser-plasma scanning 3D display. In: Industrial electronics. IECON. 34th annual conference of IEEE, pp 1913–1918. doi:10.1109/IECON.2008.4758248

Ishizuka H, Miki N (2015) MEMS-based tactile displays. Displays 37:25–32. doi:10.1016/j.displa.2014.10.007

Iwamoto T, Tatezono M, Shinoda H (2008) Non-contact method for producing tactile sensation using airborne ultrasound. In: Haptics: perception, devices and scenarios, pp 504–513. doi:10.1007/978-3-540-69057-3_64

Jae Seok J, Gi Sook J, Tae Hwan L, Soon Ki J (2014) Two-phase calibration for a mirror metaphor augmented reality system. Proc IEEE 102(2):196–203. doi:10.1109/JPROC.2013.2294253

Jun JH, Park JR, Kim SP, Min Bae Y, Park JY, Kim HS, Choi S, Jung SJ, Hwa Park S, Yeom DI, Jung GI, Kim JS, Chung SC (2015) Laser-induced thermoelastic effects can evoke tactile sensations. Sci Rep 5:11016. doi:10.1038/srep11016

Kadowaki A, Sato J, Bannai Y, Okada K (2007) Presentation technique of scent to avoid olfactory adaptation. In: Artificial reality and telexistence, 17th international conference, pp 97–104. doi:10.1109/ICAT.2007.8

Kaeppler K, Mueller F (2013) Odor classification: a review of factors influencing perception-based odor arrangements. Chem Senses 38(3):189–209. doi:10.1093/chemse/bjs141

Kastner AK, Flohr ELR, Pauli P, Wieser MJ (2016) A scent of anxiety: olfactory context conditioning and its influence on social cues. Chem Senses 41(2):143–153. doi:10.1093/chemse/bjv067

Kea-Tiong T, Shih-Wen C, Meng-Fan C, Chih-Cheng H, Shyu JM (2011) A wearable Electronic Nose SoC for healthier living. In: Biomedical circuits and systems conference (BioCAS), IEEE, pp 293–296. doi:10.1109/BioCAS.2011.6107785

Kelly KF, Mody CCM (2015) The booms and busts of molecular electronics. Spectr IEEE 52(10):52–60. doi:10.1109/MSPEC.2015.7274196

Kerkhof I, Vansteenwegen D, Baeyens F, Hermans D (2011) Counterconditioning: an effective technique for changing conditioned preferences. Exp Psychol 58(1):31–38. doi:10.1027/1618-3169/a000063

Kim SE, Kang TW, Hwang JH, Kang SW, Park KH, Son SW (2013) An innovative hearing system utilizing the human body as a transmission medium. In: Communications (APCC), 19th Asia-Pacific conference, pp 479–484. doi:10.1109/APCC.2013.6765995

Kondyli A, Sisiopiku VP, Liangke Z, Barmpoutis A (2015) Computer assisted analysis of drivers’ body activity using a range camera. Intell Transp Syst Mag IEEE 7(3):18–28. doi:10.1109/MITS.2015.2439179

Kortum P (2008) HCI beyond the GUI: Design for haptic, speech, olfactory, and other nontraditional interfaces. Morgan Kaufmann, Burlington, MA

Kreibig SD (2010) Autonomic nervous system activity in emotion: a review. Biol Psychol 84(3):394–421. doi:10.1016/j.biopsycho.2010.03.010

Kyungsu K, Benini L, De Micheli G (2015) Cost-effective design of mesh-of-tree interconnect for multicore clusters with 3-D stacked L2 scratchpad memory. Very Large Scale Integr (VLSI) Syst IEEE Trans 23(9):1828–1841. doi:10.1109/TVLSI.2014.2346032

LaBar KS, Cabeza R (2006) Cognitive neuroscience of emotional memory. Nat Rev Neurosci 7(1):54–64. doi:10.1038/nrn1825

Li S (2009) Overview of odor detection instrumentation and the potential for human odor detection in air matrices. Tech. rep., MITRE Nanosystems Group. http://www.mitre.org/sites/default/files/pdf/09_4536.pdf

Lim YK (2012) Disappearing interfaces. Interactions 19(5):36–39. doi:10.1145/2334184.2334194

Macaranas A, Antle AN, Riecke BE (2015) What is intuitive interaction? Balancing users’ performance and satisfaction with natural user interfaces. Interact Comput 27(3):357–370. doi:10.1093/iwc/iwv003

Mack C (2015) The multiple lives of Moore’s law. Spectr IEEE 52(4):31–31. doi:10.1109/MSPEC.2015.7065415

Malizia A, Bellucci A (2012) The artificiality of natural user interfaces. Commun ACM 55(3):36–38. doi:10.1145/2093548.2093563

Mandal S, Turicchia L, Sarpeshkar R (2009) A battery-free tag for wireless monitoring of heart sounds. In: Wearable and implantable body sensor networks (BSN), sixth international workshop, pp 201–206. doi:10.1109/BSN.2009.11

Matsukura H, Yoneda T, Ishida H (2013) Smelling screen: development and evaluation of an olfactory display system for presenting a virtual odor source. Vis Comput Graph IEEE Trans 19(4):606–615. doi:10.1109/TVCG.2013.40

Mavadati SM, Mahoor MH, Bartlett K, Trinh P, Cohn JF (2013) DISFA: a spontaneous facial action intensity database. Affect Comput IEEE Trans 4(2):151–160. doi:10.1109/T-AFFC.2013.4

Minglei S, Yunxiang L, Hua F (2014) Identification authentication scheme using human body odour. In: Control science and systems engineering (CCSSE), IEEE international conference, pp 171–174. doi:10.1109/CCSSE.2014.7224531

Mistry P, Maes P, Chang L (2009) WUW—wear Ur world: a wearable gestural interface. In: CHI’09 extended abstracts on human factors in computing systems, ACM, pp 4111–4116. doi:10.1145/1520340.1520626

Miu-Ling L, Bin C, Yaozhung H (2015) A novel volumetric display using fog emitter matrix. In: Robotics and automation (ICRA), IEEE international conference, pp 4452–4457. doi:10.1109/ICRA.2015.7139815

Mujibiya A (2015) Haptic feedback companion for body area network using body-carried electrostatic charge. In: Consumer electronics (ICCE), IEEE international conference, pp 571–572. doi:10.1109/ICCE.2015.7066530

Nakamoto T, Nihei Y (2013) Improvement of odor approximation using mass spectrometry. Sens J IEEE 13(11):4305–4311. doi:10.1109/JSEN.2013.2267728

Nakamoto T, Murakami K (2009) Selection method of odor components for olfactory display using mass spectrum database. In: Virtual reality conference, IEEE, pp 159–162. doi:10.1109/VR.2009.4811016

Nakayama Y, Guanghao S, Abe S, Matsui T (2015) Non-contact measurement of respiratory and heart rates using a CMOS camera-equipped infrared camera for prompt infection screening at airport quarantine stations. In: Computational intelligence and virtual environments for measurement systems and applications (CIVEMSA), IEEE international conference, pp 1–4. doi:10.1109/CIVEMSA.2015.7158595

Nardini M, Bedford R, Mareschal D (2010) Fusion of visual cues is not mandatory in children. Proc Natl Acad Sci 107(39):17041–17046. doi:10.1073/pnas.1001699107

Narumi T, Kajinami T, Nishizaka S, Tanikawa T, Hirose M (2011) Pseudo-gustatory display system based on cross-modal integration of vision, olfaction and gustation. In: Virtual reality conference (VR), IEEE, pp 127–130. doi:10.1109/VR.2011.5759450

Nijholt A, Stock O, Strapparava C, Ritchie G, Manurung R, Waller A (2006) Computational humor. Intell Syst IEEE 21(2):59–69. doi:10.1109/MIS.2006.22

Nijholt A (2014) Towards humor modelling and facilitation in smart environments. In: Advances in affective and pleasurable design, pp 2997–3006. doi:10.1109/SIoT.2014.8

Norman DA (2010) Natural user interfaces are not natural. Interactions 17(3):6–10. doi:10.1145/1744161.1744163

Norman DA, Nielsen J (2010) Gestural interfaces: a step backward in usability. Interactions 17(5):46–49. doi:10.1145/1836216.1836228

Pfab I, Willemse CJAM (2015) Design of a wearable research tool for warm mediated social touches. In: Affective computing and intelligent interaction (ACII), international conference, IEEE, pp 976–981. doi:10.1109/ACII.2015.7344694

Picard RW (1997) Affective computing, vol 252. MIT Press, Cambridge

Qiang X, Li J, Huiyun L, Eklow B (2012) Yield enhancement for 3D-stacked ICs: recent advances and challenges. In: Design automation conference (ASP-DAC), 17th Asia and South Pacific, pp 731–737. doi:10.1109/ASPDAC.2012.6165052

Qiu L, Jin T, Lu B, Zhou Z (2015) Detection of micro-motion targets in buildings for through-the-wall radar. In: Radar conference (EuRAD), European, pp 209–212. doi:10.1109/EuRAD.2015.7346274

Rahman T, Adams AT, Zhang M, Cherry E, Choudhury T (2015) BodyBeat: eavesdropping on our body using a wearable microphone. In: GetMobile: mobile computing and communications, vol 19, no 1, pp 14–17. doi:10.1145/2786984.2786989

Rakkolainen I, Sand A, Palovuori K (2015) Midair user interfaces employing particle screens. Comput Graph Appl IEEE 35(2):96–102. doi:10.1109/MCG.2015.39

Ranasinghe N, Nakatsu R, Nii H, Gopalakrishnakone P (2012) Tongue mounted interface for digitally actuating the sense of taste. In: Wearable computers (ISWC), 16th international symposium, pp 80–87. doi:10.1109/ISWC.2012.16

Ranasinghe N, Suthokumar G, Lee KY, Do EYL (2015) Digital flavor: towards digitally simulating virtual flavors. In: Proceedings of the ACM on international conference on multimodal interaction, pp 139–146. doi:10.1145/2818346.2820761

Ringeval F, Eyben F, Kroupi E, Yuce A, Thiran JP, Ebrahimi T, Lalanne D, Schuller B (2015) Prediction of asynchronous dimensional emotion ratings from audiovisual and physiological data. Pattern Recognit Lett 66:22–30. doi:10.1016/j.patrec.2014.11.007

Roseboom W, Linares D, Nishida S (2015) Sensory adaptation for timing perception. Proc R Soc Lond B Biol Sci 282(1805). doi:10.1098/rspb.2014.2833

Samani H, Teh J, Saadatian E, Nakatsu R (2013) XOXO: haptic interface for mediated intimacy. In: Next-generation electronics (ISNE), IEEE international symposium, pp 256–259. doi:10.1109/ISNE.2013.6512342

Sato J, Ohtsu K, Bannai Y, Okada K (2009) Effective presentation technique of scent using small ejection quantities of odor. In: Virtual reality conference, IEEE, pp 151–158. doi:10.1109/VR.2009.4811015

Savage N (2012) Electronic cotton. Spectr IEEE 49(1):16–18. doi:10.1109/MSPEC.2012.6117819

Seesaard T, Seaon S, Lorwongtragool P, Kerdcharoen T (2014) On-cloth wearable E-nose for monitoring and discrimination of body odor signature. In: Intelligent sensors, sensor networks and information processing (ISSNIP), IEEE ninth international conference, pp 1–5. doi:10.1109/ISSNIP.2014.6827634

Shackel B (1997) Human-computer interaction-whence and whither? J Am Soc Inf Sci 48(11):970–986. doi:10.1002/(SICI)1097-4571(199711)48:11<970:AID-ASI2>3.0.CO;2-Z

Shirasu M, Touhara K (2011) The scent of disease: volatile organic compounds of the human body related to disease and disorder. J Biochem 150(3):257–266. doi:10.1093/jb/mvr090

Skrandies W, Zschieschang R (2015) Olfactory and gustatory functions and its relation to body weight. Physiol Behav 142:1–4. doi:10.1016/j.physbeh.2015.01.024

Soda S, Izumi S, Nakamura M, Kawaguchi H, Matsumoto S, Yoshimoto M (2013) Introducing multiple microphone arrays for enhancing smart home voice control. Technical report of IEICE EA 112(388):19–24. http://ci.nii.ac.jp/naid/110009727674/en/

Sodhi R, Poupyrev I, Glisson M, Israr A (2013) AIREAL: interactive tactile experiences in free air. ACM Trans Graph (TOG) 32(4):134:1–10. doi:10.1145/2461912.2462007

Srinivasan S, Raj B, Ezzat T (2010) Ultrasonic sensing for robust speech recognition. In: Acoustics speech and signal processing (ICASSP), IEEE international conference, pp 5102–5105. doi:10.1109/ICASSP.2010.5495039

Strickland E (2016) DNA manufacturing enters the age of mass production. Spectr IEEE 53(1):55–56. doi:10.1109/MSPEC.2016.7367469

Sung-Eun K, Taewook K, Junghwan H, Sungweon K, Kyunghwan P (2014) Sound transmission through the human body with digital weaver modulation (DWM) method. In: Systems conference (SysCon), 8th annual IEEE, pp 176–179. doi:10.1109/SysCon.2014.6819254

Sunghyun P, Scherer S, Gratch J, Carnevale P, Morency L (2015) I can already guess your answer: predicting respondent reactions during dyadic negotiation. Affect Comput IEEE Trans 6(2):86–96. doi:10.1109/TAFFC.2015.2396079

Tasaka T, Hamada N (2012) Speaker dependent visual word recognition by using sequential mouth shape codes. In: Intelligent signal processing and communications systems (ISPACS), international symposium, pp 96–101. doi:10.1109/ISPACS.2012.6473460

Tasli HE, den Uyl TM, Boujut H, Zaharia T (2015) Real-time facial character animation. In: Automatic face and gesture recognition (FG), 11th IEEE international conference and workshops, vol 1, pp 1–1. doi:10.1109/FG.2015.7163173

Teh JKS, Cheok AD, Peiris RL, Choi Y, Thuong V, Lai S (2008) Huggy Pajama: a mobile parent and child hugging communication system. In: Proceedings of the 7th international conference on interaction design and children, ACM, pp 250–257. doi:10.1145/1463689.1463763

Thomas H, Hunt A, Neuhoff J (2011) The sonification handbook. Logos Verlag, Berlin

Toda T, Nakagiri M, Shikano K (2012) Statistical voice conversion techniques for body-conducted unvoiced speech enhancement. Audio Speech Lang Process IEEE Trans 20(9):2505–2517. doi:10.1109/TASL.2012.2205241

Trawicki MB, Johnson MT, An J, Osiejuk TS (2012) Multichannel speech recognition using distributed microphone signal fusion strategies. In: Audio, language and image processing (ICALIP), international conference, pp 1146–1150. doi:10.1109/ICALIP.2012.6376789

Turan MAT, Erzin E (2016) Source and filter estimation for throat-microphone speech enhancement. Audio Speech Lang Process IEEE/ACM Trans 24(2):265–275. doi:10.1109/TASLP.2015.2499040

Vaz A, Ubarretxena A, Zalbide I, Pardo D, Solar H, Garcia-Alonso A, Berenguer R (2010) Full passive UHF tag with a temperature sensor suitable for human body temperature monitoring. Circuits Syst II Express Briefs IEEE Trans 57(2):95–99. doi:10.1109/TCSII.2010.2040314

Voelker C, Maempel S, Kornadt O (2014) Measuring the human body’s microclimate using a thermal manikin. Indoor Air 24(6):567–579. doi:10.1111/ina.12112

Wade NJ (2003) The search for a sixth sense: The cases for vestibular, muscle, and temperature senses. J Hist Neurosci 12(2):175–202. doi:10.1076/jhin.12.2.175.15539

Watson JB, Rayner R (2000) Conditioned emotional reactions. Am Psychol 55(3):313–317. doi:10.1037/0003-066X.55.3.313

Webster MA (2012) Evolving concepts of sensory adaptation. F1000 Biol Rep 4:21. doi:10.3410/B4-21

Weerasinghe P, Marasinghe A, Ranaweera R, Amarakeerthi S, Cohen M (2014) Emotion expression for affective social communication. Int J Affect Eng 13(4):261–268. doi:10.5057/ijae.13.268

Weiser M (1991) The computer for the 21st century. Sci Am 265(3):94–104. doi:10.1038/scientificamerican0991-94

Wiesmeier IK, Dalin D, Maurer C (2015) Elderly use proprioception rather than visual and vestibular cues for postural motor control. Front Aging Neurosci 7:97. doi:10.3389/fnagi.2015.00097

Woon-Seng G, Ee-Leng T, Kuo SM (2011) Audio projection. Signal Process Mag IEEE 28(1):43–57. doi:10.1109/MSP.2010.938755

Yadav J, Rao KS (2015) Generation of emotional speech by prosody imposition on sentence, word and syllable level fragments of neutral speech. In: Cognitive computing and information processing (CCIP), international conference, pp 1–5. doi:10.1109/CCIP.2015.7100694

Yamamoto G, Hyry J, Krichenbauer M, Taketomi T, Sandor C, Kato H, Pulli P (2015) A user interface design for the elderly using a projection tabletop system. In: Virtual and augmented assistive technology (VAAT), 3rd IEEE VR international workshop, pp 29–32. doi:10.1109/VAAT.2015.7155407

Yamauchi H, Uchiyama H, Ohtani N, Ohta M (2014) Unusual animal behavior preceding the 2011 earthquake off the Pacific coast of Tohoku, Japan: a way to predict the approach of large earthquakes. Animals 4(2):131–145. doi:10.3390/ani4020131

Yanagida Y, Kajima M, Suzuki S, Yoshioka Y (2013) Pilot study for generating dynamic olfactory field using scent projectors. In: Virtual reality (VR), IEEE, pp 151–152. doi:10.1109/VR.2013.6549407

Zhen B, Blackwell AF (2013) See-through window vs. magic mirror: a comparison in supporting visual-motor tasks. In: Mixed and augmented reality (ISMAR), IEEE international symposium, pp 239–240. doi:10.1109/ISMAR.2013.6671784

Zhexiang C, Hanjun J, Jingpei X, Heng L, Zhaoyang W, Jingjing D, Kai Y, Zhihua W (2014) A smart capsule for in-body pH and temperature continuous monitoring. In: Circuits and systems (MWSCAS), IEEE 57th international Midwest symposium, pp 314–317. doi:10.1109/MWSCAS.2014.6908415

Zysset C, Munzenrieder N, Kinkeldei T, Cherenack K, Troster G (2012) Woven active-matrix display. Electron Devices IEEE Trans 59(3):721–728. doi:10.1109/TED.2011.2180724
