Abstract

In complex visual representations, visual perception faces several challenges that might be eased by adding sound as a second modality (i.e. sonification). It was hypothesized that sonification would support visual perception when facing challenges such as simultaneous brightness contrast or the Mach band phenomenon. This hypothesis was investigated with an interactive sonification test, yielding objective measures (accuracy and response time) as well as subjective measures of sonification benefit. In the test, the participant’s task was to mark the vertical pixel line having the highest intensity level. This was done in a condition without sonification and in three conditions where the intensity level was mapped to different musical elements. The results showed a benefit of sonification, with higher accuracy when sonification was used than without it. This result was also supported by the subjective measures. The results also showed longer response times when sonification was used, suggesting that using and processing the additional information took more time, leading to longer response times but also higher accuracy. There were no significant differences between the three sonification conditions.

1 Introduction

Many research disciplines produce data, ranging from static data to dynamic temporal data sets. It is common to use different visual representations to present this information, either to facilitate data exploration for researchers or in outreach activities for sharing information with fellow researchers or the public. Employing visualization also entails exposure to general challenges in visual perception. One of these challenges is known as simultaneous brightness contrast [36], as shown in Fig. 1. Simultaneous brightness contrast occurs when a colored patch with a fixed luminance is perceived as brighter when it is placed in a region surrounded by dark hues than when it is placed in a region with overall lighter hues. This in turn distorts the perception of density, strength, or amount in data encoded as intensity levels in visual representations. As differences in these levels might be essential for understanding and interpreting a visualization correctly, simultaneous brightness contrast might be a considerable impediment to visual data exploration. Another challenge for human visual perception is the Mach band phenomenon [19], as shown in Fig. 2. The Mach band phenomenon occurs at boundaries between colors, or between shades of colors, where a gradient is perceived just next to the boundary even though the actual color is solid. This also negatively affects the perception of the visual representation.

Visualization is often applied to data of large size and complex structure. To facilitate perception of such visual representations, it is common to employ renderings based on data density [6]. This is typically achieved by rendering semi-transparent objects and additively blending them together, so that more than one data point can be shown at the same position in the visual representation. However, density-based rendering has the drawback that it is difficult to perceive the actual number of blended data points, as the intensity level (luminance) might become saturated. Also, as discussed above, simultaneous brightness contrast and the Mach band phenomenon further add to the challenges of interpreting and understanding the data in the visual representation.
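To make the saturation problem concrete, the sketch below models additive blending on an 8-bit intensity channel. It is only an illustration: the per-point contribution of 25 levels and the function name `blended_level` are hypothetical, not taken from any particular renderer.

```python
def blended_level(n_points, per_point=25, max_level=255):
    """Intensity after additively blending n_points semi-transparent points.

    Each point adds a fixed (hypothetical) amount; the result is clamped to
    the 8-bit maximum, which is where saturation hides further points.
    """
    level = 0
    for _ in range(n_points):
        level = min(max_level, level + per_point)
    return level


for n in (5, 10, 11, 20):
    print(n, blended_level(n))
```

Under these assumptions, 11 and 20 overlapping points both render at level 255 and cannot be told apart by luminance alone.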

An example of simultaneous brightness contrast, where the gradient from white to black in the background affects the perceived intensity of the four squares. Even though the four squares have the same hue and the same intensity, the one to the left is (usually) perceived as darker than the one to the right

Shortcomings related to the inherent function of visual perception can never be overcome by visualization alone. However, they could be addressed by adding sound as a complementary modality, for example to sonify density levels. Sonification is a rather new and emerging research area that focuses primarily on turning data into sound [11]. The reason to use sonification is to enhance and clarify visual representations of data, and to make them easier to understand. Auditory display, and sonification, could be considered a complementary modality to the visual modality [9, 11, 24], and the aural modality could be described as an additional sensory channel (i.e. the ears) providing further input and information [11, 34]. By combining the visual and the aural modalities, it should be possible to design multimodal visual representations that are more effective and efficient than those using visual stimuli alone [31].

Even though the combination of sonification and data exploration is not new (see for example [8]), there are only a few evaluations of visualization and sonification used in combination, for example for depth of market stock data [22], to augment 3D visualization [17], and to enhance visualization of molecular simulations [25]. These studies suggest that there is a benefit of sonification in connection to visualization. Some research has considered sonification in connection to data exploration and scatter plots [7, 26]. Even though neither of these two studies evaluated simultaneous visual and sonic representation of data, they likewise point to a benefit of sonification. Similarly, a beneficial effect of sonification was found when sonification was used for data exploration in scatter plots and parallel coordinates [27, 28].

There are different approaches to sonification and to the use of sound in it. For example, a data set might be converted directly to a sound wave, or a histogram can be translated into frequencies [11, 24]. It might be questioned how intuitive and pleasant this type of sonification is, and to what extent it is able to convey information and meaning to a listener, at least an inexperienced one. Some studies suggest that natural real-world sounds forming auditory icons [10], like rain and thunder to sonify weather data, might be used in sonification for monitoring and control (see for example [3, 13, 16]). However, it is doubtful whether auditory icons would be useful for all types of visual representations or data sets, as more complex sonifications with many combined auditory icons might result in noise rather than a soundscape with meaningful holistic characteristics.

Sonification can also use musical sounds, which is interesting as musical elements give good control over the design of the sounds and enable potentially useful musical components such as timbre, harmonics, melody, rhythm, tempo, and amplitude (e.g. [4, 14, 18, 32]). Musical sounds here refer to deliberately designed and composed sounds, based on a music-theoretical and aesthetic approach. Previous studies have shown promising results for the concept of musical sonification [28, 30].

There are a number of musical elements [4, 18, 32] that might be utilized in sonification. The key is the combination of tones that form chords producing different harmonies; traditionally, a major chord is perceived as more positive than a minor chord. The harmonic progression, i.e. the sequence of chords, creates the overall impression of the key. More complex harmonies might be more captivating for a listener than simpler harmonies [12], and dissonant chords are experienced as more unpleasant than harmonious major or minor chords [23]. Timbre is the “color” of the total sound, built up by the different sounds and their inherent characteristics; softer and duller timbres are experienced as more negative than brighter timbres [14]. Pitch is the subjective perception of frequency, where higher pitch (i.e. brighter tones) as well as lower pitch (i.e. lower tones) are more activating for the listener than tones in the middle register, and the melody is the sequential contour of the pitches used, where upward melodies are generally more positive than downward melodic movements [14]. Meter, rhythm, and tempo are closely linked: meter is the basic rhythmic pattern of beats in a piece of music, rhythm is the movement in time in which musical elements take place, and tempo is the pace at which the beats occur. A fast-paced rhythm tends to be more activating for a listener than a slower one [12]. Finally, sound level is the amplitude of the sound, where a louder sound level might give a higher activity in the listener than a lower sound level [12, 14]. Designing a sonification using musical elements and sounds according to the above discussion makes it possible to create an emergent musical timbre that should be able to convey different aspects of information even in complex visual representations.
Furthermore, musical sounds are well adapted, at least on a general level, to convey meaning, information, and emotions (see for example the discussions in [1, 5, 15, 29]), and musical structures can convey a multitude of information to listeners quickly and intuitively [35]. This suggests that musical sounds are well suited for sonification, and this sonification approach will hereafter be referred to as musical sonification.

The aim of this study is to explore the use of composed and deliberately designed musical sounds in connection to data visualization, to reduce a possible negative effect of simultaneous brightness contrast and the Mach band phenomenon. These sounds are used to sonify intensity levels, i.e. brightness, in visual representations constructed to mimic part of a complex “real world” visualization. The main research interest is to investigate whether there is a benefit of musical sonification, and to what extent two different musical elements, the timbre (i.e. the frequency components of the sonification) and the sound level (i.e. the amplitude of the sonification), as well as the combination of the two, contribute to a possible benefit. It is hypothesized that sonification will facilitate identifying the highest density level, represented by the region with the highest intensity in the visual representation.

2 Method

To address this hypothesis, an interactive test using musical sonification was devised to investigate: (1) whether there is a benefit of musical sonification when exploring visual representations designed to illustrate different density levels and simultaneous brightness contrast, and (2) which of the three suggested musical sonification settings (timbre, sound level, and the combination of the two) is most effective for sonifying the visual representations.

2.1 The visual representations

The visual representations (see example in Fig. 3) were designed to mimic a complex visualization of data. They contained visual elements that created challenges for visual perception, such as simultaneous brightness contrast and the Mach band phenomenon. The visual representations were created in Matlab (R2016a) as a variant of a sine wave grating: two sinusoids of different frequencies were mixed, random ripples smoothed with a Gaussian filter were added to the mixed sinusoids, and finally a triangle wave was added to create a peak level in the combined wave form. Each wave form was made unique by circularly shifting the elements in the array containing the sinusoids, by the randomness of the ripples, and by variations in the slope and magnitude of the triangle wave. The parameters were changed within sets of ten wave forms, creating wave forms with different difficulty levels for identifying the highest peak, but with a balanced difficulty level within each set of ten images. In total, 90 images were created with an overall balanced difficulty level. Each wave form was then scaled to 8-bit integers and saved as intensity levels in the green channel of 24-bit RGB images in PNG format (Portable Network Graphics). The green channel was used because human visual perception is most sensitive to contrasts between no light, i.e. black, and green at full luminance, since green has a higher perceived brightness (luminance) than red or blue of equal power [2, 33].
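The generation steps above can be sketched in Python with NumPy. This is not the authors' Matlab code: the image size, sinusoid frequencies, ripple strength, Gaussian width, and triangle-peak parameters are all hypothetical placeholders, and the Gaussian filter is implemented as a plain 1-D convolution.

```python
import numpy as np


def make_stimulus(width=512, height=256, seed=0, freqs=(3.0, 7.0),
                  ripple_sigma=4.0, ripple_amp=0.2, peak_center=0.6,
                  peak_halfwidth=0.03, peak_amp=0.5, shift=0):
    """Sketch of a sine-wave-grating stimulus (hypothetical parameter values).

    Returns (image, target): image is an (height, width, 3) uint8 RGB array
    whose green channel holds one intensity per vertical pixel line, and
    target is the index of the brightest vertical line.
    """
    rng = np.random.default_rng(seed)
    x = np.linspace(0.0, 1.0, width, endpoint=False)

    # Mix two sinusoids of different frequencies, then shift them circularly
    # so that each image in a set has a different layout.
    wave = np.sin(2 * np.pi * freqs[0] * x) + 0.5 * np.sin(2 * np.pi * freqs[1] * x)
    wave = np.roll(wave, shift)

    # Random ripples smoothed with a Gaussian kernel (a 1-D Gaussian filter).
    half = int(4 * ripple_sigma)
    k = np.arange(-half, half + 1)
    kernel = np.exp(-0.5 * (k / ripple_sigma) ** 2)
    kernel /= kernel.sum()
    ripples = np.convolve(rng.standard_normal(width), kernel, mode="same")
    wave += ripple_amp * ripples / np.abs(ripples).max()

    # A single triangular bump that raises one region to a peak level.
    tri = np.clip(1.0 - np.abs(x - peak_center) / peak_halfwidth, 0.0, None)
    wave += peak_amp * tri

    # Scale to 8-bit integers (0-255).
    levels = np.round(255 * (wave - wave.min()) / (wave.max() - wave.min()))
    levels = levels.astype(np.uint8)

    # Store the levels in the green channel only; each column is one vertical line.
    image = np.zeros((height, width, 3), dtype=np.uint8)
    image[:, :, 1] = levels  # broadcasts the 1-D row down all rows
    return image, int(np.argmax(levels))
```

Calling `make_stimulus(seed=1, shift=40)` returns the image and the column index of the brightest line, i.e. the ground truth for one trial. Saving it as PNG could then be done with an image library, for example Pillow's `Image.fromarray(img).save("stimulus.png")`.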

2.2 The sonification

The sonification used for this study was created in SuperCollider (3.8.0), an environment and programming language for real-time audio synthesis [20, 21]. A synth definition was used that consisted of seven triangle waves, somewhat detuned around the fundamental frequency (−6, −4, −2, +2, +4, and +6 cents relative to the fundamental frequency) to create a richer harmonic content. The cent is a logarithmic unit of pitch interval in which each semitone is divided into 100 cents. Eleven instances of the synth definition were used to form a rather large C major chord consisting of eleven tones (see Table 1). These tones were chosen with a musical approach to sonification in mind. The chord was mixed with pink noise at a low sound level to further ensure a broadband sound for the sonification, while retaining a pleasant harmonic content.
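The arithmetic behind the detuned oscillators can be illustrated with a short Python sketch: a shift of c cents multiplies a frequency by 2^(c/1200). The text lists six offsets for seven oscillators, so the sketch assumes the seventh sits exactly on the fundamental (0 cents); the eleven-note voicing is a hypothetical stand-in for the tones in Table 1.

```python
# Cents offsets of the seven detuned triangle oscillators in one synth
# instance. Six offsets are given in the text; the seventh oscillator is
# assumed here to sit exactly on the fundamental (0 cents).
DETUNE_CENTS = (-6, -4, -2, 0, 2, 4, 6)

# Hypothetical eleven-note C major voicing as MIDI note numbers
# (C2 G2 C3 E3 G3 C4 E4 G4 C5 E5 G5); the study's tones are given in its Table 1.
C_MAJOR_NOTES = (36, 43, 48, 52, 55, 60, 64, 67, 72, 76, 79)


def cents_to_ratio(cents):
    """Frequency ratio of an interval in cents (100 cents = 1 semitone)."""
    return 2.0 ** (cents / 1200.0)


def midi_to_hz(note):
    """Equal-tempered frequency of a MIDI note number (A4 = 69 = 440 Hz)."""
    return 440.0 * 2.0 ** ((note - 69) / 12.0)


def oscillator_freqs(fundamental_hz, offsets=DETUNE_CENTS):
    """Frequencies of the detuned oscillators around one fundamental."""
    return [fundamental_hz * cents_to_ratio(c) for c in offsets]


chord = [oscillator_freqs(midi_to_hz(n)) for n in C_MAJOR_NOTES]  # 11 x 7 oscillators
for row in chord[:3]:
    print([round(f, 2) for f in row])
```

At middle C (about 261.6 Hz), a ±6 cent offset corresponds to less than 1 Hz, so the detuned copies drift slowly against each other rather than sounding like separate notes.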

2.3 Mapping the sonification to the visual representation

Two aspects of the composed sound were modulated according to the visual representation: amplitude and cutoff frequency. The intensity level of the pixels in the green RGB channel of the visual representation (see Fig. 3) was used to control the sound modulation. For the filter modulation (hereafter referred to as BPF), the sound passed through a second-order band pass filter with a Q value of 0.5, where Q is conventionally defined as the center frequency (here called the cutoff frequency) divided by the bandwidth. The cutoff frequency was mapped via a linear-to-exponential conversion, where the lowest intensity level generated a cutoff frequency of 100 Hz and the highest intensity level yielded a cutoff frequency of 6000 Hz.
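A linear-to-exponential conversion of this kind can be sketched as follows, assuming it behaves like SuperCollider's `linexp` (a linear input range mapped onto an exponential output range, with clipping). The 0 to 255 input range follows from the 8-bit images; the helper names are hypothetical.

```python
def linexp(x, in_lo, in_hi, out_lo, out_hi):
    """Map x linearly from [in_lo, in_hi] onto an exponential curve in
    [out_lo, out_hi] (both outputs > 0); x outside the input range is clipped.
    Equal input steps give equal output ratios."""
    x = min(max(x, in_lo), in_hi)
    t = (x - in_lo) / (in_hi - in_lo)
    return out_lo * (out_hi / out_lo) ** t


def bpf_settings(intensity, q=0.5):
    """Hypothetical helper: filter center ('cutoff') frequency and bandwidth
    for an 8-bit green-channel intensity, using Q = center / bandwidth."""
    fc = linexp(intensity, 0, 255, 100.0, 6000.0)
    return fc, fc / q


for level in (0, 64, 128, 192, 255):
    fc, bw = bpf_settings(level)
    print(level, round(fc), round(bw))
```

For example, the mid-range level 127.5 maps to sqrt(100 × 6000) ≈ 775 Hz, the geometric mean of the two endpoints, and with Q = 0.5 the bandwidth is twice the center frequency, i.e. a very wide pass band.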

The amplitude modulation (hereafter referred to as AM) of the sound was also mapped via a linear-to-exponential conversion, where the amplitude was almost completely attenuated for the darkest regions of the visual representation, while there was no attenuation for the highest intensity levels. When amplitude modulation was used alone, the original unfiltered sound was used for the sonification; when only filter modulation was used, the sound level was somewhat attenuated to match the overall sound level of the amplitude modulation.
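The amplitude mapping can be sketched the same way. The text only says the darkest regions were almost completely attenuated, so the floor of 0.001 (−60 dB) used here is an assumption; note that an exponential amplitude curve is equivalent to a straight line in decibels.

```python
import math

AMP_FLOOR = 0.001  # hypothetical floor for "almost completely attenuated" (-60 dB)


def am_gain(intensity, floor=AMP_FLOOR, max_level=255):
    """Linear-to-exponential map from an 8-bit intensity to an amplitude gain."""
    t = min(max(intensity, 0), max_level) / max_level
    return floor * (1.0 / floor) ** t


def gain_db(gain):
    """Amplitude gain expressed in decibels."""
    return 20.0 * math.log10(gain)


for level in (0, 64, 128, 192, 255):
    print(level, round(gain_db(am_gain(level)), 1))
```

With this floor, every 85 intensity levels change the gain by the same 20 dB, from −60 dB at black to 0 dB at full intensity.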

Finally, when both modulation settings (hereafter referred to as BPFAM) were used, the sound first passed through the band pass filter and was then attenuated to the right amplitude level according to the intensity levels in the visual representation. A short demonstration of the sonification can be found here: https://vimeo.com/241283797.
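The BPFAM processing order (filter first, then attenuate) can be sketched offline in Python. Several assumptions apply: the band pass filter is modelled as the well-known RBJ Audio EQ Cookbook biquad with 0 dB peak gain, not necessarily the SuperCollider unit generator used in the study; the amplitude floor of 0.001 is hypothetical; and the intensity is held constant over the whole block, whereas the real sonification followed the slider in real time.

```python
import math


def linexp(x, in_lo, in_hi, out_lo, out_hi):
    """Clip x to [in_lo, in_hi] and map it onto an exponential output range."""
    t = (min(max(x, in_lo), in_hi) - in_lo) / (in_hi - in_lo)
    return out_lo * (out_hi / out_lo) ** t


def bandpass(signal, fc, fs, q=0.5):
    """Second-order band pass biquad (RBJ cookbook form, 0 dB gain at fc)."""
    w0 = 2.0 * math.pi * fc / fs
    alpha = math.sin(w0) / (2.0 * q)
    a0 = 1.0 + alpha
    b0, b2 = alpha / a0, -alpha / a0          # b1 is zero for this filter
    a1, a2 = -2.0 * math.cos(w0) / a0, (1.0 - alpha) / a0
    x1 = x2 = y1 = y2 = 0.0
    out = []
    for x in signal:                         # direct form I difference equation
        y = b0 * x + b2 * x2 - a1 * y1 - a2 * y2
        x2, x1 = x1, x
        y2, y1 = y1, y
        out.append(y)
    return out


def bpfam(signal, intensity, fs=48000, floor=0.001):
    """BPFAM sketch: filter first, then attenuate, both driven by one intensity."""
    fc = linexp(intensity, 0, 255, 100.0, 6000.0)      # filter center frequency
    gain = linexp(intensity, 0, 255, floor, 1.0)       # hypothetical -60 dB floor
    return [gain * y for y in bandpass(signal, fc, fs)]
```

A tone at the filter's center frequency passes at unity gain and is then scaled by the amplitude gain alone, so at full intensity a 6000 Hz tone comes out at its original level, while at zero intensity a 100 Hz tone comes out at the floor level.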

2.4 Participants

For the present study, 25 participants (8 female and 17 male) with a median age of 34 years (range 26–46), normal or corrected-to-normal vision, and self-reported normal hearing were recruited. All participants were students or employees at Linköping University. No compensation was provided for participating in the study.

2.5 The test setup

The test was developed to explore the possible benefit of sonification, and was divided into four conditions: no sonification, sonification with band pass filtering, sonification with amplitude modulation, and sonification with both band pass filtering and amplitude modulation.

The test session took at most 20 min and began with ten learning trials for familiarization and to reduce learning effects. The learning trials consisted of three visual representations with no sonification, followed by two with band pass filtering, then two with amplitude modulation, and finally three with combined band pass filtering and amplitude modulation of the musical sonification. After the training, the test was divided into four parts according to the four sonification conditions, with twenty visual representations in each. The order of these four parts was balanced between participants to avoid order effects. Between the parts, there was a short break in which the participants answered a questionnaire about the sonification condition just completed.
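The text states only that the order of the four parts was balanced between participants. One common way to do this, sketched here as an assumption rather than the authors' procedure, is a balanced (Williams) Latin square, in which every condition appears once in each position and immediately follows every other condition exactly once.

```python
CONDITIONS = ("No sonification", "BPF", "AM", "BPFAM")


def balanced_latin_square(n):
    """Rows of a Williams design for an even number of conditions n."""
    if n % 2:
        raise ValueError("this construction needs an even number of conditions")
    # First row 0, 1, n-1, 2, n-2, ...; later rows are cyclic shifts of it.
    base = [0]
    for k in range(1, n):
        base.append((k + 1) // 2 if k % 2 else n - k // 2)
    return [[(b + r) % n for b in base] for r in range(n)]


orders = balanced_latin_square(len(CONDITIONS))
for p in range(4):
    print(p, [CONDITIONS[i] for i in orders[p]])
```

With 25 participants and four orders, assigning `orders[p % 4]` to participant p uses the first order seven times and the others six times, so balancing can only be approximate.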

The test setup. From top: “Find the brightest vertical line. Number 11 of 90. Try to answer as fast as possible.”, followed by “Changes in sound level and frequency”, the visual representation, the interactive slider used to select the vertical pixel line in the visual representation, and the button used to confirm the selection and start the next trial, “Push this button to mark the brightest line.” (all texts translated from Swedish)

In the test, the participants moved a slider with the computer mouse to mark the highest intensity (i.e. brightest) level in the visual representation (see Fig. 4). Each slider position corresponded to a one-pixel-wide vertical line, and the sonification was modulated according to the intensity level of that pixel line. When the participants were satisfied with their choice, they pressed the button just beneath the slider to mark the perceived pixel line with the highest intensity level and to start the next trial. The participants were asked to answer as quickly as possible. For each trial, the selected intensity level (to be compared with the highest intensity level in the visual representation) and the response time were recorded. The accuracy for each sonification condition was calculated as the mean error (the highest intensity level minus the selected intensity level) over the twenty answers, and the response time for each condition was the mean response time for that condition. The condition was presented above each visualization to prepare the participant and to facilitate the participant’s interpretation of the condition.
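The per-condition scoring described above amounts to a simple aggregation, sketched here in Python. The trial record layout `(condition, max_level, selected_level, rt_seconds)` and the function name are hypothetical; the text does not describe how the data were stored.

```python
from collections import defaultdict
from statistics import mean


def condition_means(trials):
    """Mean error and mean response time per sonification condition.

    trials: iterable of (condition, max_level, selected_level, rt_seconds),
    a hypothetical record layout. Error = max_level - selected_level, which is
    never negative when max_level is the image maximum; 0 is a perfect answer.
    """
    errors, rts = defaultdict(list), defaultdict(list)
    for condition, max_level, selected_level, rt in trials:
        errors[condition].append(max_level - selected_level)
        rts[condition].append(rt)
    return {c: (mean(errors[c]), mean(rts[c])) for c in errors}


# Toy records for illustration only; these are not data from the study.
toy = [("AM", 255, 250, 10.0), ("AM", 240, 240, 12.0), ("No sonification", 255, 245, 5.0)]
print(condition_means(toy))
```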

The experiment took place in a single session in a quiet office. Although some ambient sounds were present, the test environment was judged quiet enough not to affect the tests. Visual stimuli were presented on a 21” computer screen and auditory stimuli through a pair of Beyerdynamic DT-770 Pro headphones, at a level of approximately 65 dB SPL.

After the test, the participants again answered a questionnaire, this time about whether they experienced a benefit of the sonification in general, as well as about their experience of the different sonification conditions. Answers were given on a 5-point Likert scale ranging from 1 (strongly disagree) to 5 (strongly agree). Thus, the experiment yielded objective measures of sonification benefit (accuracy and response time) as well as subjective measures from the questionnaire.

3 Results

Accuracy was measured as the highest intensity level minus the participant’s selected intensity level; hence, a lower value means better performance. The mean accuracy for each participant was the mean error over twenty measurements in each sonification condition. The mean error was 6.8 (95% CI [4.24, 9.43]) for No sonification, 2.9 (95% CI [1.64, 4.10]) for BPF, 2.9 (95% CI [1.99, 3.69]) for AM, and 2.3 (95% CI [1.63, 2.95]) for BPFAM, see Fig. 5. A repeated measures ANOVA on accuracy with one within-subject factor, sonification condition (No sonification, BPF, AM, BPFAM), showed a main effect of sonification condition (\(F(3,72)=14.10\), \(p<0.001\), \(\eta_p^{2}=0.37\)), with better accuracy with sonification than in the No sonification condition. Post-hoc tests with Bonferroni correction for multiple comparisons revealed significant differences between No sonification and BPF (\(p=0.005\)), between No sonification and AM (\(p=0.003\)), and between No sonification and BPFAM (\(p=0.003\)), but no differences between the three sonification conditions.
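As a check on the reported statistics, a one-way repeated measures ANOVA and its partial eta squared can be sketched in NumPy. The sketch assumes sphericity (no Greenhouse-Geisser or similar correction; the text does not say whether one was applied) and one mean score per participant and condition. With 25 participants and four conditions the degrees of freedom are 3 and (4 − 1)(25 − 1) = 72, matching F(3, 72), and partial eta squared follows from F alone as F·df1 / (F·df1 + df2).

```python
import numpy as np


def rm_anova_oneway(scores):
    """One-way repeated measures ANOVA (sphericity assumed).

    scores: array of shape (n_participants, k_conditions), one mean per cell.
    Returns (F, df_condition, df_error, partial_eta_squared).
    """
    scores = np.asarray(scores, dtype=float)
    n, k = scores.shape
    grand = scores.mean()
    ss_cond = n * ((scores.mean(axis=0) - grand) ** 2).sum()
    ss_subj = k * ((scores.mean(axis=1) - grand) ** 2).sum()
    ss_total = ((scores - grand) ** 2).sum()
    ss_error = ss_total - ss_cond - ss_subj  # condition x participant residual
    df_cond, df_error = k - 1, (k - 1) * (n - 1)
    F = (ss_cond / df_cond) / (ss_error / df_error)
    return F, df_cond, df_error, ss_cond / (ss_cond + ss_error)


def partial_eta_squared_from_F(F, df1, df2):
    """Partial eta squared implied by an F statistic and its degrees of freedom."""
    return F * df1 / (F * df1 + df2)


# Cross-check the reported effect sizes from their F values.
print(round(partial_eta_squared_from_F(14.10, 3, 72), 2))  # accuracy: 0.37
print(round(partial_eta_squared_from_F(19.05, 3, 72), 2))  # response time: 0.44
```

Both recomputed values agree with the reported effect sizes, which is consistent with the stated degrees of freedom.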

The mean response time was 6.1 s (95% CI [5.33, 6.88]) for No sonification, 11.5 s (95% CI [9.07, 13.85]) for BPF, 11.1 s (95% CI [8.68, 13.54]) for AM, and 10.5 s (95% CI [8.51, 12.45]) for BPFAM, see Fig. 6. A repeated measures ANOVA on response time with the same within-subject factor showed a main effect of sonification condition (\(F(3,72)=19.05\), \(p<0.001\), \(\eta_p^{2}=0.44\)), with longer response times when sonification was used than in the No sonification condition. Post-hoc tests with Bonferroni correction for multiple comparisons revealed significant differences between No sonification and BPF (\(p<0.001\)), between No sonification and AM (\(p<0.001\)), and between No sonification and BPFAM (\(p<0.001\)), but no differences in response time between the three sonification conditions.
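The Bonferroni-corrected post-hoc comparisons can be sketched as follows, assuming paired t-tests (the usual choice for within-subject post-hoc tests, though the text does not name the test). With four conditions there are six pairwise comparisons, and each raw p-value is multiplied by the number of comparisons, capped at 1. The helper names are hypothetical; the raw p-values would come from the t distribution, for example via `scipy.stats.ttest_rel`, which is not reproduced here.

```python
import math
from itertools import combinations
from statistics import mean, stdev

CONDITIONS = ("No sonification", "BPF", "AM", "BPFAM")
PAIRS = list(combinations(CONDITIONS, 2))  # 6 pairwise comparisons


def paired_t(a, b):
    """Paired t statistic for two equally long lists of per-participant means."""
    d = [x - y for x, y in zip(a, b)]
    return mean(d) / (stdev(d) / math.sqrt(len(d)))


def bonferroni(p_values):
    """Bonferroni adjustment: multiply each p by the number of tests, cap at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]
```

The cap at 1 is why several non-significant comparisons can share an adjusted p-value of exactly 1.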

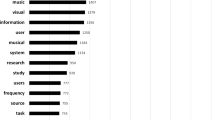

The subjective measures from the questionnaire showed that the participants generally experienced sonification as helpful, see Fig. 7. This was measured as a rating of task difficulty (1 = very hard, to 5 = very easy) for the task without and with sonification, respectively. The median rating was 2 (range 1–4) for No sonification and 4 (range 3–5) for sonification, suggesting that the task was experienced as easier with sonification. The experienced benefit of sonification for improving accuracy was also measured (1 = no help, to 5 = much help). The median rating was 5 (range 2–5), which suggests that the participants were aware of a benefit of sonification. Finally, the experienced benefit of sonification for reducing response time was measured (1 = not faster, to 5 = much faster); the median rating was 4 (range 1–5), suggesting that many participants believed sonification made them faster, while others did not.

4 Discussion

The visual representations used in this test setup contained visual elements that created problematic simultaneous brightness contrasts and Mach band phenomena for the user. Even if the task of marking the vertical pixel line with the highest intensity level might not seem very relevant for practical applications, the challenges for visual perception that arise from these visual representations exist in many other visualization techniques used for data exploration, such as parallel coordinates for analyzing large multivariate data, which result in visual clutter, or scatter plots containing two dense populations. Even though a simple mathematical test would be sufficient to find the highest intensity level in a data set, the simple task used in this study should be considered a simplification that enables the examination of musical sonification in a controlled setting. The challenges posed by density levels, simultaneous brightness contrast, and the Mach band phenomenon still exist in larger and more complex visual representations used in a variety of research disciplines and public outreach activities; hence, the outcome of this experiment should be considered from a broader visualization perspective. Consequently, the finding of the present study, that sonification supports visual perception, can be expected to generalize to other visual representations, such as parallel coordinates or scatter plots, where the perception and exploration of the visual representation might be negatively affected by, for example, simultaneous brightness contrast.

The results of the present study suggest that sonification can improve perception of a visual representation with regard to color intensity, thus reducing the negative effects that simultaneous brightness contrast and the Mach band phenomenon might have on the visual perceptual system. Even if performance without sonification was fairly high, the effect of sonification was demonstrated by increased accuracy when sonification was used. The subjective measurements from the questionnaire supported the objective measurements with regard to improved accuracy with sonification. It should be noted, though, that subjective ratings may always be biased, in that participants may want to “do well” and please the researcher rather than share their actual experience.

Response time was also longer when sonification was used, regardless of sonification condition (i.e. BPF, AM, BPFAM), than in the condition with no sonification. This increase in response time suggests that the participants used the sonification to refine their selection, and that this took longer. This interpretation is also supported by the increased accuracy when sonification was used. Consequently, the visual information alone was not sufficient for full accuracy in the No sonification condition, but when another modality was added, more information was provided, which enabled higher accuracy; this refined comparison, however, also required more time. Interestingly, some of the participants stated in the subjective measurements that sonification improved their response time, even though this was not the case according to the objective measure. It might be hypothesized that some participants believed that they performed the task faster when they actually performed it more accurately. There was, nevertheless, no statistically significant support for the subjective statement of performing the task faster.

The results also show that the specific sonification condition (i.e. BPF, AM, BPFAM) did not affect the outcome of the experiment: accuracy was better and response time longer with sonification regardless of which of the three sonification conditions was tested. Looking at the means, mapping both the cutoff frequency of the band pass filter and the amplitude of the sonification (BPFAM) gave the lowest mean error and the shortest mean response time of the three sonification conditions; however, there were no statistically significant differences between the three sonification conditions. It might be hypothesized that mapping two musical aspects of the sonification to the visualization provides a stronger effect for auditory perception, but this was not supported by the present study.

It could be argued that the demonstrated benefit of sonification arises because two modalities are better than one for this kind of task, as two modalities provide more information, which facilitates higher accuracy. However, as intensity levels were mapped to the sonification via a linear-to-exponential conversion, the sonification produced larger absolute differences between adjacent intensity levels at the high end of the range, which is where the participant needed more information to perform accurately. As a result, the accuracy could be expected to be higher when sonification was used than without it. However, the perception of amplitude as well as frequency is also nonlinear, which justifies the nonlinear mapping between intensity in the visual representation and the modulation in the sonification. As a consequence, it could be argued that the aural modality by itself would perform better than the visual modality alone. Even though this was not investigated in the present study, it can at least briefly be discussed. The two modalities are inherently different: an image is spatial while a sound is temporal (at least as sound has been used in the present study). In the experiment, only one sound was present at a time. Therefore, without visual cues, the participant would have to keep the changes in the sound, i.e. the envelope of the changes in frequency content and/or amplitude, in memory. This reasoning suggests that the aural modality alone would result in poorer performance than the visual modality alone, and most definitely poorer than the combination of the visual and aural modalities.
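The claim that the exponential mapping spreads out the high intensity levels can be checked with a short worked example, assuming the cutoff mapping takes the standard form below with 8-bit intensity I from 0 to 255 (the text gives only the 100 Hz and 6000 Hz endpoints):

```latex
f(I) = f_{\min}\left(\frac{f_{\max}}{f_{\min}}\right)^{I/255},
\qquad f_{\min} = 100\ \text{Hz},\quad f_{\max} = 6000\ \text{Hz}

\frac{f(I+1)}{f(I)} = 60^{1/255} \approx 1.0162,
\qquad 1200\,\log_2\!\left(60^{1/255}\right) = \frac{1200\,\log_2 60}{255} \approx 27.8\ \text{cents}

\Delta f(I) = f(I+1) - f(I) = f(I)\left(60^{1/255} - 1\right)
\quad\Rightarrow\quad \Delta f(0) \approx 1.6\ \text{Hz},\qquad \Delta f(254) \approx 96\ \text{Hz}
```

Under this assumption, each intensity step moves the filter's center frequency by the same ratio (a constant step on a logarithmic frequency scale), while the absolute change in hertz is about sixty times larger at the top of the range than at the bottom.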

In the present study, the type of sonification used in each trial was presented on the screen. The reason for this was to help the participant understand what change in the sonification to listen for. It could be argued that providing this information primed the participant to focus more on the sound than on the relationship between the visual representation and the sonification. However, the scope of the study was to explore how well the different sonification conditions could support visual perception, not how fast the participant could adjust to the different sonification conditions or how intuitive the participant found the link between the sonification and the brightness levels in the visual representation. From this perspective, providing this information strengthened the experimental setup and enabled the participant to focus on using the sound as a tool to solve the task. Nevertheless, the intuitive link between brightness, as one of many possible visual properties that could be explored, and the mapping of different musical elements would be an interesting topic for future work.

5 Future work

In future work, musical elements other than timbre (the mapping of the cutoff frequency of the band pass filter) and amplitude will be investigated. Musical elements that might be suitable for sonification are pitch and harmony, as well as tempo and rhythm. Performance when using pitch or harmony could be compared to the BPFAM and No sonification conditions of the present study, thus forming a deeper understanding of the detailed musical aspects that contribute to the usefulness of sonification as support for visual perception.

It would also be interesting to explore a user’s experienced link between the visual representation and the mapping of the sonification. This could answer not only which musical elements in sonification are useful in terms of accuracy and response time to complement a visual representation, but also which musical elements would be more ecologically valid to use in sonification. This could be explored qualitatively with an auditory-only display, where data are sonified and the user’s task is to explain their intuitive understanding of changes in the data based on the sonification alone.

Furthermore, it would be interesting to evaluate sonification in relation to a wider range of visual representations, as well as with “real world” data sets. This could indicate which musical elements in the sonification are most suitable to use interactively, and in combination with which kinds of visual representations for maximal usefulness of the interactive sonification. Sonification of real data would also be interesting to evaluate with domain experts to discern possible benefits of sonification in their work environment.

6 Conclusion

The results show a benefit of sonification, in the form of increased accuracy, when selecting the vertical pixel line with the highest color intensity in the visual representations. This suggests that sonification enhances the perception of color intensity, and thus eases visual perception when facing challenges such as simultaneous brightness contrast and the Mach band phenomenon. This result was also supported by the subjective measures. There were no significant differences between the three sonification conditions. Finally, using and processing the additional information took more time, leading to longer response times when sonification was used than when no sound was used.

References

Bresin R, Friberg A (2011) Emotion rendering in music: range and characteristic values of seven musical variables. Cortex 47:1068–1081. https://doi.org/10.1016/j.cortex.2011.05.009

CIE (1932) Commission internationale de l’Eclairage proceedings, 1931. Cambridge University Press, Cambridge

Cohen J (1994) Monitoring background activities. In: Santa Fe Institute, studies in the sciences of complexity proceedings, vol XVIII. Addison-Wesley, Reading, MA, pp 499–532

Deliège I, Sloboda JA (1997) Perception and cognition of music. Psychology Press Ltd., Hove

Eerola T, Friberg A, Bresin R (2013) Emotional expression in music: contribution, linearity, and additivity of primary musical cues. Front Psychol 4:1–12. https://doi.org/10.3389/fpsyg.2013.00487

Ellis G, Dix A (2007) A taxonomy of clutter reduction for information visualisation. IEEE Trans Vis Comput Gr 13:1216–1223. https://doi.org/10.1109/TVCG.2007.70535

Flowers JH, Buhman DC, Turnage KD (1997) Cross-modal equivalence of visual and auditory scatterplots for exploring bivariate data samples. Hum Factors 39:341–351

Flowers JH, Buhman DC, Turnage KD (2005) Data sonification from the desktop: should sound be part of standard data analysis software? ACM Trans Appl Percept 2:467–472

Franinovic K, Serafin S (2013) Sonic interaction design. MIT Press, Cambridge

Halim Z, Baig R, Bashir S (2009) Sonification: a novel approach towards data mining. In: Proceedings of 2nd international conference on emerging technologies (ICET 2006), pp 548–553

Hermann T, Hunt A, Neuhoff JG (2011) The sonification handbook, 1st edn. Logos Publishing House, Berlin

Iakovides SA, Iliadou VT, Bizeli VT, Kaprinis SG, Fountoulakis KN, Kaprinis GS (2004) Psychophysiology and psychoacoustics of music: perception of complex sound in normal subjects and psychiatric patients. Ann Gen Hosp Psychiatry 3:1–4

Jung R (2008) Ambience for auditory displays: embedded musical instruments as peripheral audio cues. In: Proceedings of 14th international conference on auditory display (ICAD 2008)

Juslin P, Laukka P (2004) Expression, perception, and induction of musical emotions: a review and a questionnaire study of everyday listening. J New Music Res 33:217–238

Juslin PN, Laukka P (2003) Communication of emotions in vocal expression and music performance: different channels, same code? Psychol Bull 129:770–814. https://doi.org/10.1037/0033-2909.129.5.770

Kainulainen A, Turunen M, Hakulinen J (2006) An architecture for presenting auditory awareness information in pervasive computing environments. In: Proceedings of 12th meeting of the international conference on auditory display (ICAD 2006), pp 121–128

Kasakevich M, Boulanger P, Bischof WF, Garcia M (2007) Augmentation of visualisation using sonification: a case study in computational fluid dynamics. In: Proceedings of IPT-EGVE symposium. The Eurographics Association, Germany, Europe, pp 89–94. https://doi.org/10.2312/PE/VE2007Short/089-094

Levitin DJ (2006) This is your brain on music: the science of a human obsession. Dutton/Penguin Books, New York

Lotto RB, Williams SM, Purves D (1999) Mach bands as empirically derived associations. In: Proceedings of National Academy of Sciences, vol 96. National Academy of Sciences of the United States of America, Los Alamitos, pp 5245–5250

McCartney J (1996) SuperCollider: a new real-time synthesis language. In: Proceedings of ICMC

McCartney J (2002) Rethinking the computer music language: SuperCollider. Comput Music J 26:61–68

Nesbitt KV, Barrass S (2002) Evaluation of a multimodal sonification and visualisation of depth of market stock data. In: Proceedings of international conference on auditory display (ICAD). International Community on Auditory Display, United States, pp 2–5

Pallesen KJ, Brattico E, Bailey C, Korvenoja A, Koivisto J, Gjedde A, Carlson S (2005) Emotion processing of major, minor, and dissonant chords: a functional magnetic resonance imaging study. Ann N Y Acad Sci 1060:450–453. https://doi.org/10.1196/annals.1360.047

Pinch T, Bijsterveld K (2012) The Oxford handbook of sound studies. Oxford University Press, Oxford. https://doi.org/10.1093/oxfordhb/9780195388947.001.0001

Rau B, Frieß F, Krone M, Müller C, Ertl T (2015) Enhancing visualization of molecular simulations using sonification. In: Proceedings of IEEE 1st international workshop on virtual and augmented reality for molecular science (VARMS@IEEEVR 2015). The Eurographics Association, Arles, France, pp 25–30. https://doi.org/10.1109/VARMS.2015.7151725

Riedenklau E, Hermann T, Ritter H (2010) Tangible active objects and interactive sonification as a scatter plot alternative for the visually impaired. In: Proceedings of 16th international conference on auditory display (ICAD-2010). International Community for Auditory Display, Germany, Europe, pp 1–7

Rönnberg N, Hallström G, Erlandsson T, Johansson J (2016) Sonification support for information visualization dense data displays. In: Proceedings of IEEE VIS Infovis Posters (VIS2016). IEEE VIS, Baltimore, Maryland, pp 1–2

Rönnberg N, Johansson J (2016) Interactive sonification for visual dense data displays. In: Proceedings of 5th interactive sonification workshop (ISON-2016). CITEC, Bielefeld University, Germany, pp 63–67

Rönnberg N, Löwgren J (2016) The sound challenge to visualization design research. In: Proceedings of EmoVis 2016, ACM IUI 2016 workshop on emotion and visualization, vol 103, pp 31–34. Linköping electronic conference proceedings, Sweden

Rönnberg N, Lundberg J, Löwgren J (2016) Sonifying the periphery: supporting the formation of gestalt in air traffic control. In: Proceedings of 5th interactive sonification workshop (ISON-2016). CITEC, Bielefeld University, Germany, pp 23–27

Rosli MHW, Cabrera A (2015) Gestalt principles in multimodal data representation. IEEE Comput Gr Appl 35:80–87

Seashore CE (1967) Psychology of music. Dover, New York

Smith T, Guild J (1931) The C.I.E. colorimetric standards and their use. Trans Opt Soc 33:73–134. https://doi.org/10.1088/1475-4878/33/3/301

Tran QT, Mynatt ED (2000) Music monitor: ambient musical data for the home. In: Proceedings of IFIP WG 9.3 international conference on home oriented informatics and telematics (HOIT 2000), vol 173. IFIP conference proceedings, Kluwer, pp 85–92

Tsuchiya T, Freeman J, Lerner LW (2015) Data-to-music API: real-time data-agnostic sonification with musical structure models. In: Proceedings of 21st international conference on auditory display (ICAD 2015), pp 244–251

Ware C (2013) Information visualization: perception for design, 3rd edn. Morgan Kaufmann Publishers Inc., San Francisco

Acknowledgements

Open access funding provided by Linköping University.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Electronic supplementary material

Supplementary material 1 (mp4 16293 KB)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Rönnberg, N. Sonification supports perception of brightness contrast. J Multimodal User Interfaces 13, 373–381 (2019). https://doi.org/10.1007/s12193-019-00311-0

DOI: https://doi.org/10.1007/s12193-019-00311-0