Abstract

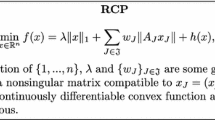

In recent years, sparse and group sparse optimization, which seeks solutions that are sparse both group-wise and element-wise, has attracted extensive attention due to its wide applications in statistics, bioinformatics, signal interpretation and machine learning. In this paper, the sparse and group sparse optimization problem with a nonsmooth loss function is considered, where sparsity and group sparsity are induced by a penalty combining the \(\ell _1\) norm and the \(\ell _{2,1}\) norm; this is the sparse group Lasso (SGLasso) problem. To solve this problem, the nonsmooth loss function is first smoothed. Then, based on the smooth approximation of the loss function, a smoothing composite proximal gradient (SCPG) algorithm is proposed. It is shown that any accumulation point of the sequence generated by the SCPG algorithm is a global optimal solution of the problem. Moreover, it is proved that the objective function value converges at the rate \(O(\frac{1}{k^{1-\sigma }})\), where \(\sigma \in (0.5,1)\) is a constant. Finally, numerical results illustrate that the proposed SCPG algorithm is effective and robust for sparse and group sparse optimization problems. In particular, compared with some popular algorithms, the SCPG algorithm is notably more robust to outliers.
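To make the ingredients named in the abstract concrete, the following is a minimal sketch of a generic smoothing proximal gradient loop, not the authors' SCPG algorithm. It assumes an \(\ell _1\) loss \(\Vert Ax-b\Vert _1\) smoothed by the Huber function, non-overlapping groups, and a hypothetical smoothing schedule \(\mu _k = \mu _0 / k^{\sigma }\); the function names and default parameters are illustrative only. It relies on the known fact that, for non-overlapping groups, the proximal map of \(\lambda _1\Vert x\Vert _1 + \lambda _2\sum _g \Vert x_g\Vert _2\) is element-wise soft-thresholding followed by group-wise soft-thresholding.

```python
import numpy as np

def prox_sparse_group(v, groups, t1, t2):
    """Prox of t1*||x||_1 + t2*sum_g ||x_g||_2 for non-overlapping groups."""
    # Step 1: element-wise soft-thresholding (prox of the l1 term).
    u = np.sign(v) * np.maximum(np.abs(v) - t1, 0.0)
    # Step 2: group-wise soft-thresholding (prox of the l2,1 term).
    x = np.zeros_like(u)
    for g in groups:
        norm_g = np.linalg.norm(u[g])
        if norm_g > t2:
            x[g] = (1.0 - t2 / norm_g) * u[g]
    return x

def huber_grad(r, mu):
    """Derivative of the Huber smoothing of |r| with parameter mu > 0."""
    return np.clip(r / mu, -1.0, 1.0)

def smoothing_prox_grad(A, b, groups, lam1, lam2,
                        mu0=1.0, sigma=0.75, iters=500):
    """Illustrative loop: smooth ||Ax - b||_1, then take prox-gradient steps."""
    x = np.zeros(A.shape[1])
    op_norm_sq = np.linalg.norm(A, 2) ** 2  # squared spectral norm of A
    for k in range(1, iters + 1):
        mu = mu0 / k ** sigma      # assumed schedule, not taken from the paper
        step = mu / op_norm_sq     # 1/L, where L = ||A||^2 / mu
        grad = A.T @ huber_grad(A @ x - b, mu)
        x = prox_sparse_group(x - step * grad, groups,
                              step * lam1, step * lam2)
    return x
```

Here `groups` is a list of index lists, for example `[[0, 1], [2, 3]]`. The step size shrinks with the smoothing parameter because the gradient of the Huber-smoothed loss has Lipschitz constant \(\Vert A\Vert ^2/\mu \).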

Acknowledgements

This work is supported by the National Natural Science Foundation of China (12261020), the Guizhou Provincial Science and Technology Program (ZK[2021]009), the Foundation for Selected Excellent Project of Guizhou Province for High-level Talents Back from Overseas ([2018]03), and the Research Foundation for Postgraduates of Guizhou Province (YJSCXJH[2020]085).

Ethics declarations

Conflict of interest

The authors declare no conflict of interest.

About this article

Cite this article

Shen, H., Peng, D. & Zhang, X. Smoothing composite proximal gradient algorithm for sparse group Lasso problems with nonsmooth loss functions. J. Appl. Math. Comput. (2024). https://doi.org/10.1007/s12190-024-02034-2

DOI: https://doi.org/10.1007/s12190-024-02034-2

Keywords

- Sparse group Lasso problem

- Nonsmooth loss function

- Smoothing composite proximal gradient algorithm

- Sublinear convergence rate

- Anti-outlier