Abstract

Porosity is an important reservoir property for numerous studies, e.g., estimating the oil/gas volume of a reservoir or measuring storage capacity in Carbon Capture and Storage (CCS) projects. However, estimating porosity from elastic properties obtained by seismic inversion propagates the inversion error, which degrades the accuracy of the result. Estimating porosity directly from seismic data is an alternative, but it poses a highly non-linear problem. We therefore propose a non-parametric machine learning approach, the Gaussian Process (GP), which places a distribution over functions; this allows it to model the highly non-linear relationship between seismic data and porosity and to quantify the prediction uncertainty simultaneously. With Random Forest (RF) as the feature selection method, the GP predictions show excellent results in a blind test on a well that is completely removed from the training data, and in comparison with other machine learning models. The uncertainty, i.e., the standard deviation of the GP prediction, acts as a quantitative evaluation of the prediction result. Moreover, we generate a new attribute based on the quartiles of the standard deviation to delineate anomaly zones. High-anomaly zones are highlighted and associated with high porosity from the GP and low P-impedance from the inversion results. Applying the GP to seismic data thus shows potential for characterizing reservoir properties spatially, and the uncertainty offers insights for both quantitative and qualitative evaluation in hydrocarbon exploration and development.

Introduction

Reservoir characterization in complex lithologies is challenging because of the inherent uncertainty in predicting reservoir properties. Porosity is one such property and an important parameter in the geosciences. It is used in multiple studies, including estimating the volume of oil/gas accumulated in a field (Wang et al. 2015) and calculating the storage capacity in Carbon Capture, Utilization, and Storage (CCUS) projects (Montoya and Hoefner 2022). In practice, porosity can be measured accurately through core analysis and well logs. However, these measurements have limited spatial coverage: they sample only the well locations and certain depth intervals. Seismic data, which have much greater spatial coverage, can be used to estimate porosity through seismic inversion, which first obtains elastic properties and then transforms them into porosity with rock physics models (Jessell et al. 2015; Maurya et al. 2020). However, a misfit (e.g., measured as the mean squared error) always remains when elastic properties are inverted from seismic data. Because reservoir properties are then estimated from the inverted elastic properties in a cascade, the propagated error strongly affects the accuracy and uncertainty of the estimated property, and this can be even more intractable in highly heterogeneous reservoirs or in reservoirs with many thin layers (Marfurt and Kirlin 2001). One way to address this issue of the cascaded approach is joint inversion, which guarantees consistency between the elastic and reservoir properties (Bosch et al. 2010).

Another approach is to estimate reservoir properties directly from seismic data, bypassing inversion (Zahmatkesh et al. 2018). However, the relationship between the reservoir properties of interest and the seismic data is commonly strongly non-linear. Machine Learning (ML) can solve such non-linear problems. For example, Feng et al. (2020) estimated porosity from post-stack seismic data using an unsupervised deep-learning model, and Din and Hongbing (2020) employed a neural network (NN) with a combination of seismic attributes to estimate porosity. However, these methods estimate the target deterministically, giving a single result. If the process is repeated with different initial values, it produces a different output, which shows that the model does not account for the uncertainty arising from the non-uniqueness of the estimated parameter (Wood and Choubineh 2020).

Uncertainty describes a situation of unknown, incomplete, or imperfect knowledge arising from the analytical process, including gathering, organizing, and analyzing large amounts of data (Wood and Choubineh 2020). Abdar et al. (2020) described how uncertainty affects the performance of ML; it occurs in every scenario across many fields and affects decision-making. There are two types of uncertainty: aleatoric and epistemic (Abdar et al. 2020; Feng et al. 2021). Aleatoric uncertainty, also known as data uncertainty, is irreducible and inherent in the training data. Epistemic uncertainty, or model uncertainty, arises from imperfect knowledge of the model parameters or from inadequate data, and it can be reduced by optimizing the model parameters or increasing the amount of training data. Measuring uncertainty is referred to as Uncertainty Quantification (UQ). Accounting for uncertainty can enhance the reliability of ML models in real-world applications (Fig. 1).

UQ with ML models has recently been actively researched in the geoscience community. Multiple uncertainty-aware ML studies have used combinations of seismic attributes to model the highly non-linear relationship between seismic data and reservoir properties (Hossain et al. 2022; Pradhan and Mukerji 2018; Zou et al. 2021; Feng 2023). For example, Hossain et al. (2022) used a Bayesian Neural Network (BNN), which learns the parameters of each hidden layer in a Bayesian manner and describes the uncertainty through the distribution of its nodes. Pradhan and Mukerji (2018) presented a Bayesian evidential analysis (BEA) framework that estimates reservoir properties directly from near- and far-offset seismic waveforms using a deep neural network, with uncertainty given at various confidence intervals. Zou et al. (2021) used an ensemble of Random Forests (RF) to capture the uncertainty of porosity predicted from a combination of seismic attributes. However, these models require either multiple training runs to build an ensemble or sampling of distributions in the Bayesian network, which increases the computation time of both training and testing.

Another way to quantify uncertainty is a non-parametric approach. The Gaussian Process (GP) is a non-parametric ML algorithm that places distributions over functions (Rasmussen and Williams 2006). Instead of fitting multiple models, the distribution drawn by a GP gives the model the flexibility to solve non-linear problems and quantifies the uncertainty while reducing the training and test computation time. The mean and the width (standard deviation) of the distribution give the main prediction and its uncertainty, respectively. GP has been used with accurate results in multiple studies, including the estimation of porosity and permeability from well logs (Mahdaviara et al. 2021a) and the estimation of Total Organic Carbon (TOC) from wireline logs (Rui et al. 2020). In geoscience, GP regression is well known as kriging, and successful reservoir characterization applications show that geostatistical methods help to ensure reliable estimates of the properties (Bosch et al. 2010; Pradhan et al. 2023). Nevertheless, the use of GP to predict reservoir parameters directly from seismic data is still sparsely documented. Because GP produces mean predictions alongside their uncertainty, the uncertainty can be interpreted from multiple perspectives. For example, Zou et al. (2021) interpreted the uncertainty as correction values to increase prediction accuracy. This study extends the use of quantified uncertainty in a different direction: creating a zonation to delineate anomaly locations.

The objective of this study is therefore to estimate porosity directly from a combination of seismic attributes and to quantify its uncertainty using GP. The GP is applied to real post-stack seismic data, because pre-stack data were unavailable and no Amplitude Versus Offset (AVO) study had yet been conducted. The following section introduces the methodology, followed by a description of the dataset and the experimental workflow. The experiment uses six wells for training and validates the model on one blind well that is completely removed from the training dataset. Excluding the blind well tests the model's performance and reliability on data it has never seen. After its accuracy and reliability are validated on the blind well, the model is applied to seismic cubes of the same attributes to predict porosity and its uncertainty. The study then uses the uncertainty to delineate the anomaly distribution in the target reservoir. Finally, discussion and conclusions are given.

Gaussian process

A Gaussian process (GP) is a collection of random variables, any finite number of which have a joint Gaussian distribution (Menke and Creel 2021; Rasmussen and Williams 2006; Seeger 2004). A GP is completely specified by its mean function m(x) and covariance function k(x, x’) of the process f(x):

\[ m(x) = \mathbb{E}\left[f(x)\right], \qquad k(x, x') = \mathbb{E}\left[\left(f(x) - m(x)\right)\left(f(x') - m(x')\right)\right] \]

Thus the general equation of a Gaussian process becomes

\[ f(x) \sim \mathcal{GP}\left(m(x),\, k(x, x')\right) \]

This definition implies a consistency requirement: examining a larger set of variables, e.g., the combined test and training points, does not change the distribution of a smaller set, such as the training points alone (Rasmussen and Williams 2006). The covariance function typically depends on a set of hyperparameters θ. Common GP covariance functions are listed in Table 1.
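
Table 1 is not reproduced in this text, so the sketch below writes out the standard textbook forms of the kernels used later (Rational Quadratic, Matern, Exponential, Linear) following Rasmussen and Williams (2006). The function names are hypothetical, the Matern smoothness ν = 3/2 is an assumption (the study does not state ν), and the parameterization may differ from Table 1.

```python
import numpy as np

def sq_dist(A, B):
    """Pairwise squared Euclidean distances; A is (n, d), B is (m, d)."""
    A = np.asarray(A, dtype=float)
    B = np.asarray(B, dtype=float)
    d2 = np.sum(A**2, axis=1)[:, None] + np.sum(B**2, axis=1)[None, :] - 2.0 * A @ B.T
    return np.maximum(d2, 0.0)  # clip small negative values caused by round-off

def rational_quadratic(A, B, length_scale=1.0, alpha=1.0, variance=1.0):
    """RQ kernel: variance * (1 + r^2 / (2 * alpha * l^2))^(-alpha)."""
    return variance * (1.0 + sq_dist(A, B) / (2.0 * alpha * length_scale**2)) ** (-alpha)

def matern32(A, B, length_scale=1.0, variance=1.0):
    """Matern kernel with nu = 3/2 (assumed value of nu)."""
    r = np.sqrt(sq_dist(A, B)) / length_scale
    return variance * (1.0 + np.sqrt(3.0) * r) * np.exp(-np.sqrt(3.0) * r)

def exponential(A, B, length_scale=1.0, variance=1.0):
    """Exponential kernel, i.e. the Matern kernel with nu = 1/2."""
    r = np.sqrt(sq_dist(A, B)) / length_scale
    return variance * np.exp(-r)

def linear(A, B, sigma0=1.0):
    """Linear (dot-product) kernel sigma0^2 + x . x'; it is non-stationary."""
    A = np.asarray(A, dtype=float)
    B = np.asarray(B, dtype=float)
    return sigma0**2 + A @ B.T

# Example: covariance matrices for five 1-D inputs arranged as shape (n, 1)
X = np.linspace(0.0, 1.0, 5).reshape(-1, 1)
K_rq = rational_quadratic(X, X, length_scale=0.5)
K_rq_lin = K_rq * linear(X, X)  # element-wise product of two kernels is also a valid kernel
```

Each function returns an n × m covariance matrix, so the same code covers K(X, X), K(X, X*), and K(X*, X*).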

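Now suppose the training dataset consists of n inputs \(\varvec{X}\) with targets \(\mathbf{y}\) corrupted by independent Gaussian noise with standard deviation \({\sigma }_{n}\). Assuming a zero prior mean, the joint distribution of the observed targets and the function values \(\mathbf{f}_{*}\) at the test locations \(\varvec{X}_{*}\) under the prior can be written as

\[ \begin{bmatrix} \mathbf{y} \\ \mathbf{f}_{*} \end{bmatrix} \sim \mathcal{N}\left(\mathbf{0}, \begin{bmatrix} K(\varvec{X},\varvec{X}) + \sigma_n^{2} I & K(\varvec{X},\varvec{X}_{*}) \\ K(\varvec{X}_{*},\varvec{X}) & K(\varvec{X}_{*},\varvec{X}_{*}) \end{bmatrix}\right) \]

Conditioning this joint Gaussian on the observed targets yields the key predictive equations of GP regression: the mean \(\bar{\mathbf{f}}_{*}\) and the covariance \(\mathbb{V}\left[\mathbf{f}_{*}\right]\), whose diagonal gives the variance that quantifies the uncertainty at each test point,

\[ \bar{\mathbf{f}}_{*} = K(\varvec{X}_{*},\varvec{X})\left[K(\varvec{X},\varvec{X}) + \sigma_n^{2} I\right]^{-1}\mathbf{y} \]

\[ \mathbb{V}\left[\mathbf{f}_{*}\right] = K(\varvec{X}_{*},\varvec{X}_{*}) - K(\varvec{X}_{*},\varvec{X})\left[K(\varvec{X},\varvec{X}) + \sigma_n^{2} I\right]^{-1}K(\varvec{X},\varvec{X}_{*}) \]

where \(K(\varvec{X}, \varvec{X})\) is the n × n covariance matrix of the training points, \(K(\varvec{X}, \varvec{X}_{*})\) is the n × \(n_{*}\) matrix of covariances between the training points and the \(n_{*}\) test points \(\varvec{X}_{*}\), and \(K(\varvec{X}_{*}, \varvec{X}) = K(\varvec{X}, \varvec{X}_{*})^{\top}\). The posterior prediction depends strongly on the GP hyperparameters. The standard approach is therefore to choose the hyperparameters θ that maximize the log marginal likelihood (Rasmussen and Williams 2006; Seeger 2004),

\[ \log p(\mathbf{y} \mid \varvec{X}, \theta) = -\tfrac{1}{2}\mathbf{y}^{\top}\left[K(\varvec{X},\varvec{X}) + \sigma_n^{2} I\right]^{-1}\mathbf{y} - \tfrac{1}{2}\log\left|K(\varvec{X},\varvec{X}) + \sigma_n^{2} I\right| - \tfrac{n}{2}\log 2\pi \]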
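
The predictive mean, variance, and log marginal likelihood described above can be computed stably with a Cholesky factorization, as in Algorithm 2.1 of Rasmussen and Williams (2006). The sketch below is a minimal zero-mean implementation; the squared-exponential kernel `rbf`, the helper name `gp_posterior`, and the synthetic data are illustrative assumptions, not the study's setup.

```python
import numpy as np

def rbf(A, B, length_scale=1.0, variance=1.0):
    """Squared-exponential kernel, used here only as a simple stand-in."""
    d2 = np.sum((A[:, None, :] - B[None, :, :]) ** 2, axis=-1)
    return variance * np.exp(-0.5 * d2 / length_scale**2)

def gp_posterior(X, y, X_star, kernel, noise_std):
    """Zero-mean GP regression: predictive mean, latent variance, log marginal likelihood."""
    n = X.shape[0]
    K_y = kernel(X, X) + noise_std**2 * np.eye(n)          # K(X, X) + sigma_n^2 I
    L = np.linalg.cholesky(K_y)                            # K_y = L L^T
    weights = np.linalg.solve(L.T, np.linalg.solve(L, y))  # K_y^{-1} y
    K_s = kernel(X, X_star)                                # K(X, X_*), shape (n, n_*)
    mean = K_s.T @ weights                                 # predictive mean of f_*
    V = np.linalg.solve(L, K_s)
    var = np.diag(kernel(X_star, X_star)) - np.sum(V**2, axis=0)  # diagonal of predictive covariance
    log_ml = (-0.5 * y @ weights
              - np.sum(np.log(np.diag(L)))                 # equals 0.5 * log|K_y|
              - 0.5 * n * np.log(2.0 * np.pi))
    return mean, var, log_ml

rng = np.random.default_rng(0)
X = rng.uniform(0.0, 5.0, size=(20, 1))
y = np.sin(X[:, 0]) + 0.1 * rng.standard_normal(20)
X_star = np.linspace(0.0, 5.0, 7).reshape(-1, 1)
mean, var, log_ml = gp_posterior(X, y, X_star, rbf, noise_std=0.1)
std = np.sqrt(np.maximum(var, 0.0))  # uncertainty of the latent function at each test point
```

The returned variance is that of the latent function; adding \(\sigma_n^2\) gives the variance of a new noisy observation. Hyperparameter tuning would wrap `log_ml` in an optimizer over θ.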

GP can perform both regression and classification. Successful regression applications of GP in geophysics include predicting rock resistivity from magnetotelluric data (Anandaroop and David 2019) and permeability from well logs (Mahdaviara et al. 2021b). GP has also been applied to fault classification in the engineering domain (Basha et al. 2023). GP classification outputs class probabilities, which carry a measure of uncertainty (Rasmussen and Williams 2006). In line with its objectives, this study focuses on GP regression. As a non-parametric regression method, a GP is characterized by the form of its kernel (K). A common hyperparameter of the kernel is the length scale (\(\mathcal{l}\)) (Table 1), which controls how smooth or rough the regression is: a small length scale produces rough, rapidly varying predictions, whereas a larger length scale produces smoother predictions.
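
The effect of the length scale can be seen by drawing functions from a GP prior. The sketch below assumes a squared-exponential kernel and hypothetical length scales of 0.2 and 3.0, and measures roughness as the mean absolute change between neighbouring samples.

```python
import numpy as np

def sample_gp_prior(x, length_scale, n_samples=3, seed=0):
    """Draw functions from a zero-mean GP prior with a squared-exponential kernel."""
    d2 = (x[:, None] - x[None, :]) ** 2
    K = np.exp(-0.5 * d2 / length_scale**2)
    L = np.linalg.cholesky(K + 1e-6 * np.eye(x.size))  # small jitter for numerical stability
    z = np.random.default_rng(seed).standard_normal((x.size, n_samples))
    return L @ z  # each column is one sampled function

def roughness(f):
    """Mean absolute change between neighbouring samples."""
    return np.mean(np.abs(np.diff(f, axis=0)))

x = np.linspace(0.0, 10.0, 200)
rough = sample_gp_prior(x, length_scale=0.2)
smooth = sample_gp_prior(x, length_scale=3.0)
print(f"roughness, l = 0.2: {roughness(rough):.3f}")
print(f"roughness, l = 3.0: {roughness(smooth):.3f}")
```

The short length scale gives roughly an order of magnitude larger sample-to-sample changes, which is the "coarse" behaviour described above.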

Dataset and research workflow

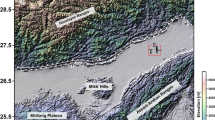

The input data are post-stack time-migrated seismic data covering an area of about 886.0000.000 m² and seven wells (AI-1, AI-2, AI-3, AI-4, AI-5, AI-6, and AI-7) with an average separation of 2.5 km, from field X in the Malay Basin. Field X lies in a clastic environment with mainly deltaic depositional settings, including multiple channels and deltaic lobes; the study focuses on reservoir I-X in the group I formation, located within the interval from 1400 to 2000 ms. Thirty-three seismic attributes are derived from the post-stack data and extracted at every well location; for example, Fig. 2 displays several seismic attributes at AI-2. Inverted impedance from seismic inversion is also used for comparison with the GP results. After the well-to-seismic tie, the log data are low-pass filtered with a 100 Hz cut-off frequency and upscaled by mean averaging so that they share the frequency content and sampling of the seismic data. The training input is then generated by merging the log and seismic data at the same scale. The model is validated with two tests: the first uses 20% of the training dataset, and the second uses one well, interchanged in turn, as a blind well that is excluded completely from the training input. Because a different well is excluded each time, the number of training points ranges from 1300 to 1500.
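
Two of the data-preparation steps above, mean-average upscaling of a log to the seismic sampling and holding out one well as the blind test, can be sketched as follows. The helper names, the 0.5 ms log sampling, and the 4 ms seismic sampling are hypothetical; the 100 Hz low-pass filter is not reproduced here.

```python
import numpy as np

def upscale_mean(t_log, log_values, t_seis, dt_seis):
    """Mean-average log samples inside each seismic sample window [t - dt/2, t + dt/2)."""
    t_log = np.asarray(t_log, dtype=float)
    log_values = np.asarray(log_values, dtype=float)
    upscaled = np.full(len(t_seis), np.nan)  # NaN where no log samples fall in the window
    for i, t in enumerate(t_seis):
        in_window = (t_log >= t - dt_seis / 2.0) & (t_log < t + dt_seis / 2.0)
        if in_window.any():
            upscaled[i] = log_values[in_window].mean()
    return upscaled

def blind_well_split(well_ids, blind_well):
    """Indices of training samples (all other wells) and blind-test samples (one well)."""
    well_ids = np.asarray(well_ids)
    is_blind = well_ids == blind_well
    return np.flatnonzero(~is_blind), np.flatnonzero(is_blind)

# Hypothetical example: porosity log every 0.5 ms, seismic sampled every 4 ms (TWT)
t_log = np.arange(1400.0, 1420.0, 0.5)
phi_log = np.linspace(0.15, 0.30, t_log.size)
t_seis = np.arange(1402.0, 1418.0, 4.0)
phi_upscaled = upscale_mean(t_log, phi_log, t_seis, dt_seis=4.0)

train_idx, blind_idx = blind_well_split(["AI-1", "AI-2", "AI-1", "AI-5"], blind_well="AI-5")
```

Looping `blind_well_split` over every well name gives the interchangeable blind-well scheme described in the text.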

Although many features can be generated, features with low correlation to the target degrade model performance, so irrelevant attributes must be filtered out as part of the training process. Features are selected with Recursive Feature Elimination using a Random Forest estimator (RFE-RF), implemented with the open-source Scikit-learn library (Pedregosa et al. 2011). Random Forest is robust for feature selection even when there are many variables (Li et al. 2012), while RFE ranks the features and eliminates those unrelated to porosity. The combination of RFE and RF performs well in selecting features for machine learning models (Chen et al. 2020; Li et al. 2012). The study uses the Pearson correlation coefficient (R) as the metric score,

\[ R = \frac{\sum_{i=1}^{N}\left(x_i - \widehat{x}\right)\left(y_i - \widehat{y}\right)}{\sqrt{\sum_{i=1}^{N}\left(x_i - \widehat{x}\right)^{2}}\sqrt{\sum_{i=1}^{N}\left(y_i - \widehat{y}\right)^{2}}} \]

where \({x}_{i}\) is the predicted value and \({y}_{i}\) the true value at sample point i, \(\widehat{x}\) and \(\widehat{y}\) are the means of the predicted and true values, respectively, and N is the number of samples. The R-score measures the linear correlation between the predicted and true values: its maximum of 1 indicates a perfect positive linear correlation, −1 a perfect negative linear correlation, and 0 no linear correlation. Because GP produces both a mean and a standard deviation, the Mean Standardized Log Loss (MSLL) can also be used to evaluate the model. MSLL measures the error between the prediction and the target while taking the predicted standard deviation into account (Rasmussen and Williams 2006), so it combines accuracy and precision to assess the model's reliability. MSLL is defined as follows,

where \({\sigma }_{i}\) is the predicted standard deviation at point i and N is the number of data points. Lower MSLL values indicate a more accurate and precise prediction, and vice versa.
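
Both metrics can be sketched in a few lines. The MSLL below follows the definition in Rasmussen and Williams (2006): the negative log predictive density at each test point minus that of a trivial Gaussian model with the training mean and variance, averaged over the N points. This is an assumption about the exact form used in the study, which may, for example, omit the trivial-model term.

```python
import numpy as np

def pearson_r(pred, true):
    """Pearson correlation coefficient R between predicted and true values."""
    pred = np.asarray(pred, dtype=float)
    true = np.asarray(true, dtype=float)
    xc = pred - pred.mean()
    yc = true - true.mean()
    return np.sum(xc * yc) / np.sqrt(np.sum(xc**2) * np.sum(yc**2))

def msll(true, pred_mean, pred_std, y_train):
    """Mean standardized log loss: model log loss minus trivial-model log loss, averaged."""
    true = np.asarray(true, dtype=float)
    var = np.asarray(pred_std, dtype=float) ** 2
    sll_model = 0.5 * np.log(2.0 * np.pi * var) + (true - pred_mean) ** 2 / (2.0 * var)
    m0, v0 = np.mean(y_train), np.var(y_train)  # trivial model: training mean and variance
    sll_trivial = 0.5 * np.log(2.0 * np.pi * v0) + (true - m0) ** 2 / (2.0 * v0)
    return np.mean(sll_model - sll_trivial)
```

Under this definition, a model no better than predicting the training mean scores about 0, and accurate, confident predictions score negative.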

Gaussian process training result

Initially, AI-2 is excluded from the training process, and the remaining data are split into 80% for training and 20% for testing. The training input consists of multiple statistical attributes of the full-stack seismic data, and an enveloped version of each attribute is also included, which increases the number of features to 33. RFE-RF then filters the features by their importance to the target to optimize model accuracy (Chen et al. 2020; Li et al. 2012). The RFE-RF results show that the R-score does not increase significantly beyond 11 attributes (Fig. 3); hence, these 11 attributes are selected, as listed in Fig. 4.
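
A minimal RFE-RF sketch with scikit-learn's `RFE` and `RandomForestRegressor` is shown below. The data are synthetic: eight hypothetical attributes, of which only the first two are informative, and the selector keeps 2 features instead of the study's 11. The tree count and random seeds are arbitrary choices.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.feature_selection import RFE

# Synthetic stand-in for the attribute table: 8 hypothetical attributes, of which only
# columns 0 and 1 carry information about the porosity-like target.
rng = np.random.default_rng(42)
n_samples, n_attributes = 300, 8
X = rng.standard_normal((n_samples, n_attributes))
y = 0.2 + 0.05 * X[:, 0] - 0.03 * X[:, 1] ** 2 + 0.005 * rng.standard_normal(n_samples)

# RFE repeatedly fits the random forest and drops the attribute with the lowest
# impurity-based importance (step=1) until n_features_to_select remain.
rf = RandomForestRegressor(n_estimators=100, random_state=0)
selector = RFE(estimator=rf, n_features_to_select=2, step=1)
selector.fit(X, y)

selected = np.flatnonzero(selector.support_)
print("selected attribute indices:", selected)
print("ranking (1 = kept, larger = eliminated earlier):", selector.ranking_)
```

To reproduce a score-versus-number-of-attributes curve like Fig. 3, one could repeat this for each `n_features_to_select` and score a held-out set, or use `sklearn.feature_selection.RFECV`.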

In GP, hyperparameter tuning starts with selecting a suitable kernel for the target. Using the selected features, the candidate kernels are therefore compared with 10-fold cross-validation, which also determines suitable hyperparameters for each kernel. The initially proposed kernels are Rational Quadratic (RQ), Matern, and Exponential. To address the high non-linearity between the seismic attributes and porosity, non-stationary kernels are also proposed, formed by multiplying each of these three kernels by a Linear kernel. Each GP model is then scored on the test and blind-test data. Figure 5 shows that only three of the six proposed kernels predict the training and test datasets well, achieving training and test scores above 80%. The best two kernels are then used to predict the blind well, as shown in Fig. 6. A large discrepancy appears within the reservoir interval, indicating that at those points the model could not recognize the patterns from the training input it was given. To test the robustness of the model with the same input features and kernel, the blind-test score is computed while interchanging the excluded well. Figure 7 displays the blind-test score for each interchanged blind well; the results show that the model achieves blind-test scores above 60% and MSLL below 3.5%.
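
The kernel comparison can be sketched with scikit-learn's `GaussianProcessRegressor`. In this sketch the Exponential kernel is `Matern(nu=0.5)`, the Linear kernel is `DotProduct`, observation noise is modelled with `WhiteKernel`, and each candidate is scored by the Pearson R of its out-of-fold predictions. The synthetic data and initial hyperparameter values are assumptions, not the study's configuration.

```python
import warnings

import numpy as np
from sklearn.exceptions import ConvergenceWarning
from sklearn.gaussian_process import GaussianProcessRegressor
from sklearn.gaussian_process.kernels import (
    ConstantKernel, DotProduct, Matern, RationalQuadratic, WhiteKernel)
from sklearn.model_selection import KFold, cross_val_predict

warnings.filterwarnings("ignore", category=ConvergenceWarning)  # hyperparameters hitting bounds

# Synthetic stand-in for the selected attributes (X) and porosity (y)
rng = np.random.default_rng(1)
X = rng.uniform(-2.0, 2.0, size=(120, 3))
y = np.sin(X[:, 0]) + 0.5 * X[:, 1] + 0.05 * rng.standard_normal(120)

base_kernels = {
    "RQ": RationalQuadratic(length_scale=1.0, alpha=1.0),
    "Matern": Matern(length_scale=1.0, nu=1.5),
    "Exponential": Matern(length_scale=1.0, nu=0.5),  # Matern with nu = 1/2
}
candidates = {}
for name, k in base_kernels.items():
    candidates[name] = ConstantKernel(1.0) * k + WhiteKernel(0.01)
    # Product with a Linear (DotProduct) kernel gives a non-stationary kernel
    candidates[name + " x Linear"] = ConstantKernel(1.0) * k * DotProduct(sigma_0=1.0) + WhiteKernel(0.01)

cv = KFold(n_splits=10, shuffle=True, random_state=0)
scores = {}
for name, kernel in candidates.items():
    gp = GaussianProcessRegressor(kernel=kernel, normalize_y=True, random_state=0)
    y_cv = cross_val_predict(gp, X, y, cv=cv)   # out-of-fold predictions
    scores[name] = np.corrcoef(y_cv, y)[0, 1]   # Pearson R, the study's metric

for name, r in sorted(scores.items(), key=lambda item: -item[1]):
    print(f"{name:<22} R = {r:.3f}")
```

The hyperparameters of each kernel are fitted inside every fold by maximizing the log marginal likelihood, so the cross-validated score reflects both the kernel choice and its tuned parameters.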

Comparison of Gaussian process blind-test accuracy with other models

To validate the GP predictions, blind-test comparisons are also carried out with two other ML models. The first is XGBoost, a decision-tree algorithm based on gradient boosting that improves generalization and prediction accuracy compared with conventional gradient boosting (Chen and Guestrin 2016). It performs well and efficiently on regression problems, and several studies have applied it to petrophysical properties (Pan et al. 2022). The second model is the Support Vector Machine (SVM), which maps non-linear inputs into a high-dimensional feature space and constructs a linear decision surface there to predict the target (Cortes and Vapnik 1995). SVM was initially developed for classification, but it has also been applied to regression problems (Al-Anazi and Gates 2010; Kaymak and Kaymak 2022). Both models are trained with the same workflow and used to predict the blind well. Figure 8 and Table 2 display the porosity estimates and accuracy of each model on the blind-test dataset. All three models predict lower porosity in the reservoir interval. Because the three models produce similar results, this may be due to a limitation of the dataset, which consists only of post-stack time-migrated seismic data.
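
A leave-one-well-out comparison of this kind can be sketched with scikit-learn's `LeaveOneGroupOut`. To avoid an extra dependency, the sketch uses scikit-learn's `GradientBoostingRegressor` as a stand-in for XGBoost (`xgboost.XGBRegressor` could be swapped in if installed) and `SVR` for the SVM. The seven well names mirror the study, but the samples, targets, and model settings are hypothetical.

```python
import numpy as np
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.gaussian_process import GaussianProcessRegressor
from sklearn.gaussian_process.kernels import ConstantKernel, DotProduct, RationalQuadratic, WhiteKernel
from sklearn.model_selection import LeaveOneGroupOut
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVR

# Hypothetical data: 40 samples per well, 4 attributes, porosity-like target
rng = np.random.default_rng(7)
wells = np.repeat([f"AI-{i}" for i in range(1, 8)], 40)
X = rng.uniform(-2.0, 2.0, size=(wells.size, 4))
y = 0.2 + 0.04 * np.tanh(X[:, 0]) - 0.02 * X[:, 1] + 0.005 * rng.standard_normal(wells.size)

gp_kernel = ConstantKernel() * RationalQuadratic() * DotProduct() + WhiteKernel(1e-3)
models = {
    "GP (RQ x Linear)": make_pipeline(StandardScaler(), GaussianProcessRegressor(kernel=gp_kernel, normalize_y=True)),
    "SVR (RBF)": make_pipeline(StandardScaler(), SVR(C=1.0, epsilon=0.005)),
    "Gradient boosting": GradientBoostingRegressor(random_state=0),  # stand-in for XGBoost
}

results = {name: {} for name in models}
for train_idx, test_idx in LeaveOneGroupOut().split(X, y, groups=wells):
    blind = wells[test_idx][0]  # the well held out in this fold
    for name, model in models.items():
        model.fit(X[train_idx], y[train_idx])
        pred = model.predict(X[test_idx])
        results[name][blind] = np.corrcoef(pred, y[test_idx])[0, 1]

for name, per_well in results.items():
    print(f"{name:<18} mean blind-test R = {np.mean(list(per_well.values())):.3f}")
```

Each model is refitted from scratch in every fold, so no information from the blind well leaks into training.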

Seismic cube GP implementation and uncertainty zone generation

Based on the blind-well results, the model trained with AI-5 as the blind well is applied to the seismic cube, and maps of the mean and standard deviation of the predicted porosity in the I-X reservoir are generated. An inverted P-impedance map of the I-X reservoir is also generated with a model-based inversion algorithm for comparison with the GP results. Traditionally, a porosity map can be produced from a regression between inverted P-impedance and porosity within the reservoir interval; however, the error from the P-impedance inversion can then propagate into the predicted porosity. Figure 9 compares the I-X reservoir maps from the GP model and from the inverted P-impedance, overlain with the well locations.
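
Applying a trained GP to a seismic cube amounts to flattening the attribute cube into a sample-by-feature table, predicting the mean and standard deviation, and reshaping back. The sketch below predicts in chunks to bound memory, because each prediction builds a chunk-by-training-set covariance matrix. The helper `predict_cube`, the cube dimensions, and the small stand-in GP are hypothetical.

```python
import numpy as np
from sklearn.gaussian_process import GaussianProcessRegressor
from sklearn.gaussian_process.kernels import RBF, WhiteKernel

def predict_cube(model, cube, chunk=50_000):
    """cube: (n_inline, n_xline, n_time, n_features) -> mean and std cubes (n_inline, n_xline, n_time)."""
    grid_shape = cube.shape[:-1]
    flat = cube.reshape(-1, cube.shape[-1])  # one row per seismic sample
    mean = np.empty(flat.shape[0])
    std = np.empty(flat.shape[0])
    for start in range(0, flat.shape[0], chunk):
        stop = start + chunk
        mean[start:stop], std[start:stop] = model.predict(flat[start:stop], return_std=True)
    return mean.reshape(grid_shape), std.reshape(grid_shape)

# Small stand-in model trained on synthetic attributes
rng = np.random.default_rng(0)
X_train = rng.standard_normal((100, 3))
y_train = 0.2 + 0.03 * X_train[:, 0]
gp = GaussianProcessRegressor(kernel=RBF() + WhiteKernel(1e-4), normalize_y=True).fit(X_train, y_train)

cube = rng.standard_normal((10, 12, 25, 3))  # hypothetical inline x crossline x time x attribute
mean_cube, std_cube = predict_cube(gp, cube, chunk=1000)
```

Horizon maps such as those in Fig. 9 would then be extracted from `mean_cube` and `std_cube` along the interpreted I-X horizon.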

The predicted uncertainty is then analyzed further. A cross plot of the mean against the standard deviation of the GP predictions is generated to define uncertainty zones based on the quartiles of the standard deviation, which classify the uncertainty into three groups: low, medium, and high. This visualization and classification can reveal anomalies and serve as an additional attribute to help experts analyze the predictions (Kinkeldey et al. 2014). Figure 10 displays the cross plot used to define the uncertainty zones and the map resulting from the classification. An arbitrary line intersecting AI-5, a hydrocarbon production well, is then used to compare the inverted P-impedance, the predicted porosity, and the high-anomaly zone, as shown in Fig. 11, in which the reservoir is clearly delineated by low inverted P-impedance, high porosity, and high-anomaly zones.
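
The quartile-based zonation described above can be sketched as follows. The exact class boundaries are an assumption: here, samples below the first quartile (Q1) of the standard deviation are "low", those above the third quartile (Q3) are "high", and the rest are "medium"; NaN samples (e.g., outside the mapped horizon) are marked undefined. The input map is hypothetical.

```python
import numpy as np

LOW, MEDIUM, HIGH, UNDEFINED = 0, 1, 2, -1

def classify_uncertainty(std):
    """Assign each sample to a low/medium/high uncertainty zone from the quartiles of std."""
    std = np.asarray(std, dtype=float)
    q1, q3 = np.nanpercentile(std, [25, 75])
    zones = np.full(std.shape, MEDIUM, dtype=int)
    zones[std < q1] = LOW
    zones[std > q3] = HIGH
    zones[np.isnan(std)] = UNDEFINED  # e.g. samples outside the mapped horizon
    return zones, (q1, q3)

std_map = np.arange(1.0, 101.0).reshape(10, 10)  # hypothetical standard-deviation map
zones, (q1, q3) = classify_uncertainty(std_map)
```

The resulting integer map can be displayed directly as the new anomaly attribute, or cross-plotted against the mean porosity as in Fig. 10.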

Fig. 9 I-X horizon comparison of (a) inverted P-impedance from inverted post-stack seismic data, (b) porosity mean prediction from GP, (c) standard deviation from GP, and (d) porosity estimated from inverted P-impedance. The I-X reservoir is associated with deltaic lobes and stacked channels (Ghosh et al. 2010; Reilly et al. 2008)

Discussion

The discussion is divided into two parts: the GP performance for porosity prediction in the blind well, and the interpretation of uncertainty in the seismic cube alongside the comparison with the inversion result.

Gaussian process performance for porosity prediction in the blind well

Two kernels, Matern and the product of the Rational Quadratic (RQ) and Linear kernels, are selected because their training and test scores exceed 80%. However, the Matern kernel fails to characterize the uncertainty in the shallow and deep parts of the blind well, as shown in Fig. 6, whereas the product of the RQ and Linear kernels, a non-stationary kernel, predicts the entire section. The Linear kernel depends on the input values themselves rather than only on their differences, so its product with the stationary RQ kernel is non-stationary and more flexible than a stationary kernel alone (Rasmussen and Williams 2006). Combining the two kernels allows the model to predict and characterize the uncertainty over the whole blind well. This result shows why model performance must be tested both on a partition of the training dataset and on a blind well to ensure reliability and robustness.
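
The difference between a stationary and a non-stationary kernel can be checked numerically: evaluate each kernel for pairs of inputs with the same separation at different locations. The sketch assumes unit hyperparameters for illustration only.

```python
import numpy as np

def rq(x, x_prime, length_scale=1.0, alpha=1.0):
    """Rational quadratic kernel: depends only on the separation x - x'."""
    return (1.0 + (x - x_prime) ** 2 / (2.0 * alpha * length_scale**2)) ** (-alpha)

def linear(x, x_prime, sigma0=1.0):
    """Linear kernel: depends on the input values themselves."""
    return sigma0**2 + x * x_prime

def rq_times_linear(x, x_prime):
    """Product kernel, analogous to the RQ x Linear combination selected in the study."""
    return rq(x, x_prime) * linear(x, x_prime)

d = 0.5  # same separation at every location
for x in (0.0, 2.0, 4.0):
    print(f"x = {x}: RQ = {rq(x, x + d):.3f}, RQ x Linear = {rq_times_linear(x, x + d):.3f}")
```

With these settings the RQ covariance stays at about 0.889 for every location, while the product kernel grows from about 0.889 to about 16.9, so the product can represent relationships whose behaviour changes across the input space.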

The robustness of the GP with the product of the RQ and Linear kernels is validated in two scenarios: interchanging the blind well and comparing it with other machine learning models. When the blind well is interchanged, the GP shows stable performance with the selected kernel. The GP also performs better than the other machine learning models, with the highest score on the test dataset and a blind-test score exceeding 70%. These outcomes of GP with a non-stationary kernel demonstrate its first implementation and its advantage in estimating porosity from seismic data.

The uncertainty interpretation of porosity estimation on 2D and 3D sections

The continuity of the high-porosity I-X reservoir, with values above 0.23, can be observed across multiple wells, accompanied by an associated level of uncertainty. Furthermore, the predicted porosity and the inverted P-impedance show a strong correlation and structural relationship, as depicted in Fig. 9. Both maps show structures associated with deltaic lobes and stacked channels, in accordance with prior studies of reservoir connectivity in this area (Ghosh et al. 2010; Reilly et al. 2008). Two reservoir locations with high porosity, shown together with their standard deviation, and low impedance are identified in the north-western and northern parts of the map. In Fig. 9c, the standard deviation acts as a precision value that can be added to or subtracted from the mean prediction, giving experts a parameter for evaluating the range of reservoir porosity.
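
Adding and subtracting the standard deviation from the mean gives a porosity range at each location. The sketch below uses hypothetical map values. If the predictive distribution is treated as Gaussian, k = 1 gives roughly a 68% interval and k = 1.96 roughly a 95% interval.

```python
import numpy as np

def porosity_range(mean, std, k=1.0):
    """Return (lower, upper) = mean -/+ k * std for each location."""
    mean = np.asarray(mean, dtype=float)
    std = np.asarray(std, dtype=float)
    return mean - k * std, mean + k * std

# Hypothetical map values: mean porosity and GP standard deviation at three locations
mean_map = np.array([0.25, 0.21, 0.18])
std_map = np.array([0.02, 0.03, 0.01])
low_1s, high_1s = porosity_range(mean_map, std_map, k=1.0)   # about 68% if Gaussian
low_95, high_95 = porosity_range(mean_map, std_map, k=1.96)  # about 95% if Gaussian
```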

Moreover, the uncertainty can help delineate and interpret the associated reservoir. The quartiles of the predicted standard deviation (Fig. 10a) generate a new attribute map, which shows that good-porosity reservoirs are associated with high anomalies (Fig. 10b). Furthermore, the 2D cross-section through AI-5 shows that high anomalies around the well are associated with high porosity from GP and low inverted P-impedance, as shown in Fig. 11. The new attribute thus provides additional information for experts to delineate anomalies in the reservoir. It should be emphasized that only post-stack data were used in this study; pre-stack data and AVO studies should also be included to increase confidence in the reservoir analysis.

However, a potential limitation of GP is that it can produce outcomes that exceed physical bounds. For example, a property may be constrained to certain values (such as a minimum porosity of 0% or a water saturation within 0–100%); in such cases, the predicted values and their uncertainty range may exceed these bounds. Several studies have proposed ways to constrain GP predictions by imposing such limitations (Gulian et al. 2022).
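
One way to gauge how often a Gaussian prediction violates physical bounds is to compute the predictive probability mass outside them, e.g. P(φ < 0) = Φ(−μ/σ). The sketch below does this for a porosity fraction bounded by 0 and 1. It only diagnoses the problem and does not implement the constrained GP approaches cited above. The example locations are hypothetical.

```python
import math

import numpy as np

_erf = np.vectorize(math.erf)

def normal_cdf(z):
    """Standard normal CDF, Phi(z) = 0.5 * (1 + erf(z / sqrt(2)))."""
    return 0.5 * (1.0 + _erf(np.asarray(z, dtype=float) / math.sqrt(2.0)))

def bound_violation_prob(mean, std, lower=0.0, upper=1.0):
    """Probability mass of a Gaussian prediction below `lower` and above `upper`."""
    mean = np.asarray(mean, dtype=float)
    std = np.asarray(std, dtype=float)
    p_below = normal_cdf((lower - mean) / std)
    p_above = 1.0 - normal_cdf((upper - mean) / std)
    return p_below, p_above

# Hypothetical low-porosity and high-porosity locations with the same uncertainty
mean = np.array([0.02, 0.25])
std = np.array([0.02, 0.02])
p_below, p_above = bound_violation_prob(mean, std)
```

For the first location, about 16% of the predictive mass (Φ(−1) ≈ 0.159) falls below zero porosity, even though the mean itself is physically valid.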

Conclusion

In this study, we investigated the application of the Gaussian Process (GP) to predict porosity and its uncertainty directly from seismic data. The effectiveness of the GP was validated in two scenarios: interchanging the blind well, and comparing it with other machine learning models. The associated uncertainty, the GP standard deviation, is predicted simultaneously with the mean porosity and can serve as a quantitative measure of reliability to determine the range of predicted porosity at the reservoir location. Moreover, the standard deviation can be categorized by its quartiles to generate a new attribute that delineates anomaly zones. Areas of high anomaly are associated with low inverted P-impedance and high porosity, which can give experts insight into the reservoir character. However, the study used only post-stack seismic data, because pre-stack data were unavailable, and no Amplitude Versus Offset (AVO) study has yet been conducted. Future research could address these limitations by using pre-stack data as input for the prediction and by conducting an AVO study to strengthen the anomaly delineation from the GP result.

Data availability

Datasets generated during the current study are available from the corresponding author on reasonable request.

References

Abdar M, Pourpanah F, Hussain S, Rezazadegan D, Liu L, Ghavamzadeh M, Fieguth PW, Cao X, Khosravi A, Acharya UR, Makarenkov V, Nahavandi S (2020) A review of uncertainty quantification in deep learning: techniques, applications and challenges. Inf Fusion 76:243–297

Al-Anazi AF, Gates ID (2010) Support vector regression for porosity prediction in a heterogeneous reservoir: a comparative study. Comput Geosci 36(12):1494–1503. https://doi.org/10.1016/j.cageo.2010.03.022

Anandaroop R, David M (2019) Bayesian geophysical inversion with gaussian process machine learning and Trans-D Markov Chain Monte Carlo. ASEG Ext Abstracts 2019(1):1–5. https://doi.org/10.1080/22020586.2019.12072961

Basha N, Kravaris C, Nounou H, Nounou M (2023) Bayesian-optimized Gaussian process-based fault classification in industrial processes. Comput Chem Eng 170:108126. https://doi.org/10.1016/j.compchemeng.2022.108126

Bosch M, Mukerji T, Gonzalez EF (2010) Seismic inversion for reservoir properties combining statistical rock physics and geostatistics: A review. Geophysics 75(5):75A165-75A176. https://doi.org/10.1190/1.3478209

Chen T, Guestrin C (2016) XGBoost: a scalable tree boosting system. In: Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pp 785–794

Chen R-C, Dewi C, Huang S-W, Rezzy EC (2020) Selecting critical features for data classification based on machine learning methods. J Big Data 7(1):52. https://doi.org/10.1186/s40537-020-00327-4

Cortes C, Vapnik VN (1995) Support-vector networks. Mach Learn 20:273–297

Din NU, Hongbing Z (2020) Porosity prediction from model-based seismic inversion by using probabilistic neural network (PNN) in Mehar Block, Pakistan. Episodes 43(4):935–946. https://doi.org/10.18814/epiiugs/2020/020055

Feng R (2023) Physics-informed deep learning for rock physical inversion and its uncertainty analysis. Geoenergy Sci Eng 230:212229. https://doi.org/10.1016/j.geoen.2023.212229

Feng R, Grana D, Balling N (2021) Uncertainty quantification in fault detection using convolutional neural networks. Geophysics 86(3):M41–M48. https://doi.org/10.1190/geo2020-0424.1

Feng R, Hansen TM, Grana D, Balling N (2020) An unsupervised deep-learning method for porosity estimation based on poststack seismic data. Geophysics 85(6):M97–M105. https://doi.org/10.1190/geo2020-0121.1

Ghosh DP, Abdul Halim MF, Brewer M, Vernato B, Darman N (2010) Geophysical issues and challenges in malay and adjacent basins from an E & P perspective. Lead Edge 29(4):436–449. https://doi.org/10.1190/1.3378307

Gulian M, Frankel A, Swiler L (2022) Gaussian process regression constrained by boundary value problems. Comput Methods Appl Mech Eng 388:114117. https://doi.org/10.1016/j.cma.2021.114117

Hossain TM, Hermana M, Jaya MS, Sakai H, Abdulkadir SJ (2022) Uncertainty quantification in classifying complex geological facies using bayesian deep learning. IEEE Access 10:113767–113777. https://doi.org/10.1109/ACCESS.2022.3218331

Jessell L, de Kemp E, Lindsay M, Wellmann F, Hillier M, Laurent G, Carmichael T, Martin R, Aillères M (2015) Geological uncertainty and geophysical inversion. Geotectonic Res 97(1):141. https://doi.org/10.1127/1864-5658/2015-62

Kaymak ÖÖ, Kaymak Y (2022) Prediction of crude oil prices in COVID-19 outbreak using real data. Chaos Solitons Fractals 158:111990. https://doi.org/10.1016/j.chaos.2022.111990

Kinkeldey C, MacEachren AM, Schiewe J (2014) How to assess visual communication of uncertainty? A systematic review of geospatial uncertainty visualisation user studies. Cartographic J 51(4):372–386. https://doi.org/10.1179/1743277414Y.0000000099

Li Y, Xia J, Zhang S, Ai JYX (2012) An efficient intrusion detection system based on support Vector machines and gradually feature removal method. Expert Syst Appl 39(1):424–430. https://doi.org/10.1016/j.eswa.2011.07.032

Mahdaviara M, Rostami A, Keivanimehr F (2021a) Accurate determination of permeability in Carbonate reservoirs using gaussian process regression. J Petrol Sci Eng 196:107807. https://doi.org/10.1016/j.petrol.2020.107807

Mahdaviara M, Rostami A, Keivanimehr F, Shahbazi K (2021b) Accurate determination of permeability in carbonate reservoirs using Gaussian process regression. J Petrol Sci Eng 196:107807. https://doi.org/10.1016/j.petrol.2020.107807

Marfurt KJ, Kirlin RL (2001) Narrow-band spectral analysis and thin-bed tuning. Geophysics 66(4):1274–1283. https://doi.org/10.1190/1.1487075

Maurya SP, Singh NP, Singh KH (2020) Geostatistical Inversion. Seismic inversion methods: a practical approach. Springer International Publishing, Cham, pp 177–216

Menke W, Creel R (2021) Gaussian process regression reviewed in the Context of Inverse Theory. Surv Geophys 42(3):473–503. https://doi.org/10.1007/s10712-021-09640-w

Montoya P, Hoefner M (2022) Earth modeling applied to carbon capture and storage at LaBarge field, Wyoming. In: Second International Meeting for Applied Geoscience & Energy (IMAGE 2022), SEG/AAPG, pp 457–461. https://doi.org/10.1190/IMAGE2022-3748408.1

Pan S, Zheng Z, Guo Z, Luo H (2022) An optimized XGBoost method for predicting reservoir porosity using petrophysical logs. J Petrol Sci Eng 208:109520. https://doi.org/10.1016/j.petrol.2021.109520

Pedregosa F, Varoquaux G, Gramfort A, Michel V et al (2011) Scikit-learn: machine learning in Python. J Mach Learn Res 12:2825–2830

Pradhan A, Mukerji T (2018) Seismic estimation of reservoir properties with Bayesian Evidential Analysis. SEG Tech Program Expand Abstr 3166–3170. https://doi.org/10.1190/segam2018-2998259.1

Pradhan A, Adams KH, Chandrasekaran V, Liu Z, Reager JT, Stuart AM, Turmon MJ (2023) Modeling groundwater levels in California’s Central Valley by hierarchical Gaussian process and neural network regression. arXiv preprint arXiv:2310.14555. https://doi.org/10.48550/arXiv.2310.14555

Rasmussen CE, Williams CKI (2006) Gaussian processes for machine learning. MIT Press

Reilly JM, Pitcher D (2008) SEG applied research workshop: geophysical challenges in Southeast Asia exploration. Lead Edge 27(10):1282–1299. https://doi.org/10.1190/1.2996539

Rui J, Zhang H, Ren Q, Yan L, Guo Q, Zhang D (2020) TOC content prediction based on a combined Gaussian process regression model. Mar Pet Geol 118:104429. https://doi.org/10.1016/j.marpetgeo.2020.104429

Seeger M (2004) Gaussian processes for machine learning. Int J Neural Syst 14(2):69–106. https://doi.org/10.1142/s0129065704001899

Wang Z, Yin C, Lei X, Gu F, Gao J (2015) Joint rough sets and Karhunen-Loève transform approach to seismic attribute selection for porosity prediction in a Chinese sandstone reservoir. Interpretation 3(4):SAE19–SAE28. https://doi.org/10.1190/INT-2014-0268.1

Wood DA, Choubineh A (2020) Transparent machine learning provides insightful estimates of natural gas density based on pressure, temperature and compositional variables. J Nat Gas Geosci 5:33–43

Zahmatkesh I, Kadkhodaie A, Soleimani B, Golalzadeh A, Azarpour M (2018) Estimating Vsand and reservoir properties from seismic attributes and acoustic impedance inversion: a case study from the Mansuri oilfield, SW Iran. J Petrol Sci Eng 161:259–274. https://doi.org/10.1016/j.petrol.2017.11.060

Zou C, Zhao L, Xu M, Chen Y, Geng J (2021) Porosity prediction with uncertainty quantification from multiple seismic attributes using random forest. J Geophys Res Solid Earth 126(7). https://doi.org/10.1029/2021JB021826

Acknowledgements

The authors would like to thank PETRONAS Malaysia and the Centre for Subsurface Imaging (CSI) for providing the data for this study. We also thank our colleagues at the Centre for Subsurface Imaging and the Geoscience Department, Universiti Teknologi PETRONAS (UTP), for their support throughout the project. We are grateful for the UTP fundamental research grant (cost centre 15MD0-077) that funded this research. We acknowledge CGG for providing the Hampson Russell software licence, the Scikit-learn project for its open-source Python modules, and Schlumberger for providing the Petrel software licence.

Funding

This research was funded by the UTP fundamental research grant with grant number 15MD0-077.

Author information

Contributions

M.H.R.P. and M.H. carried out the conceptualization, with validation by M.H.R.P., T.M.H., I.B.S.Y. and M.F.A. M.H.R.P. wrote the main manuscript text under the supervision of M.H. and S.J.A.K. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Communicated by: H. Babaie

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Rahma Putra, M.H., Hermana, M., Yogi, I.B.S. et al. Reservoir porosity assessment and anomaly identification from seismic attributes using Gaussian process machine learning. Earth Sci Inform 17, 1315–1327 (2024). https://doi.org/10.1007/s12145-024-01240-7

DOI: https://doi.org/10.1007/s12145-024-01240-7