Abstract

Challenges of modern living, such as the burden of disease, the global COVID-19 pandemic, and workplace stress leading to anxiety and depression, raise the importance of psychological resilience. Psychological interventions should increase trait resilience, which involves reinforcing state resilience and requires a clear distinction between state and trait aspects of the construct. Generalizability theory (G-theory) is an appropriate and increasingly used method to distinguish between state and trait and to establish the reliability of psychological assessment. G-theory was applied to examine five major resilience scales completed at three time points by a sample (n = 94) that provides adequate statistical power for such analyses. All five resilience scales demonstrated strong reliability and generalizability of scores across occasions and the sample population, as expected for a valid trait measure (G > 0.90). However, eight state aspects of resilience were identified across the five resilience scales: adaptation to change; perseverance; self-confidence while facing adversity; self-efficacy; trust in instincts; ability to follow plans; non-reactivity; and ability to plan. State aspects of resilience appear to show more variability and, pending further research, could potentially be a target for resilience-building interventions. All five measures of resilience are useful to assess long-lasting changes in resilience. Development of a state resilience scale is warranted.


The modern world presents a plethora of challenges, such as the burden of disease (e.g., the COVID-19 pandemic), workplace stress, burnout, and negative environmental effects. These factors have been linked to anxiety and depression, heightening the importance of the resilience concept across various areas of psychology (Heinz et al., 2018). Psychological resilience is generally defined as the ability to recover from difficult circumstances or challenging life events. It is proposed as an effective tool to mitigate the negative effects of stressors leading to anxiety and depression (Dantzer et al., 2018; Sheerin et al., 2018; Stewart & Yuen, 2011; McCraty & Atkinson, 2012).

Resilience is posited to function as a protective ‘buffer’ against the impact of adverse childhood experiences that can lead to adult depression (Poole et al., 2017a, b). It has also been found to be negatively associated with suicidal ideation (Kim et al., 2020). Despite its potential, current resilience-building interventions have yielded inconsistent results. A recent study found that one-third of university students experienced anxiety or depression that affected their daily functioning. Although students who underwent a short resilience-building program showed reductions of depression and perceived stress, the program had a negligible effect on resilience levels and even resulted in a decrease in resilience scores from pre- to post-training (Akeman et al., 2019). A meta-analysis of workplace-based resilience-building programs found an overall modest effect size, which decreased significantly over a one-month period post-intervention (Vanhove et al., 2016). Another meta-analysis found only small to moderate effect sizes, with only four of eleven identified studies yielding statistically significant results when control conditions were present (Joyce et al., 2018).

Further issues in resilience research lie in the current conceptualizations of measuring trait and state resilience. Trait resilience refers to a person’s relatively stable resilience over time, akin to a resilient personality type (Spielberger, 1983). On the other hand, state resilience is a dynamic and changing form of resilience. It emerges from the interaction between individual trait resilience features and occasion-specific factors. Importantly, state resilience does not include the interaction between person, item, and occasion, as such interaction represents an error variance due to changes in understanding an item due to situational factors (Medvedev et al., 2017). These complexities necessitate ongoing research to better understand and measure these facets of resilience.

The Whole-Trait Theory (Fleeson & Jayawickreme, 2015) and the TESSERA framework (Wrzus & Roberts, 2017) propose that states manifest as an interaction of traits with specific situations. Therefore, if the experience of a state is frequently repeated over time, it can result in trait changes. Accordingly, interventions aiming at trait changes should target states in the first place, enabling individuals to experience relevant states more often and in a diverse set of situations, such that these transitory changes in thoughts, emotions, and behaviours ultimately produce enduring (trait) changes over time (Miller et al., 2021). Thus, research aiming to enhance the effectiveness of interventions should try to understand the causes of state variations. Items in a psychometric measure reflect different aspects of the construct being measured (e.g., resilience), and investigating the (state) variability of different items over time can provide meaningful information about which aspects of a construct are the most dynamic (Medvedev et al., 2021). Research shows that trait aspects of a construct are resistant to change, while the more dynamic aspects reflected by state items are easier to influence by means of an intervention and should be the primary target of such interventions (Arterberry et al., 2014; Medvedev et al., 2017). Consequently, it is important to distinguish between state and trait aspects of a construct, which are reflected by the assessment items operationalising the construct under investigation.

The most common method of distinguishing between state and trait features utilizes test-retest reliability and question wording (STAI; Gaudry et al., 1975). Conceptually, items and instructions should be worded to refer to the present in order to assess state features, and to overall tendencies in order to assess trait features. Spielberger (1983) proposed that a test-retest correlation below 0.60 indicates a state measure, while a correlation of 0.70 and above indicates a trait scale. Problems with this style of assessment were shown in Barnes et al.’s (2002) reliability investigation of the State-Trait Anxiety Inventory (STAI; Gaudry et al., 1975), which found test-retest correlations ranging from 0.82 to 0.94 for trait anxiety and from 0.34 to 0.96 for state anxiety. The higher upper bound for state anxiety contradicts the prescribed criteria for state and trait distinction. Thus, more psychometric work is needed to establish the overall reliability of measuring resilience, identify sources of measurement error, and distinguish between state and trait aspects of resilience using an appropriate methodology such as Generalizability theory (G-theory; Cronbach et al., 1963, 1972; Bloch & Norman, 2012; Medvedev et al., 2017).

G-theory, a robust statistical methodology pioneered by Cronbach et al. (1963, 1972), offers a comprehensive analysis of the reliability and generalizability of assessment scores obtained from psychometric scales. It also identifies specific error sources potentially influencing measurements. This method extends Classical Test Theory (CTT) by using ANOVA to quantify individual error sources, which in CTT would be collectively accounted for as a single overall error estimate. The CTT approach can be likened to the historical use of garbage containers, where people discarded everything they no longer wished to keep, including potentially recyclable items. The CTT model of X = T + E posits that the observed score (X) consists of the true score (T) and the error (E) (Brennan, 2010). In this model, all error sources are included within E, rendering the specific error sources and their contributions to E indiscernible.

G-theory operates in a manner analogous to modern recycling bins, which segregate waste into categories based on its source, such as plastic, metals, paper, biological and general waste, recognizing that many of these types of waste are indeed useful. Similarly, G-theory quantifies the unique contribution of different error sources to the overall error, which can be instrumental in enhancing the reliability of assessments. This is achieved through a model that can be expressed as:

\[E={E}_{P}+{E}_{I}+{E}_{O}+{E}_{P\times I}+{E}_{P\times O}+{E}_{P\times I\times O}\]

Where: \({E}_{P}\) = error attributed to person; \({E}_{I}\) = error attributed to item; \({E}_{O}\) = error attributed to occasion; \({E}_{P\times I}\) = error attributed to the interaction of person and item; \({E}_{P\times O}\) = error attributed to the interaction of person and occasion; \({E}_{P\times I\times O}\) = error attributed to the interaction of person, item, and occasion (Medvedev et al., 2017).

G-theory can also be used to reliably distinguish between trait and state aspects of a psychological construct such as resilience (Medvedev et al., 2017). Usually, interactions between an individual’s trait and assessment occasions represent an individual’s state, which is a dynamic aspect associated with a construct (Medvedev & Siegert, 2022). Formulas to estimate state and trait component indices using G-theory were proposed by Medvedev et al. (2017). Although there are statistically equivalent approaches to disentangling state and trait components, such as latent state-trait theory (Steyer et al., 1999; Vispoel et al., 2021), such methods were not specifically developed to examine the overall reliability and generalisability of assessment scores across various populations and contexts (Medvedev & Siegert, 2022). Therefore, G-theory is increasingly applied across disciplines to evaluate and enhance the overall reliability of psychometric instruments, to quantify sources of measurement error, and to distinguish between state and trait (Kumar et al., 2022; Medvedev et al., 2021).

G-theory analysis typically begins with a Generalizability (G)-study, which centers around assessing the aggregate reliability at the primary scale-level (Cronbach et al., 1972; Brennan, 2010). The principal aim of the G-study is to compute the variance components ascribed to the various sources of variability within the scale, such as the person, item, occasion, and the interactions amongst them. The main outcome of the G-study is the calculation of the individual variance components and their standard errors, one for the object of measurement and one for each facet in the design, along with one for the residual. Generalizability coefficients (G-coefficients) are derived in a subsequent phase, the Decision (D)-study, and based on variance components that were estimated in the G-study.

The D-study follows the G-study and conducts further experimentation with measurement designs to optimize overall reliability of the assessment. It includes analyzing the variance component estimates obtained from the G-study, answering ‘what if’ questions about how the magnitude of the error variances and G-coefficients would vary under changes to the number of levels in any facets (such as items, raters, or occasions) or alterations to the structure of the design. As a result, it calculates absolute and relative error variances, and the G-coefficients. A G-coefficient of 0.80 or above signifies high generalizability of assessment scores, reflecting a reliable trait measure (Cronbach et al., 1972; Brennan, 2001, 2010; Medvedev et al., 2018a, b).

However, such aspects concerning ‘what if’ scenarios or structural changes to the design are not relevant to the current study. In our case, the D-study might involve the reduction of item numbers to improve the psychometric properties of a measure. Importantly, it can be conducted at the individual item level, thereby providing insights into which items best reflect trait or state resilience. This distinction between the G-study and D-study is in line with the descriptions provided by Cronbach et al. (1972) and does not conflict with the understanding imparted by Bloch and Norman (2012).

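A state component index (SCI) can be calculated from the G-study variance components using the following formula (Medvedev et al., 2017):

\[SCI=\frac{{\sigma }_{po}^{2}}{{\sigma }_{p}^{2}+{\sigma }_{po}^{2}}\]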

A trait component index (TCI) is inversely related to the SCI and can be computed using the following formula (Medvedev et al., 2017):

\[TCI=\frac{{\sigma }_{p}^{2}}{{\sigma }_{p}^{2}+{\sigma }_{po}^{2}}\]

Where \({\sigma }_{po}^{2}\) is the person x occasion interaction variance, representing a state, and \({\sigma }_{p}^{2}\) is the true person variance, representing a trait. The SCI and TCI indicate how strongly each scale or item measures state or trait resilience. In this study, we chose a cut-off value of 0.60 for both SCI and TCI, as it represents a conservative and defensible criterion that requires at least 60% of the variance to be explained by either state or trait. This threshold increases confidence in the classification of items as predominantly measuring state or trait resilience. It is important to note that, in addition to the SCI and TCI values, the content of the item should also be considered when distinguishing between state and trait items. G-theory is increasingly used across disciplines such as medicine, psychology, and education to examine the overall reliability and generalizability of assessment scores, to identify sources of measurement error (Bloch & Norman, 2012; Medvedev et al., 2018a, b), and to distinguish between state and trait (Lyndon et al., 2020; Truong et al., 2020).
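The two indices and the 0.60 cut-off described above can be illustrated with a minimal Python sketch. The function names, the truncation of negative variance estimates to zero, the "mixed" label, and the example values are assumptions made for illustration; they are not taken from the study or from EduG.

```python
def state_trait_indices(var_p, var_po):
    """Return (SCI, TCI) from the person and person x occasion variance components."""
    # Assumption: negative ANOVA estimates are truncated to zero before use.
    var_p = max(var_p, 0.0)
    var_po = max(var_po, 0.0)
    total = var_p + var_po
    if total == 0:
        raise ValueError("both variance components are zero")
    sci = var_po / total  # share attributed to person x occasion (state)
    tci = var_p / total   # share attributed to person (trait)
    return sci, tci


def classify_item(var_p, var_po, cutoff=0.60):
    """Label an item 'state', 'trait', or 'mixed' using the 0.60 cut-off."""
    sci, tci = state_trait_indices(var_p, var_po)
    # "At least 60% of the variance" is read here as >= cutoff (assumption).
    if sci >= cutoff:
        return "state"
    if tci >= cutoff:
        return "trait"
    return "mixed"


# Hypothetical variance components, not values from the study.
print(state_trait_indices(1.0, 3.0))  # SCI = 0.75, TCI = 0.25
print(classify_item(1.0, 3.0))        # state
```

Because the two indices share a denominator, they always sum to 1, so an item cannot exceed the 0.60 cut-off on both at once. As noted above, item content should still be weighed alongside the numerical label.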

This study aimed to distinguish between state and trait resilience, identify sources of measurement error, and establish the overall generalizability of assessment scores in five widely used resilience measures by applying G-theory. Identifying stable and dynamic aspects of the resilience construct may add valuable knowledge to conceptualizations of resilience. While many studies have assessed both proximal and distal effects of resilience training, the scales used do not distinguish between state and trait in their assessment (Salisu & Hashim, 2017). If state and trait resilience can be distinguished with sufficient sensitivity, the proximal and distal effects of resilience training can be assessed more accurately. By identifying dynamic features of resilience, we would also be able to identify the factors that are most changeable. This knowledge can then be applied to design resilience-building interventions that target amenable features of resilience, thus increasing their efficacy. Overall, this study aimed to contribute to more accurate assessment of resilience in a wider context, including psychological interventions to enhance resilience.

Materials and methods

Study population

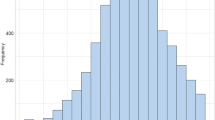

This study included 94 university students who completed an online questionnaire at three separate time points, with one-week intervals. Our sample size is adequate for this type of reliability research, as demonstrated by similar studies (Shoukri et al., 2004; Truong et al., 2020). The sample included 75 (80%) females and 19 (20%) males with a mean age of 27 years (SD = 9.47). The ethnic composition of the sample was as follows: New Zealand European 57 (60%), New Zealand Maori 13 (14%), Pacific Peoples 2 (2%), Asian 13 (14%), and Other 9 (10%). Student participants received course credit for research participation.

Procedure

This study was approved by the authors’ institutional ethics committee. Participants who wished to take part in the study completed online surveys at three time points in their own time. Informed consent was obtained from all participants as a part of completing the questionnaire. A one-week period between completion of one survey and distribution of the next was selected based on Spielberger’s (1983) findings that this timeframe is optimal for assessing temporal reliability and minimizing learning effects. Qualtrics XM was used to create and administer the online survey. The survey comprised the five resilience scales described in the Measures section below and collected demographic information such as age, sex, and ethnicity.

Measures

Connor-Davidson Resilience Scale (CD-RISC)

The CD-RISC is a 25-item unidimensional self-report scale designed to measure psychological resilience. The scale uses a five-point Likert response format from “Not true at all” = 0 to “True nearly all the time” = 4, with higher scores corresponding to higher levels of psychological resilience. The total score is calculated as the sum of individual item responses. The CD-RISC has a reported Cronbach’s alpha of 0.89–0.96 and test-retest reliability of 0.87 (Connor & Davidson, 2003; Ponce-Garcia et al., 2015).

The Resilience Scale (TRS)

The TRS is also a 25-item unidimensional self-report scale designed to measure psychological resilience. Original responses range from “Strongly disagree” = 1 to “Strongly agree” = 7. The total score for this scale is calculated as the sum of individual item responses. Reported Cronbach’s alpha for the TRS is 0.89–0.94 (Wagnild & Young, 1993). Reported test-retest reliability from a Dutch adaptation of this scale is 0.90 (Wagnild & Young, 1993; Ponce-Garcia et al., 2015). Test-retest reliability data were not available for the English version of this scale. To make the TRS compatible with the other scales used in this study, we used a uniform response format ranging from “Strongly disagree” = 1 to “Strongly agree” = 5 instead of the original 7-point response format.

Scale of Protective Factors (SPF)

The SPF is a 24-item unidimensional self-report scale. Original responses range from “Disagree completely” = 1 to “Agree completely” = 7. This scale measures the following facets of resilience: social skills, social support, goal efficacy, and planning and prioritizing behavior. Reported Cronbach’s alpha for the SPF is 0.91. No test-retest reliability values were available for this scale (Ponce-Garcia et al., 2015). To make the SPF compatible with the other scales used in this study, we used a uniform response format ranging from “Strongly disagree” = 1 to “Strongly agree” = 5 instead of the original 7-point response format.

Ego Resilience Scale (ER89)

The ER89 is a 14-item unidimensional self-report scale designed to measure trait resilience. Responses range from “Does not apply to me at all” = 1 to “Applies to me completely” = 5. Reported Cronbach’s alpha = 0.73–0.81 and test-retest reliability = 0.87 (Block & Kremen, 1996; Farkas & Orosz, 2015).

Brief Resilience Scale (BRS)

The BRS is a short, 6-item unidimensional self-report scale. Responses for the BRS range from “Strongly disagree” = 1 to “Strongly agree” = 5. Reported Cronbach’s alpha values for this scale range from 0.80 to 0.91, and test-retest reliability values range from 0.62 to 0.69 (Smith et al., 2008).

Psychometric properties, including citations, for all measures included in this study are summarised in Table 1.

Statistical analyses

Descriptive statistics were computed using IBM SPSS Statistics version 25. Generalizability analysis was conducted according to the guidelines described by Cardinet et al. (2009) and Medvedev et al. (2017), and analysis was carried out using EduG 6.1e software (Swiss Society for Research in Education Working Group, 2006).

Our research utilizes the generalizability theory framework, which seeks to explain the variance of observed raw scores, with the responses assumed to be on an interval scale. It is essential to recognize these assumptions, as they fundamentally underpin our analytical approach. While these assumptions could appear stringent, G-theory offers a robust and versatile structure for assessing the reliability of measurements across diverse conditions. This flexibility is particularly relevant to our study, given our focus on state and trait aspects of resilience.

A fully crossed p x I x o design was used in both the G study and the D study. Persons (p) and occasions (o) were classified as random effects, but items (I) were considered to be fixed because both the G study and the D study focused on the specific items existing in the five scales and there was no intent to make inferences about items beyond those included in the existing scales. Here, ‘persons’ refers to the participants in our study, and ‘occasions’ refers to the different time points at which measurements were taken. Both ‘persons’ and ‘occasions’ could theoretically have infinite levels and are thus modelled as random effects in our analysis. The facets were operationalized with person as the object of measurement or differentiation facet, which is not a source of error. Instrumentation factors were items and occasions (Cardinet et al., 2009). Individual states by nature, should vary across occasions as reflected by person-occasion interaction. Therefore, the error variance attributed to person x occasion interaction is reflective of a state component (Medvedev et al., 2017). The relative value of this interaction is also indicative of a scale’s sensitivity to measure individual states (Paterson et al., 2017). In our study, we computed G and D-study estimates based on formulas developed by Brennan (1992), which are rooted in repeated measures ANOVA.

Next, we utilized these variance component estimates in the D-study to calculate the generalizability coefficients Gr (relative generalizability) and Ga (absolute generalizability), the latter being equivalent to the index of dependability, denoted by Φ. These coefficients provide insights into the reliability of the measurement under varying conditions. The formulas for these calculations, also provided by Brennan (1992), are as follows:

\[{G}_{r}=\frac{{\sigma }_{p}^{2}}{{\sigma }_{p}^{2}+{\sigma }_{\delta }^{2}}\]

Here, \({\sigma }_{p}^{2}\) is the variance due to the object of measurement (persons), and \({\sigma }_{\delta }^{2}={\sigma }_{po}^{2}+{\sigma }_{pi}^{2}+{\sigma }_{pio}^{2}\) is the relative error variance, where \({\sigma }_{po}^{2}\), \({\sigma }_{pi}^{2}\), and \({\sigma }_{pio}^{2}\) are the person x occasion, person x item, and person x item x occasion interaction variance components, respectively. Ga accounts for the absolute error variance (\({\sigma }_{\Delta }^{2}={\sigma }_{o}^{2}+{\sigma }_{i}^{2}+{\sigma }_{io}^{2}+{\sigma }_{po}^{2}+{\sigma }_{pi}^{2}+{\sigma }_{pio}^{2}\)), which additionally includes the variance components of occasion (\({\sigma }_{o}^{2}\)), item (\({\sigma }_{i}^{2}\)), and item x occasion interaction (\({\sigma }_{io}^{2}\)) that influence an absolute measure (Cardinet et al., 2009):

\[{G}_{a}=\Phi =\frac{{\sigma }_{p}^{2}}{{\sigma }_{p}^{2}+{\sigma }_{\Delta }^{2}}\]

To refine our ANOVA estimates, we applied Whimbey’s correction using the formula ((N(f) − 1)/N(f)), where N(f) signifies the size of the “f” facet. It should be noted that this correction does not influence facets sampled from an infinite universe (e.g., persons).
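As a worked illustration of the size of this correction factor, the arithmetic below evaluates it for facet sizes equal to the item counts of the shortest and longest scales used here (6 items for the BRS, 25 for the CD-RISC). Pairing the factor with these item counts is an assumption made for illustration only, not a reproduction of the EduG computation.

\[\frac{N(f)-1}{N(f)}:\qquad N(f)=6\Rightarrow \frac{5}{6}\approx 0.83,\qquad N(f)=25\Rightarrow \frac{24}{25}=0.96,\qquad N(f)\to \infty \Rightarrow 1\]

The factor approaches 1 as the facet grows, which is consistent with the statement that the correction does not affect facets sampled from an infinite universe.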

G-study estimates provided variance components for individual facets, which were used to compute relative (Gr) and absolute (Ga) generalizability coefficients, that accounted for true variance associated with the object of measurement (persons). The Ga coefficient involves using Whimbey’s correction and controls for all sources of error variance, both direct and indirect (e.g., item; Cardinet et al., 2009), potentially impacting the reliability of measurement. On the other hand, the Gr coefficient, while also employing Whimbey’s correction, only considers error variances directly related to the object of measurement (e.g., person-item interaction).
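The relationship between the variance components and the two coefficients can be sketched in Python as follows. This is a minimal sketch, not the EduG implementation: the function name, dictionary keys, and example values are hypothetical, and the components are assumed to have been adjusted beforehand for facet sizes and Whimbey’s correction.

```python
def generalizability_coefficients(vc):
    """Return (Gr, Ga) from a dict of variance components.

    Expected keys (hypothetical naming): 'p', 'i', 'o', 'pi', 'po', 'io', 'pio'.
    Assumption: components already carry any facet-size or Whimbey adjustment.
    """
    # Relative error: only interactions involving the object of measurement.
    rel_error = vc["po"] + vc["pi"] + vc["pio"]
    # Absolute error: relative error plus occasion, item, and item x occasion.
    abs_error = rel_error + vc["o"] + vc["i"] + vc["io"]
    g_rel = vc["p"] / (vc["p"] + rel_error)
    g_abs = vc["p"] / (vc["p"] + abs_error)
    return g_rel, g_abs


# Hypothetical components, not values from the study.
example = {"p": 8.0, "i": 0.5, "o": 0.25, "pi": 0.5,
           "po": 0.25, "io": 0.25, "pio": 0.25}
g_rel, g_abs = generalizability_coefficients(example)
print(round(g_rel, 3), round(g_abs, 3))  # 0.889 0.8
```

Because the absolute error variance contains every term of the relative error variance plus three more, Ga can never exceed Gr when all components are non-negative.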

TCI and SCI were used to quantify the proportion of variance in our measurements that can be attributed to the stable (trait-like) and dynamic (state-like) aspects of resilience, respectively. These indices were computed using variance components for the person and person-occasion interaction, as per the formulas developed by Medvedev et al. (2017). In our D study, we conducted an initial analysis of individual items in our resilience measures with the intention of distinguishing between ‘state’ and ‘trait’ resilience. We identified certain items that seemed to reflect a higher degree of state resilience based on their content. Subsequently, we experimented with different measurement designs by selectively combining these ‘state-like’ items to create a modified resilience scale that aimed to capture state resilience more accurately. It’s worth noting that this process was iterative and exploratory, based on our interpretation of the item content and the data patterns observed in our sample. This approach allowed us to explore the potential of creating a more nuanced measure of resilience that could differentiate between the state and trait aspects of this complex construct.
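For a single item, the design reduces to persons crossed with occasions, and the person and person x occasion variance components can be estimated from a two-way ANOVA without replication. The sketch below shows the standard expected-mean-squares estimators under a random-effects model. It is an illustrative assumption about how such components may be obtained, not the EduG procedure used in this study, and the function name and data are hypothetical. With one score per person and occasion, the person x occasion term is confounded with residual error.

```python
def po_variance_components(scores):
    """Estimate variance components for a persons x occasions design.

    scores: list of rows, one per person, each holding one score per occasion.
    Returns a dict with keys 'p', 'o', and 'po' (interaction plus residual).
    """
    n_p, n_o = len(scores), len(scores[0])
    grand = sum(sum(row) for row in scores) / (n_p * n_o)
    p_means = [sum(row) / n_o for row in scores]
    o_means = [sum(row[j] for row in scores) / n_p for j in range(n_o)]

    # Sums of squares for the two main effects and the remainder.
    ss_p = n_o * sum((m - grand) ** 2 for m in p_means)
    ss_o = n_p * sum((m - grand) ** 2 for m in o_means)
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_po = ss_total - ss_p - ss_o

    ms_p = ss_p / (n_p - 1)
    ms_o = ss_o / (n_o - 1)
    ms_po = ss_po / ((n_p - 1) * (n_o - 1))

    # Expected-mean-squares solutions; negative estimates are set to zero.
    return {
        "p": max((ms_p - ms_po) / n_o, 0.0),
        "o": max((ms_o - ms_po) / n_p, 0.0),
        "po": max(ms_po, 0.0),
    }


# Hypothetical item scores: 3 persons x 3 occasions, purely additive pattern.
print(po_variance_components([[1, 2, 3], [2, 3, 4], [3, 4, 5]]))
```

In the additive example every person shifts by the same amount across occasions, so the interaction component is zero and the item would behave as a pure trait indicator. Scores that cross over between persons, such as [[1, 3], [3, 1]], place all of the variance in the person x occasion term.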

Results

Table 2 shows temporal reliability estimates computed using CTT methodology. All scales showed strong temporal reliability, with test-retest correlations ranging from 0.82 to 0.92 across measures. The intraclass correlation coefficient (ICC), a more robust CTT method of reliability estimation, was also used. An ICC value of 0.80 and above indicates near-perfect reliability of a valid trait measure (Landis & Koch, 1977); scale values ranged from 0.81 (SPF) to 0.92 (CD-RISC).

G-Study

Table 3 shows G-study results for person (P), item (I), occasion (O) and interaction effects for the resilience measures. Relative and absolute generalizability coefficient values ranged from 0.88 to 0.97 and indicated that all five scales are reliable measures of trait resilience with scores generalizable across occasions and the sample population. Consistent with high G-coefficient, negligible SCI values ranging between 0.00 and 0.01 for all scales showed lack of sensitivity to dynamic aspects of resilience, and TCI values of 0.99–1.00 indicate ideal characteristics of a trait resilience measure.

Overall, across all resilience measures, standard error (SE) values were relatively small compared to the values of person estimates, which reflect trait resilience. However, the SEs estimated for person x occasion interaction variance components, while negligible when compared to the SE estimated for person variance, are relatively larger when compared to the person-occasion variance estimates. This suggests that trait estimates, such as G coefficients and TCI, may be more accurate compared to state estimates (e.g., SCI).

D study

Analysis of facets was conducted for individual items of each scale by excluding all other scale items. Overall, eleven items with SCI values (> 0.60) indicating sensitivity to state resilience were identified within the scales studied. Five items were identified within the CD-RISC with sensitivity to dynamic resilience (SCI 0.63–0.94). Item 13 with the highest SCI value in this scale relates to confidence of resources in times of trouble. The most trait-sensitive CD-RISC item related to enjoyment of challenges (TCI = 0.77). These results are included for each individual item in the Supplementary Table S1.

Three TRS items also reflected state resilience (SCI 0.61–0.74). The highest SCI was obtained for item 7, “I usually take things in stride”, suggesting that this item reflects a very dynamic aspect of resilience. In contrast, item 2, “I usually manage one way or another”, represents a very stable aspect of resilience (TCI = 0.79). The results for each TRS item are presented in Supplementary Table S2. Within the SPF, item 18, “when working on something I plan things out” (SCI = 0.63), was also sensitive to dynamic aspects of resilience. SPF item 15, “when working on something I organize my time well”, had the greatest trait sensitivity (TCI = 0.84). The results showing the sensitivity of each individual SPF item to changes in state resilience are included in Supplementary Table S3.

Supplementary Table S4 shows the results for all ER89 items; only item 14, “I get over my anger at someone reasonably quickly” (SCI = 0.82), reflects state resilience to a high degree. On the other hand, item 9, “most of the people I meet are likeable”, is the best representation of trait resilience in this scale (TCI = 0.83). No state-sensitive items were found in the BRS. All items of this scale were clearly measuring trait resilience (TCI 0.60–0.71), with the highest value attributed to item 5, “I usually come through difficult times with little trouble”.

A D-study was also conducted on combinations of 7 state items with SCIs over 0.60, identified in the five scales, in an attempt to develop a state-resilience scale. However, combining these items did not yield a valid state measure, as reflected by the obtained SCI values ranging from 0.00 to 0.10. This is likely because state variances tended to cancel each other out when items were combined (refer to Supplementary Table S4). For instance, one state item measures the ability to adapt to changes, while another measures the tendency to make plans and stick to them. These traits may be contradictory and unlikely to co-occur at the same point in time.

Discussion

Resilience can act as a protective factor against stress, anxiety, and depression and has frequently been the focus of studies relating to these disorders and the challenges of modern living. Issues such as workplace stress, burden of disease, and the global COVID-19 pandemic highlight the importance of psychological resilience. Evaluating the efficacy of interventions to develop resilience requires knowledge of stable and dynamic aspects of resilience as well as sources of error in its measurement. The aim of this study was to apply G-theory to evaluate five major resilience measures and to elucidate differences in state and trait resilience, sources of error, and generalizability of assessment scores. Eight dynamic aspects of resilience were identified across the five resilience scales: adaptation to change (CD-RISC, item 1); perseverance (CD-RISC, item 10); self-confidence while facing adversity (CD-RISC, item 13; TRS, items 17 and 23); self-efficacy (CD-RISC, item 17); trust in instincts (CD-RISC, item 20); ability to follow plans (TRS, item 1); non-reactivity (TRS, item 7; ER89, item 14); and ability to plan (SPF, item 18).

Self-confidence is the aspect most represented across measures and has an inverse relationship to symptoms of anxiety and depression (Lun et al., 2018). Adaptation to change is also negatively associated with stress and depressive symptoms (Dyson & Renk, 2006). Self-efficacy has been shown to be a strong predictor of resilience (Martinez-Marti & Ruch, 2017). Therefore, targeted efforts to enhance this quality may be particularly effective in resilience building. Non-reactivity, represented in two of the five resilience measures, is also one of the five facets of mindfulness (Baer et al., 2006) and has consistently been found to be a protective factor against depression and stress (Medvedev et al., 2018a, b). Persistence has been implicated as a protective factor against depression, anxiety, and panic disorder (Zainal & Newman, 2019). Trust in instincts, or an intuitive decision-making style, has a strong positive correlation with global happiness and wellbeing (Stevenson & Hicks, 2016). Planning and following plans are key features of behavioral activation, an effective means of reducing depressive symptoms (Soucy et al., 2017). The dynamic aspects of resilience identified in our study could potentially be more amenable to change. However, it should be noted that these findings are based on our specific sample, and further research is needed to substantiate these results. Future interventions designed to increase resilience may consider these aspects, but this recommendation should be approached with caution until further evidence is gathered from diverse populations.

The variance in state aspects of resilience, as reflected by the identified state items, could be due to several reasons. These items may be more sensitive to situational or environmental influences, individual moods, or transient factors that might alter an individual’s response on different occasions. For instance, an individual’s “adaptation to change” could fluctuate based on recent experiences of significant life changes. “Perseverance” might vary based on current challenges or obstacles an individual is facing. “Self-confidence while facing adversity” might be influenced by the nature and intensity of recent adversities. In other words, these items might capture state-like aspects of resilience because they are prone to short-term variations influenced by specific occasions. On the contrary, trait-like aspects of resilience such as “trust in self,” “self-efficacy,” and “enjoying challenges” are likely more stable characteristics that do not vary significantly over short periods or across different situations. These aspects are more inherent and less influenced by external factors, and thus, they are more trait-like.

The distinction between these state and trait aspects of resilience aligns with theoretical understanding in psychology that traits are relatively enduring characteristics, while states are temporary and can change based on circumstances. This distinction is important for the design and implementation of interventions targeted at enhancing resilience. For instance, interventions aiming at state resilience might focus on providing individuals with tools to manage situational stressors or fluctuations in mood, while those targeting trait resilience might focus on fostering enduring characteristics like self-efficacy. However, this is a preliminary interpretation based on the current study, and further research is needed to fully understand why these particular items are more susceptible to person-occasion interaction.

All five resilience scales demonstrated high generalizability of scores across occasions and sample population (G > 0.90), as expected for a trait measure. Therefore, all five measures of resilience are useful to assess long-lasting changes but may lack sensitivity to detect temporal changes in resilience caused by person-environment interaction. Similar generalizability research has been conducted on other psychological scales. Truong et al. (2020) found that the Five Facet Mindfulness Questionnaire and its shortened 18-item version are both reliable measures of stable mindfulness, and at the item level similarly identified several items reflecting dynamic aspects of mindfulness. In our analyses, the effect of the person x occasion interaction at the scale level was negligible. This means that the dynamic aspects of resilience identified at the item level did not significantly affect the overall scale scores: although certain items appeared to capture state-like aspects of resilience, they did not significantly sway the resilience scores at the level of the entire scale.
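The link between a negligible person x occasion component and a high G coefficient can be illustrated with a simplified one-facet calculation. The sketch below is not the analysis reported in this study. It assumes a crossed persons x occasions design with one total score per cell, estimates variance components from ANOVA mean squares, and computes a relative G coefficient as the person variance divided by the person variance plus the interaction variance averaged over occasions. The function names and the simulated scores are hypothetical.

```python
import numpy as np


def variance_components_p_x_o(scores):
    """Estimate variance components for a crossed persons x occasions design.

    scores: 2-D array, rows = persons, columns = occasions, one score per cell.
    With one observation per cell the person x occasion interaction is
    confounded with residual error, so both are returned as one component.
    """
    scores = np.asarray(scores, dtype=float)
    n_p, n_o = scores.shape
    grand = scores.mean()

    # Sums of squares for persons, occasions, and the remainder.
    ss_p = n_o * np.sum((scores.mean(axis=1) - grand) ** 2)
    ss_o = n_p * np.sum((scores.mean(axis=0) - grand) ** 2)
    ss_po = np.sum((scores - grand) ** 2) - ss_p - ss_o

    ms_p = ss_p / (n_p - 1)
    ms_o = ss_o / (n_o - 1)
    ms_po = ss_po / ((n_p - 1) * (n_o - 1))

    # Solve the expected mean square equations; truncate negatives at zero.
    var_po = max(ms_po, 0.0)
    var_p = max((ms_p - ms_po) / n_o, 0.0)
    var_o = max((ms_o - ms_po) / n_p, 0.0)
    return {"p": var_p, "o": var_o, "po": var_po}


def relative_g(components, n_occasions):
    """Relative G coefficient for scores averaged over n_occasions."""
    error = components["po"] / n_occasions
    return components["p"] / (components["p"] + error)


# Simulated (hypothetical) data: a stable trait plus small occasion-specific noise.
rng = np.random.default_rng(1)
trait = rng.normal(0.0, 1.0, size=(94, 1))
noise = rng.normal(0.0, 0.3, size=(94, 3))
components = variance_components_p_x_o(trait + noise)
print(components)
print(relative_g(components, n_occasions=3))
```

When the interaction component is small relative to the person component, the coefficient approaches 1, which is the pattern expected of a trait measure.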

We recognize that the person x occasion interaction term in G-theory carries important implications for understanding the dynamic aspects of individual responses across different occasions. However, the G-theory framework assumes a stable “true” person estimate across occasions. In our study, we aimed to understand the stability of resilience measures in the absence of specific interventions or repeated-survey effects, making G-theory an appropriate and informative framework. We also acknowledge that the person x occasion interaction term captures variability due to fluctuations in individual responses across occasions. However, these fluctuations, if consistent, can be considered a part of the trait resilience we aim to measure. If such fluctuations are random and inconsistent, they contribute to the error variance, reducing the reliability of our measures. In the context of resilience, the person x occasion interaction refers to the possibility that a person’s resilience may manifest differently in different circumstances. While this interaction is considered a source of error variance in CTT, it potentially carries meaningful information about the state-like aspect of resilience, which is why we chose to analyse it in this study.

In this study, the person x item x occasion (P x I x O) interaction captures error variance associated with the unique combination of a specific person responding to a specific item on a specific occasion. This is typically treated as a source of measurement error in G-theory analyses, as it represents variability that is not systematically associated with persons, items, or occasions. It is also consistent with the literature that such an interaction could reflect changes in how an item is understood due to situational factors. The person x item interaction, on the other hand, represents error due to inconsistent understanding of items by individuals in the sample. This component was negligible in the current study, which supports the psychometric properties of the items used in these resilience measures.

A D-study was performed on the eleven identified state-items to attempt the creation of a state-resilience measurement scale (Supplementary Table S4). However, combining these items did not result in a valid state measure, as evidenced by the low SCI values ranging from 0.00 to 0.10. This outcome aligns with previous findings by Medvedev et al. (2018a, b) and Truong et al. (2020), suggesting that state variances may neutralize each other when items are combined. For instance, one state item evaluates an individual’s ability to adapt to changes, while another assesses their inclination to make and adhere to plans. Strictly following plans could negatively influence a person’s adaptability to change, thus diminishing the overall effect when these items are combined.
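The proposed cancellation of state variance can be illustrated with a deliberately extreme toy example. The sketch below assumes that an item-level SCI can be approximated as the person x occasion variance divided by the sum of the person and person x occasion variances in a persons x occasions design. That formula, the function name, and the simulated items are assumptions made for illustration, not the D-study reported here. Two hypothetical items share the same trait component but carry opposite state deviations, so each item has a non-zero SCI while their average has essentially none.

```python
import numpy as np


def state_component_index(scores):
    """Approximate SCI for a persons x occasions score matrix.

    Assumed formula: var(person x occasion) / (var(person) + var(person x occasion)),
    with the interaction confounded with residual error (one score per cell).
    """
    scores = np.asarray(scores, dtype=float)
    n_p, n_o = scores.shape
    grand = scores.mean()
    ss_p = n_o * np.sum((scores.mean(axis=1) - grand) ** 2)
    ss_o = n_p * np.sum((scores.mean(axis=0) - grand) ** 2)
    ss_po = np.sum((scores - grand) ** 2) - ss_p - ss_o
    ms_p = ss_p / (n_p - 1)
    ms_po = ss_po / ((n_p - 1) * (n_o - 1))
    var_po = max(ms_po, 0.0)
    var_p = max((ms_p - ms_po) / n_o, 0.0)
    return var_po / (var_p + var_po)


# Hypothetical data: five persons, three occasions.
rng = np.random.default_rng(7)
trait = np.array([1.0, 2.0, 3.0, 4.0, 5.0]).reshape(-1, 1)
state = rng.normal(0.0, 1.0, size=(5, 3))

item_a = trait + state   # state deviation raises this item
item_b = trait - state   # the same deviation lowers this item
composite = (item_a + item_b) / 2.0

print(state_component_index(item_a))
print(state_component_index(item_b))
print(state_component_index(composite))
```

Real items are unlikely to cancel this completely, but any negative association between their occasion-specific deviations would reduce the state variance of a combined score in the same direction.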

Limitations and directions for future research

This study provides the first evidence that the evaluated scales measure trait resilience and have low sensitivity to state resilience, a finding that needs to be replicated in different samples to establish its robustness. This lack of state sensitivity may explain some inconsistencies found in resilience research. For example, successful resilience training is likely to yield dynamic changes proximal to the intervention and more stable changes over time. Therefore, the effects of resilience training may be measured more effectively by assessing changes in both stable and dynamic features of resilience.

State aspects of resilience appear to show more variability and, pending further research, could potentially be a target for resilience-building interventions. A need for a reliable measure of state-resilience has been identified by this study. Our findings may be useful to inform research to create such a scale. The similarity of the SPF item with the highest SCI value ‘when working on something I plan things out’ (SCI = 0.63) and SPF item with the highest TCI value ‘when working on something I organize my time well’ (TCI = 0.84) bears further investigation to ascertain whether the distinction between planning and time organization is critical in this context, or if other factors such as question wording have shaped this result.

The primary limitation of this study is its relatively small sample of 94 participants, drawn from a predominantly European and female university class population, which may limit the generalizability of our findings. We therefore present our study as a preliminary investigation. Future research should validate these findings and test the measurement invariance of dynamic and stable aspects of resilience in larger, more diverse samples to improve generalizability to a wider population.

In this study we adapted two scales (SPF, TRS) to a 5-point Likert format to ensure the same number of response options across all scales, which was necessary for a coherent and reliable analysis. These adaptations were limited to the number of response options and did not alter the content of the items, so we believe they are unlikely to significantly distort the constructs being measured. While we acknowledge this as a limitation of our study, we also believe that our findings provide valuable initial insights into the state and trait aspects of resilience.

We would like to emphasize the importance of interpreting the SCI and TCI estimates with caution, as these indices are derived from variance components that are themselves estimates subject to sampling error. The precision of the SCI and TCI therefore depends on the precision of the underlying variance component estimates. Unfortunately, there is currently no established procedure for computing confidence intervals for the SCI and TCI, which would provide valuable information on the reliability of these indices. Future research would benefit from investigating methods to compute confidence intervals and to assess the standard error and sampling distribution of the SCI and TCI. In light of these limitations, we encourage readers to consider the potential uncertainties and biases in our results when interpreting the SCI and TCI estimates. Recognizing these constraints is important for understanding the degree to which items measure state or trait resilience in our study.
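One avenue that future work might explore is a percentile bootstrap over persons. The sketch below is purely illustrative and is not an established or validated procedure for these indices. It assumes that SCI is the person x occasion variance divided by the sum of the person and person x occasion variances, that TCI is its complement, and that persons can be resampled with replacement as independent units. All function names and the simulated item scores are hypothetical.

```python
import numpy as np


def sci_tci(scores):
    """Return (SCI, TCI) for a persons x occasions matrix of item scores.

    Assumed definitions: SCI = var_po / (var_p + var_po), TCI = 1 - SCI.
    The interaction is confounded with residual error (one score per cell).
    """
    scores = np.asarray(scores, dtype=float)
    n_p, n_o = scores.shape
    grand = scores.mean()
    ss_p = n_o * np.sum((scores.mean(axis=1) - grand) ** 2)
    ss_o = n_p * np.sum((scores.mean(axis=0) - grand) ** 2)
    ss_po = np.sum((scores - grand) ** 2) - ss_p - ss_o
    ms_p = ss_p / (n_p - 1)
    ms_po = ss_po / ((n_p - 1) * (n_o - 1))
    var_po = max(ms_po, 0.0)
    var_p = max((ms_p - ms_po) / n_o, 0.0)
    total = var_p + var_po
    if total == 0.0:
        return float("nan"), float("nan")  # no variance to partition
    sci = var_po / total
    return sci, 1.0 - sci


def bootstrap_sci_interval(scores, n_boot=2000, level=0.95, seed=0):
    """Percentile bootstrap interval for SCI, resampling persons (rows)."""
    scores = np.asarray(scores, dtype=float)
    rng = np.random.default_rng(seed)
    n_p = scores.shape[0]
    draws = np.empty(n_boot)
    for b in range(n_boot):
        rows = rng.integers(0, n_p, size=n_p)  # sample persons with replacement
        draws[b] = sci_tci(scores[rows])[0]
    tail = (1.0 - level) / 2.0
    lower, upper = np.nanquantile(draws, [tail, 1.0 - tail])
    return float(lower), float(upper)


# Simulated (hypothetical) item: equal trait and state variance.
rng = np.random.default_rng(3)
item = rng.normal(0.0, 1.0, size=(94, 1)) + rng.normal(0.0, 1.0, size=(94, 3))
print(sci_tci(item))
print(bootstrap_sci_interval(item))
```

Whether intervals of this kind have acceptable coverage for ratios of variance components with only a few occasions is an open question that would need to be checked by simulation before any applied use.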

In our study, we reported Cronbach’s alpha coefficients for the resilience measures as a routine requirement and for comparison with their respective validation studies. We recognize that Cronbach’s alpha is based on certain assumptions, such as unidimensionality, tau-equivalence, and uncorrelated errors among items. Some of the resilience measures used in this study are designed to assess multiple factors, which may violate the assumption of unidimensionality (Vispoel et al., 2018). Additionally, we did not test for tau-equivalence in our analysis. We would like to emphasize that the main focus of our study was not to examine the internal consistency of these measures, but rather to explore the overarching construct of resilience that they assess. In our analyses, we operated under the assumption that all scales measure the same construct, namely resilience, and we acknowledge that this assumption could be perceived as a limitation. We also acknowledge the potential limitations of using Cronbach’s alpha in this context and recommend interpreting these coefficients with caution. Further research may benefit from employing alternative reliability metrics that are more appropriate for multidimensional measures.
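For reference, the standard formula for Cronbach’s alpha can be written in a few lines. The sketch below is a generic illustration, not the software used in this study, and the function name and example responses are hypothetical. It computes alpha from a persons x items matrix as k / (k - 1) multiplied by one minus the ratio of summed item variances to the variance of the total score.

```python
import numpy as np


def cronbach_alpha(items):
    """Cronbach's alpha for a persons x items matrix of responses."""
    items = np.asarray(items, dtype=float)
    n_items = items.shape[1]
    item_variances = items.var(axis=0, ddof=1)      # sample variance of each item
    total_variance = items.sum(axis=1).var(ddof=1)  # sample variance of total scores
    return (n_items / (n_items - 1)) * (1.0 - item_variances.sum() / total_variance)


# Hypothetical responses of six persons to four 5-point items.
responses = [
    [4, 5, 4, 4],
    [2, 2, 3, 2],
    [5, 5, 5, 4],
    [3, 3, 2, 3],
    [1, 2, 1, 2],
    [4, 4, 5, 5],
]
print(cronbach_alpha(responses))
```

Because the formula uses only item and total-score variances, it cannot reveal whether the items form one dimension or several, which is why the coefficient should be read cautiously for multi-factor scales.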

Data availability

The study is preregistered at the Open Science Framework (OSF), and the data are available from the OSF repository at the following link: https://osf.io/6a9ws/?view_only=5c5fd3ef9ab946139bdb3feac16d79b2.

References

Akeman, E., Kirlic, N., Clausen, A. N., Cosgrove, K. T., McDermott, T. J., Cromer, L. D., & Aupperle, R. L. (2019). A pragmatic clinical trial examining the impact of a resilience program on college student mental health. https://doi.org/10.1002/da.22969

Arterberry, B. J., Martens, M. P., Cadigan, J. M., & Rohrer, D. (2014). Application of generalizability theory to the Big Five Inventory. Personality and Individual Differences, 69, 98–103.

Baer, R. A., Smith, G. T., Hopkins, J., Krietemeyer, J., & Toney, L. (2006). Using self-report assessment methods to explore facets of mindfulness. Assessment, 13(1), 27–45.

Barnes, L. L. B., Harp, D., & Jung, W. S. (2002). Reliability generalization of scores on the Spielberger State-Trait Anxiety Inventory. Educational and Psychological Measurement, 62(4), 603–618. https://doi.org/10.1177/0013164402062004005

Bloch, R., & Norman, G. (2012). Generalizability theory for the perplexed: A practical introduction and guide: AMEE Guide No. 68. Medical Teacher, 34(11), 960–992.

Block, J., & Kremen, A. M. (1996). IQ and ego-resiliency: Conceptual and empirical connections and separateness. Journal of Personality and Social Psychology, 70(2), 349–361. https://doi.org/10.1037/0022-3514.70.2.349

Brennan, R. (1992). Generalizability theory. Educational Measurement: Issues & Practice, 11(4), 27–34.

Brennan, R. (2001). Generalizability theory. Springer.

Brennan, R. (2010). Generalizability theory and classical test theory. Applied Measurement in Education, 24(1), 1–21.

Cardinet, J., Johnson, S., & Pini, G. (2009). Applying generalizability theory using EduG. Routledge.

Connor, K. M., & Davidson, J. (2003). Development of a new resilience scale: The Connor-Davidson resilience scale (CD‐RISC). Depression and Anxiety, 18(2), 76–82.

Cronbach, L. J., Gleser, G. C., Nanda, H., & Rajaratnam, N. (1972). The dependability of behavioral measurements. Wiley.

Cronbach, L. J., Rajaratnam, N., & Gleser, G. C. (1963). Theory of generalizability: A liberalization of reliability theory. British Journal of Statistical Psychology, 16(2), 137–163.

Dantzer, R., Cohen, S., Russo, S. J., & Dinan, T. G. (2018). Resilience and immunity. Brain, Behavior, and Immunity, 74, 28–42. https://doi.org/10.1016/j.bbi.2018.08.010

Dyson, R., & Renk, K. (2006). Freshmen adaptation to university life: Depressive symptoms, stress, and coping. Journal of Clinical Psychology, 62(10), 1231–1244.

Farkas, D., & Orosz, G. (2015). Ego-resiliency reloaded: A three-component model of general resiliency. PLoS One, 10(3), e0120883. https://doi.org/10.1371/journal.pone.0120883

Fleeson, W., & Jayawickreme, E. (2015). Whole trait theory. Journal of Research in Personality, 56, 82–92.

Gaudry, E., Vagg, P., & Spielberger, C. D. (1975). Validation of the state-trait distinction in anxiety research. Multivariate Behavioral Research, 10(3), 331–341. https://doi.org/10.1207/s15327906mbr1003_6

Heinz, A. J., Meffert, B. N., Halvorson, M. A., Blonigen, D., Timko, C., & Cronkite, R. (2018). Employment characteristics, work environment, and the course of depression over 23 years: Does employment help foster resilience? Depression and Anxiety, 35(9), 861–867. https://doi.org/10.1002/da.22782

Joyce, S., Shand, F., Tighe, J., Laurent, S. J., Bryant, R. A., & Harvey, S. B. (2018). Road to resilience: A systematic review and meta-analysis of resilience training programmes and interventions. BMJ Open, 8(6), e017858. https://doi.org/10.1136/bmjopen-2017-017858

Kim, S. M., Kim, H. R., Min, K. J., Yoo, S. K., Shin, Y. C., Kim, E. J., & Jeon, S. W. (2020). Resilience as a protective factor for suicidal ideation among Korean workers. Psychiatry Investigation, 17(2), 147–156. https://doi.org/10.30773/pi.2019.0072

Kumar, S. S., Merkin, A. G., Numbers, K., Sachdev, P. S., Brodaty, H., Kochan, N. A., Trollor, J. N., Mahon, S., & Medvedev, O. (2022). A novel approach to investigate depression symptoms in the aging population using generalizability theory. Psychological Assessment. Advance online publication. https://doi.org/10.1037/pas0001129

Landis, J. R., & Koch, G. G. (1977). The measurement of observer agreement for categorical data. Biometrics, 33(1), 159–174. https://doi.org/10.2307/2529310

Lun, K. W., Chan, C. K., Ip, P. K., Ma, S. Y., Tsai, W. W., Wong, C. S., & Yan, D. (2018). Depression and anxiety among university students in Hong Kong. Hong Kong Medical Journal = Xianggang yi xue za zhi, 24(5), 466–472.

Lyndon, M. P., Medvedev, O. N., Chen, Y., & Henning, M. A. (2020). Investigating stable and dynamic aspects of student motivation using generalizability theory. Australian Journal of Psychology, 72(2), 199–210.

Martínez-Martí, M. L., & Ruch, W. (2017). Character strengths predict resilience over and above positive affect, self-efficacy, optimism, social support, self-esteem, and life satisfaction. The Journal of Positive Psychology, 12(2), 110–119.

McCraty, R., & Atkinson, M. (2012). Resilience training program reduces physiological and psychological stress in police officers. Global Advances in Health and Medicine, 1(5), 44–66. https://doi.org/10.7453/gahmj.2012.1.5.013

Medvedev, O. N., & Siegert, R. J. (2022). Generalizability theory. Handbook of Assessment in Mindfulness Research. Springer. https://doi.org/10.1007/978-3-030-77644-2_5-1

Medvedev, O. N., Berk, M., Dean, O. M., Brown, E., Sandham, M. H., Dipnall, J. F., Robert, K., McNamara, R. K., Sumich, A., Krägeloh, C. U., Narayanan, A., & Siegert, R. J. (2021). A novel way to quantify schizophrenia symptoms in clinical trials. European Journal of Clinical Investigation, 51(3), e13398.

Medvedev, O. N., Krägeloh, C. U., Narayanan, A., & Siegert, R. J. (2017). Measuring mindfulness: Applying generalizability theory to distinguish between state and trait. Mindfulness, 8(4), 1036–1046.

Medvedev, O. N., Norden, P. A., Krägeloh, C. U., & Siegert, R. J. (2018a). Investigating unique contributions of dispositional mindfulness facets to depression, anxiety, and stress in general and student populations. Mindfulness, 9(6), 1757–1767.

Medvedev, O. N., Merry, A. F., Skilton, C., Gargiulo, D. A., Mitchell, S. J., & Weller, J. M. (2018b). Examining reliability of WHOBARS: A tool to measure the quality of administration of WHO surgical safety checklist using generalizability theory with surgical teams from three New Zealand hospitals. BMJ Open, 9(1). https://doi.org/10.1136/bmjopen-2018-022625

Miller, Y. R., Medvedev, O. N., Hwang, Y. S., & Singh, N. N. (2021). Applying generalizability theory to the perceived stress scale to evaluate stable and dynamic aspects of educators’ stress. International Journal of Stress Management, 28(2), 147–153. https://doi.org/10.1037/str0000207

Paterson, J., Medvedev, O. N., Sumich, A., Tautolo, E., Krägeloh, C. U., Sisk, R., McNamara, R., Berk, M., Narayanan, A., & Siegert, R. J. (2017). Distinguishing transient versus stable aspects of depression in New Zealand Pacific Island children using generalizability theory. Journal of Affective Disorders, 227, 698–704.

Ponce-Garcia, E., Madewell, A. N., & Kennison, S. M. (2015). The development of the scale of protective factors: Resilience in a violent trauma sample. Violence and Victims, 30(5), 735–755.

Poole, J. C., Dobson, K. S., & Pusch, D. (2017a). Anxiety among adults with a history of childhood adversity: Psychological resilience moderates the indirect effect of emotion dysregulation. Journal of Affective Disorders, 217, 144–152. https://doi.org/10.1016/j.jad.2017.03.047

Poole, J. C., Dobson, K. S., & Pusch, D. (2017b). Childhood adversity and adult depression: The protective role of psychological resilience. Child Abuse & Neglect, 64, 89–100.

Salisu, I., & Hashim, N. (2017). A critical review of scales used in resilience research. IOSR Journal of Business and Management, 19(4), 23–33.

Sheerin, C. M., Lind, M. J., Brown, E. A., Gardner, C. O., Kendler, K. S., & Amstadter, A. B. (2018). The impact of resilience and subsequent stressful life events on MDD and GAD. Depression and Anxiety, 35(2), 140–147. https://doi.org/10.1002/da.22700

Shoukri, M. M., Asyali, M. H., & Donner, A. (2004). Sample size requirements for the design of reliability study: review and new results. Statistical Methods in Medical Research, 13(4), 251–271.

Smith, B. W., Dalen, J., Wiggins, K., Tooley, E., Christopher, P., & Bernard, J. (2008). The brief resilience scale: Assessing the ability to bounce back. International Journal of Behavioral Medicine, 15(3), 194–200.

Soucy, I., Provencher, M., Fortier, M., & McFadden, T. (2017). Efficacy of guided self-help behavioural activation and physical activity for depression: A randomized controlled trial. Cognitive Behaviour Therapy, 46(6), 493–506.

Spielberger, C. D. (1983). State-trait anxiety inventory for adults (STAI-AD) [Database record]. APA PsycTests. https://doi.org/10.1037/t06496-000

Stevenson, S. S., & Hicks, R. E. (2016). Trust your instincts: The relationship between intuitive decision making and happiness. European Scientific Journal, 12(11), 463.

Stewart, D. E., & Yuen, T. (2011). A systematic review of resilience in the physically ill. Psychosomatics, 52(3), 199–209. https://doi.org/10.1016/j.psym.2011.01.036

Steyer, R., Schmitt, M., & Eid, M. (1999). Latent state-trait theory and research in personality and individual differences. European Journal of Personality, 13(5), 389–408.

Swiss Society for Research in Education Working Group. (2006). EDUG user guide. IRDP.

Truong, Q. C., Krägeloh, C. U., Siegert, R. J., Landon, J., & Medvedev, O. N. (2020). Applying generalizability theory to differentiate between trait and state in the Five Facet Mindfulness Questionnaire (FFMQ). Mindfulness, 11, 953–963. https://doi.org/10.1007/s12671-020-01324-7

Vanhove, A. J., Herian, M. N., Perez, A. L. U., Harms, P. D., & Lester, P. B. (2016). Can resilience be developed at work? A meta-analytic review of resilience‐building programme effectiveness. Journal of Occupational and Organizational Psychology, 89(2), 278–307. https://doi.org/10.1111/joop.12123

Vispoel, W. P., Morris, C. A., & Kilinc, M. (2018). Applications of generalizability theory and their relations to classical test theory and structural equation modeling. Psychological Methods, 23(1), 1–26. https://doi.org/10.1037/met0000126

Vispoel, W. P., Xu, G., & Schneider, W. S. (2021). Interrelationships between latent state-trait theory and generalizability theory within a structural equation modeling framework. Psychological Methods, 27(5), 773–803. https://doi.org/10.1037/met0000290

Wagnild, G. M., & Young, H. (1993). Development and psychometric evaluation of the resilience scale. Journal of Nursing Measurement, 1(2), 165–178.

Wrzus, C., & Roberts, B. W. (2017). Processes of personality development in adulthood: The TESSERA framework. Personality and Social Psychology Review, 21(3), 253–277.

Zainal, N. H., & Newman, M. G. (2019). Relation between cognitive and behavioral strategies and future change in common mental health problems across 18 years. Journal of Abnormal Psychology, 128(4), 295–304.

Acknowledgements

The authors express their gratitude to the participants without whom this research would not have been possible.

Funding

Open Access funding enabled and organized by CAUL and its Member Institutions.

Contributions

LC contributed to study design, data collection, literature review, writing and revision of the report and creation of figures. OM contributed to study design, data collection, writing and revision of the report and creation of figures.

Ethics declarations

Ethics approval

This study has received ethics approval from the ALPSS Human Research Ethics Committee of the University of Waikato based on internationally accepted ethical standards.

Informed consent

All participants provided informed consent to participate in this study, including consent for publication of the results. Providing consent was a requirement for completing the scales.

Competing interests

There are no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Child, L.E., Medvedev, O.N. Investigating state and trait aspects of resilience using Generalizability theory. Curr Psychol 43, 9469–9479 (2024). https://doi.org/10.1007/s12144-023-05072-4

DOI: https://doi.org/10.1007/s12144-023-05072-4