Abstract

The degree to which people take advice, and the factors that influence advice-taking, are of broad interest to laypersons, professionals, and policy-makers. This meta-analysis of 346 effect sizes from 129 independent datasets (N = 17,296) assessed the weight of advice in the judge-advisor system paradigm, as well as the influence of sample and task characteristics. Information about the advisor(s) that is suggestive of advice quality was the only unique predictor of the overall pooled weight of advice. Individuals adjusted estimates by 32%, 37%, and 48% in response to advisors described in ways that suggest low, neutral, or high quality advice, respectively. This indicates that the benefits of compromise and averaging may be lost if accurate advice is perceived to be low quality, or too much weight is given to inaccurate advice that is perceived to be high quality. When examining the three levels of perceived quality separately, advice-taking was greater for subjective and uncertain estimates, relative to objective estimates, when information about the advisor was neutral in terms of advice quality. Sample characteristics had no effect on advice-taking, thus providing no evidence that age, gender, or individualism influence the weight of advice. The findings contribute to current theoretical debates and provide direction for future research.

The definition and measurement of advice vary across disciplines and research paradigms (MacGeorge & Van Swol, 2018a). It has been suggested that advice may be a higher-order factor subsuming lower-order factors, including, but not limited to, provision for or against a specific recommendation, and provision of guidance on how to make a decision (Bonaccio & Dalal, 2006). The current meta-analysis synthesises studies using the judge–advisor system (JAS) paradigm (Sniezek & Buckley, 1995), which is the most commonly applied measure of advice-taking. In this paradigm, the judge is asked to provide a numerical estimate (e.g., distance between two cities) before receiving an advisor’s (or advisors’) estimate(s). Then the judge is invited to revise their estimate, and sometimes an incentive is provided for accuracy. This allows for the calculation of the weight of advice using the formula: [(final estimate - initial estimate) / (advice - initial estimate)], which provides a continuous outcome on a scale from 0 (completely ignoring advice) to 1 (completely relying on advice) (Harvey & Fischer, 1997; Yaniv, 2004a). In the JAS, advice is broadly defined as information from another person, or people, or even an algorithm, that does not require advocacy by the advice-giver (Rader et al., 2017).
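As an illustration of the weight of advice formula, the following minimal Python sketch computes it for a single trial. The function name and the example values (distances in kilometres) are hypothetical and are not taken from any included study.

```python
def weight_of_advice(initial, advice, final):
    """Return (final - initial) / (advice - initial) for one trial."""
    if advice == initial:
        # The ratio is undefined when the advice matches the initial estimate.
        raise ValueError("advice equals the initial estimate; weight of advice is undefined")
    return (final - initial) / (advice - initial)


# Hypothetical trial: initial estimate 400 km, advice 500 km, final estimate 430 km.
woa = weight_of_advice(initial=400, advice=500, final=430)
print(round(woa, 2))  # 0.3, i.e., the judge moved 30% of the way towards the advice
```

A value of 0 corresponds to keeping the initial estimate and a value of 1 to adopting the advice outright. Arithmetically, the ratio falls outside this range when a judge moves away from the advice or past it.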

Receiving advice in the form of a numerical estimate that can be used to update an independent estimate represents one of the simplest forms of advice-taking and is ubiquitous in diverse real-world contexts. Professionals, such as physicians, weather forecasters, and financial advisors, as well as non-professional friends, family, and strangers, regularly provide advice in the form of quantitative estimates (e.g., number of calories in a meal, the chance of rain, cost of an investment or holiday, or online reputation ratings). The degree to which people incorporate advice into their decision-making has critical implications for public policy, including via vaccine refusal and climate change scepticism.

The average of more than one quantitative estimate usually results in a more accurate estimate if the advice is well-intentioned, and each estimate is independent of the other(s). Incorporating 50% of an advised estimate into an independent estimate would represent a rational use of advice (Larrick & Soll, 2006). However, the mean level of adjustment towards advice is typically around 30% (Soll & Larrick, 2009). This tendency can be explained by the theoretical construct of egocentric discounting whereby individuals weigh their own estimation more strongly than the estimations of others (Yaniv & Kleinberger, 2000). Previous narrative reviews that have focused on advice-taking (MacGeorge & Van Swol, 2018a), or social information use across five different tasks (Morin et al., 2021), or the JAS task specifically (Bonaccio & Dalal, 2006; Rader et al., 2017; Van Swol et al., 2018), concur that egocentric discounting is generally suboptimal yet poorly understood. Each of the three reviews that focused on the JAS task described the influence of multiple variables on egocentric discounting, while Bonaccio and Dalal (2006) stated that “once a critical mass of studies pertaining to each of these variables comes into existence, meta-analytic investigations will be in order.” (p. 139) A quantitative meta-analysis is useful for determining which variables may best explain egocentric discounting.
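The case for weighting advice at 50% can be illustrated with a short calculation. The sketch below assumes, purely for illustration, that the judge and the advisor are equally accurate, unbiased, and make independent errors. Under those assumptions the error variance of a combined estimate w × advice + (1 - w) × own estimate is (1 - w)² × own variance + w² × advice variance. The function name and the unit variances are hypothetical.

```python
def combined_error_variance(weight_on_advice, own_var=1.0, advice_var=1.0):
    """Error variance of w*advice + (1 - w)*own, assuming independent, unbiased errors."""
    w = weight_on_advice
    return (1 - w) ** 2 * own_var + w ** 2 * advice_var


# Compare ignoring advice, typical discounting (about 30%), and equal weighting.
for w in (0.0, 0.3, 0.5):
    print(w, round(combined_error_variance(w), 2))
# 0.0 -> 1.0, 0.3 -> 0.58, 0.5 -> 0.5
```

With equally accurate sources, equal weighting halves the error variance, whereas a 30% weight removes only 42% of it. If the advisor were more or less accurate than the judge, the variance-minimising weight would shift accordingly.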

Input-process-output model

The conceptual framework for the current meta-analysis is based on the Bonaccio and Dalal (2006) input-process-output (IPO) model for explaining weight of advice in the JAS (see Fig. 1). The “input” category in the IPO model comprises individual-level, JAS-level, and environment-level factors. Individual-level factors include the judge’s pre-advice opinion and confidence, as well as information about the advisor. JAS-level factors include whether advice is optional or imposed, and the number of advisors. Environment-level factors include the type of decision task and reward structure (e.g., whether financial incentives are available and whether they are tied to decision accuracy). The “process” category accounts for intra-JAS interaction between the judge and advisor(s) on a continuum from in-person to partially concealed to completely anonymous. The “process” category and the “input” factors both predict the “output” which includes the weight of advice (advice use or discounting), as well as the judge’s post-advice accuracy and confidence. The present meta-analysis used the JAS IPO model (Bonaccio & Dalal, 2006) as a framework to examine the influence of “input” factors on weight of advice, and in particular the influence on advice discounting. The “process” category was not assessed because most studies involved anonymous interactions.

Input factors

Following data extraction, the most commonly measured variables included the judge’s age, gender, and culture (i.e., degree of individualism), as well as the perceived advice quality based on information about the advisor, and the type of decision task (i.e., objective and certain versus subjective and uncertain).

Judge age

The weight given to advice may decrease from early childhood through adolescence (Molleman et al., 2021; Rakoczy et al., 2015), and then increase again after the age of 65 years (Bailey et al., 2021a). Dual process models of ageing and decision-making suggest reduced deliberation with age and therefore a motivational shift away from autonomous decision-making in young adulthood and towards increased joint decision-making and reliance on others (Peters et al., 2007). There is also evidence that advice-taking is positively associated with trust (Bonaccio & Dalal, 2006), and trust increases with age (Bailey et al., 2021b), further suggesting greater weight of advice as age increases. An analysis of the influence of age between the ages of 18 and 65 years would provide a broader view of the trajectory of advice-taking across the adult lifespan.

Judge gender

Trust also differs as a function of gender. One study showed that men are more trusting than women in the economic trust game and suggested that men viewed the interaction more strategically than women (Buchan et al., 2008). However, a meta-analysis provided evidence that women are more trusting than men (Feingold, 1994), and co-workers have been shown to report that women take more advice than men (See et al., 2011). In contrast, MacGeorge et al. (2016) found that gender has only limited influence on advice evaluation, albeit in the context of discussing a problem with a friend. Nevertheless, the confidence literature further suggests that there may be increased advice-taking among women relative to men. Confidence is negatively associated with advice-taking (Rader et al., 2017), and women are less confident than men when making judgments (See et al., 2011). The current meta-analysis presents an opportunity to establish a clearer picture of the potential effect of gender on advice-taking in the judge-advisor task. This can be achieved by assessing the influence of the proportion of female judges in each sample on the weight of advice.

Judge culture

Individualist versus collectivist cultures may differ in the degree to which they integrate advice from others into judgment and decision-making. Members of collectivist cultures desire relational harmony, obey authority, and are more likely to perceive and understand advice from others (Tinghu et al., 2018). This suggests increased advice-taking among cultures that are more collectivistic. In contrast, Gheorghiu et al. (2009) found that individualism was more likely to foster trust among people than collectivism, and as previously discussed, trust may increase advice-taking. They explained this finding in light of Yamagishi and Yamagishi’s theory that norms emphasising independence, autonomy, and distinctiveness, which are characteristic of individualistic cultures, are more likely to foster trust among people (Yamagishi et al., 1998). Alternatively, individualists may discount advice because it undermines their desire for autonomy and motivation to maintain a favourable self-concept (Rader et al., 2017). The Morin et al. (2021) narrative review concurs that there is mixed empirical evidence for the effect of culture on advice-taking. A quantitative synthesis of the existing data will help to disentangle this evidence to determine whether there is a systematic effect of individualism on advice-taking.

Perceived advice quality

JAS studies to date have manipulated participants’ perceptions of advisors, and therefore perceptions of advice quality, in a number of ways. They have explicitly referred to advisors as novices or experts with low versus high expertise, respectively (e.g., Bailey et al., 2021a; Meshi et al., 2012). Alternatively, advisors have been described in neutral terms, as other participants, supposedly similar to the current participant (e.g., Gino, 2008; See et al., 2011). Advice has also been described as the average of estimates provided by a number of previous participants, suggesting high quality (i.e., Carbonell et al., 2019; Logg et al., 2019). Greater activity in the ventral striatum, a brain region associated with the anticipation of reward (Knutson et al., 2001), was identified when participants were told to expect expert rather than novice advice (Meshi et al., 2012). This suggests that expert advice is valued more highly than novice advice, perhaps due to an expectation of it being high quality and leading to an improvement in performance. We included descriptions of the advisor that suggest advice quality as a task characteristic that was not previously specified as an input factor in the JAS IPO model.

The informational asymmetry account suggests that egocentric discounting occurs because people have greater access to their own reasons for a judgment relative to the reasoning behind another person’s judgment (Yaniv, 2004b; although see Trouche et al., 2018). This assumption is supported by evidence for increased advice-taking when self-reported knowledge is low (Duan et al., 2021; Yaniv & Choshen-Hillel, 2012) or the decision is difficult (Gino & Moore, 2007). Having more information about the advisor, such as about their level of expertise, is likely to reduce informational asymmetry. Indeed, Yaniv and Kleinberger (2000) suggest that a judge will form a view of the advisor following repeated interactions, and that this reputation formation will influence the weight of advice. They further argue that risk aversion contributes to asymmetry of reputation formation because the risk of an average advisor giving bad advice looms larger than the benefit of receiving good advice. This means that average and bad advisors would be considered similarly, despite average advisors sometimes giving good advice. Monitoring of behaviour across repeated interactions is not always possible and consequently, reputation is often derived from available information in one-off interactions or via second-hand information. The latter is the most common method for conveying the quality of the advisor in the JAS paradigm, and the degree to which reputation asymmetry influences advice-taking from advisors likely to provide low, average, or high quality advice remains to be established.

Uncertainty of estimate

The uncertainty of the estimate may influence advice-taking but has received little attention in the literature to date. Objective values have one correct answer (e.g., the number of coins in a jar or distance between two cities). However, for more subjective or uncertain judgments (e.g., stock forecasting or service-provider ratings), there is not necessarily a single correct answer. Subjective or uncertain judgments, which are based on opinion, may result in greater advice-taking than objective judgments because ultimately their correctness is determined by a consensus among individuals (Laughlin & Ellis, 1986). Indeed, uncertainty about an initial estimate is a good predictor of advice-taking (Gino, 2008). Uncertainty also implies low knowledge, which may increase the perception that the advisor is more knowledgeable than the decision-maker and therefore increase advice-taking (Gino & Moore, 2007; Yaniv & Kleinberger, 2000; Yaniv, 2004a, b). An alternative proposition is that advice will be given more weight when estimating objective relative to subjective values. A primary motivation for taking advice is to improve accuracy (Rader et al., 2017). This suggests that because subjective estimates do not have a right or wrong answer, advice offers less opportunity to improve accuracy relative to advice relating to an objective estimate (See et al., 2011). Indeed, Van Swol (2011) demonstrated that decision-makers take more advice when determining a cognitively challenging objective estimate relative to a subjective estimate based on personal taste. The current meta-analysis will test these competing theoretical arguments for disproportionate use of advice when estimating subjective and uncertain versus objective and certain values.

The current meta-analysis

We build upon existing narrative reviews (i.e., Bonaccio & Dalal, 2006; MacGeorge & Van Swol, 2018a; Morin et al., 2021; Rader et al., 2017; Van Swol et al., 2018) and the IPO conceptual framework to conduct a systematic review and meta-analysis of advice-taking in the JAS task. In their review, Bonaccio and Dalal (2006) pointed to the need for research to extend an analysis of situational variables that influence advice-taking, to also include individual difference variables. Thus, we sought to provide the first synthesis of data to examine whether advice-taking is influenced by age, gender, or cultural context. We also intended to contribute to competing theoretical arguments relating to the potential influences of gender, culture, and estimate subjectivity on weight of advice. Morin et al.’s (2021) review identified mixed evidence for the effect of culture in advice-taking. Further, culture operationalised as a degree of individualism has the potential to contribute to an understanding of the roles of self-concept and desire for autonomy, which Rader et al.’s (2017) narrative review highlighted as motives that may be particularly important in advice-taking. The previous reviews did not address estimate subjectivity in any depth and as such the current meta-analysis will provide an initial review of this potential influence on advice-taking.

A further aim was to establish the influence of perceptions of the advisor on advice-taking, and particularly whether decision-makers differentiate advisors perceived to provide low or neutral quality advice. Based on Yaniv and Kleinberger’s (2000) conceptual framework for understanding advice-taking, we expected that information about the advisor indicating advice quality would be the strongest influence on the overall mean weight of advice. We therefore planned further analyses to separately examine predictors of the weight of advice in response to advisors described as providing (1) high, (2) neutral, and (3) low quality advice. In addition to examining mean age, the percentage of females, culture, uncertainty of estimate, and perceived advice quality as predictors of advice-taking, we extracted further potential predictors if they were amenable to meta-analysis. These included the judge’s pre-advice confidence, type of sample (i.e., student vs non-student), actual advice accuracy, whether the judge was offered an accuracy incentive, type of incentive for participating in the study (cash versus course credit), whether advice was imposed versus optional, and number of advisors (single versus multiple).

Method

This study was conducted according to the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines (Moher et al., 2009). Anonymised data and code are accessible at the Open Science Framework (https://osf.io/atz6y/?view_only=a5e435f0b5de42a286736725a11bb58d).

Information sources and search

A computerised literature search using PsycINFO, PubMed, Web of Science, and Scopus was completed on 21 February 2020. The search did not apply any limitation on the year of publication. The title, keyword, and abstract search terms included: “use of advice” OR “advice use” OR “advice seeking” OR “advice taking” OR “weight of advice” OR “judge-advisor system” OR “judge-adviser system” OR “judge-advisor” OR “judge-adviser” OR “advice utilization” OR “advice utilisation”. In December 2020 we emailed the corresponding author from each identified paper that was published within the past 10 years to request unpublished data. At the same time, we posted a call for unpublished data on the Society for Judgment and Decision Making mailing list, and performed a search of preprints in OSF. Manual forward (review of articles that cited the final set of articles) and backward (review of articles that were cited in the final set of articles) citation searches were conducted in February 2021. A PRISMA flowchart outlines the process for selecting studies for inclusion in this meta-analysis (see Fig. 2).

Eligibility criteria

Studies were included if (1) the paper was written in English, (2) advice-taking was measured using the JAS paradigm, (3) weight of advice was calculated using the formula: [(final estimate - initial estimate) / (advice - initial estimate)] or a variant of this formula, such as [final estimate - initial estimate / advice - initial estimate], and (4) statistics for calculating effect size were available in the paper or supplied by the author.

Data extraction and study selection

Table 1 reports the sample and task characteristics for each independent data set. Authors PB and TL extracted all data. When data were not available in tables or text, but figures were available, we used WebPlotDigitizer software to extract the data from the figures. Estimating data in this way has been shown to involve a small margin of error but to be “satisfactory, accurate, and efficient” (Burda et al., 2017, p. 260). If no form of data was available in a paper, we contacted the corresponding and/or first author via email. Two attempts were made to contact authors and effects were excluded when we received no response or were informed that data were no longer available (i.e., Sciandra, 2019; Scopelliti et al., 2015; See et al., 2011, Study 4; Sniezek et al., 2004; Tzioti et al., 2014; Yaniv, 2004a; Yaniv & Kleinberger, 2000, Studies 2 to 4; Wanzel et al., 2017).

PB extracted data for each effect size (M, N, and SD or SE) a second time to ensure 100% reliability across the two independent data files. When only the total number of participants was available across multiple conditions, we assumed even numbers of participants in each condition. Data extracted using WebPlotDigitizer often differed by decimal places across the two extractions, and, in such cases, we used the average of the two extractions. PB extracted the predictor data from the included studies. TL checked the extracted predictor data for errors, and disagreements were resolved by discussion and consensus. An independent coder then extracted predictor data for 20% of the 129 studies (i.e., 26 studies). There was initially 96% agreement between the independent coder and the coding completed by the authors. The inspection of discrepancies revealed errors in 6.3% of the independent coder’s extractions. After removing these errors, there was 99% agreement between coders.

Twelve effects that reflected group rather than individual decision-making were excluded (Kim et al., 2020; Larson et al., 2020), as were eleven effects based on the decisions of dyads (Minson & Mueller, 2012; Schultze et al., 2019). Four effects were excluded because participants were presented with manipulated initial estimates at the same time that they were provided with advice (Trouche et al., 2018). A summary of the predictor names, definitions, operationalisations, and representative sources is provided in Table 2. In-depth explanations of predictor coding decisions are provided as Supplementary Information.

Meta-analytic approach

This meta-analysis of proportion data synthesises a one-dimensional binomial measure known as the (weighted or pooled) average proportion. This is the average of proportions within multiple studies weighted by the inverse of their sampling variances. Raw proportion of advice-taking was used as the effect size index because observed proportions were around 0.5 and the number of studies was sufficiently large (Barendregt et al., 2013), and also because a re-analysis of the data using a logit transformation did not change the significance of any finding. A larger proportion indicates a greater degree of adjustment of an estimate towards the estimate of an advisor or advisors.
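The inverse-variance weighting described above can be sketched as follows. This is a minimal single-level illustration, not the three-level model fitted in this meta-analysis, where the estimated variance components also enter the weights. The function name and the three example studies are hypothetical.

```python
def pooled_proportion(proportions, sampling_variances):
    """Inverse-variance weighted mean of study proportions and its variance."""
    weights = [1.0 / v for v in sampling_variances]
    total_weight = sum(weights)
    pooled = sum(w * p for w, p in zip(weights, proportions)) / total_weight
    pooled_variance = 1.0 / total_weight
    return pooled, pooled_variance


# Hypothetical studies: the middle study is twice as precise as the other two.
pooled, variance = pooled_proportion([0.30, 0.40, 0.50], [0.004, 0.002, 0.004])
print(round(pooled, 3), round(variance, 4))  # 0.4 0.001
```

More precise studies (smaller sampling variance) pull the pooled proportion towards their own value.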

Dependency refers to violation of the statistical assumption that effect sizes are independent. One type of dependency in meta-analysis arises from individual studies contributing multiple effect sizes. We dealt with dependency of effects within studies by following the steps described in Assink and Wibbelink (2016) for fitting a three-level meta-analytic model using the metafor package (Viechtbauer, 2010) in R (Version 4.1.2; R Core Team, 2021). Variance components are distributed over three levels of the model: individual-level sampling variance (level 1), variance between effect sizes within studies (level 2), and variance between studies (level 3), as described by Van den Noortgate et al. (2013). Parameters were estimated using the restricted maximum likelihood procedure. An ANOVA function tested the fit of a three-level model against the two-level models. Weight of advice is measured as a proportion (ranging from 0 to 1). To examine potential predictors of the overall effect in each three-level model, continuous variables were centered around the variable mean and were assessed using a three-level meta-regression model. Categorical predictors with k categories were converted to k-1 dummy variables through binary coding and were assessed using a three-level mixed-effects model. Testing multiple significant predictors in a single model after potential effects have been evaluated separately in univariate models is a reasonable strategy for dealing with potential multicollinearity (Hox, 2010). Variance inflation factors (VIFs) were also calculated to test for multicollinearity.
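The predictor coding described above (mean-centering continuous variables and converting a categorical predictor with k categories into k-1 dummy variables) can be sketched in a few lines. The function names and example values are hypothetical. The choice of "neutral" as the reference category mirrors how perceived advice quality is reported in the Results, but the code is an illustration rather than the analysis script.

```python
def center(values):
    """Subtract the variable mean from every value."""
    mean = sum(values) / len(values)
    return [v - mean for v in values]


def dummy_code(labels, reference):
    """Return k-1 binary indicator columns, omitting the reference category."""
    levels = sorted(set(labels) - {reference})
    return {level: [1 if lab == level else 0 for lab in labels] for level in levels}


# Hypothetical sample mean ages and perceived advice quality codes.
print(center([20, 25, 30]))  # [-5.0, 0.0, 5.0]
print(dummy_code(["neutral", "high", "low", "neutral"], reference="neutral"))
# {'high': [0, 1, 0, 0], 'low': [0, 0, 1, 0]}
```

With this coding, the model intercept is the expected weight of advice for the reference category at the mean of the centered predictors, and each dummy coefficient is a difference from that reference.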

It is not possible to test for publication bias using trim-and-fill or Egger’s test in a multilevel meta-analysis. We therefore tested for publication bias using one pooled estimate of the weight of advice for each study. When an individual study included two or more conditions (i.e., dependent outcomes), effect sizes for each outcome were pooled. We used the MAd package (Del Re & Hoyt, 2014) in R to create the composite estimate using recommended procedures as described in The Handbook of Research Synthesis and Meta-Analysis (Cooper et al., 2009). The composite was calculated accounting for a conservative correlation of 1.0 among within-study outcomes and implemented the Borenstein et al. (2009) procedures for aggregating dependent effect sizes.
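To show what a correlation of 1.0 among within-study outcomes implies, the sketch below applies the formula given by Borenstein et al. (2009) for the variance of the mean of m correlated outcomes: (1/m)² × [Σ V_i + Σ_{i≠j} r × √V_i × √V_j]. It is a hand-written illustration, not the MAd implementation, and the function name and example values are hypothetical.

```python
from math import sqrt


def composite_effect(effects, variances, r=1.0):
    """Mean of dependent effects and its variance for a common correlation r."""
    m = len(effects)
    mean_effect = sum(effects) / m
    total = sum(variances)
    for i in range(m):
        for j in range(m):
            if i != j:
                total += r * sqrt(variances[i]) * sqrt(variances[j])
    return mean_effect, total / m ** 2


# Two hypothetical conditions from one study with standard errors 0.02 and 0.04.
effect, variance = composite_effect([0.30, 0.50], [0.0004, 0.0016], r=1.0)
print(round(effect, 2), round(sqrt(variance), 3))  # 0.4 0.03
```

With r = 1.0 the composite standard error equals the mean of the individual standard errors (0.03 here), the largest value the formula can give. Assuming independence (r = 0) would give about 0.022, so the choice of 1.0 is conservative in the sense of not overstating precision.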

After imputing missing studies to form a symmetrical funnel plot, the trim-and-fill method provides an estimate of the true mean and variance (Duval & Tweedie, 2000). Egger’s test assesses the degree of asymmetry in the funnel plot as measured by the intercept from regression of standard normal deviates against precision (Egger et al., 1997).
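The regression underlying Egger’s test can be sketched with ordinary least squares: each study’s standard normal deviate (effect divided by its standard error) is regressed on its precision (1 divided by the standard error), and the intercept measures asymmetry. The code below only computes the intercept and slope. The significance test of the intercept is omitted, and the function name and data are hypothetical.

```python
def egger_regression(effects, standard_errors):
    """Return (intercept, slope) from regressing effect/SE on 1/SE."""
    x = [1.0 / se for se in standard_errors]                 # precision
    y = [e / se for e, se in zip(effects, standard_errors)]  # standard normal deviate
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


# Same effect at every level of precision: the intercept is essentially zero.
print(egger_regression([0.4, 0.4, 0.4], [0.02, 0.05, 0.10]))
# Less precise studies report larger effects: the intercept is positive.
print(egger_regression([0.35, 0.45, 0.60], [0.02, 0.05, 0.10]))
```

An intercept near zero corresponds to a symmetrical funnel plot, whereas an intercept far from zero indicates that effect size varies systematically with precision.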

Results

Study selection and characteristics

As summarised in Fig. 2, the initial literature search resulted in 355 articles in PsycINFO, 158 articles in PubMed, 453 articles in Web of Science, and 555 articles in Scopus (n = 1,521). After merging the four databases, 340 duplicates were removed. An additional 93 articles were identified using other methods described in Information Sources and Search. 944 records were excluded following the screening of the titles and abstracts, and a further 277 following screening of the full paper. The final data consisted of 53 articles comprising 129 independent data sets with a total of 17,296 participants. From these data sets we extracted 346 effect sizes.
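The record counts reported above can be checked with simple arithmetic. The sketch assumes that the 93 additional records were added before title and abstract screening. That ordering is an assumption about the flowchart, although the final count does not depend on it.

```python
database_hits = {"PsycINFO": 355, "PubMed": 158, "Web of Science": 453, "Scopus": 555}

identified = sum(database_hits.values())      # records from the four databases
after_duplicates = identified - 340           # duplicates removed after merging
screened = after_duplicates + 93              # records found through other methods
full_text_assessed = screened - 944           # excluded on title and abstract
included_articles = full_text_assessed - 277  # excluded on full text

print(identified, after_duplicates, screened, full_text_assessed, included_articles)
# 1521 1181 1274 330 53
```

The result matches the 53 articles reported as the final data set.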

Overall pooled effect

We conducted multi-level meta-analysis using the rma.mv function of the ‘metafor’ package (Viechtbauer, 2010). Our three-level meta-analytic model showed that the overall pooled weight of advice (k = 346) was 0.39, 95% CI [0.37, 0.42]. This overall effect was significant, t(345) = 31.57, p < 0.001, and indicates that individuals, on average, adjusted their estimates to be 39% closer to an advised estimate/s (see Fig. 3). A boxplot identified two outlier effect sizes (0.93 and 0.92). Exclusion of these two data points did not substantially change the overall effect, 0.39, 95% CI [0.37, 0.42], t(343) = 31.97, p < 0.001, and so they were retained in subsequent analyses.

Forest Plot of the Overall Weight of Advice. Note. The diamond represents the overall pooled weight of advice proportion. Each effect size and 95% confidence interval (error bar) represents an independent sample (s = 129). For articles with multiple independent samples, the effect size for each sample (S1, S2, etc.) is reported separately. Where a sample contributed more than one effect, the pooled effect, accounting for dependency between effects, is represented.

We compared the fit of the original three-level model with the fit of a two-level model in which within-study variance (level 2) was not modelled. We found that the fit of the original three-level model was statistically better than the fit of the two-level model (p < 0.0001), suggesting that there was significant heterogeneity between effect sizes within studies. Next, we compared the fit of the original three-level model to the fit of a model where only variance at level 2 was freely estimated and where the variance at level 3 (between-studies) was fixed at zero. We found that the fit of the original three-level model was statistically better than the fit of the two-level model (p < 0.0001), suggesting that there was significant heterogeneity between studies. The estimated variance components between effect sizes within- and between-studies were τ² (level 2) = 0.012 and τ² (level 3) = 0.015, respectively. Of the total variance, 0.16 percent was attributed to variance at level 1 (i.e., sampling variance); 57.08 percent was attributed to differences between effect sizes within samples at level 2 (i.e., within-study variance); and 42.76 percent was attributed to differences between studies at level 3. We therefore extended our model to examine the potential influence of additional variables.
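The distribution of variance over the three levels can be sketched with the commonly used approach in which a "typical" sampling variance is computed from the inverse-variance weights and then compared with the two estimated variance components. This is assumed here to correspond to the procedure in the tutorial the authors followed. The function name and all input values are hypothetical and are not the data of this meta-analysis.

```python
def variance_distribution(sampling_variances, tau2_level2, tau2_level3):
    """Percentage of total variance at levels 1, 2, and 3."""
    k = len(sampling_variances)
    weights = [1.0 / v for v in sampling_variances]
    sum_w = sum(weights)
    sum_w_squared = sum(w ** 2 for w in weights)
    # Typical sampling variance across the k effect sizes.
    typical_v = (k - 1) * sum_w / (sum_w ** 2 - sum_w_squared)
    total = typical_v + tau2_level2 + tau2_level3
    return tuple(100 * part / total for part in (typical_v, tau2_level2, tau2_level3))


# Four hypothetical effects with equal sampling variance of 0.001.
level1, level2, level3 = variance_distribution([0.001] * 4, 0.002, 0.001)
print(round(level1, 1), round(level2, 1), round(level3, 1))  # 25.0 50.0 25.0
```

When sampling variances are small relative to the variance components, as with large samples, almost all of the variance is attributed to levels 2 and 3.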

Multiple predictor model

An analysis with multiple predictors was conducted to examine the unique influence of each significant univariate model predictor (perceived advice quality, estimate uncertainty, and accuracy incentive) on the summary weight of advice (see Supplementary Information for the univariate models). We excluded actual advice accuracy because only 35% of effect sizes could be coded for accuracy. There was no evidence of multicollinearity among the predictor variables as evidenced by VIFs ≤ 1.36. The overall model was significant, F(4, 279) = 10.82, p < 0.001. Only high, t(279) = 3.70, p < 0.001, and low, t(279) = 2.10, p = 0.037, perceived advice quality (relative to neutral perceived advice quality) had unique effects not confounded by other variables in the model.

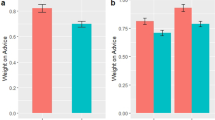

Advice-taking as a function of perceived advice quality

Next, we separately examined the weight of advice in response to advisors perceived to be providing (1) high quality advice, (2) neutral quality advice, and (3) low quality advice. Because there were no predictors of advice-taking when advice was perceived to be either high or low quality, we report these data in the Supplementary Information.

Advice perceived as neutral quality

The summary effect when the advisor was perceived to provide neutral quality advice (k = 170) equaled 0.38, 95% CI [0.35, 0.40], t(169) = 25.46, p < 0.001 (see Fig. 4). A boxplot identified no outlier effect sizes.

Forest Plot of the Weight of Advice in Response to Advisors Perceived to Provide Neutral Quality Advice. Note. The diamond represents the summary pooled weight of advice proportion. Each effect size and 95% confidence interval (error bar) represents an independent sample (s = 90). For articles with multiple independent samples, the effect size for each sample (S1, S2, etc.) is reported separately. Where a sample contributed more than one effect, the pooled effect, accounting for dependency between effects, is represented.

The original three-level model was a better fit than the two-level model in which level 2 (within-study variance) was not modelled (p < 0.0001), as well as the two-level model where level 3 (between-study variance) was fixed at zero (p < 0.0001). Consequently, there was significant variability between effect sizes within- and between-studies, and the estimated variance components were τ² (level 2) = 0.009 and τ² (level 3) = 0.015, respectively. Of the total variance, 1.45 percent was attributed to variance at level 1 (i.e., sampling variance); 61.95 percent was attributed to level 2 (i.e., within-study variance); and 36.59 percent was attributed to level 3 (i.e., between-study variance). We therefore extended our model to examine potential predictors.

Multiple predictor model

An analysis with multiple predictors was conducted to examine the unique influence of each significant univariate model predictor (estimate uncertainty, accuracy incentive, and participation payment) on the summary weight of advice (see Supplementary Information for the univariate models). There was no evidence of multicollinearity among the predictor variables as evidenced by VIFs ≤ 1.32. The overall model was significant, F(3, 124) = 8.93, p < 0.001. Only estimate uncertainty, t(124) = 4.10, p < 0.001, had a unique effect that was not confounded by other variables in the model.

Publication bias and power

To determine whether there was evidence of publication bias, we first visually inspected a funnel plot displaying the aggregated within-study effect size estimates and standard errors (see Fig. 5). A pattern of asymmetry in the funnel plot suggests potential publication bias. The Trim and Fill method imputed eight missing studies to the left of the mean overall effect, and Egger’s regression test detected significant bias (p = 0.012). We therefore cannot rule out publication bias.

Next, we inspected funnel plots displaying the aggregated within-study effect size estimates and standard errors separately for advice-taking in response to advisors perceived to provide high, neutral, and low quality advice (see Fig. 5). The Trim and Fill method imputed eight missing studies to the left of the mean effect for advisors perceived to provide high quality advice, and the updated estimate of the pooled effect size was 0.41, 95% CI [0.36, 0.47], but Egger’s regression test detected no significant bias (p = 0.122). Two missing studies were imputed to the left of the mean effect for advisors perceived to provide neutral advice quality, and the updated estimate of the pooled effect size was 0.36, 95% CI [0.33, 0.39], but Egger’s regression test detected no significant bias (p = 0.139). Two missing studies were imputed to the left of the mean effect for advisors perceived to provide low quality advice, and the updated estimate of the pooled effect size was 0.29, 95% CI [0.22, 0.36], but Egger’s regression test detected no significant bias (p = 0.194).
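For readers unfamiliar with Egger's regression test, the sketch below shows the classic form of the test (Egger et al., 1997) in plain Python: each effect size is divided by its standard error and regressed on precision (1 / standard error), and an intercept that departs from zero suggests funnel plot asymmetry. This is an assumption-laden illustration rather than the analysis code used here; the function name is hypothetical, and variants of the test used with multilevel models may differ.

```python
import math


def egger_intercept(effects, standard_errors):
    """Classic Egger regression: returns (intercept, t_statistic, df).

    Regresses the standardised effect (effect / SE) on precision (1 / SE)
    by ordinary least squares. The t statistic tests intercept = 0 and
    would be compared with a t distribution on df = n - 2.
    """
    n = len(effects)
    if n != len(standard_errors) or n < 3:
        raise ValueError("need at least three effects with matching standard errors")
    x = [1.0 / se for se in standard_errors]                    # precision
    y = [es / se for es, se in zip(effects, standard_errors)]   # standardised effect
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("standard errors must not all be identical")
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    residual_ss = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
    df = n - 2
    se_intercept = math.sqrt((residual_ss / df) * (1.0 / n + mean_x ** 2 / sxx))
    # Guard against a perfect fit, where the standard error is zero.
    t_stat = intercept / se_intercept if se_intercept > 0 else math.copysign(math.inf, intercept)
    return intercept, t_stat, df


# Hypothetical effects that grow with their standard errors (small-study effect).
ses = [0.05, 0.10, 0.15, 0.20, 0.25]
noise = [0.001, -0.001, 0.001, -0.001, 0.0]
effects = [0.4 + 1.0 * se + e * se for se, e in zip(ses, noise)]
print(egger_intercept(effects, ses))
```

In the hypothetical data, smaller (less precise) samples report larger effects, which is the pattern the intercept is designed to detect.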

Power analysis shows that we had 100% power to detect a small overall effect (d = 0.2) based on k = 346 and an average sample size of 134, regardless of the degree of heterogeneity (Valentine et al., 2010). If k = 38, as for studies that include advice from advisors perceived to provide low quality advice, we had 99.9% power to detect a small overall effect (d = 0.2) with the same average sample size. Power increases as the number of studies (s) and effect sizes (k) increase (Assink & Wibbelink, 2016). To ensure sufficient power, meta-regression requires at least 10 studies per predictor (Higgins & Green, 2006). We met this threshold for the two multiple predictor models that included three predictors each (k = 284, s = 117; k = 128, s = 67). We also met this threshold for the univariate model with the smallest number of studies (k = 31, s = 18). Nevertheless, any null effects should be interpreted with caution.
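A power calculation of this general kind can be sketched as follows. The sketch rests on several assumptions that are ours and not taken from the analysis above: each study's sample is split into two equal groups, the effect is a standardised mean difference, a normal approximation is used for a two-tailed test at α = .05, and heterogeneity is expressed as a multiple of the within-study variance (with 1/3, 1, and 3 as conventional low, moderate, and high values). The function names are hypothetical.

```python
import math


def normal_cdf(z):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def meta_power(d, k, n_per_study, heterogeneity=1.0, z_crit=1.959964):
    """Approximate power to detect a pooled effect d from k effect sizes.

    Assumes each study of size n_per_study has two equal groups.
    heterogeneity is tau^2 expressed as a multiple of the within-study
    variance v (0 corresponds to a fixed-effect model).
    """
    n1 = n2 = n_per_study / 2.0
    v = (n1 + n2) / (n1 * n2) + d ** 2 / (2.0 * (n1 + n2))  # variance of one d
    tau2 = heterogeneity * v
    se_pooled = math.sqrt((v + tau2) / k)
    lam = d / se_pooled
    # Two-tailed power under the normal approximation.
    return 1.0 - normal_cdf(z_crit - lam) + normal_cdf(-z_crit - lam)


for k in (346, 38):
    for label, h in (("low", 1.0 / 3.0), ("moderate", 1.0), ("high", 3.0)):
        print(f"k = {k}, {label} heterogeneity: power = {meta_power(0.2, k, 134, h):.4f}")
```

Under these assumptions, power falls as heterogeneity rises and as k shrinks, which is why subgroup analyses with fewer effect sizes warrant more cautious interpretation of null results.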

Discussion

The current meta-analysis examined the extent to which individuals use advice, as well as predictors of this behaviour. The combined results from 346 effect sizes within 129 independent data sets from 53 articles suggest that, on average, estimates are adjusted 39% towards advised estimates. This is less than the 50% that is considered a rational adjustment towards the estimate of an advisor, based on the statistical principle that aggregation of imperfect estimates reduces error (Larrick & Soll, 2006). Publication bias analyses showed that this tendency towards egocentric discounting of advice may be even stronger than suggested in the literature to date. Our analyses also revealed that characteristics of the sample do not predict the weight of advice, providing no evidence that advice-taking is influenced by age, gender, or individualism. The most significant predictor of advice-taking was information about the advisor suggesting the potential quality of the advice. When information about the advisor(s) was unavailable or neutral, more weight was given to advice when the estimation was based on a subjective or uncertain value compared to an objective or certain value.

Individual-level predictors of advice-taking

Characteristics of the judge

Although this meta-analysis did not support an effect of age on advice-taking, only two studies involved participants younger than 18 years of age (Molleman et al., 2021; Rakoczy et al., 2015), and only one study involved older adults (aged 65 years or more; Bailey et al., 2021a). We therefore cannot rule out maturation and socialisation influencing advice-taking prior to reaching young adulthood or in older age. However, these processes do not appear to influence advice-taking throughout adulthood. Similarly, there was no influence of degree of individualism on advice-taking. A suggestion that remains to be tested in future research is that geographical differences, including economic and psychosocial adversity, may have more of an influence on advice-taking than culturally transmitted ideologies (Morin et al., 2021). Alternatively, motivations underlying advice-taking, rather than degree of advice-taking, may differ for individualistic and collectivistic cultures. Whereas increased advice-taking in individualistic cultures may be motivated by the desire for autonomy and maintenance of self-concept (Rader et al., 2017), collectivistic cultures may be motivated by relational harmony, even in anonymous, one-off JAS interactions (Tinghu et al., 2018). Future studies should examine whether additional cultural differences such as power distance and advice-giver authority, and their interaction, influence advice-taking.

Increased trust and reduced confidence are associated with both greater advice-taking (Bonaccio & Dalal, 2006; Rader et al., 2017) and being female (Feingold, 1994; See et al., 2011). However, gender (i.e., percent female; 0.04% to 81.33% of each sample) was not a predictor of the weight of advice. There is some evidence that the effect of gender on trust may depend on the type of trust. For example, men have been found to be more trusting than women in an economic trust game when financial incentives are present (Buchan et al., 2008). Differing incentives between studies may have influenced trust-based gender effects in the JAS paradigm. Similarly, the effect of gender on confidence and therefore advice-taking may depend on context. Previous research showing that women are less confident in their judgements and take more advice than men was in the context of existing co-worker relationships (See et al., 2011). In contrast, the JAS paradigm typically involves one-off, anonymous interactions. Nevertheless, the current data may simply reflect a lack of any effect of gender on advice-taking.

Characteristics of the advisor

A mean weighting of 48% was evident in response to advice from advisors perceived to provide high quality advice. This closely approaches Larrick and Soll’s (2006) suggested rational weighting of 50%, and suggests that egocentric discounting may not be as pervasive as suggested in the literature to date. It may also be argued that a rational weighting should be greater than 50% if the advisor is perceived to be providing high quality advice. Given that people consider experts to provide more influential, more helpful, and less intrusive advice (Dalal & Bonaccio, 2010), it is not surprising that greater weight is given to advisors perceived to be providing high quality advice, including those described as experts. Critically, however, only perceptions of the accuracy of the advisor, and not actual advice accuracy or knowledge of actual accuracy, uniquely predicted advice-taking.

The mean weight of advice in response to advisors perceived to provide low quality advice (i.e., 32%) did not differ from the degree of advice-taking from advisors who were described in neutral terms (i.e., 37%). This is consistent with asymmetry of reputation formation over repeated interactions, which in turn is explained by risk aversion theories (Yaniv & Kleinberger, 2000). Specifically, the risk of an average advisor giving bad advice looms larger than the possibility that the advisor may provide good advice. We extend evidence for this effect from repeated interactions that involve progressive learning to one-off interactions and repeated interactions that do not involve feedback. An important distinction between these different methods of reputation formation is that first impressions are not always reliable. Thus, without first-hand evidence of the quality of advice, there is a risk that too much weight is given to advice from an unreliable advisor, or too little weight to good advice from an unknown advisor.

JAS-level and environment-level predictors of advice-taking

The current data contribute to clarification of competing theoretical propositions regarding the influence of estimate uncertainty on advice-taking. When information about the advisor is lacking, objective estimates are adjusted by 35%, while subjective/uncertain estimates are adjusted 55% towards advice. This greater weight of advice when determining a subjective estimate may reflect an understanding that subjective values are typically determined by aggregation (Laughlin & Ellis, 1986). It may also suggest that the judge perceives that their own knowledge of the estimate is uncertain and potentially reduced relative to the knowledge of the advisor, and this in turn may increase advice-taking (Gino & Moore, 2007; Yaniv & Kleinberger, 2000; Yaniv, 2004a, b). This type of knowledge comparison may occur more frequently when judges do not have information about the advisor that suggests the potential quality of the advice. Given previous evidence for a negative association between confidence and advice-taking (Bonaccio & Dalal, 2006), it is also possible that an uncertain estimate reduces the judge’s confidence which in turn increases advice-taking.

We did not find evidence for the alternative proposition that advice would be given more weight when estimating objective relative to subjective values because only the former offers the opportunity to improve accuracy. A preference for advice in relation to a subjective estimate where there is no single correct answer may suggest that the JAS is not always dominated by accuracy-seeking informational motives (See et al., 2011; Van Swol, 2011), but may also assess normative social influence and the motivation to maintain social harmony (Mahmoodi et al., 2015). Rader et al.’s (2017) review identified a focus on informational motives as both a strength and a limitation of the existing JAS literature. They recommended that future research reconnect with the social influence literature and normative motives within the JAS task. Our data suggest that these motives contribute to understanding egocentric discounting, and that future JAS research should examine the role of normative motives in reducing suboptimal egocentric discounting.

Although accuracy incentives and advice accuracy were predictors of advice-taking in the univariate models, they were not unique predictors of the mean weight of advice. There was also no influence of whether advice was imposed versus optional, or for multiple pieces of advice versus a single piece of advice. It should be noted that few studies included in the current meta-analysis examined whether advice was optional (< 5%) or the influence of receiving multiple pieces of advice (< 6%). Nevertheless, we considered these variables important to analyse given that they are input factors in Bonaccio and Dalal’s (2006) JAS IPO model.

Limitations and future directions

The current meta-analysis was the first to quantify the magnitude of advice-taking and the variables that influence this behaviour. We extended Bonaccio and Dalal’s (2006) input-process-output model to include perceptions of the advisor as a specific input factor that may predict advice-taking. We further broadened the focus of this model on situational influences as inputs (i.e., task characteristics) to include individual difference variables (i.e., decision-maker characteristics). The analysis is not without limitations, which are largely a consequence of the existing data sets. For example, additional characteristics of the advisor are likely to influence advice-taking. This includes trustworthiness, likability, and similarity to the judge (Feng & MacGeorge, 2010; MacGeorge & Van Swol, 2018b). These characteristics are not commonly measured in studies using the JAS paradigm. Likewise, there are several sample characteristics which were not analysed and which may nonetheless have an effect on advice-taking. This includes, but is not limited to, the judge’s expertise, personality, or desire for autonomy. Only a few studies have provided data to allow for an examination of advisor confidence. This individual-level factor is likely to interact with other variables such as advisor accuracy (Sah et al., 2013) or estimate uncertainty (Van Swol, 2011).

It was also not possible to measure the process category of the IPO model as a predictor. In contrast to the input level, the “process” level in the JAS IPO model, involving intra-JAS interaction between the judge and advisor, or between multiple advisors, is relatively neglected. For example, 111 out of 129 samples in the current meta-analysis interacted with advisors via a computer. Two interacted via telephone (i.e., Gino & Schweitzer, 2008, Study 1; Gino et al., 2009, Study 1), two face-to-face (Tinghu et al., 2018, Study 1, Study 2), one via web-cam (De Wit et al., 2017, Study 3), and 12 in writing (e.g., Kaliuzhna et al., 2012). One study did not specify the form of interaction (Minson & Mueller, 2012). Most studies using the JAS paradigm have involved anonymous interactions between judge and advisor. To adequately assess the effects of the process level, the next step will be to measure real-life judge-advisor interactions using more naturalistic methods such as experience sampling.

One of the methodological difficulties with the weight of advice metric as currently determined is that it does not capture instances where estimates move away from advice. In the JAS paradigm, such a score is typically adjusted to zero. However, a score of zero indicates that advice was simply ignored, rather than that it caused the judge to move their estimate in the opposite direction. This adjustment ensures that the average weight of advice is never negative, which biases the results toward finding evidence for advice-taking. Generally, this is not a substantial problem as most adjusted estimates fall between the initial estimate and the advice and so are not adjusted to zero (Harvey & Fischer, 1997). However, future JAS research should address the difficulties with the classic weight of advice formula to capture circumstances where the judge may incorporate advice but in the opposite direction to that suggested by the advisor. Future studies should also explore advice-taking calculations that account for non-linear dynamics of the opinion aggregation process. The current meta-analysis focused on adjustment of quantitative estimates following advice and did not examine the effects of advice-taking for decisions that involve choosing between qualitatively different options. Nevertheless, the current approach addresses a common criticism of meta-analysis, which is the problem of mixing ‘apples and oranges’ (Sharpe, 1997).
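The truncation issue can be made concrete with a minimal Python sketch of the weight of advice formula, (final estimate − initial estimate) / (advice − initial estimate). The function name and the example values are hypothetical. Capping scores above 1 at 1 is included as an assumption that mirrors the 0 to 1 scale; individual studies may handle such cases differently.

```python
def weight_of_advice(initial, advice, final, truncate=True):
    """Weight of advice: (final - initial) / (advice - initial).

    Returns None when advice equals the initial estimate, because the
    ratio is undefined. With truncate=True, scores below 0 (moving away
    from advice) are set to 0 and scores above 1 are set to 1.
    """
    if advice == initial:
        return None
    woa = (final - initial) / (advice - initial)
    if truncate:
        woa = min(max(woa, 0.0), 1.0)
    return woa


# Hypothetical judge: initial estimate 100, advisor says 200.
toward = weight_of_advice(100, 200, 130)                  # moves 30% toward advice
away_raw = weight_of_advice(100, 200, 90, truncate=False)  # moves away from advice
away_truncated = weight_of_advice(100, 200, 90)            # recorded as ignoring advice
print(toward, away_raw, away_truncated)
```

In the last two calls the judge moved away from the advice; the untruncated score is negative, whereas the truncated score is indistinguishable from simply ignoring the advice.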

Conclusion

In conclusion, the most significant predictor of advice-taking was information about the advisor(s) that suggested low, neutral, or high quality advice. However, risk aversion effects appeared to diminish differentiation of advisors perceived to provide low quality or neutral quality advice. Taken together, the benefits of compromise and averaging may be lost if accurate advice is perceived to be low quality, or too much weight is given to inaccurate advice that is perceived to be high quality. When there was no information about the advisor with which to establish the potential quality of advice, advice-taking increased when the estimate was of a subjective or uncertain nature relative to when there was an objectively correct answer, suggesting that normative motives may increase JAS advice-taking. The current data provide no evidence that advice-taking is influenced by age, gender, or individualism, while noting there is relatively little data about the effects of more extreme age groups on advice-taking. These findings provide an important evidence base across diverse contexts, from policy-makers tasked with advising the public to reduce risks, to professionals such as doctors advising patients with health-related information, or friends and families passing on uninformed financial advice. An understanding of advice-taking is critical for ensuring optimal integration of social information into independent judgment.

Data availability

Data and code are accessible at the Open Science Framework (https://osf.io/atz6y/?view_only=a5e435f0b5de42a286736725a11bb58d).

References

References with asterisks were included in the meta-analysis synthesis.

Assink, M., & Wibbelink, C. J. M. (2016). Fitting three-level meta-analytic models in R: A step-by-step tutorial. The Quantitative Methods for Psychology, 12(3), 154–174. https://doi.org/10.20982/tqmp.12.3.p154

Bailey, P. E., Ebner, N. C., & Stine-Morrow, E. A. L. (2021b). Introduction to the special issue on prosociality in adult development and aging: Advancing theory within a multilevel framework. Psychology and Aging, 36(1), 1–9. https://doi.org/10.1037/pag0000598

*Bailey, P. E., Ebner, N. C., Moustafa, A. A., Phillips, J. R., Leon, T., & Weidemann, G. (2021a). The weight of advice in older age. Decision, 8(2), 123–132. https://doi.org/10.1037/dec0000138

Barendregt, J. J., Doi, S. A., Lee, Y. Y., Norman, R. E., & Vos, T. (2013). Meta-analysis of prevalence. Journal of Epidemiology and Community Health, 67, 974–978. https://doi.org/10.1136/jech-2013-203104

Bonaccio, S., & Dalal, R. S. (2006). Advice taking and decision-making: An integrative literature review, and implications for the organizational sciences. Organizational Behaviour and Human Decision Processes, 101(2), 127–151. https://doi.org/10.1016/j.obhdp.2006.07.001

Borenstein, M., Hedges, L. V., Higgins, J. T., & Rothstein, H. R. (2009). Introduction to Meta-Analysis. John Wiley and Sons. https://doi.org/10.1016/B978-0-240-81203-8.00002-7

Buchan, N. R., Croson, R. T. A., & Solnick, S. (2008). Trust and gender: An examination of behaviour and beliefs in the Investment Game. Journal of Economic Behaviour and Organization, 68(3–4), 466–476. https://doi.org/10.1016/j.jebo.2007.10.006

Burda, B. U., O’Connor, E. A., Webber, E. M., Redmond, N., & Perdue, L. A. (2017). Estimating data from figures with a web-based program: Considerations for a systematic review. Research Synthesis Methods, 8(3), 258–262. https://doi.org/10.1002/jrsm.1232

*Carbonell, G., Meshi, D., & Brand, M. (2019). The use of recommendations on physician rating websites: The number of raters makes the difference when adjusting decisions. Health Communication, 34(13), 1653–1662. https://doi.org/10.1080/10410236.2018.1517636

Cooper, H., Hedges, L. V., & Valentine, J. C. (2009). The handbook of research synthesis and meta-analysis (2nd ed.). Russell Sage Foundation.

Dalal, R. S., & Bonaccio, S. (2010). What types of advice do decision-makers prefer? Organizational Behaviour and Human Decision Processes, 112(1), 11–23. https://doi.org/10.1016/j.obhdp.2009.11.007

*De Hooge, I. E., Verlegh, P. W. J., & Tzioti, S. C. (2014). Emotions in advice taking: The roles of agency and valence. Journal of Behavioural Decision Making, 27(3), 246–258. https://doi.org/10.1002/bdm.1801

*De Wit, F. R. C., Scheepers, D., Ellemers, N., Sassenberg, K., & Scholl, A. (2017). Whether power holders construe their power as responsibility or opportunity influences their tendency to take advice from others. Journal of Organizational Behaviour, 38(7), 923–949. https://doi.org/10.1002/job.2171

Del Re, A. C., & Hoyt, W. T. (2014). MAd: Meta-analysis with mean differences. In R Package. http://cran.r-project.org/web/packages/MAd

Duan, J., Xu, Y., & Van Swol, L. M. (2021). Influence of self-concept clarity on advice seeking and utilisation. Asian Journal of Social Psychology, 24, 435–444. https://doi.org/10.1111/ajsp.12435

Duval, S., & Tweedie, R. (2000). Trim and fill: A simple funnel-plot-based method of testing and adjusting for publication bias in meta-analysis. Biometrics, 56, 455–463.

Egger, M., Davey Smith, G., Schneider, M., & Minder, C. (1997). Bias in meta-analysis detected by a simple, graphical test. BMJ, 315, 629–634. https://doi.org/10.1136/bmj.315.7109.629

Feingold, A. (1994). Gender differences in personality: A meta-analysis. Psychological Bulletin, 116(3), 429–456. https://doi.org/10.1037//0033-2909.116.3.429

Feng, B., & MacGeorge, E. L. (2010). The influences of message and source factors on advice outcomes. Communication Research, 37(4), 553–575. https://doi.org/10.1177/0093650210368258

*Fiedler, K., Hütter, M., Schott, M., & Kutzner, F. (2019). Metacognitive myopia and the overutilization of misleading advice. Journal of Behavioural Decision Making, 32(3), 317–333. https://doi.org/10.1002/bdm.2109

Gheorghiu, M. A., Vignoles, V. L., & Smith, P. B. (2009). Beyond the United States and Japan: Testing Yamagishi’s emancipation theory of trust across 31 nations. Social Psychology Quarterly, 72(4), 365–383. https://doi.org/10.1177/019027250907200408

*Gino, F., & Moore, D. A. (2007). Effects of task difficulty on use of advice. Journal of Behavioural Decision Making, 20(1), 21–35. https://doi.org/10.1002/bdm.539

*Gino, F., & Schweitzer, M. E. (2008). Blinded by anger or feeling the love: How emotions influence advice taking. Journal of Applied Psychology, 93(5), 1165–1173. https://doi.org/10.1037/0021-9010.93.5.1165

*Gino, F., Shang, J., & Croson, R. (2009). The impact of information from similar or different advisors on judgment. Organizational Behaviour and Human Decision Processes, 108(2), 287–302. https://doi.org/10.1016/j.obhdp.2008.08.002

*Gino, F., Brooks, A. W., & Schweitzer, M. E. (2012). Anxiety, advice, and the ability to discern: Feeling anxious motivates individuals to seek and use advice. Journal of Personality and Social Psychology, 102(3), 497–512. https://doi.org/10.1037/a0026413

*Gino, F. (2008). Do we listen to advice just because we paid for it? The impact of advice cost on its use. Organizational Behaviour and Human Decision Processes, 107(2), 234–245. https://doi.org/10.1016/j.obhdp.2008.03.001

*Haran, U., & Shalvi, S. (2020). The implicit honesty premium: Why honest advice is more persuasive than highly informed advice. Journal of Experimental Psychology: General, 149(4), 757–773. https://doi.org/10.1037/xge0000677

Harvey, N., & Fischer, I. (1997). Taking advice: Accepting help, improving judgment, and sharing responsibility. Organizational Behaviour and Human Decision Processes, 70(2), 117–133. https://doi.org/10.1006/obhd.1997.2697

*Häusser, J. A., Leder, J., Ketturat, C., Dresler, M., & Faber, N. S. (2016). Sleep Deprivation and Advice Taking. Scientific Reports, 6, 1–8. https://doi.org/10.1038/srep24386

Higgins, J. P. T., & Green, S. (2006). Cochrane handbook for systematic reviews of interventions 4.2.6. In The Cochrane Library, Issue 4 (pp. 1–181). John Wiley & Sons.

*Hofheinz, C., Germar, M., Schultze, T., Michalak, J., & Mojzisch, A. (2017). Are depressed people more or less susceptible to informational social influence? Cognitive Therapy and Research, 41(5), 699–711. https://doi.org/10.1007/s10608-017-9848-7

Hox, J. J. (2010). Multilevel analysis: Techniques and applications. Routledge.

*Hütter, M., & Ache, F. (2016). Seeking advice: A sampling approach to advice taking. Judgment and Decision Making, 11(4), 401–415.

*Hütter, M., & Fiedler, K. (2019). Advice taking under uncertainty: The impact of genuine advice versus arbitrary anchors on judgment. Journal of Experimental Social Psychology, 85(March), 103829. https://doi.org/10.1016/j.jesp.2019.103829

*Kadous, K., Leiby, J., & Peecher, M. E. (2013). How do auditors weight informal contrary advice? The joint influence of advisor social bond and advice justifiability. Accounting Review, 88(6), 2061–2087. https://doi.org/10.2308/accr-50529

*Kaliuzhna, M., Chambon, V., Franck, N., Testud, B., & van der Henst, J. B. (2012). Belief revision and delusions: How do patients with schizophrenia take advice? PLoS ONE, 7(4), 7–9. https://doi.org/10.1371/journal.pone.0034771

*Kausel, E. E., Culbertson, S. S., Leiva, P. I., Slaughter, J. E., & Jackson, A. T. (2015). Too arrogant for their own good? Why and when narcissists dismiss advice. Organizational Behaviour and Human Decision Processes, 131, 33–50. https://doi.org/10.1016/j.obhdp.2015.07.006

*Kim, H. Y., Lee, Y. S., & Jun, D. B. (2020). Individual and group advice taking in judgmental forecasting: Is group forecasting superior to individual forecasting? Journal of Behavioural Decision Making, 33(3), 287–303. https://doi.org/10.1002/bdm.2158

Knutson, B., Fong, G. W., Adams, C. M., Varner, J. L., & Hommer, D. (2001). Dissociation of reward anticipation and outcome with event-related fMRI. NeuroReport, 12, 3683–3687. https://doi.org/10.1097/00001756-200112040-00016

Larrick, R. P., & Soll, J. B. (2006). Intuitions about combining opinions: Misappreciation of the averaging principle. Management Science, 52(1), 111–127. https://doi.org/10.1287/mnsc.1050.0459

*Larson, J. R., Tindale, R. S., & Yoon, Y. J. (2020). Advice taking by groups: The effects of consensus seeking and member opinion differences. Group Processes and Intergroup Relations, 23(7), 921–942. https://doi.org/10.1177/1368430219871349

Laughlin, P. R., & Ellis, A. L. (1986). Demonstrability and social combination processes on mathematical intellective tasks. Journal of Experimental Social Psychology, 22, 177–189. https://doi.org/10.1016/0022-1031(86)90022-3

*Logg, J. M., Minson, J. A., & Moore, D. A. (2019). Algorithm appreciation: People prefer algorithmic to human judgment. Organizational Behaviour and Human Decision Processes, 151(December 2018), 90–103. https://doi.org/10.1016/j.obhdp.2018.12.005

MacGeorge, E. L., Guntzviller, L. M., Hanasono, L. K., & Feng, B. (2016). Testing advice response theory in interactions with friends. Communication Research, 43(2), 211–231. https://doi.org/10.1177/0093650213510938

MacGeorge, E. L., & Van Swol, L. M. (2018a). Advice across disciplines and contexts. In E. L. MacGeorge & L. M. Van Swol (Eds.), The Oxford Handbook of Advice. Oxford University Press. https://doi.org/10.1093/oxfordhb/9780190630188.001.0001

MacGeorge, E. L., & Van Swol, L. M. (2018b). Advice: Communication with consequence. In E. L. MacGeorge & L. M. Van Swol (Eds.), The Oxford Handbook of Advice. Oxford University Press. https://doi.org/10.1093/oxfordhb/9780190630188.001.0001

Mahmoodi, A., Bang, D., Olsen, K., Zhao, Y. A., Shi, Z., Broberg, K., Safavi, S., Han, S., Ahmadabadi, M. N., Frith, C. D., Roepstorff, A., Rees, G., & Bahrami, B. (2015). Equality bias impairs collective decision-making across cultures. PNAS, 112(12), 3835–3840. https://doi.org/10.1073/pnas.1421692112

*Meshi, D., Biele, G., Korn, C. W., & Heekeren, H. R. (2012). How expert advice influences decision making. PLoS ONE, 7(11). https://doi.org/10.1371/journal.pone.0049748

*Minson, J. A., & Mueller, J. S. (2012). The cost of collaboration: Why joint decision making exacerbates rejection of outside information. Psychological Science, 23(3), 219–224. https://doi.org/10.1177/0956797611429132

Moher, D., Liberati, A., Tetzlaff, J., Altman, D. G., & Group, T. P. (2009). Preferred reporting items for systematic reviews and meta-analyses: The PRISMA statement. PLOS Medicine, 6, 1–6. https://doi.org/10.1371/journal.pmed.1000097

*Molleman, L., Tump, A. N., Gradassi, A., Herzog, S., Jayles, B., Kurvers, R. H. J. M., & van den Bos, W. (2020). Strategies for integrating disparate social information. Proceedings of the Royal Society B: Biological Sciences, 287(1939). https://doi.org/10.1098/rspb.2020.2413

*Molleman, L., Ciranka, S., & van den Bos, W. (2021). Social influence in adolescence as a double-edged sword. PsyArXiv. https://doi.org/10.31234/osf.io/gcbdf

Morin, O., Jacquet, P. O., Vaesen, K., & Acerbi, A. (2021). Social information use and social information waste. Philosophical Transactions of the Royal Society, 376(1828), 20200052. https://doi.org/10.1098/rstb.2020.0052

*Önkal, D., Goodwin, P., Thomson, M., Gönül, S., & Pollock, A. (2009). The relative influence of advice from human experts and statistical methods on forecast adjustments. Journal of Behavioural Decision Making, 22(4), 390–409. https://doi.org/10.1002/bdm.637

*Önkal, D., Sinan Gönül, M., Goodwin, P., Thomson, M., & Öz, E. (2017). Evaluating expert advice in forecasting: Users’ reactions to presumed vs. experienced credibility. International Journal of Forecasting, 33(1), 280–297. https://doi.org/10.1016/j.ijforecast.2015.12.009

Peters, E., Hess, T. M., Västfjäll, D., & Auman, C. (2007). Adult age differences in dual information processes: Implications for the role of affective and deliberate processes in older adults’ decision making. Perspectives on Psychological Science, 2(1), 1–23. https://doi.org/10.1111/j.1745-6916.2007.00025.x

*Prahl, A., & Van Swol, L. (2017). Understanding algorithm aversion: When is advice from automation discounted? Journal of Forecasting, 36(6), 691–702. https://doi.org/10.1002/for.2464

R Core Team. (2021). R: A language and environment for statistical computing, Vienna, Austria. https://www.R-project.org/

Rader, C. A., Larrick, R. P., & Soll, J. B. (2017). Advice as a form of social influence: Informational motives and the consequences for accuracy. Social and Personality Psychology Compass, 11(8), e12329. https://doi.org/10.1111/spc3.12329

*Rakoczy, H., Ehrling, C., Harris, P. L., & Schultze, T. (2015). Young children heed advice selectively. Journal of Experimental Child Psychology, 138, 71–87. https://doi.org/10.1016/j.jecp.2015.04.007

*Rees, L., Rothman, N. B., Lehavy, R., & Sanchez-Burks, J. (2013). The ambivalent mind can be a wise mind: Emotional ambivalence increases judgment accuracy. Journal of Experimental Social Psychology, 49(3), 360–367. https://doi.org/10.1016/j.jesp.2012.12.017

*Reyt, J. N., Wiesenfeld, B. M., & Trope, Y. (2016). Big picture is better: The social implications of construal level for advice taking. Organizational Behaviour and Human Decision Processes, 135, 22–31. https://doi.org/10.1016/j.obhdp.2016.05.004

*Ribeiro, V. F., Hilal, A. V. G. de, & Avila, M. G. (2020). Advisor gender and advice justification in advice taking. RAUSP Management Journal, 55(1), 4–21. https://doi.org/10.1108/RAUSP-08-2018-0068

*Sah, S., Moore, D. A., & MacCoun, R. J. (2013). Cheap talk and credibility: The consequences of confidence and accuracy on advisor credibility and persuasiveness. Organizational Behaviour and Human Decision Processes, 121(2), 246–255. https://doi.org/10.1016/j.obhdp.2013.02.001

*Scheunemann, J., Gawęda, Ł., Reininger, K. M., Jelinek, L., Hildebrandt, H., & Moritz, S. (2020). Advice weighting as a novel measure for belief flexibility in people with psychotic-like experiences. Schizophrenia Research, 216, 129–137. https://doi.org/10.1016/j.schres.2019.12.016

*Scheunemann, J., Fischer, R., & Moritz, S. (2021). Probing the hypersalience hypothesis – an adapted judge-advisor system tested in individuals with psychotic-like experiences. Frontiers in Psychiatry, 12, 158. https://doi.org/10.3389/fpsyt.2021.612810

Schilbach, L., Eickhoff, S. B., Schultze, T., Mojzisch, A., & Vogeley, K. (2013). To you I am listening: Perceived competence of advisors influences judgment and decision-making via recruitment of the amygdala. Social Neuroscience, 8(3), 189–202. https://doi.org/10.1080/17470919.2013.775967

*Schul, Y., & Peri, N. (2015). Influences of distrust (and trust) on decision making. Social Cognition, 33(5), 414–435. https://doi.org/10.1521/soco.2015.33.5.414

*Schultze, T., & Loschelder, D. D. (2021). How numeric advice precision affects advice taking. Journal of Behavioural Decision Making, 34(3), 303–310. https://doi.org/10.1002/bdm.2211

*Schultze, T., Rakotoarisoa, A. F., & Schulz-Hardt, S. (2015). Effects of distance between initial estimates and advice on advice utilization. Judgment and Decision Making, 10(2), 144–171.

*Schultze, T., Mojzisch, A., & Schulz-Hardt, S. (2017). On the inability to ignore useless advice: A case for anchoring in the judge-advisor-system. Experimental Psychology, 64(3), 170–183. https://doi.org/10.1027/1618-3169/a000361

*Schultze, T., Gerlach, T. M., & Rittich, J. C. (2018). Some people heed advice less than others: Agency (but not communion) predicts advice taking. Journal of Behavioural Decision Making, 31(3), 430–445. https://doi.org/10.1002/bdm.2065

*Schultze, T., Mojzisch, A., & Schulz-Hardt, S. (2019). Why dyads heed advice less than individuals do. Judgment and Decision Making, 14(3), 349–363.

Sciandra, M. R. (2019). For one or many? Tie strength and the impact of broadcasted vs. narrowcasted advice. Journal of Marketing Communications, 25(5), 494–510. https://doi.org/10.1080/13527266.2017.1360930

Scopelliti, I., Morewedge, C. K., McCormick, E., Min, H. L., Lebrecht, S., & Kassam, K. S. (2015). Bias blind spot: Structure, measurement, and consequences. Management Science, 61(10), 2468–2486. https://doi.org/10.1287/mnsc.2014.2096

*See, K. E., Morrison, E. W., Rothman, N. B., & Soll, J. B. (2011). The detrimental effects of power on confidence, advice taking, and accuracy. Organizational Behavior and Human Decision Processes, 116(2), 272–285. https://doi.org/10.1016/j.obhdp.2011.07.006

Sharpe, D. (1997). Of apples and oranges, file drawers and garbage: Why validity issues in meta-analysis will not go away. Clinical Psychology Review, 17(8), 881–901. https://doi.org/10.1016/s0272-7358(97)00056-1

Sniezek, J. A., & Buckley, T. (1995). Cueing and cognitive conflict in judge-advisor decision making. Organizational Behavior and Human Decision Processes, 62, 159–174.

Sniezek, J. A., Schrah, G. E., & Dalal, R. S. (2004). Improving judgement with prepaid expert advice. Journal of Behavioral Decision Making, 17(3), 173–190. https://doi.org/10.1002/bdm.468

Soll, J. B., & Larrick, R. P. (2009). Strategies for revising judgment: How (and how well) people use others’ opinions. Journal of Experimental Psychology: Learning, Memory, and Cognition, 35, 780–805.

*Tinghu, K., Li, W., Peiling, X., & Qian, P. (2018). Taking advice for vocational decisions: Regulatory fit effects. Journal of Pacific Rim Psychology, 12. https://doi.org/10.1017/prp.2017.12

*Tost, L. P., Gino, F., & Larrick, R. P. (2012). Power, competitiveness, and advice taking: Why the powerful don’t listen. Organizational Behavior and Human Decision Processes, 117(1), 53–65. https://doi.org/10.1016/j.obhdp.2011.10.001

*Trouche, E., Johansson, P., Hall, L., & Mercier, H. (2018). Vigilant conservatism in evaluating communicated information. PLoS ONE, 13(1), 1–17. https://doi.org/10.1371/journal.pone.0188825

*Tzini, K., & Jain, K. (2018). The role of anticipated regret in advice taking. Journal of Behavioral Decision Making, 31(1), 74–86. https://doi.org/10.1002/bdm.2048

Tzioti, S. C., Wierenga, B., & van Osselaer, S. M. J. (2014). The effect of intuitive advice justification on advice taking. Journal of Behavioral Decision Making, 27(1), 66–77. https://doi.org/10.1002/bdm.1790

Valentine, J. C., Pigott, T. D., & Rothstein, H. R. (2010). How many studies do you need? A primer on statistical power for meta-analysis. Journal of Educational and Behavioral Statistics, 35(2), 215–247. https://doi.org/10.3102/1076998609346961

Van den Noortgate, W., López-López, J. A., Marín-Martínez, F., & Sánchez-Meca, J. (2013). Three-level meta-analysis of dependent effect sizes. Behavior Research Methods, 45(2), 576–594. https://doi.org/10.3758/s13428-012-0261-6

Van Swol, L. M. (2011). Forecasting another’s enjoyment versus giving the right answer: Trust, shared values, task effects, and confidence in improving the acceptance of advice. International Journal of Forecasting, 27, 103–120. https://doi.org/10.1016/j.ijforecast.2010.03.002

Van Swol, L. M., Paik, J. E., & Prahl, A. (2018). Advice recipients: The psychology of advice utilization. In E. L. MacGeorge & L. M. Van Swol (Eds.), The Oxford Handbook of Advice. Oxford University Press. https://doi.org/10.1093/oxfordhb/9780190630188.001.0001

Viechtbauer, W. (2010). Conducting meta-analyses in R with the metafor package. Journal of Statistical Software, 36(3), 1–48. https://doi.org/10.18637/jss.v036.i03

*Wang, X., & Du, X. (2018). Why does advice discounting occur? The combined roles of confidence and trust. Frontiers in Psychology, 9, 1–8. https://doi.org/10.3389/fpsyg.2018.02381

*Wanzel, S. K., Schultze, T., & Schulz-Hardt, S. (2017). Disentangling the effects of advisor consensus and advice proximity. Journal of Experimental Psychology: Learning, Memory, and Cognition, 43(10), 1669–1675. https://doi.org/10.1037/xlm0000396

Yamagishi, T., Cook, K. S., & Watabe, M. (1998). Uncertainty, trust, and commitment formation in the United States and Japan. American Journal of Sociology, 104(1), 165–194. https://doi.org/10.1086/210005

Yaniv, I. (2004a). Receiving other people’s advice: Influence and benefit. Organizational Behavior and Human Decision Processes, 93(1), 1–13. https://doi.org/10.1016/j.obhdp.2003.08.002

Yaniv, I. (2004b). The benefit of additional opinions. Current Directions in Psychological Science, 13(2), 75–78. https://doi.org/10.1111/j.0963-7214.2004.00278.x

Yaniv, I., & Choshen-Hillel, S. (2012). Exploiting the wisdom of others to make better decisions: Suspending judgment reduces egocentrism and increases accuracy. Journal of Behavioral Decision Making, 25(5), 427–434. https://doi.org/10.1002/bdm.740

*Yaniv, I., & Kleinberger, E. (2000). Advice taking in decision making: Egocentric discounting and reputation formation. Organizational Behavior and Human Decision Processes, 83(2), 260–281. https://doi.org/10.1006/obhd.2000.2909

*Yaniv, I., & Milyavsky, M. (2007). Using advice from multiple sources to revise and improve judgments. Organizational Behavior and Human Decision Processes, 103(1), 104–120. https://doi.org/10.1016/j.obhdp.2006.05.006

*Yoon, H., Scopelliti, I., & Morewedge, C. K. (2021). Decision making can be improved through observational learning. Organizational Behavior and Human Decision Processes, 162, 155–188. https://doi.org/10.1016/j.obhdp.2020.10.011

*Zhang, T., & North, M. S. (2020). What goes down when advice goes up: Younger advisers underestimate their impact. Personality and Social Psychology Bulletin, 46(10), 1444–1460. https://doi.org/10.1177/0146167220905221

Acknowledgements

We acknowledge Professor Tom Denson for providing statistical advice.

Funding

Open Access funding enabled and organized by CAUL and its Member Institutions. This research was supported under the Australian Research Council’s Discovery Projects funding scheme (DP200100876) and National Institutes of Health Grant 1R01AG057764.

Author information

Contributions

The review was conceived and designed by Phoebe Bailey, Tarren Leon, and Gabrielle Weidemann. The literature search was performed by Tarren Leon. Data analysis and drafting were completed by Phoebe Bailey. Critical revisions of the work were carried out by Tarren Leon, Natalie Ebner, Ahmed Moustafa, and Gabrielle Weidemann.

Ethics declarations

Conflict of interest

The authors have no relevant financial or non-financial interests to disclose.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Bailey, P.E., Leon, T., Ebner, N.C. et al. A meta-analysis of the weight of advice in decision-making. Curr Psychol 42, 24516–24541 (2023). https://doi.org/10.1007/s12144-022-03573-2

DOI: https://doi.org/10.1007/s12144-022-03573-2