Abstract

Single-solution-based optimization algorithms have received little attention from the research community, unlike population-based approaches. This paper proposes a novel optimization algorithm, called Single Candidate Optimizer (SCO), that relies on only a single candidate solution throughout the whole optimization process. The proposed algorithm implements a unique set of equations to effectively update the position of the candidate solution. To balance exploration and exploitation, SCO is integrated with a two-phase strategy in which the candidate solution updates its position differently in each phase. The effectiveness of the proposed approach is validated by testing it on thirty-three classical benchmarking functions and four real-world engineering problems. SCO is compared with three well-known optimization algorithms, i.e., Particle Swarm Optimization, Grey Wolf Optimizer, and Gravitational Search Algorithm, and with four recent high-performance algorithms: Equilibrium Optimizer, Archimedes Optimization Algorithm, Mayfly Algorithm, and Salp Swarm Algorithm. According to Friedman and Wilcoxon rank-sum tests, SCO can significantly outperform all other algorithms for the majority of the investigated problems. The results achieved by SCO motivate the design and development of new single-solution-based optimization algorithms to further improve the performance. The source code of SCO is publicly available at: https://uk.mathworks.com/matlabcentral/fileexchange/116100-single-candidate-optimizer.

1 Introduction

The rapid advances in science and technology in the last decade have increased the difficulty of real-world optimization problems, which motivates the development of fast and efficient optimization algorithms. The first step in optimization is to formulate an objective function that can be maximized or minimized. Once the optimization problem is formulated, an optimization algorithm is needed to search for the variables that achieve the best solution. Real-world optimization problems are mathematically formulated as follows:

where \(f({\mathbf {x}})\) is the objective function that needs to be optimized; D, L, and K are the numbers of dimensions (variables), inequality constraints, and equality constraints, respectively; and \(ub_j\) and \(lb_j\) represent the upper and lower bounds of variable x at dimension j.
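For reference, a standard constrained form that matches these symbols can be written as below. This is a reconstruction under assumptions rather than a quotation: minimization, the \(g_l \le 0\) sign convention, and the constraint names \(g_l\) and \(h_k\) are conventional choices that are not given in the text.

```latex
\begin{aligned}
\min_{\mathbf{x}} \quad & f(\mathbf{x}), \qquad \mathbf{x} = (x_1, x_2, \ldots, x_D) \\
\text{subject to} \quad & g_l(\mathbf{x}) \le 0, \qquad l = 1, \ldots, L \\
& h_k(\mathbf{x}) = 0, \qquad k = 1, \ldots, K \\
& lb_j \le x_j \le ub_j, \qquad j = 1, \ldots, D
\end{aligned}
```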

Generally, optimization problems can be solved by deterministic or stochastic methods. Utilizing gradient information, linear and non-linear programming are two prominent deterministic methods that can be used to find the optimal solution of a given problem. However, these conventional deterministic methods can converge to local optima [1, 2]. To overcome the limitations of the conventional approaches, meta-heuristic algorithms, as a stochastic approach, can be used to solve complicated real-world optimization problems. Meta-heuristic algorithms have shown robust performance when applied to different optimization problems in various fields such as wireless communications [3,4,5] and artificial intelligence [6,7,8].

The main merits of meta-heuristic algorithms are their simplicity, flexibility, ability to avoid local optima, and derivative-free mechanisms [9]. The searching process of meta-heuristic algorithms is split into two phases: exploration and exploitation. The exploration phase broadly spans the search space with the aim of finding promising regions that can lead towards the optimal solution [10]. Poor exploration can lead to local optima entrapment. The exploitation phase focuses on searching around the promising regions discovered in the exploration phase. The inability to perform successful exploitation can significantly reduce the solution accuracy. Balancing exploration and exploitation is one of the major challenges faced by meta-heuristic approaches. An efficient meta-heuristic algorithm is one that:

1. Balances well between exploration and exploitation
2. Provides a high level of accuracy
3. Converges towards the optimal solution and escapes from local optima
4. Has a stable performance where results are not significantly different from one independent run to another

The purpose of this paper is to develop a robust optimization algorithm that can be used to solve diverse real-world optimization problems. The rest of the paper is organized as follows. In Section two, a literature review on meta-heuristic algorithms is provided. Section three develops the mathematical model and the algorithm of the proposed approach. It also presents the complexity of the proposed single candidate optimizer. Sections four and five discuss the performance of the proposed optimization algorithm on thirty-three benchmarking functions and four engineering problems, respectively. Finally, Section six concludes this work and provides some potential research directions.

2 Literature review

Meta-heuristic algorithms can be classified into four main groups: swarm algorithms, evolutionary algorithms, physics-based approaches, and human-based algorithms [11]. Swarm intelligence algorithms are inspired by the behaviour of animals when they search for food in groups. In this category, the information of all or part of the particles is shared during an iterative optimization process. One of the most well-known swarm approaches is Particle Swarm Optimization (PSO), which was developed by Kennedy and Eberhart in 1995 [12]. PSO mimics birds flying in swarms where individuals in a swarm are guided by a leader who has the closest position to the target.

In PSO, a swarm of particles, each representing a potential solution, flies in the search space with the aim of finding better positions that help to move toward the optimal solution. During the PSO iterative process, each particle is attracted to the global best position (gbest), which is the position of the particle that has achieved the best fitness so far, and to its own best historical position (pbest). Other widely known swarm algorithms are: Grey Wolf Optimizer [9], Ant Colony Optimization [13], Salp Swarm Algorithm [14], Whale Optimization Algorithm [11], Krill Herd [15], Butterfly Optimization Algorithm [16], Seagull Optimization Algorithm [17], and Cuckoo Search [18].
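The attraction toward pbest and gbest described above is usually expressed as a velocity update followed by a position update. The sketch below uses the common inertia-weight form of PSO; the coefficient values (inertia 0.7, c1 = c2 = 1.5) and the function name `pso_step` are illustrative assumptions, not settings taken from this paper.

```python
import random


def pso_step(x, v, pbest, gbest, inertia=0.7, c1=1.5, c2=1.5, rng=random):
    """One velocity-and-position update for a single particle.

    x, v, pbest, and gbest are equal-length lists of floats.
    The coefficient defaults are illustrative assumptions.
    """
    new_x, new_v = [], []
    for xj, vj, pj, gj in zip(x, v, pbest, gbest):
        r1, r2 = rng.random(), rng.random()
        # Keep part of the old velocity, then pull toward pbest and gbest.
        vj = inertia * vj + c1 * r1 * (pj - xj) + c2 * r2 * (gj - xj)
        new_v.append(vj)
        new_x.append(xj + vj)
    return new_x, new_v
```

A particle that already sits on both its pbest and gbest with zero velocity does not move, which is one reason swarm methods depend on diversity among particles.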

Evolutionary algorithms, the second class of meta-heuristics, are developed by imitating mechanisms of biological evolution such as mutation and crossover. The most well-known evolutionary algorithm is the Genetic Algorithm (GA) developed by Holland in 1992 [19]. A GA implements three main steps: selection, crossover, and mutation. In the selection process, some of the existing candidate solutions (ones with better fitness) are selected to produce a second generation using the crossover concept. To maintain diversity, some dimensions of certain solutions are mutated with a mutation probability. Besides GA, Evolutionary Programming [20], Differential Evolution (DE) [21], and Evolution Strategies [22] are three other widely used evolutionary approaches.

The third category of meta-heuristic algorithms utilizes the laws of physics such as Newton’s gravitational law and Archimedes’ principle to build interactions between candidate solutions. Simulated Annealing (SA) [23] and Gravitational Search Algorithm (GSA) [24] are two prominent approaches that belong to this class. SA is a single-solution-based algorithm that imitates the physical annealing process of metals. In GSA, candidate solutions are treated as a collection of masses that obey Newton’s gravity and motion laws. Equilibrium Optimizer [25] and Henry Gas Solubility Optimization [26] are two recent state-of-the-art physics-based optimization algorithms. Other optimization algorithms that belong to this class are: Sine Cosine Algorithm [48], Water Cycle Algorithm [49], Black Hole algorithm [50], and Thermal Exchange Optimization [51].

The final group of meta-heuristic methods emulates the social behaviour of humans. For instance, Political Optimizer [36] and Parliamentary Optimization Algorithm [52] are two optimization algorithms inspired by the political process. Teaching-Learning-Based Optimization (TLBO) [53] is another social example whose mechanism is developed by mimicking the teaching-learning process in a classroom. Election Campaign Optimization [54], Brain Storm Optimization [55], Exchange Market Algorithm [56], Bus Transportation Algorithm [57], Group Teaching Optimization Algorithm [37], and Student Psychology Based Optimization [58] are other optimization algorithms that belong to this category.

Another classification of meta-heuristic algorithms is presented in [59] where meta-heuristic algorithms are divided into nine different categories: swarm-based, chemical-based, biology-based, physics-based, sports-based, musical-based, social-based, mathematical-based, and hybrid approaches. Besides the nine aforementioned categories, the authors in [60,61,62] added water-based, light-based and plant-based as three different classes of intelligent optimization algorithms.

Based on the number of candidate solutions involved in each iteration of the optimization process, meta-heuristic algorithms can also be classified into two categories: single-solution-based and population-based. In single-solution-based methods, only a single candidate solution is used to search for the optimal solution, while population-based methods need a swarm of candidate solutions. Most of the recent literature, if not all, as can be seen from Table 1, has focused on population-based methods, as it is believed amongst the research community that single-solution-based algorithms always perform poorly compared with population-based approaches. This belief arises because the performance of the three most common single-solution-based approaches, i.e., SA, Tabu Search [63], and Hill Climbing, is very poor when compared with population-based algorithms. Lack of inspiration is another reason that has led to the neglect of single-solution-based algorithms. It is hard to find natural, physical or social phenomena that rely on a single object or creature. On the other hand, it is relatively easy to observe natural or physical behaviours generated by groups. These two main reasons have increased the popularity of population-based algorithms and, at the same time, held back the development of new single-solution-based algorithms.

Many works have attempted to improve the optimization performance by introducing new ideas that can help to update the positions of potential solutions effectively. The authors in [9] proposed a Grey Wolf Optimizer (GWO) that implements a leadership hierarchy consisting of four types of wolves known as alpha, beta, delta, and omega. Moreover, GWO imitates the hunting behaviour of grey wolves, which is performed in three steps: searching for, encircling, and attacking prey. The proposed hierarchy system of GWO provides diversity that can help to achieve good results. In [25], a novel optimization algorithm called Equilibrium Optimizer (EO) is proposed, inspired by mass balance for a control volume. The strength of EO mainly comes from a term called generation rate that is responsible for exploiting the space and sometimes plays an essential exploration role.

Inspired by Archimedes’ principle, an Archimedes Optimization Algorithm (AOA) is proposed in [39]. The exploration phase of AOA is activated when objects collide with each other while exploitation takes place when no collision happens. The work in [64] developed a new nature-inspired algorithm called Moth-Flame Optimization (MFO) that mimics the movements of moths, which rely on the moon’s light to travel in a direct path. In MFO, moths are potential solutions whereas flames are the best solutions that have been obtained. Another difference between moths and flames is the updating mechanism. To promote exploration and avoid local optima in MFO, each moth is assigned one flame only. Moreover, MFO attempts to balance exploration and exploitation by reducing the number of flames. In [65], a Honey Badger Algorithm (HBA) is developed by formulating the digging behaviour to represent the exploration stage while exploitation is represented by the process of finding honey. HBA proposes a density factor that can help to smoothly switch from exploration to exploitation.

Another nature-inspired optimization algorithm called Ant Lion Optimizer (ALO) is proposed in [66]. In ALO, following the natural searching behaviour of ants, the movements of ants are modelled by a random walk. The roulette wheel operator is implemented for modelling the hunting behaviour of antlions. In addition, elitism is applied by ALO to store the best obtained solutions. The proposed random walk of ALO enhances its exploration abilities whereas elitism promotes exploitation particularly at the final stages of the ALO search process.

In [67], a novel intelligent optimization algorithm called War Strategy Optimization (WSO) is proposed, whose mechanism mimics the strategic movements (defence or attack) of army troops during wars. Utilizing a war strategy, WSO develops a novel updating mechanism to update the position of soldiers. Moreover, a unique updating mechanism is proposed to update the positions of weak soldiers. Another interesting mechanism of WSO is to replace weak or injured soldiers with new ones or to relocate them. The proposed WSO strategies can contribute towards balancing exploration and exploitation and achieving good performance. Inspired by chemical reactions, an Artificial Chemical Reaction Optimization Algorithm (ACROA) is proposed in [68]. In ACROA, atoms are treated as particles since they have positions and velocities. To enhance the global and local search capabilities, ACROA applies five chemical reactions: synthesis, bimolecular, redox2, displacement, and monomolecular reactions. One of the main advantages of ACROA is that it has few parameters. The methodologies in [67, 68] and [5] can be integrated with SCO and other optimization algorithms to improve the optimization performance.

Although state-of-the-art optimization algorithms have shown remarkable improvements in solving diverse problems, they achieve the best performance only on certain problems while their performance on other problems is far from optimal. The following summarizes six main disadvantages of existing metaheuristic algorithms:

- Many metaheuristic algorithms achieve strong exploration performance; however, their exploitation ability is weak. On the other hand, some metaheuristic algorithms can exploit the search space well; nevertheless, they have poor exploration capabilities. This results in an undesired exploration-exploitation imbalance that degrades the overall optimization performance.
- Some intelligent swarm algorithms can easily converge to local optima. These algorithms either have poor strategies that cannot avoid local optima or they do not have strong mechanisms that can help to redirect the search towards promising regions once the algorithm is trapped.
- A significant disadvantage of some algorithms is the requirement of a massive number of function evaluations to achieve acceptable solutions.
- Many algorithms have various sensitive parameters where a slight change in a certain parameter can affect the performance significantly.
- Some optimization algorithms perform well on low-dimensional problems; however, their performance substantially degrades as the number of dimensions increases.
- Although some algorithms can achieve promising results on unconstrained problems, they face difficulties in solving real-world constrained optimization problems.

According to the No Free Lunch (NFL) theorem [69], a meta-heuristic algorithm that performs well on a particular class of problems achieves degraded performance when it solves different sets of problems. In other words, there is no meta-heuristic algorithm that can provide the best solutions for all kinds of problems. Many state-of-the-art meta-heuristic algorithms have shown promising results on a certain set of problems; however, they have demonstrated poor performance when applied to a different set of problems. This motivates researchers to develop novel meta-heuristic methods that achieve a higher level of accuracy when applied to a wide range of optimization problems. Table 1 summarizes some recent optimization algorithms and their inspirations.

3 Proposed algorithm

This work proposes a novel approach that utilizes only a single candidate solution during the whole optimization process to find better solutions, unlike most of the existing searching algorithms that rely on a swarm of particles. In the proposed scheme, the overall optimization process, which consists of T function evaluations or iterations, is divided into two phases where the candidate solution updates its position differently in each phase. Although single-solution-based algorithms and two-phase approaches are two established meta-heuristic optimization methods, they have been implemented separately. The developed approach integrates the single candidate approach with the two-phase strategy to form a single robust algorithm. Most importantly, the proposed algorithm implements a unique set of equations to update the position of the candidate solution, relying only on its own information, i.e., its current position.

The purpose of the two-phase strategy is to provide diversity and balance between exploration and exploitation. The first phase in SCO terminates when \(\alpha \) function evaluations are performed while the second phase consists of \(\beta \) function evaluations where \(\alpha +\beta =T\). In the first phase of SCO, the candidate solution updates its position as follows:

where \(r_1\) is a random variable in the range [0,1].

The mathematical definition of w is given as follows:

where b is a constant, t is the current function evaluation or iteration, and T is the maximum number of function evaluations.
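The formula for w is not reproduced in this text, so the sketch below assumes one form that matches the description: a function of b, t, and T that starts at 1 and decays exponentially, \(w(t) = \exp(-(bt/T)^b)\). Both the functional form and the helper name `w_schedule` are assumptions; the defaults b = 2.4 and T = 3000 follow the settings reported in the experiments.

```python
import math


def w_schedule(t, T=3000, b=2.4):
    """Assumed decay schedule w(t) = exp(-(b * t / T) ** b).

    The exact formula is not given in the text; this form is an assumption
    that starts at 1 and shrinks toward 0 as t approaches T.
    """
    return math.exp(-((b * t / T) ** b))


if __name__ == "__main__":
    # Print w at a few points of the evaluation budget.
    for t in (0, 500, 1000, 2000, 3000):
        print(t, w_schedule(t))
```

Under this assumed form, a larger b keeps w close to 1 for longer early in the run and then drops it more sharply, which is one way to read the role b plays in the sensitivity analysis.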

The second phase of SCO performs a deep search that starts by extensively exploring the space around the best position obtained in the first phase. The latter part of phase two reduces the space to be searched which helps to focus on promising regions only. The following shows how the candidate solution updates its position in the second phase:

where \(r_2\) is another random variable in the range [0,1], \(ub_j\) and \(lb_j\) are the upper and lower bounds, respectively, and w is the most important parameter in SCO, which is responsible for balancing exploration and exploitation. From (3), w decreases exponentially as the number of function evaluations increases. This behaviour is crucial, as a relatively high value of w at the beginning of the search process helps to explore the search space effectively while a small value of w strengthens the exploitation abilities at the latter stages of the optimization process.

One of the main limitations of meta-heuristic algorithms is becoming trapped in local optima, particularly at the latter phases of the searching process. In other words, continuous updates of the positions of candidate solutions do not yield fitness improvement. SCO tackles this issue by updating the position of the candidate solution differently in the second phase if no fitness improvement is achieved in m consecutive function evaluations. A counter c counts the consecutive function evaluations that fail to improve the fitness. A binary parameter p indicates whether the updated candidate achieves a fitness improvement, where \(p=1\) indicates successful fitness improvement while \(p=0\) denotes fitness improvement failure. In the second phase of SCO, a candidate solution updates its position based on (4); however, if performing m consecutive function evaluations does not improve the fitness value, the candidate solution updates its position as follows:

where \(r_3\) is a random number in the range [0,1]. The position update in (5) allows the candidate solution to shift from exploitation to exploration, which helps it to escape from local optima.

Updating the positions of some variables can sometimes cause their values to go out of range. To keep variables within the boundaries, the updated positions are set as follows when their values exceed the upper bounds or fall below the lower bounds:

In (6), the updated dimension of a candidate solution is assigned the same value as the global best value if the updated position goes out of boundaries.
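The repair rule just described can be sketched in a few lines. The function name `repair_bounds` and the list-based representation are illustrative assumptions; the rule itself (copy the gbest coordinate whenever a coordinate leaves its range) follows the text.

```python
def repair_bounds(x, gbest, lb, ub):
    """Replace each out-of-range coordinate of x with the gbest coordinate.

    x, gbest, lb, and ub are equal-length lists; in-range values are kept.
    """
    return [
        gj if (xj > uj or xj < lj) else xj
        for xj, gj, lj, uj in zip(x, gbest, lb, ub)
    ]
```

Because gbest always lies inside the bounds, the repaired candidate is feasible with respect to the box constraints, unlike clipping, which would pile repaired values onto the boundary.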

In SCO, a single candidate solution x is randomly generated and then it is iteratively updated in order to search for a better solution. The steps of the proposed algorithm are explained as follows. The process starts by randomly generating a candidate solution in the search space, evaluating its fitness, recording this candidate as gbest (global best position) and its fitness f(gbest) as the global best fitness. The initial candidate solution is generated as follows:

where \(lb_j\) and \(ub_j\) are the lower and upper boundaries of the search space, \(r_4\) is a random number in the range of [0,1].
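Given these definitions, uniform sampling inside the bounds takes the following standard form. It is reconstructed from the symbols above rather than quoted, and it assumes that \(r_4\) is drawn independently for each dimension j.

```latex
x_j = lb_j + r_4 \, (ub_j - lb_j), \qquad j = 1, \ldots, D
```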

The iterative process, which terminates when it reaches T function evaluations, starts by updating the position of the candidate solution. The candidate solution x updates its position in phase one and phase two based on (2) and (4), respectively. After updating the candidate position, the fitness of the newly generated candidate solution f(x) is evaluated and compared with f(gbest). If f(x) is better than the gbest fitness f(gbest), gbest and f(gbest) are replaced by x and f(x), respectively. The iterative process continues until the maximum number of function evaluations T is reached. The pseudo-code of the proposed algorithm is presented in Algorithm 1.
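The steps above can be sketched in Python as follows. This is a minimal illustration, not the authors' released MATLAB code: the update formulas referenced as (2), (4), and (5) and the schedule in (3) are not reproduced in this text, so the forms used here are assumptions chosen to match the prose. They are a ±w|gbest_j| move in phase one, a ±r·w·(ub_j − lb_j) move in phase two, the same move without w after m stalled evaluations, and w = exp(−(bt/T)^b). The function name `sco`, the default m = 5, and the minimization convention are also assumptions; α = 1000, b = 2.4, and T = 3000 follow the values reported in the experiments.

```python
import math
import random


def sco(f, lb, ub, T=3000, alpha=1000, b=2.4, m=5, seed=None):
    """Single-candidate search sketch (minimization) under assumed update rules."""
    rng = random.Random(seed)
    D = len(lb)
    # Random initial candidate inside the box; it is also the first gbest.
    gbest = [lb[j] + rng.random() * (ub[j] - lb[j]) for j in range(D)]
    f_best = f(gbest)  # counted as function evaluation t = 1
    c = 0  # consecutive phase-two evaluations without improvement
    for t in range(2, T + 1):
        w = math.exp(-((b * t / T) ** b))  # assumed form of the schedule
        x = []
        for j in range(D):
            r = rng.random()
            sign = 1.0 if r < 0.5 else -1.0
            if t <= alpha:
                step = w * abs(gbest[j])            # phase one (assumed form)
            elif c < m:
                step = r * w * (ub[j] - lb[j])      # phase two (assumed form)
            else:
                step = r * (ub[j] - lb[j])          # stagnation move (assumed form)
            xj = gbest[j] + sign * step
            if xj < lb[j] or xj > ub[j]:
                xj = gbest[j]                       # boundary rule from the text
            x.append(xj)
        fx = f(x)
        if fx < f_best:
            gbest, f_best, c = x, fx, 0             # improvement: p = 1
        elif t > alpha:
            c += 1                                  # failure in phase two: p = 0
    return gbest, f_best


if __name__ == "__main__":
    sphere = lambda x: sum(v * v for v in x)
    print(sco(sphere, [-100.0] * 5, [100.0] * 5, seed=0))
```

The counter is reset only on improvement, so in this sketch the wide stagnation move keeps being used until a better point is found; whether the original resets the counter differently is not stated in the text.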

3.1 Complexity analysis

The computational complexity of population-based algorithms depends on four parameters: the number of candidate solutions and dimensions denoted as N and D, respectively, the cost of evaluating the objective function C, and the maximum number of function evaluations T. In swarm algorithms, the maximum number of function evaluations is given as \(T=Nt\) where t is the maximum number of iterations. Generally, the minimal computational complexity of swarm algorithms including PSO, GWO and EO is composed of two main elements: initialization and main loop. Initialization involves generating random candidate solutions and evaluating their fitness. The time complexity of generating candidate solutions is given as O(ND) while O(NC) represents the complexity of evaluating their fitness. Thus, the overall initialization complexity is given as \(O(ND+NC)\).

The main loop is mainly composed of function evaluations and position updates. The computational complexity of evaluating the fitness for all candidate solutions in the main loop for all iterations is O(tNC) which is equivalent to O(TC) whereas the complexity of updating positions is O(TD). The minimal computational complexity of population-based algorithms can be written as follows:

Besides O(initialization) and O(main loop), other operations such as memory savings as in PSO and EO are needed which contribute to increasing the complexity level.

In SCO, the initialization complexity is \(O(D+C)\), which is lower than the initialization complexity of other algorithms (\(O(ND+NC)\)) as SCO has only one candidate solution. The main loop complexity of SCO is \(O(TC+TD)\), which includes function evaluations and position updates with complexities of O(TC) and O(TD), respectively. Thus, the overall computational complexity of SCO is given as follows:

From (8) and (9), it is clear that the computational complexity of SCO is even lower than the minimal complexity of population-based algorithms.
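Written out from the component costs stated above, the two totals referred to as (8) and (9) would read as follows. This is a reconstruction from the surrounding text, with the labels on the left-hand side chosen for illustration; the two expressions differ only in the initialization terms.

```latex
\begin{aligned}
O(\text{population-based}) &= O(ND + NC) + O(TC + TD) = O(ND + NC + TC + TD) \\
O(\text{SCO}) &= O(D + C) + O(TC + TD) = O(D + C + TC + TD)
\end{aligned}
```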

4 Results and discussion

To validate the effectiveness of the proposed algorithm, it is first tested on a set of 23 classical benchmarking functions [9, 11, 70,71,72] that are divided into three groups: unimodal, multimodal and fixed-dimension multimodal functions. Unimodal functions are used to test the exploitation ability of optimization algorithms since they have only one global optimum, whereas multimodal functions assess the exploration efficiency as they have multiple local optima. The difference between the multimodal functions (\(f_8-f_{13}\)) and the fixed-dimension multimodal functions (\(f_{14}-f_{23}\)) is that the number of variables of \(f_{14}-f_{23}\) is fixed, unlike \(f_8-f_{13}\). In addition, the fixed-dimension multimodal functions span different search ranges. Tables 2, 3 and 4 list the mathematical representations of unimodal, multimodal and fixed-dimension multimodal functions, respectively.

To further validate the effectiveness of the proposed algorithm, its performance is evaluated on the CEC 2019 test suite. A summary of the CEC 2019 test functions that includes function name, dimension, and search range is provided in Table 5. The performance of the proposed approach is compared with three well-known optimization algorithms, i.e., PSO, GWO and GSA. It is also compared with four recent high-performance approaches: EO, AOA, MA, and SSA. EO, AOA, MA, and SSA have shown outstanding performance when applied to benchmarking functions and real-world engineering problems. Their results showed that they can outperform several optimization algorithms such as Success-History Based Parameter Adaptation Differential Evolution (SHADE) [73], LSHADE-SPACMA, GA, DE, HHO, and L-SHADE.

The results of all compared algorithms are averaged over 30 independent runs. For all the test functions, the algorithms are compared in terms of the average fitness and the standard deviation. All simulation results are generated under equal conditions. MATLAB is used to produce the results for all algorithms on an Intel(R) Core(TM) i5-7200U CPU @ 2.50GHz with 8 GB RAM.

To provide a fair comparison, the maximum number of function evaluations for all algorithms is set to 3000. For all algorithms except SCO, the maximum number of iterations is 100 while the number of candidate solutions is 30, which is equivalent to 3000 function evaluations. Similar to all other algorithms, the maximum number of function evaluations in SCO is 3000; however, the difference is that SCO uses only one candidate solution instead of a swarm of particles. Candidate solutions are known as particles in PSO, search agents in EO, GWO, and SSA, solutions in MA, objects in AOA, and masses in GSA. Table 6 summarizes the parameter settings of all algorithms as recommended by their original papers.

Tables 7, 8, and 9 show the average fitness and standard deviation of the eight compared algorithms for the unimodal, multimodal, and fixed-dimension functions, respectively.

4.1 Exploitation analysis

As mentioned earlier, the purpose of unimodal benchmarking functions is to validate the exploitation ability of an optimization algorithm.

According to the statistical results of unimodal functions (\(f_1-f_7\)) in Table 7, SCO outperforms all other compared algorithms on all functions except \(f_5\). For \(f_5\), SCO is ranked second following EO and its performance is very close to the performance achieved by EO. The superior performance of the proposed approach is also shown in terms of standard deviation, demonstrating that the proposed method is a more stable algorithm. The results in Table 7 show that the SCO algorithm has strong exploitation ability.

4.2 Exploration analysis

The exploration ability of the proposed algorithm is validated by testing it on 16 multimodal functions that include high dimensional (\(f_8-f_{13}\)) and fixed dimension (\(f_{14}-f_{23}\)) functions. Tables 8 and 9 provide the statistical results of all compared approaches for the \(f_8-f_{13}\) functions and for the \(f_{14}-f_{23}\) functions, respectively. The results illustrate that SCO achieves better solution accuracy than other algorithms on functions \(f_8-f_{12}\), \(f_{14}\), \(f_{21}\), and \(f_{23}\) while it achieves the best performance on \(f_{16}-f_{19}\) equally with a few other algorithms such as EO and MA.

As Tables 8 and 9 show, SCO is able to achieve the optimal solutions for \(f_9\), \(f_{11}\), \(f_{16}\), \(f_{18}\) and \(f_{19}\). It is also evident that SCO is the only algorithm that provides the optimal solutions for \(f_9\) and \(f_{11}\). For the rest of the multimodal functions, SCO provides close-to-optimum solutions and its performance is competitive with other algorithms. Table 8 also shows that SCO is the best algorithm for solving \(f_8\), which is considered to be one of the most difficult multimodal functions as it has a high number of local optima.

4.3 Impact of high-dimensionality

Many metaheuristic algorithms achieve degraded performance when they solve high-dimensional problems. Therefore, it is essential to test the high-dimensional performance of new metaheuristic algorithms. This subsection evaluates the high-dimensional performance of SCO on \(f_1-f_{13}\) by increasing the number of dimensions from 30 to 100 and 200. Tables 10 and 11 present the statistical results of SCO and the other comparative algorithms when \(D=100\) and \(D=200\), respectively. Table 10 shows that SCO outperforms all algorithms on all functions except \(f_{13}\) when \(D=100\). It also shows that SCO is the only algorithm that can achieve the optimal solutions for \(f_1\), \(f_9\) and \(f_{11}\). When \(D=200\), Table 11 shows that SCO performs better than all other algorithms on all functions except \(f_5\) and \(f_{13}\), where SCO is ranked second. Tables 10 and 11 show that SCO is not significantly affected by increasing the number of dimensions, unlike other algorithms.

4.4 Sensitivity analysis

The SCO parameters, particularly \(\alpha \) and b, are expected to significantly influence its optimization performance. The impact of \(\alpha \) and b on the SCO performance is investigated in this subsection. Three different cases are studied where the first phase of SCO in case one, case two and case three consists of 500, 1000 and 2000 function evaluations, respectively. In each case, eight different scenarios are considered where the value of b decreases from 3 to 0.9. The statistical results of unimodal and multimodal functions for the first case, second case and third case are presented in Table 12, Table 13, and Table 14, respectively. From these tables, it is evident that better exploitation is achieved in the first case where the value of \(\alpha \) is 500. However, better exploration can be obtained if the value of \(\alpha \) increases from 500 to 1000 or 2000.

According to the results, a good balance between exploration and exploitation is achieved when the value of \(\alpha \) is 1000. Considering the parameter b, Tables 12, 13 and 14 show that the exploration performance of SCO degrades when b decreases from 3 to 0.9 while the exploitation of SCO improves. The best performance is achieved when the value of b is 2.4. Overall, based on the Friedman mean rank, the best performance of SCO is achieved when the value of \(\alpha \) is 1000 and the value of b is 2.4.

4.5 Performance of SCO on the CEC 2019 suite

The performance of SCO on the CEC 2019 test suite is presented in Table 15. The results in Table 15 show that SCO outperforms all algorithms on functions \(F_1\), \(F_2\), \(F_5\), and \(F_{10}\). It is also clear from Table 15 that SCO achieves the optimal solution on \(F_1\) while all other algorithms achieve poor performance when solving the same function. The proposed algorithm achieves competitive results on \(F_3\) and \(F_8\) that allow it to be ranked second. The performance of SCO on the rest of the CEC 2019 functions is close to the best performance achieved by other algorithms, as Table 15 illustrates.

4.6 Statistical significance analysis

The Friedman test, one of the most widely used statistical tests, is used to statistically analyze the performance of SCO. The principle of this test is to rank all compared approaches for each problem individually. For each problem, the best, second best, and third best algorithms are ranked as 1, 2, and 3, and so on. The ranks of each algorithm are then averaged over all problems. In the Friedman test, the best approach is the one that achieves the lowest average rank. The Friedman test results, which provide the average rank of all algorithms over all test functions in the low and high dimensional cases, are shown in Table 16. As Table 16 illustrates, SCO achieves the lowest average rank with a value of 1.4, demonstrating the superiority of the proposed approach. The second best algorithm is EO, followed by AOA, GWO, MA, PSO, GSA and SSA.
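The ranking procedure described above can be sketched in a few lines of Python. This is only an illustration: the function names, the tie-handling rule (tied algorithms share the mean of their ranks) and the toy numbers are assumptions made here and are not taken from the paper's tables.

```python
from typing import Dict, List


def average_ranks(scores: List[float]) -> List[float]:
    """Rank scores in ascending order (1 = best, lower is better).

    Tied scores share the mean of the ranks they would occupy.
    """
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    ranks = [0.0] * len(scores)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the block of scores equal to scores[order[i]].
        while j + 1 < len(order) and scores[order[j + 1]] == scores[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # positions i..j are 0-based
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def friedman_mean_ranks(results: Dict[str, List[float]]) -> Dict[str, float]:
    """Average the per-problem ranks of each algorithm over all problems."""
    names = list(results)
    n_problems = len(results[names[0]])
    totals = {name: 0.0 for name in names}
    for p in range(n_problems):
        ranks = average_ranks([results[name][p] for name in names])
        for name, rank in zip(names, ranks):
            totals[name] += rank
    return {name: totals[name] / n_problems for name in names}


# Made-up mean errors of three hypothetical algorithms on three problems.
toy = {
    "A": [0.0, 1.0, 0.5],
    "B": [0.1, 1.0, 0.7],
    "C": [0.2, 3.0, 0.6],
}
mean_ranks = friedman_mean_ranks(toy)
best_algorithm = min(mean_ranks, key=mean_ranks.get)
```

In this toy case algorithm A obtains the lowest mean rank and would be reported as the best approach; the mean ranks of all algorithms always sum to k(k+1)/2 for k algorithms.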

The Wilcoxon rank-sum test is another prominent statistical test that is widely used to validate the effectiveness of novel metaheuristic algorithms. A pair-wise comparison between SCO and each comparative algorithm is carried out at the 0.05 significance level. Table 17, Table 18, Table 19, and Table 20 show the p-values of the Wilcoxon test for \(f_1-f_{23}\) (D=30 for \(f_1-f_{13}\)), \(f_1-f_{23}\) (D=100), \(f_1-f_{23}\) (D=200), and the ten CEC2019 test functions, respectively. From these tables, it can be seen that SCO is significantly better than the state-of-the-art algorithms on the majority of the investigated problems.
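As an illustration of such a pair-wise comparison, the following sketch computes a two-sided rank-sum p-value with the usual normal approximation, using only the Python standard library. The function name and the sample values are hypothetical, and the variance is not corrected for ties; `scipy.stats.ranksums` offers a library implementation of the same test.

```python
import math
from typing import List, Tuple


def rank_sum_test(x: List[float], y: List[float]) -> Tuple[float, float]:
    """Two-sided Wilcoxon rank-sum test (normal approximation).

    Returns (z, p). A negative z means the values in x tend to be smaller.
    """
    n1, n2 = len(x), len(y)
    pooled = sorted([(v, 0) for v in x] + [(v, 1) for v in y],
                    key=lambda item: item[0])
    ranks = [0.0] * len(pooled)
    i = 0
    while i < len(pooled):
        j = i
        # Tied values share the mean of the ranks they span.
        while j + 1 < len(pooled) and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        for k in range(i, j + 1):
            ranks[k] = (i + j) / 2 + 1
        i = j + 1
    # Rank sum of the first sample and its null mean and standard deviation.
    w = sum(r for r, (_, group) in zip(ranks, pooled) if group == 0)
    mean_w = n1 * (n1 + n2 + 1) / 2
    sd_w = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (w - mean_w) / sd_w
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p


# Made-up final errors of two hypothetical algorithms over 10 runs each.
runs_a = [0.01, 0.02, 0.03, 0.04, 0.05, 0.06, 0.07, 0.08, 0.09, 0.10]
runs_b = [1.1, 1.2, 1.3, 1.4, 1.5, 1.6, 1.7, 1.8, 1.9, 2.0]
z_stat, p_value = rank_sum_test(runs_a, runs_b)
significant = p_value < 0.05
```

Here the two made-up samples do not overlap at all, so the p-value falls well below 0.05 and the difference would be reported as significant in favour of the first sample.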

4.7 Convergence behavior of SCO

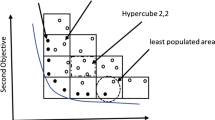

One of the main problems faced by optimization algorithms is convergence to local optima. To tackle this undesired convergence behavior, it is essential to balance exploration and exploitation, which in turn promotes convergence to global optima. The balance between exploration and exploitation in SCO is achieved by the implementation of the two phases. Moreover, in the second phase, the parameter w is used to control the amount of space to be explored or exploited. A relatively high w value allows SCO to perform extensive exploration, while a low w value is needed to exploit promising regions. As a result, w is set to a high value at the beginning of the search process to enable efficient exploration and it is decreased as the number of function evaluations increases in order to achieve successful exploitation.
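The decreasing behaviour of w can be illustrated with a short sketch. The exponential form used below, \(w(t) = \exp (-(b\,t/T)^b)\), is an assumption made for this example, since the defining equation of w lies outside this section; the function name is hypothetical, and the values b = 2.4 and T = 3000 are the settings discussed in the surrounding text.

```python
import math


def w_schedule(t: int, T: int, b: float = 2.4) -> float:
    """Assumed decay of w over function evaluations t = 0..T.

    Starts at 1 (wide steps, exploration) and shrinks towards 0
    (small steps, exploitation). The functional form is an assumption.
    """
    return math.exp(-((b * t / T) ** b))


# Sample the schedule every 500 evaluations of a 3000-evaluation run.
schedule = [w_schedule(t, 3000) for t in range(0, 3001, 500)]
```

Under this assumed form, a larger b makes w collapse earlier in the run, which is consistent with the sensitivity of SCO to b reported in the parameter study.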

To provide a fair and concise comparison, the convergence behaviour of the proposed algorithm is compared with that of the best four existing algorithms, i.e., EO, GWO, AOA, and PSO. The selection of these algorithms is based on their rank as presented in Table 16. The convergence curves of SCO and the best four existing algorithms for some unimodal, multimodal and CEC 2019 functions are shown in Fig. 1. From Fig. 1, it is clear that SCO outperforms all algorithms. The convergence behaviour of SCO on unimodal functions (\(f_1\), \(f_3\), \(f_4\), \(f_6\) and \(f_7\)) shows the ability of SCO to rapidly exploit promising regions. From the same figure, it is evident that SCO requires only a few function evaluations to reach near-optimal solutions, while other algorithms require more function evaluations. For instance, SCO requires only 199 function evaluations to achieve a value of \(10^{-10}\) (a near-optimal value) when solving \(f_1\), while none of the other algorithms reaches this value within 3000 function evaluations except AOA, which requires 1553 function evaluations. This demonstrates the efficient convergence behaviour of SCO when dealing with unimodal functions.
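Figures such as "199 function evaluations to reach \(10^{-10}\)" can be read off a convergence curve as sketched below. The helper name and the curves are made up for illustration; the curve is assumed to store the best fitness found after each function evaluation of a minimization run.

```python
from typing import Optional, Sequence


def evaluations_to_reach(best_so_far: Sequence[float],
                         target: float) -> Optional[int]:
    """Return the 1-based evaluation count at which the best-so-far
    fitness first drops to or below the target, or None if it never does."""
    for evaluation, value in enumerate(best_so_far, start=1):
        if value <= target:
            return evaluation
    return None


# Made-up convergence curves (best fitness after each evaluation).
fast_curve = [1.0, 1e-3, 1e-6, 1e-11, 1e-11]
slow_curve = [1.0, 0.5, 0.25, 0.125, 0.0625]

fast_hit = evaluations_to_reach(fast_curve, 1e-10)
slow_hit = evaluations_to_reach(slow_curve, 1e-10)
```

A result of `None` corresponds to the case reported above in which an algorithm does not reach the target value within its evaluation budget.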

Similarly, SCO has shown its ability to escape from local optima quickly, as illustrated in Fig. 1 (\(f_9\), \(f_{10}\), \(f_{11}\), \(f_{21}\)). Overall, the convergence curves shown in Fig. 1 illustrate the superiority of SCO in terms of convergence speed when it is used to solve different kinds of optimization problems.

5 Engineering problems

The proposed algorithm is tested on four widely used real-world engineering problems in order to further validate its effectiveness. Real-world optimization problems usually have a number of constraints that must be satisfied. The presence of constraints divides particles or candidate solutions into two groups: valid and invalid candidate solutions. A valid candidate solution is one that satisfies all constraints, whereas a candidate solution that violates one or more constraints is invalid. To penalize an invalid candidate solution in minimization problems, its fitness is assigned a large value, for example \(10^9\). For all engineering problems, the parameter settings of all algorithms are the same as those presented in Table 6, except that the maximum number of function evaluations is 15000. The following subsections present the engineering problems and the results obtained by all compared algorithms, while their mathematical formulations are provided in [9, 74].
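The penalty rule described above can be sketched as follows. The function name, the convention that each constraint is written as \(g(x) \le 0\), and the toy problem are assumptions made for illustration; only the idea of assigning a large fitness such as \(10^9\) to invalid solutions comes from the text.

```python
from typing import Callable, Sequence

PENALTY = 1e9  # large fitness assigned to invalid candidate solutions

Vector = Sequence[float]


def penalized_fitness(objective: Callable[[Vector], float],
                      constraints: Sequence[Callable[[Vector], float]],
                      x: Vector) -> float:
    """Fitness for a minimization problem with constraints g(x) <= 0.

    A candidate that violates any constraint is invalid and receives
    the penalty value instead of its objective value.
    """
    if any(g(x) > 0.0 for g in constraints):
        return PENALTY
    return objective(x)


# Toy problem (hypothetical): minimize x0 + x1 subject to x0 >= 1,
# written in the assumed form g(x) = 1 - x0 <= 0.
def toy_objective(x: Vector) -> float:
    return x[0] + x[1]


toy_constraints = [lambda x: 1.0 - x[0]]

valid_fitness = penalized_fitness(toy_objective, toy_constraints, [2.0, 3.0])
invalid_fitness = penalized_fitness(toy_objective, toy_constraints, [0.0, 3.0])
```

Because every invalid candidate receives the same value, this static penalty gives the optimizer no information about how far a candidate is from feasibility; it only guarantees that any valid solution is preferred over any invalid one.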

5.1 Welded beam design (WBD)

Welded beam design is one of the most well-known real-world engineering problems that serves as a benchmark to validate the effectiveness of meta-heuristic algorithms. This problem aims to minimize the fabrication cost when designing a welded beam. The welded beam design problem has four variables and five constraints as shown in Appendix A.

Table 21 shows the best solutions obtained by all compared algorithms, including the best variables and the best fitness. From Table 21, it is clear that SCO attains the best fitness, as do EO, PSO, MA, and AOA. It is also evident from Table 21 that SCO requires fewer function evaluations.

The statistical results of all algorithms for the welded beam design problem are presented in Table 22.

5.2 Speed reducer design problem (SRD)

SRD deals with designing a speed reducer for a small aircraft engine with the objective of minimizing the weight of the speed reducer. As shown in Appendix B [75], the SRD minimization problem has 11 constraints and 7 variables. Table 23 shows the best obtained solutions in terms of best variables, best weight, and the number of function evaluations for the proposed and the state-of-the-art algorithms. Table 23 shows that SCO outperforms the GWO, SSA and GSA algorithms in terms of the best obtained weight, while it achieves the same performance as EO, PSO, MA and AOA. The statistical results of all compared algorithms are presented in Table 24.

5.3 Pressure vessel design problem (PVD)

The main aim of the PVD problem is to minimize the total cost when designing a pressure vessel. As shown in Appendix C, four constraints must be satisfied to solve the PVD problem, while four variables are involved in computing the objective function. The best achieved solutions and the statistical results of all algorithms are presented in Tables 25 and 26, respectively. The results in Table 25 demonstrate the superiority of SCO in terms of achieving the best cost. Moreover, SCO requires fewer function evaluations to achieve a better cost compared with other algorithms.

5.4 Tension/compression spring design problem (TSDP)

TSDP involves designing a tension/compression spring where the main objective is to minimize weight. The TSDP problem contains 3 variables and 4 constraints, as illustrated in Appendix D. Table 27 compares the performance of all algorithms in terms of best achieved variables, best achieved solution, and the number of function evaluations required to reach the value of the best weight. It is clear from Table 27 that SCO achieves the best solution while requiring only 1445 function evaluations, whereas other algorithms require more than 4170 function evaluations (Table 28).

SCO has achieved significant and remarkable optimization improvements because it implements a unique set of equations that can effectively update the position of the candidate solution throughout the entire optimization process. The proposed unique set of updating equations allows SCO to extensively explore the search space in the early stages of the SCO optimization process. In this exploration phase, SCO updates its position based on an equation that allows the candidate solution to visit as many new locations as possible. In other words, SCO broadly yet effectively explores the search space to discover places where the optimal solution might be found.

The integration of SCO with the two-phase strategy has shown its effectiveness in balancing exploration and exploitation. According to the results, when SCO performs only 500 function evaluations during the exploration phase and 2500 function evaluations for exploitation, SCO achieves promising exploitation performance. However, the exploration performance of SCO degrades. This happens because SCO does not spend enough time exploring the space. As a result, SCO skips some regions where the optimal solution might be located. On the other hand, increasing the number of function evaluations for the exploration phase from 500 to 1000 has demonstrated that SCO performs well on both unimodal and multimodal functions. This happens as a result of giving SCO enough time for exploration without affecting the exploitation process, as SCO still spends two-thirds of the optimization process searching around the discovered promising areas. Overall, the best SCO performance is achieved when one-third of the optimization process is dedicated to exploration while the remaining SCO process focuses on exploitation.

SCO not only avoids local optima entrapment, it also implements the escape from local optima strategy to smoothly switch from exploitation into exploration in case SCO stagnates. The integration of SCO and this strategy can help to enhance the performance, particularly for problems that have many local optima, as the results have shown. In addition, the exploration and exploitation abilities of SCO rely heavily on the parameter w. This parameter can help to balance exploration and exploitation if its values during the iterative SCO process are chosen properly. The parameter w should have a relatively high value at the beginning of the SCO process to promote exploration. As the number of function evaluations increases, the value of w should decrease to strengthen the exploitation abilities.

To summarize, the proposed unique set of updating equations, the two-phase strategy, the escape from local optima strategy, and the parameter w are the main contributors that have enabled SCO to achieve promising results.

One of the main strengths of SCO is its strong exploration ability, which is achieved by effectively updating the position of the candidate solution. In addition, SCO starts a deep exploitation search after an extensive exploration search is performed. SCO also has a higher potential to avoid local optima. However, it might still become stuck at a local optimum. SCO can tackle this problem through the escape from local optima strategy, which allows SCO to smoothly switch from the exploitation process into an exploration mission. In terms of computational complexity, SCO has lower complexity compared with swarm algorithms as shown in (9). Moreover, SCO does not require a massive number of function evaluations to achieve good performance. In this work, only 3000 function evaluations are needed to produce optimal and near-optimal solutions.

Although SCO has shown superior performance on most of the investigated unconstrained and constrained problems, it still suffers from two main limitations. Similar to swarm algorithms, SCO replaces the entire global best position once a new candidate solution achieves a better fitness, even if a few dimensions of the new candidate solution obtained at iteration t are worse than the corresponding dimensions of the global best position found at iteration \(t-1\). In some cases, combining the best dimensions (not necessarily all) of the candidate solution at iteration t and the best dimensions of the candidate solution at iteration \(t-1\) might generate a new candidate solution that achieves a better fitness than the best candidate solution found so far. This combination has been investigated in [76] for PSO and its implementation has shown significant improvements. However, the approach in [76] is computationally expensive. Therefore, new methods need to be developed to tackle this issue for SCO and swarm algorithms. Another limitation of SCO is that careful selection of the parameters b and \(\alpha \) is required to achieve the best performance.
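The first limitation can be made concrete with a small sketch. The greedy dimension-by-dimension scheme below is a hypothetical illustration written for this example; it is neither the method of [76] nor part of SCO. It also shows why such combinations are costly: every dimension that is tried requires one extra function evaluation.

```python
from typing import Callable, List, Sequence, Tuple


def combine_dimensionwise(objective: Callable[[Sequence[float]], float],
                          best: Sequence[float],
                          candidate: Sequence[float]
                          ) -> Tuple[List[float], float, int]:
    """Greedily copy single dimensions of `candidate` into `best`,
    keeping a copy only when it lowers the objective (minimization).

    Returns (combined position, its fitness, function evaluations used).
    """
    current = list(best)
    current_fit = objective(current)
    evaluations = 1
    for d in range(len(current)):
        trial = list(current)
        trial[d] = candidate[d]
        trial_fit = objective(trial)
        evaluations += 1  # one extra evaluation per dimension tried
        if trial_fit < current_fit:
            current, current_fit = trial, trial_fit
    return current, current_fit, evaluations


def sphere(x: Sequence[float]) -> float:
    return sum(v * v for v in x)


# Made-up positions: the candidate is better overall (1.5 < 8.0),
# but its first dimension is worse than that of the previous best.
previous_best = [0.0, 2.0, 2.0]
new_candidate = [1.0, 0.5, 0.5]

combined, combined_fit, used = combine_dimensionwise(
    sphere, previous_best, new_candidate)
```

In this toy case, replacing the whole position would yield a fitness of 1.5, whereas the combined position keeps the good first dimension of the previous best and reaches 0.5, at the price of one evaluation per dimension in addition to the initial one.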

6 Conclusions and future research

This paper proposes a novel optimization algorithm called Single Candidate Optimizer (SCO) that implements a unique set of equations to effectively update the position of the candidate solution. To balance exploration and exploitation, the two-phase strategy is applied, where the candidate solution updates its position differently in each phase. SCO also implements an escape from local optima strategy, which permits the candidate solution to shift from an exploitation mode into an exploration mode in the second phase of SCO. The integration of SCO with the two-phase strategy and the escape from local optima strategy allows the candidate solution to explore and exploit the search space well. The effectiveness of SCO is validated by testing it on thirty-three classical benchmarking functions and four real-world engineering problems. The performance of SCO is compared with seven well-known and recent optimization algorithms including PSO, GWO, EO and AOA. Results on unimodal and multimodal functions have demonstrated that SCO can effectively explore the search space in the first phase before switching to the second phase to perform deep exploitation. Moreover, SCO has shown that it can avoid and escape from local optima, particularly when it solves functions with multiple optima. For most of the studied problems, results have shown that SCO can achieve optimal and near-optimal solutions and that its performance is significantly better than that of other algorithms in terms of solution accuracy and convergence speed. According to the results, the best SCO performance is achieved when it spends one-third of the optimization process exploring the search space while the remaining SCO process focuses on exploitation. In addition, it has been demonstrated that the computational complexity of SCO is lower than the complexity of swarm algorithms. Another advantage of SCO is that it does not require a massive number of function evaluations to achieve good performance. This work has shown that single-solution-based algorithms can outperform population-based algorithms if designed well.

Further work is needed to improve the performance of single-solution-based algorithms. The following are some potential research directions that can further improve the performance of SCO:

- SCO can be hybridized with other algorithms such as PSO, GWO and EO.
- A new version of SCO can be developed to solve multi-objective problems.
- A binary version of SCO can be developed to solve binary problems such as the problem of feature selection.
- SCO can be applied to solve a wide range of real-world optimization problems such as lot-sizing optimization [77, 78], data clustering [79], optimizing the hyper-parameters of convolutional neural networks [80], designing supply-chain networks [81], and maintenance scheduling [82].
- SCO can be integrated with chaotic maps and Lévy flight random walk [83].
- SCO can be applied to solve well-known constrained optimization problems such as cantilever beam design and three-bar truss design.

Availability of data and materials

My manuscript has no associated data.

References

Afshar M, Faramarzi A (2010) Size optimization of truss structures by cellular automata. J Comput Sci Eng 3(1):1–9

Faramarzi A, Afshar M (2014) A novel hybrid cellular automata-linear programming approach for the optimal sizing of planar truss structures. Civil Eng Environ Syst 31(3):209–228

Shami TM, Grace D, Burr A, Vardakas JS (2019) Load balancing and control with interference mitigation in 5G heterogeneous networks. EURASIP J Wireless Commun Netw 2019(1):1–12

Feng S, Chen Y, Zhai Q, Huang M, Shu F (2021) Optimizing computation offloading strategy in mobile edge computing based on swarm intelligence algorithms. EURASIP J Adv Signal Process 2021(1):1–15

Pham Q-V, Mirjalili S, Kumar N, Alazab M, Hwang W-J (2020) Whale optimization algorithm with applications to resource allocation in wireless networks. IEEE Trans Vehicular Technol 69(4):4285–4297

Al-Tashi Q, Akhir EAP, Abdulkadir SJ, Mirjalili S, Shami TM, Alhusssian H, Alqushaibi A, Alwadain A, Balogun AO, Al-Zidi N (2021) Classification of reservoir recovery factor for oil and gas reservoirs: a multi-objective feature selection approach. J Marine Sci Eng 9(8):888

Moayedi H, Nguyen H, Kok Foong L (2021) Nonlinear evolutionary swarm intelligence of grasshopper optimization algorithm and gray wolf optimization for weight adjustment of neural network. Eng Comput 37(2):1265–1275

Singh P, Chaudhury S, Panigrahi BK (2021) Hybrid mpso-cnn: multi-level particle swarm optimized hyperparameters of convolutional neural network. Swarm Evol Comput 63:100863

Mirjalili S, Mirjalili SM, Lewis A (2014) Grey wolf optimizer. Adv Eng Softw 69:46–61

Shami TM, El-Saleh AA, Alswaitti M, Al-Tashi Q, Summakieh MA, Mirjalili S (2022) Particle swarm optimization: a comprehensive survey. IEEE Access

Mirjalili S, Lewis A (2016) The whale optimization algorithm. Adv Eng Softw 95:51–67

Kennedy J, Eberhart R (1995) Particle swarm optimization. In: Proceedings of ICNN’95-international conference on neural networks 4:1942–1948. IEEE

Dorigo M, Birattari M, Stutzle T (2006) Ant colony optimization. IEEE Comput Intell Mag 1(4):28–39

Mirjalili S, Gandomi AH, Mirjalili SZ, Saremi S, Faris H, Mirjalili SM (2017) Salp swarm algorithm: a bio-inspired optimizer for engineering design problems. Adv Eng Softw 114:163–191

Gandomi AH, Alavi AH (2012) Krill herd: a new bio-inspired optimization algorithm. Commun Nonlinear Sci Numer Simul 17(12):4831–4845

Arora S, Singh S (2019) Butterfly optimization algorithm: a novel approach for global optimization. Soft Comput 23(3):715–734

Dhiman G, Kumar V (2019) Seagull optimization algorithm: theory and its applications for large-scale industrial engineering problems. Knowl Based Syst 165:169–196

Yang XS, Deb S (2009) Cuckoo search via lévy flights. In: 2009 World congress on nature and biologically inspired computing (NaBIC), pp 210–214. IEEE

Holland J (1975) Adaptation in natural and artificial systems. The University of Michigan Press, Ann Arbor

Fogel DB (1998) Artificial intelligence through simulated evolution. Wiley-IEEE Press, London

Storn R, Price K (1997) Differential evolution-a simple and efficient heuristic for global optimization over continuous spaces. J Glob Opt 11(4):341–359

Hansen N, Müller SD, Koumoutsakos P (2003) Reducing the time complexity of the derandomized evolution strategy with covariance matrix adaptation (CMA-ES). Evol Comput 11(1):1–18

Kirkpatrick S, Gelatt Jr CD, Vecchi MP (1987) Optimization by simulated annealing. In: Readings in computer vision, pp 606–615. Elsevier

Rashedi E, Nezamabadi-Pour H, Saryazdi S (2009) GSA: a gravitational search algorithm. Inf Sci 179(13):2232–2248

Faramarzi A, Heidarinejad M, Stephens B, Mirjalili S (2020) Equilibrium optimizer: a novel optimization algorithm. Knowl Based Syst 191:105190

Hashim FA, Houssein EH, Mabrouk MS, Al-Atabany W, Mirjalili S (2019) Henry gas solubility optimization: a novel physics-based algorithm. Future Generation Comput Syst 101:646–667

Heidari AA, Mirjalili S, Faris H, Aljarah I, Mafarja M, Chen H (2019) Harris hawks optimization: algorithm and applications. Future Generation Comput Syst 97:849–872

Zhao W, Wang L, Zhang Z (2019) Atom search optimization and its application to solve a hydrogeologic parameter estimation problem. Knowl Based Syst 163:283–304

Yapici H, Cetinkaya N (2019) A new meta-heuristic optimizer: pathfinder algorithm. Appl Soft Comput 78:545–568

Shadravan S, Naji H, Bardsiri VK (2019) The sailfish optimizer: a novel nature-inspired metaheuristic algorithm for solving constrained engineering optimization problems. Eng Appl Artif Intell 80:20–34

Faramarzi A, Heidarinejad M, Mirjalili S, Gandomi AH (2020) Marine predators algorithm: a nature-inspired metaheuristic. Exp Syst Appl 152:113377

Askari Q, Saeed M, Younas I (2020) Heap-based optimizer inspired by corporate rank hierarchy for global optimization. Exp Syst Appl 161:113702

Ahmadianfar I, Bozorg-Haddad O, Chu X (2020) Gradient-based optimizer: a new metaheuristic optimization algorithm. Inf Sci 540:131–159

Zervoudakis K, Tsafarakis S (2020) A mayfly optimization algorithm. Comput Ind Eng 145:106559

Ghasemi-Marzbali A (2020) A novel nature-inspired meta-heuristic algorithm for optimization: bear smell search algorithm. Soft Comput 24(17):13003–13035

Askari Q, Younas I, Saeed M (2020) Political optimizer: a novel socio-inspired meta-heuristic for global optimization. Knowl Based Syst 195:105709

Zhang Y, Jin Z (2020) Group teaching optimization algorithm: a novel metaheuristic method for solving global optimization problems. Exp Syst Appl 148:113246

Abualigah L, Diabat A, Mirjalili S, Abd Elaziz M, Gandomi AH (2021) The arithmetic optimization algorithm. Comput Methods Appl Mech Eng 376:113609

Hashim FA, Hussain K, Houssein EH, Mabrouk MS, Al-Atabany W (2021) Archimedes optimization algorithm: a new metaheuristic algorithm for solving optimization problems. Appl Intell 51(3):1531–1551

Abualigah L, Yousri D, Abd Elaziz M, Ewees AA, Al-qaness MA, Gandomi AH (2021) Aquila optimizer: a novel meta-heuristic optimization algorithm. Comput Ind Eng 157:107250

Połap D, Woźniak M (2021) Red fox optimization algorithm. Exp Syst Appl 166:114107

MiarNaeimi F, Azizyan G, Rashki M (2021) Horse herd optimization algorithm: a nature-inspired algorithm for high-dimensional optimization problems. Knowl Based Syst 213:106711

Jia H, Peng X, Lang C (2021) Remora optimization algorithm. Exp Syst Appl 185:115665

Agushaka JO, Ezugwu AE, Abualigah L (2022) Dwarf mongoose optimization algorithm. Comput Methods Appl Mech Eng 391:114570

Hashim FA, Hussien AG (2022) Snake optimizer: a novel meta-heuristic optimization algorithm. Knowl Based Syst 242:108320

Oyelade ON, Ezugwu AE-S, Mohamed TI, Abualigah L (2022) Ebola optimization search algorithm: a new nature-inspired metaheuristic optimization algorithm. IEEE Access 10:16150–16177

Abualigah L, Abd Elaziz M, Sumari P, Geem ZW, Gandomi AH (2022) Reptile search algorithm (rsa): a nature-inspired meta-heuristic optimizer. Exp Syst Appl 191:116158

Mirjalili S (2016) SCA: a sine cosine algorithm for solving optimization problems. Knowl Based Syst 96:120–133

Eskandar H, Sadollah A, Bahreininejad A, Hamdi M (2012) Water cycle algorithm—a novel metaheuristic optimization method for solving constrained engineering optimization problems. Comput Struct 110:151–166

Hatamlou A (2013) Black hole: a new heuristic optimization approach for data clustering. Inf Sci 222:175–184

Kaveh A, Dadras A (2017) A novel meta-heuristic optimization algorithm: thermal exchange optimization. Adv Eng Softw 110:69–84

Borji A (2007) A new global optimization algorithm inspired by parliamentary political competitions. In: Mexican international conference on artificial intelligence, pp 61–71. Springer

Rao RV, Savsani VJ, Vakharia D (2011) Teaching-learning-based optimization: a novel method for constrained mechanical design optimization problems. Comput Aided Des 43(3):303–315

Lv W, He C, Li D, Cheng S, Luo S, Zhang X (2010) Election campaign optimization algorithm. Proc Comput Sci 1(1):1377–1386

El-Abd M (2017) Global-best brain storm optimization algorithm. Swarm Evol Comput 37:27–44

Ghorbani N, Babaei E (2014) Exchange market algorithm. Appl Soft Comput 19:177–187

Bodaghi M, Samieefar K (2019) Meta-heuristic bus transportation algorithm. Iran J Comput Sci 2(1):23–32

Das B, Mukherjee V, Das D (2020) Student psychology based optimization algorithm: a new population based optimization algorithm for solving optimization problems. Adv Eng Softw 146:102804

Akyol S, Alatas B (2017) Plant intelligence based metaheuristic optimization algorithms. Artif Intell Rev 47(4):417–462

Alatas B, Bingol H (2020) Comparative assessment of light-based intelligent search and optimization algorithms. Light Eng 28(6)

Alatas B, Bingol H (2019) A physics based novel approach for travelling tournament problem: optics inspired optimization. Inf Technol Control 48(3):373–388

Bingol H, Alatas B (2020) Chaos based optics inspired optimization algorithms as global solution search approach. Chaos Solitons Fractals 141:110434

Glover F, Laguna M (1998) Tabu search. In: Handbook of combinatorial optimization, pp 2093–2229. Springer

Mirjalili S (2015) Moth-flame optimization algorithm: a novel nature-inspired heuristic paradigm. Knowl Based Syst 89:228–249

Hashim FA, Houssein EH, Hussain K, Mabrouk MS, Al-Atabany W (2022) Honey badger algorithm: New metaheuristic algorithm for solving optimization problems. Math Comput Simul 192:84–110

Mirjalili S (2015) The ant lion optimizer. Adv Eng Softw 83:80–98

Ayyarao TS, RamaKrishna N, Elavarasan RM, Polumahanthi N, Rambabu M, Saini G, Khan B, Alatas B (2022) War strategy optimization algorithm: a new effective metaheuristic algorithm for global optimization. IEEE Access 10:25073–25105

Alatas B (2011) Acroa: artificial chemical reaction optimization algorithm for global optimization. Exp Syst Appl 38(10):13170–13180

Wolpert DH, Macready WG (1997) No free lunch theorems for optimization. IEEE Trans Evolut Comput 1(1):67–82

Yao X, Liu Y, Lin G (1999) Evolutionary programming made faster. IEEE Trans Evol Comput 3(2):82–102

Digalakis JG, Margaritis KG (2001) On benchmarking functions for genetic algorithms. Int J Comput Math 77(4):481–506

Mirjalili S, Lewis A (2013) S-shaped versus V-shaped transfer functions for binary particle swarm optimization. Swarm Evol Comput 9:1–14

Tanabe R, Fukunaga A (2013) Success-history based parameter adaptation for differential evolution. In: 2013 IEEE congress on evolutionary computation, pp 71–78. IEEE

He X, Zhou Y (2018) Enhancing the performance of differential evolution with covariance matrix self-adaptation. Appl Soft Comput 64:227–243

Pant M, Thangaraj R, Singh V (2009) Optimization of mechanical design problems using improved differential evolution algorithm. Int J Recent Trends Eng 1(5):21

Van den Bergh F, Engelbrecht AP (2004) A cooperative approach to particle swarm optimization. IEEE Trans Evol Comput 8(3):225–239

Gharaei A, Hoseini Shekarabi SA, Karimi M (2020) Modelling and optimal lot-sizing of the replenishments in constrained, multi-product and bi-objective EPQ models with defective products: Generalised cross decomposition. Int J Syst Sci Oper Logist 7(3):262–274

Gharaei A, Karimi M, Hoseini Shekarabi SA (2020) Joint economic lot-sizing in multi-product multi-level integrated supply chains: Generalized benders decomposition. Int J Syst Sci Oper Logist 7(4):309–325

Alswaitti M, Albughdadi M, Isa NAM (2018) Density-based particle swarm optimization algorithm for data clustering. Exp Syst Appl 91:170–186

Wang Y, Zhang H, Zhang G (2019) cPSO-CNN: An efficient pso-based algorithm for fine-tuning hyper-parameters of convolutional neural networks. Swarm Evol Comput 49:114–123

Rabbani M, Foroozesh N, Mousavi SM, Farrokhi-Asl H (2019) Sustainable supplier selection by a new decision model based on interval-valued Fuzzy sets and possibilistic statistical reference point systems under uncertainty. Int J Syst Sci Oper Logist 6(2):162–178

Duan C, Deng C, Gharaei A, Wu J, Wang B (2018) Selective maintenance scheduling under stochastic maintenance quality with multiple maintenance actions. Int J Prod Res 56(23):7160–7178

Houssein EH, Saad MR, Hashim FA, Shaban H, Hassaballah M (2020) Lévy flight distribution: a new metaheuristic algorithm for solving engineering optimization problems. Eng Appl Artif Intell 94:103731

Funding

Not applicable.

Ethics declarations

Conflict of interest

The authors declare that they have no financial or personal relationships related to this work.

Appendices

Appendix A: Welded beam design problem

Appendix B: Speed reducer design problem

Appendix C: Pressure vessel design problem

Appendix D: Tension/compression spring design problem

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Shami, T.M., Grace, D., Burr, A. et al. Single candidate optimizer: a novel optimization algorithm. Evol. Intel. 17, 863–887 (2024). https://doi.org/10.1007/s12065-022-00762-7

DOI: https://doi.org/10.1007/s12065-022-00762-7