Abstract

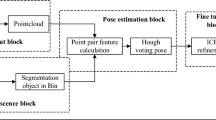

Bin picking has been widely studied because it can save labour and increase productivity. However, most research still uses a “look and then close eye” approach to pick up objects in boxes: the position and orientation of the object are estimated once by 3D image processing and then transmitted directly to the robot, which performs the picking task without further visual feedback. The accuracy of this method is greatly affected by noise and by camera calibration. This study presents a different method of picking objects in a box with higher accuracy that is also less affected by camera calibration. First, using a low-cost camera and 3D image processing, the object to be picked up and its rough position are determined. Next, an image moment based visual servoing method is applied to refine the result of the 3D image processing. This image based visual servoing method limits the effect of the noise generated by the 3D image processing as well as the error of the camera calibration process. The efficiency of the system is demonstrated through a series of simulations using real captured 3D point clouds and a 6-DOF manipulator.
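The fine-tuning stage described above can be illustrated with a minimal sketch, which is not the authors' implementation. It assumes a binary (segmented) image of the object, computes four common moment features (centroid, area, and principal-axis orientation), and applies the standard image based visual servoing law v = -λ L⁺ (s - s*). The function names `raw_moment`, `moment_features`, and `ibvs_step` are hypothetical, and the interaction matrix `L` is supplied by the caller rather than taken from the paper.

```python
import numpy as np


def raw_moment(img, i, j):
    """Raw image moment m_ij = sum over pixels of x^i * y^j * I(x, y)."""
    ys, xs = np.mgrid[:img.shape[0], :img.shape[1]]
    return float(np.sum((xs ** i) * (ys ** j) * img))


def moment_features(img):
    """Return the feature vector s = (xg, yg, area, alpha) of a binary image.

    xg, yg : centroid in pixel coordinates
    area   : zeroth-order moment m00
    alpha  : orientation of the principal axis, in radians
    """
    m00 = raw_moment(img, 0, 0)
    xg = raw_moment(img, 1, 0) / m00
    yg = raw_moment(img, 0, 1) / m00

    # Second-order central moments, taken about the centroid.
    ys, xs = np.mgrid[:img.shape[0], :img.shape[1]]
    mu20 = float(np.sum((xs - xg) ** 2 * img))
    mu02 = float(np.sum((ys - yg) ** 2 * img))
    mu11 = float(np.sum((xs - xg) * (ys - yg) * img))

    alpha = 0.5 * np.arctan2(2.0 * mu11, mu20 - mu02)
    return np.array([xg, yg, m00, alpha])


def ibvs_step(s, s_star, L, gain=0.5):
    """One proportional IBVS step: v = -gain * pinv(L) @ (s - s_star).

    L is the interaction matrix relating feature rates to camera velocity.
    It is an input here (assumption): its entries depend on the chosen
    features, the camera model, and the estimated depth.
    """
    error = np.asarray(s, dtype=float) - np.asarray(s_star, dtype=float)
    return -gain * np.linalg.pinv(L) @ error
```

In a servo loop, `moment_features` would be evaluated on each new camera frame and `ibvs_step` would return the camera velocity command for that frame. The loop stops once the feature error falls below a chosen tolerance. Because the error is measured directly in the image, a rough initial pose from 3D matching is enough to start the loop.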

Code or data availability

Not applicable.

Acknowledgements

We acknowledge the support of time and facilities from Ho Chi Minh City University of Technology (HCMUT), VNU-HCM for this study.

Funding

Not applicable.

Author information

Contributions

L.D.H.: Writing and review; N.V.L.: Coding 3D matching; L.N.B.: Coding visual servoing.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical approval

Not applicable.

Consent to participate

The authors consent to participate.

Consent for publication

The authors consent to publication.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Parameters of the interaction matrix

About this article

Cite this article

Duc Hanh, L., Luat, N.V. & Bich, L.N. Combining 3D matching and image moment based visual servoing for bin picking application. Int J Interact Des Manuf 16, 1695–1703 (2022). https://doi.org/10.1007/s12008-022-00870-9

DOI: https://doi.org/10.1007/s12008-022-00870-9