Abstract

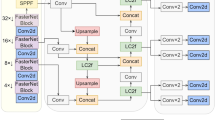

Computer vision-based underwater target detection is an important research area in underwater robotics and ocean information processing. The underwater imaging environment degrades images with low contrast, color distortion, blurred texture features, and noise interference, all of which make target detection harder. To address these challenges, this paper proposes a multi-color space residual you only look once (MCR-YOLO) model for underwater target detection. First, the RGB image is converted into the YCbCr color space, and the luminance channel Y is fed to an improved ResNet50 to extract non-color features from color-biased images. The output features at three scales are then combined between adjacent scales to exchange information. In parallel, a low-frequency feature extraction branch takes the three-channel RGB image and produces image features integrated with low-frequency information, and the features from the two branches are fused at each of the three corresponding scales. Finally, multi-scale fusion and target detection are performed using the path aggregation network (PANet) framework. Experiments on relevant datasets show that the method improves feature extraction for critical targets in underwater environments and achieves good detection accuracy.
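The first step of the pipeline, converting RGB to YCbCr and keeping only the Y channel, can be illustrated with a minimal sketch. The abstract does not say which YCbCr variant the authors use, so the full-range ITU-R BT.601 (JFIF) coefficients below are an assumption, and the function names `rgb_to_ycbcr` and `luminance_channel` are hypothetical helpers rather than part of the MCR-YOLO code.

```python
import numpy as np


def rgb_to_ycbcr(rgb: np.ndarray) -> np.ndarray:
    """Convert an H x W x 3 uint8 RGB image to 8-bit YCbCr.

    Assumption: full-range BT.601 (JFIF) coefficients; the paper's
    exact conversion is not given in the abstract.
    """
    rgb = rgb.astype(np.float64)
    # Rows hold the weights for Y, Cb and Cr respectively.
    weights = np.array([
        [0.299, 0.587, 0.114],
        [-0.168736, -0.331264, 0.5],
        [0.5, -0.418688, -0.081312],
    ])
    # Cb and Cr are centered on 128 so they fit in unsigned 8 bits.
    offset = np.array([0.0, 128.0, 128.0])
    ycbcr = rgb @ weights.T + offset
    return np.clip(np.rint(ycbcr), 0, 255).astype(np.uint8)


def luminance_channel(rgb: np.ndarray) -> np.ndarray:
    """Return only the Y (luminance) plane as an H x W array."""
    return rgb_to_ycbcr(rgb)[..., 0]


if __name__ == "__main__":
    # A tiny synthetic image with a blue-green cast, typical of underwater scenes.
    image = np.zeros((2, 2, 3), dtype=np.uint8)
    image[..., 1] = 120  # green
    image[..., 2] = 160  # blue
    y = luminance_channel(image)
    print(y.shape, y.dtype)
```

Because Y is a weighted sum of the three color channels, it carries brightness structure without the chroma components Cb and Cr, which is consistent with the abstract's use of Y to obtain non-color features from color-biased images.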




Ethics declarations

Conflicts of interest

The authors declare no conflict of interest.


About this article

Cite this article

Liu, P., Xing, W. & Ma, Y. MCR-YOLO model for underwater target detection based on multi-color spatial features. Optoelectron. Lett. 20, 313–320 (2024). https://doi.org/10.1007/s11801-024-3248-5


DOI: https://doi.org/10.1007/s11801-024-3248-5