Abstract

Classification trees are one of the most common models in interpretable machine learning. Although such models are usually built with greedy strategies, in recent years, thanks to remarkable advances in mixed-integer programming (MIP) solvers, several exact formulations of the learning problem have been developed. In this paper, we argue that some of the most relevant ones among these training models can be encapsulated within a general framework, whose instances are shaped by the specification of loss functions and regularizers. Next, we introduce a novel realization of this framework: specifically, we consider the logistic loss, handled in the MIP setting by a piece-wise linear approximation, and couple it with \(\ell _1\)-regularization terms. The resulting optimal logistic classification tree model numerically proves to be able to induce trees with enhanced interpretability properties and competitive generalization capabilities, compared to the state-of-the-art MIP-based approaches.


1 Introduction

Classification trees (CTs) are a popular machine learning model for classification problems (Kotsiantis 2013; Song and Ying 2015). Introduced in a seminal work by Breiman et al. (1984), CTs have widely been employed for decades, especially for tasks with small-sized, tabular data.

Formally, a CT is a connected acyclic graph, the nodes of which are linked in a hierarchical manner. Each internal node (branch) acts by splitting data in the feature space, based on some predefined condition. Finally, nodes that have no children (i.e., there is no outbound arc connecting them to lower nodes in the hierarchy) are called leaves and are associated with a prediction label for samples that are forwarded to them by the above branches.

Most algorithms designed to construct classification trees define univariate (axis-aligned) splits (Breiman et al. 1984; Quinlan 1986; De Mántaras 1991). With univariate splits, a single feature is chosen at each node and the corresponding value of each data point is compared to a given threshold; data points are, thus, forwarded to one of the children nodes, based on the result of this comparison. Each prediction of the model is, therefore, finally obtained following a path along the tree, based on the sequence of “decisions” at each encountered node.

To improve the expressive power of CTs, however, more complex splitting rules have also been considered in the literature. These rules can take into account linear relations between features (in which case we talk about oblique trees) or even nonlinear ones (Friedman 1977; Loh and Vanichsetakul 1988; John 1995).

Yet, the intrinsic interpretability of CTs is a key factor that makes them particularly popular in domains, such as healthcare, finance or justice, where understanding the decision-making process and accounting for the choices made is important (Rudin 2019). For this reason, recent research has dealt with training algorithms to construct CTs having both good predictive performance and intrinsic interpretability properties. In particular, oblique trees are often considered interpretable as long as branching linear classifiers are actually sparse models (Ross et al. 2017; Ribeiro et al. 2016; Jovanovic et al. 2016). Then, the rules that ultimately define the decision process are basically “if–then–else” conditions with very simple clauses; hence, domain experts with possibly no technical background are still typically able to interpret and understand the logic that leads to decisions.

Within this scenario, the contribution of the present paper consists of the encapsulation of the concept of classification loss within each splitting rule in the popular optimal CTs (OCTs) framework (Bertsimas and Dunn 2017). This point of view also generalizes the idea of D’Onofrio et al. (2024), where maximum margin splits were shown to improve the generalization performance of optimal trees. In fact, both OCTs and margin-optimal trees can be seen as particular instances of the generalized loss-optimal classification tree framework discussed in this manuscript.

Following further this path, we propose to choose the log loss to construct optimal logistic classification trees (OLCTs). This way, the nice properties of logistic regression models (e.g., generalization, calibration, interpretability) can be combined with the easily readable structure of CTs. We show that the logistic loss can be effectively encapsulated within the standard mixed-integer linear programming (MILP) models for OCTs, using the piece-wise linear approximation defined in Sato et al. (2016), and thus handled by the usual off-the-shelf MILP solvers.

In addition, we point out a simple patch for the soft feature selection strategy employed in D’Onofrio et al. (2024) for maximum margin CT models: the sparsity requirement can be more easily handled by exploiting \(\ell _1\)-regularization. Clearly, this idea can then be extended to the case of general loss-optimal models and thus to the OLCTs framework, which finally makes it possible to obtain sparse, yet effective classification models with the additional interpretability properties of logistic regression.

The rest of the manuscript is organized as follows: in Sect. 2 we report a detailed review of the literature concerning classification trees induction. Then, in Sect. 3, we derive the general MIP framework for optimal classification trees, based on the concepts of loss and regularizer. We consequently introduce in Sect. 4 a novel specification of the general framework, leading to the definition of the optimal logistic classification tree model. In Sect. 5, we then report the results of computational experiments aimed at assessing the actual potential of the proposed approach. Finally, we give some concluding remarks in Sect. 6.

2 Related literature

The construction of an optimal decision tree is an \({\mathcal {N}}{\mathcal {P}}\)-complete problem (Laurent and Rivest 1976) and, for this reason, traditional algorithms apply top-down greedy strategies (Rokach and Maimon 2005), employing quality measures to define the best parameters for each branch node (Breiman et al. 1984; Quinlan 1986; De Mántaras 1991).

Although easily applicable even to large datasets, greedy approaches unfortunately often generate sub-optimal structures with poor generalization capabilities (Murthy and Salzberg 1995; Norouzi et al. 2015). This is mainly due to the fact that decision rules at the deepest nodes are often defined taking into account very small portions of the dataset. Once the tree has been grown, these methods usually apply a post-pruning phase to reduce the complexity of the structure and alleviate the overfitting problem (Breiman et al. 1984; Quinlan 1987; Bohanec and Bratko 1994).

To overcome these performance drawbacks, several methods have been proposed in the literature. One of the most common approaches consists of resorting to ensembles to reduce the model variance. Particularly relevant techniques of this kind are random forest (Breiman 2001), gradient boosting machines (Friedman 2001), and XGBoost (Chen et al. 2016) which combine, in different ways, multiple trees to increase the overall performance.

Other works are focused on oblique classification trees, i.e., on the usage of hyperplanes to recursively divide the feature space. In this case, the tree model exploits a multivariate linear function at each branch node so that multiple features are involved in the decision process (Murthy et al. 1994; Brodley and Utgoff 1995; Orsenigo and Vercellis 2003). Although both ensembles and oblique trees are more expressive than standard univariate classification trees, both classes of approaches suffer from an evident loss of interpretability.

A conceptually different path to improve the performance concerns the improvement of the fitting procedure, rather than the development of more expressive models. In particular, exact formulations of the learning problem exploiting linear programming have been considered. Back in the early ’90s, Bennett et al. proposed linear methods for constructing separating hyperplanes (Bennett and Mangasarian 1992, 1994) and for the induction of oblique classification trees by solving an LP problem at each branch node (Bennett 1992).

More recently, thanks to the outstanding improvements in both hardware power and software solver capabilities (Bixby 2012), new formulations of the learning problem have been developed. These formulations are related to both univariate and multivariate splits, and exploit various mathematical optimization techniques (Carrizosa et al. 2021) for an effective modeling of the learning problem. One particularly prominent approach involves the use of mixed-integer linear programming (MILP) formulations. The seminal work in this context is the one by Bertsimas and Dunn (2017), where an exact MILP model is proposed that can be solved up to a certifiably globally optimal CT (OCT) structure (in terms of misclassification error). Inspired by this work, a plethora of improved MILP-based approaches followed; among them, we can notably cite a formulation of the problem for the case of binary classification with categorical features (Günlük et al. 2021), and a flow-based formulation exploiting linear relaxation and Benders’ decomposition to efficiently handle the specific case of binary classification with binary features (Aghaei et al. 2021). A reformulation of the OCT model was also proposed for the case of parallel splits, significantly improving the efficiency of the approach (Verwer and Zhang 2019). Focusing on the interpretability aspect of CTs, some works take advantage of regularization terms to limit the number of branch nodes (Bertsimas and Dunn 2017) and the total number of leaves in the model (Hu et al. 2019; Lin et al. 2020).

The above formulations can in principle generate certified globally optimal structures. In practice, however, there is a combinatorial explosion in the number of binary variables as the depth of the tree or the size of the dataset increases. Thus, the optimality gap can be closed by branch-and-bound type procedures only for very shallow trees and on tasks with a few hundred examples at most.

For this reason, local optimization strategies have also been investigated. Starting from a given tree, trained by a greedy approach, these methods refine its structure by iteratively minimizing the misclassification loss associated with each node in the tree. Carreira-Perpinán and Tavallali (2018) introduced an alternating minimization strategy, that operates level-wise on the tree, decomposing the learning problem and solving each sub-problem up to global optimality. Similarly, Dunn (2018) proposed local search methods, able to handle real-world datasets and to refine greedy structures grown with CART-like algorithms, at the cost of producing sub-optimal models.

In addition to exploring integer optimization, researchers have also delved into continuous optimization approaches within the context of optimal trees. Blanquero et al. (2021) presented a nonlinear programming model in their work, aiming to develop an optimal "randomized" classification tree with oblique splits. This approach involves making random decisions at each node based on a soft rule, which is induced by a continuous cumulative distribution function.

Expanding on their earlier findings, Blanquero et al. (2020) addressed the issues of global and local sparsity in the randomized optimal tree model. To tackle these challenges, they incorporated regularization terms based on polyhedral norms. By employing this regularization technique, the researchers aimed to promote sparse solutions both globally and locally within the randomized tree framework. The same approach has also been investigated for the regression case by Blanquero et al. (2022).

It is important to note that, in the randomized framework proposed by Blanquero et al., the assignment of a sample to a specific class is not deterministic. Instead, it is determined based on a given probability, allowing for a more flexible and probabilistic classification approach.

A different stream of research aims at improving the performance of classification trees by exploiting the concept of loss in defining splits. Specifically, there are works that deal with oblique splits, greedily computing at each branch node the parameters of the separating hyperplane as a support vector machine (SVM) (Cortes and Vapnik 1995). The formulation from Bennett and Blue (1998) for SVM branches exploits a dual convex quadratic model that can also handle kernel functions to capture nonlinear patterns in data. Tibshirani and Hastie (2007) instead developed a greedy algorithm to handle high-dimensional features and multiclass classification, inducing classification trees with margin properties, i.e., structures where each branch node employs a linear SVM to split the feature space.

With reference again to multivariate trees, approaches that exploit logistic models at nodes have also been studied. Landwehr et al. (2005) presented a method that induces standard axis-aligned classification trees with the difference that, at each leaf node, a logistic regression model is built to provide the final prediction. Moreover, a greedy method to fit a piece-wise linear logistic regression model is presented by Chan and Loh (2004), exploiting decision trees to recursively partition the feature space. The final model can be basically seen as a multivariate classification tree with a logistic regressor on each branch node.

Recently, D’Onofrio et al. (2024) took advantage of an exact mixed-integer quadratic programming (MIQP) formulation of the CT learning problem including the concept of maximum margin. This approach makes it possible to define OCTs where each branch node is a maximum-margin linear classifier. The maximum-margin property, obtained by minimizing the hinge loss at all branch nodes, allows for significant improvements in generalization performance. However, relying on oblique splits has the usual drawback of undermining interpretability. For this reason, the authors also proposed strategies (both hard and soft) to induce sparsity in the coefficients of each hyperplane.

3 Generalized loss-optimal classification trees

3.1 Notation and oblique tree structure by mixed-integer programming

Let \(\mathcal {I} = \{1,..., n\}\) and \(\mathcal {D}: = \{ (\varvec{x}_i, y_i), \ \varvec{x}_i \in {\mathbb {R}}^p, \ y_i \in \{-1, 1 \}, \ i \in \mathcal {I} \}\) be a finite dataset of n observations with p features and binary labels.

We introduce the notation, largely based on Bertsimas and Dunn (2017), D’Onofrio et al. (2024), used for the formulation of the Loss-Optimal CTs learning problem. Formally, let \(\mathcal {T}\) be the set of branch nodes of a generic (oblique) CT. We denote by d the number of layers of branching nodes of the tree, i.e., its depth, by \(\mathcal {H}\) the set \(\{1,\ldots ,d\}\) and by \(\mathcal {T}_{h}\) the set of nodes at depth h, for \(h\in \mathcal {H}\).

Each branch node \(t \in \mathcal {T}\) is characterized by a linear function \(\varvec{w}_t^T \varvec{x} + b_t, \ \varvec{w}_t \in {\mathbb {R}}^p, \ b_t \in {\mathbb {R}}\), which induces the hyperplane \(\varvec{w}_t^T \varvec{x} + b_t = 0\) as decision boundary. This has the effect of forwarding an examined data point \(\varvec{x}_i\) to the left child of node t if \(\varvec{w}_t^T\varvec{x}_i + b_t \le 0\) and to the right child otherwise. Note that, using this notation, a glass-box axis-aligned CT can be obtained as a special case, imposing \(\Vert \varvec{w}_t\Vert _0 = 1, \ \forall \ t \in \mathcal {T}\), where \(\Vert \cdot \Vert _0\) denotes the \(\ell _0\) pseudo-norm.
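The forwarding rule just described can be illustrated with a minimal sketch. The heap-style node indexing (root 1, children 2t and 2t + 1), the dictionary layout and the function name `route` are assumptions made for this example only; they are not part of the notation above.

```python
def dot(w, x):
    """Inner product of two equally sized sequences."""
    return sum(wj * xj for wj, xj in zip(w, x))


def route(x, weights, biases, depth):
    """Return the branch nodes visited by x, from the root to the last layer.

    weights[t], biases[t] hold (w_t, b_t) for branch node t, using the
    hypothetical heap indexing: root = 1, left child = 2t, right child = 2t + 1.
    """
    t, path = 1, []
    for _ in range(depth):
        path.append(t)
        # left child if w_t^T x + b_t <= 0, right child otherwise
        go_left = dot(weights[t], x) + biases[t] <= 0
        t = 2 * t if go_left else 2 * t + 1
    return path


# Depth-2 tree with three branch nodes (illustrative values).
weights = {1: [1.0, 0.0], 2: [0.0, 1.0], 3: [1.0, -1.0]}
biases = {1: -0.5, 2: 0.0, 3: 0.0}

path_a = route([0.2, 3.0], weights, biases, depth=2)  # 0.2 - 0.5 <= 0: left
path_b = route([0.9, 3.0], weights, biases, depth=2)  # 0.9 - 0.5 > 0: right
```

In this toy tree, nodes 1 and 2 use a single nonzero weight each, so they behave as axis-aligned splits, while node 3 is a genuinely oblique split.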

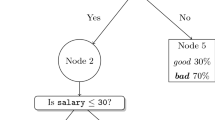

Since we are interested in CTs where each node is a binary classifier, we can let the final prediction of the point depend solely on the decision of the last branch node. Indeed, each splitting hyperplane is a linear classifier with decision function \(\text {sgn}(\varvec{w}_t^T\varvec{x} + b_t)\). In view of this, there is no need to track the points down to the leaves, so that the number of nodes to be modeled can be reduced by \(2^d\) units, or, in other words, roughly halved. An example of an oblique CT with branching classifiers of depth 3 is shown in Fig. 1.

We now introduce the essential building blocks of the MILP models proposed in the literature for OCTs training. Recalling that \(\mathcal {T}_{d} \subset \mathcal {T}\) is the set of nodes of the last branching layer, the routing of each point to the proper branch of the last layer can be modeled introducing the binary variables:

Using these variables, we can force each point to be routed to exactly one of the last branching nodes, complying with the structure of the model. In particular, this can be obtained imposing the following constraints:

We then introduce the constraints to take into account the path of each data point, which is fully determined by the sequence of decisions taken at the branch nodes. For this purpose, we introduce the set \(\mathcal {T}_d(t) \subseteq \mathcal {T}_d\) as the set of branches at the last layer that are successors of the node t; in other words, nodes in \(\mathcal {T}_d(t)\) belong to the last layer of the sub-tree rooted at t; moreover, we define the subsets \(\mathcal {T}_d^r(t)\), \(\mathcal {T}_d^l(t)\) as a partition of \(\mathcal {T}_d(t)\) such that:

- \(\mathcal {T}_d^r(t)\) is the set of nodes of the last branching level that belong to the right sub-tree rooted at node t;
- \(\mathcal {T}_d^l(t)\) is the set of nodes of the last branching level that belong to the left sub-tree rooted at node t.
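For a complete binary tree, the two subsets can be enumerated directly. The sketch below assumes a heap-style indexing of branch nodes (root 1, children 2t and 2t + 1), which is a convention chosen here for illustration; the helper names are hypothetical.

```python
def last_layer_descendants(t, depth):
    """Nodes of the last branching layer in the sub-tree rooted at t.

    Assumes heap indexing (root = 1, children 2t and 2t + 1), so that the
    last branching layer of a depth-d tree is {2^(d-1), ..., 2^d - 1}.
    """
    first, last = 2 ** (depth - 1), 2 ** depth - 1
    lo, hi = t, t
    while lo < first:  # follow the leftmost and rightmost descendants
        lo, hi = 2 * lo, 2 * hi + 1
    return set(range(lo, hi + 1)) if hi <= last else set()


def left_right_partition(t, depth):
    """Return (T_d^l(t), T_d^r(t)) for a branch node t above the last layer."""
    return (last_layer_descendants(2 * t, depth),
            last_layer_descendants(2 * t + 1, depth))


left_root, right_root = left_right_partition(1, depth=3)
left_2, right_2 = left_right_partition(2, depth=3)
```

With depth 3, the last branching layer is {4, 5, 6, 7}; the root splits it into {4, 5} and {6, 7}, and node 2 splits {4, 5} into {4} and {5}.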

Each branch t forwards points to its left child if \(\varvec{w}_t^T\varvec{x}+b_t \le 0\) and to the right one otherwise. This logic can be enforced by means of the following forwarding constraints:

where M is a suitable constant for a big-M type constraint and \(\epsilon\) is a small constant preventing numerically degenerate cases.

For example, given \(t \in \mathcal {T}\) and a data point \(\varvec{x}_i\), if \(z_{i,s} = 1\) and \(s \in \mathcal {T}_d(t)\), then \(\varvec{x}_i\) has to be routed to the last branch node s, which either belongs to \(\mathcal {T}_d^l(t)\) or \(\mathcal {T}_d^r(t)\). Hence, exactly one of the following pairs of equations holds:

If the former holds, then (2a) guarantees that \(\varvec{w}_t^T\varvec{x}_i+b_t \le 0\), otherwise (2b) forces \(\varvec{w}_t^T\varvec{x}_i+b_t > 0\). The big-M strategy makes the constraint for the “wrong case” irrelevant. Also, no constraint applies for branch t in case \(\varvec{x}_i\) is forwarded to a node \(s \in \mathcal {T}_d {\setminus } \mathcal {T}_d(t)\). Moreover, we shall observe that these constraints are not required if \(t \in \mathcal {T}_d\) since the last branching level is only responsible for making the final prediction.
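One big-M form of the forwarding constraints that is consistent with this description is sketched below. It is reconstructed from the surrounding text, so the exact arrangement of the terms should be read as an assumption rather than as the precise statement of (2a) and (2b).

```latex
\begin{align*}
\varvec{w}_t^T\varvec{x}_i + b_t &\le M\Big(1 - \sum_{s\in\mathcal{T}_d^l(t)} z_{i,s}\Big),
  && \forall\, i\in\mathcal{I},\ t\in\mathcal{T}\setminus\mathcal{T}_d, \\
\varvec{w}_t^T\varvec{x}_i + b_t &\ge \epsilon - M\Big(1 - \sum_{s\in\mathcal{T}_d^r(t)} z_{i,s}\Big),
  && \forall\, i\in\mathcal{I},\ t\in\mathcal{T}\setminus\mathcal{T}_d.
\end{align*}
```

When the sum over \(\mathcal {T}_d^l(t)\) equals 1, the first inequality reduces to \(\varvec{w}_t^T\varvec{x}_i+b_t \le 0\) and the second is relaxed by M; the roles are swapped when the sum over \(\mathcal {T}_d^r(t)\) equals 1, and both are relaxed when both sums are 0.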

3.2 The role of loss functions

For a CT to be meaningful, a suitable loss function necessarily has to be used to push the last branching layers and the overall model to correctly classify the data points. This can be done by estimating the errors, or slacks \(\xi _i,\,i=1,\ldots ,n\), committed on each data point:

The definition of quantities \(\varvec{\xi }\) clearly depends on the particular loss function to be used. For example, most MIP models for OCTs (Bertsimas and Dunn 2017; Aghaei et al. 2021; Hu et al. 2019; Carreira-Perpinán and Tavallali 2018) directly employ the misclassification loss, which is defined using additional binary variables and big-M constraints as

The additional variables \(\hat{y}_i\) model the predicted class for each data point; the slack variable corresponding to each data point is set to 0 if the prediction is correct (\(y_i\hat{y}_i=1\)) and to 1 otherwise. Constraints (4a)–(4b) guarantee that, for a point \(\varvec{x}_i\) arriving at the leaf t, \(\hat{y}_i=1\) if and only if \(\varvec{w}_t^T\varvec{x}_i+b_t\ge 0\).

However, this is not necessarily the only option for a loss function. In fact, different choices not only might be statistically more robust, but may also avoid the introduction of the additional binary variables and logical constraints that increase problem complexity. An example of a continuous loss with these features, easily embeddable in the MIP framework, is the hinge loss, \(\max \{0,1-y_i(\varvec{w}^T\varvec{x}_i+b)\}\), that can be modeled as:

The objective function can then be defined as the sum of the total loss function L and a regularization term for weights \(\varvec{w}_t\), \(t\in \mathcal {T}_d\), so that we basically have an empirical risk minimization problem:

We note that:

- by setting \(\lambda =0\) and using (4), we actually retrieve the standard OCTs problem from Bertsimas and Dunn (2017);
- setting \(\lambda >0\), \(\Omega (\cdot ) = \Vert \cdot \Vert _2^2\) and using (5) we get “SVM leaves”.
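As a small numerical illustration of why the hinge loss fits a linear model, the sketch below checks that the smallest slack satisfying two linear lower bounds coincides with \(\max \{0,1-y\,s\}\), where s stands for the classifier output. The routing indicator and the big-M term that would tie each slack to a specific node are deliberately omitted, and the function names are hypothetical.

```python
def hinge(y, score):
    """Hinge loss max{0, 1 - y * score} for a label y in {-1, 1}."""
    return max(0.0, 1.0 - y * score)


def smallest_feasible_slack(y, score):
    """Smallest xi satisfying the two linear constraints
    xi >= 0 and xi >= 1 - y * score."""
    lower_bounds = [0.0, 1.0 - y * score]
    return max(lower_bounds)


scores = [-2.0, -0.5, 0.0, 0.5, 2.0]
slacks_pos = [smallest_feasible_slack(+1, s) for s in scores]
slacks_neg = [smallest_feasible_slack(-1, s) for s in scores]
```

Since the slacks are minimized in the objective, each one settles on its tightest lower bound, which is why no binary variable is needed to represent this loss.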

In fact, loss terms can also be associated with the upper branching nodes; in this case, we have d vectors of slack variables, \(\varvec{\xi }_1,\ldots ,\varvec{\xi }_d\): along its path to the leaves, each data point encounters only one node at each layer, and thus only the slack associated with the corresponding classifier has to be taken into account. The overall objective function for a general loss-optimal CT model is, therefore, given by

It is worth noting at this point that the margin-optimal CT models (MARGOT) from D’Onofrio et al. (2024) can then be retrieved as a special case of the general framework, setting

and \(\Omega (\cdot )=\Vert \cdot \Vert _2^2\).

3.3 Exact modeling of \(\ell _0\) terms

To enhance the interpretability of the models, sparsity can be enforced within the weights of branching classifiers, thus inducing feature selection. The \(\ell _0\) pseudo-norm of vectors \(\varvec{w}_t\) can easily be modeled, in a MINLP program, by introducing binary variables, big-M constraints and linear expressions:

Then, the value of \(\Vert \varvec{w}_t\Vert _0\) can either be upper bounded or penalized. Following the terminology in D’Onofrio et al. (2024), we talk about hard feature selection (HFS) in the former case and soft feature selection (SFS) in the latter one.
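The indicator mechanism can be sketched on a fixed weight vector. The constraint form \(-M\delta _j \le w_j \le M\delta _j\) used below is a common big-M encoding and is assumed here for illustration; the helper names are hypothetical.

```python
def support_indicators(w, tol=0.0):
    """delta_j = 1 if w_j is nonzero (beyond tol), else 0."""
    return [1 if abs(wj) > tol else 0 for wj in w]


def satisfies_big_m(w, delta, big_m):
    """Check -M * delta_j <= w_j <= M * delta_j for every feature j."""
    return all(-big_m * dj <= wj <= big_m * dj for wj, dj in zip(w, delta))


w = [0.0, -1.2, 0.0, 0.4]
delta = support_indicators(w)
l0_norm = sum(delta)  # linear expression standing in for ||w||_0
feasible = satisfies_big_m(w, delta, big_m=10.0)

# Switching off the indicator of a nonzero weight violates the constraints.
infeasible = satisfies_big_m(w, [0, 0, 0, 1], big_m=10.0)

# HFS bounds the sum of indicators; SFS would penalize it in the objective.
within_budget = l0_norm <= 2
```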

4 Optimal logistic classification trees

In this section, we formalize a novel, particular instance of loss-optimal CT model, the Optimal Logistic CT (OLCT). In particular, we first show how to introduce the logistic loss function within the considered MIP; then, we point out the benefits of using \(\ell _1\)-regularization terms; we finally show the resulting overall optimization model.

4.1 Logistic loss in mixed-integer linear optimization

The logistic loss function for binary linear classifiers is defined as

This loss function appears in the individual terms of the summation in the negative log-likelihood function of logistic regression models [see, e.g., Hastie et al. (2009)], i.e., the linear model for binary classification that is obtained by maximum likelihood estimation under the assumption that data follow a Bernoulli distribution.
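For concreteness, the sketch below evaluates the logistic loss in its standard form for labels in \(\{-1,1\}\), namely \(\log (1+\exp (-y\,s))\) with s the output of the linear function. The rearrangement used to avoid overflow is an implementation choice of this example.

```python
import math


def logistic_loss(y, score):
    """log(1 + exp(-y * score)), computed without overflow."""
    m = -y * score
    # log(1 + e^m) = max(m, 0) + log(1 + e^{-|m|})
    return max(m, 0.0) + math.log1p(math.exp(-abs(m)))


loss_at_zero = logistic_loss(+1, 0.0)        # equals log(2)
loss_large_margin = logistic_loss(+1, 50.0)  # close to 0
loss_wrong_side = logistic_loss(-1, 1000.0)  # close to 1000, no overflow
```

Unlike the hinge loss, this function is strictly positive everywhere and smooth, which is what prevents an exact representation by finitely many linear constraints.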

In addition to often having strong performance in terms of out-of-sample prediction accuracy, other advantages of logistic regression compared to other linear classifiers, such as SVMs, include

- (a) the possibility of obtaining estimates of feature importance by simple manipulation of the model weights (Hastie et al. 2009);
- (b) the opportunity of getting calibrated probability estimates associated with predictions.

The above properties offer nice insights that are valuable from the perspective of model interpretability. These considerations motivate us to consider the employment of the logistic loss within the general framework for loss-optimal CTs, discussed in Sect. 3.

The straightforward objection, at this point, concerns the nonlinearity of the logistic loss function; indeed, contrary to the hinge loss defining SVMs, the log loss cannot exactly be modeled by linear constraints in MILP. However, this issue can be addressed following the strategy proposed in Sato et al. (2016), where the best subset selection problem in logistic regression is solved by means of a MILP approach. In particular, Sato et al. proposed to approximate the logistic loss function by a piece-wise linear underestimator. The function

is a convex function, thus its tangent line at a point \(v_0\) constitutes a global underestimator; more explicitly, for all \(v,v_0\in {\mathbb {R}}\), it holds

To obtain an accurate approximation of the logistic loss, we can thus construct a piece-wise linear underestimator obtained as the point-wise maximum of a family of tangent lines:

Sato et al. also propose a greedy strategy to select the points \(v_k\) at which the tangent lines are computed so as to minimize the approximation error: at each iteration, a tangent line is added to the piece-wise linear approximation so that the area between the exact and approximated loss function is minimized. The resulting sets of tangent points to be used for increasingly accurate approximations of the logistic loss are

Obviously, a larger number of points leads to a larger number of constraints in the MILP model and, thus, makes it more difficult to solve.
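The tangent-line construction can be checked numerically with a short sketch, taking \(f(v)=\log (1+\exp (-v))\) with derivative \(f'(v)=-1/(1+\exp (v))\). The tangent points below are chosen arbitrarily for illustration and are not the sets selected by the greedy strategy of Sato et al.

```python
import math


def f(v):
    """f(v) = log(1 + exp(-v)), computed without overflow."""
    return max(-v, 0.0) + math.log1p(math.exp(-abs(v)))


def f_prime(v):
    """Derivative f'(v) = -1 / (1 + exp(v)), computed without overflow."""
    if v >= 0:
        e = math.exp(-v)
        return -e / (1.0 + e)
    return -1.0 / (1.0 + math.exp(v))


def tangent_underestimator(v, tangent_points):
    """Point-wise maximum of the tangent lines of f at the given points."""
    return max(f(vk) + f_prime(vk) * (v - vk) for vk in tangent_points)


# Illustrative tangent points, chosen arbitrarily for this sketch.
points = [-3.0, -1.0, 0.0, 1.0, 3.0]
grid = [k / 10.0 for k in range(-80, 81)]
gaps = [f(v) - tangent_underestimator(v, points) for v in grid]
gaps_single = [f(v) - tangent_underestimator(v, [0.0]) for v in grid]
```

By convexity the gaps are nonnegative, they vanish at the tangent points, and adding tangent lines can only shrink them. In a MILP, each tangent line would contribute one linear lower bound on the slack of each data point, which is the source of the growth in the number of constraints.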

Following this methodology, we can redefine the slack variables to finally introduce the logistic loss in the loss-optimal CT model discussed in Sect. 3:

4.2 Lasso regularization

Together with the loss function defining the slack values, the second element to be chosen in our generalized OCT framework is the regularizer. Using an \(\ell _2\)-regularization term, as for example in MARGOT, has the effect of making the entire problem an MIQP instance. Here, we instead propose to consider a Lasso regularizer (Tibshirani 1996), i.e., an \(\ell _1\) penalty term; there are two main reasons for doing so:

- the \(\ell _1\)-norm can be easily handled by linear constraints within a linear programming model; thus, using the \(\ell _1\)-norm instead of the squared \(\ell _2\)-norm we can derive a fully linear model which should be easier to solve;
- exploiting the well-known properties of the \(\ell _1\)-norm (Bach et al. 2012), we can implicitly induce sparsity within branch node classifiers, without the need of resorting to explicit (and expensive) \(\ell _0\)-norm penalization as in SFS models.

The \(\ell _1\)-norm can be efficiently handled in a linear program, similarly to what is done in Figueiredo et al. (2007), setting

Basically, \(\varvec{w}_t\) is split into its positive and negative parts \(\varvec{w}_t^+\) and \(\varvec{w}_t^-\). Indeed, constraints (11) are satisfied by \(\hat{w}_{j,t}^+ = \max \{0,{w}_{j,t}\}\) and \(\hat{w}_{j,t}^- = \max \{0,-{w}_{j,t}\}\); this solution is such that \(\hat{w}_{j,t}^++\hat{w}_{j,t}^- = |w_{j,t}|\). We, thus, have \(\varvec{1}^T(\hat{\varvec{w}}_t^++\hat{\varvec{w}}_t^-) = \Vert \varvec{w}_t\Vert _1\).

Any other feasible solution can be obtained by shifting \(\hat{\varvec{w}}_t^+\) and \(\hat{\varvec{w}}_t^-\) by a vector \(\varvec{\Delta }\ge 0\), \(\Vert \varvec{\Delta }\Vert >0\); hence, for any other feasible solution we have

The shift \(\varvec{\Delta }\), however, has no influence on the variables \(\varvec{w}_t\); if the term \(\varvec{1}^T(\varvec{w}_t^++\varvec{w}_t^-)\) is minimized in the objective, then \(\hat{\varvec{w}}_t^+\) and \(\hat{\varvec{w}}_t^-\) will always be chosen and thus the actual quantity being minimized is \(\varvec{1}^T(\hat{\varvec{w}}_t^++\hat{\varvec{w}}_t^-) = \Vert \varvec{w}_t\Vert _1\).
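The argument can be verified on a small vector. The sketch below builds the positive and negative parts, then applies a nonnegative shift to both; the numerical values are arbitrary and the helper name is hypothetical.

```python
def split_parts(w):
    """Positive and negative parts: w = w_plus - w_minus, both nonnegative."""
    w_plus = [max(0.0, wj) for wj in w]
    w_minus = [max(0.0, -wj) for wj in w]
    return w_plus, w_minus


w = [0.5, -2.0, 0.0, 1.5]
w_plus, w_minus = split_parts(w)
l1_from_parts = sum(p + m for p, m in zip(w_plus, w_minus))

# Shifting both parts by the same nonnegative vector keeps w unchanged
# but increases the linear objective term by twice the total shift.
shift = [0.25, 0.0, 1.0, 0.0]
s_plus = [p + d for p, d in zip(w_plus, shift)]
s_minus = [m + d for m, d in zip(w_minus, shift)]
w_from_shifted = [p - m for p, m in zip(s_plus, s_minus)]
shifted_objective = sum(p + m for p, m in zip(s_plus, s_minus))
```

Here the unshifted parts give the \(\ell _1\)-norm of the vector, while the shifted pair represents the same weights at a strictly larger objective value, so a minimizing solver never selects it.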

Lasso regularization is well known not only to induce sparsity and guarantee that the optimization process is well behaved, but also to be beneficial in tackling overfitting (Hastie et al. 2009; Tibshirani 1996). Thus, if the sparsity requirement within each node is not set by a specific budget (as in HFS), but it is imprecisely imposed by means of a penalty, then the expedient of setting \(\Omega (\cdot ) =\Vert \cdot \Vert _1\) makes it possible to preserve, at a much lower cost, both the predictive performance of the obtained model and its interpretability.

The alternative SFS strategy from D’Onofrio et al. (2024) exploits the objective function

This kind of penalty on the \(\ell _0\)-norm of weights is modeled with binary variables, see Eq. (9), highly increasing the complexity of the optimization model. Moreover, here there is an additional hyperparameter to be tuned for each layer, making the cross-validation procedure harder to manage.

4.3 The overall model

The overall optimal logistic classification tree (OLCT) model proposed in this paper is obtained putting together the pieces described thus far:

The constraints referenced at (12b) contain almost all the defining elements of the logistic tree: constraint (1) defines the indicator binary variables of data points routing, constraints (2) enforce the routing logic along the tree, constraints (10) define the slacks corresponding to the logistic loss and (11) define the branching classifiers weights and model their \(\ell _1\)-norm.

Constraints (12c) and (12d) simply define the domain of the remaining variables. The objective function is nothing but the general loss (7) where the \(\ell _1\) regularizer is employed, formulated as shown in Sect. 4.2.

Remark 1

Note that, in our model, we use a piece-wise linear underestimator, i.e., a surrogate function, to approximate the log loss. Thus, at the end of the training process, we can actually perform a refinement operation affecting the last layer of branching nodes. Specifically, we can exactly fit an \(\ell _1\)-regularized logistic regression model at each node of the last layer, using as training data the samples that actually reach that node. By this procedure, we are guaranteed that the overall exact objective function associated with the entire tree model does not increase.

The same reasoning cannot be applied with higher level nodes: changes to the branching classifiers possibly change how training data are forwarded to lower nodes, thus affecting the corresponding loss terms. The overall loss associated with the tree might increase, because of the lack of global perspective.

Remark 2

One of the most appealing features of classification trees is their glass-box nature; however, the highest level of interpretability is only reached in the case of parallel splits. For this reason, we believe it to be important to explicitly point out how to retrieve a univariate optimal logistic classification tree. The model is basically equivalent to (12), except for HFS type constraints, with an upper bound on the \(\ell _0\)-norm of each branching classifier set to 1. This can be modeled in MILP terms by constraints (9) and setting \(\varvec{1}^T\varvec{\delta }_t\le 1\).

Of course, the addition of a number of new binary variables and big-M type constraints directly proportional to \(p\times |\mathcal {T}|\) is significant in terms of the computational resources needed to solve the problem and especially to certify optimality, closing the optimality gap.

4.4 Interpreting OLCTs

Inheriting, at least partially, the nice interpretability properties of logistic regression models is one of the main advantages of using the logistic loss within the OCTs framework.

Specifically, there are some aspects that can be taken into account to retrieve additional information about the model prediction mechanisms.

Evaluation of feature influence At each branching node of oblique OLCTs, the influence of each individual feature on the splitting decision can be estimated by taking the corresponding coefficient and multiplying it by the standard deviation of the feature among the training data points reaching that node; formally, given the weights \(\varvec{w}_{\bar{t}}\) at a node \(\bar{t}\in \mathcal {T}\), the influence \(r_{j,\bar{t}}\) of feature j can be estimated by:

\[ r_{j,\bar{t}} = w_{j,\bar{t}}\, s_{j,\bar{t}}, \]

where \(w_{j,\bar{t}}\) is the j-th component of \(\varvec{w}_{\bar{t}}\) and \(s_{j,\bar{t}}\) is the standard deviation of feature j over the training points reaching node \(\bar{t}\).

The larger the coefficient \(r_{j,\bar{t}}\) is, the stronger the association of feature j with positive outputs; on the other hand, the largest negative values of influence are associated with features pushing the point toward the left child of the branching node. The feature influence for an OLCT trained on the heart dataset is reported, as an example, in Fig. 2.
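The influence estimate described above (coefficient times the standard deviation of the feature at the node) can be sketched in a few lines. This is a minimal illustration, not the authors' implementation: the function name `feature_influence`, the toy data and the use of the population standard deviation are all assumptions.

```python
from statistics import pstdev


def feature_influence(weights, samples_at_node):
    """Return the list of w_j * std_j for one branch node.

    std_j is computed only over the samples that reach the node
    (population standard deviation is an assumption of this sketch).
    """
    n_features = len(weights)
    stds = [pstdev([x[j] for x in samples_at_node]) for j in range(n_features)]
    return [w * s for w, s in zip(weights, stds)]


# Toy node: three samples, three features; the third feature is constant.
weights = [1.5, -0.8, 0.0]
samples = [[0.0, 1.0, 3.0], [2.0, 3.0, 3.0], [4.0, 5.0, 3.0]]
influence = feature_influence(weights, samples)

# Rank features by absolute influence; the sign indicates the direction
# (positive: toward the right child, negative: toward the left child).
ranking = sorted(range(len(influence)), key=lambda j: abs(influence[j]), reverse=True)
```

Keeping the sign of the coefficient, rather than its absolute value, preserves the distinction between features pushing a point to the right child and those pushing it to the left child.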

Probabilistic interpretation of outputs and model calibration

The sigmoid function \(\sigma (z) = \frac{1}{1+\exp (-z)}\) is used in logistic regression to map the output of the linear function defined by \(\varvec{w},b\) to (0, 1), so that a data point \(\varvec{x}\) is classified as positive if \(\sigma (\varvec{w}^T\varvec{x}+b)\ge 0.5\).

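In a standalone logistic regression model, these “probability values” are related to the odds of the positive outcome over the negative one; in particular, the linear regressor is designed to model, by maximum likelihood, the log-odds (logits) of the output. Denoting by \(p(\varvec{x})\) the probability of the positive class given \(\varvec{x}\), we have:

\[ \log \frac{p(\varvec{x})}{1-p(\varvec{x})} = \varvec{w}^T\varvec{x}+b. \]

Exponentiating we get

\[ \frac{p(\varvec{x})}{1-p(\varvec{x})} = \exp (\varvec{w}^T\varvec{x}+b), \]

and by simple algebraic manipulations, we retrieve

\[ p(\varvec{x}) = \frac{1}{1+\exp (-(\varvec{w}^T\varvec{x}+b))} = \sigma (\varvec{w}^T\varvec{x}+b). \]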

In other words, the logistic output is an actual probability estimate for the positive output, i.e., the logistic model is well calibrated by construction.
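The relation between the linear score, the odds and the sigmoid output can be verified numerically. The sketch below uses arbitrary illustrative values for \(\varvec{w}\), b and \(\varvec{x}\) (they are not taken from any experiment) and checks that the log-odds of the sigmoid output equal the linear score.

```python
import math


def sigmoid(z):
    """Logistic function mapping a real score to (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def log_odds(p):
    """Logit of a probability p in (0, 1)."""
    return math.log(p / (1.0 - p))


# Illustrative (made-up) classifier and data point.
w, b = [0.4, -1.2], 0.3
x = [1.0, 0.5]

z = sum(wj * xj for wj, xj in zip(w, x)) + b  # linear score w^T x + b
p = sigmoid(z)                                # estimated P(y = 1 | x)
odds = p / (1.0 - p)                          # odds of positive vs. negative

# log-odds recover the linear score, and odds equal exp(score)
logit_gap = abs(log_odds(p) - z)
odds_gap = abs(odds - math.exp(z))
```

Both gaps are zero up to floating-point error, which is simply the chain of identities above read in the reverse direction.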

Within the OLCTs framework, we can exploit this property both at the splitting and the classifying nodes of the tree. If \(t\in \mathcal {T}_d\), then the odds associated with the outputs of \(\varvec{w}_t,\ b_t\) are actually interpretable as the odds of the positive class over the negative one, given that the data point belongs to the subspace deterministically defined by the splits at the higher nodes. Since each node classifier is calibrated within the corresponding region of the space, the overall output probability of OLCT models should also be implicitly well calibrated, with no need for any extra post-training calibration.

If, on the other hand, \(t\in \mathcal {T}_h\), \(h<d\), then the classifier has been trained looking not only at its corresponding slacks, but also at those of all its descendant nodes. Thus, in this case we can (somewhat improperly) interpret the probability estimates as the confidence about forwarding the data point to the right child rather than to the left one. This concept can be visualized through the example in Fig. 3.

Fig. 3 Confidence at each branch node of the logistic classification tree from Fig. 2 for the sample \(\varvec{x} = [ 0.69, 0.87, -1.23, -0.8, -0.4, 1.01, -1.87, 1.4, -0.94, 0.63, 0.35, -0.89, -0.07]\) of the heart dataset. The true label y is equal to 1. In this case, the model predicts the correct class of the point and, given the sigmoid activation, we are able to get the confidence of the forwarding decision at each branch node.
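The per-node confidence discussed above can be obtained by following a sample down the tree and recording the sigmoid output at each visited node. The following is a minimal sketch under stated assumptions: nodes are stored with a heap-style indexing (children of node t are 2t+1 and 2t+2), a point goes right when the sigmoid output is at least 0.5, labels are encoded as 1 and -1, and the weights are made up for illustration; none of these details is taken from the authors' code.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def path_confidences(nodes, x):
    """Follow x from the root and collect (node index, confidence of going right).

    nodes maps a heap-style index t to a pair (w, b); the root (index 0)
    is assumed to be present. The predicted label is the decision of the
    last classifier met on the path.
    """
    t, trace = 0, []
    while t in nodes:
        w, b = nodes[t]
        p_right = sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)
        trace.append((t, p_right))
        go_right = p_right >= 0.5
        t = 2 * t + 2 if go_right else 2 * t + 1
    label = 1 if go_right else -1
    return trace, label


# Toy tree with classifiers at levels 0 and 1 (hypothetical weights).
nodes = {0: ([1.0, 0.0], 0.0), 1: ([0.0, 1.0], 0.5), 2: ([0.0, -1.0], 1.0)}
trace, label = path_confidences(nodes, [0.8, -0.2])
```

For this toy sample, the root forwards the point to its right child, whose classifier then outputs the positive class; the recorded values play the role of the confidences reported at each branch node in Fig. 3.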

5 Numerical experiments

In this section, we present the results of computational experiments carried out to evaluate the performance of the proposed approach. All the experiments described in this section have been carried out on a server with an Intel® Xeon® Gold 6330N CPU (28 cores, 56 threads, 2.20 GHz) and 128 GB of total available memory; we limited the computation to 40 of the 56 available threads. The code has been implemented in Python (v. 3.9) and the commercial solver Gurobi (Gurobi Optimization 2022) has been used to solve all the mixed-integer programming models considered in this work. All the code is available at https://github.com/tom1092/Optimal-Logistic-Classification-Trees.

For each instance of classification tasks, we performed an 80/20 train/test split of the data and standardized each feature to zero mean and unit variance before training. Experiments are always repeated for different random seeds, resulting in different train/test splits. Hyperparameters have been tuned by cross-validation over a grid of values, where the test balanced accuracy (see below) is used as quality metric; more details will be provided for each group of experiments in the following. The parameters M and \(\epsilon\) in MIP formulations have been set to 100 and \(10^{-5}\), respectively. The value \(M=100\) is large enough not to introduce bound constraints and tight enough not to make the Gurobi branch-and-bound too inefficient; the same value was also used, for instance, by D’Onofrio et al. (2024). As for \(\epsilon\), the value \(10^{-5}\) is much larger than the one employed for the IntFeasTol parameter of Gurobi (\(10^{-9}\)), so that the issues highlighted in Liu et al. (2023) never occurred; at the same time, it is small enough to let the underlying logic of the constraints work properly, i.e., no feasible solution is erroneously cut off. Without loss of generality, we also decided to move the regularization parameter \(\lambda _h\) to the slack component of the loss, to be aligned with the work by D’Onofrio et al. (2024). The objective function now has the form:

Note that, at the end of the optimization process of OLCTs, we applied the refinement strategy discussed in Remark 1. For each node in the last layer, we retrained the \(\ell _1\)-regularized logistic regression model using the scikit-learn implementation (Pedregosa et al. 2011).

To compare the performance of each model, along with the running times, we used the balanced accuracy metric defined as:

\[ \text {B}_\text {Acc} = \frac{1}{2}\left( \frac{TP}{TP+FN} + \frac{TN}{TN+FP}\right) , \]

where TP, TN, FP, FN are the numbers of true-positive, true-negative, false-positive, and false-negative outputs, respectively. The B\(_\text {Acc}\) value is always computed on test set data.
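For concreteness, balanced accuracy (the average of the true-positive rate and the true-negative rate) can be computed from the four counts as in the sketch below. The label encoding (1 for positive, -1 for negative) and the toy predictions are assumptions made for the example, and both classes are assumed to appear in `y_true`.

```python
def balanced_accuracy(y_true, y_pred, positive=1):
    """Average of sensitivity TP/(TP+FN) and specificity TN/(TN+FP)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for yt, yp in pairs if yt == positive and yp == positive)
    fn = sum(1 for yt, yp in pairs if yt == positive and yp != positive)
    tn = sum(1 for yt, yp in pairs if yt != positive and yp != positive)
    fp = sum(1 for yt, yp in pairs if yt != positive and yp == positive)
    return 0.5 * (tp / (tp + fn) + tn / (tn + fp))


y_true = [1, 1, 1, 1, -1, -1]
y_pred = [1, 1, 1, -1, -1, 1]
score = balanced_accuracy(y_true, y_pred)         # 0.5 * (3/4 + 1/2)

# A trivial model predicting a single class always scores 0.5.
trivial = balanced_accuracy(y_true, [1] * len(y_true))
```

Unlike plain accuracy, this metric is insensitive to class imbalance: a classifier that sends every point to the same class obtains 0.5 regardless of the class proportions.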

To provide a condensed view of the results, in the following we make use of performance profiles (Dolan and Moré 2002). Performance profiles provide a unified view of the relative performance of the solvers on a suite of test problems. Formally, consider a benchmark \(\mathcal {P}\) of problem instances and a set of solvers \(\mathcal {S}\). For each solver \(\sigma \in \mathcal {S}\) and problem \(\pi \in \mathcal {P}\), we define

\[ t_{\pi ,\sigma } = \text {cost of solving problem } \pi \text { with solver } \sigma , \]

where cost is the performance metric we are interested in. In particular, we will be interested in CPU time. We then consider the ratio

\[ \eta _{\pi ,\sigma } = \frac{t_{\pi ,\sigma }}{\min \{t_{\pi ,\sigma '}\mid \sigma '\in \mathcal {S}\}}, \]

which expresses a relative measure of the performance on problem \(\pi\) of solver \(\sigma\) against the performance of the best solver for this problem. If a solver fails to solve a problem, we shall put \(\eta _{\pi , \sigma } = \eta _M\), with \(\eta _M \ge \max \{\eta _{\pi , \sigma }\mid \pi \in \mathcal {P},\,\sigma \in \mathcal {S} \}\).

Finally, the performance profile for a solver \(\sigma\) is given by the function

\[ \rho _\sigma (\tau ) = \frac{1}{|\mathcal {P}|}\,\bigl |\{\pi \in \mathcal {P}\mid \eta _{\pi ,\sigma }\le \tau \}\bigr |, \]

which represents the estimated probability for solver \(\sigma\) that the performance ratio \(\eta _{\pi ,\sigma }\) on an arbitrary instance \(\pi\) is at most \(\tau \in {\mathbb {R}}\). The function \(\rho _\sigma (\tau ):[1, +\infty ]\rightarrow [0, 1]\) is, in fact, the cumulative distribution of the performance ratio.

Note that the value of \(\rho _\sigma (1)\) is the fraction of problems where solver \(\sigma\) attained the best performance; on the other hand, \(\lim _{\tau \rightarrow \eta _M^-} \rho _\sigma (\tau )\) denotes the fraction of problems solved from the given benchmark.
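A performance profile in the sense of Dolan and Moré (2002) can be computed from a table of costs as sketched below. The data are made up for illustration, `None` is used as a hypothetical marker for a failed run, every problem is assumed to be solved by at least one solver, and \(\eta _M\) is set (arbitrarily) to twice the largest finite ratio.

```python
def performance_ratios(costs):
    """Map each solver to its list of ratios cost / best cost per problem.

    costs[solver][i] is the (positive) cost on problem i, or None on failure.
    Failures receive the ratio eta_max, chosen above every finite ratio.
    """
    solvers = list(costs)
    n_problems = len(costs[solvers[0]])
    ratios = {s: [] for s in solvers}
    for i in range(n_problems):
        best = min(costs[s][i] for s in solvers if costs[s][i] is not None)
        for s in solvers:
            c = costs[s][i]
            ratios[s].append(None if c is None else c / best)
    finite = [r for rs in ratios.values() for r in rs if r is not None]
    eta_max = 2.0 * max(finite)
    filled = {s: [eta_max if r is None else r for r in rs] for s, rs in ratios.items()}
    return filled, eta_max


def profile(ratios, solver, tau):
    """Fraction of problems on which the solver's ratio is at most tau."""
    values = ratios[solver]
    return sum(1 for r in values if r <= tau) / len(values)


# Made-up CPU times (seconds) of two solvers on four problems.
cpu_time = {"A": [10.0, 40.0, 30.0, None], "B": [20.0, 20.0, 30.0, 50.0]}
ratios, eta_max = performance_ratios(cpu_time)
best_share_a = profile(ratios, "A", 1.0)   # problems where A is (co-)best
solved_a = profile(ratios, "A", 2.0)       # all of A's finite ratios are <= 2
```

In this toy benchmark solver A is the best (possibly tied) on half of the problems and solves three out of four, while solver B solves all of them.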

In addition to performance profiles, we will also make use of the cumulative distribution of absolute gaps for a given metric \(\mu\); this tool is conceptually similar to performance profiles and is obtained by setting

\[ \eta _{\pi ,\sigma } = \bigl |\mu _{\pi ,\sigma } - \text {opt}_{\sigma '\in \mathcal {S}}\ \mu _{\pi ,\sigma '}\bigr |, \]

where \(\mu _{\pi ,\sigma }\) is the value of the metric attained by \(\sigma\) on problem \(\pi\) and the \(\text {opt}\) operator denotes the minimum or the maximum according to the metric \(\mu\) selected. Of course, in this case, we have \(\rho _\sigma (\tau ):[0, +\infty ]\rightarrow [0, 1]\). The distribution of absolute gaps is particularly useful when evaluating results in terms of accuracy or objective values.

For example, let us consider a test suite to evaluate the accuracy performance of a set of models on a benchmark of problem instances. From this kind of plot we can infer, for each model, estimates of the probability to obtain an accuracy value distant at most t points from the best one attained by any model. In other words, for any t, we can observe the fraction of times a model reaches an accuracy level within t points from the best one. For \(t=0\), we obtain the fraction of times each model is the best one among all those considered.
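The accuracy example above can be made concrete with a short sketch of the cumulative distribution of absolute gaps. The scores below are invented (expressed in integer percentage points to keep the arithmetic exact), and the function name `gap_distribution` is hypothetical.

```python
def gap_distribution(scores, t, higher_is_better=True):
    """For each model, fraction of instances whose gap from the best score is <= t."""
    models = list(scores)
    n_instances = len(scores[models[0]])
    opt = max if higher_is_better else min
    best = [opt(scores[m][i] for m in models) for i in range(n_instances)]
    return {
        m: sum(1 for i in range(n_instances) if abs(scores[m][i] - best[i]) <= t)
        / n_instances
        for m in models
    }


# Made-up accuracies (percentage points) of two models on four instances.
accuracy = {"X": [90, 80, 70, 85], "Y": [88, 83, 70, 75]}

at_zero = gap_distribution(accuracy, 0)    # share of instances where each model is best
at_two = gap_distribution(accuracy, 2)     # within 2 points of the best
at_three = gap_distribution(accuracy, 3)   # within 3 points of the best
```

Here model X is the best (possibly tied) on 75% of the instances and is always within 3 points of the best score, whereas model Y never exceeds 75% for these thresholds because of one instance with a 10-point gap.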

5.1 Preliminary experiments

The first experiments we carried out concern the assessment of the performance of our model as we vary the set V of the tangent points that are used to obtain the piece-wise linear underestimator of the logistic loss. In particular, we are interested in finding a good trade-off between the quality of the approximation and the running time of the model.

We considered a small benchmark of 5 datasets (parkinsons, wholesale, tik-tak-toe, haberman, sonar, see Table 1) from the UCI repositories (Dua and Graff 2017), testing \(V_0, V_1, V_2\) with refinement as configurations for the MILP problem of training an OLCT of depth 2. The experiment has been repeated for five different random seeds for each dataset, for a total of 25 different problems. The Gurobi time limit has been set to 300 s. In these experiments, we did not employ the \(\ell _1\) regularization terms, as we are mainly interested in assessing the effectiveness of the log loss approximation. Note that the MIP solution process is warm-started, initializing the weights of each branch node following a strategy similar to the one adopted by D’Onofrio et al. (2024): starting from the root and proceeding in a greedy fashion, we assign to the weights of each node the values obtained by training a logistic regression classifier on the data that are forwarded to that node by the branches above. This strategy makes it possible to obtain significant speedups in computation.

As shown by the performance profiles in Fig. 4, choosing \(V_0=\{ 0, \pm \infty \}\) to build the piece-wise linear approximation seems to provide a nice trade-off between running time and solution quality. Indeed, the use of \(V_1\) does not seem to significantly improve the out-of-sample accuracy of the model; on the other hand, the more accurate approximation obtained with \(V_2\) does result in better predictive models, but at a much higher training cost. For this reason, we decided to use the \(V_0\) setting to assess the performance of our model in the following sections. Nonetheless, we do not rule out that, in certain settings, it might be worth exploiting the increased effectiveness provided by \(V_2\).
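To give an idea of how coarse the \(V_0\) approximation is, the sketch below builds the piece-wise linear underestimator of the logistic loss as the maximum of its tangent lines at the points of \(V_0=\{ 0, \pm \infty \}\). It assumes the loss is written as a function of the margin \(z = y(\varvec{w}^T\varvec{x}+b)\), i.e., \(\log (1+\exp (-z))\); this parametrization and the function names are assumptions of the example, not a transcription of the formulation.

```python
import math


def log_loss(z):
    """Logistic loss log(1 + exp(-z)), evaluated in a numerically stable way."""
    return max(-z, 0.0) + math.log1p(math.exp(-abs(z)))


def tangent(v, z):
    """Value at z of the tangent line to the logistic loss taken at point v."""
    if v == math.inf:
        return 0.0   # asymptote for z -> +infinity
    if v == -math.inf:
        return -z    # asymptote for z -> -infinity
    slope = -1.0 / (1.0 + math.exp(v))  # derivative of the loss at v
    return log_loss(v) + slope * (z - v)


def pwl_underestimator(z, points):
    """Maximum of the tangent lines: a convex piece-wise linear lower bound."""
    return max(tangent(v, z) for v in points)


V0 = [0.0, math.inf, -math.inf]
grid = [k / 2.0 for k in range(-10, 11)]
max_gap = max(log_loss(z) - pwl_underestimator(z, V0) for z in grid)
```

Since the logistic loss is convex, every tangent line lies below it, so the maximum of the tangents is a valid underestimator that is exact at the finite tangent points; in a MILP, the same idea would presumably be expressed by requiring the slack variable to be at least as large as each linear piece.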

5.2 Impact assessment for the global optimization approach

In this section, we investigate the actual beneficial effects of conducting a global optimization phase during logistic trees training. Indeed, good performance of OLCTs might be mainly due, in principle, to the warm-start or the refinement steps. In particular, our greedy warm-start procedure constructs a logistic tree model with an iterative top-down approach, which is somewhat similar to the one proposed by Chan and Loh (2004) and, therefore, might actually already lead to a properly effective classifier. However, the results reported in Fig. 5 suggest that solving model (12) does provide a substantial boost to the performance of the resulting logistic tree.

In this experiment, we examined the value of the overall (in-sample) logistic loss associated with the model after the warm-start, global optimization and refinement steps, and then we also took into account the (out-of-sample) values of balanced accuracy. Here, we solved the same 25 instances of classification problems considered in Sect. 5.1. Again, in order to focus on the consequences of approximating the loss in the global step, we did not employ the \(\ell _1\) regularization terms. We also set Gurobi time limit to 300 s. Moreover, based on the results in the previous section, we chose to employ the set \(V_0\) of tangent points in log loss approximation. We did not focus on the running times of the three phases, as the global optimization step obviously represents the main computational burden for our approach.

From Fig. 5b, we observe that solving the training problem from a global perspective generally yields a slight improvement in the overall loss attained by the greedy model. This result is worth underlining, considering that the loss function is only roughly approximated during the global optimization phase. Then, the refinement step on the last layer further polishes the model on training data, often leading to substantially lower values of the loss.

The results in Fig. 5a are even more appealing. Apparently, when it comes to test performance, learning branching rules with a global point of view is crucial to improve the effectiveness of the resulting model. In this perspective, although visible, the positive effect of refinement is much more limited. Thus, we can arguably state that solving (12) as a global optimization has a significant effect in improving the effectiveness of logistic tree models.

5.3 OLCTs performance evaluation

We now present the results of a larger computational experiment where we compare the OLCT model to the MARGOT, SFS-MARGOT (D’Onofrio et al. 2024) and OCT-H (Bertsimas and Dunn 2017) approaches. To assess the performance of our method, we considered 10 standard binary classification datasets from the UCI repositories (Dua and Graff 2017) that are reported in Table 1. For each dataset, we consider 5 classification problem instances obtained considering different random train/test splits, so that the overall benchmark is made up of 50 test instances. For each problem instance, we set the depth of each tree model to 2. For all models, we set the Gurobi time limit to 300 s both for validation runs and the final fit over the entire training set.

For hyperparameter tuning, this time we used a 4-fold cross-validation. For both OLCT and MARGOT, we considered the slack parameters \(C_h\in \{ 10^i, \ i = -2, -1,..., 2\} \ \ \forall \ h \in \mathcal {H}\), obtaining 25 possible model configurations. On the other hand, the SFS-MARGOT model has two hyperparameters for the classifiers at each level: the slack parameter \(C_h\) and the \(\ell _0\) penalty parameter \(\alpha _h\). To obtain a grid of configurations comparable in size to that of OLCTs and MARGOTs, we used the same \(\alpha\) for each branch layer h, letting \(C_h\) vary in \(\{10^{-2}, 1, 10^{2}\}\) and \(\alpha\) in \(\{10^{-1}, 1, 10\}\), so that a total of 27 models is considered for the SFS variant. Finally, for OCT-H, we used the grid \(\alpha \in \{ 2^i, \ i = -8, -1,..., 2\} \cup \{0\}\) to tune the \(\alpha\) parameter that penalizes the number of features used at each branch node to make the decision.
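The sizes of the grids for OLCT/MARGOT and SFS-MARGOT can be checked by direct enumeration. The sketch assumes one slack parameter \(C_h\) per branch level and two branch levels for depth-2 trees, which is consistent with the counts of 25 and 27 reported above; variable names are illustrative.

```python
from itertools import product

levels = 2  # assumed number of branch levels in a depth-2 tree

# OLCT and MARGOT: C_h in {10^-2, ..., 10^2}, chosen independently per level.
c_values = [10.0 ** i for i in range(-2, 3)]
olct_grid = list(product(c_values, repeat=levels))

# SFS-MARGOT: C_h in {10^-2, 1, 10^2} per level, one shared alpha.
sfs_c_values = [1e-2, 1.0, 1e2]
sfs_alpha_values = [1e-1, 1.0, 10.0]
sfs_grid = [
    (c_per_level, alpha)
    for c_per_level in product(sfs_c_values, repeat=levels)
    for alpha in sfs_alpha_values
]

n_olct = len(olct_grid)   # 5 ** 2
n_sfs = len(sfs_grid)     # 3 ** 2 * 3
```

Under the same assumption, adding a third level raises the OLCT/MARGOT grid to 5³ = 125 configurations, which illustrates why tuning becomes expensive for deeper trees.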

Again, we initialize the training phase of each MIP model by injecting a warm-start solution, obtained by training logistic or SVM classifiers, depending on the particular tree classifier. For OCT models we used an analogous greedy strategy: we solve the MILP problem at each individual node, i.e., setting the depth of the tree equal to 1 and only using the set of points reaching the considered branch node, with a time limit of 30 s. As also mentioned in Bertsimas and Dunn (2017), this strategy can significantly speed up the optimization, providing a good initial upper bound on the loss that may help the branch-and-bound method.

The results of the experiment are shown in Fig. 6 in the form of cumulative distribution of absolute gap from the best balanced accuracy on the test set and performance profiles (Dolan and Moré 2002) of runtime and sparsity. Sparsity is measured by the average number of features used at each node of the tree, and constitutes a proxy measure for interpretability of oblique trees.

From Fig. 6a, we observe that our proposed model attains the top accuracy in about 50% of cases; moreover, it also appears to be the most robust, consistently being the most likely to obtain an accuracy value close to the best one, as the gap parameter t increases. Our model is, thus, not only most frequently the best one, but when it is not, it is still the one with the lowest probability of falling short of the best result by more than any given threshold.

Another interesting observation is that SFS-MARGOT and MARGOT exhibit similar performance. That is, the SFS variant is able to produce sparse trees without drastically reducing the out-of-sample prediction performance. In this regard, from Fig. 6c, we can observe that OLCT outperforms both MARGOT and SFS-MARGOT in terms of sparsity, i.e., interpretability.

The high average levels of sparsity in OLCT branches support the effectiveness of the \(\ell _1\)-regularization approach to this aim. This is of course not particularly surprising, as the effects of LASSO regularization are well known. Yet, the \(\ell _1\)-regularization approach also appears to somewhat outperform the SFS strategy based on \(\ell _0\) penalization: this result was not to be taken for granted and is certainly worth remarking, even more so considering that it is coupled with the efficiency advantage of avoiding additional binary variables.

On the other hand, the OCT-H approach proves to be the best one in terms of sparsity. This result is driven by two elements within the model as described by Bertsimas and Dunn (2017): the penalty term in the objective, aimed at encouraging splits that consider a low number of features, and a constraint in the formulation which forces the weights of each split to have unit \(\ell _1\) norm. However, as a consequence of the combined use of these strategies, the final model tends to be “over-regularized” and is clearly the worst one in terms of balanced accuracy, as shown in Fig. 6a.

Finally, in Fig. 6b, performance profiles of the running times highlight that the vanilla MARGOT is, in general, the most likely model to close the optimality gap in less than 300 s. However, OLCT appears to have a comparable cost, whereas the SFS-MARGOT and the OCT-H approaches are much more computationally demanding, since these latter models make use of many more binary variables. It is also worth noting that no model was able to close the optimality gap in more than 70% of the instances within the time limit of 300 s.

Summarizing, results highlight that our method is able to outperform other approaches in terms of balanced accuracy, to increase the interpretability, inducing sparser structures and exploiting the well-known logistic properties discussed in Sect. 4.4, and finally that these improvements can be achieved in competitive running times with respect to the other approaches.

5.4 Performance analysis with larger-scale problems

The mixed-integer optimization models associated with loss-optimal classification trees grow very fast in size as the number of data points or of node layers increases. In particular, the number of integer variables (and of constraints) grows linearly with the number of data points and exponentially with the depth of the tree.
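The growth described above can be illustrated with a simple count. The sketch below assumes, purely for illustration, that a complete binary tree of depth d has \(2^d-1\) branch nodes and that the model uses one binary routing variable per (data point, branch node) pair; the exact number of variables in each formulation differs, and the function names are hypothetical.

```python
def branch_nodes(depth):
    """Number of branch nodes in a complete binary tree of the given depth."""
    return 2 ** depth - 1


def routing_binaries(n_points, depth):
    """Hypothetical count: one binary variable per (data point, branch node)."""
    return n_points * branch_nodes(depth)


# Linear growth in the number of points, exponential growth in the depth.
counts_by_depth = {d: routing_binaries(1000, d) for d in (1, 2, 3, 4)}
counts_by_size = {n: routing_binaries(n, 2) for n in (1000, 2000, 4000)}
```

Doubling the number of data points doubles the count, while each extra layer roughly doubles it again, which is the source of the scalability issues discussed in this section.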

This growth is problematic from the perspective of solving the training optimization problem, as even the most efficient state-of-the-art solvers struggle when the number of integer variables becomes large.

We are, thus, interested in conducting, at least, a preliminary assessment of the scalability of the OLCT approach compared to the behavior of MARGOT and OCT-H models. In this larger-scale setting, we extended the time limit to 3600 s for Gurobi during the final fitting of the models, after the cross-validation phase.

First, we considered 9 additional datasets (see Note 1), whose sizes are reported in Table 2. Since the computational burden of the present experiment is significant, we only considered a single train/test split for each dataset. The comparison concerns the OLCT model with the \(V_0\) tangent points set, the MARGOT model and the OCT-H model, setting the tree depth to 2. The results of the experiment are reported in Table 2. We do not report Gurobi running times, as optimality of the solution was not certified for any instance. In fact, for all models, the optimality gap was consistently very close to 100% when the time limit of 3600 s was reached.

We can observe that OCT-H heavily struggled on these problems, producing meaningful models only on the smallest instances of the benchmark. In the most difficult problems, neither the warm start nor the global step was able to find, within the respective time limits, anything better than a trivial tree forwarding all the points to the same leaf, leading to a value of \(B_\text {Acc}\) of 0.5. On the other hand, even if unable to certify optimality, both OLCT and MARGOT were able to handle the datasets. The out-of-sample performance of the two approaches is close, with OLCT apparently having a slight advantage; yet, the size of the benchmark does not allow us to state that one model is better than the other.

We then proceeded to evaluate the models as the depth of the trees increased. Specifically, we conducted experiments for trees with a depth of 3. It is important to note that, in this scenario, the cost of the experiments rises dramatically. The addition of an extra layer of nodes necessitates tuning an additional hyperparameter. Consequently, the number of hyperparameter configurations to be considered in the cross-validation phase increases accordingly, rendering the entire process time-consuming. As a result, we only considered 5 datasets from Table 1 with a single train/test split.

Again, the goal of the experiment is to show that OLCTs are indeed employable with depth-3 trees, at least to the same extent as MARGOT and OCT-H are usable. The results of the experiment are reported in Table 3. We can observe that, in general, the loss-optimal classification tree framework remains manageable when used to train more complex tree classifiers with a depth of 3. In fact, the produced models demonstrate generalization capabilities, underlining the effectiveness of the procedure. It is important to note that the size of the benchmark considered is too small to draw definitive conclusions in this regard. However, the performance of OLCT models appears to be at least comparable to that of MARGOT and OCT-H, both in terms of accuracy and ease of training.

6 Conclusions

In this work, we proposed a general framework for loss-optimal classification trees, showing how different losses can be handled through a proper definition of the slack variables. We reviewed the recent state-of-the-art MIP methods for the modeling of the learning problem and we encapsulated both the misclassification loss strategy proposed by Bertsimas and Dunn (2017) and the hinge-loss approach (D’Onofrio et al. 2024) in our framework.

Moreover, we provided a new formulation which employs a piece-wise linear surrogate of the log loss for the induction of logistic multivariate classification trees. We showed how logistic splits with a standard LASSO regularization can be used to construct sparser and more interpretable trees with better generalization performances, with a reasonable computational cost. The trade-off between out-of-sample accuracy, interpretability and running time attained by our proposed approach thus appears to be optimal among the models considered in our numerical experiments.

Our work opens several directions for future contributions. Possible extensions may take into account, for example, the possibility of carrying over both the general framework and the logistic approach to decision diagrams (Florio et al. 2023) or to ensemble methods. Moreover, future research shall focus on strategies to employ OLCT-like models in the multiclass setting, i.e., using multinomial regression models at each node. In the case of sparse multinomial regression, this extension was carried out, for example, by Kamiya et al. (2019) with an outer approximation algorithm that exploits a dual formulation. This approach, however, is not straightforwardly applicable within a full tree structure.

Finally, some approaches have been proposed very recently to enhance the scalability of MILP approaches to train classification trees (Alès et al. 2024; Patel et al. 2024). The employment of strategies of this kind within the loss-optimal CTs framework might be considered in future work.

Data availability

The datasets analyzed in the present study are available in the UCI repository https://archive.ics.uci.edu/ml/index.php and at the webpage https://www.csie.ntu.edu.tw/~cjlin/libsvmtools/datasets/.

Code Availability

All the code developed for the experimental part of this paper is publicly available at https://github.com/tom1092/Optimal-Logistic-Classification-Trees

Notes

Datasets are available at https://www.csie.ntu.edu.tw/~cjlin/libsvmtools/datasets/.

References

Aghaei S, Gómez A, Vayanos P (2021) Strong optimal classification trees. arXiv preprint arXiv:2103.15965

Alès Z, Huré V, Lambert A (2024) New optimization models for optimal classification trees. Comput Oper Res 164:106515

Bach F, Jenatton R, Mairal J, Obozinski G et al (2012) Optimization with sparsity-inducing penalties. Found Trends Machine Learn 4(1):1–106

Bennett KP (1992) Decision tree construction via linear programming. Technical report, University of Wisconsin-Madison Department of Computer Sciences

Bennett KP, Blue J (1998) A support vector machine approach to decision trees. In: 1998 IEEE International Joint Conference on neural networks proceedings. IEEE World Congress on computational intelligence (Cat. No. 98CH36227), vol. 3, pp. 2396–2401. IEEE

Bennett KP, Mangasarian OL (1992) Robust linear programming discrimination of two linearly inseparable sets. Optimiz Methods Softw 1(1):23–34

Bennett KP, Mangasarian OL (1994) Multicategory discrimination via linear programming. Optimiz Methods Softw 3(1–3):27–39

Bertsimas D, Dunn J (2017) Optimal classification trees. Mach Learn 106(7):1039–1082

Bixby RE (2012) A brief history of linear and mixed-integer programming computation. Doc Math 2012:107–121

Blanquero R, Carrizosa E, Molero-Río C, Romero Morales D (2020) Sparsity in optimal randomized classification trees. Eur J Oper Res 284(1):255–272

Blanquero R, Carrizosa E, Molero-Río C, Romero Morales D (2021) Optimal randomized classification trees. Comput Oper Res 132:105281

Blanquero R, Carrizosa E, Molero-Río C, Romero Morales D (2022) On sparse optimal regression trees. Eur J Oper Res 299(3):1045–1054

Bohanec M, Bratko I (1994) Trading accuracy for simplicity in decision trees. Mach Learn 15:223–250

Breiman L (2001) Random forests. Mach Learn 45(1):5–32

Breiman L, Friedman JH, Olshen RA, Stone CJ (1984) Classification and regression trees. Chapman & Hall/CRC, Boca Raton

Brodley CE, Utgoff PE (1995) Multivariate decision trees. Mach Learn 19(1):45–77

Carreira-Perpiñán MA, Tavallali P (2018) Alternating optimization of decision trees, with application to learning sparse oblique trees. Adv Neural Inform Process Syst 31:1211–1221

Carrizosa E, Molero-Río C, Romero Morales D (2021) Mathematical optimization in classification and regression trees. TOP 29(1):5–33

Chan K-Y, Loh W-Y (2004) LOTUS: an algorithm for building accurate and comprehensible logistic regression trees. J Comput Graph Stat 13(4):826–852

Chen T, Guestrin C (2016) XGBoost: a scalable tree boosting system. In: Proceedings of the 22nd ACM SIGKDD International Conference on knowledge discovery and data mining, pp 785–794

Cortes C, Vapnik V (1995) Support-vector networks. Mach Learn 20(3):273–297

De Mántaras RL (1991) A distance-based attribute selection measure for decision tree induction. Mach Learn 6(1):81–92

Dolan ED, Moré JJ (2002) Benchmarking optimization software with performance profiles. Math Program 91:201–213

D’Onofrio F, Grani G, Monaci M, Palagi L (2024) Margin optimal classification trees. Comput Oper Res 161:106441

Dua D, Graff C (2017) UCI machine learning repository. http://archive.ics.uci.edu/ml. Accessed May 2023

Dunn JW (2018) Optimal trees for prediction and prescription. PhD thesis, Massachusetts Institute of Technology

Figueiredo MA, Nowak RD, Wright SJ (2007) Gradient projection for sparse reconstruction: application to compressed sensing and other inverse problems. IEEE J Sel Top Signal Process 1(4):586–597

Florio AM, Martins P, Schiffer M, Serra T, Vidal T (2023) Optimal decision diagrams for classification. In: Proceedings of the AAAI Conference on artificial intelligence 37:7577–7585

Friedman JH (2001) Greedy function approximation: a gradient boosting machine. Ann Stat 29(5):1189–1232

Friedman JH et al (1977) A recursive partitioning decision rule for nonparametric classification. IEEE Trans Comput 26(4):404–408

Günlük O, Kalagnanam J, Li M, Menickelly M, Scheinberg K (2021) Optimal decision trees for categorical data via integer programming. J Global Optim 81(1):233–260

Gurobi Optimization LLC (2022) Gurobi optimizer reference manual. https://www.gurobi.com. Accessed May 2023

Hastie T, Tibshirani R, Friedman JH (2009) The elements of statistical learning: data mining, inference, and prediction, vol 2. Springer, New York

Hu X, Rudin C, Seltzer M (2019) Optimal sparse decision trees. Adv Neural Inform Process Syst 32:7267–7275

John GH (1995) Robust linear discriminant trees. In: Pre-proceedings of the Fifth International Workshop on artificial intelligence and statistics, pp 285–291. PMLR

Jovanovic M, Radovanovic S, Vukicevic M, Van Poucke S, Delibasic B (2016) Building interpretable predictive models for pediatric hospital readmission using tree-lasso logistic regression. Artif Intell Med 72:12–21

Kamiya S, Miyashiro R, Takano Y (2019) Feature subset selection for the multinomial logit model via mixed-integer optimization. In: The 22nd International Conference on artificial intelligence and statistics, pp 1254–1263. PMLR

Kotsiantis SB (2013) Decision trees: a recent overview. Artif Intell Rev 39(4):261–283

Landwehr N, Hall M, Frank E (2005) Logistic model trees. Mach Learn 59:161–205

Hyafil L, Rivest RL (1976) Constructing optimal binary decision trees is NP-complete. Inf Process Lett 5(1):15–17

Lin J, Zhong C, Hu D, Rudin C, Seltzer M (2020) Generalized and scalable optimal sparse decision trees. In: International Conference on machine learning, pp 6150–6160. PMLR

Liu E, Hu T, Allen TT, Hermes C (2023) Optimal classification trees with leaf-branch and binary constraints applied to pipeline inspection. Available at SSRN 4360508

Loh W-Y, Vanichsetakul N (1988) Tree-structured classification via generalized discriminant analysis. J Am Stat Assoc 83(403):715–725

Murthy SK, Salzberg S (1995) Decision tree induction: How effective is the greedy heuristic? In: KDD, pp 222–227

Murthy SK, Kasif S, Salzberg S (1994) A system for induction of oblique decision trees. J Artif Intell Res 2:1–32

Norouzi M, Collins M, Johnson MA, Fleet DJ, Kohli P (2015) Efficient non-greedy optimization of decision trees. Adv Neural Inform Process Syst 28:1729–1737

Orsenigo C, Vercellis C (2003) Multivariate classification trees based on minimum features discrete support vector machines. IMA J Manag Math 14(3):221–234

Patel KK, Desaulniers G, Lodi A (2024) An improved column-generation-based matheuristic for learning classification trees. Comput Oper Res, p 106579

Pedregosa F, Varoquaux G, Gramfort A, Michel V, Thirion B, Grisel O, Blondel M, Prettenhofer P, Weiss R, Dubourg V, Vanderplas J, Passos A, Cournapeau D, Brucher M, Perrot M, Duchesnay E (2011) Scikit-learn: machine learning in Python. J Mach Learn Res 12:2825–2830

Quinlan JR (1986) Induction of decision trees. Mach Learn 1(1):81–106

Quinlan JR (1987) Simplifying decision trees. Int J Man Mach Stud 27(3):221–234

Ribeiro MT, Singh S, Guestrin C (2016) "Why should I trust you?" Explaining the predictions of any classifier. In: Proceedings of the 22nd ACM SIGKDD International Conference on knowledge discovery and data mining, pp 1135–1144

Rokach L, Maimon O (2005) Top-down induction of decision trees classifiers-a survey. IEEE Trans Syst Man Cybern Part C (Applications and Reviews) 35(4):476–487

Ross A, Lage I, Doshi-Velez F (2017) The neural lasso: local linear sparsity for interpretable explanations. In: Workshop on Transparent and Interpretable Machine Learning in Safety Critical Environments, 31st Conference on Neural Information Processing Systems, vol. 4

Rudin C (2019) Stop explaining black box machine learning models for high stakes decisions and use interpretable models instead. Nat Mach Intell 1(5):206–215

Sato T, Takano Y, Miyashiro R, Yoshise A (2016) Feature subset selection for logistic regression via mixed integer optimization. Comput Optim Appl 64:865–880

Song Y-Y, Ying L (2015) Decision tree methods: applications for classification and prediction. Shanghai Arch Psychiatry 27(2):130

Tibshirani R (1996) Regression shrinkage and selection via the lasso. J Roy Stat Soc Ser B (Methodol) 58(1):267–288

Tibshirani R, Hastie T (2007) Margin trees for high-dimensional classification. J Mach Learn Res 8(3):637–652

Verwer S, Zhang Y (2019) Learning optimal classification trees using a binary linear program formulation. In: Proceedings of the AAAI Conference on artificial intelligence 33:1625–1632

Acknowledgements

The authors are grateful to the Editor and the anonymous referees for the stimulating comments that led to substantial improvements to this manuscript.

Funding

Open access funding provided by Università degli Studi di Firenze within the CRUI-CARE Agreement.

Ethics declarations

Conflict of interest

The authors have no conflicts of interest to declare that are relevant to the content of this article.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Aldinucci, T., Lapucci, M. Loss-optimal classification trees: a generalized framework and the logistic case. TOP 32, 323–350 (2024). https://doi.org/10.1007/s11750-024-00674-y

DOI: https://doi.org/10.1007/s11750-024-00674-y