Abstract

Modern data collection in many data paradigms, including bioinformatics, often incorporates multiple traits derived from different data types (i.e., platforms). We call this data multi-block, multi-view, or multi-omics data. The emergent field of data integration develops and applies new methods for studying multi-block data and identifying how different data types relate and differ. One major frontier in contemporary data integration research is methodology that can identify partially shared structure between sub-collections of data types. This work presents a new approach: Data Integration Via Analysis of Subspaces (DIVAS). DIVAS combines new insights in angular subspace perturbation theory with recent developments in matrix signal processing and convex–concave optimization into one algorithm for exploring partially shared structure. Based on principal angles between subspaces, DIVAS provides built-in inference on the results of the analysis, and is effective even in high-dimension-low-sample-size (HDLSS) situations.

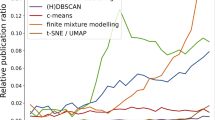

Similar content being viewed by others

References

Akaho S (2007) A kernel method for canonical correlation analysis. arXiv preprint arXiv:cs/0609071v2

Blum A, Mitchell T (1998) Combining labeled and unlabeled data with co-training. In: COLT: Proceedings of the workshop on computational learning theory

Cai J, Huang X (2017) Robust kernel canonical correlation analysis with applications to information retrieval. Eng Appl Artif Intell 64:33–42

Chikuse Y (2012) Statistics on special manifolds. Lecture notes in statistics. Springer, New York

Farquhar JD, Hardoon DR, Meng H, Shawe-Taylor J (2005) Two-view learning: SVM-2K, theory and practice. In: Advances in neural information processing systems

Feng Q, Jiang M, Hannig J, Marron JS (2018) Angle-based joint and individual variation explained. J Multivar Anal 166:241–265

Gavish M, Donoho DL (2014) The optimal hard threshold for singular values is 4/\(\sqrt{3}\). IEEE Trans Inf Theory 60:5040–5053

Gavish M, Donoho DL (2017) Optimal shrinkage of singular values. IEEE Trans Inf Theory 63:2137–2152

Gaynanova I, Li G (2019) Structural learning and integrative decomposition of multi-view data. Biometrics 75(4):1121–1132

Grant M, Boyd S (2008) Graph implementations for nonsmooth convex programs. In: Blondel V, Boyd S, Kimura H (eds) Recent advances in learning and control. Lecture notes in control and information sciences. Springer-Verlag Limited, London, pp 95–110

Grant M, Boyd S (2014) CVX: Matlab software for disciplined convex programming, version 2.1. http://cvxr.com/cvx

Horst P (1961) Relations among m sets of measures. Psychometrika 26:129–149

Hotelling H (1936) Relations between two sets of variates. Biometrika 28(3–4):321–377

Ismailova D, Lu W-S (2016) Penalty convex-concave procedure for source localization problem. In: 2016 IEEE Canadian conference on electrical and computer engineering (CCECE)

Jiang M (2018) Statistical Learning of Integrative Analysis. PhD thesis, University of North Carolina at Chapel Hill

Kalben BB (2000) Why men die younger. North Am Actuar J 4(4):83–111

Kettenring J (1971) Canonical analysis of several sets of variables. Biometrika 58:433–451

Kish L (1965) Survey sampling. John Wiley & Sons, Inc, New York

Li Y, Yang M, Zhang Z (2019) A survey of multi-view representation learning. IEEE Trans Knowl Data Eng 31(10):1863–1883

Lock E, Hoadley K, Marron J, Nobel A (2013) Joint and individual variation explained (JIVE) for integrated analysis of multiple data types. Ann Appl Stat 7:523

Lock EF, Park JY, Hoadley KA (2020) Bidimensional linked matrix factorization for pan-omics pan-cancer analysis. Ann Appl Stat 16:193

Marchenko VA, Pastur LA (1967) Distribution of eigenvalues for some sets of random matrices. Math USSR-Sbornik 114:507–536

Marron JS, Alonso AM (2014) Overview of object oriented data analysis. Biom J 56(5):732–753

Marron J, Dryden I (2021) Object oriented data analysis. Monographs on statistics and applied probability. CRC Press, Chapman & Hall/CRC

Miao J, Ben-Israel A (1992) On principal angles between subspaces in \(\mathbb {R}^n\). Linear Algebra Appl 171:81–98

Cancer Genome Atlas Network (2012) Comprehensive molecular portraits of human breast tumours. Nature 490(7418):61

Nielsen A (2002) Multiset canonical correlations analysis and multispectral, truly multitemporal remote sensing data. IEEE Trans Image Process 11(3):293–305

Patton GC, Coffey C, Sawyer SM, Viner RM, Haller DM, Bose K, Vos T, Ferguson J, Mathers CD (2009) Global patterns of mortality in young people: a systematic analysis of population health data. Lancet 374(9693):881–892

Prothero JB (2021) Data Integration Via Analysis of Subspaces. PhD thesis, University of North Carolina at Chapel Hill

Prothero J, Hannig J, Marron J (2023) New perspectives on centering. New Engl J Stat Data Sci 1(2):216–236

Shabalin A, Nobel A (2013) Reconstruction of a low-rank matrix in the presence of Gaussian noise. J Multivar Anal 118:67–76

Shu H, Qu Z (2021) CDPA: common and distinctive pattern analysis between high-dimensional datasets. Electron J Stat 16:2475

Sun S (2013) A survey of multi-view machine learning. Neural Comput Appl 23:2031–2038

Tran-Dinh Q, Diehl M (2009) Sequential Convex Programming Methods for Solving Nonlinear Optimization Problems with DC constraints. Tech. report, ESAT/SCD and OPTEC, KU Leuven, Belgium

Wedin P-A (1972) Perturbation bounds in connection with singular value decomposition. BIT Numer Math 12:99–111

White M, Yu Y, Zhang X, Schuurmans D (2012) Convex multi-view subspace learning. In: 25th International conference on neural information processing systems

Wilmoth JR, Shkolnikov V (2000–2021) Human mortality database. University of California, Berkeley (USA), and Max Planck Institute for Demographic Research (Germany)

Xu C, Tao D, Xu C (2013) A survey on multi-view learning. arXiv preprint arXiv:1304.5634

Yi S, Wong RKW, Gaynanova I (2022) Hierarchical nuclear norm penalization for multi-view data. arXiv preprint arXiv:2206.12891

Yuan D, Gaynanova I (2021) Double-matched matrix decomposition for multi-view data. J Comput Graph Stat 31:1114–1126

Zhao S, Gao C, Mukherjee S, Engelhardt BE (2016) Bayesian group factor analysis with structured sparsity. J Mach Learn Res 17(196):1–47

Zhua P, Knyazev AV (2012) Principal angles between subspaces and their tangents. Tech. report, Mitsubishi Electric Research Laboratories

Acknowledgements

Jan Hannig’s research was supported in part by the National Science Foundation under Grant Nos. DMS-1916115, 2113404, and 2210337. J.S. Marron’s research was partially supported by the National Science Foundation Grant No. DMS-2113404.


Appendices

Appendix A: Review of random matrix theory

Our chosen signal extraction procedure uses random matrix theory ideas. The classical result from Marchenko and Pastur (1967) on the distribution of the eigenvalues of random matrices underpins all of these ideas; we restate that result below.

Let \(\textbf{E}\) be a \(d\times n\) random matrix whose entries are independent and identically distributed (i.i.d.) with mean 0, finite variance \(\sigma ^2\), and finite fourth moment. Form the \(d\times d\) covariance estimator \({\varvec{\Sigma }}_n = \frac{1}{n}\textbf{E}\textbf{E}^{\top }\) and let \(\lambda _1,\dots ,\lambda _d\) denote its eigenvalues. Since these eigenvalues are themselves random variables, we summarize them through the empirical spectral measure \(\mu _d(A) = \frac{1}{d}\#\{\lambda _j\in A\}\), \(A\subset \mathbb {R}\). Finally, for a given condition K, let the indicator function \({\textbf{1}}_{\{K\}}\) equal 1 when K is satisfied and 0 otherwise.

Theorem 3

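[Marchenko and Pastur 1967] If \(d,n\rightarrow \infty \) such that \(\frac{d}{n}\rightarrow \beta \in (0,+\infty )\), then \(\mu _d\) converges weakly to the measure whose density is

$$\mu (\lambda ) = \left( 1-\frac{1}{\beta }\right) {\textbf{1}}_{\{\beta >1\}}\,\delta _0(\lambda ) + \frac{h(\lambda )}{2\pi \sigma ^2\beta \lambda }\,{\textbf{1}}_{\{\sigma ^2(1-\sqrt{\beta })^2\le \lambda \le \sigma ^2(1+\sqrt{\beta })^2\}},$$

where \(\delta _0\) denotes a unit point mass at zero and the function \(h(\lambda )\) is defined as

$$h(\lambda ) = \sqrt{\left( \sigma ^2(1+\sqrt{\beta })^2-\lambda \right) \left( \lambda -\sigma ^2(1-\sqrt{\beta })^2\right) }.$$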

If \(d < n\), then \(\beta < 1\) and \({\varvec{\Sigma }}_n\) has rank d. Since \({\varvec{\Sigma }}_n\) is then full rank, all of its eigenvalues are nonzero, and asymptotically they fall between \(\sigma ^2(1-\sqrt{\beta })^2\) and \(\sigma ^2(1+\sqrt{\beta })^2\). Alternatively, if \(d > n\), then \(\beta > 1\) and \({\varvec{\Sigma }}_n\) has rank n. Since \({\varvec{\Sigma }}_n\) is then not full rank, the eigenvalues \(\lambda _{n+1},\dots ,\lambda _d\) are all 0. When \(\beta > 1\), the Marchenko–Pastur distribution is therefore a mixture of a point mass of \(1 - \frac{1}{\beta }\) at zero and a continuous portion supported between \(\sigma ^2(1-\sqrt{\beta })^2\) and \(\sigma ^2(1+\sqrt{\beta })^2\) with total mass \(\frac{1}{\beta }\).
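As a numerical sanity check of Theorem 3, the following minimal Python sketch (standard library only) evaluates the continuous part of the Marchenko–Pastur density and integrates it with a midpoint rule. The continuous mass should be 1 when \(\beta < 1\) and \(\frac{1}{\beta }\) when \(\beta > 1\), the remaining \(1-\frac{1}{\beta }\) being the point mass at zero. The function names are illustrative and are not part of any DIVAS software.

```python
import math


def mp_support(beta, sigma2=1.0):
    """Edges of the continuous part of the Marchenko-Pastur law."""
    root = math.sqrt(beta)
    return sigma2 * (1 - root) ** 2, sigma2 * (1 + root) ** 2


def mp_density(lam, beta, sigma2=1.0):
    """Continuous part h(lambda) / (2 pi sigma^2 beta lambda) of the density.

    The point mass 1 - 1/beta at zero (present only when beta > 1) is not
    included here.
    """
    a, b = mp_support(beta, sigma2)
    if lam <= a or lam >= b:
        return 0.0
    h = math.sqrt((b - lam) * (lam - a))
    return h / (2 * math.pi * sigma2 * beta * lam)


def mp_continuous_mass(beta, sigma2=1.0, n_grid=100_000):
    """Midpoint-rule integral of the continuous part over its support."""
    a, b = mp_support(beta, sigma2)
    step = (b - a) / n_grid
    return step * sum(
        mp_density(a + (i + 0.5) * step, beta, sigma2) for i in range(n_grid)
    )


# Compare the numerical mass with its theoretical value min(1, 1/beta).
for beta in (0.1, 4.0):
    print(beta, mp_continuous_mass(beta), min(1.0, 1.0 / beta))
```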

Appendix B: Review of principal angle analysis

The following is based on Zhua and Knyazev (2012) and Miao and Ben-Israel (1992). Principal angle analysis characterizes the relative position of two subspaces \(\mathcal {X}\) and \(\mathcal {Y}\) of Euclidean space through canonical angles computed by a singular value decomposition (SVD). Let \(\textbf{W}_{\mathcal {X}}\) and \(\textbf{W}_{\mathcal {Y}}\) be orthonormal basis matrices for \(\mathcal {X}\) and \(\mathcal {Y}\), respectively. The SVD of \(\textbf{W}_{\mathcal {X}}^{\top }\textbf{W}_{\mathcal {Y}}\) yields both the principal angles between \(\mathcal {X}\) and \(\mathcal {Y}\) and the corresponding principal vectors. Write this SVD as \(\textbf{W}_{\mathcal {X}}^{\top }\textbf{W}_{\mathcal {Y}} = \textbf{U}\textbf{D}\textbf{V}^{\top }\), where \(\textbf{U}\) and \(\textbf{V}\) have orthonormal columns, \(\textbf{D}\) is a diagonal matrix of singular values in decreasing order, and the columns of \(\textbf{W}_{\mathcal {X}}\textbf{U}\) and \(\textbf{W}_{\mathcal {Y}}\textbf{V}\) are the principal vectors of \(\mathcal {X}\) and \(\mathcal {Y}\), respectively. The inverse cosines of the diagonal entries of \(\textbf{D}\) are the principal angles between \(\mathcal {X}\) and \(\mathcal {Y}\): the jth principal angle is the angle between the jth pair of principal vectors.

This perspective also shows what principal angle analysis returns when the dimensions of \(\mathcal {X}\) and \(\mathcal {Y}\) differ. Let these dimensions be p and q, respectively, with \(p < q\). The matrix \(\textbf{W}_{\mathcal {X}}^{\top }\textbf{W}_{\mathcal {Y}}\) is then \(p\times q\) and has at most p nonzero singular values, so the remaining \(q-p\) singular values are taken to be zero. Because the inverse cosine of zero is \(90^{\circ }\), the principal angles \(\theta _{p+1},\dots ,\theta _{q}\) are all \(90^{\circ }\).

Principal angle analysis is also orthogonally invariant: the principal angles between \(\mathcal {X}\) and \(\mathcal {Y}\) are identical to those between the reoriented subspaces \(\textbf{O}\mathcal {X}\) and \(\textbf{O}\mathcal {Y}\), where \(\textbf{O}\) is an orthogonal matrix and \(\textbf{O}\mathcal {X} = \{\textbf{O}\textbf{x}|\textbf{x}\in \mathcal {X}\}\). To see this, note that \(\textbf{O}\textbf{W}_{\mathcal {X}}\) and \(\textbf{O}\textbf{W}_{\mathcal {Y}}\) are orthonormal bases for \(\textbf{O}\mathcal {X}\) and \(\textbf{O}\mathcal {Y}\), so the principal angles between the rotated subspaces come from the SVD of \(\textbf{W}_{\mathcal {X}}^{\top } \textbf{O}^{\top } \textbf{O}\textbf{W}_{\mathcal {Y}}\), which equals \(\textbf{W}_{\mathcal {X}}^{\top }\textbf{W}_{\mathcal {Y}}\) because \(\textbf{O}^{\top }\textbf{O} = \textbf{I}\).
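The SVD recipe above, including the \(90^{\circ }\) padding convention for \(p<q\) and the orthogonal invariance, can be checked with a short standard-library Python sketch. To stay self-contained, it computes the singular values of \(\textbf{W}_{\mathcal {X}}^{\top }\textbf{W}_{\mathcal {Y}}\) as square roots of the eigenvalues of \(\textbf{W}_{\mathcal {X}}^{\top }\textbf{W}_{\mathcal {Y}}\textbf{W}_{\mathcal {Y}}^{\top }\textbf{W}_{\mathcal {X}}\), using a cyclic Jacobi eigenvalue routine in place of a library SVD. All names are hypothetical, and the bases are assumed orthonormal with \(p\le q\).

```python
import math


def dot(x, y):
    return sum(a * b for a, b in zip(x, y))


def sym_eigvals(S, sweeps=50, tol=1e-24):
    """Eigenvalues (descending) of a small symmetric matrix via cyclic Jacobi rotations."""
    A = [list(row) for row in S]
    n = len(A)
    for _ in range(sweeps):
        off = sum(A[i][j] ** 2 for i in range(n) for j in range(n) if i != j)
        if off < tol:
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                if A[p][q] == 0.0:
                    continue
                # Rotation that zeroes A[p][q]; t is the tangent of its angle.
                theta = (A[q][q] - A[p][p]) / (2.0 * A[p][q])
                t = math.copysign(1.0, theta) / (abs(theta) + math.sqrt(theta * theta + 1.0))
                c = 1.0 / math.sqrt(t * t + 1.0)
                s = t * c
                for k in range(n):  # A <- A J
                    akp, akq = A[k][p], A[k][q]
                    A[k][p], A[k][q] = c * akp - s * akq, s * akp + c * akq
                for k in range(n):  # A <- J^T A
                    apk, aqk = A[p][k], A[q][k]
                    A[p][k], A[q][k] = c * apk - s * aqk, s * apk + c * aqk
    return sorted((A[i][i] for i in range(n)), reverse=True)


def principal_angles(Wx, Wy):
    """Principal angles in degrees (ascending) between span(Wx) and span(Wy).

    Wx, Wy: lists of orthonormal basis vectors with len(Wx) <= len(Wy).
    The q - p missing singular values are padded with zeros (90 degrees).
    """
    p, q = len(Wx), len(Wy)
    if p > q:
        raise ValueError("pass the lower-dimensional subspace first")
    M = [[dot(x, y) for y in Wy] for x in Wx]           # W_X^T W_Y, p x q
    MMt = [[dot(M[i], M[j]) for j in range(p)] for i in range(p)]
    cosines = [min(1.0, math.sqrt(max(0.0, ev))) for ev in sym_eigvals(MMt)]
    cosines += [0.0] * (q - p)
    return [math.degrees(math.acos(c)) for c in cosines]


def rotate(v, i, j, angle):
    """Givens rotation of v in the (i, j) coordinate plane (an orthogonal map)."""
    w = list(v)
    c, s = math.cos(angle), math.sin(angle)
    w[i], w[j] = c * v[i] - s * v[j], s * v[i] + c * v[j]
    return w


def O(v):
    """A fixed orthogonal map: the composition of two Givens rotations."""
    return rotate(rotate(v, 0, 3, 0.7), 1, 2, -1.1)


c30, s30 = math.cos(math.radians(30)), math.sin(math.radians(30))
X = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0]]
Y = [[1.0, 0.0, 0.0, 0.0], [0.0, c30, s30, 0.0]]
print(principal_angles(X, Y))                                  # approximately [0, 30]
print(principal_angles(X[:1], Y))                              # approximately [0, 90]
print(principal_angles([O(v) for v in X], [O(v) for v in Y]))  # approximately [0, 30]
```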

Appendix C: Noise matrix estimation

The residual \({\hat{\textbf{E}}} = \textbf{X}- {\hat{\textbf{A}}}\) is a poor estimate of the non-signal component of the data, especially in the case of a non-square matrix. Heuristically, this is caused by the residual lying entirely in the subspace spanned by the data. We investigate the causes of this phenomenon and propose a solution.

For this investigation, our synthetic data will be a \(5000\times 500\) matrix \(\textbf{X}=\textbf{A}+\textbf{E}\). The signal matrix \(\textbf{A}=\textbf{U}\textbf{D}\textbf{V}^{\top }\) has rank 50, with equally spaced singular values from 0.1 to 5. \(\textbf{E}\) is a full-rank matrix of i.i.d. Gaussian entries with variance \(\frac{\sigma ^2}{5000}\). This scaling of the noise variance by the number of traits is common in the matrix signal processing literature (Gavish and Donoho 2014, 2017). It gives columns with expected squared norm \(\sigma ^2\) and sets the noise at a level commensurate with the magnitude of the signal. With \(\sigma =1\), we expect most of the signal singular values to be easily recoverable, while the smallest are indistinguishable from the noise. We perform signal extraction as described in Sect. 2.1.1 on this matrix and then examine estimators of \(\textbf{E}\) given \({\hat{\textbf{A}}}\).
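The noise scaling can be checked directly: with entries of variance \(\frac{\sigma ^2}{d}\), each column's squared norm is a sum of d terms with mean \(\frac{\sigma ^2}{d}\), so its expectation is \(\sigma ^2\). The minimal standard-library sketch below (hypothetical function name) draws only 100 of the 500 noise columns to stay fast and records the 50 equally spaced signal singular values; it does not construct \(\textbf{A}\) or perform the signal extraction of Sect. 2.1.1.

```python
import random


def noise_column_sq_norms(d=5000, n_cols=100, sigma=1.0, seed=0):
    """Squared norms of n_cols noise columns with i.i.d. N(0, sigma^2 / d) entries."""
    rng = random.Random(seed)
    sd = sigma / d ** 0.5  # standard deviation sigma / sqrt(d)
    return [sum(rng.gauss(0.0, sd) ** 2 for _ in range(d)) for _ in range(n_cols)]


# Signal singular values of the synthetic example: 50 equally spaced values from 0.1 to 5.
signal_sv = [0.1 + i * (5.0 - 0.1) / 49 for i in range(50)]

sq_norms = noise_column_sq_norms()
print(sum(sq_norms) / len(sq_norms))  # should be close to sigma^2 = 1
```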

One way to check the efficacy of an estimator \({\hat{\textbf{E}}}\) for \(\textbf{E}\) is to see how well its eigenvalues align with the Marchenko–Pastur distribution (see Appendix A). We can compare the observed values to theoretical quantiles using a quantile–quantile (Q–Q) plot. On the horizontal axis we plot the sorted observed eigenvalues of a noise matrix estimate \({\hat{\textbf{E}}}\), and on the vertical axis we plot evenly spaced quantiles of the Marchenko–Pastur distribution with parameter \(\beta =\frac{d\wedge n}{d\vee n}\), where d and n are the row and column dimensions of the matrix, respectively. If the plotted points on a Q–Q plot roughly follow the \(45^{\circ }\) line, the usual conclusion is that the observed data align well with the theoretical distribution. To get a sense of how much variability to expect about the \(45^{\circ }\) line, we generate \(M=100\) matrices with i.i.d. Gaussian entries and plot their eigenvalues as green lines underneath the magenta Q–Q points. These traces form a visually striking region of acceptable variability, which can be used to judge the goodness of fit at a glance.
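A minimal sketch of the theoretical side of this Q–Q plot follows, assuming \(0<\beta <1\) (as holds for \(\beta =\frac{d\wedge n}{d\vee n}\) when \(d\ne n\)), so there is no point mass at zero. It tabulates the Marchenko–Pastur CDF numerically and inverts it at the probabilities \((i+0.5)/m\); this choice of evenly spaced plotting positions is our assumption, and the function names are hypothetical. Plotting and the \(M=100\) simulated envelope traces are omitted.

```python
import bisect
import math


def mp_quantiles(m, beta, sigma2=1.0, n_grid=20_000):
    """Approximate Marchenko-Pastur quantiles (0 < beta < 1) at the m evenly
    spaced probabilities (i + 0.5) / m, i = 0, ..., m - 1.

    The CDF is tabulated on a grid with the midpoint rule and then inverted
    by linear interpolation.
    """
    if not 0 < beta < 1:
        raise ValueError("this sketch assumes 0 < beta < 1 (no point mass at zero)")
    a = sigma2 * (1 - math.sqrt(beta)) ** 2
    b = sigma2 * (1 + math.sqrt(beta)) ** 2
    step = (b - a) / n_grid
    grid, cdf, total = [a], [0.0], 0.0
    for i in range(n_grid):
        lam = a + (i + 0.5) * step
        total += step * math.sqrt((b - lam) * (lam - a)) / (2 * math.pi * sigma2 * beta * lam)
        grid.append(a + (i + 1) * step)
        cdf.append(total)
    cdf = [c / total for c in cdf]  # renormalize away the small quadrature error
    quantiles = []
    for i in range(m):
        prob = (i + 0.5) / m
        k = min(max(bisect.bisect_left(cdf, prob), 1), n_grid)
        w = (prob - cdf[k - 1]) / (cdf[k] - cdf[k - 1])
        quantiles.append(grid[k - 1] + w * (grid[k] - grid[k - 1]))
    return quantiles


def qq_points(eigenvalues, d, n, sigma2=1.0):
    """(sorted observed eigenvalue, theoretical quantile) pairs, beta = min(d, n) / max(d, n)."""
    beta = min(d, n) / max(d, n)
    observed = sorted(eigenvalues)
    return list(zip(observed, mp_quantiles(len(observed), beta, sigma2)))
```

Each pair gives one point of the Q–Q plot, with the observed eigenvalue on the horizontal axis and the theoretical quantile on the vertical axis.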

Figure 15 shows such a Q–Q plot for the eigenvalues of the naïve noise estimate \({\hat{\textbf{E}}}=\textbf{X}- {\hat{\textbf{A}}}\) for the synthetic data matrix. The naïve estimated non-signal component tends to display perhaps unexpectedly low energy in directions associated with the estimated signal subspace. This phenomenon leads to Q–Q plots that are challenging to interpret. For this matrix, the estimated signal rank is 44, and the bottom 44 eigenvalues of the estimated noise matrix completely deviate from the theoretical Marchenko–Pastur distribution. Importantly, this phenomenon (explained in detail below) occurs regardless of the chosen estimate for \(\sigma \). The aberration in this graphic demonstrates the ineffectiveness of the naïve estimate. The rotational bootstrap procedure (see Sect. 2.1.3) central to DIVAS depends on effective estimation of the underlying noise matrix \(\textbf{E}\). This is accomplished via a correction to a portion of the singular values of \({\hat{\textbf{E}}}\).

Fig. 15 Q–Q plot for the eigenvalues of the naïve noise matrix estimate. The first \({\hat{r}}\) eigenvalues fall entirely outside the range determined by Theorem 3, signaling that the naïve noise matrix estimate is flawed. These eigenvalues are scaled using the original noise level estimate to retain some interpretability. Scaling using the apparent noise level in the estimated error matrix produces an even worse fit to the Marchenko–Pastur distribution because the apparent noise level is too low.

To explain this behavior and motivate our proposed correction, we consider our data model (2.1) in the special case where the signal is rank one and the signal, noise, and data are all vectors in \(\mathbb {R}^2\), as illustrated in Fig. 16. The signal (green) and noise (red) vectors lie in distinct one-dimensional subspaces, and their sum forms the data vector (blue). When we form our estimate of the signal \({\hat{\textbf{A}}}\) (green-blue dashed), our shrinkage procedure gives a good estimate of the signal magnitude. However, directional information about \(\textbf{A}\) is hard to recover, because the estimate \({\hat{\textbf{A}}}\) lies in the same subspace as the data. When we then subtract \({\hat{\textbf{A}}}\) from the data \(\textbf{X}\) to form the naïve estimate \({\hat{\textbf{E}}}\) (red-blue dashed), the subtraction occurs entirely within the data subspace, so the angle between the original signal and noise vectors is not accounted for at all. This leads to an underestimation of noise energy: the length of the estimated noise within the data subspace (red-blue dashed) is distinctly shorter than the length of the original noise vector (red). This length discrepancy is the one-dimensional analog of the phenomenon shown in Fig. 15, where many of the smallest eigenvalues are even smaller than expected.
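The rank-one picture in \(\mathbb {R}^2\) can be made concrete with a few lines of Python. Assuming, as in the text, that the signal magnitude is estimated exactly, the naïve residual has norm \(|\Vert \textbf{X}\Vert - \Vert \textbf{A}\Vert |\), which by the reverse triangle inequality is at most \(\Vert \textbf{E}\Vert \), with equality only when the noise is parallel to the signal. The function name and the vectors below are illustrative choices.

```python
import math


def naive_noise_norm(signal, noise):
    """Rank-one R^2 toy: returns (||X - A_hat||, ||E||) for X = A + E.

    A_hat keeps the true signal magnitude ||A|| but points along the data
    vector X (a rotation of A, not a projection), so the naive residual
    X - A_hat also lies along X and has norm | ||X|| - ||A|| |.
    """
    x = [a + e for a, e in zip(signal, noise)]
    norm_x, norm_a = math.hypot(*x), math.hypot(*signal)
    a_hat = [norm_a * xi / norm_x for xi in x]
    e_hat = [xi - ai for xi, ai in zip(x, a_hat)]
    return math.hypot(*e_hat), math.hypot(*noise)


est, true = naive_noise_norm([3.0, 0.0], [0.0, 1.0])
print(est, true)  # about 0.162 versus 1.0, since sqrt(10) - 3 < 1
```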

Fig. 16 Example of noise energy underestimation for a rank-one signal subspace in \(\mathbb {R}^2\). Left: Signal space (green), noise space (red), and data space (blue). The data vector is formed by adding the signal and noise vectors tip to tail. Right: Estimating \({\hat{\textbf{A}}}\) (green-blue dashed) and \({\hat{\textbf{E}}}=\textbf{X}-{\hat{\textbf{A}}}\) (red-blue dashed). When we remove energy equal to that of the signal space from the data space, the leftover energy is noticeably smaller than the true noise energy. Note that the black arc indicates a rotation rather than a projection, so the green-blue dashed line has the same length as the green line.

Several potential corrections for this effect are proposed in Chapter 4 of Prothero (2021); here we present the correction used in DIVAS. Consider \(\textbf{X}\) as a sum of rank-one approximations in the manner of (2.3): \(\textbf{X}= \sum _{i=1}^{d\wedge n} {\bar{\nu }}_i {\bar{\textbf{u}}}_i {\bar{\textbf{v}}}_i^{\top }\). Once we estimate the signal singular values, we can split the energy in each singular vector direction into signal energy \({\hat{\nu }}_i\) and non-signal energy \({\bar{\nu }}_i-{\hat{\nu }}_i\):

$$\textbf{X}= \sum _{i=1}^{d\wedge n} {\hat{\nu }}_i {\bar{\textbf{u}}}_i {\bar{\textbf{v}}}_i^{\top } + \sum _{i=1}^{d\wedge n} \left( {\bar{\nu }}_i-{\hat{\nu }}_i\right) {\bar{\textbf{u}}}_i {\bar{\textbf{v}}}_i^{\top } = {\hat{\textbf{A}}} + {\hat{\textbf{E}}},$$

where \({\hat{\nu }}_i = 0\) for \(i > {\hat{r}}\).

The Gavish–Donoho shrinkage function (2.4) gives good estimates \({\hat{\nu }}_{1:{\hat{r}}}\) of the first \({\hat{r}}\) signal singular values while classifying the remaining \({\bar{\textbf{u}}}_i {\bar{\textbf{v}}}_i^{\top }\) directions and their singular values as noise. However, by subtracting \({\hat{\textbf{A}}} = \sum _{i=1}^{{\hat{r}}} {\hat{\nu }}_i {\bar{\textbf{u}}}_i {\bar{\textbf{v}}}_i^{\top }\) from \(\textbf{X}\), we overestimate the influence of the signal within the data subspace: the terms of \({\hat{\textbf{E}}}\) with coefficients \({\bar{\nu }}_i - {\hat{\nu }}_i\), \(i\le {\hat{r}}\), have inordinately low energy in the directions associated with the estimated signal.

The DIVAS solution to this energy deficiency is to replace each deficient singular value in \({\hat{\textbf{E}}}\) with a Marchenko–Pastur random variate. Let \(MP_q(\beta )\) be the qth percentile of the Marchenko–Pastur distribution with parameter \(\beta \), let \(U_{1:{\hat{r}}}\) be \({\hat{r}}\) i.i.d. standard uniform random variables, and let \({\hat{\sigma }}^2\) be an estimate of the noise variance. We form the imputed noise matrix estimate \({\hat{\textbf{E}}}_{impute}\) as follows:

Figure 17 shows the original Q–Q plot from Fig. 15 with the eigenvalues of \({\hat{\textbf{E}}}_{impute}\) for the synthetic data matrix also included in black. After imputing the deficient singular values, the eigenvalues of the reconstructed noise matrix estimate follow the expected Marchenko–Pastur distribution quite closely; nearly all of them fall within the green acceptable variability envelope.
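The imputation step can be sketched as follows. This is not the DIVAS code: it assumes the noise scaling of this appendix (entries with variance \({\hat{\sigma }}^2/d\), with d the larger dimension), under which the squared singular values of pure noise follow the Marchenko–Pastur law with parameter \(\beta =\frac{d\wedge n}{d\vee n}\) and variance \({\hat{\sigma }}^2\). Each deficient singular value is therefore replaced by the square root of a Marchenko–Pastur variate \(MP_{U_i}(\beta )\), obtained by inverse-CDF sampling with \(U_i\) uniform. The sketch returns only the corrected singular values; rebuilding \({\hat{\textbf{E}}}_{impute}\) would recombine them with the singular vectors \({\bar{\textbf{u}}}_i, {\bar{\textbf{v}}}_i\). All names are hypothetical.

```python
import bisect
import math
import random


def mp_quantile_function(beta, sigma2=1.0, n_grid=20_000):
    """Return q -> approximate q-quantile of the Marchenko-Pastur law (0 < beta < 1),
    obtained by tabulating the CDF with the midpoint rule and inverting it."""
    a = sigma2 * (1 - math.sqrt(beta)) ** 2
    b = sigma2 * (1 + math.sqrt(beta)) ** 2
    step = (b - a) / n_grid
    grid, cdf, total = [a], [0.0], 0.0
    for i in range(n_grid):
        lam = a + (i + 0.5) * step
        total += step * math.sqrt((b - lam) * (lam - a)) / (2 * math.pi * sigma2 * beta * lam)
        grid.append(a + (i + 1) * step)
        cdf.append(total)
    cdf = [c / total for c in cdf]

    def quantile(q):
        k = min(max(bisect.bisect_left(cdf, q), 1), n_grid)
        w = (q - cdf[k - 1]) / (cdf[k] - cdf[k - 1])
        return grid[k - 1] + w * (grid[k] - grid[k - 1])

    return quantile


def impute_singular_values(noise_sv, r_hat, beta, sigma2_hat, seed=0):
    """Replace the first r_hat (energy-deficient) singular values of the naive
    noise estimate by square roots of Marchenko-Pastur variates MP_U(beta),
    U ~ Uniform(0, 1); the remaining singular values are kept unchanged."""
    rng = random.Random(seed)
    quantile = mp_quantile_function(beta, sigma2_hat)
    imputed = [math.sqrt(quantile(rng.random())) for _ in range(r_hat)]
    return imputed + list(noise_sv[r_hat:])
```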

Appendix D: Optimization algorithm and implementation

In this appendix, we provide the details of our numerical algorithm for solving the optimization problem (2.8). First, we explicitly rewrite (2.8) as a convex–concave optimization problem, also called a DC (difference of two convex functions) program. This primarily involves reformulating (2.8) in the convex–concave setting described in Ismailova and Lu (2016) and then applying the convex–concave procedure to the resulting problem. The convex–concave procedure (also called a DC algorithm) is described in, e.g., Ismailova and Lu (2016) and Tran-Dinh and Diehl (2009). Since our problem has both a DC objective function and DC constraints, we can use the convergence analysis of Tran-Dinh and Diehl (2009) to guarantee that our algorithm is well defined.

DC programming reformulation of (2.8). To move toward a DC programming reformulation of (2.8), we express the angles between candidate directions \(\textbf{v}^{\star }\) and various subspaces in terms of their squared cosines. For an arbitrary-magnitude \(\textbf{v}^{\star }\) and an orthonormal basis matrix \(\textbf{V}\) for a subspace, if we define \({\hat{\theta }}_{\textbf{V}}=\angle (\textbf{v}^{\star }, \textbf{V})\), then \(\cos ^2({\hat{\theta }}_{\textbf{V}}) = \frac{\textbf{v}^{\star \top }\textbf{V}\textbf{V}^{\top }\textbf{v}^{\star }}{\textbf{v}^{\star \top }\textbf{v}^{\star }}\). More specifically, using the representation \(\cos ^2({\hat{\theta }}_{Tk}) = \frac{\textbf{v}^{\star \top }{\check{\textbf{V}}}_k {\check{\textbf{V}}}_k^{\top }\textbf{v}^{\star }}{\textbf{v}^{\star \top }\textbf{v}^{\star }}\) together with the orthonormal condition \(\textbf{v}^{\star \top }\textbf{v}^{\star }= 1\), the objective function of (2.8) becomes \(-\sum _{k\in \textbf{i}} \cos ^2({\hat{\theta }}_{Tk}) = - \textbf{v}^{\star \top }\left( \sum _{k\in \textbf{i}} {\check{\textbf{V}}}_k {\check{\textbf{V}}}_k^{\top }\right) \textbf{v}^{\star }\). Next, since \(\cos ^2\) is decreasing on \([0, \frac{\pi }{2}]\), the constraint \({\hat{\theta }}_{Tk} \le {\hat{\phi }}_k\) is equivalent to \(\cos ^2({\hat{\theta }}_{Tk}) = \frac{\textbf{v}^{\star \top }{\check{\textbf{V}}}_k {\check{\textbf{V}}}_k^{\top }\textbf{v}^{\star }}{ \textbf{v}^{\star \top }\textbf{v}^{\star }} \ge \cos ^2({\hat{\phi }}_k)\) for all \(k\in \textbf{i}\). Similarly, \({\hat{\theta }}_{Tk} \ge {\hat{\phi }}_k\) is equivalent to \(\cos ^2({\hat{\theta }}_{Tk}) = \frac{\textbf{v}^{\star \top }{\check{\textbf{V}}}_k {\check{\textbf{V}}}_k^{\top }\textbf{v}^{\star }}{ \textbf{v}^{\star \top }\textbf{v}^{\star }} \le \cos ^2({\hat{\phi }}_k)\) for all \(k\in \textbf{i}^c\).
The constraint \({\hat{\theta }}_{Ok} \le {\hat{\psi }}_k\) is equivalent to \(\cos ^2({\hat{\theta }}_{Ok}) = \frac{ \textbf{v}^{\star \top }\textbf{X}_k^{\top }{\check{\textbf{U}}}_k {\check{\textbf{U}}}_k^{\top } \textbf{X}_k \textbf{v}^{\star }}{ \textbf{v}^{\star \top }\textbf{X}_k^{\top }\textbf{X}_k\textbf{v}^{\star }} \ge \cos ^2({\hat{\psi }}_k)\) for all \(k\in \textbf{i}\). Finally, we multiply each of these constraint reformulations by its denominator (\(\textbf{v}^{\star \top }\textbf{v}^{\star }\) for the trait space constraints and \(\textbf{v}^{\star \top }\textbf{X}_k^{\top }\textbf{X}_k\textbf{v}^{\star }\) for the object space constraints), which eliminates the fractions and turns them into DC constraints. The orthonormal constraint \(\textbf{v}^{\star \top }\textbf{v}^{\star }= 1\) is equivalent to the pair \(\textbf{v}^{\star \top }\textbf{v}^{\star }- 1 \le 0\) and \(1 - \textbf{v}^{\star \top }\textbf{v}^{\star }\le 0\). Putting these transformations together, (2.8) is equivalent to the following problem:

The objective function of (D.1) is concave, since \(\sum _{k\in \textbf{i}} {\check{\textbf{V}}}_k {\check{\textbf{V}}}_k^{\top }\) is positive semidefinite. In addition, the third, fourth, fifth, and last constraints of (D.1) are DC constraints of the form \(f(\textbf{v}^{\star }) - g(\textbf{v}^{\star }) \le 0\). Therefore, (D.1) is a DC program. Whether it is feasible depends on the choice of \({\hat{\phi }}_k\) and \({\hat{\psi }}_k\). Moreover, because of the orthonormal constraint \(\textbf{v}^{\star \top }\textbf{v}^{\star }= 1\), the feasible set of (D.1) has an empty interior. To guarantee that the DC algorithm is well defined, we therefore relax the problem by adding slack variables.
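The squared-cosine identity and the resulting DC form of a trait space constraint are easy to check numerically. The hypothetical helpers below compute \(\cos ^2\) of the angle between a vector and the span of an orthonormal basis, and the left-hand side of \(\cos ^2({\hat{\phi }}_k)\,\textbf{v}^{\star \top }\textbf{v}^{\star } - \textbf{v}^{\star \top }{\check{\textbf{V}}}_k {\check{\textbf{V}}}_k^{\top }\textbf{v}^{\star } \le 0\), i.e., the constraint \({\hat{\theta }}_{Tk} \le {\hat{\phi }}_k\) after multiplying by \(\textbf{v}^{\star \top }\textbf{v}^{\star }\). The basis and vector are illustrative; the object space constraints follow the same pattern with \(\textbf{X}_k\) and \({\check{\textbf{U}}}_k\).

```python
import math


def cos2_to_subspace(v, basis):
    """cos^2 of the angle between v (any nonzero length) and span(basis):
    v^T V V^T v / v^T v, where basis lists orthonormal vectors (columns of V)."""
    proj_sq = sum(sum(vi * bi for vi, bi in zip(v, b)) ** 2 for b in basis)
    return proj_sq / sum(vi * vi for vi in v)


def angle_upper_bound_dc(v, basis, phi):
    """Left-hand side of the DC form of angle(v, span(basis)) <= phi, i.e.
    cos^2(phi) v^T v - v^T V V^T v <= 0 (obtained by multiplying by v^T v)."""
    vtv = sum(vi * vi for vi in v)
    return math.cos(phi) ** 2 * vtv - cos2_to_subspace(v, basis) * vtv


V = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]  # orthonormal basis of a plane in R^3
v = [1.0, 0.0, 1.0]                      # makes a 45 degree angle with the plane
print(math.degrees(math.acos(math.sqrt(cos2_to_subspace(v, V)))))  # about 45
print(angle_upper_bound_dc(v, V, math.radians(50)) <= 0)  # True: 45 <= 50
print(angle_upper_bound_dc(v, V, math.radians(40)) <= 0)  # False: 45 > 40
```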

DC algorithm. Although the objective function of (D.1) is concave, it can be written as a DC function \(f_0(\textbf{v}^{\star }) - g_0(\textbf{v}^{\star })\) with \(f_0 = 0\) and \(g_0(\textbf{v}^{\star }) = \textbf{v}^{\star \top }\left( \sum _{k\in \textbf{i}} {\check{\textbf{V}}}_k {\check{\textbf{V}}}_k^{\top }\right) \textbf{v}^{\star }\), a convex quadratic function. Assume that there are \(m_c\) DC constraints, written as \(f_k(\textbf{v}^{\star }) - g_k(\textbf{v}^{\star }) \le 0\) for \(k=1,\cdots , m_c\). The remaining convex constraints are expressed as \(\textbf{v}^{\star }\in \mathcal {F}\); these include the orthogonality constraints \(\textbf{v}^{\star }\perp {\mathfrak {V}}_{\textbf{j}}\) for all \(\textbf{j}\supseteq \textbf{i}\), which are in fact linear. To guarantee the feasibility of our DC program, we relax the DC constraints to \(f_k(\textbf{v}^{\star }) - g_k(\textbf{v}^{\star }) \le s_k\), where \(s_k \ge 0\) are slack variables. We also add the penalty \(\tau \sum _{k=1}^{m_c} s_k\), with a given penalty parameter \(\tau > 0\), to the objective function so that solutions better approximate feasible solutions of (D.1). The relaxed form of (D.1) is therefore the following DC program:

$$\begin{aligned} \left\{ \begin{array}{lll} {\displaystyle \min _{\textbf{v}^{\star }, s_k}} &{} f_0(\textbf{v}^{\star }) - g_0(\textbf{v}^{\star }) + \tau \sum _{k=1}^{m_c}s_k\vspace{1ex}\\ \mathrm {s.t.} &{} f_k(\textbf{v}^{\star }) - g_k(\textbf{v}^{\star }) \le s_k, \quad (k =1,\cdots , m_c),\\ &{}\textbf{v}^{\star }\in \mathcal {F}, \quad s_k \ge 0, ~(k=1,\cdots ,m_c). \end{array}\right. \end{aligned}$$(D.2)

Note that if \(s_k = 0\) for \(k=1,\cdots , m_c\), then (D.2) reduces to (D.1). To solve (D.2), we apply a DC algorithm (see, e.g., Ismailova and Lu (2016); Tran-Dinh and Diehl (2009)), which can be roughly described as follows.

1. Initialization. At iteration \(t=0\), find an initial point \(\textbf{v}_0\) of (D.2) (specified later).

2. Iteration t. At each iteration \(t\ge 0\), given \(\textbf{v}_t\), linearize the concave parts of (D.2) to obtain the following convex optimization subproblem:

$$\begin{aligned} \left\{ \begin{array}{lll} {\displaystyle \min _{\textbf{v}^{\star }, s_k}} &{} f_0(\textbf{v}^{\star }) - [g_0(\textbf{v}_t) + \nabla {g_0}(\textbf{v}_t)^{\top }(\textbf{v}^{\star }- \textbf{v}_t)] + \tau \sum _{k=1}^{m_c}s_k\vspace{1ex}\\ \mathrm {s.t.} &{} f_k(\textbf{v}^{\star }) - [g_k(\textbf{v}_t) + \nabla {g_k}(\textbf{v}_t)^{\top }(\textbf{v}^{\star }- \textbf{v}_t)] \le s_k, \quad (k =1,\cdots , m_c),\\ &{}\textbf{v}^{\star }\in \mathcal {F}, \quad s_k \ge 0, ~(k=1,\cdots ,m_c). \end{array}\right. \end{aligned}$$(D.3)

3. Solve (D.3) to obtain an optimal solution \(\textbf{v}_{t+1}\), and proceed to iteration \(t+1\) with \(\textbf{v}_{t+1}\).

4. Termination. Stop when the objective value no longer improves significantly, or when other criteria are met.

As proven in Tran-Dinh and Diehl (2009), under mild conditions on (D.2), the sequence \(\{\textbf{v}_t\}\) generated by our DC algorithm converges to a stationary point of (D.2), i.e., a point satisfying the optimality conditions of (D.2). We do not repeat the convergence analysis here and refer to Tran-Dinh and Diehl (2009) for details.
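To show the mechanics of steps 1 to 4 without a convex solver, the sketch below applies the convex–concave procedure to a toy DC program. It keeps only the objective \(-\textbf{v}^{\star \top }\textbf{P}\textbf{v}^{\star }\), with a hypothetical positive semidefinite \(\textbf{P}\) standing in for \(\sum _{k\in \textbf{i}} {\check{\textbf{V}}}_k {\check{\textbf{V}}}_k^{\top }\), and the unit-norm constraint pair, with the concave constraint relaxed by a penalized slack. For this toy problem the linearized subproblem (D.3) has a closed-form solution, and the iteration reduces to a shifted power iteration that converges to a leading eigenvector of \(\textbf{P}\), a stationary point in the sense above. The angle constraints of (D.1) are omitted, so this illustrates the procedure rather than the DIVAS solver; the stopping rule is one concrete version of the termination heuristic, using five consecutive non-improving iterations.

```python
import math


def matvec(P, v):
    return [sum(pij * vj for pij, vj in zip(row, v)) for row in P]


def dot(x, y):
    return sum(a * b for a, b in zip(x, y))


def dc_toy(P, v0, tau=1.0, tol=1e-12, patience=5, max_iter=1000):
    """Convex-concave procedure for the toy DC program

        min_{v, s}  -v^T P v + tau * s
        s.t.        v^T v - 1 <= 0,   1 - v^T v <= s,   s >= 0,

    with P symmetric positive semidefinite. Linearizing the concave parts
    (-v^T P v in the objective and -v^T v in the second constraint) at a
    unit-norm v_t leaves, after eliminating s, the linear objective
    -2 ((P + tau I) v_t)^T v over the unit ball, whose minimizer is
        v_{t+1} = (P + tau I) v_t / ||(P + tau I) v_t||.
    Stops when the objective has not improved by more than tol for
    `patience` consecutive iterations.
    """
    nrm = math.sqrt(dot(v0, v0))
    v = [x / nrm for x in v0]  # unit-norm start, so the initial slack is zero
    best = -dot(v, matvec(P, v))
    stall = 0
    for _ in range(max_iter):
        w = [pv + tau * x for pv, x in zip(matvec(P, v), v)]
        nw = math.sqrt(dot(w, w))
        v = [x / nw for x in w]
        obj = -dot(v, matvec(P, v))
        if obj < best - tol:
            best, stall = obj, 0
        else:
            stall += 1
            if stall >= patience:
                break
    return v, -dot(v, matvec(P, v))


P = [[2.0, 1.0, 0.0], [1.0, 2.0, 0.0], [0.0, 0.0, 1.0]]
v, obj = dc_toy(P, [1.0, 0.0, 0.0])
print(v, obj)  # v close to (1, 1, 0) / sqrt(2), objective close to -3
```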

Detailed implementation. We now specify the implementation of our DC procedure. The first step is to choose an initial point \(\textbf{v}_0\) for the relaxed DC program (D.2). Without relaxation, finding a feasible initial point for (D.1) is challenging. Introducing the slack variables \(s_k\) guarantees that the relaxed DC program is always feasible, so our algorithm is well defined and can proceed. For instance, one can choose an arbitrary \(\textbf{v}_0\in \mathcal {F}\) and then set \(s_{k, 0} = \max \{ f_k(\textbf{v}_0) - g_k(\textbf{v}_0), 0\}\) for each k to obtain a feasible point of (D.2).
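The slack initialization can be sketched as follows. The constraint functions are hypothetical stand-ins written in the DC form \(f_k - g_k\): the concave half of the unit-norm pair and an angle bound to the first coordinate axis \(\textbf{e}_1\) of the kind derived in the reformulation above. Whatever \(\textbf{v}_0\in \mathcal {F}\) is chosen (here a deliberately poor one), setting \(s_{k,0}=\max \{f_k(\textbf{v}_0)-g_k(\textbf{v}_0),0\}\) makes \((\textbf{v}_0, s_0)\) feasible for the relaxed problem.

```python
import math


def initial_slacks(v0, dc_constraints):
    """s_{k,0} = max{f_k(v0) - g_k(v0), 0} for each DC constraint function
    c_k(v) = f_k(v) - g_k(v); (v0, s_0) then satisfies every relaxed
    constraint f_k(v) - g_k(v) <= s_k with s_k >= 0."""
    return [max(c(v0), 0.0) for c in dc_constraints]


def unit_norm_lower(v):
    """Concave half of the unit-norm pair: 1 - v^T v <= 0."""
    return 1.0 - sum(x * x for x in v)


def angle_to_e1_at_most(phi):
    """Hypothetical constraint angle(v, span{e1}) <= phi in DC form:
    cos^2(phi) v^T v - v_1^2 <= 0."""
    return lambda v: math.cos(phi) ** 2 * sum(x * x for x in v) - v[0] ** 2


v0 = [0.0, 0.6, 0.0]  # in the unit ball, but too short and 90 degrees from e1
constraints = [unit_norm_lower, angle_to_e1_at_most(math.radians(30))]
s0 = initial_slacks(v0, constraints)
print(s0)  # both slacks positive: v0 violates both original constraints
```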

In our implementation, we choose as the initial point for the ith direction in the joint subspace of block collection \(\textbf{i}\) the ith right singular vector from the SVD of \(\left[ \textbf{V}_{k}\right] ^{\top }_{k\in \textbf{i}}\), which is related to the joint structure found via the AJIVE algorithm (Feng et al. 2018). If necessary, the chosen initial point is also projected to obey any orthogonality constraints present at that stage of the algorithm. As explained above, the slack variables \(s_k\) introduced to allow for an infeasible initial condition also appear in the objective function. They are penalized with a weight \(\tau \) (the penalty parameter), which is updated at each iteration; we write \(\tau _t\) for its value at iteration t. Notably, the values of the quadratic forms in the object space constraints are often much larger than those in the trait space constraints, because the object space constraints include the full-energy data matrices \(\textbf{X}_k\). We therefore downweight the slack penalty on those constraints by the leading singular value \({\bar{\nu }}_{1,k}\) of \(\textbf{X}_k\), so that the optimization problem is not overly restricted by the object space constraints. For further computational efficiency, if the algorithm reaches a point where all the angle-constraint slack variables are zero, it stops early and adds a normalized version of the current iteration’s intermediate solution to the current basis.
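A standard-library sketch of this initialization for the first direction follows. Stacking the transposed bases gives a matrix \(\textbf{M}\) with \(\textbf{M}^{\top }\textbf{M}=\sum _{k\in \textbf{i}}\textbf{V}_k\textbf{V}_k^{\top }\), so its leading right singular vector can be found by power iteration on that sum instead of a full SVD, and then projected onto the orthogonal complement of any constraint directions. The function names, the two toy bases, and the sign convention are assumptions for illustration; later directions would use later singular vectors.

```python
import math
import random


def dot(x, y):
    return sum(a * b for a, b in zip(x, y))


def leading_right_singular_vector(bases, n_iter=500, seed=0):
    """Leading right singular vector of the stacked matrix M = [V_k^T]_{k in i}.

    Each V_k is a list of orthonormal basis vectors (its columns), so
    M^T M = sum_k V_k V_k^T; power iteration on M^T M converges to the
    leading right singular vector when the top singular value is simple.
    """
    rows = [b for basis in bases for b in basis]  # the rows of M
    n = len(rows[0])
    rng = random.Random(seed)
    v = [rng.gauss(0.0, 1.0) for _ in range(n)]
    for _ in range(n_iter):
        w = [0.0] * n
        for r in rows:  # accumulate w = M^T (M v)
            c = dot(r, v)
            w = [wi + c * ri for wi, ri in zip(w, r)]
        nrm = math.sqrt(dot(w, w))
        v = [wi / nrm for wi in w]
    k = max(range(n), key=lambda i: abs(v[i]))
    return v if v[k] > 0 else [-x for x in v]  # make the largest entry positive


def project_out(v, constraint_vectors):
    """Project v onto the orthogonal complement of orthonormal constraint_vectors
    and renormalize, so the initial point obeys the orthogonality constraints."""
    for q in constraint_vectors:
        c = dot(v, q)
        v = [vi - c * qi for vi, qi in zip(v, q)]
    nrm = math.sqrt(dot(v, v))
    return [vi / nrm for vi in v]


r = 1 / math.sqrt(2)
V1 = [[r, r, 0.0]]      # hypothetical trait-space basis for block 1
V2 = [[1.0, 0.0, 0.0]]  # hypothetical trait-space basis for block 2
v0 = leading_right_singular_vector([V1, V2])
print(v0)  # close to (cos 22.5 deg, sin 22.5 deg, 0): bisects the two directions
print(project_out(v0, [[0.0, 1.0, 0.0]]))  # close to (1, 0, 0)
```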

Fig. 18 Progression of the sequential optimization locating joint structure between each possible combination of data blocks for the synthetic data from Fig. 1. The horizontal axes represent iterations of the optimization problem and the vertical axes represent angles in degrees. Colored paths show the progression of angles between the candidate direction and \({\textbf {TS}}({\hat{\textbf{A}}}_k)\) (blue) and \({\textbf {TS}}(\textbf{A}_k)\) (red). Perturbation angle bounds \({\hat{\phi }}_k\) are shown as green horizontal lines. From top to bottom: three-way joint, joint between blocks 1 and 2, joint between blocks 1 and 3, and joint between blocks 2 and 3.

Next, specializing the convex optimization subproblem (D.3) to (D.1), we obtain

This subproblem is a convex optimization problem with a linear objective function and convex quadratic and linear constraints, which can be solved efficiently by several convex optimization solvers, including interior-point methods. In our implementation, we model subproblem (D.4) with CVX, a MATLAB package for disciplined convex programming (Grant and Boyd 2008, 2014). With CVX’s default solver, SDPT3, our algorithm runs in about 30 min on the authors’ laptop for the mortality data example from Sect. 3.2. Larger data sets, such as the breast cancer genomics example, can take considerably longer, but substantial speed-ups are possible with MOSEK and other commercial solvers.

To terminate our algorithm, one can monitor whether the objective value of (D.1) actually improves across iterations. If, e.g., the objective value does not improve significantly over five consecutive iterations, the algorithm can be terminated. Alternatively, one can assess the quality of the current solution to decide whether to terminate.

Experiments. Figure 18 shows the iterative progress of the optimization problem for the synthetic data example displayed in Fig. 1. On each panel, the horizontal axis represents the iteration number and the vertical axis represents angles in degrees to the panel’s respective signal subspaces in trait space. Each horizontal green line represents the trait space perturbation angle bound. The blue paths represent the angles to the low-rank approximations of the trait spaces at each iteration. The red dashed paths represent the angles to the true trait subspaces at each iteration, which are known because this is a synthetic data set. Note that in the right panel of the first row, the initial condition is infeasible for the X3 data block’s angle perturbation bound, demonstrating how the algorithm can use the flexibility afforded by the slack variables to explore the space before settling on a final solution. Since the objective function seeks to maximize the total squared cosine over all included blocks (equivalently, to minimize its negative), the angle with one data block may increase, as in the top middle panel, if this allows the angles with the other data blocks to decrease.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Prothero, J., Jiang, M., Hannig, J. et al. Data integration via analysis of subspaces (DIVAS). TEST (2024). https://doi.org/10.1007/s11749-024-00923-z


DOI: https://doi.org/10.1007/s11749-024-00923-z