Abstract

We propose an estimator of the conditional tail moment (CTM) when the data are subject to random censorship. The variable of main interest and the censoring variable both follow a Pareto-type distribution. We establish the asymptotic properties of our estimator and discuss bias-reduction. Then, the CTM is used to estimate, in case of censorship, the premium principle for excess-of-loss reinsurance. The finite sample properties of the proposed estimators are investigated with a simulation study and we illustrate their practical applicability on a dataset of motor third party liability insurance.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The estimation of tail parameters is crucial in many fields where extreme events with possible catastrophic impacts can happen occasionally. In these contexts, extreme value theory (EVT) appears to be the natural tool for modelling this type of events. However, the sample on which the estimation procedure has to be done can be censored. This problem of censoring, where only partial information on a random variable is available, typically that it exceeds a given threshold, is common in many scientific disciplines. For instance, when studying advanced age mortality, one often has that some individuals of a birth cohort are still alive at the time of follow-up, meaning that only a lower bound for their actual lifetime is available. This motivates the study of EVT in the censoring framework, a topic originally considered in the literature by Beirlant et al. (2007) and Einmahl et al. (2008) in all the domains of attraction. However, most recent publications on EVT in the censoring context are concerned with heavy-tailed distributions, motivated by applications in insurance where accurate modelling the upper tail of the claim size distribution is crucial for the risk management but can be difficult since long developments of claims are encountered. This implies that at the evaluation of a portfolio some claims might not be completely settled, and hence they are only known to exceed what has already been paid at the evaluation time. Concretely, this means that the real payments are right censored. We refer for instance to Beirlant et al. (2016, 2018, 2019), Bladt et al. (2021) or Worms and Worms (2014, 2016, 2018), among others.

In this paper, we will also focus on heavy-tailed distributions, i.e., we will assume that our variable of interest X has a distribution function \(F_X\) which satisfies

where \(\gamma _X>0\) is called the extreme value index of X, and \(\ell _X(.)\) is a slowly varying function at infinity, i.e., a function which satisfies

This variable X is censored by another random variable, say Y, independent of X, which has also a heavy-tailed distribution \(F_Y\) satisfying

with \(\gamma _Y>0\) and \(\ell _Y(.)\) again a slowly varying function at infinity. In the censoring framework, only \(Z:=\min (X, Y)\) is observed together with the indicator \(\delta :=\mathbbm {1}_{\{X\le Y\}}\) which specifies whether or not X has been observed. Given a sample \((Z_i, \delta _i), 1\le i\le n\), of independent copies of \((Z, \delta )\), our aim is to make inference on the right tail of the in practice unknown distribution function \(F_X\). This setup, where both the variable of main interest, X, and the censoring variable, Y, are heavy-tailed, and assumed independent is common in the extreme value literature, and was also assumed in, among others, Beirlant et al. (2007), Einmahl et al. (2008) and Bladt et al. (2021). The situation of dependent right censoring in an extreme value context was, to the best of our knowledge, only studied by Stupfler (2019), and this approach requires an assumption on the dependence between X and Y.

In particular, first interest is in the estimation of \(\gamma _X\), despite the fact that the \(X-\)sample was not observed. To understand how to proceed, first remark that, if \(F_Z\) denotes the distribution function of Z, then (1) and (2) imply that

where \(\gamma _Z:={\gamma _X \, \gamma _Y \over \gamma _X + \gamma _Y}\), and \(\ell _Z:=\ell _X\, \ell _Y\). That means that the distribution function of Z is also heavy-tailed. Thus, if we turn a blind eye by ignoring the censorship and decide simply to use a classical estimator of the extreme value index such as the Hill estimator (Hill 1975) based on the \(Z-\)sample and defined as

where \(Z_{i,n}\), \(1\le i \le n\), denote the order statistics associated to the \(Z-\)sample and k the number of random variables taken into account in the estimation procedure, then \(\widehat{\gamma }_k^{(H)}\) estimates the extreme value index \(\gamma _Z\) of Z instead of the one of interest, \(\gamma _X\), of X. Since \(\gamma _Z \le \gamma _X\), by using \(\widehat{\gamma }_k^{(H)}\), one underestimates the risk if one does not take censoring into consideration. To resolve this problem, Beirlant et al. (2007) proposed to divide the estimator (4) by the proportion of non-censored observations in the k largest Z’s, i.e., they introduced the adjusted estimator

where \(\delta _{[1,n]}, \ldots , \delta _{[n,n]}\) denote the \(\delta \)’s corresponding to \(Z_{1,n}, \ldots , Z_{n,n}\), respectively. This denominator in (5) estimates \({\gamma _Y \over \gamma _X + \gamma _Y}\), and thus \(\widehat{\gamma }_k^{(H,c)}\) is an extreme value index estimator for \(\gamma _X\).

Einmahl et al. (2008) established, under suitable assumptions, the convergence in distribution of the adjusted estimator \(\widehat{\gamma }_k^{(H,c)}\) with the explicit asymptotic bias and variance. Similar results for alternative estimators have been recently proposed in Beirlant et al. (2019) and Bladt et al. (2021).

Since the estimation of extreme value index in the censoring framework is nowadays well-known in the literature, we will assume in the sequel that we have at our disposal an extreme value index estimator adjusted to censoring, denoted \(\widehat{\gamma }_k^{(c)}\), for which the following convergence in distribution holds

under suitable conditions. Here \(\Gamma \) follows a normal distribution, with a mean and variance depending on the estimator of the extreme value index used. We refer to Sect. 2 for specific examples.

Our first aim will be then the estimation of the conditional tail moment (CTM) for a positive random variable X, defined for some \(\zeta >0\) as

in case \({\mathbb {E}}(X^\zeta )<\infty \), where \(U_X(\cdot ):=\inf \{x: F_X(x)\ge 1-1/\cdot \}=F_X^\leftarrow \left( 1-1/\cdot \right) \) is the tail quantile function of X, and when X is censored. This corresponds, for an insurance company, to the case where some claims can be considered as open in the sense that the company does not know yet the full extent of the loss. Then, using the CTM estimator adapted to censoring, we will look at some well-known risk measures, such as the premium for excess-of-loss reinsurance. By using different values of \(\zeta \), we will be able in particular to recover the mean and variance of the payment by the reinsurer. Note that this topic has been recently considered in Goegebeur et al. (2022) in case of no-censoring.

The remainder of the paper is organized as follows. In Sect. 2, we introduce an estimator for the conditional tail moment adapted to censoring and we establish its main asymptotic properties. Then, in Sect. 3, we look at the premium principle for excess-of-loss reinsurance by providing estimates for the mean and variance of the payment by the reinsurer in case of censoring and we establish their asymptotic behaviors. Finally, Sect. 4 is devoted to a simulation study, whereas Sect. 5 illustrates the performance of the estimators on a real dataset from motor third party liability insurance. All the proofs are postponed to the Supplementary Information, which contains also additional simulation results.

2 Estimation of the conditional tail moment in case of censoring

We assume that X and Y follow a second-order Pareto-type model. Let \(RV_\psi \) denote the class of regularly varying functions at infinity with index \(\psi \in {\mathbb {R}}\), i.e., positive measurable functions f satisfying \(f(tx)/f(t)\rightarrow x^\psi \), as \(t\rightarrow \infty \), for all \(x > 0\). In the below, \(\bullet \) denotes either X or Y.

Assumption \(({\mathcal {D}})\) The survival function of X and Y satisfies

where \(A_\bullet >0\), \(\gamma _\bullet >0\), and \(\vert \delta _\bullet (\cdot )\vert \in RV_{-\beta _\bullet }\), \(\beta _\bullet >0\).

Clearly, the associated tail quantile function \(U_\bullet (\cdot )\) satisfies

where \(a_\bullet (x)=\delta _\bullet (U_\bullet (x))(1+o(1))\), and thus \(\vert a_\bullet (\cdot )\vert \in RV_{-\beta _\bullet \gamma _\bullet }.\)

Note that the survival function of Z also satisfies the form of Assumption \(({{\mathcal {D}}})\) with \(A_Z:=A_XA_Y\), \(\gamma _Z:={\gamma _X \, \gamma _Y \over \gamma _X + \gamma _Y}\) and \(\vert \delta _Z\vert \) being regularly varying with index \(-\beta _Z\), where \(\beta _Z:= \min (\beta _X,\beta _Y)\), and hence \(U_Z\) will satisfy (7).

We can now expand the CTM under our Pareto-type model on X.

Proposition 1

If X satisfies Assumption \(({\mathcal {D}})\) with \(\gamma _X<1/\zeta \), then for \(p\downarrow 0\), we have

Note that Proposition 1 is a reformulation of Theorem 2.1 in Goegebeur et al. (2022) under our new Assumption \(({\mathcal {D}})\) instead of their second-order condition.

As a result, a natural estimator for \(\theta _{p,\zeta }\) in the case of censoring is given by

where \({\widehat{U}}^{(c)}_X\left( {1\over p}\right) \) is an extreme quantile estimator for \(U_X\left( {1\over p}\right) \) adapted to censoring. For the latter, we can use a Weissman-type estimator (see Weissman 1978) defined as

where \({\widehat{F}}_n(\cdot )\) denotes the Kaplan–Meier product-limit estimator (Kaplan and Meier 1958), defined as

As a preliminary result, we will prove the convergence in distribution of \({\widehat{U}}^{(c)}_X\left( {1\over p}\right) \).

Theorem 1

Assume \(({\mathcal {D}})\) with \(F_X\) continuous and that the convergence (6) holds. Then for \(k,n \rightarrow \infty \), with \(k/n\rightarrow 0\) and \(\sqrt{k} \delta _X(U_Z(n/k))\rightarrow \lambda \in {\mathbb {R}}\), and p satisfying \(\frac{\log (k/(np^{(\gamma _X+\gamma _Y)/\gamma _Y})) }{\sqrt{k} } \rightarrow 0 \) and \({k\over np}\rightarrow \infty \), we have

Note that the assumption \(\frac{\log (k/(np^{(\gamma _X+\gamma _Y)/\gamma _Y})) }{\sqrt{k} } \rightarrow 0\) implies that \({\log ((1-{\widehat{F}}_n(Z_{n-k,n}))/p) \over \sqrt{k}} {\mathop {\rightarrow }\limits ^{{\mathbb {P}}}} 0 \), see (B2) in the proof of Theorem 1 in the Supplementary Information.

We have now all the ingredients to prove the convergence in distribution of \(\widehat{\theta }_{p,\zeta }^{(c)}\).

Theorem 2

Under the same assumptions as in Theorem 1, for \(\gamma _X<1/\zeta \), we have

Note that, in case of no-censoring, this estimator is different from the one proposed in Goegebeur et al. (2022) but has the same limiting distribution under a weaker condition on the tail index, that is \(\gamma _X<1/\zeta \), instead of \(\gamma _X<1/(2\zeta )\).

If we set \(\widehat{\gamma }_k^{(c)}=\widehat{\gamma }_k^{(H,c)}\) in (9) and (10), then we can make explicit the asymptotic bias and variance of the CTM estimator, denoted in that case by \(\widehat{\theta }_{p,\zeta }^{(H,c)}\), under the following sub-model of \(({\mathcal {D}})\):

Assumption \(({\mathcal {D}}_H)\) The survival function of X and Y satisfies Assumption \(({\mathcal {D}})\) with

and \(\delta _\bullet (x)\) is differentiable, with derivative \(\delta '_\bullet (x)=-B_\bullet \beta _\bullet x^{-\beta _\bullet -1}(1+o(1)).\) Moreover, the function \(x\rightarrow \delta _\bullet (x)\) is monotone for x large enough.

Note that assumption (11) corresponds to the well-known Hall and Welsh (1985) model often used in the literature, for instance in Beirlant et al. (2016).

Corollary 1

Assume \(({\mathcal {D}}_H)\) holds. Then for \(k,n \rightarrow \infty \), with \(k/n\rightarrow 0\) and \(\sqrt{k} \delta _Z(U_Z(n/k))\rightarrow \lambda _Z\in {\mathbb {R}}\), and p satisfying \(\frac{\log (k/(np^{(\gamma _X+\gamma _Y)/\gamma _Y})) }{\sqrt{k} } \rightarrow 0 \) and \({k\over np}\rightarrow \infty \), we have for \(\gamma _X<1/\zeta \)

where

Since the estimator for \(\theta _{p,\zeta }\), after normalisation, inherits the limiting distribution of the estimator that was used for \(\gamma _X\),we have that the use of \(\widehat{\gamma }_k^{(H,c)}\) will lead to a potentially biased estimator for \(\theta _{p,\zeta }\). As an alternative, one could use a bias-corrected estimator for \(\gamma _X\), like the one proposed in Beirlant et al. (2016) when \(\beta _Z\) is known, and given by

where

and, for \(s <0\),

The limiting distribution of \(\widehat{\gamma }_k^{(BC,c)}\), after proper normalisation, is given in Theorem 1 of Beirlant et al. (2016), and can be used to obtain the following corollary about \(\widehat{\theta }_{p,\zeta }^{(c)}\) when using \(\widehat{\gamma }_k^{(c)}=\widehat{\gamma }_k^{(BC,c)}\). This bias-corrected estimator for CTM will be denoted as \(\widehat{\theta }_{p,\zeta }^{(BC,c)}\).

Corollary 2

Assume \(({\mathcal {D}}_H)\) holds. Then for \(k,n \rightarrow \infty \), with \(k/n\rightarrow 0\) and \(\sqrt{k} \left( \frac{n}{k} \right) ^{-\min (\gamma _Z\beta _X,\beta _Z)} \rightarrow \lambda \in {\mathbb {R}}\), and p satisfying \(\frac{\log (k/(np^{(\gamma _X+\gamma _Y)/\gamma _Y})) }{\sqrt{k} } \rightarrow 0 \) and \({k\over np}\rightarrow \infty \), we have for \(\gamma _X<1/\zeta \)

Note that the asymptotic variance of the bias-corrected estimator \(\widehat{\theta }_{p,\zeta }^{(BC,c)}\) is larger than the one of the uncorrected estimator \(\widehat{\theta }_{p,\zeta }^{(H,c)}\), but this is expected since bias-reduction implies often an increase in the variance. Additionally, remark that the limiting distribution for \(\widehat{\gamma }_k^{(BC,c)}\) from Theorem 1 in Beirlant et al. (2016) is derived for the case where \(\beta _Z\) is known. In practice the true \(\beta _Z\) is typically unknown, and for this case Beirlant et al. (2016) propose to replace \(\beta _Z\) by \(\widehat{\beta }_Z:= -\rho _Z/\widehat{\gamma }_k^{(H)}\), where \(\rho _Z<0\) is the usual second-order parameter in extreme value statistics (in our context \(\rho _Z = -\beta _Z \gamma _Z\)), and where \(\rho _Z\) is fixed by the user, either at the correct value or mis-specified. In the framework of bias-corrected estimation it is quite common to fix the second-order rate parameter at some value, see, e.g., Feuerverger and Hall (1999), Gomes and Martins (2004), Dutang et al. (2014, 2016). Note that in case \(\rho _Z\) is mis-specified then one typically loses theoretically the bias-correction (in the sense that the mean of the limiting normal distribution is no longer zero), but the bias-corrected estimators continue to perform well with respect to bias, and usually outperform estimators that are not corrected for bias; we refer to the simulation results.

3 Premium calculation in case of censoring

Our aim in this section is to look at the premium principle for excess-of-loss reinsurance. In particular, we want to estimate in case of censorship and when \(\zeta =1\) or 2, the quantity

where \(A_+:=\max (A,0)\). This study is motivated by applications in reinsurance, where X denotes the claim size, \((X-U_X(1/p))_+\) the payment by the reinsurer, which arises when the amount of the claim exceeds the retention level \(U_X(1/p)\). Thus \(\Pi _\zeta (p)\) represents the mean and the second moment of the payment when \(\zeta =1\) or 2, respectively. Since some of the claims are still open at the time of the study, i.e., their specific amounts are not yet known, it is crucial to construct estimators which take this censoring into account.

According to Theorem 3.1 in Goegebeur et al. (2022), if X is of Pareto-type with a continuous distribution function \(F_X\), we have the following links between the CTMs and \(\Pi _\zeta (p)\) for \(\zeta =1\) or 2:

from which the natural estimators adapted for censoring follow:

From these, we can also introduce an estimator for the variance of the payment by the reinsurer, namely \({\widehat{V}}^{(c)}(p):= \widehat{\Pi }^{(c)}_2(p)-( \widehat{\Pi }^{(c)}_1(p) )^2\).

Theorem 3

Under the same assumptions as in Theorem 1, we have

Again, these estimators of \(\Pi _\zeta (p)\), for \(\zeta =1, 2\), are different from those proposed in Goegebeur et al. (2022) but with similar limiting distributions, and weaker restrictions on \(\gamma _X\). This is a nice feature since it allows to construct confidence intervals for \(\Pi _\zeta (p)\) for a wide range of values of \(\gamma _X\).

As before, if we set \(\widehat{\gamma }_k^{(c)}=\widehat{\gamma }_k^{(H,c)}\) in (9) and (10), then the asymptotic bias and variance of the estimator of \(\Pi _\zeta (p)\), denoted by \(\widehat{\Pi }^{(H,c)}_\zeta (p)\), can be made explicit.

Corollary 3

Under the same assumptions as in Corollary 1, we have for \(\zeta =1\) or 2

A similar result can also be obtained for the bias-corrected version, denoted by \(\widehat{\Pi }^{(BC,c)}_\zeta (p)\):

Corollary 4

Under the same assumptions as in Corollary 2, we have for \(\zeta =1\) or 2

4 Simulation study

In this section we evaluate the finite sample performance of the proposed estimator with a simulation experiment. We simulate from the Burr\((\eta ,\lambda ,\tau )\) distribution with distribution function

where \(\eta ,\, \lambda ,\, \tau >0\). This distribution function satisfies Assumption \(({\mathcal {D}}_H)\) with \(\gamma =1/(\lambda \tau )\), \(\beta =\tau \). We consider \(X \sim \text{ Burr }(1,1,4)\), giving \(\gamma _X=0.25\) and \(\beta _X=4\). We choose the distribution of Y to obtain a range of asymptotic censoring proportions as follows:

-

\(Y \sim \text{ Burr }(1,1/4.75,1)\), so \(\gamma _Y=4.75\), \(\beta _Y=1\), and an asymptotic fraction of censoring of 5%,

-

\(Y \sim \text{ Burr }(1,1/2.25,1)\), so \(\gamma _Y=2.25\), \(\beta _Y=1\), and an asymptotic fraction of censoring of 10%,

-

\(Y \sim \text{ Burr }(1,1,1)\), so \(\gamma _Y=1\), \(\beta _Y=1\), and an asymptotic fraction of censoring of 20%.

-

\(Y \sim \text{ Burr }(1,4.2,1)\), so \(\gamma _Y=0.238\), \(\beta _Y=1\), and an asymptotic fraction of censoring of approximately 51%.

The simulation results for some other distributions and parameter settings can be found in the Supplementary Information.

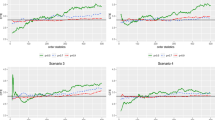

We simulate 200 datasets of size \(n=500\) from the Burr distributions mentioned above and consider estimation of \(\theta _{1/n,1}\), \(\theta _{1/(1.5n),1}\), \(\theta _{1/n,2}\), \(\Pi _1(1/n)\) and V(1/n), except for the distributions leading to 51% of censoring, where we only consider \(\theta _{1/n,1}\) and \(\Pi _1(1/n)\). In Fig. 1 we show the sample mean (left) and sample mean squared error (MSE) (right) of \(\widehat{\theta }_{1/n,1}^{(H,c)}\) (black solid line) and \(\widehat{\theta }_{1/n,1}^{(BC,c)}\) (blue dashed line), computed over the 200 simulation runs as a function of k for 5% (first row), 10% (second row), 20% (third row) and 51% (fourth row) of censoring. Figures 2, 3, 4 and 5 are similar, but concern estimation of \(\theta _{1/n,2}\), \(\Pi _1(1/n)\), V(1/n) and \(\theta _{1/(1.5n),1}\), respectively. As for the estimators based on \(\widehat{\gamma }_k^{(BC,c)}\), we follow Beirlant et al. (2016), where it was suggested to replace \(\beta _Z\) by \(\widehat{\beta }_Z:= -\rho _Z/\widehat{\gamma }_k^{(H)}\), whereafter the estimators for \(\theta _{p,\zeta }\), \(\Pi _1(p)\) and V(p) are computed for several values of \(\rho _Z\), and plotted as a function of k. The \(\rho _Z\)-value that leads to the most stable sample paths is then the one to be used for estimation. In our settings \(\rho _Z=-1.5\) gave the most stable results for all the distributions and estimators under consideration. From the simulations we can draw the following conclusions:

-

The estimator for the CTM with \(\zeta =1\) based on the Hill estimator for \(\gamma \) only works reasonably for small values of k compared to n, which is in line with the theoretical results. For increasing values of k the estimators diverge from the true value and hence show a considerable bias. For the CTM with \(\zeta =2\) the Hill-based estimator performs poorly with a large bias, even at small values of k.

-

On the other hand, the estimator for the CTM based on the bias-corrected estimator of Beirlant et al. (2016) for \(\gamma \) shows an average which is quite stable and close to the true value \(\theta _{p,\zeta }\) for a wide range of values for k. This confirms again our theoretical results which give that \(\widehat{\theta }_{p,\zeta }^{(c)}\), after normalisation, inherits the limiting distribution of the \(\gamma \)-estimator, so if one uses a bias-corrected estimator for \(\gamma \) then this bias-correction is carried over to \(\widehat{\theta }_{p,\zeta }^{(c)}\). Also, \(\widehat{\theta }_{p,\zeta }^{(BC,c)}\) has a minimum value of the MSE which is typically lower than the minimum value of the MSE for \(\widehat{\theta }_{p,\zeta }^{(H,c)}\). Moreover, the bias-corrected estimator has the advantage that the MSE values stay close to the value of the minimum MSE and this for a wide range of values for k. For practical use of the estimators, the stable sample paths of \(\widehat{\theta }_{p,\zeta }^{(BC,c)}\) make the typical issue of choosing k properly less critical, unlike the situation of \(\widehat{\theta }_{p,\zeta }^{(H,c)}\) where proper choice of k is quite crucial.

-

The conclusions above for the CTM also hold for \(\widehat{\Pi }_1^{(c)}(1/n)\) and \({\widehat{V}}^{(c)}(1/n)\). The estimators for \(\Pi _1(1/n)\) and V(1/n) based on \(\widehat{\gamma }_k^{(BC,c)}\) perform better than those based on \(\widehat{\gamma }_k^{(H,c)}\).

-

Estimation of \(\theta _{1/n,2}\) is more challenging than \(\theta _{1/n,1}\), and similarly, estimation of V(1/n) is more difficult than \(\Pi _1(1/n)\).

-

As expected, decreasing p makes the estimation more difficult. This is illustrated in Fig. 5 for the estimation of \(\theta _{1/(1.5n),1}\).

-

For all parameters, the estimation gets more challenging as the proportion of censoring increases, as illustrated by MSEs that get elevated with increasing censoring.

Mean (left) and MSE (right) of \(\widehat{\Pi }_1^{(c)}(1/n)\) based on \(\widehat{\gamma }_k^{(H,c)}\) (black solid line) and \(\widehat{\gamma }_k^{(BC,c)}\) (blue dashed line) as a function of k for 5% (first row), 10% (second row), 20% (third row) and 51% (fourth row) of censoring (colour figure online)

Next, we illustrate the selection of k for \(\widehat{\theta }_{p,\zeta }^{(H,c)}\). In order to make the dependence of \(\widehat{\theta }_{p,\zeta }^{(H,c)}\) on k explicit, we introduce the notation \(\widehat{\theta }_{p,\zeta }^{(H,c)}(k)\). Obviously, small values of k lead to a large variance of the estimator, while a too large k leads to a bias issue. Hence, it is natural to determine k by minimizing an approximation to the asymptotic mean squared error (AMSE) of \(\widehat{\theta }_{p,\zeta }^{(H,c)}(k)\), which, based on Corollary 1, is given by

where

The resulting value for k will be denoted as \({\widehat{k}}\). Note that \(\overline{\mu }_k\) is based on the first line of \(\mu \) in (12). This is motivated as follows. The unknown parameters in the above AMSE expression clearly need to be estimated in practice, see below. By doing so, it is unlikely that the estimates for \(\beta _X\) and \(\beta _Y\) will be equal, so we ignore the last line for \(\mu \) in (12). The second line in (12) is not useful for determining k, as the AMSE would then only depend on the variance component, leading to a selection of the largest possible value of k. Whence our proposal to use the first line in (12). Note that this might imply that we incorrectly use the first line of (12) while we should have used the second line. In such situations the value of k will be chosen too small, though with still an acceptable performance. As an alternative, we could also determine k by minimising the AMSE of \(\widehat{\gamma }_k^{(H,c)}\), given by

where \(\overline{\mu }_k\) is as above. The resulting value for k is denoted by \({\widetilde{k}}\). As mentioned, the above AMSE expressions depend on unknown parameters which need to be estimated from the data. For these we proceed as follows:

-

\(\gamma _X\) is estimated by \(\widehat{\gamma }_k^{(BC,c)}\),

-

The usual second-order parameter \(\rho _X\) in extreme value statistics is given by \(\rho _X=-\beta _X \gamma _X\). We propose to use the canonical choice \(\rho _X=-1\), and to estimate \(\beta _X\) as \(1/\widehat{\gamma }_k^{(BC,c)}\),

-

\(\gamma _Y/(\gamma _X+\gamma _Y)\) is estimated by the fraction of non-censored observations in the top k order statistics, i.e., by \({\widehat{d}}_k\),

-

\(\gamma _Z\) and \(\delta _Z(U_Z(n/k))\) are estimated by fitting the extended Pareto distribution to the relative excesses of the Z-data, see Beirlant et al. (2009).

In Fig. 6 we show the boxplots of \(\widehat{\theta }^{(H,c)}_{1/n,\zeta }({\widehat{k}})/\theta _{1/n,\zeta }\) and \(\widehat{\theta }^{(H,c)}_{1/n,\zeta }({\widetilde{k}})/\theta _{1/n,\zeta }\) for \(\zeta =1,\, 2\), and for the four distributions mentioned above. Overall, and as expected, estimation of the second conditional tail moment is more difficult than the estimation of the first moment, with more bias and variability. Also, \(\widehat{\theta }^{(H,c)}_{1/n,\zeta }({\widehat{k}})\) performs better than \(\widehat{\theta }^{(H,c)}_{1/n,\zeta }({\widetilde{k}})\), which is also expected as \({\widehat{k}}\) minimizes (an estimate of) \(AMSE\left( \widehat{\theta }_{p,\zeta }^{(H,c)}(k) \right) \), while \({\widetilde{k}}\) minimizes \(AMSE(\widehat{\gamma }_k^{(H,c)})\). Finally, as was indicated by Fig. 1, estimation of \(\theta _{1/n,1}\) is very difficult for the dataset with 51% censoring. However, as can be seen from the boxplot in Fig. 6, bottom right, combining \(\widehat{\theta }^{(H,c)}_{1/n,1}(k)\) with the AMSE-based procedure for determining k still leads to acceptable results, though the variability is large.

Boxplots of (a) \(\widehat{\theta }^{(H,c)}_{1/n,1}({\widehat{k}})/\theta _{1/n,1}\), (b) \(\widehat{\theta }^{(H,c)}_{1/n,1}({\widetilde{k}})/\theta _{1/n,1}\), (c) \(\widehat{\theta }^{(H,c)}_{1/n,2}({\widehat{k}})/\theta _{1/n,2}\) and (d) \(\widehat{\theta }^{(H,c)}_{1/n,2}({\widetilde{k}})/\theta _{1/n,2}\) for 5% (top left), 10% (top right), 20% (bottom left) and 51% (bottom right) of censoring

5 Application to insurance data

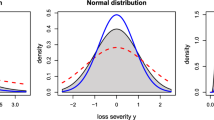

In this section we analyse a dataset from Motor Third Party Liability Insurance (MTPL) provided by a direct insurance company operating in the EU. The dataset contains the yearly payments of the insurance company, corrected for inflation, for claims by its policyholders over the period 1995–2010. The dataset consists of 837 claims, with about 60% of them being right censored (open) at 2010. We refer to Section 1.3.1 in Albrecher et al. (2017) for more details about this dataset. In Fig. 7, left panel, we show the log-claim sizes in chronological order, where the closed claims are depicted in blue and the open claims are in red. As expected, the more recently arrived claims show more censoring than older claims. In order to evaluate the assumption that the underlying distribution of the claim sizes is of Pareto-type we make an adapted Pareto QQ plot in case of right censored data, which is based on the Kaplan–Meier estimator, see Fig. 7, right panel. This Pareto QQ plot shows a clear linear pattern in the largest observations which confirms an underlying Pareto-type distribution. We refer to Beirlant et al. (2007) for more details on the construction and interpretation of such QQ plots. In Bladt et al. (2020) it was argued that the assumption of random right censoring is adequate for these data. Figure 8 shows the Hill estimate \(\widehat{\gamma }_k^{(H,c)}\) (black line) and the bias-corrected estimate \(\widehat{\gamma }_k^{(BC,c)}\) (blue line) for \(\gamma _X\) as a function of k. The Hill estimate is only stable for the smaller values of k, say for k between 50 and 100, while the bias-corrected estimate is stable for k up to 400. For the values of k where \(\widehat{\gamma }_k^{(H,c)}\) is stable it agrees quite well with \(\widehat{\gamma }_k^{(BC,c)}\). Both the Hill and bias-corrected estimate indicate a value for \(\gamma _X\) above 0.5 but below 1, which in view of the assumption \(\gamma _X < 1/\zeta \) indicates that expected values can be estimated but not second moments (and thus variances). According to these values for \(\gamma \) one can expect estimation results with large variability. The estimation of \(\Pi _1(p)\), with \(p=1/n\) as in the simulations, is illustrated in Fig. 9 (left panel), where the estimates based on \(\widehat{\gamma }_k^{(H,c)}\) are in the black solid line and those based on \(\widehat{\gamma }_k^{(BC,c)}\) are in the blue dashed line. The estimates based on \(\widehat{\gamma }_k^{(H,c)}\) are very variable and show hardly any stable part, while the premium estimates based on \(\widehat{\gamma }_k^{(BC,c)}\) show less variability and are more or less stable for k in the range 25 till 150. Finally, we illustrate the construction of confidence intervals for \(\Pi _1(p)\). These will be based on a log-scale version of Theorem 3, as suggested by Drees (2003) in the context of extreme quantile estimation, namely for

which has the same limiting distribution as in Theorem 3. These approximate \(100(1-\alpha )\%\) confidence intervals are then given by

Insurance data. \(\widehat{\Pi }_1^{(c)}(1/n)\) based on \(\widehat{\gamma }_k^{(H,c)}\) (black solid line) and \(\widehat{\gamma }_k^{(BC,c)}\) (blue dashed line) as a function of k (left) and \(\widehat{\Pi }_1^{(c)}(1/n)\) based on \(\widehat{\gamma }_k^{(BC,c)}\) with approximate 95% confidence intervals (right)

where \(\Phi ^{-1}\) denotes the quantile function of the standard normal distribution and \(\widehat{\sigma }_\gamma \) is an estimate of the standard deviation of \(\Gamma \). In Fig. 9 (right panel) we show the approximate 95% confidence intervals for \(\Pi _1(p)\) with \(p=1/n\) as a function of k in case the estimate is based on \(\widehat{\gamma }_k^{(BC,c)}\). The width of these confidence intervals fluctuates with k which is due to the variability of the random quantities appearing in the expression for the confidence interval, e.g., \(\widehat{\sigma }_\gamma \). Note that for this specific dataset the estimation is challenging due to the large values of \(\gamma \), indicating that the second moment does not exist, as well as the large proportion of censoring.

References

Albrecher H, Beirlant J, Teugels JL (2017) Reinsurance: actuarial and statistical aspects. Wiley, Chichester

Beirlant J, Bardoutsos A, de Wet T, Gijbels I (2016) Bias reduced tail estimation for censored Pareto type distributions. Stat Probab Lett 109:78–88

Beirlant J, Guillou A, Dierckx G, Fils-Villetard A (2007) Estimation of the extreme value index and extreme quantiles under random censoring. Extremes 10:151–174

Beirlant J, Joossens E, Segers J (2009) Second-order refined peaks-over-threshold modelling for heavy-tailed distributions. J Stat Plan Inference 139:2800–2815

Beirlant J, Maribe G, Verster A (2018) Penalized bias reduction in extreme value estimation for censored Pareto-type data, and long-tailed insurance applications. Insurance Math Econ 78:114–122

Beirlant J, Worms J, Worms R (2019) Estimation of the extreme value index in a censorship framework: asymptotic and finite sample behavior. J Stat Plan Inference 202:31–56

Bladt M, Albrecher H, Beirlant J (2020) Combined tail estimation using censored data and expert information. Scand Actuar J 6:503–525

Bladt M, Albrecher H, Beirlant J (2021) Trimmed extreme value estimators for censored heavy-tailed data. Electron J Stat 15:3112–3136

Drees H (2003) Extreme quantile estimation for dependent data, with applications to finance. Bernoulli 9:617–657

Dutang C, Goegebeur Y, Guillou A (2014) Robust and bias-corrected estimation of the coefficient of tail dependence. Insurance Math Econ 57:46–57

Dutang C, Goegebeur Y, Guillou A (2016) Robust and bias-corrected estimation of the probability of extreme failure sets. Sankhya A 78:52–86

Einmahl JHJ, Fils-Villetard A, Guillou A (2008) Statistics of extremes under random censoring. Bernoulli 14:207–227

Feuerverger A, Hall P (1999) Estimating a tail exponent by modelling departure from a Pareto distribution. Ann Stat 27:760–781

Goegebeur Y, Guillou A, Pedersen T, Qin J (2022) Extreme-value based estimation of the conditional tail moment with application to reinsurance rating. Insurance Math Econ 107:102–122

Gomes MI, Martins MJ (2004) Bias-reduction and explicit semi-parametric estimation of the tail index. J Stat Plan Inference 124:361–378

Hall P, Welsh AH (1985) Adaptive estimates of parameters of regular variation. Ann Stat 13:331–341

Hill BM (1975) A simple general approach to inference about the tail of a distribution. Ann Stat 3:1163–1174

Kaplan EL, Meier P (1958) Nonparametric estimation from incomplete observations. J Am Stat Assoc 53:457–481

Stupfler G (2019) On the study of extremes with dependent right-censoring. Extremes 22:97–129

Weissman I (1978) Estimation of parameters and large quantiles based on the \(k\) largest observations. J Am Stat Assoc 73:812–815

Worms J, Worms R (2014) New estimators of the extreme value index under random right censoring, for heavy-tailed distributions. Extremes 17:337–358

Worms J, Worms R (2016) A Lynden–Bell integral estimator for extremes of randomly truncated data. Stat Probab Lett 109:106–117

Worms J, Worms R (2018) Extreme value statistics for censored data with heavy tails under competing risks. Metrika 81:849–889

Acknowledgements

The authors sincerely thank the referees, the Associate Editor and Editor for their helpful comments on our paper. This work was supported by the French National Research Agency under Grant ANR-19-CE40-0013-01/ExtremReg project and by the CNRS International Research Network MaDeF.

Funding

Open access funding provided by University Library of Southern Denmark.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Goegebeur, Y., Guillou, A. & Qin, J. Conditional tail moment and reinsurance premium estimation under random right censoring. TEST 33, 230–250 (2024). https://doi.org/10.1007/s11749-023-00890-x

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11749-023-00890-x

Keywords

- Bias-reduction

- Conditional tail moment

- Excess-of-loss reinsurance

- Pareto-type distribution

- Random censorship