Abstract

Different cognitive aids have recently been developed to support the management of cardiac arrest; however, their effectiveness has been little investigated. We aimed to assess whether clinicians using any cognitive aid, compared with no or an alternative cognitive aid, achieve improved resuscitation performance in in-hospital cardiac arrest (IHCA) scenarios. PubMed, EMBASE, the Cochrane Library, CINAHL and ClinicalTrials.gov were systematically searched to identify studies comparing the management of simulated adult/paediatric IHCA scenarios by health professionals using different or no cognitive aids. Our primary outcomes were adherence to guideline recommendations (overall team performance) and time to critical resuscitation actions. Random-effects meta-analyses were performed. Of the 4,830 screened studies, 16 (14 adult, 2 paediatric) met the inclusion criteria. Meta-analyses of eight eligible adult studies indicated that the use of electronic/paper-based cognitive aids, compared with no aid, was significantly associated with better overall resuscitation performance [standardized mean difference (SMD) 1.16; 95% confidence interval (CI) 0.64; 1.69; I2 = 79%]. Meta-analyses of the two paediatric studies showed non-significant improvements in critical resuscitation actions (adherence to the guideline-recommended sequence of actions, time to defibrillation, rate of errors in defibrillation, time to start chest compressions), except for a significantly shorter time to amiodarone administration (SMD −0.78; 95% CI −1.39; −0.18; I2 = 0%). In conclusion, the use of cognitive aids appears to improve the management of simulated adult IHCA scenarios, with a potential positive impact on clinical practice. Further paediatric studies are needed to better assess the impact of cognitive aids on the management of IHCA scenarios.

Introduction

Despite improvements in cardiac arrest (CA) outcomes over the past two decades, clinical management remains challenging, and survival to hospital discharge is low for both out-of-hospital CA (OHCA) and in-hospital CA (IHCA). Survival rates are lower in adults than in children (4.5–10% [1, 2] for OHCA and 20–25% [3] for IHCA in adults, compared with 4–17.7% [1, 4, 5] and 27–43%, respectively, in children) [6,7,8]. To optimize the management of CA and improve patients’ clinical outcomes, international resuscitation organisations periodically update and publish evidence-based resuscitation guidelines [6, 9,10,11]. However, lay rescuers and healthcare providers often struggle to adhere to these guidelines during the management of CA [12,13,14,15,16,17,18,19,20,21,22], which negatively affects patient prognosis and outcomes [23].

Several educational strategies and novel technologies have been designed to help healthcare providers improve their adherence to guidelines for the management of CA [24,25,26,27,28,29]. Recently, various cognitive aids, in paper-based and digital formats, have been developed for both lay rescuers and healthcare providers to support the management of OHCA and IHCA [30,31,32,33,34,35,36,37,38,39,40]. Systematic reviews of audio/video guidance and smartphone applications (apps) designed to support bystanders in managing OHCA showed that these tools were associated with improved quality of bystander cardiopulmonary resuscitation (CPR) [39,40,41]. However, the effectiveness of cognitive aids designed to assist health professionals in the medical management of IHCA remains uncertain.

The preliminary results of a recent systematic review conducted by the International Liaison Committee on Resuscitation (ILCOR) [42] showed that no studies have evaluated the impact of cognitive aids on the management of CA in real-life settings. In this study, we aimed to assess the effectiveness of paper-based (e.g. pocket cards, posters, or checklists) or electronic cognitive aids (e.g. applications/software for smartphones, tablets, laptops or augmented reality glasses) in improving the management of IHCA in the simulation setting. Because the use of cognitive aids during these uncommon and complex emergencies is difficult to assess in the real clinical environment, simulation studies provide knowledge that can be transferred to clinical practice.

Methods

This systematic review and meta-analysis was performed following the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines [43]. The study protocol was registered in PROSPERO (CRD42020207323).

Search strategy

The bibliographic search focussed on three main concepts: cardiac arrest, cognitive aid, and simulation. The search strategy combined subject headings and keyword terms, translated and adapted to the syntax of each database searched: MEDLINE (PubMed interface), Embase (OVID interface), the Cochrane Library and CINAHL. In addition, the United States clinical trials registry (ClinicalTrials.gov) and the Cochrane Central Register of Controlled Trials were searched for unpublished completed trial reports using the same search terms. The World Health Organization International Clinical Trials Registry Platform (ICTRP) could not be searched because of heavy traffic generated by the COVID-19 outbreak. The database searches were limited to publications from 1974, when the first resuscitation guidelines were published [44], to December 31, 2021. The detailed search strategies and the message displayed by the ICTRP portal are reported in the supplementary material (Supplementary files 1 and 2, respectively).

Study selection

We selected randomized controlled trials, non-randomized controlled trials, and observational studies with a comparison group that involved healthcare professionals (physicians, residents, medical students, nurses) managing simulated adult or paediatric IHCA scenarios with or without a cognitive aid, or with different types of cognitive aids.

The intervention of interest was the use of a cognitive aid, defined as the “presentation of prompts aimed to encourage recall of information to increase the likelihood of desired behaviours, decisions, and outcomes” [45]. Cognitive aids could be of any format, comprising paper-based types, such as pocket cards, pamphlets, checklists, posters, and laminated cards, and electronic mobile applications/software made available on different devices, such as mobile phones, smartphones, tablets, laptops, computers, and augmented reality glasses.

We included studies where the comparison(s) to the intervention were either: (i) no use of cognitive aids or (ii) the use of an alternative cognitive aid.

We excluded: (i) animal studies; (ii) incomplete/ongoing or unpublished studies (trial protocols, abstracts/posters published only in conference/congress proceedings); (iii) studies assessing cognitive tools that support a single resuscitation task [e.g. feedback devices to guide depth/rate of chest compressions (CC); electronic support to exclusively guide drug preparation/administration]; (iv) studies assessing cognitive aids used exclusively as training/educational tools; (v) studies on neonatal resuscitation; and (vi) studies exclusively about telemedicine that did not assess cognitive tools. No language or other restrictions were applied. Studies in languages other than English or Italian, if judged relevant after reading the abstract, were translated and evaluated.

Our primary outcomes were (i) overall team performance and adherence to guideline recommendations, measured by novel or validated scoring systems/tools/checklists assessing team performance in managing the CA scenario, or by pre-defined errors in actions (such as drug administration or defibrillation), delays, or failure to perform critical resuscitation actions; and (ii) time to perform critical resuscitation actions (i.e. initial clinical check for CA, start of CPR, start of ventilation, drug administration, defibrillation). The secondary outcomes of interest were: (i) CPR quality metrics (CC mean rate, mean depth, mean recoil, CC fraction/no-flow fraction or percentage of CPR compliant with guideline recommendations, and no-blow fraction/ventilation fraction); (ii) non-technical skills; (iii) usability of the cognitive aid; and (iv) users’ perceived workload.
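
To make the CPR quality metrics above more concrete, the following Python sketch computes a chest compression (CC) fraction and its complement, the no-flow fraction, from a list of compression intervals. It assumes, as a hypothetical convention not taken from the included studies, that the analysis window runs from arrest recognition (t = 0) to the end of the scenario and that compression intervals do not overlap; individual studies may define the window differently.

```python
from typing import List, Tuple

# (start_s, end_s) of one uninterrupted period of chest compressions
Interval = Tuple[float, float]


def cc_fraction(compressions: List[Interval], window_s: float) -> float:
    """Share of the analysis window during which compressions were delivered.

    Assumes non-overlapping intervals that lie within [0, window_s],
    where t = 0 is arrest recognition (hypothetical convention).
    """
    if window_s <= 0:
        raise ValueError("window_s must be positive")
    for start, end in compressions:
        if not 0 <= start <= end <= window_s:
            raise ValueError(f"interval ({start}, {end}) outside the window")
    on_chest_s = sum(end - start for start, end in compressions)
    return on_chest_s / window_s


def no_flow_fraction(compressions: List[Interval], window_s: float) -> float:
    """Complement of the CC fraction: share of the window without compressions."""
    return 1.0 - cc_fraction(compressions, window_s)


if __name__ == "__main__":
    # Hypothetical 300-s scenario: compressions start 10 s after recognition,
    # with two 10-s pauses (e.g. for rhythm checks).
    cycles = [(10.0, 120.0), (130.0, 240.0), (250.0, 300.0)]
    print(f"CC fraction: {cc_fraction(cycles, 300.0):.2f}")            # 0.90
    print(f"No-flow fraction: {no_flow_fraction(cycles, 300.0):.2f}")  # 0.10
```

Note that the delay before the first compression counts as no-flow time under this convention, which is one reason studies should state their window explicitly.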

Relevant studies were identified through a three-stage process. First, two independent reviewers (EF and MA) screened publication titles and abstracts. Second, to determine final inclusion, two additional independent reviewers (FC and GT) reviewed the full texts of the studies identified through screening. Any disagreement at either stage was resolved by a third independent reviewer (SB). We used the Covidence systematic review management software [46] for these selection processes. Finally, we hand-searched the reference lists of the included articles to ensure that key articles had not been overlooked.

Data collection process

Two authors (FC and EF) independently extracted information from the included articles into a standard report form prepared ad hoc for this study. The following information was extracted: study design, characteristics of the study population, type of scenario (paediatric or adult cardiac arrest; shockable/non-shockable rhythm), details of the intervention and control, and outcome measures. Study authors were contacted to obtain missing relevant data. Results are presented for available data only.

Quality assessment and risk of bias in individual studies

Two authors (FC and GT) independently assessed each included study using the Cochrane Risk of Bias (RoB 2.0) tool [47]. Any disagreements between the two authors were resolved by a third reviewer (SB). Because of the nature of the intervention, blinding of participants, personnel and outcome assessors was not possible. Consequently, the “blinding of participants and personnel” domain was excluded from the quality assessment, and the “blinding of outcome assessors” domain was replaced by a “blinding of statisticians” domain. In the “other sources of bias” domain, we assessed whether a protocol had been published or made available, its level of detail, and the concordance between the protocol and the methods/results of the study.

Statistical analysis

We performed a meta-analysis when at least two adult studies adopting the same type of intervention and comparator reported at least one measure of central tendency and one measure of dispersion for any of the outcomes of interest. Meta-analyses of adult studies were stratified by the type of cognitive aid assessed. Similarly, results of paediatric studies were combined in a meta-analysis when the studies were sufficiently similar in design, participants, type of intervention and comparator, and outcome measures.
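
The eligibility rule for pooling adult studies can be sketched as a simple filter. In this illustrative Python version, the `StudyResult` record, its field names and the example labels and values are hypothetical. Results are grouped by intervention, comparator and outcome, and a group is retained only if at least two distinct studies report both a measure of central tendency and a measure of dispersion. Counting distinct study identifiers, rather than rows, prevents a study that reports two scenarios separately from meeting the threshold on its own.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

GroupKey = Tuple[str, str, str]  # (intervention, comparator, outcome)


@dataclass
class StudyResult:
    """One reported result row (hypothetical structure for illustration)."""
    study_id: str
    intervention: str            # e.g. "electronic", "paper"
    comparator: str              # e.g. "none", "paper"
    outcome: str                 # e.g. "overall_performance"
    centre: Optional[float]      # mean or median; None if not reported
    dispersion: Optional[float]  # SD or IQR; None if not reported


def poolable_groups(results: List[StudyResult],
                    min_studies: int = 2) -> Dict[GroupKey, List[StudyResult]]:
    """Return comparison groups of adult studies that meet the pooling rule."""
    groups: Dict[GroupKey, List[StudyResult]] = defaultdict(list)
    for r in results:
        # Results lacking a central tendency or dispersion measure cannot be pooled.
        if r.centre is None or r.dispersion is None:
            continue
        groups[(r.intervention, r.comparator, r.outcome)].append(r)
    # Count distinct studies, so two scenarios from one study count only once.
    return {key: rows for key, rows in groups.items()
            if len({row.study_id for row in rows}) >= min_studies}


if __name__ == "__main__":
    # Hypothetical example values, not data from the included studies.
    rows = [
        StudyResult("A", "electronic", "none", "overall_performance", 70.0, 10.0),
        StudyResult("A", "electronic", "none", "overall_performance", 65.0, 12.0),
        StudyResult("B", "electronic", "none", "overall_performance", 72.0, 9.0),
        StudyResult("C", "paper", "none", "overall_performance", 60.0, None),
        StudyResult("D", "paper", "none", "overall_performance", 58.0, 11.0),
    ]
    for key, members in poolable_groups(rows).items():
        print(key, sorted({m.study_id for m in members}))
```

In this example only the electronic-versus-none comparison is poolable: study C lacks a dispersion measure, which leaves study D alone in the paper-versus-none group.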

We planned to summarize dichotomous outcomes as risk ratios (RR) with 95% CI. Standardized mean differences (SMD) with 95% CI were used for studies reporting continuous outcomes. Meta-analyses were performed using a random-effects model because of the high heterogeneity of the studies, and the I2 statistic was used to assess between-study heterogeneity. Owing to the limited number of studies that could be meta-analysed, only one subgroup analysis, by type of cognitive aid, was undertaken. All analyses were conducted using R (version 4.0.2).
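
As an illustration of the pooling described above, the sketch below computes a bias-corrected SMD (Hedges' g) for each study and combines the estimates with a random-effects model, reporting the I2 statistic. The review does not state which R package or between-study variance estimator was used; here we assume the DerSimonian–Laird estimator and a normal-approximation 95% CI, so results may differ slightly from those produced with other estimators (e.g. REML). The example inputs are hypothetical.

```python
import math
from typing import Sequence, Tuple


def hedges_g(m1: float, sd1: float, n1: int,
             m2: float, sd2: float, n2: int) -> Tuple[float, float]:
    """Bias-corrected SMD (Hedges' g) and its variance for two independent groups."""
    df = n1 + n2 - 2
    sd_pooled = math.sqrt(((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df)
    d = (m1 - m2) / sd_pooled              # Cohen's d
    j = 1 - 3 / (4 * df - 1)               # small-sample correction factor
    var_d = (n1 + n2) / (n1 * n2) + d ** 2 / (2 * (n1 + n2))
    return j * d, j ** 2 * var_d


def random_effects_dl(effects: Sequence[float], variances: Sequence[float]):
    """DerSimonian-Laird random-effects pooling.

    Returns (pooled, ci_low, ci_high, tau2, i2_percent).
    """
    k = len(effects)
    if k < 2:
        raise ValueError("need at least two effect estimates")
    w = [1 / v for v in variances]                     # inverse-variance weights
    sum_w = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum_w
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))  # Cochran's Q
    df = k - 1
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    tau2 = max(0.0, (q - df) / c)                      # between-study variance
    w_re = [1 / (v + tau2) for v in variances]         # random-effects weights
    pooled = sum(wi * yi for wi, yi in zip(w_re, effects)) / sum(w_re)
    se = math.sqrt(1 / sum(w_re))
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    return pooled, pooled - 1.96 * se, pooled + 1.96 * se, tau2, i2


if __name__ == "__main__":
    # Hypothetical (mean, SD, n) per arm; not data from the included studies.
    arms = [((78, 10, 12), (65, 12, 12)),
            ((70, 8, 20), (66, 9, 20)),
            ((85, 6, 10), (70, 10, 10))]
    gs, vs = zip(*(hedges_g(*a, *b) for a, b in arms))
    est, lo, hi, tau2, i2 = random_effects_dl(gs, vs)
    print(f"SMD {est:.2f} (95% CI {lo:.2f}; {hi:.2f}), I2 = {i2:.0f}%")
```

When the study estimates agree, Q falls below its degrees of freedom, tau2 is truncated at zero and the model reduces to a fixed-effect pooling; larger I2 values, such as those reported in the Results, widen the random-effects CI.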

Results

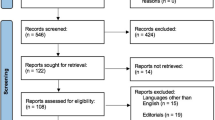

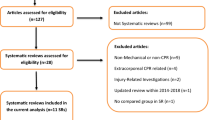

Of the 4224 screened studies, 16 met our inclusion criteria: 14 adult CA studies [32,33,34,35,36, 48,49,50,51,52,53,54,55,56] (for a total of 688 scenarios) and two paediatric CA studies [30, 31] (for a total of 46 scenarios). The PRISMA flow chart for the study selection process is shown in Fig. 1.

Adult studies

Study characteristics

The characteristics of the 14 included adult studies are shown in Table 1.

The articles were published between 1995 and 2021, with nine (64%) published in the past three years [33, 34, 48, 49, 52,53,54,55,56]. Thirteen studies were RCTs: seven with a crossover design [35, 36, 49, 51, 54,55,56], five with a two-arm parallel design [32, 34, 48, 50, 53], and one with a three-arm parallel design [33]. Only one study was a non-randomized controlled crossover trial [52].

The type of cardiac rhythm evaluated in the 14 studies was: (i) mixed (both shockable and non-shockable rhythms) (n = 6) [34,35,36, 52, 54, 55]; (ii) shockable rhythm [ventricular fibrillation (VF)/pulseless ventricular tachycardia (pVT)] (n = 6) [33, 48, 50, 51, 53, 56]; (iii) pulseless electrical activity (PEA) (n = 1) [49]; and (iv) PEA that changed into VF (n = 1) [32].

Teams included medical residents in eight studies [32, 33, 35, 50, 51, 53,54,55], registered nurses in six [34, 35, 48, 52, 54, 56], attending physicians in six [34, 35, 48, 49, 54, 56], nursing students in one [52] and medical students in another [36].

Ten studies evaluated the effectiveness of interactive digital cognitive tools installed on an electronic device: four smartphone apps [32, 33, 51, 55], a tablet app [48], an electronic decision support system controlled by a handheld tablet device [52], a dynamic electronic cognitive aid on a large screen display [53], an interactive web-based application intended to be viewed by the entire team on a large screen [54], a computer-based prompting device [50], and a Decision Support Tool on an iPod Touch [36]. Four studies [34, 35, 49, 56] evaluated paper-based cognitive tools. Two studies used paper-based cognitive aids as a control group [33, 53], including the three-arm RCT [33] that compared a smartphone app with both a paper-based cognitive aid and no support.

Overall, of the 688 IHCA scenarios included in the selected studies, 235 involved the use of an electronic support, 135 a paper-based support and 318 no support. The number of scenarios per arm ranged from eight [51, 52] to 53 [55].

Quality assessment

The summary assessment of risk of bias of the included RCTs is displayed in Table 2. The only non-randomized controlled trial [52] was not evaluated, as it is inherently of lower quality than RCTs. All but two studies were at high risk of bias in one or more domains, with the “other sources of bias” domain most frequently classified as high risk. No study was at low risk of bias: the two studies not classified as high risk both had an unclear risk of bias in one or two domains.
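
The overall classification used above behaves like a worst-domain rule: a study is rated high risk if any domain is high, and cannot be rated low risk if any domain is unclear. A minimal Python sketch of that rule follows. The domain names in the example are hypothetical, and the official RoB 2 algorithm (which uses “some concerns” rather than “unclear”) includes additional judgement steps that are not modelled here.

```python
from typing import Dict

# Severity order for domain-level judgements as used in this review.
SEVERITY = {"low": 0, "unclear": 1, "high": 2}


def overall_risk(domains: Dict[str, str]) -> str:
    """Worst-domain rule: the overall rating equals the most severe domain rating."""
    if not domains:
        raise ValueError("at least one domain judgement is required")
    unknown = set(domains.values()) - set(SEVERITY)
    if unknown:
        raise ValueError(f"unexpected ratings: {sorted(unknown)}")
    return max(domains.values(), key=SEVERITY.__getitem__)


if __name__ == "__main__":
    # Hypothetical study: no high-risk domain, but one unclear domain.
    study = {
        "random_sequence_generation": "low",
        "allocation_concealment": "unclear",
        "blinding_of_statisticians": "low",
        "other_sources_of_bias": "low",
    }
    print(overall_risk(study))  # unclear
```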

Synthesis of results and meta-analyses

The results of our review for the primary and secondary outcomes assessed by the included studies are presented in the supplementary material (Supplementary files 3 and 4).

Eleven studies evaluated teams’ overall CA management. Three of them [35, 36, 54] were excluded from the meta-analyses because they did not report a measure of central tendency (mean or median) and/or of dispersion (standard deviation or interquartile range). The meta-analyses of the remaining eight studies [32,33,34, 48, 49, 51, 53, 55] showed that overall performance was significantly better in groups using an electronic support tool than in groups without any support (Fig. 2a; SMD 1.00, 95% CI 0.43; 1.56, I2 = 74%), in groups using a paper-based cognitive aid than in groups without support (Fig. 2a; SMD 1.54, 95% CI 0.28; 2.80, I2 = 86%), and in groups using either an electronic or a paper-based support tool than in groups without support (Fig. 2a; SMD 1.16, 95% CI 0.64; 1.69, I2 = 79%). Overall performance was also significantly better in groups using an electronic cognitive aid than in groups using a paper-based cognitive aid (Fig. 2b; SMD 1.87, 95% CI 1.31; 2.44, I2 = 0%). The studies by Koers [34] and Urman [49] appear twice in Fig. 2 because they analysed and reported the results of two different CA scenarios separately; each scenario was therefore treated as an independent unit of analysis. In addition, the study by Donzé et al. [33] is included in two meta-analyses (Figs. 2a, b) because it is a three-arm parallel study that compared both electronic and paper-based cognitive aids with no cognitive aid, as well as electronic with paper-based cognitive aids. Funnel plots for each meta-analysis are reported in the supplementary material (Supplementary file 5).

Notably, among all studies evaluating performance, only two [32, 36] used a previously validated tool (Supplementary file 3). Eight studies [36, 48, 50, 52,53,54,55,56] evaluated deviations from published guidelines, assessing approximately 50 different deviations, mostly omissions of critical actions (Supplementary file 4). Overall, fewer deviations were detected in the intervention groups. In particular, two studies [52, 53] evaluated the impact of electronic cognitive aids on failure to defibrillate and failure to administer adrenaline, and reported a significant reduction in these errors in the intervention groups using the electronic tool (Supplementary file 4).

Times to critical actions were analysed in three studies [36, 48, 54]. Two studies [36, 48] assessed the time to first defibrillation: Field et al. [36] found no statistically significant difference between groups, whereas Grundgeiger et al. [48] reported a shorter time in the intervention group but did not perform a statistical comparison for this item (Supplementary file 3). Crabb et al. [54] analysed time differences from the guideline-recommended intervals for rhythm checks (every 2 min), defibrillation (every 2 min) and adrenaline administration (every 3 min). Only the rhythm check time difference and the aggregate recommended-intervention time difference were significantly lower in the intervention group (Supplementary file 3). Grundgeiger et al. [48] analysed time differences from the guideline-recommended intervals for chest compressor changes and rhythm checks and found shorter times to achieve these actions in the intervention group, but the analysis was only descriptive and statistical significance was not reported.
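
Deviation from guideline-recommended intervals, as analysed by Crabb et al. [54], can be illustrated with a short sketch. For illustration only, we assume that the deviation is the absolute difference between each observed interval separating consecutive actions and the recommended interval (120 s for rhythm checks and defibrillation, 180 s for adrenaline, as stated above), averaged over a scenario. The original study's exact definition may differ, and the action labels and example times are hypothetical.

```python
from statistics import mean
from typing import List, Sequence

# Recommended intervals as stated in the text (seconds).
RECOMMENDED_INTERVAL_S = {
    "rhythm_check": 120.0,
    "defibrillation": 120.0,
    "adrenaline": 180.0,
}


def interval_deviations(times_s: Sequence[float], recommended_s: float) -> List[float]:
    """Absolute deviation of each interval between consecutive actions from the target."""
    ordered = sorted(times_s)
    return [abs((later - earlier) - recommended_s)
            for earlier, later in zip(ordered, ordered[1:])]


def mean_interval_deviation(times_s: Sequence[float], recommended_s: float) -> float:
    """Mean absolute deviation from the recommended interval over one scenario."""
    devs = interval_deviations(times_s, recommended_s)
    if not devs:
        raise ValueError("at least two actions are needed to form an interval")
    return mean(devs)


if __name__ == "__main__":
    # Hypothetical rhythm checks at these times after arrest recognition (s).
    checks = [0.0, 125.0, 250.0, 360.0]
    dev = mean_interval_deviation(checks, RECOMMENDED_INTERVAL_S["rhythm_check"])
    print(f"Mean deviation from 2-min rhythm checks: {dev:.1f} s")  # 6.7 s
```

Using absolute values treats early and late actions as equally undesirable; a study interested specifically in delays might use signed differences instead.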

Of the only two studies assessing CPR quality [48, 55], Hejjaji et al. [55] reported that the use of a smartphone app (Redivus Code Blue) resulted in a marginally, yet statistically significantly, higher CC fraction, whereas Grundgeiger et al. [48], who used a different tablet app, did not perform a statistical comparison between the intervention and control groups for CPR metrics (Supplementary file 3).

The non-technical skills of the teams were assessed in three studies [33, 51, 53], all of which used validated scores (Supplementary file 3). The two studies evaluating the same smartphone app (MAX) [33, 51] found a significant benefit of the app in improving teams’ non-technical skills. However, one study [41] did not report scores by scenario, but only a combined overall score across all five scenario types, only one of which was a CA case.

Only one study [48] assessed the workload perceived by participants, showing statistically significantly lower mental demand, physical demand and effort to achieve their level of performance among team leaders in the intervention group compared with the control group.

Eight studies [32, 34, 35, 49, 50, 54,55,56] evaluated the usability of the cognitive aid. The cognitive supports, both electronic and paper-based, were rated as 'easy to use' in all three studies that evaluated this aspect [32, 34, 35]. Additionally, all five studies that asked about future use [32, 34, 35, 54, 56] reported that most health professionals would want to use the cognitive support tool in future real emergencies. Furthermore, most participants in the three studies evaluating this aspect reported that they would want health professionals to use the cognitive support tool if they themselves were victims of a CA [34, 54, 56].

Potential harms from the use of the cognitive aids under evaluation were also investigated. One study [53] found more interruptions during compressions in the intervention group, while two studies [52, 55] highlighted that the cognitive aid could be a source of distraction. Low et al. [32] reported that half of the participants feared being perceived as unprofessional by the patient or other health professionals, while Jones et al. [52] reported possible adverse effects of using an electronic decision system, such as unsafe shock delivery and delays in drug administration.

Paediatric studies

Study characteristics

The characteristics of the two included paediatric studies [30, 31] are reported in Table 1. Both were recent RCTs conducted by the same research team and evaluated pVT scenarios managed by paediatric residents. Both tested an app-based cognitive support tool, one for tablets and one for augmented reality glasses (Google Glass), against a paper-based cognitive aid (PALS pocket reference cards).

Quality assessment

Overall, the quality assessment showed a low risk of bias for most domains, except for the "statistician blinding" domain, which was not reported in either study, and the "other risk of bias" domain, which was rated as high for both studies because the study protocols had not been published (Table 2).

Synthesis of results and meta-analyses

Statistically significant improvements in the intervention groups compared with the control groups were detected only for time to amiodarone administration (SMD −0.78, 95% CI −1.39; −0.18, I2 = 0%) (Fig. 3). Non-significant improvements in teams using the electronic cognitive aid compared with teams using the paper-based cognitive aid were found for time to defibrillation and to start of CC, rate of errors in defibrillation attempts, and rate of teams that followed the correct sequence of actions, while no difference was found in time to administer epinephrine (Fig. 3, Supplementary file 6).

Stress perceived by participants after the scenario was significantly lower in the groups using the electronic cognitive tools than in the control groups (Supplementary file 6; SMD −0.72; 95% CI −1.32; −0.11; I2 = 0%).

The results of the additional outcome measures obtained in each of the two paediatric studies that could not be combined in meta-analyses are reported in Supplementary file 7. Neither study assessed the quality of CPR, the participants’ non-technical skills, or the usability of the cognitive aid.

Discussion

Our findings show that the use of cognitive aids appears to reduce deviations from guideline recommendations and improve overall resuscitation performance in the management of simulated IHCA scenarios. While the use of either a digital or a paper-based cognitive aid is associated with an improvement in the assessed outcomes, the small number and the heterogeneity of the included studies, with respect to the outcomes assessed, the intervention and the comparator, substantially limited our ability to pool their results in a robust meta-analysis. Studies assessing the effectiveness of cognitive aids for the management of IHCA in the simulation setting remain scant, especially in paediatrics, and comparisons between different types of cognitive aids are limited. Only one of the 16 included studies compared a digital aid versus a paper aid versus no cognitive aid, while only three studies (two paediatric and one adult) compared a digital with a paper-based cognitive aid. Although the digital tools proved superior to the paper cognitive aids in all these studies, the evidence is not sufficient to recommend digital over paper-based cognitive aids, especially given the heterogeneity between studies and the limitations of individual studies.

Although the past five years have seen growing interest in this field, with most of the studies published in this period, the literature is not yet on par with that on cognitive aids for the management of OHCA, for which several studies and systematic reviews have already been published [39, 41, 57,58,59,60]. Notably, a main difference between the cognitive tools used in OHCA and IHCA is that cognitive aids for OHCA are mostly designed to help lay rescuers assist a victim of CA and focus primarily on calling for help and on the quality of CPR [39, 41, 58,59,60]. Findings therefore cannot be generalized across the two settings. The relative lack of studies investigating cognitive support aids for IHCA is also underlined by a recent ILCOR systematic review of cognitive aid use during resuscitation [42], which found no study assessing the management of real-life IHCA by healthcare professionals except for one article [61]. This article was, however, excluded from the current review because the cognitive aid examined did not support the overall management of CA, but only single aspects of resuscitation (i.e. role clarification and team coordination).

To the best of our knowledge, this review is the first to systematically evaluate the effectiveness of cognitive aids in helping healthcare professionals optimize the management of simulated adult or paediatric IHCA. Our study shows that cognitive aids improved overall performance and adherence to guidelines, especially through fewer omissions of actions, such as failure to defibrillate and failure to administer adrenaline in adult CA, and through fewer errors (mainly related to defibrillation, although the difference was not statistically significant) in paediatric CA. These findings are clinically relevant because a greater number of deviations from guidelines has previously been associated with a lower probability of return of spontaneous circulation (ROSC) and survival to hospital discharge [62, 63].

Times to critical actions also tended to be shorter in the intervention groups. A reduction in time to interventions is also clinically relevant, as several studies report that shorter times to interventions are associated with better clinical outcomes [16, 22, 64,65,66,67]. Moreover, in one study that evaluated CPR quality, the compression fraction was higher with the use of the cognitive aid; this is an essential component of resuscitation, as it may independently predict higher survival [68, 69].

The non-technical skills of the intervention teams were rated higher than those of the control teams. Improved non-technical skills may lead to better overall resuscitation performance and patient outcomes [70, 71].

Cognitive aids assessed for usability received positive reviews. However, concerns were raised about the possible risk of distraction, reduced situational awareness and the perception of unprofessionalism. For example, the use of the cognitive aid was associated with more interruptions during CPR in one study [53], which could in turn reduce victim survival in real-life scenarios [72]. Moreover, cognitive support tools could increase users’ cognitive load. Notably, in the current review, perceived workload was significantly lower in the app groups. A reduction in workload can improve the performance of the resuscitation team [73]. Possible adverse effects must always be considered when refining cognitive tools and optimizing users’ interaction with them, to avoid a negative influence on clinical outcomes.

The quality assessment of the included studies showed a very heterogeneous risk of bias. The paediatric studies were rated favourably overall; their risk of bias was linked to the absence of published protocols and to unreported blinding of the statistician. Adult studies showed a medium–high risk of bias, linked not only to the lack of reporting on statistician blinding and allocation concealment, but also to the absence of published protocols. Only two studies [35, 56], both evaluating paper cognitive aids, were not classified as high risk, but both had an unclear risk of bias in one or two domains. Furthermore, the number of scenarios per study arm was small in most studies.

Finally, a limitation of this systematic review is that it includes only studies conducted in the simulation setting. We focussed on simulation studies because, according to a recent ILCOR systematic review [42], no study had evaluated the impact of cognitive aids on the management of real-life CA at the time of that review. Additionally, because we limited our search to healthcare databases, relevant literature from the fields of usability, human factors design or human-computer interaction may have been missed. However, our highly sensitive search strategy is likely to have identified most relevant studies, limiting the number that might have been missed. Furthermore, we included crossover RCTs in the meta-analyses as if they were parallel-group RCTs, and this approach might have affected our results, given the learning effect associated with crossover designs. The three adult crossover RCTs [49, 51, 55] included in the meta-analyses found non-significant or only mild improvements in the groups using a cognitive aid, which may have led to an underestimation of the benefit of cognitive support tools. Lastly, we had to exclude ten potentially relevant records (0.24% of excluded studies) because we could not obtain complete information on their methods and results by contacting the authors.

Considering the findings of this systematic review, future studies should follow the simulation-based research extensions of the CONSORT and STROBE Statements [74] to ensure a rigorous methodology and comparable results. Given the high heterogeneity of the included studies, especially in terms of outcome measures, we also suggest that future studies use the same validated tools to assess their outcomes (e.g. team performance, non-technical skills, workload). For time to critical interventions, focusing on clinically relevant resuscitation actions and using clearly defined timeframes (e.g. from the recognition of cardiac arrest to the specific action analysed) will allow correct interpretation of results, comparisons between studies, and robust meta-analyses in the future.
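
A clearly defined timeframe of the kind recommended above can be expressed directly in code. The sketch below assumes a hypothetical timestamped event log in which time zero is the recognition of cardiac arrest; the event labels (`ca_recognised`, `cc_start`, and so on) are illustrative, not a published standard. Actions never performed are returned as `None`, so omissions stay distinct from delays rather than being silently dropped.

```python
from typing import Dict, List, Optional, Sequence, Tuple

Event = Tuple[float, str]  # (seconds since scenario start, event label)


def time_to_actions(log: List[Event], actions: Sequence[str],
                    anchor: str = "ca_recognised") -> Dict[str, Optional[float]]:
    """Time from the anchor event to the first occurrence of each action.

    Returns None for actions never performed after the anchor (omissions).
    """
    anchor_times = [t for t, label in log if label == anchor]
    if not anchor_times:
        raise ValueError(f"no '{anchor}' event in the log")
    t0 = min(anchor_times)
    result: Dict[str, Optional[float]] = {}
    for action in actions:
        times = [t for t, label in log if label == action and t >= t0]
        result[action] = min(times) - t0 if times else None
    return result


if __name__ == "__main__":
    # Hypothetical log from one simulated scenario.
    log = [(5.0, "ca_recognised"), (12.0, "cc_start"),
           (95.0, "defibrillation"), (215.0, "defibrillation")]
    print(time_to_actions(log, ["cc_start", "defibrillation", "adrenaline"]))
    # {'cc_start': 7.0, 'defibrillation': 90.0, 'adrenaline': None}
```

Anchoring every measurement to the same event makes times comparable across teams and studies, whatever clock the simulator uses.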

Furthermore, as already pointed out by Marshall et al. [75], most studies on cognitive aids focus on content rather than on product design, presentation and usability. A review by Metelman et al. [40] showed that most of the apps available in online stores for the management of OHCA have not been tested for content, effectiveness and usability. Since cognitive aids should be developed around users’ needs so that they are easy to use and intuitive to interact with, as reported in some promising experiences [76,77,78], we strongly encourage future studies to assess the usability of cognitive aids for IHCA management with validated and reliable tools, such as the System Usability Scale [79].
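
For reference, the standard System Usability Scale scoring can be sketched in a few lines. Each of the ten items is answered on a 1–5 scale. Odd-numbered (positively worded) items contribute the response minus 1, even-numbered (negatively worded) items contribute 5 minus the response, and the sum is multiplied by 2.5 to give a score from 0 to 100. The function name and the example responses are our own; scores are computed per respondent and can then be averaged across participants.

```python
from statistics import mean
from typing import Sequence


def sus_score(responses: Sequence[int]) -> float:
    """System Usability Scale score (0-100) for one respondent.

    responses: the ten item ratings, in questionnaire order, each from 1 to 5.
    """
    if len(responses) != 10:
        raise ValueError("the SUS has exactly 10 items")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")
    total = 0
    for item, r in enumerate(responses, start=1):
        # Odd items are positively worded, even items negatively worded.
        total += (r - 1) if item % 2 == 1 else (5 - r)
    return total * 2.5


if __name__ == "__main__":
    # Hypothetical responses from two participants.
    participants = [
        [5, 1, 5, 1, 5, 1, 5, 1, 5, 1],  # best possible score
        [4, 2, 4, 2, 4, 2, 4, 2, 4, 2],
    ]
    scores = [sus_score(p) for p in participants]
    print(scores, mean(scores))  # [100.0, 75.0] 87.5
```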

Conclusions

Although published studies are scant and heterogeneous, the use of cognitive aids appears promising in reducing deviations from guideline recommendations in the management of simulated adult IHCA scenarios, with a potential positive impact on clinical practice. For paediatric IHCA, the small number of studies and scenarios, and the absence of a control group using no cognitive aid, do not allow conclusions for or against the use of cognitive aids. Further studies using a rigorous methodology and comparable outcome measures will help provide a definitive answer on the effectiveness of cognitive aids in improving health professionals’ management of IHCA.

Data availability

All data generated or analysed during this study are included in this published article (and its supplementary information files).

Code availability

Not applicable.

References

Atkins DL, Everson-Stewart S, Sears GK, Daya M, Osmond MH, Warden CR, Berg RA; Resuscitation Outcomes Consortium Investigators (2009) Epidemiology and outcomes from out-of-hospital cardiac arrest in children: the Resuscitation Outcomes Consortium Epistry-Cardiac Arrest. Circulation 119:1484–91. https://doi.org/10.1161/CIRCULATIONAHA.108.802678

Brady WJ, Mattu A, Slovis CM (2019) Lay responder care for an adult with out-of-hospital cardiac arrest. N Engl J Med 381:2242–2251. https://doi.org/10.1056/NEJMra1802529

Andersen LW, Holmberg MJ, Berg KM, Donnino MW, Granfeldt A (2019) In-hospital cardiac arrest: a review. JAMA 321:1200–1210. https://doi.org/10.1001/jama.2019.1696

Donoghue AJ, Nadkarni V, Berg RA, Osmond MH, Wells G, Nesbitt L, Stiell IG; CPCA Investigators (2005) Out-of-hospital pediatric cardiac arrest: an epidemiologic review and assessment of current knowledge. Ann Emerg Med 46:512–522. https://doi.org/10.1016/j.annemergmed.2005.05.028

Nehme Z, Namachivayam S, Forrest A, Butt W, Bernard S, Smith K (2018) Trends in the incidence and outcome of paediatric out-of-hospital cardiac arrest: A 17-year observational study. Resuscitation 128:43–50. https://doi.org/10.1016/j.resuscitation.2018.04.030

Topjian AA, Raymond T, Atkins D, Chan M, Duff JP, Joyner BL Jr, Lasa JJ, Lavonas EJ, Levy A, Mahgoub M, Meckler GD, Roberts KE, Sutton RM, Schexnayder SM; Pediatric Basic and Advanced Life Support Collaborators (2020) Part 4: Pediatric Basic and Advanced Life Support: 2020 American Heart Association guidelines for cardiopulmonary resuscitation and emergency cardiovascular care. Circulation 142(16_suppl_2):S469–523. https://doi.org/10.1161/CIR.0000000000000901

Nadkarni VM, Larkin GL, Peberdy MA, Carey SM, Kaye W, Mancini ME, Nichol G, Lane-Truitt T, Potts J, Ornato JP, Berg RA; National Registry of Cardiopulmonary Resuscitation Investigators (2006) First documented rhythm and clinical outcome from in-hospital cardiac arrest among children and adults. JAMA 295:50–57. https://doi.org/10.1001/jama.295.1.50

Girotra S, Spertus JA, Li Y, Berg RA, Nadkarni VM, Chan PS; American Heart Association Get With the Guidelines–Resuscitation Investigators (2013) Survival trends in pediatric in-hospital cardiac arrests: an analysis from get with the guidelines-resuscitation. Circ Cardiovasc Qual Outcomes 6(1):42-9. https://doi.org/10.1161/CIRCOUTCOMES.112.967968

Panchal AR, Bartos JA, Cabañas JG, Donnino MW, Drennan IR, Hirsch KG, Kudenchuk PJ, Kurz MC, Lavonas EJ, Morley PT, O'Neil BJ, Peberdy MA, Rittenberger JC, Rodriguez AJ, Sawyer KN, Berg KM; Adult Basic and Advanced Life Support Writing Group (2020) Part 3: Adult Basic and Advanced Life Support: 2020 American Heart Association guidelines for cardiopulmonary resuscitation and emergency cardiovascular care. Circulation 142(16_suppl_2):S366–468. https://doi.org/10.1161/CIR.0000000000000916

Soar J, Böttiger BW, Carli P, Couper K, Deakin CD, Djärv T, Lott C, Olasveengen T, Paal P, Pellis T, Perkins GD, Sandroni C, Nolan JP (2021) European resuscitation council guidelines 2021: adult advanced life support. Resuscitation 161:115–151. https://doi.org/10.1016/j.resuscitation.2021.02.010

Van de Voorde P, Turner NM, Djakow J, de Lucas N, Martinez-Mejias A, Biarent D, Bingham R, Brissaud O, Hoffmann F, Johannesdottir GB, Lauritsen T, Maconochie I (2021) European resuscitation council guidelines 2021: paediatric life support. Resuscitation 161:327–387. https://doi.org/10.1016/j.resuscitation.2021.02.015

Cheng A, Hunt EA, Grant D, Lin Y, Grant V, Duff JP, White ML, Peterson DT, Zhong J, Gottesman R, Sudikoff S, Doan Q, Nadkarni VM, Brown L, Overly F, Bank I, Bhanji F, Kessler D, Tofil N, Davidson J, Adler M, Bragg A, Marohn K, Robertson N, Duval-Arnould J, Wong H, Donoghue A, Chatfield J, Chime N; International Network for Simulation-based Pediatric Innovation, Research, and Education CPR Investigators (2015) Variability in quality of chest compressions provided during simulated cardiac arrest across nine pediatric institutions. Resuscitation 97:13–9. https://doi.org/10.1016/j.resuscitation.2015.08.024

Hunt EA, Vera K, Diener-West M, Haggerty JA, Nelson KL, Shaffner DH, Pronovost PJ (2009) Delays and errors in cardiopulmonary resuscitation and defibrillation by pediatric residents during simulated cardiopulmonary arrests. Resuscitation 80:819–825. https://doi.org/10.1016/j.resuscitation.2009.03.020

Hunt EA, Walker AR, Shaffner DH, Miller MR, Pronovost PJ (2008) Simulation of in-hospital pediatric medical emergencies and cardiopulmonary arrests: highlighting the importance of the first 5 minutes. Pediatrics 121:e34-43. https://doi.org/10.1542/peds.2007-0029

Labrosse M, Levy A, Donoghue A, Gravel J (2015) Delays and errors among pediatric residents during simulated resuscitation scenarios using Pediatric Advanced Life Support (PALS) algorithms. Am J Emerg Med 33:1516–1518. https://doi.org/10.1016/j.ajem.2015.07.049

Khera R, Chan PS, Donnino M, Girotra S; American Heart Association’s Get With The Guidelines-Resuscitation Investigators (2016) Hospital variation in time to epinephrine for nonshockable in-hospital cardiac arrest. Circulation 134:2105–14. https://doi.org/10.1161/CIRCULATIONAHA.116.025459

Meaney PA, Bobrow BJ, Mancini ME, Christenson J, de Caen AR, Bhanji F, Abella BS, Kleinman ME, Edelson DP, Berg RA, Aufderheide TP, Menon V, Leary M; CPR Quality Summit Investigators, the American Heart Association Emergency Cardiovascular Care Committee, and the Council on Cardiopulmonary, Critical Care, Perioperative and Resuscitation (2013) Cardiopulmonary resuscitation quality: improving cardiac resuscitation outcomes both inside and outside the hospital. Circulation 128:417–35. https://doi.org/10.1161/CIR.0b013e31829d8654

Sutton RM, Case E, Brown SP, Atkins DL, Nadkarni VM, Kaltman J, Callaway C, Idris A, Nichol G, Hutchison J, Drennan IR, Austin M, Daya M, Cheskes S, Nuttall J, Herren H, Christenson J, Andrusiek D, Vaillancourt C, Menegazzi JJ, Rea TD, Berg RA; ROC Investigators (2015) A quantitative analysis of out-of-hospital pediatric and adolescent resuscitation quality: a report from the ROC epistry-cardiac arrest. Resuscitation 93:150–157. https://doi.org/10.1016/j.resuscitation.2015.04.010

Niles DE, Duval-Arnould J, Skellett S, Knight L, Su F, Raymond TT, Sweberg T, Sen AI, Atkins DL, Friess SH, de Caen AR, Kurosawa H, Sutton RM, Wolfe H, Berg RA, Silver A, Hunt EA, Nadkarni VM; pediatric Resuscitation Quality (pediRES-Q) Collaborative Investigators (2018) Characterization of pediatric in-hospital cardiopulmonary resuscitation quality metrics across an international resuscitation collaborative. Pediatr Crit Care Med 19:421–32. https://doi.org/10.1097/PCC.0000000000001520

Abella BS, Sandbo N, Vassilatos P, Alvarado JP, O’Hearn N, Wigder HN, Hoffman P, Tynus K, Vanden Hoek TL, Becker LB (2005) Chest compression rates during cardiopulmonary resuscitation are suboptimal: a prospective study during in-hospital cardiac arrest. Circulation 111(4):428–434. https://doi.org/10.1161/01.CIR.0000153811.84257.59

Sutton RM, Niles D, French B, Maltese MR, Leffelman J, Eilevstjønn J, Wolfe H, Nishisaki A, Meaney PA, Berg RA, Nadkarni VM (2014) First quantitative analysis of cardiopulmonary resuscitation quality during in-hospital cardiac arrests of young children. Resuscitation 85(1):70–74. https://doi.org/10.1016/j.resuscitation.2013.08.014

Chan PS, Krumholz HM, Nichol G, Nallamothu BK; American Heart Association National Registry of Cardiopulmonary Resuscitation Investigators (2008) Delayed time to defibrillation after in-hospital cardiac arrest. N Engl J Med 358(1):9-17. https://doi.org/10.1056/NEJMoa0706467

Wolfe HA, Morgan RW, Zhang B, Topjian AA, Fink EL, Berg RA, Nadkarni VM, Nishisaki A, Mensinger J, Sutton RM; American Heart Association’s Get With the Guidelines-Resuscitation Investigators (2020) Deviations from AHA guidelines during pediatric cardiopulmonary resuscitation are associated with decreased event survival. Resuscitation 149:89–99. https://doi.org/10.1016/j.resuscitation.2020.01.035

Cheng A, Nadkarni VM, Mancini MB, Hunt EA, Sinz EH, Merchant RM, Donoghue A, Duff JP, Eppich W, Auerbach M, Bigham BL, Blewer AL, Chan PS, Bhanji F; American Heart Association Education Science Investigators; and on behalf of the American Heart Association Education Science and Programs Committee, Council on Cardiopulmonary, Critical Care, Perioperative and Resuscitation; Council on Cardiovascular and Stroke Nursing; and Council on Quality of Care and Outcomes Research (2018) Resuscitation Education Science: Educational Strategies to Improve Outcomes From Cardiac Arrest: A Scientific Statement From the American Heart Association. Circulation 138:e82–122. https://doi.org/10.1161/CIR.0000000000000583

Cheng A, Magid DJ, Auerbach M, Bhanji F, Bigham BL, Blewer AL, Dainty KN, Diederich E, Lin Y, Leary M, Mahgoub M, Mancini ME, Navarro K, Donoghue A (2020) Part 6: Resuscitation Education Science: 2020 American Heart association guidelines for cardiopulmonary resuscitation and emergency cardiovascular care. Circulation 142:S551–S579. https://doi.org/10.1161/CIR.0000000000000903

Greif R, Bhanji F, Bigham BL, Bray J, Breckwoldt J, Cheng A, Duff JP, Gilfoyle E, Hsieh MJ, Iwami T, Lauridsen KG, Lockey AS, Ma MH, Monsieurs KG, Okamoto D, Pellegrino JL, Yeung J, Finn JC; Education, Implementation, and Teams Collaborators (2020) Education, implementation, and teams: 2020 international consensus on cardiopulmonary resuscitation and emergency cardiovascular care science with treatment recommendations. Circulation 142(16_suppl_1):S222–83. https://doi.org/10.1161/CIR.0000000000000896

Wang SA, Su CP, Fan HY, Hou WH, Chen YC (2020) Effects of real-time feedback on cardiopulmonary resuscitation quality on outcomes in adult patients with cardiac arrest: a systematic review and meta-analysis. Resuscitation 155:82–90. https://doi.org/10.1016/j.resuscitation.2020.07.024

Lin CY, Hsia SH, Lee EP, Chan OW, Lin JJ, Wu HP (2020) Effect of audiovisual cardiopulmonary resuscitation feedback device on improving chest compression quality. Sci Rep 10:398. https://doi.org/10.1038/s41598-019-57320-y

Lakomek F, Lukas RP, Brinkrolf P, Mennewisch A, Steinsiek N, Gutendorf P, Sudowe H, Heller M, Kwiecien R, Zarbock A, Bohn A (2020) Real-time feedback improves chest compression quality in out-of-hospital cardiac arrest: a prospective cohort study. PLoS ONE 15:e0229431. https://doi.org/10.1371/journal.pone.0229431

Siebert JN, Ehrler F, Gervaix A, Haddad K, Lacroix L, Schrurs P, Sahin A, Lovis C, Manzano S (2017) Adherence to AHA guidelines when adapted for augmented reality glasses for assisted pediatric cardiopulmonary resuscitation: a randomized controlled trial. J Med Internet Res 19:e183. https://doi.org/10.2196/jmir.7379

Siebert JN, Lacroix L, Cantais A, Manzano S, Ehrler F (2020) Assessing the impact of a mobile device tablet app to increase adherence to american heart association guidelines during simulated pediatric cardiopulmonary resuscitation: randomized controlled trial. J Med Internet Res 22(5):e17792. https://doi.org/10.2196/17792

Low D, Clark N, Soar J, Padkin A, Stoneham A, Perkins GD, Nolan J (2011) A randomised control trial to determine if use of the iResus© application on a smart phone improves the performance of an advanced life support provider in a simulated medical emergency. Anaesthesia 66:255–262. https://doi.org/10.1111/j.1365-2044.2011.06649.x

Donzé P, Balanca B, Lilot M, Faure A, Lecomte F, Boet S, Tazarourte K, Sitruk J, Denoyel L, Lelaidier R, Lehot JJ, Rimmelé T, Cejka JC (2019) ‘Read-and-do’ response to a digital cognitive aid in simulated cardiac arrest: the medical assistance eXpert 2 randomised controlled trial. Br J Anaesth 123:e160–e163. https://doi.org/10.1016/j.bja.2019.04.049

Koers L, van Haperen M, Meijer CGF, van Wandelen SBE, Waller E, Dongelmans D, Boermeester MA, Hermanides J, Preckel B (2020) Effect of cognitive aids on adherence to best practice in the treatment of deteriorating surgical patients: a randomized clinical trial in a simulation setting. JAMA Surg 155:e194704. https://doi.org/10.1001/jamasurg.2019.4704

Arriaga AF, Bader AM, Wong JM, Lipsitz SR, Berry WR, Ziewacz JE, Hepner DL, Boorman DJ, Pozner CN, Smink DS, Gawande AA (2013) Simulation-based trial of surgical-crisis checklists. N Engl J Med 368:246–253. https://doi.org/10.1056/NEJMsa1204720

Field LC, McEvoy MD, Smalley JC, Clark CA, McEvoy MB, Rieke H, Nietert PJ, Furse CM (2014) Use of an electronic decision support tool improves management of simulated in-hospital cardiac arrest. Resuscitation 85:138–142. https://doi.org/10.1016/j.resuscitation.2013.09.013

Hunt EA, Heine M, Shilkofski NS, Bradshaw JH, Nelson-McMillan K, Duval-Arnould J, Elfenbein R (2015) Exploration of the impact of a voice activated decision support system (VADSS) with video on resuscitation performance by lay rescuers during simulated cardiopulmonary arrest. Emerg Med J 32:189–194. https://doi.org/10.1136/emermed-2013-202867

Zanner R, Wilhelm D, Feussner H, Schneider G (2007) Evaluation of M-AID®, a first aid application for mobile phones. Resuscitation 74:487–494. https://doi.org/10.1016/j.resuscitation.2007.02.004

Lin YY, Chiang WC, Hsieh MJ, Sun JT, Chang YC, Ma MH (2018) Quality of audio-assisted versus video-assisted dispatcher-instructed bystander cardiopulmonary resuscitation: a systematic review and meta-analysis. Resuscitation 123:77–85. https://doi.org/10.1016/j.resuscitation.2017.12.010

Metelmann B, Metelmann C, Schuffert L, Hahnenkamp K, Brinkrolf P (2018) Medical correctness and user friendliness of available apps for cardiopulmonary resuscitation: systematic search combined with guideline adherence and usability evaluation. JMIR Mhealth Uhealth 6:e190. https://doi.org/10.2196/mhealth.9651

Chen KY, Ko YC, Hsieh MJ, Chiang WC, Ma MHM (2019) Interventions to improve the quality of bystander cardiopulmonary resuscitation: a systematic review. PLoS ONE 14:e0211792. https://doi.org/10.1371/journal.pone.0211792

Gilfoyle E, Duff J, Bhanji F, Scholefield B, Bray J, Bigham B, Breckwoldt J, Cheng A, Glerup Lauridsen K, Hsieh M, Iwami T, Lockey A, Ma M, Monsieurs K, Okamoto D, Pellegrino J, Yeung J, Finn J, Greif R (2020) Cognitive Aids in Resuscitation Training Consensus on Science with Treatment Recommendations. Brussels, Belgium, International Liaison Committee on Resuscitation (ILCOR) Education, Implementation and Teams Task Force. Available from: http://ilcor.org. (Accessed 27 September 2021, at https://costr.ilcor.org/document/cognitive-aids-in-resuscitation-eit-629-systematic-review)

Liberati A, Altman DG, Tetzlaff J, Mulrow C, Gøtzsche PC, Ioannidis JP, Clarke M, Devereaux PJ, Kleijnen J, Moher D (2009) The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate health care interventions: explanation and elaboration. PLoS Med 6:e1000100. https://doi.org/10.1371/journal.pmed.1000100

(1974) Standards for cardiopulmonary resuscitation (CPR) and emergency cardiac care (ECC). JAMA 227(7):833–68. https://doi.org/10.1001/jama.227.7.833

Fletcher KA, Bedwell WL (2014) Cognitive aids: design suggestions for the medical field. Proc Int Symp Hum Factors Ergonomics Health Care 3(1):148–152. https://doi.org/10.1177/2327857914031024

Covidence systematic review software, Veritas Health Innovation, Melbourne, Australia. https://www.covidence.org/.

Higgins JPT, Altman DG, Gøtzsche PC, Jüni P, Moher D, Oxman AD, Savovic J, Schulz KF, Weeks L, Sterne JA; Cochrane Bias Methods Group; Cochrane Statistical Methods Group (2011) The Cochrane Collaboration’s tool for assessing risk of bias in randomised trials. BMJ 343:d5928. https://doi.org/10.1136/bmj.d5928

Grundgeiger T, Hahn F, Wurmb T, Meybohm P, Happel O (2021) The use of a cognitive aid app supports guideline-conforming cardiopulmonary resuscitations: A randomized study in a high-fidelity simulation. Resusc Plus 7:100152. https://doi.org/10.1016/j.resplu.2021.100152

Urman RD, August DA, Chung S, Jiddou AH, Buckley C, Fields KG, Morrison JB, Palaganas JC, Raemer D (2021) The effect of emergency manuals on team performance during two different simulated perioperative crises: a prospective, randomized controlled trial. J Clin Anesth 68:110080. https://doi.org/10.1016/j.jclinane.2020.110080

Schneider AJ, Murray WB, Mentzer SC, Miranda F, Vaduva S (1995) “Helper:” A critical events prompter for unexpected emergencies. J Clin Monit 11:358–364. https://doi.org/10.1007/BF01616741

Lelaidier R, Balança B, Boet S, Faure A, Lilot M, Lecomte F, Lehot JJ, Rimmelé T, Cejka JC (2017) Use of a hand-held digital cognitive aid in simulated crises: the MAX randomized controlled trial. Br J Anaesth 119:1015–1021. https://doi.org/10.1093/bja/aex256

Jones I, Hayes JA, Williams J, Lonsdale H (2019) Does electronic decision support influence advanced life support in simulated cardiac arrest? Br J Card Nurs 14:72–9. https://doi.org/10.12968/bjca.2019.14.2.72

Shear TD, Deshur M, Benson J, Houg S, Wang C, Katz J, Aitchison P, Ochoa P, Wang E, Szokol J (2018) The effect of an electronic dynamic cognitive aid versus a static cognitive aid on the management of a simulated crisis: a randomized controlled trial. J Med Syst 43:6. https://doi.org/10.1007/s10916-018-1118-z

Crabb DB, Hurwitz JE, Reed AC, Smith ZJ, Martin ET, Tyndall JA, Taasan MV, Plourde MA, Beattie LK (2021) Innovation in resuscitation: a novel clinical decision display system for advanced cardiac life support. Am J Emerg Med 43:217–223. https://doi.org/10.1016/j.ajem.2020.03.007

Hejjaji V, Malik AO, Peri-Okonny PA, Thomas M, Tang Y, Wooldridge D, Spertus JA, Chan PS (2020) Mobile app to improve house officers’ adherence to advanced cardiac life support guidelines: quality improvement study. JMIR Mhealth Uhealth 8:e15762. https://doi.org/10.2196/15762

Hall C, Robertson D, Rolfe M, Pascoe S, Passey ME, Pit SW (2020) Do cognitive aids reduce error rates in resuscitation team performance? Trial of emergency medicine protocols in simulation training (TEMPIST) in Australia. Hum Resour Health 18:1. https://doi.org/10.1186/s12960-019-0441-x

An M, Kim Y, Cho W-K (2019) Effect of smart devices on the quality of CPR training: a systematic review. Resuscitation 144:145–156. https://doi.org/10.1016/j.resuscitation.2019.07.011

Kirkbright S, Finn J, Tohira H, Bremner A, Jacobs I, Celenza A (2014) Audiovisual feedback device use by health care professionals during CPR: a systematic review and meta-analysis of randomised and non-randomised trials. Resuscitation 85:460–471. https://doi.org/10.1016/j.resuscitation.2013.12.012

Yeung J, Meeks R, Edelson D, Gao F, Soar J, Perkins GD (2009) The use of CPR feedback/prompt devices during training and CPR performance: a systematic review. Resuscitation 80:743–751. https://doi.org/10.1016/j.resuscitation.2009.04.012

Gruber J, Stumpf D, Zapletal B, Neuhold S, Fischer H (2012) Real-time feedback systems in CPR. Trends Anaesth Crit Care 2:287–294

Renna TD, Crooks S, Pigford AA, Clarkin C, Fraser AB, Bunting AC, Bould MD, Boet S (2016) Cognitive aids for role definition (CARD) to improve interprofessional team crisis resource management: an exploratory study. J Interprof Care 30:582–590. https://doi.org/10.1080/13561820.2016.1179271

Honarmand K, Mepham C, Ainsworth C, Khalid Z (2018) Adherence to advanced cardiovascular life support (ACLS) guidelines during in-hospital cardiac arrest is associated with improved outcomes. Resuscitation 129:76–81. https://doi.org/10.1016/j.resuscitation.2018.06.005

McEvoy MD, Field LC, Moore HE, Smalley JC, Nietert PJ, Scarbrough SH (2014) The effect of adherence to ACLS protocols on survival of event in the setting of in-hospital cardiac arrest. Resuscitation 85:82–87. https://doi.org/10.1016/j.resuscitation.2013.09.019

Bircher NG, Chan PS, Xu Y (2019) Delays in cardiopulmonary resuscitation, defibrillation, and epinephrine administration all decrease survival in in-hospital cardiac arrest. Anesthesiology 130:414–422. https://doi.org/10.1097/ALN.0000000000002563

Andersen LW, Berg KM, Saindon BZ, Massaro JM, Raymond TT, Berg RA, Nadkarni VM, Donnino MW; American Heart Association’s Get With The Guidelines-Resuscitation Investigators (2015) Time to epinephrine and survival after pediatric in-hospital cardiac arrest. JAMA 314:802. https://doi.org/10.1001/jama.2015.9678

Mitani Y, Ohta K, Yodoya N, Otsuki S, Ohashi H, Sawada H, Nagashima M, Sumitomo N, Komada Y (2013) Public access defibrillation improved the outcome after out-of-hospital cardiac arrest in school-age children: a nationwide, population-based, Utstein registry study in Japan. Europace 15:1259–1266. https://doi.org/10.1093/europace/eut053

Cheskes S, Schmicker RH, Verbeek PR, Salcido DD, Brown SP, Brooks S, Menegazzi JJ, Vaillancourt C, Powell J, May S, Berg RA, Sell R, Idris A, Kampp M, Schmidt T, Christenson J; Resuscitation Outcomes Consortium (ROC) investigators (2014) The impact of peri-shock pause on survival from out-of-hospital shockable cardiac arrest during the Resuscitation Outcomes Consortium PRIMED trial. Resuscitation 85:336–42. https://doi.org/10.1016/j.resuscitation.2013.10.014

Christenson J, Andrusiek D, Everson-Stewart S, Kudenchuk P, Hostler D, Powell J, Callaway CW, Bishop D, Vaillancourt C, Davis D, Aufderheide TP, Idris A, Stouffer JA, Stiell I, Berg R; Resuscitation Outcomes Consortium Investigators (2009) Chest compression fraction determines survival in patients with out-of-hospital ventricular fibrillation. Circulation 120:1241–7. https://doi.org/10.1161/CIRCULATIONAHA.109.852202

Talikowska M, Tohira H, Finn J (2015) Cardiopulmonary resuscitation quality and patient survival outcome in cardiac arrest: a systematic review and meta-analysis. Resuscitation 96:66–77. https://doi.org/10.1016/j.resuscitation.2015.07.036

Dumas RP, Vella MA, Chreiman KC, Smith BP, Subramanian M, Maher Z, Seamon MJ, Holena DN (2020) Team Assessment and Decision Making Is Associated With Outcomes: A Trauma Video Review Analysis. J Surg Res 246:544–9. https://doi.org/10.1016/j.jss.2019.09.033

Boet S, Bould MD, Fung L, Qosa H, Perrier L, Tavares W, Reeves S, Tricco AC (2014) Transfer of learning and patient outcome in simulated crisis resource management: a systematic review. Can J Anaesth 61:571–582. https://doi.org/10.1007/s12630-014-0143-8

Brouwer TF, Walker RG, Chapman FW, Koster RW (2015) Association between chest compression interruptions and clinical outcomes of ventricular fibrillation out-of-hospital cardiac arrest. Circulation 132(11):1030–1037. https://doi.org/10.1161/CIRCULATIONAHA.115.014016

Fan J, Smith AP (2017) The Impact of Workload and Fatigue on Performance. In: Longo L, Leva M (eds) Human Mental Workload: Models and Applications. H-WORKLOAD 2017. Communications in Computer and Information Science, vol 726. Cham: Springer International Publishing, pp 90–105.

Cheng A, Kessler D, Mackinnon R, Chang TP, Nadkarni VM, Hunt EA, Duval-Arnould J, Lin Y, Cook DA, Pusic M, Hui J, Moher D, Egger M, Auerbach M; International Network for Simulation-based Pediatric Innovation, Research, and Education (INSPIRE) Reporting Guidelines Investigators (2016) Reporting Guidelines for Health Care Simulation Research: Extensions to the CONSORT and STROBE Statements. Simul Healthc 11:238–48. https://doi.org/10.1097/SIH.0000000000000150

Marshall S (2013) The use of cognitive aids during emergencies in anesthesia: a review of the literature. Anesth Analg 117:1162–1171. https://doi.org/10.1213/ANE.0b013e31829c397b

Grundgeiger T, Huber S, Reinhardt D, Steinisch A, Happel O, Wurmb T (2019) Cognitive Aids in Acute Care: Investigating How Cognitive Aids Affect and Support In-hospital Emergency Teams. Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (CHI’19) 654:1–14. https://doi.org/10.1145/3290605.3300884

Klein A, Kulp L, Sarcevic A (2018) Designing and optimizing digital applications for medical emergencies. In Proceedings of the Extended Abstracts of the 2018 Conference on Human Factors in Computing Systems (CHI'18). LBW588:1–6. https://doi.org/10.1145/3170427.3188678

Corazza F, Snijders D, Arpone M, Stritoni V, Martinolli F, Daverio M, Losi MG, Soldi L, Tesauri F, Da Dalt L, Bressan S (2020) Development and usability of a novel interactive tablet app (PediAppRREST) to support the management of pediatric cardiac arrest: pilot high-fidelity simulation-based study. JMIR Mhealth Uhealth 8(10):e19070. https://doi.org/10.2196/19070

Brooke J (1996) SUS: A quick and dirty usability scale. In: Jordan PW, Thomas B, Weerdmeester BA, McClelland IL (eds) Usability evaluation in industry. 1st ed. London: Taylor and Francis, pp 189–194.

Acknowledgements

The authors thank Dr Lisa Dainese at the University of Padua health science library for her assistance in refining the databases search strategies, the Salus Pueri Foundation (Padua, Italy) for the financial support provided to Dr Marta Arpone, and Dr Marco Bazo for his critical review of the manuscript, English editing and support with manuscript formatting.

Funding

Open access funding provided by Università degli Studi di Padova within the CRUI-CARE Agreement. This work was funded by an institutional funding programme of the Department of Women’s and Children’s Health, University of Padua, Italy (BIRD203279).

Author information

Contributions

FC, AC, LDD, and SB conceptualized and designed the study. FC, EF, MA, and SB defined the search strategy. EF and MA screened titles and abstracts, and FC and GT reviewed and screened the full text of the original studies. FC and GT performed the quality assessment. FC and EF extracted data from the original studies. ACF performed statistical analyses. LDD and SB supervised the work. FC, EF, MA wrote the original draft. GT, ACF, AC, LDD and SB reviewed and edited the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Conflict of interest

None of the authors have any financial or nonfinancial competing interests to declare.

Ethics approval and consent to participate

Since this is a systematic review, consent for data collection was already given during enrolment in the original studies.

Consent to participate and for publication

Not applicable.

Human and animal rights

This article does not contain any studies with human participants or animals performed by any of the authors.

Informed consent

Not applicable.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Below is the link to the electronic supplementary material.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Corazza, F., Fiorese, E., Arpone, M. et al. The impact of cognitive aids on resuscitation performance in in-hospital cardiac arrest scenarios: a systematic review and meta-analysis. Intern Emerg Med 17, 2143–2158 (2022). https://doi.org/10.1007/s11739-022-03041-6

DOI: https://doi.org/10.1007/s11739-022-03041-6