Abstract

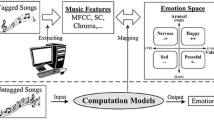

Music is the language of emotions. In recent years, music emotion recognition has attracted widespread attention in both academia and industry, since it can be applied in fields such as recommendation systems, automatic music composition, psychotherapy, and music visualization. With the rapid development of artificial intelligence, deep learning-based music emotion recognition is gradually becoming mainstream. This paper gives a detailed survey of music emotion recognition. Starting with some preliminary knowledge of the field, it first introduces commonly used evaluation metrics and then puts forward a three-part research framework. Based on this framework, the knowledge and algorithms involved in each part are introduced and analyzed in detail, including commonly used datasets, emotion models, feature extraction methods, and emotion recognition algorithms. Finally, the challenging problems and development trends of music emotion recognition technology are discussed, and the whole paper is summarized.

Acknowledgements

This work was supported by the National Natural Science Foundation of China (Grant Nos. 61672144, 61872072, 61173029) and the National Key R&D Program of China (2019YFB1405302).

Author information

Donghong Han received the PhD degree from Northeastern University, China in 2007. She is currently an associate professor with the School of Computer Science and Engineering, Northeastern University, China. She serves as a reviewer for Applied Intelligence, IEEE Transactions on Cybernetics, Frontiers of Information Technology & Electronic Engineering, and other journals. She has published more than 40 papers to date. Her current research interests include data flow management, uncertain data flow analysis, and social network sentiment analysis. She is a member of the China Computer Federation (CCF) and a member of the Chinese Information Processing Society, Social Media Processing.

Yanru Kong received the BS degree from Shandong University of Science and Technology, China in 2018. She is working toward the MS degree in computer science at Northeastern University, China. Her current research interests include natural language processing, sentiment analysis, and music emotion recognition.

Jiayi Han received the BS degree from Northeastern University, China in 2018. He is currently pursuing a PhD degree in the Institute of Science and Technology for Brain-Inspired Intelligence at Fudan University, China. His research interests focus on facial expression recognition and medical imaging. He has published a paper at ICBEB.

Guoren Wang received the PhD degree in computer science from Northeastern University, China in 1996. He is currently a professor with the School of Computer Science & Technology, Beijing Institute of Technology, China. He has published about 300 journal and conference papers. He received the National Science Fund for Distinguished Young Scholars in 2010. His current research interests include uncertain data management, data intensive computing, visual media data management and analysis, unstructured data management, distributed query processing and optimization technology, and bioinformatics. He is the vice chairman of the China Computer Federation Technical Committee on Databases (CCF TCDB) and an expert review member of the Information Science Department of the National Natural Science Foundation of China.

Cite this article

Han, D., Kong, Y., Han, J. et al. A survey of music emotion recognition. Front. Comput. Sci. 16, 166335 (2022). https://doi.org/10.1007/s11704-021-0569-4
