Abstract

This article derives a new adaptive observer scheme for a class of fractional order systems. Global asymptotic convergence of joint state-parameter estimation is established for linear time invariant single-input single-output systems. For such fractional order systems, it is proved that all the signals in the resulting closed-loop system are globally uniformly bounded and that the state and parameter estimation errors converge to zero. Potential applications of the presented adaptive observer include online system identification, fault detection and adaptive control of fractional order systems. Numerical simulation examples are presented to demonstrate the performance of the proposed adaptive observer.

1 Introduction

Over the past decades, fractional order systems have attracted increasing attention from the control community, since many engineering plants and processes cannot be described concisely and precisely without fractional order calculus [1, 2]. As more and more researchers have entered the field, a large body of results on system identification [3, 4], controllability and observability [5, 6], stability analysis [7–9] and controller synthesis [10–12] of fractional order systems has been reported in the literature. Many fundamentals and applications of fractional order control systems can be found in [2] and the references therein.

Reconstructing the state of a system from its input and output has received a great deal of attention recently. Doye et al. [13] studied the fractional order Luenberger observer and observer-based controller design, and this work was extended to the uncertain case via a linear matrix inequality approach [14]. Special Luenberger-type state observers have also been proposed, such as the observer for discrete fractional order systems [15] and the proportional integral (PI) observer [16]. Based on the frequency distributed model [17] of a fractional order system, it has been shown that the conventional state is a pseudo state of the system rather than its true state. Using the frequency distributed model, Sabatier et al. [18, 19] discussed the observability of a class of fractional order systems and proposed a class of Luenberger observers.

Despite these achievements, some critical problems still need further investigation. In particular, the observers above require the system parameters to be known. When no a priori knowledge of the system parameters is available, so-called adaptive observers must be introduced. The basic idea of this approach is to use a Luenberger observer whose parameters are continuously adapted. Related research on integer order systems has been reported in [20–23], while few results in the literature address this problem for fractional order systems. There are two main reasons. First, it is difficult to prove the stability of the closed-loop system. Second, the parameter identification belongs to the category of continuous-time system identification [24], in which many problems remain to be solved. To the best of our knowledge, the design of an adaptive observer for fractional order systems is still an open problem.

Motivated by these observations, this article focuses on adaptive observation of fractional order systems. The theoretical contributions of this work are as follows. First, a novel adaptive observation scheme is derived in which the observer parameters are continuously adapted. Second, building on continuous-time system identification, a modified parameter identification algorithm for fractional order systems is proposed. The former contribution establishes that adaptive observation of fractional order systems is feasible; the latter improves the performance of fractional order system identification.

The remainder of this article is organized as follows. Section 2 briefly provides preliminaries on fractional order systems and states the problem. A novel adaptive observation scheme is proposed in Section 3. In Section 4, two simulation examples are provided to illustrate the validity of the proposed approach. Finally, Section 5 concludes the study.

2 Preliminaries

Consider the following linear time invariant single-input single-output (LTI SISO) plant

$$G(s) = {{Y(s)} \over {U(s)}} = {{{b_1}{s^{(m - 1)\alpha}} + {b_2}{s^{(m - 2)\alpha}} + \cdots + {b_m}} \over {{s^{n\alpha}} + {a_1}{s^{(n - 1)\alpha}} + \cdots + {a_n}}}$$(1)

where α ∈ (0, 1) is the commensurate fractional order, u(t) is assumed to be a piecewise continuous bounded function of time, y(t) is the measurable output, \(a_i\) (i = 1, 2, ⋯, n) and \(b_j\) (j = 1, 2, ⋯, m) are unknown constants, m and n are known positive integers with m < n, and the system is assumed to be stable.

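In this study, Caputo's definition is adopted for the fractional order derivative, i.e.,

$$_0D_t^\alpha f(t) = {1 \over {\Gamma (k - \alpha)}}\int_0^t {{{{f^{(k)}}(\tau)} \over {{{(t - \tau)}^{\alpha - k + 1}}}}{\rm{d}}\tau}$$(2)

where Γ(·) is the Gamma function and k is a positive integer with k − 1 < α < k. To simplify the notation, we denote the fractional derivative of order α as \(D^\alpha\) instead of \(_0D_t^\alpha\) in this work.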
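The Caputo integral is rarely evaluated in closed form. As an illustration only (this is not the piecewise algorithm of [25] used later in this article), the sketch below implements the standard L1 discretization for 0 < α < 1, i.e. k = 1, on a uniform grid with step h. It replaces f'(τ) on each subinterval by a first difference, so it is exact for piecewise linear f. The helper name `caputo_l1` is hypothetical.

```python
import math


def caputo_l1(f_vals, h, alpha):
    """Approximate the Caputo derivative of order alpha (0 < alpha < 1).

    f_vals holds samples f(t_0), ..., f(t_N) on the uniform grid t_j = j*h.
    Returns a list whose j-th entry approximates D^alpha f(t_j); entry 0 is 0.
    """
    if not 0.0 < alpha < 1.0:
        raise ValueError("this L1 sketch assumes 0 < alpha < 1 (i.e. k = 1)")
    n = len(f_vals)
    # L1 weights b_k = (k + 1)^(1 - alpha) - k^(1 - alpha)
    b = [(k + 1) ** (1 - alpha) - k ** (1 - alpha) for k in range(n)]
    scale = h ** (-alpha) / math.gamma(2 - alpha)
    out = [0.0]
    for j in range(1, n):
        # f' is replaced by the first difference on each subinterval [t_i, t_{i+1}]
        acc = sum(b[j - 1 - i] * (f_vals[i + 1] - f_vals[i]) for i in range(j))
        out.append(scale * acc)
    return out


# Check against D^alpha t = t^(1 - alpha) / Gamma(2 - alpha) at t = 1.
h, alpha = 0.01, 0.6
approx = caputo_l1([k * h for k in range(101)], h, alpha)
exact = 1.0 ** (1 - alpha) / math.gamma(2 - alpha)
print(abs(approx[-1] - exact))
```

For smooth (not piecewise linear) f, the L1 error is of order \(h^{2-\alpha}\), so the step must be chosen with the fractional order in mind.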

This study aims at constructing a scheme that estimates both the plant parameters

as well as the system pseudo state x(t) using only input u(t) and output measurements y(t).

To construct this scheme, we first introduce the following definition.

Definition 1. Matrix A of the system

$${D^\alpha}x(t) = Ax(t)$$(4)

is said to be a stable matrix if and only if all of its eigenvalues satisfy

$$\vert \arg ({\rm{spec}}(A))\vert > {{\alpha \pi} \over 2}$$(5)

where arg(z) denotes the principal argument of z and spec(A) denotes the spectrum of A.
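Definition 1 can be checked numerically from the eigenvalues of A. The sketch below is a minimal illustration restricted to 2×2 matrices, with eigenvalues computed by the quadratic formula so that only the standard library is needed; `eig2x2` and `is_stable` are hypothetical names. The example matrix, whose eigenvalues are 0.1 ± j, is chosen to show that a matrix that is unstable in the integer order sense (α = 1) can still be stable for α = 0.6.

```python
import cmath
import math


def eig2x2(A):
    """Eigenvalues of a real 2x2 matrix [[a, b], [c, d]] via the quadratic formula."""
    (a, b), (c, d) = A
    tr, det = a + d, a * d - b * c
    disc = cmath.sqrt(tr * tr - 4.0 * det)
    return [(tr + disc) / 2.0, (tr - disc) / 2.0]


def is_stable(eigenvalues, alpha):
    """Definition 1: |arg(lambda)| > alpha*pi/2 for every eigenvalue lambda."""
    return all(abs(cmath.phase(lam)) > alpha * math.pi / 2.0 for lam in eigenvalues)


A = [[0.1, 1.0], [-1.0, 0.1]]        # eigenvalues 0.1 +/- 1j (right half-plane)
print(is_stable(eig2x2(A), 0.6))     # True: |arg| ~ 1.471 > 0.3*pi
print(is_stable(eig2x2(A), 1.0))     # False: |arg| ~ 1.471 < pi/2
```

An eigenvalue at the origin has argument 0 and is therefore never admissible, which matches the strict inequality in (5).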

3 Fractional order adaptive observer

3.1 Observer design

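A minimal realization of system (1) can be expressed as

$$\left\{{\matrix{{{D^\alpha}x(t) = Ax(t) + Bu(t)} \hfill \cr{y(t) = Cx(t) + v(t)} \hfill \cr}} \right.$$(6)

where \(A \in {\mathbb{R}^{n \times n}}\) is a stable matrix, \(B, {C^T} \in {\mathbb{R}^n}\), {A, B} is controllable, {A, C} is observable, and the measurement noise v(t) is white noise with variance \(\sigma _v^2\).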

With this realization, the problem becomes how to construct a scheme that estimates both the plant parameters, i.e., A, B and C, and the state vector x(t). A good starting point for designing an adaptive observer is the Luenberger observer [22, 23]

$${D^\alpha}\hat x(t) = A\hat x(t) + Bu(t) + L[y(t) - C\hat x(t)]$$(7)

where L is chosen so that A − LC is a stable matrix, which guarantees that \(\hat x(t)\) converges asymptotically to x(t) for any initial condition x(0) and any input u(t).
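To see the Luenberger observer (7) at work on a fractional order plant, the sketch below simulates a plant and its observer with an explicit Grünwald–Letnikov (GL) scheme. GL is a swapped-in discretization, not the piecewise algorithm of [25]. The plant coefficients, observer polynomial, input and initial conditions are all hypothetical, the output is noise-free, and the plant is written in an observable canonical form with C = [1, 0].

```python
import math


def gl_weights(alpha, n):
    """Grunwald-Letnikov weights c_j = (-1)^j * binom(alpha, j), j = 0..n."""
    c = [1.0]
    for j in range(1, n + 1):
        c.append(c[-1] * (1.0 - (1.0 + alpha) / j))
    return c


def gl_simulate(rhs, x0, h, n_steps, alpha):
    """Explicit GL scheme for the Caputo system D^alpha x = rhs(t, x), x(0) = x0.

    The scheme is applied to z = x - x0 (Caputo initial condition):
    z_k = h^alpha * rhs(t_{k-1}, x_{k-1}) - sum_{j=1..k} c_j * z_{k-j}.
    """
    c = gl_weights(alpha, n_steps)
    ha = h ** alpha
    dim = len(x0)
    zs, xs = [[0.0] * dim], [list(x0)]
    for k in range(1, n_steps + 1):
        f = rhs((k - 1) * h, xs[-1])
        zk = [ha * f[i] - sum(c[j] * zs[k - j][i] for j in range(1, k + 1))
              for i in range(dim)]
        zs.append(zk)
        xs.append([x0[i] + zk[i] for i in range(dim)])
    return xs


# Hypothetical plant (n = 2, alpha = 0.6): D(w) = w^2 + 3w + 2, N(w) = 1, w = s^alpha.
alpha, a_p, b_p = 0.6, [3.0, 2.0], [0.0, 1.0]
a_o = [6.0, 9.0]                                 # A - LC has polynomial (w + 3)^2
L = [a_o[0] - a_p[0], a_o[1] - a_p[1]]           # observer gain


def rhs(t, s):
    x1, x2, xh1, xh2 = s                         # plant state, then observer state
    u = math.sin(t)
    innovation = x1 - xh1                        # y - C x_hat with C = [1, 0], no noise
    return [-a_p[0] * x1 + x2 + b_p[0] * u,
            -a_p[1] * x1 + b_p[1] * u,
            -a_p[0] * xh1 + xh2 + b_p[0] * u + L[0] * innovation,
            -a_p[1] * xh1 + b_p[1] * u + L[1] * innovation]


traj = gl_simulate(rhs, [1.0, 0.0, 0.0, 0.0], h=0.02, n_steps=500, alpha=alpha)
err = [math.hypot(s[0] - s[2], s[1] - s[3]) for s in traj]
print(err[0], err[-1])
```

The observation error decays, but only algebraically (roughly like \(t^{-\alpha}\) for large t) rather than exponentially, which is typical of fractional order error dynamics.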

To obtain an adaptive observer, the unknown matrices A, B and C in the Luenberger observer (7) are replaced with their estimates \(\hat A,\hat B\) and \(\hat C\), respectively, which are generated by an adaptive law.

This structure of the adaptive observer has three main disadvantages:

1) The estimated matrix \({\hat A}\) cannot be guaranteed to be stable, which implies that the estimated state \(\hat x(t)\) may not converge.

2) Since the state space realization of the transfer function G(s) is not unique, the matrices A, B and C are not unique either.

3) The number of parameters to be estimated is \(n^2 + 2n\) (\(n^2\) entries of A and n entries each of B and C), while the number of unknown parameters in G(s) is only m + n.

To eliminate these disadvantages, a special realization called the observable canonical form is adopted, in which the matrices A, B and C in (6) are given by

Consequently, only m + n parameters need to be estimated, and the matrix C no longer needs to be estimated. The related parameters in the adaptive observer (8) can then be rewritten as

where \({{\hat a}_p}(t)\) and \({{\hat b}_p}(t)\) are the estimates of the vectors \(a_p\) and \(b_p\), respectively, and \({a_o} \in {\mathbb{R}^n}\) is chosen such that

is a stable matrix.
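Because the canonical-form matrices are not reproduced here, the sketch below assumes the usual observable canonical layout: the first column of A is \(-a_p\), a shifted identity sits to its right, the m input coefficients fill the last m entries of B, and C = [1, 0, ⋯, 0]. Under that assumption, choosing the gain \(L(t) = {a_o} - {{\hat a}_p}(t)\) gives \(\hat A(t) - L(t)C = {A_o}\), which is consistent with the term \([\hat A(t) - {A_o}]x(t)\) in (30) when y = Cx + v. All function names are hypothetical.

```python
def observable_canonical(a, b):
    """Observable canonical realization of N(w)/D(w), w = s^alpha (assumed layout).

    a = [a_1, ..., a_n]: D(w) = w^n + a_1 w^(n-1) + ... + a_n
    b = [b_1, ..., b_m]: N(w) = b_1 w^(m-1) + ... + b_m, with 0 < m < n
    A = [-a | [I_{n-1}; 0]], B = [0, ..., 0, b_1, ..., b_m]^T, C = [1, 0, ..., 0]
    """
    n, m = len(a), len(b)
    if not 0 < m < n:
        raise ValueError("expects 0 < m < n")
    A = [[-a[i]] + [1.0 if j == i + 1 else 0.0 for j in range(1, n)]
         for i in range(n)]
    B = [0.0] * (n - m) + [float(v) for v in b]
    C = [1.0] + [0.0] * (n - 1)
    return A, B, C


def observer_gain(a_o, a_hat):
    """L = a_o - a_hat, so that A(a_hat) - L C has first column -a_o."""
    return [ao - ah for ao, ah in zip(a_o, a_hat)]


def minus_outer(A, L, C):
    """Return A - L C for a column vector L and a row vector C."""
    return [[A[i][j] - L[i] * C[j] for j in range(len(C))] for i in range(len(A))]


# Usage with hypothetical current estimates and a chosen stable a_o.
a_hat, b_hat, a_o = [3.0, 2.0], [1.0], [6.0, 9.0]
A_hat, B_hat, C = observable_canonical(a_hat, b_hat)
L = observer_gain(a_o, a_hat)
print(minus_outer(A_hat, L, C))   # [[-6.0, 1.0], [-9.0, 0.0]]
```

Only the first column changes when \({{\hat a}_p}(t)\) is updated, which is why the observer matrix \(A_o\) stays fixed and stable while the gain absorbs the adaptation.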

Remark 1. The matrix \(A_o\) in (11) is not unique: any vector \(a_o\) that makes \(A_o\) a stable matrix can be adopted. In addition, the convergence speed of the state observation depends on the choice of \(A_o\).
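Following Remark 1, one simple way to pick \(a_o\) is to choose the desired roots of the observer polynomial in the variable \(w = p^\alpha\), check them against Definition 1, and expand the product. The sketch below does this with hypothetical helper names. The third root pair illustrates that for α < 1 admissible roots may even lie in the right half-plane, as long as \(|\arg(r)| > \alpha\pi/2\).

```python
import cmath
import math


def poly_from_roots(roots):
    """Return [a_1, ..., a_n] with prod_i (w - r_i) = w^n + a_1 w^(n-1) + ... + a_n."""
    coeffs = [1.0 + 0j]
    for r in roots:
        nxt = coeffs + [0j]                  # multiply the polynomial by (w - r)
        for i in range(1, len(nxt)):
            nxt[i] -= r * coeffs[i - 1]
        coeffs = nxt
    return [c.real for c in coeffs[1:]]      # real when complex roots come in conjugate pairs


def roots_admissible(roots, alpha):
    """Definition 1 applied to the chosen roots: |arg(r)| > alpha*pi/2."""
    return all(abs(cmath.phase(r)) > alpha * math.pi / 2 for r in roots)


alpha = 0.6
for roots in ([-3, -3], [-1 + 2j, -1 - 2j], [0.5 + 2j, 0.5 - 2j]):
    print(roots, roots_admissible(roots, alpha), poly_from_roots(roots))
```

For the last pair the resulting polynomial \(w^2 - w + 4.25\) has a negative coefficient, yet the corresponding \(A_o\) is stable for α = 0.6 in the sense of Definition 1.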

3.2 Parameter estimation

The corresponding differential equation of system (1) can be expressed as

To obtain a regression-like form of system (12), two methods will be introduced.

In method 1, define the differential operator \({p^\alpha} = {D^\alpha}\) and the operator polynomials \(D({p^\alpha}) = {p^{n\alpha}} + {a_1}{p^{(n - 1)\alpha}} + \cdots + {a_n}\) and \(N({p^\alpha}) = {b_1}{p^{(m - 1)\alpha}} + {b_2}{p^{(m - 2)\alpha}} + \cdots + {b_m}\). Then, system (12) can be written in an alternative time-domain differential operator form as

Filtering the signals on both sides of (13) with the following stable filter

we have

By defining some new variables, (15) can be expressed in a regression-like form as

where

In method 2, considering the generalized Poisson moment functional

with κ ≥ n, system (12) can be rewritten as

By defining \(\bar y(t) = {{{p^{n\alpha}}y(t)} \over {H({p^\alpha})}}\), system (18) can be written in a regression-like form as

where the regressor and the parameter vectors are now defined by

Since estimating \(a_p\) and \(b_p\) by the least squares method is very similar for (16) and (19), only the former is presented.
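Both methods need filtered signals such as \({p^{k\alpha}}y(t)/F({p^\alpha})\) for k = 0, ⋯, n. A minimal sketch, assuming the filter in (14) is \(1/F({p^\alpha})\) with a monic F of order nα (the example filter in Section 4 has this form) and zero initial conditions: realize \(1/F\) as a commensurate pseudo-state model whose states are exactly these filtered pseudo-derivatives, and integrate it with an explicit Grünwald–Letnikov scheme (a swapped-in discretization). The name `fractional_filter` is hypothetical, and the same routine would apply to \(1/H({p^\alpha})\) in method 2.

```python
import math


def fractional_filter(u, f, h, alpha):
    """Filter samples u[k] = u(k*h) through 1/F(p^alpha) with zero initial conditions.

    F(w) = w^n + f[0] w^(n-1) + ... + f[n-1] with w = p^alpha. Returns n + 1 sequences;
    sequence k approximates p^(k*alpha) u / F(p^alpha), k = 0..n (explicit GL scheme).
    """
    n, N = len(f), len(u) - 1
    c = [1.0]                                    # GL weights (-1)^j binom(alpha, j)
    for j in range(1, N + 1):
        c.append(c[-1] * (1.0 - (1.0 + alpha) / j))
    ha = h ** alpha
    z = [[0.0] * n]                              # z_i = p^((i-1) alpha) u / F, i = 1..n
    top = [u[0]]                                 # p^(n alpha) u / F at each sample
    for k in range(1, N + 1):
        prev = z[-1]
        # pseudo-state derivatives: D^a z_i = z_{i+1}, D^a z_n = u - sum f[i] z_{n-i}
        d = prev[1:] + [u[k - 1] - sum(f[i] * prev[n - 1 - i] for i in range(n))]
        new = [ha * d[i] - sum(c[j] * z[k - j][i] for j in range(1, k + 1))
               for i in range(n)]
        z.append(new)
        top.append(u[k] - sum(f[i] * new[n - 1 - i] for i in range(n)))
    return [[zk[i] for zk in z] for i in range(n)] + [top]


# Sanity check (alpha = 1 reduces GL to forward Euler): 1/(s + 2) driven by a unit step.
out = fractional_filter([1.0] * 501, [2.0], 0.01, 1.0)
print(out[0][-1], 0.5 * (1.0 - math.exp(-10.0)))
# Fractional case: F(w) = (w + 2)^2 = w^2 + 4w + 4, alpha = 0.6; the DC gain is 1/4.
out_frac = fractional_filter([1.0] * 1001, [4.0, 4.0], 0.02, 0.6)
print(out_frac[0][-1])
```

The fractional step response approaches its DC gain of 1/4 only slowly, again reflecting the algebraic tails of fractional dynamics.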

With regard to (16), at each time instant \(t = t_k\), k = 1, 2, ⋯, L, the following standard linear regression form is obtained

Now, from L available samples of the input and output signals observed at the discrete times \(t_1, \cdots, t_L\), which are not necessarily uniformly spaced, the linear least squares parameter estimate is given by

However, \(\hat \theta ({t_L})\) does not converge to θ, because the regressor \(\varphi ({t_k})\) is correlated with the noise \(v({t_k})\). To correct this bias in the parameter estimate, we replace \(\varphi ({t_k})\) with

where \(\hat v({t_k})\) is the estimate of \(v({t_k})\).
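For reference, the plain (uncorrected) least squares estimate amounts to solving the normal equations \(\left[\sum_k \varphi ({t_k}){\varphi ^T}({t_k})\right]\hat \theta = \sum_k \varphi ({t_k})y({t_k})\), assuming the regression at each \(t_k\) has the standard form \(y({t_k}) = {\varphi ^T}({t_k})\theta\) plus noise. The stdlib sketch below uses hypothetical names and synthetic noise-free data sampled at non-uniform times; when the regressor is correlated with the noise, this is exactly the estimate that becomes biased, which motivates the correction just described.

```python
import math


def solve(M, v):
    """Solve M x = v by Gaussian elimination with partial pivoting."""
    n = len(v)
    aug = [list(row) + [v[i]] for i, row in enumerate(M)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[piv] = aug[piv], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (aug[i][n] - sum(aug[i][j] * x[j] for j in range(i + 1, n))) / aug[i][i]
    return x


def batch_least_squares(phis, ys):
    """theta_hat = (sum_k phi_k phi_k^T)^(-1) sum_k phi_k y_k via the normal equations."""
    d = len(phis[0])
    R = [[sum(p[i] * p[j] for p in phis) for j in range(d)] for i in range(d)]
    r = [sum(p[i] * y for p, y in zip(phis, ys)) for i in range(d)]
    return solve(R, r)


# Hypothetical noise-free data y(t_k) = phi(t_k)^T theta at non-uniform times t_k.
theta = [2.0, -1.0, 0.5]
times = [0.1 * k + 0.03 * math.sin(k) for k in range(1, 60)]
phis = [[math.sin(t), math.cos(2.0 * t), 1.0] for t in times]
ys = [sum(p * th for p, th in zip(phi, theta)) for phi in phis]
print(batch_least_squares(phis, ys))   # close to [2.0, -1.0, 0.5]
```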

Consequently, the modified solution \(\hat \theta ({t_L})\) can be expressed as

To estimate the parameters online, the matrix inversion lemma is used to obtain the recursive least squares algorithm

where \(k > 0\), \(\hat v({t_1}) = 0\), \(\hat \theta ({t_1})\) can be chosen arbitrarily, and \(P({t_1})\) is usually selected between \({10^3}{I_{m + 2n}}\) and \({10^8}{I_{m + 2n}}\).
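The recursive form can be sketched as follows. This is the textbook recursive least squares update without forgetting, derived with the matrix inversion lemma and applied to a generic regressor; it does not reproduce the replacement of \(\varphi ({t_k})\) by its noise-corrected counterpart, whose exact form is given in equations not shown here. Names and data are hypothetical, and \(P({t_1})\) is set to \(10^6 I\), inside the range suggested in the text.

```python
import math


def rls_step(theta, P, phi, y):
    """One recursive least squares update (matrix inversion lemma form).

    K = P phi / (1 + phi^T P phi)
    theta <- theta + K (y - phi^T theta)
    P <- P - K phi^T P
    """
    d = len(theta)
    P_phi = [sum(P[i][j] * phi[j] for j in range(d)) for i in range(d)]
    denom = 1.0 + sum(phi[i] * P_phi[i] for i in range(d))
    K = [v / denom for v in P_phi]
    err = y - sum(phi[i] * theta[i] for i in range(d))
    theta = [theta[i] + K[i] * err for i in range(d)]
    # phi^T P equals (P phi)^T because P stays symmetric
    P = [[P[i][j] - K[i] * P_phi[j] for j in range(d)] for i in range(d)]
    return theta, P


# Usage: theta(t_1) = 0, P(t_1) = 1e6 * I, hypothetical noise-free samples.
true_theta = [1.5, -0.7, 0.3]
d = len(true_theta)
theta = [0.0] * d
P = [[1e6 if i == j else 0.0 for j in range(d)] for i in range(d)]
for k in range(200):
    phi = [math.sin(0.3 * k), math.cos(0.5 * k), 1.0]
    y = sum(p * t for p, t in zip(phi, true_theta))      # noise-free sample
    theta, P = rls_step(theta, P, phi, y)
print(theta)   # close to true_theta
```

A large initial \(P({t_1})\) expresses little confidence in \(\hat \theta ({t_1})\), so the recursion quickly forgets the arbitrary starting value.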

As a result, the estimates of the original system parameters can be obtained from

Remark 2. The filter \(F({p^\alpha})\) in (14) is not unique. Any filter can be adopted for \(F({p^\alpha})\), provided that it is stable and its maximum order is nα.

Remark 3. The generalized Poisson moment functional \({1 \over {H({p^\alpha})}}\) in (17) is not unique. Although any κ ≥ n is feasible, one usually selects κ = n. In addition, ω can be selected so that system (1) and \({1 \over {H({p^\alpha})}}\) have similar bandwidths.

Remark 4. In fact, the parameter \(c_p\) can be calculated from the parameter \(a_p\) and the coefficients of \(H({p^\alpha})\) or \(F({p^\alpha})\). Nevertheless, \(c_p\) and \(a_p\) can also be identified independently.

Remark 5. The two methods realize the parameter adaptation by predicting the output y(t) and the filtered output \(\bar y(t)\), respectively. Since the parameter estimation results also depend on \(H({p^\alpha})\), \(F({p^\alpha})\) and other design parameters, neither method is clearly superior in general.

3.3 Stability analysis

For any time \({t_k} \le t < {t_{k + 1}}\), we have

Based on the previous results, we are now ready to present the adaptive observer for the fractional order system.

Theorem 1. For plant (6), if the adaptive observer is designed as (8), (10) and (25), then all the signals in the closed-loop adaptive system are globally uniformly bounded. Moreover, if u(t) guarantees that φ(t) is persistently exciting, then the parameter estimation and state observation satisfy

$$\left\{{\matrix{{\mathop {\lim}\limits_{t \rightarrow \infty} [\theta - \hat \theta (t)] = 0} \hfill \cr{\mathop {\lim}\limits_{t \rightarrow \infty} [x(t) - \hat x(t)] = 0.} \hfill \cr}} \right.$$(29)

Proof. The observer equation we design can be written as

$$\matrix{{{D^\alpha}\hat x(t) = {A_o}\hat x(t) + [\hat A(t) - {A_o}]x(t) +} \hfill \cr{\quad \quad \quad \quad {{\hat b}_p}(t)u(t) + L(t)v(t).} \hfill \cr}$$(30)

Since \(A_o\) is a stable matrix and \({{\hat b}_p},\hat A(t),L(t),x(t),u(t),v(t)\) are bounded, it follows that \(\hat x(t)\) is bounded, which in turn implies that all signals are bounded.

Based on the convergence properties of least squares for the linear regression problem, and given persistent excitation, one concludes that \(\hat \theta (t)\) is an asymptotically unbiased and consistent estimate of θ. In other words, \(\mathop {\lim}\limits_{t \rightarrow \infty} [\theta - \hat \theta (t)] = 0\) holds.

Define the state observation error as

$$\tilde x(t) = x(t) - \hat x(t).$$(31)

Then, \(\tilde x(t)\) satisfies

where \({{\tilde a}_p}(t) = {a_p} - {{\hat a}_p}(t)\) and \({{\tilde b}_p}(t) = {b_p} - {{\hat b}_p}(t)\) are the parameter errors.

Since \(\theta - \hat \theta (t) \rightarrow 0\) as t → ∞, it follows that \({{\tilde a}_p}(t) \rightarrow 0\) and \({{\tilde b}_p}(t) \rightarrow 0\). Since u(t), y(t) and v(t) are bounded, the error equation consists of a homogeneous part, which is asymptotically stable because \(A_o\) is a stable matrix, and an input that decays to zero. This implies that \(\tilde x(t) \rightarrow 0\) as t → ∞. □
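The stability of the homogeneous error dynamics should be read in the fractional sense of Definition 1: the decay is asymptotic but, unlike the integer order case, not exponential. As a scalar illustration with λ < 0 and 0 < α < 1, the standard Mittag-Leffler results give

$$D^\alpha e(t) = \lambda e(t),\ e(0) = e_0 \;\Rightarrow\; e(t) = E_\alpha(\lambda t^\alpha)\, e_0, \qquad E_\alpha(z) = \sum_{k=0}^{\infty} \frac{z^k}{\Gamma(\alpha k + 1)}$$

$$E_\alpha(\lambda t^\alpha) \approx -\frac{t^{-\alpha}}{\lambda\,\Gamma(1 - \alpha)}, \qquad t \to \infty$$

so the error decays only like \(t^{-\alpha}\); for α = 1, \(E_1(z) = e^z\) recovers exponential decay.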

Remark 6. The application range of the proposed adaptive observer should be pointed out. It applies to linear fractional order systems in which the unknown parameters appear linearly in the dynamics. To estimate the unknown parameters precisely, the system input must satisfy the persistent excitation condition.

4 Numerical examples

The numerical examples in this section are implemented via the piecewise numerical approximation algorithm; for more information about the algorithm, see [25]. For all the numerical examples, consider the following LTI SISO fractional order plant

The pseudo state space realization of observable canonical form is

The stable matrix \(A_o\) is selected as

The variance of the white noise v(t) is selected as

The related initial conditions are selected as

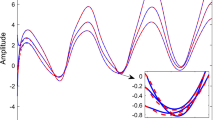

A multi-sine signal, shown in Fig. 1, is selected as the system input u(t).
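The multi-sine used here is given only in Fig. 1, so the frequencies, amplitudes and phases below are hypothetical. The sketch sums sinusoids and uses Schroeder phases, a common choice for keeping the peak amplitude low for a given signal power. As a classical identification result for discrete-time signals, a sum of N sinusoids with distinct frequencies is persistently exciting of order 2N; this helps, though it does not by itself guarantee, that φ(t) is persistently exciting as required in Theorem 1.

```python
import math


def multisine(t, freqs, amps, phases=None):
    """u(t) = sum_i amps[i] * sin(2*pi*freqs[i]*t + phases[i]); freqs in Hz."""
    if phases is None:
        phases = [0.0] * len(freqs)
    return sum(a * math.sin(2.0 * math.pi * f * t + ph)
               for f, a, ph in zip(freqs, amps, phases))


def schroeder_phases(n):
    """Schroeder phases phi_k = -pi*k*(k-1)/n, k = 1..n, for a low crest factor."""
    return [-math.pi * k * (k - 1) / n for k in range(1, n + 1)]


# Hypothetical design: 5 harmonics of 0.05 Hz with equal amplitudes (period 20 s).
freqs = [0.05 * k for k in range(1, 6)]
amps = [0.4] * 5
ph = schroeder_phases(5)
u = [multisine(0.01 * i, freqs, amps, ph) for i in range(4000)]
```

Using harmonics of one fundamental makes the input periodic, which simplifies averaging over whole periods when assessing the estimates.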

Example 1. An adaptive observer is constructed with parameter identification based on the regression-like form (16), using the stable filter

$$F({p^\alpha}) = {p^{1.2}} + 4{p^{0.6}} + 4.$$

Example 2. An adaptive observer is constructed with parameter identification based on the regression-like form (19), using the generalized Poisson moment functional

$${1 \over {H({p^\alpha})}} = {\left({{1 \over {{p^{0.6}} + 2}}} \right)^2}.$$
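Assuming α = 0.6 and n = 2, which the exponents in Examples 1 and 2 suggest, and assuming (17) has the form \(1/{({p^\alpha} + \omega)^\kappa}\), both design choices can be checked against Remarks 2 and 3 and Definition 1:

$$F({p^\alpha}) = {p^{1.2}} + 4{p^{0.6}} + 4 = {\left( {{p^{0.6}} + 2} \right)^2}, \qquad H({p^\alpha}) = {\left( {{p^{0.6}} + 2} \right)^2}$$

$$w = {p^{0.6}}:\quad w = -2 \ \text{(double root)}, \qquad \vert \arg (-2)\vert = \pi > {{\alpha \pi} \over 2} = 0.3\pi$$

Hence both filters are stable, F has maximum order 2α = 1.2 = nα, and H corresponds to ω = 2 and κ = 2 = n; the two examples therefore share the same denominator polynomial and differ only in how it enters the regression.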

The results of Examples 1 and 2 show that both adaptive observers drive the state estimates close to the actual states, despite the unknown system parameters and the measurement noise. However, the adaptive observer based on method 1 exhibits better transient responses and a smaller observation error than the one based on method 2.

5 Conclusions

In this article, a new adaptive observer design scheme is proposed for LTI SISO fractional order plants. Both the state and the unknown parameters can be estimated efficiently. Using an estimate of the measurement noise, a modified least squares solution for the parameters is developed. For the proposed adaptive observer, the stability of the closed-loop system and the convergence of the parameters have been discussed in detail, and the parameters are identified rapidly and accurately. Simulation results from numerical examples are provided to demonstrate the advantages and effectiveness of the proposed approaches. We believe that these approaches provide a new avenue for adaptive observation of fractional order systems. Future research will include extending the results to the multiple-input multiple-output case and designing controllers for the plant based on the proposed adaptive observer.

References

[1] J. A. T. Machado, I. S. Jesus, A. Galhano, J. B. Cunha. Fractional order electromagnetics. Signal Processing, vol. 86, no. 10, pp. 2637–2644, 2006.

[2] C. A. Monje, Y. Q. Chen, B. M. Vinagre, D. Y. Xue, V. Feliu. Fractional-order Systems and Controls: Fundamentals and Applications, London, England: Springer-Verlag, 2010.

[3] L. Wang, P. Cheng, Y. Wang. Frequency domain subspace identification of commensurate fractional order input time delay systems. International Journal of Control, Automation and Systems, vol. 9, no. 2, pp. 310–316, 2011.

[4] Z. Liao, Z. T. Zhu, S. Liang, C. Peng, Y. Wang. Subspace identification for fractional order Hammerstein systems based on instrumental variables. International Journal of Control, Automation and Systems, vol. 10, no. 5, pp. 947–953, 2012.

[5] M. Bettayeb, S. Djennoune. A note on the controllability and the observability of fractional dynamical systems. In Proceedings of the 2nd IFAC Workshop on Fractional Differentiation and its Applications, IFAC, Porto, Portugal, pp. 493–498, 2006.

[6] K. Balachandran, V. Govindaraj, L. Rodríguez-Germa, J. J. Trujillo. Controllability results for nonlinear fractional-order dynamical systems. Journal of Optimization Theory and Applications, vol. 156, no. 1, pp. 33–44, 2013.

[7] Z. Liao, C. Peng, W. Li, Y. Wang. Robust stability analysis for a class of fractional order systems with uncertain parameters. Journal of the Franklin Institute, vol. 348, no. 6, pp. 1101–1113, 2011.

[8] S. Liang, C. Peng, Y. Wang. Improved linear matrix inequalities stability criteria for fractional order systems and robust stabilization synthesis: The 0 < α < 1 case. Control Theory & Applications, vol. 30, no. 4, pp. 531–535, 2012. (in Chinese)

[9] Y. H. Wei, M. Zhu, C. Peng, Y. Wang. Robust stability criteria for uncertain fractional order systems with time delay. Control and Decision, vol. 29, no. 3, pp. 511–516, 2014. (in Chinese)

[10] K. Erenturk. Fractional-order PIλDμ and active disturbance rejection control of nonlinear two-mass drive system. IEEE Transactions on Industrial Electronics, vol. 60, no. 9, pp. 3806–3813, 2013.

[11] Y. H. Lan, W. J. Li, Y. Zhou, Y. P. Luo. Non-fragile observer design for fractional-order one-sided Lipschitz nonlinear systems. International Journal of Automation and Computing, vol. 10, no. 4, pp. 296–302, 2013.

[12] F. Padula, S. Alcantara, R. Vilanova, A. Visioli. H∞ control of fractional linear systems. Automatica, vol. 49, no. 7, pp. 2276–2280, 2013.

[13] I. N. Doye, M. Zasadzinski, M. Darouach, N. E. Radhy. Observer-based control for fractional-order continuous-time systems. In Proceedings of the 48th IEEE Conference on Decision and Control and 28th Chinese Control Conference, IEEE, Shanghai, China, pp. 1932–1937, 2009.

[14] Y. H. Lan, Y. Zhou. LMI-based robust control of fractional-order uncertain linear systems. Computers and Mathematics with Applications, vol. 62, no. 3, pp. 1460–1471, 2011.

[15] A. Dzielinski, D. Sierociuk. Observer for discrete fractional order state-space systems. In Proceedings of the 2nd IFAC Workshop on Fractional Differentiation and its Applications, IFAC, Porto, Portugal, pp. 511–516, 2006.

[16] J. C. Trigeassou, N. Maamri, J. Sabatier, A. Oustaloup. State variables and transients of fractional order differential systems. Computers and Mathematics with Applications, vol. 64, no. 10, pp. 3117–3140, 2012.

[17] B. Shafai, A. Oghbaee. Positive observer design for fractional order systems. In Proceedings of the World Automation Congress, IEEE, Waikoloa, USA, pp. 531–536, 2014.

[18] J. Sabatier, M. Merveillaut, L. Fenetau, A. Oustaloup. On observability of fractional order systems. In Proceedings of the ASME International Design Engineering Technical Conferences and Computers and Information in Engineering Conference, ASME, San Diego, USA, pp. 253–260, 2009.

[19] J. Sabatier, C. Farges, M. Merveillaut, L. Feneteau. On observability and pseudo state estimation of fractional order systems. European Journal of Control, vol. 18, no. 3, pp. 260–271, 2012.

[20] Y. S. Liu. Robust adaptive observer for nonlinear systems with unmodeled dynamics. Automatica, vol. 45, no. 8, pp. 1891–1895, 2009.

[21] M. Alma, M. Darouach. Adaptive observers design for a class of linear descriptor systems. Automatica, vol. 50, no. 2, pp. 578–583, 2014.

[22] I. D. Landau, R. Lozano, M. M. Saad, A. Karimi. Adaptive Control: Algorithms, Analysis and Applications, 2nd ed., London, England: Springer-Verlag, 2011.

[23] M. S. Mahmoud, Y. Q. Xia. Applied Control Systems Design, London, England: Springer-Verlag, 2012.

[24] S. Victor, R. Malti, H. Garnier, A. Oustaloup. Parameter and differentiation order estimation in fractional models. Automatica, vol. 49, no. 4, pp. 926–935, 2013.

[25] Y. H. Wei, Q. Gao, C. Peng, Y. Wang. A rational approximate method to fractional order systems. International Journal of Control, Automation and Systems, vol. 12, no. 6, pp. 1180–1186, 2014.

Acknowledgement

The authors would like to thank the associate editor and the anonymous reviewers for their constructive comments, based on which the presentation of this paper has been greatly improved.

Additional information

This work was supported by National Natural Science Foundation of China (No. 61004017).

Recommended by Associate Editor Sheng Chen

About this article

Cite this article

Wei, YH., Sun, ZY., Hu, YS. et al. On fractional order adaptive observer. Int. J. Autom. Comput. 12, 664–670 (2015). https://doi.org/10.1007/s11633-015-0929-3

DOI: https://doi.org/10.1007/s11633-015-0929-3