Abstract

Background

Reducing hospital readmissions is a federal policy priority, and predictive models of hospital readmissions have proliferated in recent years; however, most such models tend to focus on the 30-day readmission time horizon and do not consider readmission over shorter (or longer) windows.

Objectives

To evaluate the performance of a predictive model of hospital readmissions over three different readmission timeframes in a commercially insured population.

Design

Retrospective multivariate logistic regression with an 80/20 train/test split.

Participants

A total of 2,213,832 commercially insured inpatient admissions from 2016 to 2017 comprising 782,768 unique patients from the Health Care Cost Institute.

Main Measures

Outcomes are readmission within 14 days, 15–30 days, and 31–60 days from discharge. Predictor variables span six different domains: index admission, condition history, demographic, utilization history, pharmacy, and environmental controls.

Key Results

Our model generates C-statistics for holdout samples ranging from 0.618 to 0.915. The model’s discriminative power declines with readmission time horizon: discrimination for readmission predictions within 14 days following discharge is higher than for readmissions 15–30 days following discharge, which in turn is higher than predictions 31–60 days following discharge. Additionally, the model’s predictive power increases nonlinearly with the inclusion of successive risk factor domains: patient-level measures of utilization and condition history add substantially to the discriminative power of the model, while demographic information, pharmacy utilization, and environmental risk factors add relatively little.

Conclusion

It is more difficult to predict distant readmissions than proximal readmissions, and the more information the model uses, the better the predictions. Inclusion of utilization-based risk factors add substantially to the discriminative ability of the model, much more than any other included risk factor domain. Our best-performing models perform well relative to other published readmission prediction models. It is possible that these predictions could have operational utility in targeting readmission prevention interventions among high-risk individuals.

Similar content being viewed by others

Introduction

Hospital readmissions reflect a complex function of patients’ underlying health status, quality of in-hospital care, discharge and transition planning, follow-up care, at-home supports, access to health care, and admitting behavior of clinicians. This complexity may explain the relatively poor performance of many readmission models compared to other outcomes such as mortality or prolonged length of stay, and, indeed, Kansagara and co-authors (2011) note in a widely cited review of hospital readmission prediction models that “models of patient-level risk factors such as medical comorbidities, basic demographic data, and clinical variables are much better able to predict mortality than readmission risk.” 1,2,3,4

This complexity is mirrored by the fragmented landscape of hospital readmission prediction research: studies proliferate, but methods and data sources differ significantly among researchers. Certain studies use information available only at admission5, while others use information available at discharge.6 Some studies predict readmissions within a given hospital system7, while others are able to predict readmissions across hospitals.8 Certain studies use only administrative data for risk factors9, while others use electronic health records.1 Finally, certain studies use only information present in hospital administrative records10, while other studies use the spectrum of institutional, physician, and pharmacy claims as sources for risk factors.11 Indeed, the current CMS hospital-wide readmission models rely only on hospital claims in order to develop patient-specific risk factors, and do not use the wealth of information found in non-hospital claims: that is, physician, other facility, and pharmacy claims.12 As a result, these CMS models fair poorly when applied to readmission prediction, performing little better than chance alone.13

While these differences in models no doubt reflect the varied nature in which these models can potentially be applied—either at admission, at discharge, within hospital system, or across systems—this heterogeneity renders comparison of model performance, and identification of salient risk factors, difficult. The objective of this research was to use standardized administrative claims to develop models of hospital readmission over three different time horizons (within 14 days, 15–30 days, and 31–60 days after discharge). We predict readmissions among a commercially insured population using risk factors developed from institutional, physician, and pharmacy claims, and assess model performance on holdout samples using the C-statistic. We sequentially add domains of predictor variables in order to quantify the change in model performance with the introduction of additional covariates.

Methods

Data

This study uses commercial health care claims from the Health Care Cost Institute (HCCI). This data source is widely used in health services research, and the data cover over 55 million individuals each year with both employer-sponsored insurance and Medicare Advantage.14,15 Our total study population consists of 2,213,832 inpatient admissions among commercially insured patients from 2016 to 2017, comprising 782,768 unique patients.

This data offers several advantages over other commonly used data sources for studies of hospital readmissions: first, unlike studies based on only hospital discharge summaries, we are able to use the full spectrum of administrative data (that is, hospital claims, physician claims, and pharmacy claims) in order to create and include risk factors on condition history, utilization, and pharmacy use. Second, unlike studies that use only a single hospital’s population, and thus are unable to account for readmissions that occur outside of a given health system, we can follow individuals across hospitals, thus accounting for the universe of readmissions for this study population. Finally, because of data availability, much research tends to focus on the Medicare fee-for-service (FFS) population; while Medicare FFS beneficiaries do account for a substantial fraction of inpatient admissions, the commercially insured population (including Medicare Advantage) has been growing over recent years.16

The study’s outcomes are readmissions for any reason within 14 days, 15–30 days, and 31–60 days following discharge from the index admission. Readmissions are treated as subsequent index admissions.

Model

We use multivariate logistic regression with an 80/20 train/test split in order to predict hospital readmission over varying timeframes: within 14 days after discharge, 15–30 days after discharge, and 31–60 days after discharge.

We use a large array of predictor variables to capture aspects of the index hospitalization (28 variables), condition history (91 variables), demographic factors (9 variables), utilization history (34 variables), pharmacy utilization (12 variables), and environmental risk factors (39 variables). Index hospitalization characteristics rely on information from the index admission (for example, length of stay and discharge disposition); the condition history, utilization history, and pharmacy utilization variables all rely on information up until, but not including, the index admission. Condition history factors are intended to control for patients’ underlying health status; utilization history variables are intended to control for patients’ access to care and history of care-seeking behaviors; and pharmacy utilization risk factors are intended to capture whether the patient has used select medications. Environmental risk factors are intended to capture social determinants of health.

The majority of these variables are defined as part of the Hilltop Institute’s Pre-AH Model, a risk prediction model that predicts avoidable hospital events in the Medicare FFS population in Maryland.17 We conducted a supplementary literature review in order to identify additional factors that have been shown to be relevant for predicting readmissions that were not in our existing risk factor library, and included these in the model. We operationalize these risk factors using the HCCI hospital, physician, and pharmacy health claims. A full list of variables used in each category is in Appendix 1. For additional information on the construction of the risk factors, readers should consult the codebook for the base model.18

We estimate eighteen models in total: six models in each of three different readmission timeframes. Model 1 includes only index admission characteristics; model 2 contains all the covariates from model 1, and condition history variables; model 3 contains all the covariates from model 2, and adds demographic information; model 4 contains all the covariates from model 3, and adds utilization covariates; model 5 contains all the covariates from model 4, and adds pharmacy utilization variables; and model 6 contains all the covariates from model 5, and adds ZIP code environmental risk factors.

We split the data in to 80% testing and 20% training samples, splitting on patient identifier in order to avoid prediction leakage. Model coefficients are generated using the 80% training sample (n = 1,771,550), and the coefficients are used to generate readmission risk scores in the holdout sample. Crucially, the prediction model coefficients are not estimated using the holdout sample; thus, the holdout sample predictions can be compared with actual readmission outcomes in order to test the accuracy of the model.

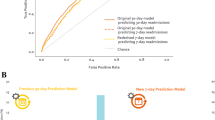

Predictive model performance can be assessed using the C-statistic, which is a measure of the area under the receiver operator curve (ROC).19 The ROC plots the true positive rate against the false positive rate for binary classifiers using successive cutoff thresholds, and the C-statistic “measures the probability that a randomly selected diseased subject has a higher predicted risk than a randomly selected non-diseased subject.”20 It is important to note that this only captures model discrimination, or the extent to which index admissions with a readmission are assigned a higher risk score than index admissions without a readmission. Model calibration is another important element of predictive model performance but is not addressed in this study.21

This study was deemed to be exempt from review by the University of Maryland Baltimore County Institutional Review Board. All analyses were conducted in SAS 9.4.22

Results

We first present summary statistics for our analytic sample of inpatient claims (Table 1). Across the 2,213,832 commercially insured inpatient admissions, 8.8% of inpatient admissions have a readmission within 14 days of discharge, 4.37% have a readmission from 15 to 30 days of discharge, and 4.94% have a readmission from 31 to 60 days of discharge. The preponderance of these inpatient admissions are individuals aged 55 and over, and in 61.14% of admissions, the patient has a history of hypertension; in 30.63%, the patient has a history of chronic kidney disease; in 29.62%, a history of diabetes; in 24.84%, a history of heart failure; and in 18.09%, a history of chronic obstructive pulmonary disorder.

We estimated six models for each of our readmission timeframes (within 14 days of discharge, 15–30 days after discharge, and 31–60 days after discharge). Results from the training dataset are presented in Table 2.

The model’s discriminatory power declines with readmission time horizon, and rises with the number of risk factor domains that are included. Model 1 includes only risk factors that are related to (and derived from) the index admission, and C-statistics range from 0.686 for the 14-day readmission horizon to 0.619 for the 31–60-day readmission window. The addition of condition flags, in model 2, improves the model’s discriminative ability across all readmission windows, but relatively more so among the longer time horizons. The performance in model 3 is virtually identical to that of model 2, indicating that the demographic factors—age bins and gender—add little to the model.

The inclusion of utilization history risk factors significantly improves the performance of the model. The C-statistic readmissions within 14 days increases from 0.737 in model 3 to 0.914 in model 4, for an improvement of 0.177. The other readmission time horizons experience a similar magnitude increase in discriminative power with the inclusion of utilization risk factors. Finally, the inclusion of pharmacy-based risk factors and environmental risk factors add relatively little to the model.

Finally, while we did not perform variable selection—and thus, in model 6, included over 200 predictors in both our model training and prediction—there is no evidence of overfitting. We present the analogous C-statistics for the holdout sample in Table 3, below, and note that these are almost identical to the C-statistics in the training sample.

Discussion

This study assessed the predictive accuracy of a hospital readmission prediction model using commercial claims from 2016 to 2017 across three separate time horizons. We document strong predictive performance for models that include index admission characteristics, demographic information, condition history, and utilization history risk factors. Moreover, we find that the predictive models are better able to predict proximal, rather than distant, events.

This research has two broad limitations. First, we do not distinguish between planned and unplanned readmissions. Researchers tend to filter out planned readmissions under the rationale that they are not indicative of poor hospital quality, and thus should not be prevented. We elected not to for two reasons: first, planned readmission algorithms tend to be subjective, and we are unaware of any widely used classification logic; and second, we strove for simplicity and reproducibility, and thus did not want to impose unneeded sample restrictions. Given that Horwitz et al. (2012) estimate planned readmissions to account for only 9% of all 30-day readmissions, we do not believe that the inclusion of planned readmissions meaningfully impacts our key results. The second limitation is that, as noted above, we do not assess model calibration. However, even without assessing calibration, high discriminative power of the model implies that patients can be accurately ranked according to their risk of readmission.

We believe that there are four significant contributions from this research. The first is that while researchers and policymakers tend to focus on readmissions in the Medicare FFS population, readmissions do occur in the commercially insured and Medicare Advantage (MA) populations (that is, the groups included in the HCCI data). While readmissions in the traditional Medicare FFS population have been used as the basis for the Hospital Readmission Reduction Program, the growing penetration of Medicare Advantage across the United States argues for a focus not just on traditional Medicare beneficiaries, but also individuals with MA coverage.16

The second contribution is that our models perform well relative to the published literature of readmission models. As noted in the introduction, prediction models of hospital readmission proliferate: a 2016 systematic review finds 60 studies with 73 unique predictive models published between 2011 and 2015.2 This same review finds that model performance varies significantly across studies, with reported C-statistics ranging from 0.21 to 0.88. An earlier systematic review includes 30 studies published in 2011 or earlier, and documents C-statistics ranging from 0.55 to 0.83.3 Other systematic reviews have documented similarly wide performance ranges.23,24

We are unaware of other readmission prediction models with C-statistics that exceed 0.91, and interpret this as a function of the explanatory power of our predictor domains. The risk factors in this model are the output of structured literature reviews and combine information from institutional, physician, and pharmacy claims, as well as publicly available environmental risk factors. Operationalizing these risk factors required a significant feature engineering effort, but the strong discriminative ability of the model may reflect the quality and scope of the risk factors.

As a corollary, our results demonstrate that methodologically transparent predictive models can perform well at predicting hospital readmissions over differing readmission time horizons. Given the general trend toward more complex, black-box models, we believe that this research provides an important counterweight for future researchers: multivariate logistic regression models, with a sufficient number of sufficiently high-quality predictors, can achieve very strong discriminative performance in predicting readmissions across multiple time horizons. Additionally, given that researchers have argued that a shorter readmission window may be more reflective of hospital quality or more reflective of preventable readmissions25,26,27, our model’s strong performance at predicting short-term readmissions (within 14 days of discharge) is particularly salient.

The third is that covariate domains matter for readmission prediction, but not all covariate domains matter equally. The inclusion of index-visit characteristics yields a relatively low amount of discriminative power for the model; the inclusion of condition history and utilization history improves model accuracy substantially. The outsized impact of the utilization history characteristics speaks to the implied importance of patients’ access to care and history of certain care-seeking behaviors in predicting readmission. Moreover, the modest predictive gains due to the inclusion of demographic factors, pharmacy utilization, and environmental risk factors indicate one of two possible explanations: either these factors are not causally linked to readmissions, or they do not encode information that is independent from that already captured by the other risk factor domains. We remain agnostic as to which of these possible explanations may dominate. Finally, we note that for individuals or organizations seeking to develop predictive models of hospital readmissions with limited data engineering resources, developing only the index admission risk factors, condition risk factors, and utilization risk factors may produce sufficiently discriminating predictive models.

The fourth contribution is the potential for these readmission models—or those like them—to inform policy. While the 30-day readmission measure has gained traction as a metric of hospital quality—forming the backbone of Medicare’s Hospital Readmission Reduction Program—there is relatively little evidence on the optimality of 30 days as a readmission benchmark. The 30-day cutoff is typically justified on the grounds that, while it is arbitrary, it picks up a signal reflective of hospital quality.28 However, some index admissions may be more (or less) susceptible to deficient in-hospital care than others, and the appropriate post-discharge window may differ from 30 days based on multiple factors.

An application of the modeling approach outlined in this study could be to identify—for a particular subset of patients admitted for a cause—the readmission window over which model performance is maximized according to some metric (thus, over which the signal-to-noise ratio is highest). Then, hospitals for which the observed number of readmissions exceeds the expected number for this patient population and this data-driven readmission window could, in a sense, be potentially thought of as providing deficient care for these patients with this particular cause of admission. This could be replicated across many disparate groups of patients and admission causes, thereby contributing to a nuanced, data-driven readmission policy.

Conclusion

This study assessed the predictive accuracy of a hospital readmission prediction model using commercial claims from 2016 to 2017. We estimate multivariate logistic regressions with stepwise inclusion of six predictor domains—index admission characteristics, condition history, demographics, utilization history, pharmacy utilization, and environmental risk factors—and model readmissions over three timeframes: within 14 days of discharge, 15–30 days of discharge, and 31–60 days of discharge. We document C-statistics for holdout samples ranging from 0.618 to 0.915, depending on readmission time horizon and included risk factor domains. The high discriminative accuracy of the model suggests that patients may be ranked by readmission risk with a high degree of precision, which may allow for proactive, targeted interventions for the highest-risk patients.

References

Morgan, D. J., Bame, B., Zimand, P., et al. (2019). Assessment of machine learning vs standard prediction rules for predicting hospital readmissions. JAMA Netw Open. 2(3), e190348-e190348.

Zhou, H., Della, P. R., Roberts, P., Goh, L., & Dhaliwal, S. S. (2016). Utility of models to predict 28-day or 30-day unplanned hospital readmissions: an updated systematic review. BMJ Open. 6(6).

Kansagara, D., Englander, H., Salanitro, A., et al. (2011). Risk prediction models for hospital readmission: a systematic review. JAMA. 306(15), 1688-1698.

Rajkomar, A., Oren, E., Chen, K., et al. (2018). Scalable and accurate deep learning with electronic health records. NPJ Digital Med. 1(1), 18.

Shadmi, E., Flaks-Manov, N., Hoshen, M., Goldman, O., Bitterman, H., & Balicer, R. D. (2015). Predicting 30-day readmissions with preadmission electronic health record data. Medical Care. 53(3), 283-289.

Yu, S., Farooq, F., Van Esbroeck, A., Fung, G., Anand, V., & Krishnapuram, B. (2015). Predicting readmission risk with institution-specific prediction models. Artif Intell Med. 65(2), 89-96.

Gildersleeve, R., & Cooper, P. (2013). Development of an automated, real time surveillance tool for predicting readmissions at a community hospital. Appl Clin Inform. 4(2), 153.

Shulan, M., Gao, K., & Moore, C. D. (2013). Predicting 30-day all-cause hospital readmissions. Health Care Manag Sci. 16(2), 167-175.

Brüngger, B., & Blozik, E. (2019). Hospital readmission risk prediction based on claims data available at admission: a pilot study in Switzerland. BMJ Open. 9(6), e028409.

Chen, S., Kong, N., Sun, X., Meng, H., & Li, M. (2019). Claims data-driven modeling of hospital time-to-readmission risk with latent heterogeneity. Health Care Manag Sci. 22(1), 156-179.

Collins, J., Abbass, I. M., Harvey, R., et al. (2017). Predictors of all-cause 30 day readmission among Medicare patients with type 2 diabetes. Curr Med Res Opin. 33(8), 1517-1523.

Yale New Haven Health Services Corporation; 2021 Hospital-Wide Readmission Measure Updates and Specifications Report – Version 10.0. Centers for Medicare & Medicaid Services (CMS). April 2021; accessed March 25, 2022. https://qualitynet.cms.gov/files/6094393d2be51c001edf6163?filename=2021_HWR_AUS_Report.pdf

Keenan, P. S., Normand, S. L. T., Lin, Z., et al. (2008). An administrative claims measure suitable for profiling hospital performance on the basis of 30-day all-cause readmission rates among patients with heart failure. Circ Cardiovascular Qual Outcomes. 1(1), 29-37.

Health Care Cost Institute. HCCI's external researcher publications powered by #HCCIdata. Health Care Cost Institute. Accessed September 21, 2022. https://healthcostinstitute.org/hcci-originals/external-research/

Maeda, J. L. K., & Nelson, L. (2018). How do the hospital prices paid by Medicare Advantage plans and commercial plans compare with Medicare fee-for-service prices?. INQUIRY: The Journal of Health Care Organization, Provision, and Financing. 55, 0046958018779654.

Jacobson, G., Freed, M., Damico, A., & Neuman, T. (2019). A dozen facts about Medicare Advantage in 2019. The Henry J. Kaiser Family Foundation. Retrieved from: https://www.kff.org/medicare/issue-brief/a-dozen-facts-about-medicare-advantage-in-2019/

Henderson, M., Han, F., Perman, C., Haft, H., & Stockwell, I. (2022). Predicting avoidable hospital events in Maryland. Health Serv Res. 57(1), 192-199.

Henderson, M., Han, F., & Stockwell, I. (2020, June 29). Maryland Primary Care Program (MDPCP) Pre-AH risk score specifications and codebook (Version 3). Baltimore, MD: The Hilltop Institute, UMBC.

Steyerberg, E. W., Vickers, A. J., Cook, N. R., et al. (2010). Assessing the performance of prediction models: A framework for some traditional and novel measures. Epidemiology. 21(1), 128.

Mauguen, A., & Begg, C. B. (2016). Using the Lorenz curve to characterize risk predictiveness and etiologic heterogeneity. Epidemiology. 27(4), 531

Alba, A. C., Agoritsas, T., Walsh, M., et al. (2017) Discrimination and calibration of clinical prediction models: Users’ guides to the medical literature. JAMA. 318(14), 1377-1384.

SAS Institute Inc. (2013). SAS® 9.4 Statements: Reference. Cary, NC: SAS Institute Inc.

Mahajan, S. M., Heidenreich, P., Abbott, B., Newton, A., & Ward, D. (2018). Predictive models for identifying risk of readmission after index hospitalization for heart failure: A systematic review. Eur J Cardiovasc Nurs. 17(8), 675-689.

Artetxe, A., Beristain, A., & Grana, M. (2018). Predictive models for hospital readmission risk: A systematic review of methods. Comput Methods Programs Biomed. 164, 49-64.

Chin, D. L., Bang, H., Manickam, R. N., & Romano, P. S. (2016). Rethinking thirty-day hospital readmissions: shorter intervals might be better indicators of quality of care. Health Affairs. 35(10), 1867-1875.

Graham, K. L., Auerbach, A. D., Schnipper, J. L., et al. (2018). Preventability of early versus late hospital readmissions in a national cohort of general medicine patients. Ann Intern Med. 168(11), 766-774.

Joynt, K. E., & Jha, A. K. (2012). Thirty-day readmissions—truth and consequences. N Engl J Med, 366(15), 1366-1369.

Horwitz, L., Partovian, C., Lin, Z., et al. Hospital-wide all-cause unplanned readmission measure: final technical report. Centers for Medicare and Medicaid Services.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

ESM 1

(DOCX 14 kb)

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Henderson, M., Hirshon, J.M., Han, F. et al. Predicting Hospital Readmissions in a Commercially Insured Population over Varying Time Horizons. J GEN INTERN MED 38, 1417–1422 (2023). https://doi.org/10.1007/s11606-022-07950-2

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11606-022-07950-2