Abstract

Background

Physicians “purchase” many health care services on behalf of patients yet remain largely unaware of the costs of these services. Electronic health record (EHR) cost displays may facilitate cost-conscious ordering of health services.

Objective

To determine whether displaying hospital lab and imaging order costs is associated with changes in the number and costs of orders placed.

Design

Quasi-experimental study.

Participants

All patients with inpatient or observation encounters across a multi-site health system from April 2013 to September 2015.

Intervention

Display of order costs, based on Medicare fee schedules, in the EHR for 1032 lab tests and 1329 imaging tests.

Main Measures

Outcomes for both lab and imaging orders were (1) whether an order was placed during a hospital encounter, (2) whether an order was placed on a given patient-day, (3) number of orders placed per patient-day, and (4) cost of orders placed per patient-day.

Key Results

During the lab and imaging study periods, there were 248,214 and 258,267 encounters, respectively. Cost display implementation was associated with decreased odds of any lab or imaging being ordered during the encounter (lab adjusted odds ratio [AOR] = 0.97, p = .01; imaging AOR = 0.97, p < .001), decreased odds of any lab or imaging being ordered on a given patient-day (lab AOR = 0.95, p < .001; imaging AOR = 0.97, p < .001), a decreased number of lab or imaging orders on patient-days with orders (lab adjusted count ratio = 0.93, p < .001; imaging adjusted count ratio = 0.98, p < .001), and a decreased cost of lab orders but an increased cost of imaging orders on patient-days with orders (lab adjusted cost ratio = 0.93, p < .001; imaging adjusted cost ratio = 1.02, p = .003). Overall, the intervention was associated with 8.5% and 1.7% reductions in lab and imaging costs per patient-day, respectively.

Conclusions

Displaying costs within EHR ordering screens was associated with decreases in the number and costs of lab and imaging orders.

INTRODUCTION

Despite the need to control health spending, the costs of specific health care services remain largely opaque to physicians and patients. Public and private initiatives, including consumer cost-searching websites, insurer reference pricing, and state price transparency regulations, have sought to increase the availability and meaningfulness of cost information to patients. The potential role of physicians, however, who “purchase” most health care services on behalf of patients, has not been thoroughly examined. Physicians remain largely unaware of the costs of tests and therapies they order and find cost information inaccessible.1,2 Nevertheless, physicians desire cost information and believe it would change their ordering without negatively affecting patient care3,4 by prompting them to forgo ordering low-value tests or to switch orders to less costly alternatives of equal clinical utility.

Studies from the 1990s suggested that cost information may influence physician ordering behavior and decrease spending,5,6,7 yet cost information has not become a standard part of the ordering environment, and limited research in more recent health care contexts has shown conflicting findings.8,9,10,11,12,13 Modern electronic health records (EHRs) with computerized physician order entry (CPOE) systems provide a scalable opportunity for real-time display of cost information to physicians during the ordering process. If EHR cost displays effectively alter physician ordering behavior, the technology could be widely disseminated as a means to promote cost-conscious ordering of health care services.

To address this gap in knowledge and to clarify the potential of EHR cost displays to alter ordering patterns, we conducted a large quasi-experimental longitudinal study on all hospital lab and imaging orders across a multi-site health system. Our objective was to test the hypothesis that real-time display of lab and imaging order costs within EHR ordering screens would reduce the daily cost of those orders.

METHODS

Setting and Participants

We conducted our study at an academic health system in the Northeast USA over a 30-month period from April 14, 2013, to September 30, 2015. Our health system contained three hospitals: a large academic center, an urban community hospital, and a community hospital serving an affluent population. We included all patients with inpatient or observation encounters at these hospitals during the study period. For these patients, we measured all lab and imaging orders placed by any provider, including physicians and advanced practice providers in addition to other staff such as pharmacists, dieticians, and nurses entering verbal orders from clinicians. We excluded orders placed by the Emergency Department staff, as differences in their ordering screens precluded their adoption of the cost display intervention.

Intervention

Our health system implemented its Epic EHR (Epic Systems Corporation; Verona, Wisconsin) across all three hospitals beginning April 2012. Costs were displayed in the ordering screens for lab tests beginning April 14, 2014, and for imaging tests beginning October 20, 2014. Each of these implementations occurred simultaneously across the health system because staging by hospital was not technically feasible in the EHR. The displayed lab costs were based on the 2014 Medicare Laboratory Fee Schedule, using our state-specific reimbursement rate. The displayed imaging costs were the sum of the facility reimbursement from the 2014 Medicare Hospital Outpatient Prospective Payment System Fee Schedule and the state-specific professional reimbursement from the 2014 Medicare Physician Fee Schedule. We displayed costs for 1032 unique lab tests and 1329 unique imaging tests. The cost display is described in detail in the Online Technical Appendix and is depicted in Fig. 1.

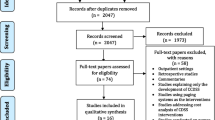

For lab orders, the study period was set as 12 months preceding and following the intervention start date (April 14, 2013–April 14, 2015). For imaging orders, the study period was originally set as 12 months preceding and following the intervention (October 20, 2013–October 20, 2015), but data collection was stopped 3 weeks early (September 30, 2015) to avoid potential confounding from the switch to International Classification of Diseases (ICD) 10 coding (Online Appendix Fig. 1).

Data Collection

We collected data on characteristics of lab and imaging orders placed during the study period (e.g., date and time the order was placed, displayed cost) and data about all encounters, including admission and discharge dates, encounter type (inpatient/observation), primary payer, ICD diagnosis and procedure codes, and patient demographics (e.g., date of birth, sex, race, ethnicity). This study was approved by our Human Investigation Committee.

Outcome Measures

We sequentially modeled four outcomes for both the lab and imaging orders, including (1) whether any order was placed for the patient during a given hospital encounter (yes/no); (2) among encounters where at least one order was placed for the patient, whether any such order was placed on a given day (i.e., patient-day) during the encounter (yes/no); (3) among patient-days with any order, the number of orders that were placed (i.e., a count variable); and (4) among patient-days with any order, the total cost of orders that were placed (measured in dollars based on the costs that were displayed). Displayed costs were used to estimate order costs, and hence all costs were reported in 2014 US dollars. As a patient safety outcome, we also assessed in-hospital mortality.

Exposure Variable

For analysis of lab orders, encounters starting on or after April 14, 2014, were coded as post-intervention, while encounters starting earlier were coded as pre-intervention. Imaging orders were coded analogously, with encounters starting on or after October 20, 2014, coded as post-intervention. An encounter starting in the 6 months between the lab and imaging cost display implementations was coded post-intervention in the lab analysis and pre-intervention in the imaging analysis.
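The exposure coding described above can be sketched in a few lines. This is a minimal illustration, not the study's analysis code (the analyses were run in SAS and Stata); the function name `code_exposure` and the dictionary `INTERVENTION_START` are hypothetical, and only the two go-live dates come from the text.

```python
from datetime import date

# Go-live dates for the cost displays, as stated in the text.
INTERVENTION_START = {
    "lab": date(2014, 4, 14),
    "imaging": date(2014, 10, 20),
}

def code_exposure(encounter_start: date, order_type: str) -> str:
    """Return 'post' if the encounter started on or after the go-live date, else 'pre'."""
    return "post" if encounter_start >= INTERVENTION_START[order_type] else "pre"

# An encounter starting between the two go-live dates is exposed for labs only.
example_start = date(2014, 7, 1)
print(code_exposure(example_start, "lab"))      # post
print(code_exposure(example_start, "imaging"))  # pre
```

Coding by encounter start date means an encounter keeps a single exposure status even if it spans a go-live date.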

Statistical Methods

The unit of analysis was a patient-day, nested within hospital encounter. Data for labs and for imaging were analyzed separately using the same method. Because a substantial proportion of encounters and patient-days had zero orders, our approach to examining the association of the intervention with orders and costs had three parts: a logistic regression model at the encounter level estimating the likelihood of having any order during an encounter, a logistic regression model at the patient-day level estimating the likelihood of having any order in a patient-day among encounters where orders occurred, and a generalized linear model with a truncated Poisson distribution for the number of orders (or a generalized linear model with a gamma distribution for costs of orders) in a patient-day when orders occurred. Each model adjusted for several covariates, including patient age, sex, race, ethnicity, insurance type, Elixhauser comorbidity index, hospital site, calendar month of admission, and day number of hospital stay. Because our preliminary analysis suggested no evidence for statistically significant ordering differences as a function of the number of days pre- and post-intervention, we did not include parameters for temporal trend in our final models. Parameter estimates from our models were then used to calculate expected cost savings from the intervention in terms of overall order costs per patient-day. We used a bootstrapping methodology to construct 95% confidence intervals (CI) for the estimated cost savings. The full statistical modeling is described in the Online Technical Appendix.
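As a reading aid, the three-part structure can be written as a product of the quantities that each model estimates. This is a sketch of the general hurdle-model logic implied by the description above, not the authors' exact specification, which is given in the Online Technical Appendix. The symbols $\pi^{E}$, $\pi^{D}$, $\mu$, and $\lambda$ are notation introduced here for illustration, and $x$ stands for the covariates of a given patient-day.

```latex
\begin{align}
  \mathbb{E}[\text{cost per patient-day} \mid x]
    &= \pi^{E}(x)\,\pi^{D}(x)\,\mu(x), \\
  \pi^{E}(x) &= \Pr(\text{any order during the encounter} \mid x), \\
  \pi^{D}(x) &= \Pr(\text{any order on the patient-day} \mid \text{encounter has an order},\, x), \\
  \mu(x)     &= \mathbb{E}[\text{cost} \mid \text{patient-day has an order},\, x].
\end{align}
% For the count outcome, a zero-truncated Poisson with rate \lambda has mean
\begin{equation}
  \mathbb{E}[Y \mid Y > 0] = \frac{\lambda}{1 - e^{-\lambda}} .
\end{equation}
```

The product form follows from the law of total expectation, because cost is zero on any patient-day without an order. Under this reading, the predicted cost with and without the cost display would be obtained by evaluating the product with the intervention indicator switched on or off and averaging over patient-days.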

In addition, we compared in-hospital mortality rates per patient-day before versus after the intervention using chi-squared tests. Analyses were conducted using SAS v9.4 (SAS Institute; Cary, NC) and Stata v14.1 (StataCorp; College Station, TX). This report follows Standards for Quality Improvement Reporting Excellence (SQUIRE) guidelines.

RESULTS

Lab Cost Display

During the 24 months of the lab study period, there were 156,655 unique patients at our health system generating 248,214 inpatient or observation encounters and 1,322,073 patient hospital days, of which 47.1% occurred in the 12 months before the cost display implementation, and 52.9% occurred in the 12 months after the implementation (Table 1).

The lab cost display intervention was associated with a decreased proportion of patients with any lab ordered during their encounter (87.5 vs. 89.1%; adjusted odds ratio [AOR] 0.97; 95% CI 0.94–0.99). Among those patients with labs ordered during their encounter, the cost display was associated with a decreased proportion of patient-days with any lab ordered (54.4 vs. 55.6%; AOR 0.95; 95% CI 0.94–0.96). Among those patient-days in which labs were ordered, the cost display was associated with a decrease in the daily number of labs ordered (4.0 vs. 4.3; adjusted count ratio 0.93; 95% CI 0.93–0.93) and a decrease in the daily cost of labs ordered ($68.79 vs. $76.07; adjusted cost ratio 0.93; 95% CI 0.93–0.94) (Fig. 2a, Online Appendix Table 1).

The predicted lab cost per patient-day with the cost display was $35.96 (95% bootstrap CI, $34.57 to $37.36), compared to $39.32 (95% bootstrap CI, $37.73 to $40.86) without the cost display, resulting in an estimated savings of $3.35 per patient-day (95% bootstrap CI $1.97 to $4.72), or 8.5% (95% bootstrap CI 5.2 to 11.7%) (Fig. 3a). Among our bootstrap samples, all showed a cost savings associated with the cost display intervention.
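The relationship between the two predicted costs and the reported savings can be checked with simple arithmetic. The sketch below uses only the rounded point estimates quoted above; the helper name `savings_summary` is hypothetical. Because the inputs are rounded, the absolute difference (about $3.36) can differ from the published $3.35 by a cent, while the percentage still rounds to 8.5%.

```python
def savings_summary(cost_without: float, cost_with: float) -> tuple:
    """Return (absolute savings in dollars, savings as a percent of the cost without display)."""
    absolute = cost_without - cost_with
    percent = 100 * absolute / cost_without
    return absolute, percent

# Rounded predicted lab costs per patient-day from the text.
lab_abs, lab_pct = savings_summary(cost_without=39.32, cost_with=35.96)
print(f"${lab_abs:.2f} per patient-day, {lab_pct:.1f}%")
```

The percentage is expressed relative to the predicted cost without the cost display, which serves as the counterfactual baseline.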

Figure 3. Costs per patient hospital day relative to cost display intervention. a Lab costs. b Imaging costs. Red lines are predicted costs based on model parameters and covariates. Dashed purple lines after the cost display intervention are counterfactual costs, which represent predicted costs without the cost display intervention. The post-intervention time period for imaging costs was slightly shorter because data collection was stopped 3 weeks early to avoid a potential confounding effect of the switch to International Classification of Diseases (ICD) 10 coding.

Imaging Cost Display

During the 24 months of the imaging study period, there were 161,813 unique patients at our health system generating 258,267 inpatient or observation encounters and 1,363,752 patient hospital days, of which 51.1% occurred in the 12 months before the cost display implementation, and 48.9% occurred in the 12 months after the implementation (Table 1).

The imaging cost display intervention was associated with a decreased proportion of patients with any imaging ordered during their encounter (41.5 vs. 42.3%; AOR 0.97; 95% CI 0.95–0.98). Among those patients with imaging ordered during their encounter, the cost display was associated with a decreased proportion of patient-days with any imaging ordered (28.5 vs. 29.4%; AOR 0.97; 95% CI 0.96–0.98). Among those patient-days in which imaging was ordered, the cost display was associated with a decrease in the daily number of imaging tests ordered (1.4 vs. 1.5; adjusted count ratio 0.98; 95% CI 0.97–0.99) but an increase in the daily cost of imaging tests ordered ($243.17 vs. $239.40; adjusted cost ratio 1.02; 95% CI 1.01–1.03) (Fig. 2b, Online Appendix Table 2).

The predicted imaging cost per patient-day with the cost display was $37.74 (95% bootstrap CI $35.68 to $39.63), compared to $38.38 (95% bootstrap CI $36.18 to $40.28) without the cost display, resulting in an estimated savings of $0.65 per patient-day (95% bootstrap CI −$1.98 to $2.66), or 1.7% (95% bootstrap CI −5.3 to 6.7%) (Fig. 3b). Among our bootstrap samples, 62.6% showed a cost savings associated with the cost display intervention.

Patient Outcomes

In-hospital mortality in the lab component of the study decreased from 1.71% during the pre-intervention period to 1.60% during the post-intervention period (p = 0.02). In the imaging component of the study, in-hospital mortality remained stable (1.66% during the pre-intervention period versus 1.62% during the post-intervention period, p = 0.44).

DISCUSSION

In this hospital-based study at a large academic health system, we found that passively displaying costs within the EHR for lab and imaging tests was associated with a decrease in the odds of patients having any lab or imaging order placed during their hospital encounter, the odds of patients having any lab or imaging order placed on a given hospital day, and the quantity of lab and imaging orders placed per hospital day. The cost displays were associated with a mean decrease of 8.5% in aggregate lab costs per patient-day and likely reduced imaging costs per patient-day, with no associated increase in in-hospital mortality.

Our findings fall amid conflicting recent reports on the impact of cost display interventions. For example, a 2013 study which displayed costs in an EHR for 30 inpatient lab tests found that order costs for these tests decreased by 9.6% per patient-day, while order costs for tests without costs displayed increased by 2.9% over 6 months.10 Another study observed that among 27 outpatient lab tests with costs displayed, five showed significant decreases in order volume of up to 15.2%.11 A recent randomized trial displaying costs for 30 lab test groups, however, found no decrease in test volume or cost compared to control lab tests.12 As noted by two recent systematic reviews, high-quality evidence for the impact of cost displays, especially within a modern context, remains limited.13,14 Among studies from the past decade that measured the impact of incorporating cost information into EHR ordering screens, none has implemented cost displays for an entire class of orders (e.g., lab, imaging, or medications) and measured the impact of cost display as a sole intervention on physician ordering behavior.8,9,10,11,12,15,16 Our study improves on the existing studies by displaying costs for over 2000 lab and imaging tests across a diverse multi-hospital system, measuring ordering patterns associated with cost display over the largest number of hospital admissions and unique patients, breaking down the ordering outcome into the multiple components that together comprise total costs per patient-day, and employing a rigorous analysis to control for many potential confounders.

The decreases we observed in both the odds of any lab or imaging order being placed, and the quantity of those orders, suggest that the overall decrease in order costs may have been largely attributable to physicians omitting orders of lower clinical utility. The decrease in order costs could also be partially attributable to physicians substituting less expensive orders of equal clinical utility, although our analysis does not allow us to test this hypothesis. The only utilization measure that increased in association with the cost display was imaging costs per patient-day when imaging orders were placed. The concurrent decrease in both the quantity of imaging orders placed on those same days and the overall number of patient-days with any imaging order suggests that less essential low-cost imaging tests might have been omitted, while more essential high-cost tests remained. The reduction in patient-days with only low-cost imaging tests may thus have resulted in a higher average cost among the remaining days with imaging orders. This might explain why imaging costs on patient-days with imaging orders increased, while aggregate imaging cost per patient-day decreased.

We found a larger impact of the cost display on lab ordering than on imaging ordering, both in terms of order volume and order cost. This could have several explanations. First, physicians may more often have considered lab tests to be discretionary than they did imaging tests. Second, because our study was conducted in an academic system where house staff and advanced practice providers enter most orders, it is possible that attending physicians (whose practice might not have been as affected by the cost display because they were not regularly exposed to it) were responsible for requesting a greater fraction of patients’ imaging tests than lab tests. Third, it is likely that physicians were more often able to substitute less expensive tests of equal clinical utility when ordering labs than when ordering imaging.

Our cost display was purely informational, did not modify workflow, did not force any change in behavior, and met a perceived need among physicians. The modest cost reductions we observed may reflect physicians not always noticing the unobtrusive display, considering low test costs not to warrant changing their ordering, or deciding most lab and imaging orders were clinically appropriate regardless of cost. Further research is needed to understand physicians’ experience with cost display interventions to identify opportunities for improvement. When applied over all patient-days in our post-intervention time period, the estimated cost reductions associated with the cost display equate to $2,341,807 and $433,178 in 12-month lab and imaging cost savings, respectively, across our health system. Although we recognize that these cost estimates based on Medicare fee schedules for individual tests may not reflect actual costs of care, these estimates helped isolate and quantify the approximate savings associated with reduced ordering of these services. While further research is still needed to assess the appropriateness of the orders omitted, cost displays may benefit patients by avoiding unnecessary lab and imaging tests with the attendant pain, disruption, and risk of false positive results. Electronic health records provide the ideal environment in which to display costs, but their functionality to do so would need improvement to enable broad adoption. Policy makers could facilitate this process by including cost display as part of EHR incentive programs. Additionally, cost displays could be expanded beyond lab and imaging orders to other order types—such as medications, procedures, or durable medical equipment.
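The system-wide savings figures above can be approximately reproduced from numbers reported in the Results: total patient-days, the share of patient-days falling after implementation, and the estimated savings per patient-day. This is a rough check under the assumption that savings scale linearly with post-intervention patient-days; the function name `aggregate_savings` is hypothetical, and small gaps from the published totals are expected because the inputs used here are rounded.

```python
def aggregate_savings(total_patient_days: int, post_share: float, savings_per_day: float) -> float:
    """Approximate total savings over the post-intervention patient-days."""
    return total_patient_days * post_share * savings_per_day

# Inputs taken from the Results (rounded as reported).
lab_total = aggregate_savings(1_322_073, 0.529, 3.35)
imaging_total = aggregate_savings(1_363_752, 0.489, 0.65)

# Totals as reported in the Discussion.
reported = {"lab": 2_341_807, "imaging": 433_178}
for name, estimate in (("lab", lab_total), ("imaging", imaging_total)):
    gap = 100 * (estimate - reported[name]) / reported[name]
    print(f"{name}: ~${estimate:,.0f} (differs from reported by {gap:+.2f}%)")
```

With these rounded inputs, both estimates land within about a tenth of a percent of the published totals.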

There are some limitations to our study. First, we did not have a concurrent control group, and hence cannot fully account for possible confounding of temporal trends in lab and imaging ordering. However, we tried to account for a rich set of covariates to help minimize such impact, and there was no other major initiative influencing lab and imaging use at our health system during the study period. Given that order costs were stable before our intervention, dropped immediately after the cost display implementations, and then stabilized once again during the post-intervention period (Fig. 3), the likelihood that temporal trends confounded our findings is low. Future studies with a strong concurrent control group would help validate our findings. However, given the knowledge-based nature of cost display interventions, future research should be mindful of the unique challenges in selecting appropriate control groups. For example, when prior cost display studies, including recent randomized studies, have attempted to use concurrent control groups, contamination between groups or interference between units17 has frequently obscured the impact of the intervention.9,10,13,18 A second limitation is that our ability to reliably assess the impact of the cost display on patient safety outcomes was limited. Although based on unadjusted results, the lack of an increase in in-hospital mortality in our study provides modest reassurance and is in line with older reports of cost display interventions which also did not note an impact on safety outcomes.6,7,19,20 Third, our study was conducted at a single health system in the inpatient and observation settings only. Although the diversity of hospitals in our system helps improve the generalizability of our findings to other locations, our results may not extend to outpatient settings or to other health systems. Replication of the intervention at other health systems would provide additional insights.

In conclusion, we found that displaying costs to physicians within EHR ordering screens for lab and imaging orders was associated with modest decreases in the number and costs of these orders during hospital inpatient and observation encounters at our health system. Our results suggest that physicians can be “nudged” to be more cognizant of the cost implications of their ordering.

References

1. Allan GM, Lexchin J. Physician awareness of diagnostic and nondrug therapeutic costs: A systematic review. International journal of technology assessment in health care. 2008;24(2):158–165.

2. Long T, Silvestri MT, Dashevsky M, Halim A, Fogerty RL. Exit Survey of Senior Residents: Cost Conscious but Uninformed. J Grad Med Educ. 2016;8(2):248–251.

3. Allan GM, Lexchin J, Wiebe N. Physician awareness of drug cost: A systematic review. PLoS medicine. 2007;4(9):1486–1496.

4. Long T, Bongiovanni T, Dashevsky M, et al. Impact of laboratory cost display on resident attitudes and knowledge about costs. Postgraduate medical journal. 2016.

5. Hampers LC, Cha S, Gutglass DJ, Krug SE, Binns HJ. The effect of price information on test-ordering behavior and patient outcomes in a pediatric emergency department. Pediatrics. 1999;103(4 Pt 2):877–882.

6. Tierney WM, Miller ME, McDonald CJ. The effect on test ordering of informing physicians of the charges for outpatient diagnostic tests. The New England journal of medicine. 1990;322(21):1499–1504.

7. Tierney WM, Miller ME, Overhage JM, McDonald CJ. Physician inpatient order writing on microcomputer workstations. Effects on resource utilization. JAMA: the journal of the American Medical Association. 1993;269(3):379–383.

8. Chien AT, Lehmann LS, Hatfield LA, et al. A Randomized Trial of Displaying Paid Price Information on Imaging Study and Procedure Ordering Rates. Journal of general internal medicine. 2017;32(4):434–448.

9. Durand DJ, Feldman LS, Lewin JS, Brotman DJ. Provider cost transparency alone has no impact on inpatient imaging utilization. Journal of the American College of Radiology: JACR. 2013;10(2):108–113.

10. Feldman LS, Shihab HM, Thiemann D, et al. Impact of providing fee data on laboratory test ordering: a controlled clinical trial. JAMA internal medicine. 2013;173(10):903–908.

11. Horn DM, Koplan KE, Senese MD, Orav EJ, Sequist TD. The impact of cost displays on primary care physician laboratory test ordering. Journal of general internal medicine. 2014;29(5):708–714.

12. Sedrak MS, Myers JS, Small DS, et al. Effect of a Price Transparency Intervention in the Electronic Health Record on Clinician Ordering of Inpatient Laboratory Tests: The PRICE Randomized Clinical Trial. JAMA internal medicine. 2017.

13. Silvestri MT, Bongiovanni TR, Glover JG, Gross CP. Impact of price display on provider ordering: A systematic review. Journal of hospital medicine: an official publication of the Society of Hospital Medicine. 2016;11(1):65–76.

14. Goetz C, Rotman SR, Hartoularos G, Bishop TF. The Effect of Charge Display on Cost of Care and Physician Practice Behaviors: A Systematic Review. Journal of general internal medicine. 2015.

15. Fang DZ, Sran G, Gessner D, et al. Cost and turn-around time display decreases inpatient ordering of reference laboratory tests: a time series. BMJ quality & safety. 2014.

16. Nougon G, Muschart X, Gerard V, et al. Does offering pricing information to resident physicians in the emergency department potentially reduce laboratory and radiology costs? European journal of emergency medicine: official journal of the European Society for Emergency Medicine. 2014.

17. Rosenbaum PR. Interference between units in randomized experiments. J Am Stat Assoc. 2007;102(477):191–200.

18. Bates DW, Kuperman GJ, Jha A, et al. Does the computerized display of charges affect inpatient ancillary test utilization? Archives of internal medicine. 1997;157(21):2501–2508.

19. Lin YC, Miller SR. The impact of price labeling of muscle relaxants on cost consciousness among anesthesiologists. J Clin Anesth. 1998;10(5):401–403.

20. McNitt J, Bode E, Nelson R. Long-term pharmaceutical cost reduction using a data management system. Anesthesia and Analgesia. 1998;87(4):837–842.

Acknowledgements

The authors would like to thank Dr. Thomas Balcezak, Mr. Stephen Allegretto, Ms. Lisa Stump, Dr. Allen Hsiao, and Dr. Hyung Paek of Yale New Haven Health System for their support in this project.

Contributors

Dr. Silvestri, Dr. Xu, Dr. Bernstein, Dr. Chaudhry, Mrs. Silvestri, Dr. Gross, and Dr. Krumholz were responsible for the conception and design of the work. Dr. Silvestri, Dr. Xu, Dr. Long, Dr. Bongiovanni, Dr. Stolar, Dr. Greene, Dr. Dziura, and Dr. Krumholz were responsible for the acquisition, analysis, and interpretation of data for the work. Dr. Silvestri, Dr. Xu, Dr. Stolar, Dr. Greene, and Dr. Krumholz were responsible for drafting the manuscript, which was critically reviewed for important intellectual content by Dr. Long, Dr. Bongiovanni, Dr. Bernstein, Dr. Chaudhry, Mrs. Silvestri, Dr. Dziura, and Dr. Gross. All authors gave final approval of the version to be published and agree to be accountable for the integrity and accuracy of all aspects of the work.

Funding

No external grants or funds were used to support this work. Dr. Silvestri was supported by the Robert Wood Johnson Foundation Clinical Scholars Program at Yale University.

Ethics declarations

Conflict of Interest

The authors declare that they do not have conflicts of interest.

Additional information

This work has not been previously presented.

Electronic Supplementary Material

ESM 1

(DOCX 762 kb)

Cite this article

Silvestri, M.T., Xu, X., Long, T. et al. Impact of Cost Display on Ordering Patterns for Hospital Laboratory and Imaging Services. J GEN INTERN MED 33, 1268–1275 (2018). https://doi.org/10.1007/s11606-018-4495-6
