Abstract

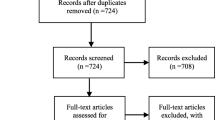

We conducted a review of articles published in 2013 to identify high-quality research in medical education that was relevant to general medicine education practice. Our review team consisted of six general internists of varying academic rank with expertise in medical education, as well as a professional medical librarian. Working in pairs, we manually searched 15 journals, and we performed an online search using the PubMed search engine for all original research articles in medical education published in 2013. From the total 4,181 citations identified, we selected 65 articles considered most relevant to general medicine educational practice. Each team member then independently reviewed and rated the quality of each selected article using the modified Medical Education Research Study Quality Instrument. We then reviewed the quality and relevance of each selected study and grouped them into categories of propensity for inclusion. Nineteen studies were judged to be of adequate quality and of moderate to high propensity for inclusion. Team members then independently voted for the studies they felt to be of the highest relevance and quality among these 19. The ten articles with the greatest number of votes were included in the review. We categorized the studies into five general themes: Improving Clinical Skills in UME, Inpatient Clinical Teaching Methods, Advancements in Continuity Clinic, Handoffs/Transitions in Care, and Trainee Assessment. Most studies in our review of the 2013 literature in general medical education were limited to single institutions and non-randomized study designs; we identified significant limitations of each study. Selected articles may inform future research and practice of medical educators.

Undergraduate medical education (UME) and graduate medical education (GME) must rapidly change to meet the evolving societal needs of healthcare. Requirements for competency-based assessment of learners and measures of training outcomes,1 together with duty hour restrictions and new regulatory obligations, have both motivated innovation in medical education and limited the time available for general medicine educators to stay abreast of current advances in medical education. Accordingly, we conducted a review to identify and summarize the most relevant, high-quality research in medical education published in 2013 in order to inform general medicine educators in teaching, curricula development, and assessment.

Methods

Our review team consisted of six general internists with expertise in medical education, as well as a professional medical librarian (KD), reflecting the diverse experiences and perspectives of UME and GME leadership of varying seniority and clinician educators in teaching, curriculum development, implementation, assessment, and evaluation of learners across the continuum at geographically and culturally distinct academic medical centers.

We worked in pairs to manually search all studies published between January and December 2013 in 15 major clinical journals relevant to medical education (Table 1). Two authors reviewed the titles and abstracts in each journal, and all original medical education research articles were identified. Paired reviewers reached consensus on interesting, well-designed articles to discuss with the entire group. We conducted a PubMed search using the terms (research OR outcome OR evaluation) AND (“medical education”) AND (“medical students” OR intern OR interns OR PGY OR resident OR residents) for additional relevant titles not included in the 15 journals.
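For readers who want to rerun a comparable search, the sketch below builds a request URL from the search terms reported above. It assumes the NCBI E-utilities `esearch` endpoint and a publication-date filter for 2013; the review does not state which PubMed interface or date filter was used, and the function name `build_search_url` is hypothetical. The code only constructs the URL and does not contact PubMed.

```python
from urllib.parse import urlencode, urlparse, parse_qs

# Search terms as reported in the Methods (straight quotes substituted for typographic ones).
TERM = (
    '(research OR outcome OR evaluation) AND ("medical education") AND '
    '("medical students" OR intern OR interns OR PGY OR resident OR residents)'
)

# Assumption: the NCBI E-utilities esearch endpoint; the review does not name an interface.
ESEARCH = "https://eutils.ncbi.nlm.nih.gov/entrez/eutils/esearch.fcgi"


def build_search_url(term: str = TERM, year: int = 2013) -> str:
    """Return an esearch URL restricted to one publication year (hypothetical helper)."""
    params = {
        "db": "pubmed",
        "term": term,
        "datetype": "pdat",            # filter on publication date
        "mindate": f"{year}/01/01",
        "maxdate": f"{year}/12/31",
        "retmax": 0,                   # ask for the hit count only, not the record IDs
    }
    return f"{ESEARCH}?{urlencode(params)}"


if __name__ == "__main__":
    print(build_search_url())
```

A count obtained this way today need not match the 1,461 citations reported in the Results, since PubMed indexing changes over time.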

All authors independently rated the quality of articles using the modified Medical Education Research Study Quality Instrument (MERSQI),2 a reliable scale developed to measure the methodological quality of experimental, quasi-experimental, and observational studies in medical education.3 Additionally, our team of reviewers assessed each study’s relevance to general medicine educators by timeliness of topic and ease of implementation. For example, if the study assessed a curriculum, the authors considered it for inclusion only if the topic was one that would likely be of interest and could be practically implemented at other institutions without requiring unique funding sources or administrative leadership support. Accordingly, studies were classified into one of five categories of propensity for inclusion: “Yes”, “High-Maybe”, “Maybe”, “Low-Maybe”, and “No”. There was no predetermined cutoff for scores or number of articles to include. The authors then discussed each article with a majority rating of Yes, High-Maybe, or Maybe. Afterwards, each reviewer independently voted to include up to ten articles. We included studies with the highest number of votes in the final review. Any group member with a conflict of interest related to a particular study abstained from discussion and voting on that article. After articles were selected, the team sorted them into thematic groups.
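The final voting step can be sketched as a simple tally. This is an illustration only: the reviewer and article identifiers are made up, the function name `tally_votes` is hypothetical, and the alphabetical tie-break is an assumption, since the text does not describe how ties were resolved.

```python
from collections import Counter


def tally_votes(ballots, max_votes=10, n_select=10):
    """Count one vote per article per reviewer and return the top n_select articles.

    ballots maps a reviewer ID to the list of article IDs that reviewer voted for.
    """
    counts = Counter()
    for reviewer, picks in ballots.items():
        unique_picks = set(picks)  # a reviewer cannot vote twice for the same article
        if len(unique_picks) > max_votes:
            raise ValueError(f"{reviewer} cast more than {max_votes} votes")
        counts.update(unique_picks)
    # Sort by vote count (descending), then by article ID so the result is deterministic.
    # The tie-break rule is an assumption, not part of the described method.
    ranked = sorted(counts.items(), key=lambda item: (-item[1], item[0]))
    return ranked[:n_select]


if __name__ == "__main__":
    # Hypothetical ballots from three reviewers.
    example = {
        "reviewer_1": ["A", "B"],
        "reviewer_2": ["A", "C"],
        "reviewer_3": ["A", "B"],
    }
    print(tally_votes(example, n_select=2))
```

A reviewer who abstains because of a conflict of interest would simply omit that article from their ballot.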

Results

We selected 55 articles to review from 2,720 titles in 15 journals published in 2013. A further ten articles were considered from an additional 1,461 citations retrieved from PubMed. Group consensus for propensity for inclusion across studies was 7 “Yes”, 4 “High-Maybe”, 4 “Maybe”, 4 “Low-Maybe”, and 46 “No”. Most studies placed in the “No” category were due to low quality or lack of clear relevant outcomes. The ten studies with the most votes were included (Appendix) and then categorized into five general themes: Improving Clinical Skills in UME, Inpatient Clinical Teaching Methods, Advancements in Continuity Clinic, Handoffs/Transitions in Care, and Trainee Assessment.
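The counts reported in this paragraph can be checked against the totals given in the Abstract. The short script below is only a consistency check on the published numbers; the variable names are illustrative, and the reading that the nineteen studies in the Abstract correspond to all ratings other than "No" is an inference from the arithmetic.

```python
# Counts as reported in the Results.
journal_titles = 2720
pubmed_citations = 1461
selected_from_journals = 55
selected_from_pubmed = 10

ratings = {"Yes": 7, "High-Maybe": 4, "Maybe": 4, "Low-Maybe": 4, "No": 46}

total_citations = journal_titles + pubmed_citations
total_selected = selected_from_journals + selected_from_pubmed
not_rated_no = sum(n for label, n in ratings.items() if label != "No")

# Each total matches a figure stated in the Abstract.
assert total_citations == 4181
assert total_selected == 65
assert sum(ratings.values()) == total_selected
assert not_rated_no == 19  # inferred match to the "nineteen studies" in the Abstract

print(total_citations, total_selected, not_rated_no)
```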

Theme 1: Improving Clinical Skills in UME

Tolsgaard MG et al. Improving efficiency of clinical skills training: a randomized trial. J Gen Intern Med 2013;28(8):1072-7.

This study evaluated the effect of dyad versus solo training on patient encounter skills among pre-clerkship medical students.4 Forty-nine students participated in a 4-hour course on performing histories and physical examinations, and were then randomized to spend a 4-hour practice session with standardized patients (SP) in dyads or alone. Solo students had 25 minutes for the encounter and 25 minutes to develop a write-up for four cases. Dyad students were allowed to alternate between participating and observing, and could discuss the case with their partner.

Individual student performance on two new SP encounters was assessed 2 weeks later by trained raters using a scoring form based on the Reporter-Interpreter-Manager-Educator framework. Dyad students had higher mean scores than solo students (41 % vs. 37 %, p = 0.04). On a 9-point Likert scale (1 = very insecure, 9 = very confident), dyad students also had higher self-reported confidence in managing future clinical patient encounters than solo students (7.6 vs. 6.5, p < 0.01).

This was a small single-center study with a group of self-selected participants, limiting generalizability. Since testing was conducted soon after the practice session, the durability of learning is unknown. Also, overall performance scores were low in this clinically naïve group, implying that further clinical training is needed. Despite these limitations, this study suggests that clinical skills training in dyads is efficient and results in higher performance and confidence in SP encounters by preclinical students.

Myung SJ et al. Effect of enhanced analytic reasoning on diagnostic accuracy: a randomized controlled study. Med Teach 2013;35:248-50.

This study examined whether enhancement of analytic reasoning increases medical students’ diagnostic accuracy on an objective structured clinical examination (OSCE).5 A total of 145 fourth-year medical students completed a four-station OSCE and were randomized to either an analytic reasoning (AR, N = 65) or control group (N = 80). Students were given 10 minutes for the SP encounter and 5 minutes to complete an answer sheet. The AR group was required to complete a table with diagnoses, as well as signs and symptoms to support diagnoses, and to list the most probable diagnosis. Control group students were required to list the most probable diagnosis. Mean diagnostic accuracy score (perfect score = 4.0) was higher in the AR group than the control group (3.4 +/- 0.66 vs. 3.1 +/- 0.98, p = 0.02).

This was a small single-center study, and no validity evidence is available for the cases. However, efforts to facilitate analytic reasoning by reflecting on alternative diagnoses and supporting and refuting information may reduce diagnostic errors among medical students.

Theme 2: Inpatient Clinical Teaching Methods

Gonzalo JD, et al. The art of bedside rounds: A multi-center qualitative study of strategies used by experienced bedside teachers. J Gen Intern Med 2013;28(3):412-20.

These authors attempted to determine a core set of effective techniques used by faculty frequently performing bedside rounds.6 "Bedside rounds" was defined as a team activity including at least one resident and attending physician in which the patient’s history is presented at the bedside, the physical exam is reviewed, and medical issues and management are discussed in the patient’s presence.

The authors identified one person at each of ten academic institutions, who in turn identified at least three general internist faculty known to conduct bedside rounds at least three times per week while on-service for at least 2 weeks over a period of 2 years. Two investigators interviewed these faculty and analyzed the transcripts for themes.

Faculty prepared for bedside rounds by orienting trainees and seeking buy-in, defining roles and expectations, and establishing a patient-centered climate. Attending physicians prepared by reading in advance about diagnoses and specific patients to be presented, and planned focused teaching goals for rounds. Patients were selected for bedside rounding due to acuity or new arrival, important clinical decisions, or general educational value. Patients were deferred from rounds because of distance, communication difficulty, unwillingness to participate, or sensitive issues regarding the patient’s care. Roles at the bedside included: introduction, presentation, examination, patient comfort, discussion, and closure.

This study provides a rubric for institutions and individuals who wish to expand bedside rounds. A key limitation is the absence of a time estimate for faculty preparation. Also, the suggested exclusion of patients with sensitive issues may result in missed opportunities to teach important content.

Cohen ER, et al. Making July safer: Simulation based mastery learning during intern boot camp. Acad Med 2013;88:233-39.

Incoming interns have variable skills and are often entering an unfamiliar work environment; this may partially explain the increased rates of patient morbidity and mortality observed in the month of July. These authors studied the impact of a simulation-based boot camp for incoming IM interns using outcome scores on a clinical skills examination (CSE).7

The program included 47 interns in June 2011; their performance on a five-station CSE was compared to 109 interns in the period 2009–2010. Boot camp occurred over 3 days, with 8 hours of teaching per day. Teaching was provided in the five domains of cardiac auscultation, paracentesis, lumbar puncture (LP), intensive care unit (ICU) clinical skills, and code status discussions (CSD). Interns practiced and retested until they reached a preset minimum standard for each content area.

Performance on the CSE was significantly improved in each domain as compared to historical controls, with the greatest improvement in the paracentesis and LP domains. Forty-five percent of interns reported prior experience with paracentesis, 49 % with LP, 72 % with both ICU skills and CSD, and 100 % with cardiac auscultation. Additional training for mastery for up to 1 hour was required in 4 % (LP) to 38 % (CSD) of interns per domain. Interns rated the curriculum highly. The total estimated cost was $34,282.

This single-institution study suggests that simulation-based boot camp training is well received by incoming medicine interns and improves skills. However, this study did not demonstrate an impact on patient outcomes, provide data on learning retention, or demonstrate that the chosen domains were relevant to the July effect.

Theme 3: Advancements in Continuity Clinic

Peccoralo LA, et al. Resident Satisfaction with Continuity Clinic and Career Choice in General Internal Medicine. J Gen Intern Med 2013;28(8):1020–7.

This study assessed resident satisfaction with the continuity clinic experience and the relationship between resident clinic experience and attitudes toward careers in general internal medicine (GIM).8 All categorical and primary care IM residents at Mount Sinai, Temple, and Johns Hopkins Bayview were surveyed regarding their continuity clinic experience and likelihood of choosing GIM as a career.

Two hundred twenty-five residents completed the survey (response rate (RR) 90 %), with 38 % planning to pursue GIM after graduation (p < 0.01). The continuity clinic experience made no difference in reported career choice for 59 % of residents, increased the likelihood of entering GIM for 11 % of residents, and decreased the likelihood of entering GIM for 28 % of residents. Eighty-three percent of residents were somewhat or very satisfied with the continuity clinic experience. Residents were most likely to consider a career in GIM based on their continuity clinic if they were considering careers in GIM prior to residency (OR 29.0, 95 % CI 24.0–34.8) or were very satisfied with the continuity of relationships with patients (OR 4.1, 95 % CI 2.50–6.64).

This study suggests that in order to produce more primary care physicians, we may need to increase their interest in GIM during medical school. Notably, programs included in this study had significantly more residents planning careers in GIM than the national average. Additionally, “GIM” was not defined, and the relationship between continuity clinic and intent to pursue a hospitalist career was not explored.

Wieland ML, et al. An Evaluation of Internal Medicine Residency Continuity Clinic Redesign to a 50/50 Outpatient–Inpatient Model. J Gen Intern Med 2013;28(8):1014–9.

This study assessed the impact of changing a continuity clinic model on clinical and educational outcomes.9 In the 2010–2011 academic year, the program adopted an alternating outpatient–inpatient monthly rotation model, with twice-weekly continuity clinics during outpatient months and no clinic during inpatient months. Previously, residents had had a weekly half-day of clinic throughout the year. The authors compared data pre- and post-clinic redesign and surveyed residents, preceptors, and patients.

Residents’ panel size increased (120.0 vs. 137.6), the percentage of missed clinic appointments decreased (13 % vs. 11 %; p ≤ 0.01), perceptions of teamwork and safety increased (3.6 vs. 4.1 on a 5-point scale; p ≤ 0.01), but individual physician–patient continuity of care decreased (63 % vs. 48 % from physician perspective; 61 % vs. 51 % from patient perspective; p ≤ 0.001 for both), with no change in team continuity. The quality of diabetes, hypertension, and preventive care was unchanged. Performance in-clinic improved (3.6 vs. 3.9 on a 5-point scale; p ≤ 0.01), and residents attended more teaching conferences (57 % vs. 64 %; p ≤ 0.01), were more able to focus on the clinic (30 % vs. 85 %, very or somewhat satisfied; p < 0.01) and were more satisfied with inpatient/outpatient balance (27 % vs. 71 % very or somewhat satisfied; p < 0.01).

This study must be interpreted with caution. The intervention also included new preceptor scheduling, providing more preceptor–learner continuity, and an extra continuity clinic-focused rotation, possibly biasing results positively.

Theme 4: Handoffs/Transitions in Care

Pincavage AT, et al. Results of an Enhanced Clinic Handoff and Resident Education on Resident Patient Ownership and Patient Safety. Acad Med 2013;88(6):795-801.

At the end of training, post-graduate year (PGY)-3 IM residents must transition the care of their patients to another resident, conferring risk for missed test results and diagnoses, delayed care, and medical errors. The authors developed an enhanced clinic handoff protocol for high-risk patients and assessed changes in resident perceptions and patient outcomes.10 The protocol included a 1-hour didactic session on identifying high-risk patients, documentation, and use of a standardized handoff template. Rising PGY-2 residents received handoffs from one PGY-3 and were allotted 1 hour of protected time in the clinic for telephone visits with new high-risk patients. For patients, both oral and written notification of the transition was improved, and clinic staff implemented priority scheduling for high-risk patients.

On average, 12 patients (range: 3-28) per PGY-3 resident were identified as high-risk and handed off using the protocol. Seventy-six percent of PGY-2 residents used telephone visits, with a mean of 9.1 patients called; 44 % revealed missed tests. The intervention resulted in greater resident satisfaction with handoffs and ownership of new patients, but no reduction in stress. All patients were aware of the transition, more patients saw the correct provider (88 % vs. 44 %; p < 0.01), more tests were followed up appropriately (67 % vs. 46 %; p = 0.02), and there was a trend towards decreased acute care utilization (20 % vs. 26 %; p = 0.06).

The intervention was performed in one IM resident clinic at a single institution, limiting generalizability. The handoff recipient was a rising PGY-2, not an incoming PGY-1, allowing for transition prior to the departure of graduating PGY-3s. A 1:1 handoff was possible during the assessment year, whereas some PGY-2s had received handoffs from two PGY-3s the prior year, possibly biasing resident survey results favorably towards the intervention. Despite limitations, results suggest that a focus on end-of-training clinic transitions may improve quality and safety.

Starmer AJ, et al. Rates of Medical Errors and Preventable Adverse Events Among Hospitalized Children Following Implementation of a Resident Handoff Bundle. JAMA 2013;310(21):2262-70.

This study combined several reported handoff strategies into a “handoff bundle”, and assessed changes in medical error rates, miscommunication rates, and resident workflow.11 Components of the handoff bundle included a 2-hour communications training session, instructions for residents on the use of the “SIGNOUT?” mnemonic, and the requirement that handoffs be performed by teams of residents and interns in a quiet space. A standard written template was provided in one unit, and another unit used a computerized template.

The authors assessed medical errors and adverse events via chart review, daily error reports, daily survey of overnight residents, and formal incident reports 3 months before and 3 months after the intervention. They also evaluated written and verbal handoffs and observed workflow.

The number of medical errors was reduced by 15.5 per 100 admissions. Written handoffs were more complete post-intervention, especially in the unit using a computerized tool. There was no change in verbal handoff time, but there were fewer interruptions. House staff spent more time with patients and families.

A comprehensive handoff bundle, including resident education and a computerized handoff tool, may reduce medical errors. However, much of the error reduction attributed to the handoff bundle may be due to residents gaining experience throughout the year, as post-intervention data was gathered later in the same academic year.

Theme 5: Trainee Assessment

Hauer KE, et al. Developing Entrustable Professional Activities as the Basis for Assessment of Competence in an Internal Medicine Residency: A Feasibility Study. J Gen Intern Med. 2013;28(8):1110-14.

This study describes a pilot and feasibility evaluation of two entrustable professional activities (EPAs), a new construct in GME for operationalization of competencies and milestones in the context of actual clinical activities.12 For EPA development, IM residency leaders selected two EPAs: (1) Inpatient Discharge (aligned with key institutional stakeholders for patient safety and quality), and (2) Family Meeting (provided within existing curricula in palliative care). To assess feasibility of the EPAs, PGY-1s and attending physicians were surveyed.

For the Inpatient Discharge EPA, the PGY-1 RR was 65 %. Forty-three percent of PGY-1 respondents participated in this assessment, representing 28 % of eligible PGY-1s. The majority of respondents felt the assessment process improved skills (67 %) and facilitated useful feedback (83 %), and recommended continuation (83 %). Of those who did not participate, 56 % were unaware of the EPA, 31 % did not have time, and 6 % forgot; 94 % were interested in participating. For the Family Meeting EPA assessment, the PGY-1 RR was 62 %, with 43 % participation; 75 % reported improved attention to family meeting education, and 50 % recommended continuation. Of non-participants, 100 % were unaware of the EPA assessment; 50 % were interested in participating.
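The 28 % figure for the Inpatient Discharge EPA follows from multiplying the survey response rate by the participation rate among respondents. The sketch below reproduces that arithmetic. It assumes that only survey respondents are counted as participants, which is an interpretation rather than something the summary states; the function name is hypothetical.

```python
def participation_among_eligible(response_rate: float, participation_among_respondents: float) -> float:
    """Fraction of all eligible trainees known to have participated.

    Assumes participation is only known for survey respondents (an interpretation).
    """
    return response_rate * participation_among_respondents


# Inpatient Discharge EPA: 65 % response rate, 43 % of respondents participated.
inpatient_discharge = participation_among_eligible(0.65, 0.43)
print(f"{inpatient_discharge:.0%}")
```

Read this way, the figure is a lower bound on true participation, because non-respondents may also have taken part.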

Despite intensive efforts at this single institution, the participation rate amongst learners and evaluators was low, with many unaware of the program. These investigators acknowledge that these instruments alone would not establish entrustment, and would need to be part of a broader evaluation program. True assessment of competency in professional and clinical activities may be difficult to achieve.

Curtis JR, et al. Effect of Communication Skills Training for Residents and NPs on Quality of Communication with Patients with Serious Illness, A Randomized Trial. JAMA 2013;310(21):2271-81.

This randomized trial of a simulation-based communication skills-building workshop for IM residents, subspecialty fellows, and nurse practitioners assessed effects on patient-, family-, and clinician-reported outcomes.13 In a prior study, this workshop was associated with significant improvements in communication skills with regard to giving bad news and responding to emotion, assessed using SP encounters.

Of 1,068 eligible trainees from two academic medical centers, 44 % were randomized to the intervention workshop, consisting of eight 4-hour sessions, versus controls who were provided standard education. Sessions included didactics, role-play demonstration, skills practice using simulation, and reflective discussions. Outcomes were scores on (1) quality of communication (QOC) questionnaire (18 items for patients and clinicians; 19 for families), (2) quality of end-of-life care (QEOLC) questionnaire (26 items for patients and families; 10 for clinicians), (3) Patient Health Questionnaire (PHQ-8) depression assessment, and (4) functional status. Outcomes were assessed by means of 1,866 surveys submitted by patients who had interacted with trainees enrolled in the study. Patients were eligible if they had metastatic cancer, oxygen-dependent chronic obstructive pulmonary disease, stage 3–4 congestive heart failure, Child’s C liver disease, a palliative care consult, a do not resuscitate order, or an ICU or hospital stay >72 hours, or if they were over the age of 80.

Survey RR was 44 % of patients, 68 % of families, and 57 % of clinicians. There was no significant difference in QOC scores, QEOLC, depression, or functional status among groups, with the exception of increased depressive symptoms in the intervention group (10.0; 95 % CI, 9.1–10.8) compared with the control and pre-intervention groups (8.8; 95 % CI, 8.4–9.2). Clinicians rated PGY-1s lower for QOC and QEOLC. In post hoc analysis, patients rating their health as “poor” had improvements in scores with the intervention. Propensity modeling showed no evidence that non-response or exclusion of surveys from analysis produced bias in the primary study findings.

This study included a large pool of patients who might have benefited from educational intervention; it is possible that study subjects used skills selectively. Interventions showing improved outcomes using simulated communication may not necessarily translate to improvement in communication or end-of-life care. We need to better understand the true impact of simulation with additional studies assessing patient outcomes.

Discussion

Our review of the 2013 literature relevant to general internal medicine educators highlighted ten articles within five major themes. We identified studies of highest quality and greatest relevance to general internist medical educators in today’s teaching and practice environment.

Our collaboration represented diverse geographical regions, academic rank, and educational roles. However, perspectives from community-based programs, clinician-educators without leadership roles, and non-physician clinicians were not represented in our group. Article selection, therefore, may be biased towards academic medical centers.

Although reviewed journals included articles on a wide variety of topics, many studies were excluded due to difficulty translating to other institutions because of institution-specific opportunities or challenges. Most articles reviewed were single-institution studies with non-randomized designs, also impairing generalizability; more multi-institutional studies are needed in the medical education literature. Though we identified and discussed articles of emerging importance, such as medical student burnout, emotional intelligence, cost consciousness, and clinical reasoning, these were excluded due to low quality or limitations in the ability of general internist educators to use findings. Also, although there is current emphasis on milestones and EPAs across the continuum of medical education, the literature is still sparse in multi-institutional assessments and best practices in faculty development in these areas. Studies regarding high-value care initiatives and interprofessional education typically assess learner perceptions, but not the impact on actual practice or patient outcomes. The use of technology in medical education continues to grow, but few articles describe significant results or reasons for the expansion in technology. We discussed limitations regarding the interpretation and scope of results for each included study.

Conclusions

The reviewed articles may inform future research and practice as medical educators are pressed to innovate to meet demands in the rapidly changing medical education environment. More randomized and multi-institutional studies are needed to strengthen the current literature in medical education.

References

Holmboe ES, et al. Faculty development in assessment: the missing link in competency-based medical education. Acad Med. 2011;86(4):460–467.

Reed DA, et al. Association between funding and quality of published medical education research. JAMA. 2007;298(9):1002–1009.

Reed DA, Beckman TJ, Wright SM. An assessment of the methodologic quality of medical education research studies published in The American Journal of Surgery. Am J Surg. 2009;198(3):442–444.

Tolsgaard MG, et al. Improving efficiency of clinical skills training: a randomized trial. J Gen Intern Med. 2013;28(8):1072–1077.

Myung SJ, et al. Effect of enhanced analytic reasoning on diagnostic accuracy: a randomized controlled study. Med Teach. 2013;35(3):248–250.

Gonzalo JD, et al. The art of bedside rounds: a multi-center qualitative study of strategies used by experienced bedside teachers. J Gen Intern Med. 2013;28(3):412–420.

Cohen ER, et al. Making July safer: simulation-based mastery learning during intern boot camp. Acad Med. 2013;88(2):233–239.

Peccoralo LA, et al. Resident satisfaction with continuity clinic and career choice in general internal medicine. J Gen Intern Med. 2013;28(8):1020–1027.

Wieland ML, et al. An evaluation of internal medicine residency continuity clinic redesign to a 50/50 outpatient-inpatient model. J Gen Intern Med. 2013;28(8):1014–1019.

Pincavage AT, et al. Results of an enhanced clinic handoff and resident education on resident patient ownership and patient safety. Acad Med. 2013;88(6):795–801.

Starmer AJ, et al. Rates of medical errors and preventable adverse events among hospitalized children following implementation of a resident handoff bundle. JAMA. 2013;310(21):2262–2270.

Hauer KE, et al. Developing entrustable professional activities as the basis for assessment of competence in an internal medicine residency: a feasibility study. J Gen Intern Med. 2013;28(8):1110–1114.

Curtis JR, et al. Effect of communication skills training for residents and nurse practitioners on quality of communication with patients with serious illness: a randomized trial. JAMA. 2013;310(21):2271–2281.

Acknowledgments

All authors contributed to the selection of studies to include in the review and content of the manuscript.

Conflict of Interest

The authors have no conflict of interest to disclose.

Funding and Support

None

Prior Presentations

This work was previously presented as the "Update in Medical Education" during the Society of General Internal Medicine 37th Annual Meeting on April 24, 2014, in San Diego, CA.

Appendix

Cite this article

Roy, B., Willett, L.L., Bates, C. et al. For the General Internist: A Review of Relevant 2013 Innovations in Medical Education. J GEN INTERN MED 30, 496–502 (2015). https://doi.org/10.1007/s11606-015-3197-6

DOI: https://doi.org/10.1007/s11606-015-3197-6