ABSTRACT

BACKGROUND

Healthcare purchasers have created financial incentives for primary care practices to achieve medical home recognition. Little is known about how changes in practice structure vary across practices or relate to medical home recognition.

OBJECTIVE

We aimed to characterize patterns of structural change among primary care practices participating in a statewide medical home pilot.

DESIGN

We surveyed practices at baseline and year 3 of the pilot, measured associations between changes in structural capabilities and National Committee for Quality Assurance (NCQA) medical home recognition levels, and used latent class analysis to identify distinct classes of structural transformation.

PARTICIPANTS

Eighty-one practices that completed surveys at baseline and year 3 participated in the study.

MAIN MEASURES

Study measures included overall structural capability score (mean of 69 capabilities); eight structural subscale scores; and NCQA recognition levels.

RESULTS

Practices achieving higher year-3 NCQA recognition levels had higher overall structural capability scores at baseline (Level 1: 28.4 % of surveyed capabilities, Level 2: 40.9 %, Level 3: 48.7 %; p value = 0.001). We found no association between NCQA recognition level and change in structural capability scores (Level 1: 33.2 % increase, Level 2: 30.8 %, Level 3: 33.7 %; p value = 0.88). There were four classes of practice transformation: 27 % of practices underwent “minimal” transformation (changing little on any scale); 20 % underwent “provider-facing” transformation (adopting electronic health records, patient registries, and care reminders); 26 % underwent “patient-facing” transformation (adopting shared systems for communicating with patients, care managers, referral to community resources, and after-hours care); and 26 % underwent “broad” transformation (highest or second-highest levels of transformation on each subscale).

CONCLUSIONS AND RELEVANCE

In a large, state-based medical home pilot, multiple types of practice transformation could be distinguished, and higher levels of medical home recognition were associated with practices’ capabilities at baseline, rather than transformation over time. By identifying and explicitly incentivizing the most effective types of transformation, program designers may improve the effectiveness of medical home interventions.

INTRODUCTION

Policymakers, researchers, professional associations, and practitioners have voiced support for medical home (or patient-centered medical home) transformation as an important strategy for improving the quality and efficiency of healthcare.1 In concept, medical home transformation is characterized by primary care practices adopting certain structural capabilities, such as team-based care, quality measurement and improvement, enhanced access, and care coordination.2 In recent and ongoing pilots, private and public payers have offered participating practices case management support, coaching, and payment incentives, to motivate them to achieve recognition as medical homes from the National Committee for Quality Assurance (NCQA) and other organizations offering medical home recognition programs.3 , 4 For example, a practice can receive Level 1, 2, or 3 NCQA recognition based on possession of capabilities such as enhanced access, care management, and self-management support.5

However, primary care practices that have several structural capabilities at baseline may be able to achieve high levels of medical home recognition simply by applying for medical home recognition, generating rewards without transformation. In addition, among those that do make structural changes, different types of transformation could be present, even among practices that achieve the same level of medical home recognition. For example, some practices might adopt new health information technology without enhancing patients’ access to after-hours care; others may make different choices.

The taxonomy and prevalence of specific patterns of structural transformation in medical home pilots have not been previously described. To better understand primary care practice transformation, we measured and analyzed structural changes among primary care practices participating in the first 3 years of the Pennsylvania Chronic Care Initiative (PACCI), a statewide medical home pilot.

METHODS

Setting and Participants

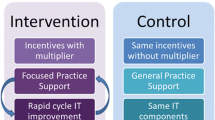

All 104 primary care practices that participated in the first 3 years from all four regions of the PACCI (i.e., southeast, south central, southwest, and northeast) were eligible for inclusion in our analyses. The PACCI, described in more detail elsewhere, encouraged participating practices to achieve recognition on the NCQA Physician Practice Connections-Patient Centered Medical Home (PPC-PCMH), and to participate in technical assistance activities.6 – 8 The southeast region was the first of the four regions to participate, beginning in June 2008, and the northeast region was the last, beginning in October 2009. Intervention components varied across the regions. For example, the payment intervention differed across regions: in the southeast, practices received per-member, per-month fees that were linked to early NCQA recognition; practices in the south central and southwest regions received per-member, per-month fees that were not linked to early NCQA recognition; and in the northeast, practices received shared savings incentives.

Practice Surveys

We created a written survey of practice capabilities based on a previous survey of the readiness of physician practices for the medical home.9 The original survey, which was designed in 2007 to assess potentially quality-enhancing structural capabilities of physician practices and practice readiness for medical home programs, was based on a review of existing physician practice surveys and associated published scientific literature.9 This original survey instrument underwent cognitive testing and validation with practice site visits, as described previously.9 , 10

For the current project, we modified the original survey by adding items in consultation with the conveners of the PACCI. These new items were intended to assess capabilities that were specifically encouraged by the PACCI intervention (e.g., systems to contact patients after hospitalizations). This new survey measured 69 structural capabilities commonly featured in medical home models, including presence of performance feedback, disease management, registries, reminder and outreach systems for patients with chronic disease, and electronic health record (EHR) capabilities. As described elsewhere, we mailed the survey to one leader from each of the 104 practices participating in the PACCI at two time points: first, to assess practice structural capabilities at baseline (before the pilot began in the corresponding region of the state), and second, to assess the same capabilities 3 years after the intervention began.8 Eighty-one practices responded to both the baseline and year-3 surveys (78 % response rate).

Measures

We categorized each of the 69 practice structural survey items into one of eight domains, based on discussion and consensus among the authors: shared systems for communicating with patients (13 survey items), care managers (7), referral to community services (2), use of EHRs (20), care reminders to clinicians (11), performance feedback to clinicians (10), patient registries (4), and after-hours care (2). The specific items in each scale are available in the Appendix.

Nearly all the survey items elicited binary (yes/no) responses about the presence or absence of a particular capability in the practice. Within each domain, we calculated scores for each practice at baseline and year 3 by calculating the proportion of structural capabilities in each domain that were reported as present (e.g., for the care manager domain, the numerator ranged 0 to 7, and the denominator was 7). The rate of item non-response was less than 3 % for all items.

For each practice, we calculated an overall structural capability score as the proportion of all 69 structural capabilities reported as present. Such an approach implicitly weights each of the eight domains based on the number of items within the domain, meaning that domains with more items would have greater influence on the overall score than domains with fewer items. In sensitivity analyses, we performed an alternate calculation of the overall structural capability score. To do this, we first calculated a separate score for each of the eight structural domains by averaging the item responses within the domain. We then computed the average score across each of the eight domains, weighting each domain score equally (regardless of the number of items comprising each domain).
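The two scoring approaches described above can be illustrated with a minimal Python sketch. The domain names and the example practice are hypothetical; only the item counts per domain come from the Measures section. The sketch assumes complete binary responses and does not show how item non-response was handled, which the text does not specify.

```python
from statistics import mean

# Item counts per domain as listed in the Measures section (they sum to 69).
# The dictionary keys are illustrative names, not the survey's own labels.
DOMAIN_SIZES = {
    "patient_communication": 13,
    "care_managers": 7,
    "community_referral": 2,
    "ehr": 20,
    "care_reminders": 11,
    "performance_feedback": 10,
    "registries": 4,
    "after_hours": 2,
}


def domain_scores(responses):
    """Map each domain to the proportion of its items reported as present."""
    return {d: sum(items) / len(items) for d, items in responses.items()}


def overall_item_weighted(responses):
    """Main analysis: proportion of all items present (larger domains weigh more)."""
    present = sum(sum(items) for items in responses.values())
    total = sum(len(items) for items in responses.values())
    return present / total


def overall_domain_weighted(responses):
    """Sensitivity analysis: unweighted mean of the eight domain scores."""
    return mean(domain_scores(responses).values())


# Hypothetical practice that reports every EHR capability and nothing else.
practice = {d: [1 if d == "ehr" else 0] * n for d, n in DOMAIN_SIZES.items()}
item_score = overall_item_weighted(practice)      # 20 of 69 items present
domain_score = overall_domain_weighted(practice)  # 1 of 8 domains at 100 %
```

For this hypothetical practice the item-weighted score (20/69) is more than twice the domain-weighted score (1/8), which shows why the 20-item EHR domain has more influence on the main score than a 2-item domain such as after-hours care.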

NCQA Recognition Levels and Claims Data

We obtained data on NCQA recognition levels received by the pilot practices at the end of year 3 in each region from the pilot conveners. Because the PACCI pilot start date varied by region, some practices received NCQA recognition under the 2008 recognition criteria, while others were recognized under the 2011 criteria.11 We also obtained claims data from seven health plans that participated in the four PACCI regions under analysis, attributed patients to all 104 practices using methods described elsewhere, and calculated the gender, age, and comorbidities of patients served by these practices.8

Analysis

We calculated differences in practice size, specialty, and patient populations among the 81 practices that responded to both rounds of the structural capability survey and the 23 practices that did not respond, using Fisher exact tests for categorical variables and t-tests or Wilcoxon rank-sum tests, as appropriate, for continuous variables. We calculated changes in the structural capability scores by subtracting, for each practice, the baseline score from the year-3 score. We used paired t-tests to assess the statistical significance of changes in practices’ structural scores. We used ANOVA to evaluate relationships between overall structural capability scores at baseline, changes in overall structural capability scores between baseline and year 3, and NCQA recognition levels in year 3.
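As an illustration of the change-score calculations described above, the following Python sketch computes per-practice change scores, a paired t statistic, and a one-way ANOVA F statistic using only the standard library. The study's analyses were run in Stata; the function names and the example scores here are hypothetical and are not study data. Obtaining p values would additionally require the t and F distributions, which this sketch omits.

```python
from math import sqrt
from statistics import mean, stdev


def change_scores(baseline, year3):
    """Year-3 score minus baseline score, practice by practice."""
    return [y - b for b, y in zip(baseline, year3)]


def paired_t(baseline, year3):
    """Paired t statistic and degrees of freedom for the mean change."""
    d = change_scores(baseline, year3)
    n = len(d)
    return mean(d) / (stdev(d) / sqrt(n)), n - 1


def one_way_anova_f(groups):
    """F statistic for a one-way ANOVA across lists of scores."""
    values = [x for g in groups for x in g]
    grand = mean(values)
    ss_between = sum(len(g) * (mean(g) - grand) ** 2 for g in groups)
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between = len(groups) - 1
    df_within = len(values) - len(groups)
    return (ss_between / df_between) / (ss_within / df_within)


# Made-up scores for four hypothetical practices (not study data).
baseline = [0.30, 0.45, 0.50, 0.20]
year3 = [0.60, 0.80, 0.75, 0.65]
t_stat, df = paired_t(baseline, year3)
```

In the study's setting, the groups passed to the ANOVA function would be the change scores (or baseline scores) of practices at each NCQA recognition level.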

To distinguish and characterize classes of structural transformation, we performed a latent class analysis of baseline-to-year-3 changes in the scores on each of the eight structural domains.12 Latent class analysis is a type of structural equation modeling that can be used to identify groups or “classes” of cases using multivariate data. We chose latent class analysis because this technique is well suited to identifying patterns in multivariate data in an exploratory fashion, without imposing pre-specified assumptions about how structural transformation might vary within a medical home pilot.

To execute the latent class analysis, we estimated a mixture model with continuous latent class indicators using automatic starting values with random starts. We assessed model performance by calculating “relative entropy,” which is a measure of classification uncertainty that takes values between 0 and 1, with 1 representing complete certainty that the model has classified the practices into groups correctly.13 We also assessed the parsimony of the model using a parametric bootstrapped likelihood ratio test, which calculated the degree to which a model of k classes fit the data better than a model with k-1 classes. If the p value for this test was less than 0.05, the k class model was considered to be a “better” fit than the k-1 class model. Across the classes of practice transformation that emerged from the latent class analysis, we compared changes in the overall structural capability score using one-way ANOVA, and year-3 NCQA levels using Pearson chi-squared tests.
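The text does not print the formula for relative entropy. One commonly used definition, assumed here, is 1 minus the summed entropy of each case's posterior class probabilities divided by n ln K, where n is the number of cases and K the number of classes. The Python sketch below implements that definition; the function name and the example posterior probabilities are hypothetical and are not output from the study's model.

```python
from math import log


def relative_entropy(posteriors):
    """Relative entropy of a classification.

    posteriors: one row per case, each row holding that case's posterior
    probabilities of membership in each of the K classes (rows sum to 1).
    Terms with p == 0 are skipped, following the convention 0 * ln(0) = 0.
    """
    n = len(posteriors)
    k = len(posteriors[0])
    total = -sum(p * log(p) for row in posteriors for p in row if p > 0)
    return 1 - total / (n * log(k))


# Hypothetical posteriors for three cases in a four-class model.
certain = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 0, 1]]
uniform = [[0.25, 0.25, 0.25, 0.25]] * 3
mostly_certain = [[0.94, 0.02, 0.02, 0.02]] * 3

e_certain = relative_entropy(certain)        # every case assigned with certainty
e_uniform = relative_entropy(uniform)        # no information about class membership
e_mostly = relative_entropy(mostly_certain)  # falls between the two extremes
```

Under this definition, values near 1 arise when nearly every case has one dominant posterior probability, and values near 0 arise when the posteriors are close to uniform.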

We considered two-tailed p values < 0.05 to be significant. We performed data management and analyses using Stata (version 13.0)14 and Mplus (version 6).15 This study was approved by the RAND Human Subjects Protection Committee.

RESULTS

Compared to the 23 practices that did not complete both rounds of surveys, the 81 responding practices were more likely to have family medicine or internal medicine specialties and had higher percentages of patients with asthma (Table 1). None of the practices received NCQA recognition before the pilot began. By year 3 of the pilot, all but one responding practice had achieved NCQA recognition: 13 (16.0 %) at Level 1; 13 (16.0 %) at Level 2; and 54 (66.7 %) at Level 3. Responding practices were more likely than non-responding practices to receive NCQA recognition by year 3 of the pilot.

Practices’ mean overall structural capability score was 44.5 % at baseline (range, 2.9 % to 95.7 %) and 77.1 % at year 3 (range, 30.4 % to 98.5 %; p < 0.001 for difference between baseline and year 3) (Table 2). On average, the practices improved significantly on each of the eight structural domains, with magnitudes of score improvement ranging from 14.8 percentage points (after-hours care) to 48.1 percentage points (patient registries).

Compared to practices that received level 1 NCQA recognition by the end of pilot year 3, practices that received level 2 and level 3 recognition had higher overall structural capability scores at baseline (mean baseline score was 28.4 % for level 1 practices, 40.9 % for level 2, and 48.7 % for level 3; p = 0.001 for difference) (Fig. 1). However, baseline-to-year-3 changes in overall structural capability scores did not differ significantly across levels of year-3 NCQA recognition (score increases were 33.2 % for level 1, 30.8 % for level 2, and 33.7 % for level 3; p = 0.88).

Figure 1. Relationship between baseline-to-year-3 changes in overall structural capability scores and year-3 NCQA recognition levels. Note: each colored line represents baseline and year-3 scores for one practice. The black lines represent mean baseline and year-3 scores for practices with the indicated NCQA recognition level. One practice that did not receive NCQA recognition is excluded from the figure. *Mean baseline overall structural capability scores (28.4 % for NCQA level 1, 40.9 % for NCQA level 2, 48.7 % for NCQA level 3) differed significantly across NCQA recognition levels (p value = 0.001). **Mean baseline-to-year-3 change in overall structural capability scores (33.2 % for NCQA level 1, 30.8 % for NCQA level 2, 33.7 % for NCQA level 3) did not significantly differ across NCQA recognition levels (p value = 0.88).

The latent class analysis model distinguished four classes of practice transformation. In the first class of transformation (“minimal transformation”; n = 22 practices), practices exhibited relatively little transformation on any structural domain (Table 3). In the second class of transformation (“provider-facing transformation”; n = 17), practices exhibited relatively greater adoption of care reminders to clinicians, EHRs, and patient registries, but relatively little adoption of shared systems for communicating with patients and referral to community services. In the third class of transformation (“patient-facing transformation”; n = 21), practices exhibited relatively greater adoption of shared systems for communicating with patients, care managers, referral to community resources, and after-hours care, but relatively little adoption of care reminders to clinicians. Finally, practices in the fourth class of transformation (“broad transformation”; n = 21) had the highest or second-highest levels of transformation on each domain. The latent class analysis model had an entropy value of 0.88, indicating a high degree of classification certainty, and a parametric bootstrapped likelihood ratio test showing that the four-class model was parsimonious, fitting significantly better than a three-class model (p < 0.001) and no worse than a five-class model (p = 0.99).

Mean changes in overall structural capability scores varied across the four classes (p < 0.01), with “broad transformation” having the greatest mean increase (52.4 %) and “minimal transformation” having the least (9.5 %). There were no statistically significant differences in level of NCQA recognition across the classes of transformation. The results of sensitivity analyses using the alternative formulation of the overall structural capability score were similar to the main results.

DISCUSSION

Within a statewide medical home pilot, we found no association between the magnitude of practice structural transformation and the level of medical home recognition achieved by participating practices. Instead, recognition levels were associated significantly with practices’ structural capabilities at baseline (i.e., their “starting positions” before the pilot began). In addition, we found four distinct patterns of structural transformation among the pilot participants: minimal, provider-facing, patient-facing, and broad transformation patterns.

Our study adds to the literature on medical home interventions in at least two ways. First, like previous studies, we found that primary care practices participating in medical home pilots can achieve significant structural transformation and receive recognition as medical homes.16 – 20 However, to our knowledge no prior study has investigated whether the level of medical home recognition is a good proxy for the magnitude of practice transformation. Second, previous studies have shown that the extent of practice transformation in a medical home pilot can vary across structural domains (e.g., with greater adoption of EHR capabilities and less adoption of enhanced access).16 – 18 , 21 , 22 However, ours is the first to identify multiple, co-occurring subtypes of practice transformation that cannot be distinguished based on medical home recognition levels alone.

Many medical home pilots are predicated on the notion that financial incentives, technical assistance, and other new resources should motivate and enable practices to adopt new structural capabilities. The incentives and resources that constituted the PACCI intervention may have motivated such changes, since on average, participating practices did transform. However, our findings suggest that if financial incentives are based predominantly on medical home recognition levels, the allocation of the largest bonuses may be determined by practices’ preexisting structural capabilities rather than transformation. If substantial shares of participating practices receive incentives without undergoing structural change (i.e., receive incentives primarily for completing successful recognition applications rather than truly transforming), pilots may have limited effects on patient care.

The problem of rewarding achievement is not unique to medical home pilots or to NCQA recognition. Early pay-for-performance programs encountered similar challenges.23 Dynamic measures of medical home transformation may offer a useful adjunct to measuring the achievement of specific levels of medical home recognition. Explicitly measuring transformation (i.e., change over time) might enable policymakers to link incentives to such transformation, which could be especially beneficial to practices that start at relatively low levels of medical home capabilities and may be relatively unlikely to reach the highest recognition levels. Such an approach would be similar to the Centers for Medicare and Medicaid Services (CMS) Hospital Value-based Purchasing design,24 which rewards both improvement and attainment.

Our results demonstrating heterogeneity in the types of structural transformation among pilot practices may help explain the mixed results of medical home pilot evaluations. Different classes of transformation—and differences in the relative prevalence of these classes across medical home pilots—may produce different effects on patient care. Research that investigates relationships between types of practice transformation and changes in patient care might enable policymakers to identify and explicitly encourage, in future medical home interventions, the most effective types of transformation.

Our study has limitations. First, our sample includes practices from Pennsylvania only, and different findings in other states and medical home pilots are possible. Second, our survey of practice structural capabilities could not measure every aspect of structural transformation that might be important to improving patient care. Third, for comparisons across NCQA recognition levels, we lacked statistical power to detect differences smaller than ten percentage points in baseline-to-year-3 structural capability change scores. However, the observed differences in structural change scores across these recognition levels were small and inconsistent in direction. Fourth, our analysis was not designed to detect associations between structural transformation subtypes and changes in patient care. Such an analysis could be a fruitful area for future inquiry, once evaluations of PACCI regions beyond the southeast region are complete.

Transforming primary care practices into medical homes is a key feature of numerous ongoing efforts to improve the quality and contain the costs of healthcare in the United States. Our study demonstrates that while practices in medical home pilots can experience significant transformation, the magnitude of this transformation is not necessarily associated with scores on point-in-time medical home recognition criteria. Furthermore, practices can experience multiple types of transformation, even when they receive the same levels of recognition as medical homes. Using more dynamic and detailed measures of practice transformation may help payers, policymakers, and other stakeholders tailor their medical home incentives for greater precision and effectiveness.

REFERENCES

American Academy of Family Physicians, American Academy of Pediatrics, American College of Physicians, American Osteopathic Association. Joint principles of the patient-centered medical home. 2007; http://www.acponline.org/running_practice/delivery_and_payment_models/pcmh/demonstrations/jointprinc_05_17.pdf. Accessed June 12, 2013.

Friedberg MW, Lai DJ, Hussey PS, Schneider EC. A guide to the medical home as a practice-level intervention. Am J Manage Care. 2009;15(10 Suppl):S291–S299.

Agency for Healthcare Research and Quality. Patient centered medical home resource center: catalogue of federal PCMH activities. http://pcmh.ahrq.gov/page/federal-pcmh-activities. Accessed May 16, 2014.

Bitton A, Martin C, Landon BE. A nationwide survey of patient-centered medical home demonstration projects. J Gen Intern Med. 2010;25(6):584–592.

National Committee for Quality Assurance. Patient-Centered Medical Home (PCMH) 2011: Frequently Asked Questions (FAQs). 2011; http://www.ncqa.org/Portals/0/Programs/Recognition/PCMH/PCMH_2011_FAQs_9_24_13.pdf. Accessed November 4, 2014.

Gabbay RA, Bailit MH, Mauger DT, Wagner EH, Siminerio L. Multipayer patient-centered medical home implementation guided by the chronic care model. Joint Comm J Qual Patient Saf / Joint Comm Resour. 2011;37(6):265–273.

Gabbay RA, Friedberg MW, Miller-Day M, Cronholm PF, Adelman A, Schneider EC. A positive deviance approach to understanding key features to improving diabetes care in the medical home. Ann Fam Med. 2013;11(Suppl 1):S99–S107.

Friedberg MW, Schneider EC, Rosenthal MB, Volpp KG, Werner RM. Association between participation in a multipayer medical home intervention and changes in quality, utilization, and costs of care. JAMA: J Am Med Assoc. 2014;311(8):815–825.

Friedberg MW, Safran DG, Coltin KL, Dresser M, Schneider EC. Readiness for the patient-centered medical home: structural capabilities of Massachusetts primary care practices. J Gen Intern Med. 2009;24(2):162–169.

Friedberg MW, Coltin KL, Safran DG, Dresser M, Zaslavsky AM, Schneider EC. Associations between structural capabilities of primary care practices and performance on selected quality measures. Ann Intern Med. 2009;151(7):456–463.

National Committee for Quality Assurance. Comparison: PPC-PCMH 2008 With PCMH 2011. 2011; https://www.ncqa.org/Portals/0/Programs/Recognition/PPC-PCMH%202008%20vs%20PCMH%202011Crosswalk%20FINAL.pdf. Accessed April 1, 2014.

McCutcheon AL. Latent class analysis. Sage; 1987.

Templin J. Latent Class Analysis: Evaluating Model Fit. 2006; http://jonathantemplin.com/files/clustering/psyc993_14.pdf. Accessed February 3, 2014.

StataCorp. Stata Statistical Software: Release 13. College Station, TX: StataCorp LP; 2013.

Muthen LK, Muthen BO. Mplus user’s guide, 6th edition. Los Angeles: Muthen & Muthen; 2011.

Alidina S, Schneider EC, Singer SJ, Rosenthal MB. Structural capabilities in small and medium-sized patient-centered medical homes. Am J Manage Care. 2014;20(7):e265–e277.

Fifield J, Forrest DD, Martin-Peele M, et al. A randomized, controlled trial of implementing the patient-centered medical home model in solo and small practices. J Gen Intern Med. 2013;28(6):770–777.

Rosenthal MB, Friedberg MW, Singer SJ, Eastman D, Li Z, Schneider EC. Effect of a multipayer patient-centered medical home on health care utilization and quality: the Rhode Island Chronic Care Sustainability Initiative pilot program. JAMA Intern Med. 2013;173(20):1907–1913.

Jaen CR, Crabtree BF, Palmer RF, et al. Methods for evaluating practice change toward a patient-centered medical home. Ann Fam Med. 2010;8(Suppl_1):S9–S20.

Paustian ML, Alexander JA, El Reda DK, Wise CG, Green LA, Fetters MD. Partial and incremental PCMH practice transformation: implications for quality and costs. Health Serv Res. 2014;49(1):52–74.

Kern LM, Edwards A, Kaushal R. The patient-centered medical home, electronic health records, and quality of care. Ann Intern Med. 2014;160(11):741–749.

Rittenhouse DR, Schmidt L, Wu K, Wiley J. Contrasting trajectories of change in primary care clinics: lessons from New Orleans safety net. Ann Fam Med. 2013;11(Suppl 1):S60–S67.

Rosenthal MB, Frank RG, Li Z, Epstein AM. Early experience with pay-for-performance: from concept to practice. JAMA: J Am Med Assoc. 2005;294(14):1788–1792.

Centers for Medicare and Medicaid Services. Hospital Value-Based Purchasing. 2014; http://www.cms.gov/Medicare/Quality-Initiatives-Patient-Assessment-Instruments/hospital-value-based-purchasing/index.html?redirect=/hospital-value-based-purchasing/. Accessed May 23, 2014.

Acknowledgements

The study was sponsored by the Agency for Healthcare Research and Quality (1R03HS002616-01). The data collection was sponsored by a previous grant from the Commonwealth Fund. No sponsor had a role in the design and conduct of the study; collection, management, analysis, and interpretation of the data; and preparation, review, or approval of the manuscript. The authors would like to gratefully acknowledge Samuel Hirshman, BA (RAND Corporation) for assistance in programming and data management. Mr. Hirshman received compensation for his role on the project. This paper was presented at the AcademyHealth Annual Research Meeting in 2014.

Dr. Friedberg has received compensation from the US Department of Veterans Affairs for consultation related to medical home implementation and research support from the Patient-Centered Outcomes Research Institute via subcontract to the National Committee for Quality Assurance. No other author has a potential conflict of interest to disclose.

Cite this article

Martsolf, G.R., Kandrack, R., Schneider, E.C. et al. Categories of Practice Transformation in a Statewide Medical Home Pilot and their Association with Medical Home Recognition. J GEN INTERN MED 30, 817–823 (2015). https://doi.org/10.1007/s11606-014-3176-3

DOI: https://doi.org/10.1007/s11606-014-3176-3