Abstract

We are concerned with computing bid prices in network revenue management using approximate linear programming. It is well known that affine value function approximations yield bid prices which are not sensitive to remaining capacity. The analytic reduction to compact linear programs allows the efficient computation of such bid prices. On the other hand, capacity-dependent bid prices can be obtained using separable piecewise linear value function approximations. Even though compact linear programs have been derived for this case as well, they are still computationally much more expensive than those for affine functions. We propose compact linear programs requiring substantially smaller computing times while, simultaneously, significantly improving the performance of capacity-independent bid prices. This simplification is achieved by taking into account remaining capacity only if it becomes scarce. Although our proposed linear programs are relaxations of the unreduced approximate linear programs, we conjecture equivalence and provide corresponding numerical support. We measure the quality of an approximation by the difference between the expected performance of an induced policy and the corresponding theoretical upper bound. Using this paradigm in numerical experiments, we demonstrate the competitiveness of our proposed linear programs.


1 Introduction and literature review

Network revenue management is concerned with the sale of multiple products using multiple perishable resources of finite capacity over a discrete time horizon. The standard reference for network revenue management is Talluri and van Ryzin [11]. Traditional models assume that for each product, demand does not depend on the availability of other products [1]. This restrictive assumption has been relaxed by introducing customer choice models [10]. One special case of a customer choice model is discrete pricing [3, 4]. Since discrete pricing problems can be reformulated as independent demand problems [16], assuming independent demand is not as restrictive as previously thought.

Determining an optimal control policy in network revenue management requires computing the value function using dynamic programming. Since capacity control involves multiple resources, the curse of dimensionality prohibits the exact computation of the value function. One stream of literature utilizes approximate linear programming to find approximate solutions. The dynamic programming recursion is reformulated as an exponentially large linear program. Then, a value function approximation based on a small number of basis functions is inserted into the linear program [9]. This way, the number of variables is reduced. However, this procedure does not decrease the number of constraints. To overcome this problem, algorithmic techniques such as column generation, constraint sampling, and constraint-violation learning are typically applied [1, 2, 7]. These approaches do not solve the linear program exactly but provide an approximation. It is thus preferable to find reformulations that can be solved directly using a commercial solver. This motivates the derivation of compact linear programs [12, 14]. In this paper, we call a linear program compact if the number of variables and constraints is polynomial in the number of resources, products, time steps and units of initial capacity, which means it is computationally tractable.

An important aspect of approximate linear programming is the choice of basis functions. Choosing basis functions which are separable across resources is appealing, and thus affine and separable piecewise linear functional approximations have received much attention in the literature [1, 4–6]. We refer to these two approximation types as AF and SPL, respectively. Compact reformulations have been derived for both types [12, 14]. While SPL bid prices depend on remaining capacity, the opposite is true for AF bid prices. The components of the SPL approximation are piecewise linear on equidistant grids where the distance between nodes is exactly 1. Consequently, the number of nodes equals the initial capacity size. In contrast, Meissner and Strauss [8] use piecewise linear value function approximations where the number and position of nodes may be chosen arbitrarily. We call this approximation type separable “genuinely” piecewise linear (SGPL) in order to distinguish it from SPL. To the best of our knowledge, there is no study comparing the computational efficiency of AF and SPL with SGPL using compact linear programs. In particular, Meissner and Strauss [8] apply column generation and do not provide compact linear programs.

We make the following contributions:

1. For the independent demand model for network revenue management, we heuristically propose novel compact linear programs which are smaller than the SPL reduction yet improve the quality of AF bid prices by taking into account remaining capacity whenever it becomes scarce.

2. We benchmark our proposed compact linear programs against the reductions for AF and SPL using network instances from the literature [13, 14]. We find that for many instances, AF’s optimality gap can be divided in half using significantly less than half of SPL’s computing time.

Outline In Sect. 2, we describe the underlying network revenue management model and recapitulate the compact linear programs for AF and SPL. We then propose compact linear programs associated with SGPL basis functions in Sect. 3. Although we do not prove equivalence of our proposed linear programs with their unreduced counterparts, we provide numerical support for a corresponding conjecture in Appendix A. In Sect. 4, we investigate the computational efficiency of SGPL by benchmarking it against AF and SPL.

2 Approximate linear programming in network revenue management

Model description Our model follows Adelman [1]. During a selling horizon of finitely many time steps \(t \in \{1,\dots , T\}\), a company sells multiple products \(j \in \{1,\dots , J\}\) with fares \(f_j\). We assume that at most one customer arrives per time step t. The probability that at time t product j is requested is denoted \(p_{t,j}\). We assume product \(j=1\) to be a dummy product representing the event that no customer arrives, which implies \(\sum _j p_{t,j} = 1, \forall t\). At the beginning of each time step t, the company must decide which requests will be accepted and which will be rejected. This decision is represented by the decision vector \((u_j) = \mathbf {u} \in \{0,1\}^J\).

There are multiple resources \(i \in \{1,\dots , I\}\), each of which may be used by several products. The consumption matrix \(\mathbf{A}= (a_{ij}) \in \{0,1\}^{I\times J}\) has entries \(a_{ij} = 1\) if product j uses one unit of resource i, and \(a_{ij} = 0\) otherwise. A column \(\mathbf{A}^j\) thus corresponds to the set of resources used by product j. The vector \(\mathbf {c} = (c_1,\dots ,c_I)^T \in \mathbb N^I\) denotes the initial capacity at the beginning of the selling horizon. During the selling process, the remaining capacity is denoted by \(\mathbf {r} = (r_1,\dots ,r_I)^T\). At time \(T+1\), all of the remaining capacity becomes worthless.

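Let \(\mathcal U_{\mathbf {r}} = \{\mathbf {u} \in \{0,1\}^J \mid \forall i,j: u_j a_{ij} \le r_i\}\) be the set of feasible decision vectors given the remaining capacity \(\mathbf {r}\), and let \(\mathcal R_t\) denote the state space at time t. The expected future revenue from time t on, given remaining capacity \(\mathbf{r}\) and using an optimal policy, is denoted by the (so-called) value function \(v_t(\mathbf {r})\). This function is recursively defined by the Bellman equation

\[ v_t(\mathbf {r}) = \max _{\mathbf {u} \in \mathcal U_{\mathbf {r}}} \Big \{ \sum _{j} p_{t,j} u_j \big ( f_j - [v_{t+1}(\mathbf {r}) - v_{t+1}(\mathbf {r}-\mathbf{A}^j)] \big ) \Big \} + v_{t+1}(\mathbf {r}), \quad \forall t, \; \mathbf {r} \in \mathcal R_t, \qquad (1) \]

with the boundary condition \(v_{T+1}(\cdot ) \equiv 0\).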

The key element in this dynamic program is the term \(f_{j} - [v_{t+1}(\mathbf {r}) - v_{t+1}(\mathbf {r}-\mathbf{A}^j)]\), i.e., the difference between the revenue \(f_j\) resulting from the potential sale of product j at time t, and the marginal value \(v_{t+1}(\mathbf {r}) - v_{t+1}(\mathbf {r}-\mathbf{A}^j)\) of product j at time \(t+1\). An optimal policy accepts a request for product j if and only if its marginal value does not exceed the fare \(f_j\).
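To make the recursion (1) and the acceptance rule concrete, here is a minimal Python sketch of exact backward induction for a tiny network. It assumes that the state space at every time step is the full box \(\{0,\dots ,c_1\}\times \cdots \times \{0,\dots ,c_I\}\) and that time steps are indexed from 0 in the code. Because \(\mathcal U_{\mathbf {r}}\) constrains each \(u_j\) separately, the maximization in (1) splits product by product: product j is accepted exactly when it is feasible and its fare is at least its marginal value. The function name `exact_value_function` is our own; this is an illustration, not the author's implementation.

```python
from itertools import product


def exact_value_function(c, T, fares, probs, A):
    """Exact backward induction for the Bellman equation (1) on a tiny network.

    c     -- initial capacities (c_1, ..., c_I)
    T     -- number of time steps; code index t = 0..T-1 stands for time t + 1
    fares -- fares f_j (product 0 may be the dummy product with fare 0)
    probs -- probs[t][j]: probability that product j is requested at code index t
    A     -- consumption matrix, A[i][j] in {0, 1}
    Returns v, where v[t][r] is the value at code index t and v[T] is the terminal value 0.
    """
    I, J = len(c), len(fares)
    # ASSUMPTION: every capacity vector 0 <= r <= c is a state at every time step
    states = list(product(*(range(ci + 1) for ci in c)))
    v = [None] * (T + 1)
    v[T] = {r: 0.0 for r in states}  # capacity left after the horizon is worthless
    for t in range(T - 1, -1, -1):
        v[t] = {}
        for r in states:
            value = v[t + 1][r]
            for j in range(J):
                if all(A[i][j] <= r[i] for i in range(I)):  # u_j = 1 is feasible
                    r_next = tuple(r[i] - A[i][j] for i in range(I))
                    marginal = v[t + 1][r] - v[t + 1][r_next]
                    # accept iff the fare covers the marginal value of the consumed capacity
                    value += probs[t][j] * max(0.0, fares[j] - marginal)
            v[t][r] = value
    return v
```

For one resource of capacity 1, two periods, a dummy product and one product with fare 10 requested with probability 0.5 per period, the sketch gives \(v_1(1) = 7.5\). The dictionaries hold \(\prod _i (c_i+1)\) states per period, which is exactly what becomes intractable for realistic networks.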

The recursion (1) suffers from the curse of dimensionality. In particular, there are exponentially many values \(v_t(\mathbf{r})\) that have to be computed. We therefore turn to the construction of approximate solutions.

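Approximate linear programming It is well known that \(v_1(\mathbf {c})\) defined by the Bellman equation (1) is the optimal value of the following linear program:

\[ (D)\qquad \min _{v} \; v_1(\mathbf {c}) \quad \text {s.t.} \quad v_t(\mathbf {r}) \ge \sum _{j} p_{t,j} u_j \big ( f_j - [v_{t+1}(\mathbf {r}) - v_{t+1}(\mathbf {r}-\mathbf{A}^j)] \big ) + v_{t+1}(\mathbf {r}), \quad \forall t, \; \mathbf {r} \in \mathcal R_t, \; \mathbf {u} \in \mathcal U_{\mathbf {r}}, \]

with \(v_{T+1}(\cdot ) \equiv 0\).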

The size of (D) grows exponentially in the number of both resources and products. We choose a small number of basis functions \(\phi _b(\mathbf {r}), b \in \mathcal B\), and insert the value function approximation \(v_t(\mathbf {r}) \approx \sum _{b \in \mathcal B} V_{t,b} \phi _{b}(\mathbf {r})\) into (D) to obtain the approximate linear program \((D_\phi )\). This reduces the number of variables to \((T+1)|\mathcal B|\). The corresponding dual is given by:

The optimal value of \((P_{\phi })\) is an upper bound on \(v_1(\mathbf{c})\). If the set of basis functions includes a constant function \(\phi _b(\cdot ) \equiv 1\), we can show by induction that the property \(\sum _{\mathbf {r},\mathbf {u}} X_{t,\mathbf {r},\mathbf {u}} = 1, \forall t\), holds for any feasible solution to \((P_{\phi })\). This observation allows us to interpret each value \(X_{t,\mathbf {r},\mathbf {u}}\) as the probability that at time t, the remaining capacity is \(\mathbf {r}\) and the decision is \(\mathbf {u}\).
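The effect of inserting basis functions can be tallied with simple arithmetic. The sketch below uses hypothetical helper names and assumes that \(v_t(\mathbf {r})\) is a variable of (D) for every \(t = 1,\dots ,T+1\) and every \(\mathbf {0} \le \mathbf {r} \le \mathbf {c}\). It counts the variables of (D) and \((D_\phi )\), and the constraints, which are indexed by \((t,\mathbf {r},\mathbf {u})\) in both programs. Since \(u_j\) may equal 1 only if product j fits into \(\mathbf {r}\), we have \(|\mathcal U_{\mathbf {r}}| = 2^{\#\{j \text{ fitting into } \mathbf {r}\}}\).

```python
from itertools import product
from math import prod


def alp_variable_counts(c, T, n_basis):
    """Return (#variables of (D), #variables of (D_phi)).

    ASSUMPTION: v_t(r) is a variable for every t = 1..T+1 and every 0 <= r <= c.
    n_basis -- |B|, e.g. 1 + I for AF or 1 + sum(c) for SPL
    """
    n_states = prod(ci + 1 for ci in c)
    return (T + 1) * n_states, (T + 1) * n_basis


def alp_constraint_count(c, T, A):
    """#constraints of (D), which (D_phi) inherits: one per (t, r, u) with u in U_r."""
    I, J = len(A), len(A[0])
    total = 0
    for r in product(*(range(ci + 1) for ci in c)):
        # u_j is free in {0, 1} if product j fits into r, otherwise u_j = 0
        fits = sum(all(A[i][j] <= r[i] for i in range(I)) for j in range(J))
        total += 2 ** fits
    return T * total
```

With capacities (3, 2) and T = 10, (D) has 132 variables, while AF (\(|\mathcal B| = 3\)) and SPL (\(|\mathcal B| = 6\)) need only 33 and 66; the constraint count is unaffected by the choice of basis functions, which is the problem that compact reformulations address.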

Compact linear programs from the literature For the AF approximation \(v_t(\mathbf {r}) \approx \theta _t + \sum _i V_{t,i} r_i\), Tong and Topaloglu [12] as well as Vossen and Zhang [14] show equivalence between \((P_{\phi })\) and the compact linear program

Similar to the interpretation of \(X_{t,\mathbf {r},\mathbf {u}}\) as state-action probabilities, \(\mu _{t,j}\) represents the probability of decision \(u_j = 1\) in time step t. The variable \(\rho _{t,i}\) is an approximation of the expected value of \(r_i\) at time t.
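The probabilistic reading of \(X_{t,\mathbf {r},\mathbf {u}}\), \(\mu _{t,j}\) and \(\rho _{t,i}\) can be illustrated by propagating the exact state distribution of a fixed policy forward in time. The sketch assumes a static bid-price policy with hypothetical bid prices `bid_prices`; for such a policy, all probability of a state \(\mathbf {r}\) sits on a single decision \(\mathbf {u}\), and the computed quantities are exact expectations rather than the LP approximations described above. The state probabilities sum to 1 in every period, mirroring the property of feasible solutions to \((P_\phi )\).

```python
from collections import defaultdict


def occupancy_measure(c, T, fares, probs, A, bid_prices):
    """State-action probabilities under a static bid-price policy.

    Returns (mu, rho, final_dist): mu[t][j] = P(u_j = 1 at code index t),
    rho[t][i] = E[r_i at code index t], final_dist = distribution of r after the horizon.
    """
    I, J = len(c), len(fares)
    dist = {tuple(c): 1.0}  # the horizon starts with full capacity
    mu, rho = [], []
    for t in range(T):
        mu_t, rho_t = [0.0] * J, [0.0] * I
        nxt = defaultdict(float)
        for r, prob in dist.items():
            # capacity-independent rule: offer j iff it fits and f_j >= sum_i a_ij * pi_i
            u = [int(all(A[i][j] <= r[i] for i in range(I))
                     and fares[j] >= sum(A[i][j] * bid_prices[i] for i in range(I)))
                 for j in range(J)]
            for i in range(I):
                rho_t[i] += prob * r[i]
            for j in range(J):
                mu_t[j] += prob * u[j]
                # a request for j consumes capacity only if it is accepted (u_j = 1)
                r_next = tuple(r[i] - A[i][j] * u[j] for i in range(I))
                nxt[r_next] += prob * probs[t][j]
        mu.append(mu_t)
        rho.append(rho_t)
        dist = dict(nxt)
    return mu, rho, dist
```

In the compact linear programs, \(\mu\) and \(\rho\) are decision variables and need not correspond to any such policy; the sketch only shows what they are meant to approximate.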

For the SPL approximation \(v_t(\mathbf {r}) \approx \theta _t + \sum _i \sum _{k=1}^{c_i} V_{t,i,k} 1_{\{r_i \ge k\}}\), where \(1_{\{r_i \ge k\}}\) denotes the indicator function, Vossen and Zhang [14] show weak equivalence between \((P_{\phi })\) and the compact linear program

The interpretation of \(\mu _{t,j}\) is the same as above. The variable \(\sigma _{t,i,k}\) represents the probability that at time t, resource i has at least k units left. \(\zeta _{t,i,j,k}\) represents the joint probability that at time t, resource i has at least k units left and the decision \(u_j = 1\) is made. To enforce the probabilistic interpretation of \(\sigma\), one would expect \((\widehat{P}_{SPL})\) to include the constraints \(\sigma _{t,i,1} \le 1, \forall t,i\) and \(\sigma _{t,i,k+1} \le \sigma _{t,i,k}, \forall t,i,k\). However, these constraints are redundant [14].

3 Genuinely piecewise linear approximation

We intend to decrease the size of \((\widehat{P}_{SPL})\) by considering genuinely piecewise linear functions where the number of nodes can be chosen arbitrarily. Concerning the position of the nodes, we recall that revenue management is most crucial whenever remaining capacity becomes scarce. Separately for each resource, our proposed value function approximation is thus piecewise linear with nodes \(0,1,2,\dots ,L_i-1,c_i\), where \(L_i \in \mathbb N\) satisfies \(1 \le L_i \le c_i\). Piecewise linear functions on this grid are spanned by the basis functions \(1_{\{r_i \ge k\}}, k = 1,\dots ,L_i-1\), together with the additional basis function \(\max \{0,r_i-L_i+1\}\). Therefore, our proposed value function approximation has the following form:

\[ v_t(\mathbf {r}) \approx \theta _t + \sum _{i} \Big ( \sum _{k=1}^{L_i-1} V_{t,i,k} 1_{\{r_i \ge k\}} + V_{t,i,L_i} \max \{0,r_i-L_i+1\} \Big ). \qquad (7) \]

We now develop a compact linear program denoted \((\overline{P}_{G})\) associated with the value function approximation (7). This is done heuristically by modifying \((\widehat{P}_{SPL})\).
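A single-resource component of the approximation (7) can be evaluated as in the following minimal sketch. It assumes that the coefficients for fixed t and i are stored in a list `V` of length \(L_i\), with the ramp coefficient (the one multiplying \(\max \{0,r_i-L_i+1\}\)) stored last; this storage convention and the function names are ours. The increments \(v(r)-v(r-1)\) equal the individual indicator coefficients for \(r \le L_i-1\) and are constant for \(r \ge L_i\), so the approximate marginal values depend on remaining capacity only when it is scarce.

```python
def sgpl_nodes(L, c):
    """Breakpoints 0, 1, ..., L-1, c of one SGPL component (1 <= L <= c)."""
    return list(range(L)) + [c]


def sgpl_component(r, V, c):
    """sum_{k=1}^{L-1} V[k-1] * 1{r >= k} + V[L-1] * max(0, r - L + 1), with L = len(V)."""
    L = len(V)
    if not (1 <= L <= c and 0 <= r <= c):
        raise ValueError("need 1 <= L <= c and 0 <= r <= c")
    step_part = sum(V[k - 1] for k in range(1, L) if r >= k)  # indicator basis functions
    ramp_part = V[L - 1] * max(0, r - L + 1)                  # linear piece on [L-1, c]
    return step_part + ramp_part


def sgpl_value(theta, coeffs, r, c):
    """theta_t + sum_i v_{t,i}(r_i) for one time step; coeffs[i] is the list V for resource i."""
    return theta + sum(sgpl_component(ri, Vi, ci) for ri, Vi, ci in zip(r, coeffs, c))
```

With \(L_i = 1\) the component reduces to \(V_{t,i,1} r_i\), i.e., AF, and with \(L_i = c_i\) the ramp equals \(1_{\{r_i \ge c_i\}}\) on \(\{0,\dots ,c_i\}\), i.e., SPL.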

For a fixed time t, each constraint in (3) corresponds to a basis function \(1_{\{r_i \ge k\}}\). Since the value function approximation (7) includes the basis functions \(1_{\{r_i \ge k\}}\) for \(k \le L_i-1\), \((\overline{P}_{G})\) inherits the constraints (3) for \(k \le L_i-1\). Our main task is to construct analogous constraints for the basis functions \(\max \{0,r_i-L_i+1\}, i = 1,\dots ,I\). We first observe that the left-hand side of (3), \(\sigma _{t,i,k}\), corresponds to the unreduced term \(\sum _{\mathbf {r} \in \mathcal R_t,\mathbf {u} \in \mathcal U_{\mathbf {r}}} X_{t,\mathbf {r},\mathbf {u}} 1_{\{r_i \ge k\}}\) on the left-hand side of (2). This term is the expected value of \(1_{\{r_i \ge k\}}\) given the probability distribution \(X_t\). Applying this probabilistic view to the basis function \(\max \{0,r_i-L_i+1\}\), we need the expected value of \(\max \{0,r_i-L_i+1\}\) given the probability distribution \(X_t\). In terms of the probabilities \(\sigma _{t,i,k}\), and with \(\sigma _{t,i,c_i+1} := 0\), this translates to \(\sum _{k=L_i}^{c_i} (\sigma _{t,i,k} - \sigma _{t,i,k+1}) (k-L_i+1) = \sum _{k=L_i}^{c_i} \sigma _{t,i,k}\). The fact that we end up with the sum of \(\sigma _{t,i,k}\) over \(k = L_i,\dots ,c_i\) suggests that the constraints we intend to construct result from summing (3) over \(k = L_i,\dots ,c_i\):

Here, \(\zeta _{t-1,i,j,L_i}\) on the right-hand side results from a telescoping sum and the fact that \(\zeta _{t,i,j,c_i+1} = 0\). In summary, we obtain \((\overline{P}_{G})\) as a relaxation of \((\widehat{P}_{SPL})\) by replacing the constraints (3) for \(k = L_i,\dots ,c_i\) with their sum. This constraint aggregation decreases the number of constraints and thus simplifies \((\widehat{P}_{SPL})\). To decrease the number of variables, we use the probabilistic interpretation of \(\sigma\), which implies \(0 \le \sigma _{t,i,k+1} \le \sigma _{t,i,k}\), and argue as follows: For a given value of \(\sigma _{t,i,L_i}\), the term \(\sum _{k=L_i}^{c_i} \sigma _{t,i,k}\) can take any value between \(\sigma _{t,i,L_i}\) and \(\sigma _{t,i,L_i}(c_i-L_i+1)\). The same is true for the term \(\sigma _{t,i,L_i} + \sigma _{t,i,c_i}(c_i - L_i)\) with \(0 \le \sigma _{t,i,c_i} \le \sigma _{t,i,L_i}\). The variables \(\sigma _{t,i,L_i+1}, \dots , \sigma _{t,i,c_i-1}\) are thus superfluous, and the above constraints (8) become

Finally, we add the constraints \(\sigma _{t,i,k} \le 1\) and \(\sigma _{t,i,k+1} \le \sigma _{t,i,k}\) which were redundant for \((\widehat{P}_{SPL})\), and propose the following compact linear program:

Let \((P_{G})\) denote the linear program \((P_\phi )\) using the SGPL approximation (7), and let \(\overline{Z}_{G}\) and \(Z_{G}\) be the optimal values of \((\overline{P}_{G})\) and \((P_{G})\). It follows from the above discussion that \(\overline{Z}_{G}\) does not increase as the number of nodes, \(L_i\), increases. Furthermore, standard arguments from variable aggregation show that the inequality \(\overline{Z}_{G} \ge Z_{G}\) holds. We conjecture that \((\overline{P}_{G})\) is indeed a reduction of \((P_{G})\), meaning that \(\overline{Z}_{G} = Z_{G}\). In any case, \(\overline{Z}_{G}\) provides an upper bound on the optimal expected revenue, i.e., \(\overline{Z}_{G} \ge v_1(\mathbf{c})\).
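Two steps of the derivation above can be checked with exact rational arithmetic: the identity \(\mathbb E[\max \{0,r_i-L_i+1\}] = \sum _{k=L_i}^{c_i} \sigma _{t,i,k}\), and the claim that \(\sigma _{t,i,L_i} + \sigma _{t,i,c_i}(c_i-L_i)\) covers the same range as the aggregated sum. In this Python sketch, \(\sigma\) is computed as tail probabilities of a given probability mass function of \(r_i\); this is an illustrative assumption, since in the linear program the \(\sigma\) are decision variables. All function names are hypothetical.

```python
import random
from fractions import Fraction


def tail_probabilities(pmf):
    """sigma[k] = P(r >= k) for k = 0, ..., c+1, so that sigma[c+1] = 0."""
    c = len(pmf) - 1
    return [sum(pmf[k:], Fraction(0)) for k in range(c + 2)]


def expected_ramp_three_ways(pmf, L):
    """E[max{0, r-L+1}] directly, via sigma differences, and via the plain sum of sigma."""
    c = len(pmf) - 1
    sigma = tail_probabilities(pmf)
    direct = sum((pmf[r] * max(0, r - L + 1) for r in range(c + 1)), Fraction(0))
    via_differences = sum(((sigma[k] - sigma[k + 1]) * (k - L + 1)
                           for k in range(L, c + 1)), Fraction(0))
    via_sum = sum(sigma[L:c + 1], Fraction(0))
    return direct, via_differences, via_sum


def verify(seed=0, trials=200):
    """Check both steps on random distributions; returns True if every check passes."""
    rng = random.Random(seed)
    for _ in range(trials):
        c = rng.randint(1, 8)
        L = rng.randint(1, c)
        weights = [rng.randint(0, 9) for _ in range(c + 1)]
        weights[rng.randrange(c + 1)] += 1  # make sure the weights are not all zero
        pmf = [Fraction(w, sum(weights)) for w in weights]
        direct, via_differences, via_sum = expected_ramp_three_ways(pmf, L)
        if not direct == via_differences == via_sum:
            return False
        sigma = tail_probabilities(pmf)
        # monotone sigma keeps the aggregated sum in [sigma_L, (c-L+1) * sigma_L]
        if not sigma[L] <= via_sum <= sigma[L] * (c - L + 1):
            return False
        if L < c:
            # the same value is reached by sigma_L + sigma_c' * (c - L), 0 <= sigma_c' <= sigma_L
            sigma_c = (via_sum - sigma[L]) / (c - L)
            if not (0 <= sigma_c <= sigma[L] and sigma[L] + sigma_c * (c - L) == via_sum):
                return False
    return True
```

The last check reflects the construction behind the variable reduction: setting \(\sigma _{t,i,L_i+1} = \cdots = \sigma _{t,i,c_i}\) reproduces any admissible value of the aggregated sum.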

To support our conjecture \(\overline{Z}_{G} = Z_{G}\), we compare these two values on small random network instances; see Appendix A for details. The corresponding AMPL code is available on GitHub so that our results can be reproduced.

4 Numerical experiments

We experimentally benchmark SGPL against AF and SPL. We expect SGPL to outperform AF while requiring less computing time than SPL. It is not clear, however, how quickly the quality improves as the number of nodes, \(L_i\), increases. We therefore provide some guidance on how to choose the number of nodes, \(L_i\), in deployment settings where solving SPL is computationally too expensive.

All numerical experiments were carried out on a virtual machine with 256 GB RAM and 32 cores running at 2.59 GHz. The linear programs are solved with CPLEX 20.1.0.0 using the interior-point solver “barrier” with the standard tolerance \(10^{-8}\).

We use data files from the literature containing 48 network instances [13, 14]. The setup is a hub-and-spoke airline network with a single hub and N non-hub locations. Each non-hub location is connected to the hub via two legs, one for each direction. There are \(N(N+1)\) itineraries corresponding to all origin–destination pairs. For each itinerary, there are two fare classes, where the high fare is \(\kappa\) times the low fare. Therefore, including the dummy product, there is a total of \(2N(N+1) + 1\) products. Finally, \(\alpha := \frac{\sum _{t,i,j} a_{ij} p_{t,j}}{\sum _i c_i}\) denotes the total load. Each network instance is identified by the tuple \((T,N,\alpha ,\kappa )\).
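The instance dimensions stated above can be reproduced with a short sketch that builds the consumption matrix of a hub-and-spoke network and evaluates the total load \(\alpha\). Location names (`H`, `S1`, ...), the product ordering (dummy product first, then a low-fare and a high-fare product per itinerary) and the function names are our own assumptions; fares and arrival probabilities come from the data files and are not generated here.

```python
def hub_and_spoke(N):
    """Consumption matrix A (rows = legs, columns = products) for one hub and N spokes.

    Product 0 is the dummy product; itinerary n has products 1 + 2n (low) and 2 + 2n (high).
    """
    locations = ["H"] + [f"S{s}" for s in range(1, N + 1)]
    legs = []
    for spoke in locations[1:]:
        legs += [(spoke, "H"), ("H", spoke)]  # two legs per spoke, one per direction
    leg_index = {leg: i for i, leg in enumerate(legs)}
    itineraries = [(o, d) for o in locations for d in locations if o != d]

    def legs_used(o, d):
        if "H" in (o, d):
            return [leg_index[(o, d)]]                     # direct flight to or from the hub
        return [leg_index[(o, "H")], leg_index[("H", d)]]  # connection via the hub

    J = 2 * len(itineraries) + 1
    A = [[0] * J for _ in legs]
    for n, (o, d) in enumerate(itineraries):
        for fare_class in (0, 1):
            for i in legs_used(o, d):
                A[i][1 + 2 * n + fare_class] = 1
    return A, itineraries


def total_load(A, probs, c):
    """alpha = sum_{t,i,j} a_ij p_{t,j} / sum_i c_i."""
    demand = sum(A[i][j] * p_t[j] for p_t in probs for i in range(len(A)) for j in range(len(p_t)))
    return demand / sum(c)
```

For N = 4, the sketch yields 8 legs, 20 itineraries and 41 products, matching \(2N\), \(N(N+1)\) and \(2N(N+1)+1\).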

It turns out that solving the dual linear programs is computationally more efficient than solving the primal ones. This observation might be explained by the fact that “barrier” utilizes the matrix product of the constraint matrix and its transpose in each iteration, whose dimension equals the number of constraints. Since \((\overline{P}_{G})\) has approximately twice as many constraints as variables, this matrix product is smaller for the dual linear program.

Also, adding the concavity property \(V_{t,i,k+1} \le V_{t,i,k}, \forall t,i,k\), to the constraints of the dual of \((\widehat{P}_{SPL})\) reduces its computing time. Similar efficiency gains from enforcing concavity of bid prices with respect to time for an affine value function approximation are discussed in [15].

For a fixed network instance \((T,N,\alpha ,\kappa )\), let \(\overline{Z}_{G}^{\mathbf {L}}\) denote the optimal value of \((\overline{P}_{G})\) given \(\mathbf {L} = (L_1,\dots ,L_I)\). We also call this value the upper bound. Using the value function approximation (7) to compute approximate marginal values, we simulate the corresponding policy 500 times. Let \(R_{G}^{\mathbf {L}}\) denote the resulting average revenue. We measure the quality of an SGPL approximation by the difference \(\overline{Z}_{G}^{\mathbf {L}} - R_{G}^{\mathbf {L}}\), which we call the optimality gap. For a given number \(q \in (0,1)\), we define \(L_i^q:= \lceil qc_i\rceil , \forall i\). Let \(t_{\text {comp}}^q\) be the computing time for solving the dual of \((\overline{P}_{G})\) with \(\mathbf {L} = \mathbf {L}^q\). Since we associate \(q = 0\) with AF and \(q = 1\) with SPL, we report the computing times for solving the dual of \((\widehat{P}_{AF})\) and \((\widehat{P}_{SPL})\), respectively, in these cases. We compute \(\overline{Z}_{G}^{\mathbf {L}^q}\) and \(R_{G}^{\mathbf {L}^q}\) for \(q = 0,\frac{1}{8},\dots ,\frac{7}{8},1\). Figure 1 shows the results for the network instance \((T,N,\alpha ,\kappa )=(200,4,1.0,4)\). In the left panel, the results are plotted against the fraction q; in the right panel, they are plotted against the computing time \(t_{\text {comp}}^q\) in seconds. We observe large improvements in both the upper bound \(\overline{Z}_{G}^{\mathbf {L}}\) and the average revenue \(R_{G}^{\mathbf {L}}\), even for small fractions q and for computing times that are significantly smaller than those of SPL.
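The grid of node counts \(L_i^q = \lceil qc_i\rceil\) and the optimality gap can be computed as in the following minimal Python sketch, which uses exact fractions so that \(qc_i\) is not affected by floating-point rounding. The function and constant names are illustrative.

```python
from fractions import Fraction
from math import ceil

Q_GRID = [Fraction(k, 8) for k in range(9)]  # q = 0, 1/8, ..., 7/8, 1


def nodes_for_fraction(q, c):
    """L_i^q = ceil(q * c_i) for every resource i.

    For 0 < q <= 1 this gives 1 <= L_i^q <= c_i; q = 0 is not a valid SGPL grid
    (it would give L_i = 0) and is reported as AF instead, while q = 1 gives SPL.
    """
    return [ceil(q * ci) for ci in c]


def optimality_gap(upper_bound, average_revenue):
    """Difference between the LP upper bound and the simulated average revenue."""
    return upper_bound - average_revenue
```

For example, with capacities (8, 3), \(q = \frac{1}{4}\) gives \(\mathbf {L} = (2,1)\) and \(q = \frac{7}{8}\) gives \((7,3)\): resources with small capacity reach \(L_i = c_i\), i.e., their SPL component, before \(q = 1\).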

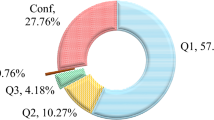

Fixing the value \(q = \frac{1}{4}\), SGPL’s optimality gap is less than half of AF’s optimality gap in 41 of the 48 network instances. In general, let \(q_{\text {half}}\) be the smallest \(q \in \{\frac{1}{8},\dots ,\frac{7}{8},1\}\) for which the optimality gap is less than half of AF’s optimality gap. In deployment, we suggest either 1) successively solving SGPL for \(q = 0,\frac{1}{8}, \dots , q_{\text {half}}\), or 2) using the fixed value \(q = \frac{1}{4}\). In Tables 1 and 2, we report results for strategy 1). Table 1 contains upper bounds and average revenues for AF, SPL and SGPL using \(q_{\text {half}}\). Table 2 contains the computing times for AF and SPL, i.e., \(t_{\text {comp}}^{0}\) and \(t_{\text {comp}}^{1}\), as well as the relevant computing times for SGPL: we report both the computing time \(t_{\text {comp}}^{q_{\text {half}}}\) and the cumulative computing time \(t_{\text {comp}}^{0} + \cdots + t_{\text {comp}}^{q_{\text {half}}}\). To assess the loss in quality that strategy 2) may incur, Table 3 reports optimality gaps and computing times for AF, SPL and SGPL using the fixed value \(q = \frac{1}{4}\) for those instances where \(q_{\text {half}} > \frac{1}{4}\).
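Strategy 1) amounts to a simple stopping rule. The sketch below assumes a hypothetical callback `evaluate(q)` that solves the dual of \((\overline{P}_{G})\) for \(\mathbf {L}^q\) (or of \((\widehat{P}_{AF})\) for q = 0), simulates the induced policy, and returns the optimality gap and the computing time. It stops at \(q_{\text {half}}\) and returns the cumulative computing time \(t_{\text {comp}}^{0} + \cdots + t_{\text {comp}}^{q_{\text {half}}}\).

```python
from fractions import Fraction

Q_GRID = [Fraction(k, 8) for k in range(9)]  # q = 0 (AF), 1/8, ..., 7/8, 1 (SPL)


def successive_strategy(evaluate, grid=Q_GRID):
    """Solve for q = 0, 1/8, ... until the gap drops below half of AF's gap.

    evaluate(q) -> (optimality_gap, computing_time) is a hypothetical callback.
    Returns (q_half, cumulative_time); q_half is None if no q on the grid qualifies.
    """
    af_gap, total_time = evaluate(grid[0])
    for q in grid[1:]:
        gap, seconds = evaluate(q)
        total_time += seconds
        if gap < af_gap / 2:
            return q, total_time
    return None, total_time
```

If no q on the grid halves AF’s gap, the sketch returns `None` together with the total time spent on the whole grid.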

SGPL’s computing time using \(q_{\text {half}}\) is less than half of SPL’s computing time in 46 cases, less than a third in 39 cases, less than a fifth in 17 cases and less than a tenth in 3 cases. The cumulative computing time \(t_{\text {comp}}^{0} + \cdots + t_{\text {comp}}^{q_{\text {half}}}\) is less than half of SPL’s computing time in 34 cases, less than a third in 20 cases and less than a fifth in 5 cases. For those cases where \(q_{\text {half}} > \frac{1}{4}\), using \(q = \frac{1}{4}\) also substantially reduces AF’s optimality gap, with computing times that are significantly smaller than half of SPL’s computing time in all but one instance.

5 Conclusion

We add to the literature on compact approximate linear programs in network revenue management by filling the gap between the AF and SPL value function approximations. The drawback of AF compared to SPL is mitigated by allowing bid prices to depend on remaining capacity whenever this quantity becomes scarce. At the same time, the computational complexity of SPL is decreased significantly. Our numerical experiments demonstrate that for many instances, AF’s optimality gap can be divided in half using only a small fraction of the computing time required to solve SPL.

Future research may extend our work to more general customer choice models. Even though our results apply to discrete pricing problems, fields such as the retail industry require more sophisticated choice models.

Data availability

All datasets analyzed in Sect. 4 are taken from the literature [13] and are available online here: https://people.orie.cornell.edu/huseyin/research/rm_datasets/rm_datasets.html. The AMPL code used in Appendix A is available online on GitHub: https://github.com/slaume/SGPL-Support-Equivalence-Conjecture.

References

[1] Adelman, D.: Dynamic bid prices in revenue management. Oper. Res. 55(4), 647–661 (2007)

[2] de Farias, D.P., Van Roy, B.: On constraint sampling in the linear programming approach to approximate dynamic programming. Math. Oper. Res. 29(3), 462–478 (2004)

[3] Erdelyi, A., Topaloglu, H.: Using decomposition methods to solve pricing problems in network revenue management. J. Revenue Pricing Manag. 10(4), 325–343 (2011)

[4] Ke, J., Zhang, D., Zheng, H.: An approximate dynamic programming approach to dynamic pricing for network revenue management. Prod. Oper. Manag. 28(11), 2719–2737 (2019)

[5] Kunnumkal, S., Talluri, K.: A note on relaxations of choice network revenue management dynamic program. Oper. Res. 64(1), 158–166 (2016)

[6] Kunnumkal, S., Talluri, K.: On a piecewise-linear approximation for network revenue management. Math. Oper. Res. 41(1), 72–91 (2016)

[7] Lin, Q., Nadarajah, S., Soheili, N.: Revisiting approximate linear programming: Constraint-violation learning with applications to inventory control and energy storage. Manag. Sci. 66(4), 1544–1562 (2019)

[8] Meissner, J., Strauss, A.K.: Network revenue management with inventory-sensitive bid prices and customer choice. Eur. J. Oper. Res. 216(2), 459–468 (2012)

[9] Schweitzer, P.J., Seidmann, A.: Generalized polynomial approximations in Markovian decision processes. J. Math. Anal. Appl. 110(2), 568–582 (1985)

[10] Talluri, K., van Ryzin, G.J.: Revenue management under a general discrete choice model of consumer behavior. Manag. Sci. 50(1), 15–33 (2004)

[11] Talluri, K., van Ryzin, G.J.: The Theory and Practice of Revenue Management. Kluwer Academic Publishers, Norwell, MA (2004)

[12] Tong, C., Topaloglu, H.: On the approximate linear programming approach for network revenue management problems. INFORMS J. Comput. 26(1), 121–134 (2014)

[13] Topaloglu, H.: Using Lagrangian relaxation to compute capacity-dependent bid prices in network revenue management. Oper. Res. 57(3), 637–649 (2009)

[14] Vossen, T.W.M., Zhang, D.: Reductions of approximate linear programs for network revenue management. Oper. Res. 63(6), 1352–1371 (2015)

[15] Vossen, T.W.M., Zhang, D.: A dynamic disaggregation approach to approximate linear programs for network revenue management. Prod. Oper. Manag. 24(3), 469–487 (2015)

[16] Walczak, D., Mardan, S., Kallesen, R.: Customer choice, fare adjustments and the marginal expected revenue data transformation: A note on using old yield management techniques in the brave new world of pricing. J. Revenue Pricing Manag. 9, 94–109 (2010)

Funding

Open access funding provided by University of Zurich.


Ethics declarations

Conflict of interest

The author declares that he has no conflict of interest.


Appendices

A Numerical support for conjectured equivalence

We provide numerical support for the conjecture \(\overline{Z}_{G} = Z_{G}\) using small random network instances. The computation of \(Z_{G}\) is made possible by a partial reduction of \((P_{G})\), see Appendix B for details.

The network has five nodes, A, B, C, D, E, and four legs, AC, BC, CD, CE. We set \(c_i = 7, \forall i\), and \(T = 30\). There are eight possible origin–destination pairs, AC, BC, CD, CE, AD, AE, BD, BE, out of which we randomly choose five. For each chosen origin–destination pair, there are two fares which are determined using a uniform distribution over \(\{10,\dots ,30\}\) and \(\{40,\dots ,120\}\), respectively. Demand is stationary and chosen randomly such that \(\sum _{t,j} p_{t,j} = T\). The number of nodes \(L_i \in \{1,\dots ,c_i\}\) is also chosen randomly for each resource i. We generate twenty such random instances and always observe \(\overline{Z}_{G} = Z_{G}\).
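The random instances described above can be generated along the following lines. This sketch draws the stationary arrival probabilities by normalizing uniform random weights (including the dummy product); that choice is our assumption, since the text does not specify the distribution. The function and key names are also hypothetical; the author’s actual AMPL code is in the GitHub repository listed under Data availability.

```python
import random

LEGS = ["AC", "BC", "CD", "CE"]  # resources i = 0..3
OD_LEGS = {                      # legs used by each origin-destination pair
    "AC": ["AC"], "BC": ["BC"], "CD": ["CD"], "CE": ["CE"],
    "AD": ["AC", "CD"], "AE": ["AC", "CE"], "BD": ["BC", "CD"], "BE": ["BC", "CE"],
}


def random_instance(rng, capacity=7, T=30, n_pairs=5):
    """Random network instance: 5 of the 8 OD pairs, two fares each, plus a dummy product."""
    pairs = rng.sample(sorted(OD_LEGS), n_pairs)
    fares, columns = [0], [[0] * len(LEGS)]  # product 0: dummy (no customer arrives)
    for od in pairs:
        for low, high in ((10, 30), (40, 120)):  # low-fare product, then high-fare product
            fares.append(rng.randint(low, high))
            columns.append([int(leg in OD_LEGS[od]) for leg in LEGS])
    A = [[col[i] for col in columns] for i in range(len(LEGS))]
    # ASSUMPTION: stationary probabilities from normalized uniform weights
    weights = [rng.random() for _ in fares]
    p = [w / sum(weights) for w in weights]
    return {
        "c": [capacity] * len(LEGS),
        "T": T,
        "fares": fares,
        "A": A,
        "probs": [list(p) for _ in range(T)],  # the probabilities sum to 1 in every period
        "L": [rng.randint(1, capacity) for _ in LEGS],  # random node counts L_i in {1..c_i}
    }
```

Because the dummy product is included, the probabilities sum to 1 in each of the T periods, so \(\sum _{t,j} p_{t,j} = T\) holds by construction.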

B Partial reduction

In Appendix A, we have to compute the optimal value of the unreduced linear program \((P_{G})\), which suffers from the curse of dimensionality with respect to both the states \(\mathbf {r}\) and the decisions \(\mathbf {u}\). We partially reduce \((D_{G})\), the dual of \((P_{G})\), with respect to the states \(\mathbf {r}\). For the sake of simplicity, we abbreviate the SGPL approximation (7) as \(\theta _t + \sum _i v^G_{t,i}(r_i)\), where

\[ v^G_{t,i}(r) = \sum _{k=1}^{L_i-1} V_{t,i,k} 1_{\{r \ge k\}} + V_{t,i,L_i} \max \{0,r-L_i+1\}. \]

Then, the dual of \((P_\phi )\), which results from inserting (7) into (D), is equal to

with boundary conditions \(\theta _{T+1} = V_{T+1,i,k} = 0, \forall i,k\). The unreduced linear program \((D_{G})\) is equivalent to the partially reduced linear program

where \(\mathcal R_{i,\mathbf {u}}:= \{r \in \{0,\dots ,c_i\} \mid u_j a_{ij} \le r, \forall j \}\). Moreover, since \(v^G_{t,i}(r)\) is linear for \(L_i-1 \le r \le c_i\), the constraints (11) only have to be enforced for \(r \in \{0,1,\dots ,L_i,c_i\} \cap \mathcal R_{i,\mathbf {u}}\).

Proof

Let \(\theta ,V\) be a feasible solution to \((D_{G})\). We obtain a feasible solution \(\theta ,V,\alpha\) to \((\widetilde{D}_{G})\) with the same objective value by defining

Conversely, given a feasible solution \(\theta ,V,\alpha\) to \((\widetilde{D}_{G})\), \(\theta ,V\) is automatically a feasible solution to \((D_{G})\) with the same objective value, which follows from inserting (11) into (10).

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Laumer, S. Efficient compact linear programs for network revenue management. Optim Lett 17, 437–451 (2023). https://doi.org/10.1007/s11590-022-01945-y
