Abstract

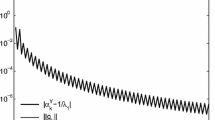

The quadratic termination property is important to the efficiency of gradient methods. We consider equipping a family of gradient methods, in which the stepsize is given by the ratio of two norms, with the two-dimensional quadratic termination property. This property is achieved by combining the family with a new stepsize, which is derived by maximizing the stepsize of the considered family at the next iteration. We prove that each method in the family asymptotically alternates in a two-dimensional subspace spanned by the eigenvectors corresponding to the largest and smallest eigenvalues of the Hessian. Based on this asymptotic behavior, we show that the new stepsize converges to the reciprocal of the largest eigenvalue of the Hessian. Furthermore, by adaptively taking the long Barzilai–Borwein stepsize and reusing the new stepsize with retard, we propose an efficient gradient method for unconstrained quadratic optimization. We prove that the new method is R-linearly convergent with a rate of \(1-1/\kappa\), where \(\kappa\) is the condition number of the Hessian. Numerical experiments demonstrate the efficiency of the proposed method.
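For orientation, the long Barzilai–Borwein stepsize mentioned above can be illustrated on a small strictly convex quadratic. The following is a minimal sketch only: it implements the plain long BB method, not the new stepsize or the adaptive scheme proposed in the paper. The function name `bb_gradient_quadratic`, the restriction to a diagonal Hessian, and the use of an exact line-search step for the first iteration are assumptions made here for illustration.

```python
import math


def dot(u, v):
    """Euclidean inner product of two equal-length lists."""
    return sum(ui * vi for ui, vi in zip(u, v))


def bb_gradient_quadratic(diag, b, x0, tol=1e-8, max_iter=1000):
    """Minimize 0.5 * x^T A x - b^T x with A = diag(diag), all entries > 0.

    Illustrative sketch of the long Barzilai-Borwein (BB1) gradient method;
    returns the final iterate and the number of iterations performed.
    """
    x = list(x0)
    g = [d * xi - bi for d, xi, bi in zip(diag, x, b)]  # gradient A x - b
    alpha = None
    for k in range(max_iter):
        if math.sqrt(dot(g, g)) <= tol:
            return x, k
        if alpha is None:
            # Assumption: no previous iterate exists yet, so start with
            # the exact line-search (Cauchy) stepsize g^T g / g^T A g.
            Ag = [d * gi for d, gi in zip(diag, g)]
            alpha = dot(g, g) / dot(g, Ag)
        x_new = [xi - alpha * gi for xi, gi in zip(x, g)]
        g_new = [d * xi - bi for d, xi, bi in zip(diag, x_new, b)]
        s = [xn - xo for xn, xo in zip(x_new, x)]  # s = x_{k+1} - x_k
        y = [gn - go for gn, go in zip(g_new, g)]  # y = g_{k+1} - g_k
        alpha = dot(s, s) / dot(s, y)              # long BB stepsize
        x, g = x_new, g_new
    return x, max_iter
```

Calling `bb_gradient_quadratic([1.0, 10.0, 100.0], [1.0, 1.0, 1.0], [0.0, 0.0, 0.0])` solves a three-dimensional problem whose Hessian has condition number \(\kappa = 100\); the minimizer is the vector with entries \(b_i / a_i\), where \(a_i\) denotes the \(i\)-th diagonal entry of the Hessian.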

Acknowledgements

This work was supported by the National Natural Science Foundation of China (Grant No. 11701137) and the Natural Science Foundation of Hebei Province (Grant No. A2021202010).

About this article

Cite this article

Li, X., Huang, Y. A gradient method exploiting the two dimensional quadratic termination property. Optim Lett 17, 1413–1434 (2023). https://doi.org/10.1007/s11590-022-01936-z

DOI: https://doi.org/10.1007/s11590-022-01936-z