Abstract

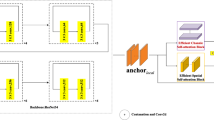

Lane detection is essential for self-driving cars and advanced driver assistance systems. Many current lane line detection algorithms perform dense pixel-by-pixel prediction followed by complex post-processing. Moreover, because lane lines occupy only a small part of the image, their signals are subtle and sparse, and information is lost as it propagates over long distances. As a result, an ordinary convolutional neural network struggles in challenging scenes such as severe occlusion, congested roads, and poor lighting. To address these issues, we propose an encoder–decoder architecture based on attention mechanisms. The encoder extracts initial lane line features. A spatial recurrent feature-shift aggregator module then enriches these features by passing information in four directions (up, down, left, and right). This module also includes spatial attention, which focuses on information useful for lane line detection and reduces redundant computation. To reduce incorrect predictions and the need for post-processing, we add channel attention between the encoder and the decoder, applying it to the encoding and decoding features separately to obtain multidimensional attention information. Our method achieves strong results on two popular lane detection benchmarks (CULane F1-measure 76.2; TuSimple accuracy 96.85%) while running at 48 frames per second, which meets the real-time requirements of autonomous driving.
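The abstract describes the aggregator and the two attention mechanisms only at a high level. As an illustration, the NumPy sketch below applies four-direction recurrent feature shifting, a spatial attention gate, and a channel attention gate to a single (C, H, W) feature map. It is a minimal sketch under stated assumptions, not the authors' implementation: the shift schedule is modeled on RESA (cyclic shifts with stride = size // 2**(iters - k)), the learned convolutions are replaced by hypothetical C × C channel-mixing matrices, the spatial gate is assumed to be a CBAM-style sigmoid over the channel mean and max, and the channel gate is assumed to be an ECA-style 1D convolution over pooled channel descriptors. All function and parameter names (`shift_aggregate`, `spatial_attention`, `eca_channel_attention`, `weights`, `iters`, `alpha`, `kernel`) are hypothetical.

```python
import numpy as np


def relu(x: np.ndarray) -> np.ndarray:
    return np.maximum(x, 0.0)


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


def shift_aggregate(x, weights, iters=3, alpha=1.0):
    """Four-direction recurrent feature shift on a (C, H, W) map (RESA-style sketch).

    weights[(direction, k)] is a hypothetical C x C matrix standing in for the
    learned convolution (a 1x1 simplification of larger shift kernels).
    """
    x = x.astype(float)  # astype copies, so the caller's array is untouched
    # (direction, axis, roll sign): "d"/"u" shift along H, "r"/"l" along W.
    # A roll of -s makes slice i read slice i + s; a roll of +s reads slice i - s.
    plan = [("d", 1, -1), ("u", 1, +1), ("r", 2, -1), ("l", 2, +1)]
    for direction, axis, sign in plan:
        size = x.shape[axis]
        for k in range(iters):
            stride = size // 2 ** (iters - k)  # 1, 2, 4 for size 8 and iters 3
            shifted = np.roll(x, sign * stride, axis=axis)  # cyclic shift
            mixed = np.einsum("oc,chw->ohw", weights[(direction, k)], shifted)
            x = x + alpha * relu(mixed)  # residual update with non-negative term
    return x


def spatial_attention(x, a=1.0, b=1.0, bias=0.0):
    """Assumed CBAM-style spatial gate: sigmoid of weighted channel mean and max."""
    avg = x.mean(axis=0)  # (H, W) mean over channels
    mx = x.max(axis=0)    # (H, W) max over channels
    gate = sigmoid(a * avg + b * mx + bias)  # one weight in (0, 1) per pixel
    return x * gate[None, :, :]


def eca_channel_attention(x, kernel):
    """Assumed ECA-style gate: global average pool, 1D conv across channels, sigmoid.

    Requires C >= len(kernel) so that mode="same" returns C scores.
    """
    pooled = x.mean(axis=(1, 2))                       # (C,) channel descriptors
    scores = np.convolve(pooled, kernel, mode="same")  # local cross-channel mixing
    return x * sigmoid(scores)[:, None, None]          # one weight per channel


rng = np.random.default_rng(0)
C, H, W = 4, 8, 8
feat = rng.standard_normal((C, H, W))
weights = {(d, k): 0.1 * rng.standard_normal((C, C)) for d in "durl" for k in range(3)}
agg = shift_aggregate(feat, weights, iters=3)
out = eca_channel_attention(spatial_attention(agg), np.array([0.25, 0.5, 0.25]))
print(out.shape)  # (4, 8, 8)
```

The doubling stride is what lets a few iterations cover the whole map: with strides 1, 2, and 4 on a height of 8, every row offset from 0 to 7 is a sum of distinct strides, so information can reach any row after three iterations. In the described architecture the channel attention sits between the encoder and decoder; the sketch applies it to a single feature map only for brevity.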



Funding

National Natural Science Foundation of China (Grant no. 61601354).


About this article

Cite this article

Zhao, Q., Peng, Q. & Zhuang, Y. Lane line detection based on the codec structure of the attention mechanism. J Real-Time Image Proc 19, 715–726 (2022). https://doi.org/10.1007/s11554-022-01217-z

DOI: https://doi.org/10.1007/s11554-022-01217-z