Abstract

Purpose:

Accurately identifying the stage of lung cancer from histopathology images and gene data is important for diagnosis and treatment. Despite the substantial progress achieved by existing methods, staging remains challenging because of large intra-class variance and a high degree of inter-class similarity.

Methods:

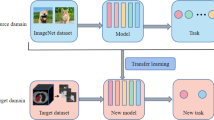

In this paper, we propose a phased Multimodal Multi-scale Attention Model (MMAM) that predicts lung cancer stages from histopathology image data and gene data. The model consists of two phases. In Phase 1, a Staining Difference Elimination Network (SDEN) eliminates staining differences between histopathology images. In Phase 2, the model uses the image feature extractor provided by Phase 1 to extract image features, then sends the multi-scale image features together with the gene features into our Adaptive Enhanced Attention Fusion (AEAF) module, which fuses the multimodal, multi-scale features to predict the lung cancer stage.
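To make the idea of attention-based fusion concrete, the following is a minimal sketch of softmax attention-weighted fusion over several feature vectors. It is not the AEAF module, whose internals the abstract does not describe. The function names `softmax` and `attention_fuse`, the `query` scoring vector, and all numeric values are hypothetical and chosen only for illustration.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_fuse(features, query):
    """Fuse equal-length feature vectors using softmax attention weights.

    features: list of vectors, e.g. image features at several scales
              plus one gene feature vector (all assumed to share one size).
    query:    hypothetical scoring vector; in a trained model this would
              be learned, here it is fixed by hand.
    Returns the fused vector and the attention weight of each input.
    """
    dim = len(query)
    if any(len(f) != dim for f in features):
        raise ValueError("all feature vectors must match the query length")
    # Scaled dot-product score of each feature vector against the query.
    scores = [
        sum(f_i * q_i for f_i, q_i in zip(f, query)) / math.sqrt(dim)
        for f in features
    ]
    weights = softmax(scores)
    # Weighted sum of the inputs, computed per dimension.
    fused = [
        sum(w * f[i] for w, f in zip(weights, features))
        for i in range(dim)
    ]
    return fused, weights


# Toy inputs (made up): two image scales and one gene feature vector.
image_scale_1 = [0.2, 0.8, 0.1, 0.5]
image_scale_2 = [0.6, 0.1, 0.9, 0.3]
gene_features = [0.4, 0.4, 0.2, 0.7]
query = [1.0, 0.0, 1.0, 0.0]

fused, weights = attention_fuse(
    [image_scale_1, image_scale_2, gene_features], query
)
print("attention weights:", weights)
print("fused features:", fused)
```

In this sketch the weights sum to one, so the fused vector is a convex combination of the inputs. A real fusion module would typically learn the scoring parameters and project each modality to a common dimension first.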

Results:

We evaluated the proposed MMAM on the TCGA lung cancer dataset, where it achieved 88.51% AUC and 88.17% accuracy in classifying lung cancer into stages I, II, III, and IV.
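For readers unfamiliar with these metrics in a four-class setting, the sketch below shows one common way to compute accuracy and a macro-averaged one-vs-rest AUC. The abstract does not state which averaging scheme was used for the reported AUC, so the macro one-vs-rest choice here is an assumption. The labels and predicted probabilities are made-up toy data, not results from the paper.

```python
def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted class equals the true class."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def binary_auc(labels, scores):
    """AUC as the probability that a random positive outranks a random
    negative, counting ties as one half."""
    pos = [s for l, s in zip(labels, scores) if l == 1]
    neg = [s for l, s in zip(labels, scores) if l == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative")
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in pos
        for n in neg
    )
    return wins / (len(pos) * len(neg))


def macro_ovr_auc(y_true, probs, n_classes):
    """Average of per-class one-vs-rest AUCs (assumed averaging scheme)."""
    aucs = []
    for c in range(n_classes):
        labels = [1 if t == c else 0 for t in y_true]
        scores = [p[c] for p in probs]
        aucs.append(binary_auc(labels, scores))
    return sum(aucs) / n_classes


# Toy data: classes 0..3 stand for stages I..IV.
y_true = [0, 1, 2, 3, 0, 1, 2, 3]
probs = [
    [0.7, 0.1, 0.1, 0.1],
    [0.2, 0.6, 0.1, 0.1],
    [0.1, 0.2, 0.6, 0.1],
    [0.1, 0.1, 0.2, 0.6],
    [0.4, 0.3, 0.2, 0.1],
    [0.5, 0.3, 0.1, 0.1],  # true stage II, predicted stage I
    [0.1, 0.1, 0.7, 0.1],
    [0.1, 0.1, 0.1, 0.7],
]
# Predicted class is the index of the highest probability.
y_pred = [max(range(4), key=lambda c: p[c]) for p in probs]

acc = accuracy(y_true, y_pred)
auc = macro_ovr_auc(y_true, probs, n_classes=4)
print(f"accuracy = {acc:.4f}, macro one-vs-rest AUC = {auc:.4f}")
```

AUC is computed from the predicted probabilities, while accuracy depends only on the arg-max class, which is why the two metrics can differ on the same predictions.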

Conclusion:

The method can help doctors determine the stage of a patient's lung cancer, and it benefits from multimodal data (histopathology images and gene data).

Acknowledgements

This work was supported by the National Natural Science Foundation of China (Grant No. 61872261); the National Natural Science Foundation of China (Grant No. U21A20469); the National Natural Science Foundation of China (Grant No. 61972274); and the Natural Science Foundation of Shanxi Province (Grant No. 202103021224066).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

About this article

Cite this article

Cai, M., Zhao, L., Zhang, Y. et al. A progressive phased attention model fused histopathology image features and gene features for lung cancer staging prediction. Int J CARS 18, 1857–1865 (2023). https://doi.org/10.1007/s11548-023-02844-y

DOI: https://doi.org/10.1007/s11548-023-02844-y