Abstract

Purpose

Surgical workflow recognition and context-aware systems could support better decision making and surgical planning by providing focused information, which may eventually improve surgical outcomes. While current developments in computer-assisted surgical systems mostly focus on recognizing surgical phases, they do not recognize the surgical workflow sequence or other contextual elements, e.g., “Instruments.” Our study proposes a hybrid approach that combines deep learning and knowledge representation to facilitate recognition of the surgical workflow.

Methods

We implemented the “Deep-Onto” network, an ensemble of deep learning models and knowledge management tools, namely an ontology and production rules. As a prototypical scenario, we chose robot-assisted partial nephrectomy (RAPN). We annotated RAPN videos with surgical entities, e.g., “Step.” We ran several experiments, including one on inter-subject variability, to recognize surgical steps. For each recognized step, the corresponding subsequent step and the other surgical contexts, i.e., “Actions,” “Phase” and “Instruments,” were also recognized.
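The hybrid idea described above can be illustrated with a minimal Python sketch: a classifier produces per-step scores, and a small rule table then supplies the subsequent step and the associated contexts. Everything here is an assumption made for illustration. The labels (`step_A`, `instrument_x`, and so on), the `KNOWLEDGE_BASE` table, and the function names are hypothetical placeholders, and a plain dictionary stands in for the ontology and production rules that the actual system uses.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple, Union


@dataclass(frozen=True)
class StepContext:
    """Context attached to one surgical step (hypothetical structure)."""
    phase: str
    actions: Tuple[str, ...]
    instruments: Tuple[str, ...]
    next_step: Optional[str]  # None when no subsequent step is defined


# Hypothetical stand-in for the ontology and production rules.
# All labels are placeholders, not the annotated RAPN entities.
KNOWLEDGE_BASE: Dict[str, StepContext] = {
    "step_A": StepContext("phase_1", ("action_a",), ("instrument_x",), "step_B"),
    "step_B": StepContext("phase_1", ("action_b", "action_c"),
                          ("instrument_x", "instrument_y"), "step_C"),
    "step_C": StepContext("phase_2", ("action_d",), ("instrument_z",), None),
}


def most_likely_step(scores: Dict[str, float]) -> str:
    """Pick the step label with the highest classifier score."""
    return max(scores, key=lambda label: scores[label])


def infer_context(
    scores: Dict[str, float],
) -> Dict[str, Union[str, None, List[str]]]:
    """Combine the recognized step with rule-based context lookup."""
    step = most_likely_step(scores)
    ctx = KNOWLEDGE_BASE[step]
    return {
        "step": step,
        "next_step": ctx.next_step,
        "phase": ctx.phase,
        "actions": list(ctx.actions),
        "instruments": list(ctx.instruments),
    }
```

Calling `infer_context({"step_A": 0.1, "step_B": 0.7, "step_C": 0.2})` selects `step_B` and returns its placeholder phase, actions, instruments, and next step. Keeping the learned part (scores) separate from the declarative part (the table) mirrors the division of labor described above, without claiming to reproduce it.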

Results

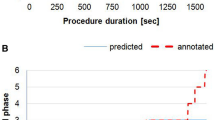

The system recognized 10 RAPN steps with a prevalence-weighted macro-average (PWMA) recall of 0.83, a PWMA precision of 0.74, a PWMA F1 score of 0.76, and an accuracy of 74.29% on 9 RAPN videos.
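As a rough illustration of prevalence weighting, the Python sketch below computes per-class precision, recall, and F1, then averages them with weights equal to each class's share of the true labels. This is one common interpretation and is an assumption: the exact PWMA definition and aggregation used in the study are not given in this section. The function names and the toy labels are hypothetical.

```python
from collections import Counter
from typing import Dict, Sequence


def per_class_scores(
    y_true: Sequence[str], y_pred: Sequence[str]
) -> Dict[str, Dict[str, float]]:
    """Precision, recall and F1 for every class present in y_true."""
    scores: Dict[str, Dict[str, float]] = {}
    for label in sorted(set(y_true)):
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == label and p == label)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != label and p == label)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == label and p != label)
        precision = tp / (tp + fp) if (tp + fp) else 0.0
        recall = tp / (tp + fn) if (tp + fn) else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        scores[label] = {"precision": precision, "recall": recall, "f1": f1}
    return scores


def prevalence_weighted(
    y_true: Sequence[str], y_pred: Sequence[str]
) -> Dict[str, float]:
    """Average per-class scores, weighting each class by its prevalence."""
    support = Counter(y_true)  # number of true samples per class
    total = len(y_true)
    scores = per_class_scores(y_true, y_pred)
    return {
        metric: sum(support[c] / total * scores[c][metric] for c in scores)
        for metric in ("precision", "recall", "f1")
    }


# Toy labels, not data from the study.
toy_true = ["a", "a", "a", "b"]
toy_pred = ["a", "a", "b", "b"]
toy_result = prevalence_weighted(toy_true, toy_pred)
```

Under this particular weighting, the weighted recall always equals the overall accuracy. Since the reported recall and accuracy differ, the study's figures were presumably aggregated in another way, for example per video, so this sketch should be read as an explanation of the weighting idea only.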

Conclusion

We found that the combined use of deep learning and knowledge representation techniques is a promising approach for the multi-level recognition of RAPN surgical workflow.

Notes

Available at https://doi.org/10.5281/zenodo.1066831.

Acknowledgements

This project has received funding from the European Union's Horizon 2020 research and innovation programme under Grant Agreement No. H2020-ICT-2016-732515. The Titan Xp used for this research was donated by the NVIDIA Corporation.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical standard

This article does not contain any studies with human participants or animals performed by any of the authors.

Informed consent

Informed consent was obtained from all individual participants included in the study.

About this article

Cite this article

Nakawala, H., Bianchi, R., Pescatori, L.E. et al. “Deep-Onto” network for surgical workflow and context recognition. Int J CARS 14, 685–696 (2019). https://doi.org/10.1007/s11548-018-1882-8

DOI: https://doi.org/10.1007/s11548-018-1882-8