Abstract

Many commonly used mathematical models in the field of mathematical biology involve challenges of parameter non-identifiability. Practical non-identifiability, where the quality and quantity of data does not provide sufficiently precise parameter estimates is often encountered, even with relatively simple models. In particular, the situation where some parameters are identifiable and others are not is often encountered. In this work we apply a recent likelihood-based workflow, called Profile-Wise Analysis (PWA), to non-identifiable models for the first time. The PWA workflow addresses identifiability, parameter estimation, and prediction in a unified framework that is simple to implement and interpret. Previous implementations of the workflow have dealt with idealised identifiable problems only. In this study we illustrate how the PWA workflow can be applied to both structurally non-identifiable and practically non-identifiable models in the context of simple population growth models. Dealing with simple mathematical models allows us to present the PWA workflow in a didactic, self-contained document that can be studied together with relatively straightforward Julia code provided on GitHub. Working with simple mathematical models allows the PWA workflow prediction intervals to be compared with gold standard full likelihood prediction intervals. Together, our examples illustrate how the PWA workflow provides us with a systematic way of dealing with non-identifiability, especially compared to other approaches, such as seeking ad hoc parameter combinations, or simply setting parameter values to some arbitrary default value. Importantly, we show that the PWA workflow provides insight into the commonly-encountered situation where some parameters are identifiable and others are not, allowing us to explore how uncertainty in some parameters, and combinations of parameters, regardless of their identifiability status, influences model predictions in a way that is insightful and interpretable.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Interpreting experimental data using mechanistic mathematical models provides an objective foundation for discovery, prediction, and decision making across all areas of science and engineering. Interpreting data in this way is particularly relevant to applications in the life sciences where new types of measurements and data are continually being developed. Therefore, developing computational methods that can be used to explore the interplay between experimental data, mathematical models, and real-world predictions is of broad interest across many application areas in the life sciences.

Key steps in using mechanistic mathematical models to interpret data include: (i) identifiability analysis; (ii) parameter estimation; and, (iii) model prediction. Recently, we developed a systematic, computationally-efficient workflow, called Profile-Wise Analysis (PWA), that addresses all three steps in a unified way (Simpson and Maclaren 2023). In essence, the PWA workflow involves propagating profile-likelihood-based confidence sets for model parameters, to model prediction sets by isolating how different parameters, and different combinations of parameters, influences model predictions (Simpson and Maclaren 2023). This enables us to explore how variability in individual parameters, and variability in groups of parameters directly influence model predictions in a framework that is straightforward to implement and interpret in terms of the underlying mechanisms encoded into the mathematical models (Simpson et al. 2023; Simpson and Maclaren 2023). This insight is possible because we harness the targeting property of the profile likelihood to isolate the influence of individual parameters (Casella and Berger 2001; Cox 2006; Cole 2020; Pace and Salvan 1997; Pawitan 2001), and we propagate forward from parameter confidence sets into prediction confidence sets. This feature is convenient since we can target individual interest parameters, or lower-dimensional combinations of parameters, one at-a-time, thereby providing mechanistic insight into the parameter(s) of interest, as well as making our approach scalable since we can deal with single parameters, or combinations of parameters, without needing to evaluate the entire likelihood function, only profiles of it. Profile-wise prediction confidence sets can be combined in a very straightforward way to give an overall curvewise prediction confidence set that accurately approximates the gold standard prediction confidence set using the full likelihood function. One of the advantages of the PWA workflow is that it naturally leads to curvewise prediction sets that avoids challenges of computational overhead and interpretation that are inherent in working with pointwise approaches (Hass et al. 2016; Kreutz et al. 2013). The first presentation of the PWA workflow focused on idealised identifiable models only, where the quality and quantity of the available data meant that all parameters were identifiable. Here we address to the more practical scenario of exploring how the PWA workflow applies to non-identifiable models. This is important because non-identifiability is routinely encountered in mathematical biology, and other modelling applications in the life sciences (Simpson et al. 2021; Fröhlich et al. 2014).

The first component of the PWA workflow is to assess parameter identifiability. Parameter identifiability is often considered in terms of structural or practical identifiability (Wieland et al. 2021). Structural identifiability deals with the idealised situation where we have access to an infinite amount of ideal, noise-free data (Chiş et al. 2011, 2016). Structural identifiability for mathematical models based on ordinary differential equations (ODE) is often assessed using software that includes DAISY (Bellu et al. 2007) and GenSSI (Ligon et al. 2018). In brief, GenSSI uses Lie derivatives of the ODE model to generate a system of input–output equations, and the solvability properties of this system provides information about global and local structural identifiability. In contrast, practical identifiability deals with the question of whether finite amounts of imperfect, noisy data is sufficient to identify model parameters (Kreutz et al. 2013; Raue et al. 2009, 2013, 2014). Practical identifiability can be implicitly assessed through the failure of sampling methods to converge (Hines et al. 2014; Siekmann et al. 2012; Simpson et al. 2020). Other approaches to determine practical identifiability include indirectly analysing the local curvature of the likelihood (Browning and Simpson 2023; Gutenkunst et al. 2007; Chiş et al. 2016; Vollert et al. 2023) or directly assessing the global curvature properties of the likelihood function by working with the profile likelihood (Raue et al. 2009, 2013, 2014). Therefore, practical identifiability is a more strict requirement than structural identifiability, as demonstrated by the fact that many structurally identifiable models are found to be practically non-identifiable when considering the reality of dealing with finite amounts of noisy data (Simpson et al. 2020, 2022). Fröhlich et al. (2014) compared several computational approaches (bootstrapping, profile likelihood, Fisher information matrix, and multi-start based approaches) to diagnose structural and practical identifiability of several models encountered in the systems biology literature and concluded that the profile likelihood produced reliable results, whereas the other approaches could be misleading.

In this work we will explore how the PWA workflow can be applied to both structurally non-identifiable and practically non-identifiable mathematical models that are routinely encountered in modelling population biology phenomena. Though we have not explicitly considered PWA and profile likelihood methods for non-identified models previously, there is reason to expect that it may perform reasonably well despite these challenges. As mentioned above, previous work in the context of systems biology by Fröhlich et al. (2014) found that profile likelihood is the only method they consider that performs well in the presence of practical identifiability (Fröhlich et al. 2014). Investigations in other application areas has led to similar observations. In particular, in econometrics, Dufour (1997) considers the construction of valid confidence sets and tests for econometric models under poor identifiability. They first show that, lacking other constraints, valid confidence sets must typically be unbounded. They then show that Wald-style confidence intervals of the form ‘estimate plus or minus some number of standard errors’ lack this property and are always bounded. In contrast, likelihood-based methods, such as likelihood ratios and associated confidence intervals, can produce appropriately unbounded intervals that maintain correct (or higher) asymptotic coverage. Zivot et al. (1998) similarly compare confidence intervals for poorly identified econometric models based on inverting Lagrange multiplier, likelihood ratio, and Anderson–Rubin tests to Wald-style confidence intervals. As before, Zivot et al. (1998) show that the relatively flat likelihood corresponds to appropriately wide confidence intervals, maintaining or exceeding coverage is maintained. Further, they demonstrate that likelihood-based intervals perform much better than Wald intervals (Zivot et al. 1998). In particular, these intervals may generally be unbounded unless additional constraints are applied, as shown to be theoretically necessary by Dufour (1997). Zivot et al.’s study is computational, while (Wang and Zivot 1998; Dufour 1997) supplement this with additional asymptotic arguments. A more recent review of these and similar results, again in econometrics, is given by Andrews et al. (2019), who also discuss how naive application of the bootstrap typically fails in the poorly identified setting (which is consistent with our recent results in Warne et al. (2024) and those of Fröhlich et al. (2014)). In light of these results we expect that likelihood-based confidence intervals for parameters in poorly identified problems may still perform well. Though these will be unbounded in general, they will typically rule out some regions of the parameter space corresponding to e.g., rejected values of identified parameter combinations or one-sidedly bounded intervals. Furthermore, in most problems we can combine these intervals with simple a priori bounds (which are weaker assumptions than prior probability distributions; see Stark 2015) to obtain confidence sets with the same coverage as the unbounded sets (again, see Stark 2015).

In the context of prediction, our approach begins with a gold-standard full likelihood method that carries out prediction via initial parameter estimation in such a way that coverage for predictions is at least as good as coverage for parameters. Thus, the desirable properties for parameter confidence sets are expected to hold up for predictions, and predictions can also be thought of as functions or functionals of the parameters that may be better identified than the underlying parameters. The benefit of going via the parameter space rather than directly to predictions is that we can more easily generalise to new scenarios with mechanistic model components and parameters. In addition to this gold standard approach, we then introduce the PWA approach, which is based on the same general principles but requires less computation in exchange for potentially lower coverage. PWA also facilitates an understanding of the connection between individual parameters and predictions (Simpson and Maclaren 2023). Guided by these theoretical intuitions about general models, we carry out computational experiments for particular models. One limitation of our approach is that the theoretical underpinnings are asymptotic and that finite sample corrections may be needed when data is sparse and the parameter space is large. One approach to address this would be applying the calibration methods from Warne et al. (2024), which can be used with minimal modifications to our overall workflow. We leave this for future work.

An mentioned above, understanding the ability of the PWA workflow to deal with non-identifiable models is important because many mathematical models in the field of mathematical biology are non-identifiable. To demonstrate this point, we will briefly recall the history of mathematical models of avascular tumour spheroid growth. The study of tumour spheroids has been an active area of research for more than 50 years, starting with the seminal Greenspan model in 1972 (1972. Greenspan’s mathematical model involves a series of analytically tractable boundary value problems whose solutions provide a plausible explanation for the observed three-stage, spatially structured growth dynamics of avascular in vitro tumour spheroids (Greenspan 1972). Inspired by Greenspan, more than 50 years of further model development and refinement delivered continual generalisations of this modelling framework. This iterative process produced many detailed mathematical models that have incorporated increasingly sophisticated biological detail. This progression of ideas led to the development of time-dependent partial differential equation (PDE)-based models (Byrne et al. 2003), moving boundary PDE models (Byrne and Chaplain 1997), multi-phase PDE models with moving boundaries (Ward and King 1997, 1999), and multi-component PDE models that simultaneously describe spheroid development while tracking cell cycle progression (Jin et al. 2021). These developments in mathematical modelling sophistication did not consider parameter identifiability and it was not until very recently that questions of parameter identifiability of these models was assessed, finding that even the simplest Greenspan model from 1972 turns out to be practically non-identifiable when using standard experimental data focusing on the evolution of the outer spheroid radius only (Browning and Simpson 2023; Murphy et al. 2022). This dramatic example points to the need for the mathematical biology community to consider parameter identifiability in parallel with model development to ensure that increased modelling capability and modelling detail does not come at the expense of those models to deliver practical benefits.

Previous approaches for dealing with non-identifiable models in mathematical and systems biology and related areas are to introduce some kind of simplification by, for example, setting parameters to default values (Hines et al. 2014; Vollert et al. 2023; Simpson et al. 2020), or seeking to find parameter combinations that simplify the model (Cole 2020; VandenHeuvel et al. 2023). For structurally non-identifiable models, symbolic methods can be used to find locally identifiable reparameterisations (e.g., Catchpole et al. 1998; Cole et al. 2010, summarised in Cole 2020), though these are difficult to apply to the practically non-identifiable case and typically require symbolic derivatives to be available. Several approaches can be used to find parameter combinations in the practically non-identifiable scenario, such as the concept of sloppiness (Brown and Sethna 2003; Brown et al. 2004; Gutenkunst et al. 2007) where a log-transformation is used to explore the possibility of finding informative combinations of parameters, typically in the form of ratios of parameters or ratios of powers of parameters as a means of simplifying the model. In this work, we take a different approach and use the (expected Dufour 1997) desirable properties of likelihood-based confidence intervals for poorly identified models, along with the the targeting property of the profile likelihood as used within the PWA workflow, to propagate confidence sets in parameter values to confidence sets for predictions for non-identifiable parameters. This approach provides insight because the targeting property of the profile likelihood can illustrate that some parameters are identifiable while others are not, and importantly the PWA workflow directly links the relevance of different parameters, regardless of their identifiability, to model predictions. Overall, we illustrate that the PWA workflow can be used as a systematic platform for making predictions, regardless of whether dealing with identifiable or non-identifiable models. This is useful for the commonly-encountered situation of partly identifiable models where some parameters are well-identified by the data and others are not (Simpson et al. 2020). To make this presentation as accessible as possible we present results for very simple, widely adopted mathematical models relating to population dynamics. Results are presented in terms of two simple case studies, the first involves structural non-identifiability, and the second involves practical non-identifiability. The first, structurally non-identifiable case is sufficiently straightforward that all calculations are performed by discretising the likelihood function and using appropriate grid searches to construct various profile likelihoods. This approach has the advantage of being both conceptually and computationally straightforward. The second, practically non-identifiable case, is dealt with using numerical optimisation instead of grid searches. This approach has the advantage of being computationally efficient and more broadly applicable than using simple grid searchers. As we show, the PWA leads to sensible results for these two simple case studies, and we anticipate that the PWA will also leads to useful insights for other non-identifiable models because related likelihood-based approaches are known to perform well in the face of non-identifiability (Dufour 1997; Zivot et al. 1998; Fröhlich et al. 2014; Warne et al. 2024). Open source software written in Julia is provided on GitHub to replicate all results in this study.

2 Results and Discussion

We present this these methods in terms of two case studies that are based on very familiar, mathematical models of population dynamics. Before presenting the PWA details, we first provide some background about these mathematical models and briefly discuss some application areas that motivate their use.

2.1 Logistic Population Dynamics

The logistic growth model

with solution

is one of the most widely-used mathematical models describing population dynamics (Edelstein-Keshet 2005; Kot 2003; Murray 2002). This model can be used to describe the time evolution of a population with density \(C(t) > 0\), where the density evolves from some initial density C(0) to eventually reach some carrying capacity density, \(K > 0\). For typical applications with \(C(0)/K \ll 1\) the solution describes near exponential growth at early time, with growth rate \(\lambda \), before the density eventually approaches the carrying capacity in the long time limit, \(C(t) \rightarrow K^-\) as \(t \rightarrow \infty \). One of the reasons that the logistic growth model is so widely employed is that it gives rise to a sigmoid shaped solution curve that is so often observed in a range of biological phenomena across a massive range of spatial and temporal scales. Images in Fig. 1 show typical applications in population biology where sigmoid growth is observed. Figure 1a shows the location of a coral reef on the Great Barrier Reef that is located off the East coast of Australia. Populations of corals are detrimentally impacted by a range of events, such as tropical cyclones or coral bleaching associated with climate change (Hughes et al. 2021). Data in Fig. 1b shows measurements of the percentage of total hard coral cover after an adverse event that reduced the proportion of area covered by hard coral to just a few percent (Simpson et al. 2022, 2023; eAtlas 2023). The recovery in hard coral cover took more than a decade for the corals to regrow and colonise the reef, back to approximately 80% area occupancy. This recovery data can be described using the logistic growth model, where it is of particular interest to estimate the growth rate \(\lambda \) because this estimate can be used to provide a simple estimate of the timescale of regrowth, \(1/\lambda \) (Simpson et al. 2022, 2023).

Practical applications of logistic growth models in ecology and cell biology. a Location of Lady Musgrave Island (black disc) relative to the Australian mainland (inset, red disc). b Field data showing the time evolution of the percentage total hard coral cover, \(C^{\text {o}}(t)\) (blue discs) after some disturbance at Lady Musgrave Island monitoring site 1 (eAtlas 2023). Images in a–c reproduced with permission from Simpson et al. (2023). c Experimental images showing equatorial slices through tumour spheroids grown with melanoma cells. Images from left-to-right show spheroids harvested at 12, 14, 16, 18 and 21 days after formation (Browning et al. 2021). Each cross section shows that the spheroids grow as a three-layer compound sphere with the inner-most sphere containing dead cells, the central spherical shell containing live but arrested cells that are unable to progress through the cell cycle, and the outer-most spherical shell contains live cells progressing through the cell cycle. d Summarises the dynamics of the outer most radius of a group of spheroids of the same melanoma cell line grown from different numbers of cells, including spheroids initiated with 2500, 5000 and 10,000 cells, as indicated. Images in c–d reproduced with permission from Browning et al. (2021) (Color figure online)

Another classical application of the logistic growth model is to understand the dynamics of populations of tumour cells grown as in vitro avascular tumour spheroids. For example, the five experimental images in Fig. 1c show slices through the equatorial plane of a set of in vitro melanoma tumour spheroids, after 12, 14, 16, 18 and 21 days of growth after spheroid formation (Browning et al. 2021; Murphy et al. 2022). The structure of these spheroids approximately takes the form of a growing compound sphere. Dotted lines in Fig. 1c show three boundaries: (i) the outer-most boundary that defines the outer radius of the spheroid; (ii) the central boundary that separates a cells according to their cell cycle status; and, (iii) the inner-most boundary that separates the central shell composed of living but non-proliferative cells from the central region that is composed of dead cells. Cells in the outer-most spherical shell have access to sufficient nutrients that these cells are able to proliferate, whereas cells in the central spherical shell have limited access to nutrients which prevents these cells entering the cell cycle (Browning et al. 2021). Data in Fig. 1d shows the time evolution of the outer radius for groups of spheroids that are initiated using different numbers of cells (i.e. 2500, 5000, 10,000 cells, as indicated). Regardless of the initial size of the spheroids, we observe sigmoid growth with the eventual long-time spheroid radius is apparently independent of the initial size. This sigmoid growth behaviour is consistent with the logistic growth model, Eq. (1), where here we consider the variable C(t) to represent the spheroid radius (Murphy et al. 2022). In addition to modelling coral reef re-growth (Simpson et al. 2023) and tumour spheroid development (Browning et al. 2021; Browning and Simpson 2023), the logistic model has been used to model a wide range of cell biology population dynamics including in vitro wound healing (Maini et al. 2004) and in vivo tumour dynamics (Gerlee 2013; Sarapata and de Pillis 2014; West et al. 2001). Furthermore, the logistic equation and generalisations thereof are also routinely used in various ecological applications including modelling populations of sharks (Hisano et al. 2011), polyps (Melica et al. 2014), trees (Acevedo et al. 2012) and humans (Steele et al. 1998).

In terms of mathematical modelling, the simplicity of the logistic growth model introduces both advantages and disadvantages. The advantages of the logistic growth model include the fact that it is both conceptually straightforward and analytically tractable. In contrast, the simplicity of the logistic growth model means that it is not a high-fidelity representation of biological detail. Instead, it would be more accurate to describe the logistic growth model as a phenomenologically-based model that can be used to provide insightful, but indirect information about biological population dynamics. In response to the disadvantages of the logistic model, there have been many generalisations proposed, such as those reviewed by Tsoularis and Wallace (2002). For the purposes of this study we will work with two commonly-used generalisations. As we explain later, these two commonly-used generalisations introduce different challenges in terms of parameter identifiability.

The first generalisation we will consider is to extend the logistic growth model to include a separate linear sink term,

where \(d>0\) is a rate of removal associated with the linear sink term. Incorporating a linear sink term into the logistic growth model has been used to explicitly model population loss, either through some kind of intrinsic death process (Baker and Simpson 2010), a death/decay associated with external signals, such as chemotherapy in a model of tumour cell growth (Swanson et al. 2003) or external harvesting process (Miller and Botkin 1974; Brauer and Sánchez 1975), as is often invoked in models of fisheries (Cooke and Witten 1986; Xu et al. 2005). Re-writing Eq. (3) as

with \(\Lambda = \lambda - d\) and \(\kappa = K\Lambda /\lambda \), with \(\lambda > 0\), preserves the structure of the logistic growth equation so that the solution is

which now gives three long-term possibilities: (i) \(C(t) \rightarrow K\) as \(t \rightarrow \infty \) if \(\lambda > d\): (ii) \(C(t) \rightarrow 0\) as \(t \rightarrow \infty \) if \(\lambda < d\), and (iii) \(C(t) = C(0)\) for all \(0< t < \infty \) for the special case \(\lambda = d\). In this work we will refer to Eq. (3) as the logistic model with harvesting (Brauer and Sánchez 1975).

The second generalisation we will consider is to follow the work of Richards (1959) who generalised the logistic growth model to

where the free parameter \(\beta > 0\) was introduced by Richards to provide greater flexibility in describing a wider range of biological responses in the context of modelling stem growth in seedlings (Richards 1959). This model, which is sometimes called the Richards’ model, has since been used to study population dynamics in a range of applications, including breast cancer progression (Spratt et al. 1993). The solution of the Richards’ model can be written as

which clearly simplifies to the solution of the logistic equation when \(\beta = 1\). The Richards’ model is also related to the well-known von Bertalanffy growth model in which \(\beta = 1/3\) (Tsoularis and Wallace 2002). Instead of considering fixed values of \(\beta \), the Richards’ model incorporates additional flexibility by treating \(\beta \) as a free parameter that can be estimated from experimental data.

This work focuses on the development and deployment of likelihood-based methods to make predictions with poorly-identified models. A preliminary observation about the mathematical models that we have presented so far is that both the logistic model, Eq. (1), and the Richards’ model, Eq. (6) are structurally identifiable in terms of making perfect, noise-free observations of C(t) (Ligon et al. 2018). In contrast, the logistic model with harvesting is structurally non-identifiable since making perfect, noise-free observations of C(t) enables the identification of \(\Lambda \) and \(\kappa \) only, for which there are infinitely many choices of \(\lambda \), d and K that give the same C(t). We will now illustrate how the PWA workflow for parameter identifiability, estimation and prediction can be implemented in the face of both structural and practical non-identifiability.

2.2 Logistic Model with Harvesting

To solve Eq. (3) for C(t) we must specify an initial condition, C(0), together with three parameters \(\theta = (\lambda , d, K)^\top \). We proceed by assuming that the observed data, denoted \(C^\text {o}(t)\), corresponds to the solution of Eq. (3) corrupted with additive Gaussian noise with zero mean and constant variance so that \(C^\text {o}(t) \mid \theta = \mathcal {N}\left( C(t_i), \sigma ^2\right) \), where \(C(t_i)\) is the solution of Eq. (3) at a series of I time points, \(t_i\) for \(i=1,2,3,\ldots , I\). Within this standard framework the loglikelihood function can be written as

where \(\phi (x; \mu , \sigma ^2)\) denotes a Gaussian probability density function with mean \(\mu \) and variance \(\sigma ^2\), and C(t) is the solution of Eq. (3). We treat \(\ell (\theta \mid C^\text {o}(t))\) as a function of \(\theta \) for fixed data (Cassidy 2023), and in this first example, for the sake of clarity and simplicity, we treat C(0) and \(\sigma \) as known quantities (Hines et al. 2014). This has the benefit of reducing this problem to dealing with three unknown parameters, \(\theta = (\lambda , \beta , K)^\intercal \). This assumption will be relaxed later in Sect. 2.3. Synthetic data is generated with \(\lambda = 0.01\), \(d =0.002\), \(K=100\), \(C(0)=5\) and \(\sigma = 5\). Data is collected at \(t_i = 100\times (i-1)\) for \(i=1,2,3,\ldots ,11\) and plotted in Fig. 2a. The remainder of this mathematical exploration is independent of the units of these quantities, however to be consistent with the data in Fig. 1b the dimensions of C(t) and K would be taken to be densities expressed in terms of a percentage of area occupied by growing hard corals, and the rates \(\lambda \) and d would have dimensions of \([ / \text {day}]\). Similarly, work with the data in Fig. 1d the variables C(t) and K would be taken to represent spheroid radius with dimensions \(\mu \text {m}\), and the rates \(\lambda \) and d would have dimensions \([/ \text {day}]\) (Murphy et al. 2022).

a Synthetic data (orange dots) generated by taking the solution of Eq. (4) with \(\lambda = 0.01\), \(d =0.002\), \(K=100\), \(C(0)=5\) at \(t_i = 100\times (i-1)\) for \(i=1,2,3,\ldots ,11\) and incorporating additive Gaussian noise with zero mean and \(\sigma = 5\). The MLE solution (solid blue) corresponds to the solution of Eq. (4) with \(\hat{\lambda }= 0.0094\), \(\hat{d} =0.00192\), \(\hat{K}=99.90\) with \(C(0)=5\). The 95% prediction interval (shaded gold) is obtained by propagating parameter values where \(\hat{\ell } \ge \ell ^*\) forward to construct the prediction envelope. Here \(\ell ^{*} = -\Delta _{0.95,3}/2 = -3.91\), where \(\Delta _{q,n}\) refers to the qth quantile of the \(\chi ^2\) distribution with n degrees of freedom (Royston 2007). b Schematic of the \((\lambda , d, K)^\top \) parameter space (Color figure online)

We now apply the PWA framework for identifiability, estimation and prediction by evaluating \(\ell (\theta \mid C^\text {o}(t))\) across a broad region of parameter space that contains the true values: \(0.0001< \lambda < 0.05\); \(0< d < 0.01\); and \(50< K < 200\). Since we are dealing with a relatively simple three-dimensional parameter space, shown schematically in Fig. 2b, we can explore different parameter combinations by taking a uniform \(500^3\) regular discretisation of the parameter space and evaluate \(\ell (\theta \mid C^\text {o}(t))\) at each of the \(500^3\) parameter combinations defined by the uniform discretisation. Calculating the maximum value of \(\ell (\theta \mid C^\text {o}(t))\) over the \(500^3\) values, \(\displaystyle {\sup _{\theta } \ell (\theta \mid C^\text {o}(t))}\), allows us to work with the normalised loglikelihood function

so that the maximum normalised loglikelihood is zero. The value of \(\theta \) that maximises \(\ell (\theta \mid C^\text {o}(t))\) is denoted \(\hat{\theta }\), which is called the maximum likelihood estimate (MLE). In this instance we have \(\hat{\theta } = (0.0094, 0.00192, 99.90)^\top \), which is reasonably close to the true value, \(\theta = (0.01, 0.002, 100)^\top \), but this point estimate provides no insight into inferential precision which is associated with the curvature of the likelihood function (Cole 2020; Pace and Salvan 1997).

To assess the identifiability of each parameter we construct a series of univariate profile likelihood functions by partitioning the full parameter \(\theta \) into interest parameters \(\psi \) and nuisance parameters \(\omega \), so that \(\theta = (\psi , \omega )\). For a set of data \(C^\text {o}(t)\), the profile log-likelihood for the interest parameter \(\psi \), given the partition \((\psi ,\omega )\), is

which implicitly defines a function \(\omega ^*\left( \psi \right) \) of optimal values of \(\omega \) for each value of \(\psi \), and defines a surface with points \((\psi , \omega ^{*}(\psi ))\) in parameter space. In the first instance we construct a series of univariate profiles by taking the interest parameter to be a single parameter, which means that \((\psi , \omega ^{*}(\psi ))\) is a univariate curve that allows us to visualise the curvature of the loglikelihood function.

Since this first example deals with a relatively straightforward three-dimensional loglikelihood function where we have already evaluated \(\hat{\ell }(\theta \mid C^\text {o}(t))\) on a \(500^3\) regular discretisation of \(\theta \), the calculation of the profile likelihood functions is straightforward. For example, if our interest parameter is \(\psi = \lambda \) and the associated nuisance parameter is \(\omega = (d, K)^\top \), for each value of \(\lambda \) along the uniform discretisation of the interval \(0.0001< \lambda _j < 0.05\) for \(j=1,2,3,\ldots ,500\), we compute the indices k and l that maximises \(\hat{\ell }(\theta \mid C^\text {o}(t))\), where \(\theta \) is evaluated on the uniform discretisation \((\lambda _j, d_k, K_l)\). This optimisation calculation can be performed using a straightforward grid search. Repeating this procedure by setting \(\psi = d\) and \(\omega = (\lambda , K)^\top \), and \(\psi = K\) and \(\omega = (\lambda , d)^\top \) gives univariate profile likelihoods for d and K, respectively. The three univariate profile likelihood functions are given in Fig. 3 where each profile likelihood function is superimposed with a vertical green line at the MLE and a horizontal like at the 95% asymptotic threshold, \(\ell ^*\) (Royston 2007).

a–c Univariate profile likelihood functions for \(\lambda \), d and K, respectively. Each Profile likelihood function (solid blue) is superimposed with a vertical green line at the MLE and a horizontal red line shoing the asymptotic 95% threshold at \(\ell _p^{*} = -\Delta _{0.95,1}/2 = -1.92\), where \(\Delta _{q,n}\) refers to the qth quantile of the \(\chi ^2\) distribution with n degrees of freedom (Royston 2007), here \(n=1\) for univariate profiles. Parameter estimates and 95% confidence intervals are: \(\hat{\lambda } = 0.0094\) [0.0067, 0.0183], \(\hat{d} = 0.00192\) [0, 0.010] and \(\hat{K} = 99.90\) [74.05, 200] (Color figure online)

The univariate profile for \(\lambda \) in Fig. 3a indicates that the noisy data in Fig. 2a allows us to estimate \(\lambda \) reasonably well, at least within the 95% asymptotic confidence interval, \(0.0067< \lambda < 0.0183\). In contrast, the relatively flat profiles for d and K in Fig. 3b, c indicate that the noisy data in Fig. 2a does not identify these parameters. This is entirely consistent with our observation that this model is structurally non-identifiable. The univariate profile likelihoods indicate there are many choices of d and K that can be used to match the data equally well. This is a major problem because these flat profiles indicate that incorrect parameter values can match the observations. If, when using mechanistic models, our aim is to link parameter values to biological mechanisms, this means that we can use a model parameterised to reflect an incorrect mechanisms to explain our observations. Here, for example, our data is generated with \(K=100\), but our univariate profile likelihood indicates that we could match the data with \(K=90\) or \(K=190\) which is clearly unsatisfactory if we want to use our data to infer not only the value of K, but also to understand and quantify the mechanism implied by this parameter value.

Before considering prediction, we first explore the empirical, finite sample coverage properties for our parameter confidence intervals as they are only expected to hold asymptotically (Pawitan 2001). We generate 5000 data realisations in the same way that we generated the single data realisation in Fig. 3a, for the same fixed true parameters. For each realisation we compute the MLE and count the proportion of realisations with \(\hat{\ell } \ge \ell ^* = -\Delta _{0.95,3}/2\), where \(\hat{\ell }\) is evaluated at the true parameter values. This condition means the true parameter vector is within the sample-dependent, likelihood-based confidence set. This gives \(4900/5000 = 98.00\%\) which, unsurprisingly, exceeds the expected 95% asymptotic result due to the fact that the likelihood function is relatively flat. This result is very different to previous implementation of the PWA workflow for identifiable problems where finite sample coverage properties for parameter confidence intervals are very close to the expected asymptotic result (Simpson and Maclaren 2023; Murphy et al. 2024).

Estimating prediction uncertainties accurately is nontrivial, due to the nonlinear dependence of model characteristics on parameters (Villaverde et al. 2015, 2023). The recent PWA workflow addresses identifiability, estimation and prediction in a unified workflow that involves propagating profile-likelihood-based confidence sets for model parameters, to model prediction sets by isolating how different parameters, influences model prediction by taking advantage of the targeting property of the profile likelihood. Previous applications of PWA found that constructing bivariate profile likelihood functions is a useful way to explore relationships between pairs of parameters, and to construct accurate prediction intervals. However, these previous applications have focused on identifiable problems, whereas here we consider the more challenging and realistic question of dealing with non-identifiable and poorly-identifiable models. The idea of using bivariate profile likelihood functions to provide a visual indication of the confidence set of pairs of parameters and the nonlinear dependence of parameter estimates on each other has been long established in the nonlinear regression literature (Bates and Watts 1988). Here, and in the PWA, we use a series of bivariate profile likelihoods to provide a visual interpretation of the relationship between various pairs of parameters, as well as using these profile likelihood functions to make predictions so that we establish how the variability in parameters within a particular confidence set map to predictions that are more meaningful for collaborators in the life sciences. One of the benefits of working with relatively simple models with a small number of parameters is that we can take the union of predictions from various made using all possible bivariate profile likelihoods and compare these predictions from the full likelihood. Our previous work has compared making such predictions using the union of univariate profile likelihood-based predictions, and union of bivariate profile likelihood-based predictions and predictions from the full likelihood function, funding that working with bivariate profile likelihood-based predictions leads to accurate predictions at a significantly reduced computational overhead when using the full likelihood function.

To construct bivariate profile likelihood functions by setting: (i) \(\psi = (\lambda , d)^\top \) and \(\omega = K\); (ii) \(\psi = (\lambda , K)^\top \) and \(\omega = d\); and, (iii) \(\psi = (d, K)^\top \) and \(\omega = \lambda \) and evaluating Eq. (10). Again, just like the univariate profile likelihood functions, evaluating \(\hat{\ell }_p (\psi \mid C^\text {o}(t))\) is straightforward because we have already evaluated \(\hat{\ell }(\theta \mid C^\text {o}(t))\) on a \(500^3\) regular discretisation of \(\theta \). For example, to evaluate the bivariate profile likelihood for \(\psi = (\lambda , d)^\top \) we take each pair of \((\lambda _j, d_k)\) values on the discretisation of \(\theta \) and compute the index l that maximises \(\hat{\ell }(\theta \mid C^\text {o}(t))\), where \(\theta \) is evaluated on the uniform discretisation \((\lambda _j, d_k, K_l)\) to give the bivariate profile shown in Fig. 4a where we superimpose a curve at the 95% asymptotic threshold \(\ell ^*\) and the location of the MLE. The shape of the bivariate profile shows that there is a narrow region of parameter space where \(\hat{\ell }_p \ge \ell ^*\), and the shape of this region illustrates how estimates of \(\lambda \) and d are correlated (i.e. as \(\lambda \) increases the value of d required to match the data also increases). This narrow region of parameter space where \(\hat{\ell }_p \ge \ell ^*\) extends right across the truncated region of parameter space considered. As we pointed out in the Introduction, despite the fact that the logistic model with harvesting is structurally non-identifiable, we see that the likelihood-based confidence intervals for parameters performing well in the sense that each bivariate profile clearly rules out certain regions of the parameter space. This result is inline with the general conclusions of Fröhlich and co-workers (Fröhlich et al. 2014) who found that likelihood-based methods provided reliable results in the face of non-identifiability whereas other commonly-used approaches can be unreliable.

a–c Bivariate profile likelihood functions for \(\psi = (\lambda , d)\), \(\psi = (\lambda , K)\) and \(\psi = (d, K)\), respectively, shown in the left column, together with the associated prediction intervals in the right column. Contour plots in the left column show \(\hat{\ell }_p (\psi \mid C^\text {o}(t))\) for each choice of \(\psi \), as indicated. Each contour plot is superimposed with a contour showing the asymptotic threshold \(\ell ^* = -\Delta _{0.95,2}/2 = -3.00\) (solid red curve) and the location of the MLE (cyan disc). Each of the bivariate profiles in the left column is constructed using a \(500\times 500\) uniform mesh, and parameter values lying within the contour where \(\ell \ge \ell ^*\) are propagated forward to form the profile-wise prediction intervals in the right column (purple shaded region). Each prediction interval is superimposed with the MLE solution (cyan solid curve) (Color figure online)

To understand how the uncertainty in \(\psi = (\lambda , d)^\top \) impacts the uncertainty in the prediction of the mathematical model, Eq. (4), we take those values of \((\lambda _j, d_k)\) for which \(\hat{\ell }_p \ge \ell ^*\) (i.e. those values of \(\theta \) lying within the region enclosed by the threshold, including values of \(\theta \) along the boundary) and solve Eq. (4) using the associated value of the nuisance parameter, \(K_l\), that was identified when we computed the bivariate profile likelihood. Solving Eq. (4) for each choice of \((\lambda _j, d_k, K_l)\) within the set of parameters defined by the asymptotic threshold provides a family of solutions from which we can compute a curvewise prediction interval, shown in the right most panel of Fig. 4a where we have also superimposed the MLE solution. This prediction interval explicitly shows us how uncertainty in the values of \((\lambda ,d)^\top \) propagates into uncertainty in the model prediction, C(t).

We now repeat the process of computing the bivariate profile likelihoods for \(\psi = (\lambda , K)\) and \(\psi = (d, K)\), shown in Fig. 4b–c, respectively. Here we see that the bivariate profile likelihood functions provides additional insight beyond the univariate profiles in Fig. 3. For example, here we see that bivariate profile likelihood for \(\psi = (d, K)\) is similar to the bivariate profile for \(\psi = (\lambda , d)\) in the sense that there is a large region, extending right across the parameter space, where \(\hat{\ell }_p \ge \ell ^*\). In contrast, for \(\psi = (\lambda , K)\) we see that the curvature of the bivariate profile likelihood is such that the region where \(\hat{\ell }_p \ge \ell ^*\) is mostly contained within the region of parameter space considered. The profile wise prediction intervals for each bivariate profile likelihood is given in the right-most panel in Fig. 4a–c together with the MLE solution. Comparing the profile-wise prediction intervals in the Fig. 4 illustrates how differences in parameters affect these predictions. For example, the prediction intervals in Fig. 4b–c, which explicitly involves bivariate profile likelihoods involving K are wider at late time than the prediction interval in Fig. 4a. This reflects the fact that the carrying capacity density, K affects the late time solution of Eq. (3).

In addition to constructing profile-wise prediction intervals to explore how uncertainty in pairs of parameters impacts model predictions, we can also form an approximate prediction interval by taking the union of the three profile-wise prediction intervals. This union of prediction intervals, shown in Fig. 5, compares extremely well with the gold-standard prediction interval obtained using the full likelihood function. In this case it is straightforward to construct the full likelihood prediction interval because we have already evaluated \(\hat{\ell }(\theta \mid C^\text {o}(t))\) at each of the \(500^3\) mesh points, and we simply solve Eq. (3) for each value of \(\theta \) for which \(\hat{\ell } \ge \ell ^* = -\Delta _{0.95,3}/2 = -3.91\) and use this family of solutions to form the curvewise gold-standard prediction interval.

Prediction interval comparison. The prediction interval constructed from the gold standard full likelihood (solid gold region) is superimposed with the MLE solution (cyan solid curve) and the union of the upper and lower profile-wise intervals in Fig. 4 (dashed red curves) (Color figure online)

Our construction of the approximate full prediction interval is both practically and computationally convenient. This approach is practically advantageous in the sense that building profile-wise prediction intervals like we did in Fig. 4 provides insight into how different pairs of parameters impacts model predictions in a way that is not obvious when working with the full likelihood function. This process is computationally advantageous since working with pairs of parameters remains computationally tractable for models with larger number of parameters where either directly discretizing or sampling the full likelihood function becomes computationally prohibitive. Finally, this example indicates that the PWA workflow presented previously for identifiable problems can also be applied to structurally non-identifiable problems. The main difference between applying the PWA workflow for identifiable and structurally non-identifiable problems is that the former problems involve determining regions in parameter space where \(\hat{\ell }_p \ge \ell ^*\) implicitly defined by the curvature of the likelihood function. In contrast, the region of parameter space where \(\hat{\ell }_p \ge \ell ^*\) for structurally non-identifiable problems is determined both by the curvature of the likelihood function and the user-defined bounds on the regions of parameter space considered. These bounds can often be imposed naturally, such as requiring that certain parameters be non-negative as in the case of \(K > 0\) in Eq. (3). Alternatively these bounds can be also be prescribed based on the experience of the analyst, such as our results in Figs. 3 and 4 where we limited our parameter space to the region where \(0.0001< \lambda < 0.05\). In this case we chose the interval to be relatively broad, guided by our knowledge that the true value lies within this interval. Later, in Sect. 2.3 we will deal with a more practical case involving real measurements where we have no a priori knowledge of the true parameter values.

The results in this section for Eq. (3) are insightful in the sense that they provide the first demonstration that the PWA workflow can be applied to structurally non-identifiable problems, however we now turn our attention to a class problems that we believe to be of greater importance, namely a practically non-identifiable problem where some parameters are well identified by the data, whereas others are not.

2.3 Richards’ model

We now consider estimating parameters in the Richards’ model, Eq. (6) to describe the coral reef recovery data in Fig. 1a, b. To solve Eq. (6) we require values of \((\lambda , \beta , K, C(0))^\top \), and we assume that the noisy field data is associated with a Gaussian additive noise model with a pre-estimated standard deviation \(\sigma = 2\) (Hines et al. 2014; Simpson et al. 2020). Therefore, unlike the work in Sect. 2.2 where it was relatively straightforward to discretize the three-dimensional parameter space and calculate the MLE and associated profile likelihood functions simply by searching the discretised parameter space, we now perform all calculations using a standard implementation of the Nelder-Mead numerical optimisation algorithm using simple bound constraints within the NLopt routine (Johnson 2023). Figure 5a shows the data superimposed with the MLE solution of Eq. (6) where we have \(\hat{\theta } = (0.0055, 0.341, 81.73, 0.092)^\top \) and we see that the shape of the MLE solution provides a very good match to the data. The parameter \(\lambda \) has units of \([/\text {day}]\) and the exponent \(\beta \) is dimensionless. Both K and C(0) measure coral densities in terms of the % of area covered by hard corals.

a Field data showing the time evolution of the percentage total hard coral cover, \(C^{\text {o}}(t)\) (orange discs) after some disturbance at Lady Musgrave Island monitoring site 1 (Simpson et al. 2023). The MLE solution of the Richards’ model, Eq. (6) with \(\hat{\theta } = (\hat{\lambda }, \hat{\beta }, \hat{K}, \hat{C(0)})^\top = (0.0055, 0.341, 81.73, 0.092)^\top \) is superimposed (solid cyan curve). b Full likelihood-based prediction interval (gold region) superimposed on the MLE solution (solid cyan curve). The full likelihood prediction interval is formed by using rejection sampling to find 1000 values of \(\theta \) with \(\ell \ge \ell ^* = -\Delta _{0.95,4}/2 = -4.74\), and each value of \(\theta \) is then propagated forward, via Eq. (7), to give a family of solution curves from which we construct the prediction interval (Color figure online)

Before we proceed to consider the curvature of the likelihood function we construct a gold standard full likelihood prediction interval using rejection sampling to find 5000 estimates of \(\theta \) for which \(\ell \ge \ell ^* = -\Delta _{0.95,4}/2 = -4.74\). Evaluating the solution of the Richards’ model, Eq. (7) for each identified \(\theta \) gives us 5000 traces of C(t), which we use to construct the prediction interval shown by the gold shaded interval in Fig. 5b.

To proceed with the PWA workflow, we first assess the practical identifiability of each parameter by constructing four univariate profile likelihood functions by evaluating Eq. (10) with \(\psi = \lambda \), \(\psi = \beta \), \(\psi = K\) and \(\psi = C(0)\), respectively. Profiles are constructed across a uniform mesh of the interest parameter, and the profile likelihood is computed using numerical optimisation. The four univariate profile likelihood functions are given in Fig. 7 where each univariate profile likelihood is superimposed with a vertical green line at the MLE and horizontal line at the asymptotic 95% threshold, \(\ell ^*\). The univariate profile for \(\lambda \) is flat, meaning that the data is insufficient to precisely identify \(\lambda \). In contrast, profiles for \(\beta \), K and C(0) are relatively well-formed about a single maximum value at the MLE. Together, these profiles indicate that we have a commonly encountered situation whereby we are working with a structurally identifiable mathematical model, but the data we have access to does not identify all the parameters. Instead, the data identifies some of the parameters, \(\beta \), K and C(0), whereas the data does not contain sufficient information to identify \(\lambda \). This problem is practically non-identifiable, and we will now show that the PWA workflow can be applied to this problem and that this approach yields useful information that is not otherwise obvious.

As with the logistic model with harvesting, before considering prediction we first explore the empirical, finite sample coverage properties. We generate 5000 data realisations in the same way that we generated the single data realisation in Fig. 3a, for the same fixed true parameters. For each realisation we compute the MLE and count the proportion of realisations with \(\hat{\ell } \ge \ell ^* = -\Delta _{0.95,4}/2\), where \(\hat{\ell }\) is evaluated at the true parameter values. This gives \(4780/5000 = 95.60\%\), which is close to the expected asymptotic result, regardless of the non-identifiability of \(\lambda \).

a–d Univariate profile likelihood functions for \(\lambda \), \(\beta \), K and C(0), respectively. Each Profile likelihood function (solid blue) is superimposed with a vertical green line at the MLE and a horizontal red line shoing the asymptotic 95% threshold at \(\ell ^{*} = -\Delta _{0.95,1}/2 = -1.92\), where \(\Delta _{q,n}\) refers to the qth quantile of the \(\chi ^2\) distribution with n degrees of freedom (Royston 2007), here \(n=1\) for univariate profiles. Parameter estimates and 95% confidence intervals are: \(\hat{\lambda } = 0.0055\,\) [0.0021, 0.020], \(\hat{\beta } = 0.341\,\) [0.130, 1.283], \(\hat{K} = 81.73\,\) [78.460, 85.640] and \(\hat{C(0)} = 0.092\,\) [0.0001, 1.0257] (Color figure online)

We now consider prediction by computing various bivariate profile likelihoods. Results in Fig. 8a–f show the six bivariate profile likelihoods:

-

a)

\(\psi = (\lambda , \beta )^\top \) and \(\omega = (K, C(0))^\top \),

-

b)

\(\psi = (\lambda , K)^\top \) and \(\omega = (\beta , C(0))^\top \),

-

c)

\(\psi = (\lambda , C(0))^\top \) and \(\omega = (\beta , K)^\top \),

-

d)

\(\psi = (\beta , K)^\top \) and \(\omega = (\lambda , C(0))^\top \),

-

e)

\(\psi = (\beta , C(0))^\top \) and \(\omega = (\lambda , K)^\top \),

-

f)

\(\psi = (K, C(0))^\top \) and \(\omega = (\lambda , \beta )^\top \).

Each bivariate profile likelihood is superimposed with the MLE (cyan dot), and following Simpson and Maclaren (2023) we randomly identify 500 points along the boundary where \(\ell ^{*} = -\Delta _{0.95,2}/2\), corresponding to the asymptotic 95% threshold. This approach to propagate confidence sets in parameters to confidence sets in predictions is different to the approach we took in Sect. 2.3 where we propagated all gridded parameter values for which \(\ell _p \ge \ell ^{*}\), but here we focus only on the boundary values where \(\ell _p = \ell ^{*}\). As we will later show, this approach of focusing on the boundary values is a computationally convenient way to identify parameter values which, when propagated forward to the model prediction space, forms an accurate prediction interval in terms of comparison with the gold-standard full likelihood approach. Furthermore, the shape of these identified \(\ell _p = \ell ^{*}\) contours provides important qualitative insight into parameter identifiability. For example, the contours in Fig. 8a for the \(\psi = (\lambda , \beta )^\top \) bivariate profile is a classic banana-shaped profile consistent with our previous observation that \(\lambda \) is not identifiable. Similarly, the bivariate profile for \(\psi = (\lambda , K)^\top \) shows that this contour extends right across the truncated regions of parameter space considered. Again, this is consistent with \(\lambda \) not being identified by this data set. In contrast, other bivariate profiles such as \(\psi = (K, \beta )^\top \) and \(\psi = (C(0), \beta )^\top \) form closed contours around the MLE and are contained within the bounds of the parameter space.

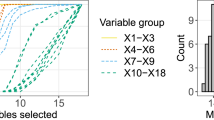

a–f Approximate contours of six bivariate profile likelihood functions for \(\psi = (\lambda , \beta )\), \(\psi = (\lambda , K)\), \(\psi = (\lambda , C(0))\), \(\psi = (\beta , K)\), \(\psi = (\beta , C(0))\) and \(\psi = (K, C(0))\), respectively, shown in the left of each subfigure. Each bivariate profile is presented adjacent to the associated prediction intervals in the right of each subfigure. Each bivariate profile likelihood is illustrated using 400 randomly identified points along the \(\ell _p^{*} = -\Delta _{0.95,2}/2 = -3.00\) contour (yellow dots) together with the MLE (cyan disc). For each bivariate profile likelihood we propagate each of the 500 values of \(\theta \) along the \(\ell ^{*} = -\Delta _{0.95,2}/2 = -3.00\) forward to give 500 traces of C(t) that are used to form the profile-wise prediction intervals in the right of each subfigure (purple shaded region). Each prediction interval is superimposed with the MLE solution (cyan solid curve) (Color figure online)

For each bivariate profile in Fig. 8 we propagate the 500 boundary values of \(\theta \) forward by solving Eq. (6) to give 500 traces of C(t) from which we can form the profile wise predictions shown in the right-most panel of each subfigure. Propagating the boundary values of \(\theta \) forward allows us to visualise and quantify how variability in pairs of parameters affects the prediction intervals. In this instance we can compare prediction intervals for identifiable pairs of parameters, such as those in Fig. 8d–e with the prediction intervals for pairs of parameters that are not identifiable, such as those in Fig. 8a, b. In this instance we see that the width of the prediction intervals dealing with identifiable target parameters appear to be, in general, narrower than the prediction intervals for target parameters that involve non-identifiable parameters.

Taking the union of the profile wise prediction intervals identified in Fig. 8 gives us the approximate prediction intervals shown in Fig. 6b where we see that the union of the profile-wise prediction intervals compares very well with the gold-standard prediction interval formed using the full likelihood approach. While this comparison of prediction intervals in Fig. 6b indicates that working with the full likelihood and the bivariate profile likelihoods leads to similar outcome interms of the overall prediction interval for C(t), this simple comparison masks the fact that working with the bivariate profile likelihood functions provides far greater insight by using the targeting property of the profile likelihood function to understand the how the identifiability/non-identifiability of different parameters impacts prediction.

3 Conclusion and Outlook

In this work we demonstrate how a systematic likelihood-based workflow for identifiability analysis, parameter estimation, and prediction, called Profile-Wise Analysis (PWA), applies to non-identifiable models. Previous applications of the PWA workflow have focused on identifiable problems where profile-wise predictions, based on a series of bivariate profile likelihood functions, provide a mechanistically insightful way to propagate confidence sets from parameter space to profile-wise prediction confidence sets. Taking the union of the profile-wise prediction intervals gives an approximate global prediction interval that compares well with the gold standard full likelihood prediction interval (Simpson and Maclaren 2023). In addition, empirical, finite sample coverage properties for the PWA workflow compare well with the expected asymptotic result. In this study we apply the same PWA workflow to structurally non-identifiable and practically non-identifiable mathematical models. Working with simple mathematical models allows us to present the PWA in a didactic format, as well as enabling a comparison of the approximate PWA prediction intervals with the gold-standard full likelihood prediction intervals. In summary we find that the PWA workflow approach can be applied to non-identifiable models in the same way that as for identifiable models. For the structurally non-identifiable models the confidence sets in parameter space are partly determined by user-imposed parameter bounds rather than being determined solely by the curvature of the likelihood function. Since the likelihood function is relatively flat we find that finite sample coverage properties exceed the expected asymptotic result. For the practically non-identifiable models we find that some parameters are well-identified by the data whereas other parameters are not. In this case the PWA workflow provides insight by mechanistically linking confidence sets in parameter space with confidence sets in prediction space regardless of the identifiability status of the parameter.

Demonstrating how the PWA workflow applies to non-identifiable mathematical models is particularly important for applications in mathematical biology because non-identifiability is commonly encountered. Previous approaches for dealing with non-identifiability have included introducing model simplifications, such as setting parameter values to certain default values (Simpson et al. 2020; Vollert et al. 2023), or seeking some combination of parameters that reduces the dimensionality of the parameter space (Gutenkunst et al. 2007; VandenHeuvel et al. 2023; Simpson et al. 2022). One of the challenges with these previous approaches is that they are often administered on a case-by-case basis without providing more general insight. In contrast, the PWA workflow can be applied to study parameter identifiability and to propagate confidence sets in parameter values to prediction confidence sets without needing to find parameter combinations to address non-identifiability, therefore providing a more systematic approach.

There are many options for extending the current study. In this work we have chosen to deal with relatively straightforward mathematical models by working with ODE-based population dynamics models that are standard extensions of the usual logistic growth model. Working with simple, canonical mathematical models allows us to compare our approximate PWA workflow prediction intervals with gold-standard full likelihood results, as well as making the point that parameter non-identifiability can arise even when working with seemingly simple mathematical models. In this work we have applied the PWA to simple models with just three or four parameters, and previous implementations have dealt with models with up to seven parameters (Murphy et al. 2022; Simpson et al. 2023), and future applications of the PWA to more complicated mathematical models with many more unknown parameters is of high interest. Unfortunately, however, under these conditions it becomes computationally infeasible to compare with predictions from the gold-standard full likelihood approach so we have not dealt with more complicated models in the current study. While we have focused on presenting ODE-based models with a standard additive Gaussian measurement model, the PWA approach can be applied to more complicated mathematical models including mechanistic models based on PDEs (Simpson et al. 2020; Ciocanel et al. 2023), as well as systems of ODEs and systems of PDEs (Murphy et al. 2024), or more complicated mathematical models that encompass biological heterogeneity, such as multiple growth rates (Banks et al. 2018). Here we have chosen to work here with Gaussian additive noise because this is by far the most commonly-used approach to relate noisy measurements with solutions of mathematical models (Hines et al. 2014), but our approach can be used for other measurement models, such as working with binomial measurement models (Maclaren et al. 2017; Simpson et al. 2024) or multiplicative measurement models that are often used to maintain positive predictions (Murphy et al. 2024), as well as correlated noise models (Lambert et al. 2023). Another point for future consideration is that the present study has dealt with prediction intervals in terms of the mean trajectories. When it comes to considering different measurement models it is also relevant to consider how trajectories for data realisations are impacted, since our previous experience has shown that different measurement models can lead to similar mean trajectories but very different data realisations (Simpson et al. 2024). In addition, these approaches can also be applied to stochastic models if a surrogate likelihood is available, as discussed in Simpson and Maclaren (2023) and implemented by Warne et al. (2024).

Data Availibility

Julia implementations of all computations are available on GitHub https://github.com/ProfMJSimpson/NonidentifiableWorkflow.

References

Acevedo MA, Marcano A, Fletcher RJ Jr (2012) A diffusive logistic growth model to describe forest recovery. Ecol Model 244:13–19. https://doi.org/10.1016/j.ecolmodel.2012.07.012

Andrews I, Stock JH, Sun L (2019) Weak instruments in instrumental variables regression: theory and practice. Annu Rev Econ 11:727–753. https://doi.org/10.1146/annurev-economics-080218-025643

Bates DM, Watts DG (1988) Nonlinear regression analysis and its applications. Wiley, New Jersey

Brown KS, Sethna JP (2003) Statistical mechanical approaches to models with many poorly known parameters. Phys Rev E 68:021904. https://doi.org/10.1103/PhysRevE.68.021904

Brown KS, Hill CC, Calero GA, Myers CR, Lee KH, Sethna JP, Cerione RA (2004) The statistical mechanics of complex signaling networks: nerve growth factor signalling. Phys Biol 1:184. https://doi.org/10.1088/1478-3967/1/3/006

Baker RE, Simpson MJ (2010) Correcting mean-field approximations for birth-death-movement processes. Phys Rev E 82:041905. https://doi.org/10.1103/PhysRevE.82.041905

Banks HT, Flores KB, Langolis CR, Serio TR, Sindi SS (2018) Estimating the rate of prion aggregate amplification in yeast with a generation and structurerd population model. Inverse Problems Sci Eng 26:257–279. https://doi.org/10.1080/17415977.2017.1316498

Bellu G, Saccomani MP, Audoly S, D’Angió L (2007) DAISY: a new software tool to test global identifiability of biological and physiological systems. Comput Methods Programs Biomed 88:52–61. https://doi.org/10.1016/j.cmpb.2007.07.002

Brauer F, Sánchez DA (1975) Constant rate population harvesting: equilibrium and stability. Theor Popul Biol 8:12–30. https://doi.org/10.1016/0040-5809(75)90036-2

Browning AP, Sharp JA, Murphy RJ, Gunasingh G, Lawson B, Burrage K, Haass NK, Simpson MJ (2021) Mathematical and statistical data analysis for the structure of tumour spheroids. eLife. 10:e73020. https://doi.org/10.7554/eLife.73020

Browning AP, Simpson MJ (2023) Geometric analysis enables biological insight from complex non-identifiable models using simple surrogates. PLoS Comput Biol 19:e1010844. https://doi.org/10.1371/journal.pcbi.1010844

Byrne HM, Chaplain MAJ (1997) Free boundary value problems associated with the growth and development of multicellular spheroids. Eur J Appl Math 8:639–658. https://doi.org/10.1017/S0956792597003264

Byrne HM, King JR, McElwain DLS, Preziosi L (2003) A two-phase model of solid tumour growth. Appl Math Lett 16:567–573. https://doi.org/10.1016/S0893-9659(03)00038-7

Casella G, Berger R (2001) Statistical inference. Duxbury, Belmont

Cassidy T (2023) A continuation technique for maximum likelihood estimators in biological models. Bull Math Biol 85:90. https://doi.org/10.1007/s11538-023-01200-0

Catchpole EA, Morgan J, Freeman SN (1998) Estimation in parameter-redundant models. Biometrika 85:462–468

Chiş O, Banga JR, Balsa-Canto E (2011) Structural identifiability of systems biology models: a critical comparison of methods. PLoS ONE 6:e27755. https://doi.org/10.1371/journal.pone.0027755

Chiş O, Villaverde AF, Banga JR, Balsa-Canto E (2016) On the relationship between sloppiness and identifiability. Math Biosci 282:147–161. https://doi.org/10.1016/j.mbs.2016.10.009

Ciocanel M-V, Ding L, Mastromatteo L, Reichheld S, Cabral S, Mowry K, Sandstede B (2023) Parameter identifiability in PDE models of fluorescence recovery after photobleaching. Bull Math Biol 86:36. https://doi.org/10.1007/s11538-024-01266-4

Cole DJ, Morgan BJ, Titterington DM (2010) Determining the parametric structure of models. Math Biosci 228:16–30. https://doi.org/10.1016/j.mbs.2010.08.004

Cole D (2020) Parameter redundancy and identifiability. CRC Press

Cooke KL, Witten M (1986) One-dimensional linear and logistic harvesting models. Math Model 7:301–340. https://doi.org/10.1016/0270-0255(86)90054-0

Cox DR (2006) Principles of statistical inference. Cambridge University Press, Cambridge

Dufour JM (1997) Some impossibility theorems in econometrics with applications to structural and dynamic models. Econom: J Econom Soc. 65:1365–1387. https://doi.org/10.2307/2171740

eAtlas (2023) Largest GBR coral reef survey data repository. Retrieved February 2024 eAtlas

Edelstein-Keshet L (2005) Mathematical models in biology. SIAM, Philadelphia

Fröhlich F, Theis FJ, Hasenauer J (2014) Uncertainty analysis for non-identifiable dynamical systems: profile likelihoods, bootstrapping and more. In: International conference on computational methods in systems biology, 61–72. Springer

Gerlee P (2013) The model muddle: in search of tumor growth laws. Can Res 73:2407–2411. https://doi.org/10.1158/0008-5472.CAN-12-4355

Greenspan HP (1972) Models for the growth of a solid tumor by diffusion. Stud Appl Math 51:317–340. https://doi.org/10.1002/sapm1972514317

Gutenkunst RN, Waterfall JJ, Casey FP, Brown KS, Myers CR, Sethna JP (2007) Universally sloppy parameter sensitivities in systems biology models. PLoS Comput Biol 3:e189. https://doi.org/10.1371/journal.pcbi.0030189

Hass H, Kreutz C, Timmer J, Kaschek D (2016) Fast integration-based prediction bands for ordinary differential equation models. Bioinformatics 32:1204–1210. https://doi.org/10.1093/bioinformatics/btv743

Hines KE, Middendorf TR, Aldrich RW (2014) Determination of parameter identifiability in nonlinear biophysical models: a Bayesian approach. J Gen Physiol 143:401. https://doi.org/10.1085/jgp.201311116

Hisano M, Connolly SR, Robbins WD (2011) Population growth rates of reef sharks with and without fishing on the Great Barrier Reef: robust estimation with multiple models. PLoS ONE 6:e25028. https://doi.org/10.1371/journal.pone.0025028

Hughes TP, Kerry JT, Connolly SR, Álvarez-Romero JG, Eakin CM, Heron SF, Gonzalez MA, Moneghetti J (2021) Emergent properties in the responses of tropical corals to recurrent climate extremes. Curr Biol 31:5393–5399. https://doi.org/10.1016/j.cub.2021.10.046

Jin W, Spoerri L, Haass NK, Simpson MJ (2021) Mathematical model of tumour spheroids with fluorescent cell cycle labels. Bull Math Biol 83:44. https://doi.org/10.1007/s11538-021-00878-4

Johnson SG (2023) The NLopt module for Julia. Retrieved February 2024 NLopt

Kot M (2003) Elements of mathematical ecology. Cambridge University Press, Cambridge

Kreutz C, Raue A, Kaschek D, Timmer J (2013) Profile likelihood in systems biology. FEBS J 280:2564–2571. https://doi.org/10.1111/febs.12276

Kreutz C, Raue A, Timmer J (2013) Likelihood based observability analysis and confidence intervals for predictions of dynamics models. BMC Syst Biol 6:120. https://doi.org/10.1186/1752-0509-6-120

Lambert B, Lei CK, Robinson M, Clerx M, Creswell R, Ghosh S, Tavener S, Gavaghan DJ (2023) Autocorrelated measurement processes and inference for ordinary differential equation models of biological systems. J R Soc Interface 2020:20220725. https://doi.org/10.1098/rsif.2022.0725

Ligon TS, Frölich F, Chiş O, Banga JR, Balsa-Canto E, Hasenauer J (2018) GenSSI 2.0: multi-experimental structural identifiability analysis of SBML models. Bioinformatics 34:1421–1423. https://doi.org/10.1093/bioinformatics/btx735

Maini PK, McElwain DLS, Leavesley DI (2004) Traveling wave model to interpret a wound-healing cell migration assay for human peritoneal mesothelial cells. Tissue Eng 10:475–482. https://doi.org/10.1089/107632704323061834

Maclaren OJ, Parker A, Pin C, Carding SR, Watson AJ, Fletcher AG, Byrne HM, Maini PK (2017) A hierarchical Bayesian model for understanding the spatiotemporal dynamics of the intestinal epithelium. PLoS Comput Biol 13(7):e1005688. https://doi.org/10.1371/journal.pcbi.1005688

Melica V, Invernizzi S, Caristi G (2014) Logistic density-dependent growth of Aurelia aurita polyps population. Ecol Model 10:1–5. https://doi.org/10.1016/j.ecolmodel.2014.07.009

Miller RS, Botkin DB (1974) Endangered species: models and predictions: simulation models of endangered populations may indicate the outcomes of various management alternatives. Am Sci 62:172–181

Murphy RJ, Browning AP, Gunasingh G, Haass NK, Simpson MJ (2022) Designing and interpreting 4D tumour spheroid experiments using mathematical models. Commun Biol 5:91. https://doi.org/10.1038/s42003-022-03018-3

Murphy RJ, Maclaren OJ, Calabrese AR, Thomas PB, Warne DJ, Williams ED, Simpson MJ (2022) Computationally efficient framework for diagnosing, understanding and predicting biphasic population growth. J R Soc Interface 19:20220560. https://doi.org/10.1098/rsif.2022.0560

Murphy RJ, Maclaren OJ, Simpson MJ (2024) Implementing measurement error models with mechanistic mathematical models in a likelihood-based framework for estimation, identifiability analysis and prediction in the life sciences. J R Soc Interface 21:20230402. https://doi.org/10.1098/rsif.2023.0402

Murray JD (2002) Mathematical biology I: an introduction, 3rd edn. Springer, New York

Pace L, Salvan A (1997) Principles of statistical inference from a Neo-Fisherian perspective. In: Advanced series on statistical science and applied probability, vol. 4. World Scientific, Singapore

Pawitan Y (2001) In all likelihood: statistical modelling and inference using likelihood. Oxford University Press, Oxford

Raue A, Kreutz C, Maiwald T, Bachmann J, Schilling M, Klingmüller U, Timmer J (2009) Structural and practical identifiability analysis of partially observed dynamical models by exploiting the profile likelihood. Bioinformatics 25:1923–1929. https://doi.org/10.1093/bioinformatics/btp358

Raue A, Kreutz C, Theis FJ, Timmer J (2013) Joining forces of Bayesian and frequentist methodology: a study for inference in the presence of non-identifiability. Philos Trans R Soc A: Math Phys Eng Sci 371:20110544. https://doi.org/10.1098/rsta.2011.0544

Raue A, Karlsson J, Saccomani MP, Jirstrand M, Timmer J (2014) Comparison of approaches for parameter identifiability analysis of biological systems. Bioinformatics 30:1440–1448. https://doi.org/10.1093/bioinformatics/btu006

Richards FJ (1959) A flexible growth function for empirical use. J Exp Biol 10:290–301. https://doi.org/10.1093/jxb/10.2.290

Royston P (2007) Profile likelihood for estimation and confidence intervals. Stand Genomic Sci 7:376–387. https://doi.org/10.1177/1536867X0700700305

Sarapata EA, de Pillis LG (2014) A comparison and cataolg of intrinsic tumor growth models. Bull Math Biol 76:2010–2024. https://doi.org/10.1007/s11538-014-9986-y

Siekmann I, Sneyd J, Crampin EJ (2012) MCMC can detect nonidentifiable models. Biophys J 103:2275–2286. https://doi.org/10.1016/j.bpj.2012.10.024

Simpson MJ, Baker RE, Vittadello ST, Maclaren OJ (2020) Parameter identifiability analysis for spatiotemporal models of cell invasion. J R Soc Interface 17:20200055. https://doi.org/10.1098/rsif.2020.0055

Simpson MJ, Browning AP, Drovandi C, Carr EJ, Maclaren OJ, Baker RE (2021) Profile likelihood analysis for a stochastic model of diffusion in heterogeneous media. Proc R Soc A: Math Phys Eng Sci 477:20210214. https://doi.org/10.1098/rspa.2021.0214

Simpson MJ, Browning AP, Warne DJ, Maclaren OJ, Baker RE (2022) Parameter identifiability and model selection for sigmoid population growth models. J Theor Biol 535:1100998. https://doi.org/10.1016/j.jtbi.2021.110998

Simpson MJ, Walker SA, Studerus EN, McCue SW, Murphy RJ, Maclaren OJ (2023) Profile likelihood-based parameter and predictive interval analysis guides model choice for ecological population dynamics. Math Biosci 355:108950. https://doi.org/10.1016/j.mbs.2022.108950

Simpson MJ, Maclaren OJ (2023) Profile-wise analysis: a profile likelihood-based workflow for identifiability analysis, estimation, and prediction with mechanistic mathematical models. PLoS Comput Biol 19:e1011515. https://doi.org/10.1371/journal.pcbi.1011515

Simpson MJ, Murphy RJ, Maclaren OJ (2024) Modelling count data with partial differential equation models in biology. J Theor Biol 580:111732. https://doi.org/10.1016/j.jtbi.2024.111732

Stark PB (2015) Constraints versus priors. SIAM/ASA J Uncertain Quant 3:586–598. https://doi.org/10.1137/130920721

Steele J, Adams J, Slukin T (1998) Modelling paleoindian dispersals. World Archaeol 30:286–305. https://doi.org/10.1080/00438243.1998.9980411

Spratt JA, von Fournier D, Spratt JS, Weber EE (1993) Decelerating growth and human breast cancer. Cancer 71:2013–2019. https://doi.org/10.1002/1097-0142(19930315)71:6%3C2013::AID-CNCR2820710615%3E3.0.CO;2-V

Swanson KR, Bridge C, Murray JD, Ellsworth EC Jr (2003) Virtual and real brain tumors: using mathematical modeling to quantify glioma growth and invasion. J Neurol Sci 216:1–10. https://doi.org/10.1016/j.jns.2003.06.001

Tsoularis A, Wallace J (2002) Analysis of logistic growth models. Math Biosci 179:21–55. https://doi.org/10.1016/S0025-5564(02)00096-2

VandenHeuvel DJ, Devlin B, Buenzli PR, Woodruff MA, Simpson MJ (2023) New computational tools and experiments reveal how geometry affects tissue growth in 3D printed scaffolds. Chem Eng J 475:145776. https://doi.org/10.1016/j.cej.2023.145776

Villaverde AF, Bongard S, Mauch K, Müller D, Balsa-Canto E, chmid J, Banga JR (2015) A consensus approach for estimating the predictive accuracy of dynamic models in biology. Computer Methods Programs Biomed 119:17–28. https://doi.org/10.1016/j.cmpb.2015.02.001

Villaverde AF, Raimúndez E, Hasenauer J, Banga JR (2023) Assessment of prediction uncertainty quantification methods in systems biology. IEEE/ACM Trans Comput Biol Bioinf 30:1725–1736. https://doi.org/10.1109/TCBB.2022.3213914

Vollert SA, Drovandi C, Monsalve-Bravo GM, Adams MP (2023) Strategic model reduction by analysing model sloppiness: a case study in coral calcification. Environ Model Softw 159:105578. https://doi.org/10.1016/j.envsoft.2022.105578