Abstract

We present a model for genome size evolution that takes into account both local mutations such as small insertions and small deletions, and large chromosomal rearrangements such as duplications and large deletions. We introduce the possibility of undergoing several mutations within one generation. The model, albeit minimalist, reveals a non-trivial spontaneous dynamics of genome size: in the absence of selection, an arbitrary large part of genomes remains beneath a finite size, even for a duplication rate 2.6-fold higher than the rate of large deletions, and even if there is also a systematic bias toward small insertions compared to small deletions. Specifically, we show that the condition of existence of an asymptotic stationary distribution for genome size non-trivially depends on the rates and mean sizes of the different mutation types. We also give upper bounds for the median and other quantiles of the genome size distribution, and argue that these bounds cannot be overcome by selection. Taken together, our results show that the spontaneous dynamics of genome size naturally prevents it from growing infinitely, even in cases where intuition would suggest an infinite growth. Using quantitative numerical examples, we show that, in practice, a shrinkage bias appears very quickly in genomes undergoing mutation accumulation, even though DNA gains and losses appear to be perfectly symmetrical at first sight. We discuss this spontaneous dynamics in the light of the other evolutionary forces proposed in the literature and argue that it provides them a stability-related size limit below which they can act.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Genome lengths span several order of magnitudes across all living species (Koonin 2008, 2009) and the origin of these variations is still unclear. Total genome size does not correlate well with organismal complexity, a paradox called “C-value paradox” in the 1970s (Thomas 1971). When it was discovered that DNA comprises not only genes but also a lot of non-coding sequences, it felt logical to rather search for a correlation between gene number and organismal complexity. There again, no obvious correlation was found, a phenomenon called the “G-value paradox” (Betrán and Long 2002; Hahn and Wray 2002). One reason could be the difficulty to define an objective and quantitative measure of organismal complexity. But even if such a good measure was available, its correlation with genome size or gene number could very well be low anyway. Indeed, genome size results from a tension between multiple evolutionary pressures, some acting at the mutation level, other at the selection level, some tending to make the genome grow, other tending to make it shrink. It is thus essential to understand how each pressure acts individually in order to disentangle their interactions.

The formalism we propose here sets a general framework for the study of the impact of mutational mechanisms on genome length with two important features: (i) genomes can undergo both small indels and large chromosomal rearrangements and (ii) genomes undergo a size-dependent number of mutations rather than limiting the mutations to one per replication. This framework allows us to give a simple condition for the existence and uniqueness of a stationary distribution for genome size in the absence of selection, and we characterize how each type of mutation impacts the spontaneous dynamics of genome size. We find that for a wide range of mutation rates, the spontaneous dynamics of genome size naturally prevents it from growing indefinitely.

We present the details of the mathematical model and the hypotheses that underlie the biological mechanisms for mutations and replication in Sect. 2. In Sect. 3, we analyze the evolution of genome size in the absence of selection. It shows that a stationary distribution exists even if duplications are twice as frequent as deletions. In Sect. 4, we use a continuous approximation to analyze further the outcome of mutations in a single generation and show that one generation is already enough for genome size to be bounded, independently from the initial sizes of the genomes. In Sect. 5, we generalize the results to various distributions for the size of mutations and to the presence of selection. As the bounds found in Sect. 4 apply for every generation, we argue that selection cannot help overcome the bounds found in Sect. 4 but determines how the population behaves with respect to these bounds. In order to illustrate how our results apply in biologically plausible situations, we propose numerical simulations of genome size evolution in mutation accumulation experiments in Sect. 6. We discuss the extensions and limits of the model, as well as the links with previous studies, in Sect. 7.

2 A Model for Genome Size Evolution

2.1 Definition of the Model

We consider four types of mutations that occur in natural genomes: small insertions and deletions (hereafter called indels), large deletions and duplications. In this study, we suppose that the impact of mutations on a genome of size \(s_0\) is as follows:

-

For small insertions, 1 to \(l_\mathrm{{ins}}\) bases are added to the genome. The size after one mutation belongs to \(\{s_0+1,\ldots ,s_0+l_\mathrm{{ins}}\}\). The transition probabilities can be defined arbitrarily, but we suppose they do not depend on the starting state \(s_0\). The state \(s_0=0\) can be escaped through small insertions.

-

For small deletions, 1 to \(l_\mathrm{{sdel}}\) bases are removed from the genome (if possible). The size after one mutation belongs to \(\{\max (0,s_0-l_\mathrm{{sdel}}),\ldots ,\max (0,s_0-1)\}\). The transition probabilities can be defined arbitrarily, but should not depend on the starting size \(s_0\). All the transitions that go below 0 are rewired to 0. If \(s_0=0\), the size after the small deletion is \(0\) with probability 1.

-

For duplications, 1 to \(s_0\) bases are added to the genome. The size after one mutation belongs to \(\{s_0+1,\ldots ,2s_0\}\). We suppose that each final state is reached with probability \(1/s_0\). If \(s_0=0\), the size after the duplication is \(0\) with probability 1.

-

For large deletions, 1 to \(s_0\) bases are removed from the genome. The size after one mutation belongs to \(\{0,\ldots ,s_0-1\}\). We suppose that each final state is reached with probability \(1/s_0\). If \(s_0=0\), the size after the large deletion is \(0\) with probability 1.

We suppose that small deletions (resp. insertions) and large deletions (resp. duplications) occur according to different mechanisms (thus at different rates). The fact that the indel distribution does not depend on \(s_0\) is not strictly necessary, but makes one part of the proof simpler (see Remark 4 in Appendix 1). The important assumption is that there is an upper bound on the size of indels (\(l_\mathrm{{ins}}\) for small insertions and \(l_\mathrm{{sdel}}\) for small deletions), but these bounds may be arbitrarily large (several kb for example). Indels can be thought as representing two kinds of events. First, replication slippage of the DNA polymerase can lead to the loss or gain of a few base pairs. Second, the transposition of transposable elements leads to the gain of up to 10 kb. They can be incorporated as small insertions in our model. However, note that here the insertion rate will be defined per base pair whereas the transposition rate is normally given per transposable element. One could imagine a more complex model where two organization levels are considered: the base pair level for some mutational mechanisms and the copy number level for other elements such as transposable elements or tandemly repeated sequences, but that would increase the number of parameters. By choosing the base pair level and expressing the mutation rate per base pair, the spontaneous rate of transposition will be higher than normally expected. Hence the pressure toward genome growth is high in the model. Therefore, the convergence toward finite sizes proved in this growth-prone model (Theorem 2) should arguably hold in the more realistic model where transposable elements replicate more moderately based on their copy number.

For large deletions and duplications, we have assumed that the number of base pairs that are lost or gained follows a uniform distribution between 1 and the current genome size. Mechanistically, if the two end points of a deletion (or a duplication) are taken at random along the genome, the resulting distribution of losses (or gains) is uniform. We use these distributions as a guideline through the paper but this is not necessary for showing that the genome size remains bounded. We will see that the proof holds for a more general family of distributions (see Sect. 5, Corollary 1). It is also important to note that these distributions reflect the spontaneous events. Estimations of the distributions of rearrangements based on fixed events (filtered by natural selection) yield exponential or, more generally, gamma distributions (Sankoff et al. 2005; Darling et al. 2008). The spontaneous distributions are generally not accessible because large events are likely to be lethal and thus not observable. Data from bacteria suggest that they could follow a lognormal law (see Sect. 6, Fig. 3).

There is evidence that large events occur in all species. In bacterial strains cultivated in laboratory conditions, amplifications and numerous large deletions through ectopic recombination have been observed, the size of single deletions reaching up to more than 200 kb under weak selection (Porwollik et al. 2004; Nilsson et al. 2005). What is more, at least locally, the deletion sizes might be uniform because of random insertions of transposable elements (Cooper et al. 2001). In the human genome, duplications and large deletions causing genetic diseases have been identified. For example, in half of the cases, the Charcot-Marie-Tooth disease is caused by a 1.4 Mb duplication. Another example is the Smith-Magenis syndrom, often associated with a partial deletion of chromosome 17, spanning from 950 kb to 9 Mb (Lupski 2007). For comparison purposes, a deletion of 9 Mb is approximately twice the size of the whole genome of E. coli K12 (4.6 Mb). Additionally, whole chromosomes, or even genomes, can be lost or duplicated because of segregation problems during cell division. Whole genome duplications have been selected frequently through the history of life and numerous genomes bear traces of such events (Jaillon et al. 2009).

For each type of mutation, we define a mutation rate expressed as a number of mutations per base pair per generation: \(\mu _\mathrm{{ins}}\) for small insertions, \(\mu _\mathrm{{sdel}}\) for small deletions, \(\mu _\mathrm{{ldel}}\) for large deletions and \(\mu _\mathrm{{dup}}\) for duplications. We call \(\mu = \mu _\mathrm{{ins}}+ \mu _\mathrm{{sdel}}+ \mu _\mathrm{{ldel}}+ \mu _\mathrm{{dup}}\) the total mutation rate per base pair per generation (note that in this paper, the term “mutations” refers to small indels and chromosomal rearrangements). For every generation, we suppose that the occurrences of mutations of type \(type\) along a genome follow independent Poisson processes with rate \(\mu _\mathrm{{type}}\), where \(\mu _\mathrm{{type}}\) is the rate of the mutation considered. The total number of mutations per generation is given by a Poisson law with parameter \(\mu s_0\), where \(s_0\) is the size of the genome considered at the beginning of the generation. As we shall see later (Sect. 5), allowing for several mutations per generation is essential when we include selection in the model. As a result of the independence of the Poisson processes, the probability that any given mutation is a small insertion (for example) is \(\mu _\mathrm{{ins}}/\mu \). We can write the mutations as transitions on \(\mathbb {N}\), the space of all possible genome sizes. We chose not to have a predefined maximal genome size to ensure that infinite growth is possible and that the convergence to a stationary distribution is not trivial.

We define two transition matrices on this space. The first, called \(\mathbf{M}_1\), describes the action of a single mutation. The second matrix, called \(\mathbf{M}_G\), gives the transitions for one generation, when all mutations have been drawn according to independent Poisson processes.

Definition 1

\(\mathbf{M}_1= ((\mathbf{M}_1)_{ij})_{i,j \in \mathbb {N}}\) where \((\mathbf{M}_1)_{ij}\) is the probability that a genome having initial size \(i\) ends up having size \(j\) after exactly one mutation. \(\mathbf{M}_1\) is a stochastic matrix. The transition rates from state \(i\) to state \(j\) are computed according to the definitions above. \(\mathbf{M}_1\) gives the evolution of genome size mutation after mutation.

Definition 2

\(\mathbf{M}_G= ((\mathbf{M}_G)_{ij})_{i,j \in \mathbb {N}\backslash \{0\}}\) where \((\mathbf{M}_G)_{ij}\) is the probability that a genome having initial size \(i\) ends up having size \(j\) after one generation. Several mutations can occur in one generation depending on the rates \(\mu _\mathrm{{sdel}}\), \(\mu _\mathrm{{ins}}\), \(\mu _\mathrm{{ldel}}\) and \(\mu _\mathrm{{dup}}\). \(\mathbf{M}_G\) gives the evolution of genome size generation after generation.

Importantly, we define \(\mathbf{M}_G\) on \(\mathbb {N}^*=\mathbb {N}\backslash \{0\}\) instead of \(\mathbb {N}\). All individuals ending up with length 0 after complete replication are automatically reassigned to the state with length 1. While 0 is not an absorbing state in the Markov chain \((\mathbb {N},\mathbf{M}_1)\) because of small insertions, it would be absorbing in the Markov chain \((\mathbb {N},\mathbf{M}_G)\) because the number of mutations per replication given by the Poisson law is 0. Therefore, if we kept this state, it would partially affect the spontaneous dynamics of genomes: even if genomes tended do grow, there would be a nonzero probability that they remain trapped in the absorbing state. In \((\mathbb {N}^*,\mathbf{M}_G)\), we made sure that there is no absorbing state (there is a nonzero probability to leave every state), thus no trivial stationary distribution.

We define a population vector \(\varvec{\nu }_t\) such that \(\forall t \in \mathbb {N}\), \(\varvec{\nu }_t\) is a probability measure on \(\mathbb {N}^*\), corresponding to the density of an infinite population. \(\varvec{\nu }_t(s)\) represents the fraction of genomes with size \(s\) at generation \(t\). We consider an arbitrary starting population \(\varvec{\nu }_0\). In the special case where all genome states confer the same probability of reproduction (no selection), the evolution of the population is given by

Because \(\mathbf{M}_G\) is stochastic and does not depend on \(t\), Eq. (1) can be interpreted as describing the evolution of the time-homogeneous Markov chain \((\mathbb {N}^*,\, \mathbf{M}_G)\) in the space of genome sizes.

2.2 Fundamental Properties of the Gain and Loss Distributions

Because mutations will accumulate with time (within a generation or along a lineage), it is essential to understand how the effects of these mutations on genome size will “add up” in order to understand whether genomes have a tendency to grow or to shrink. The model includes processes of different nature. Small indels have additive effects and the average impact of an indel does not depend on the initial genome size. For equal rates of small insertions and small deletions \((\mu _\mathrm{{ins}}=\mu _\mathrm{{sdel}})\) and for the same length distribution of insertions and small deletions \((l_\mathrm{{ins}}=l_\mathrm{{sdel}})\) in particular, the transitions due to small indels are symmetrical. On the contrary, duplications and large deletions have a multiplicative effect on genome size and the average gains and losses vary with the starting genome size \(s_0\). Explicitly, the average gains and losses are

As illustrated in Fig. 1a, for equal duplication and large deletion rates, the transitions might look symmetrical because for a given starting point, gains and losses compensate each other. However, this does not give a good indication for our process, as in fact we need to know whether a loss or a gain that was just undergone will be compensated, taking into account the fact that genome size has changed between the two mutations. In linear scale, this question is difficult to answer because average gains and losses keep changing. Indeed, as depicted on Fig. 1a, a smaller genome undergoes smaller average gains and losses. For example, if a genome undergoes a deletion followed by a duplication, the loss will be on average bigger than the gain, as the genome will have reached a smaller size between the two mutations. This remains true if it undergoes the duplication first, so we expect an average loss, even if the distributions are symmetrical and happen at the same rate. Hence, the overall average change in genome size is difficult to predict, as it is the sum of ever-changing average gains and losses.

In order to aggregate losses and gains efficiently, we need to find a scale in which the average impacts of deletions and duplications do not change with genome size, so we can simply add them up without worrying about intermediate states. This is the case in logarithmic scale (Fig. 1b), in which the gain and loss distributions become nearly invariant by translation. It becomes clear that the duplication/large deletion process is de facto biased toward shrinkage as, on average, approximately 2.59 duplications are needed to revert a deletion (see Property 1 below). For indels, the linear scale is well-adapted (Fig. 1c) but, as they are asymptotically negligible compared to duplications and large deletions (Fig. 1d), we choose logarithmic scale over linear scale.

Schematic densities of transitions in linear and logarithmic scales for two different starting states \(s_0\) (schematic transition density in gray) and \(s'_0\) (schematic transition density in black). The arrows indicate the size of the average jumps for each type of mutation. a In linear scale, for equal rates, the duplication and large deletion processes look symmetrical, but the average jumps depend on the starting point. b In logarithmic scale, the apparent symmetry is broken: there is a clear tendency to shrink and the average jumps become nearly equal for every starting point. c The linear scale is perfectly adapted for indels that occur at equal rates when the distribution does not depend on the starting size. d The logarithmic scales breaks the symmetrical properties of indels, but their impact becomes smaller with the initial size

Definition 3

We call \(S_n\) the random variable giving the state of \((\mathbb {N},\mathbf{M}_1)\) after \(n\) mutations. In probability notation, the starting point \(s_0 \in \mathbb {N}\) is written as a subscript, as in \(\mathrm{Pr}_{s_0}\left[ S_n = k\right] = (\mathbf{M}_1^n)_{s_0k}\), the probability that the size \(k \in \mathbb {N}\) is reached in \(n\) mutations, starting from \(s_0\). For simplicity, when the starting size \(s_0\) has no influence, we drop the subscript, as in \(\mathrm{Pr}_{s_0}\left[ S_{n+1} = j | S_n = i \right] = \mathrm{Pr}_{}\left[ S_{n+1} = j | S_n = i \right] = (\mathbf{M}_1)_{ij}\).

Property 1

Let \(\Delta (s)= \mathbb {E}_{}\left[ \log (S_{n+1})|S_n = s\right] -\mathbb {E}_{}\left[ \log (S_{n})|S_n = s\right] \), the average size of one-mutation jumps in logarithmic scale, starting from \(s\).

-

if the (n+1)th mutation is a large deletion, \(\Delta (s) \underset{s\rightarrow +\infty }{\longrightarrow } -1\).

-

if the (n+1)th mutation is a duplication, \(\Delta (s) \underset{s\rightarrow +\infty }{\longrightarrow } 2\log 2-1\).

-

if the (n+1)th mutation is an indel, \(\Delta (s) \underset{s\rightarrow +\infty }{\longrightarrow } 0\).

The proof of this property is given at the beginning of Appendix 1 (restated as Property 5).

3 Existence and Uniqueness of a Stationary Distribution for the Generational Markov Chain \((\mathbb {N}^*,\mathbf{M}_G)\)

Equation (1) corresponds to the Markov chain \((\mathbb {N}^*,\mathbf{M}_G)\), it describes the evolution of genome size in the absence of selection. We will show the existence and uniqueness of a stationary distribution for genome size using the following extension of Doeblin’s condition.

Theorem 1

(Doeblin’s condition in \(g\) steps) Let \(\mathbf{M}\) be a transition probability matrix on a state space \(\mathbb {S}\) with the property that, for some integer \(g \ge 1\), some state \(i_f \in \mathbb {S}\) and \(\varepsilon > 0,\, (\mathbf{M}^g)_{ii_f} \ge \varepsilon \) for all \(i \in \mathbb {S}\). Then \(\mathbf{M}\) has a unique stationary probability vector \(\varvec{\pi },\, (\varvec{\pi })_{i_f} \ge \varepsilon \). In other words, for all initial distributions \(\varvec{\mu }\), the system converges to the distribution given by \(\pi \). Mathematically,

In this definition and in the rest of this article, the norm is the 1-norm, e.g., \(\Vert \varvec{\mu }\Vert = \sum _{i \in X} \left| \varvec{\mu }_i \right| \). A proof of this theorem can be found in Stroock (2005). In this section, we will use Doeblin’s condition in \(g=2\) steps (generations) to prove the following theorem.

Theorem 2

(Stationary distribution for genome size without selection) If \((2\log 2-1) {\mu _\mathrm{{dup}}} < {\mu _\mathrm{{ldel}}}\), then the Markov chain \((\mathbb {N}^*,\mathbf{M}_G)\) has a unique asymptotic stationary probability vector \(\varvec{\nu }_{\infty }\). For any initial distribution \(\varvec{\nu }_0\), the distribution of genome sizes converges to \(\varvec{\nu }_{\infty }\). Mathematically,

Biologically, the convergence of the distribution implies that, even after a long time of evolution, genome size does not tend to infinity: an arbitrary large part of genomes is located beneath a finite size. The rate of small insertions and small deletions does not impend the convergence of the system. In particular, the rate of transposition of transposable elements can be arbitrarily large, genomes will still converge toward finite sizes. What is more, genome size remains finite for a duplication rate \(\mu _\mathrm{{dup}}\) as large as \({\simeq }2.6\) higher than the rate of large deletions \(\mu _\mathrm{{ldel}}\).

The remainder of this section is dedicated to the proof of this theorem and can be skipped without impeding the understanding of the results.

To prove that Doeblin’s condition is met, we have to evaluate the generational transitions in \(\mathbf{M}_G\). In order to do this, we need to study the single mutation level first. In the mutational Markov chain \((\mathbb {N},\mathbf{M}_1)\), every state communicates with its neighbors. More precisely, it is possible to gain exactly one base pair through small insertions or duplications and to lose exactly one base pair by small deletions or large deletions. By combining these transitions, we can imagine a mutational path starting from any initial genome size to any final size, in a finite number of mutations. At the generation level, these mutational paths may exactly occur with some positive probability given by the Poisson processes. Thus all the states in \((\mathbb {N}^*,\mathbf{M}_G)\) can transit to any state in \(\mathbb {N}^*\) in one step (generation).

3.1 Concerns to Overcome

Even though all the transitions in \((\mathbb {N}^*,\mathbf{M}_G)\) are strictly positive, Doeblin’s condition is not trivially met. Because \(\mathbb {N}^*\) is infinite, the probability associated to some transitions may, and will, become arbitrarily small, so there is no trivial lower bound \(\varepsilon > 0\) as demanded in Doeblin’s condition. The infinite size of the matrix is the main concern here, because a number of classical theorems (such as Perron–Frobenius) do not apply. What is more, there is no absorbing state, so there is no trivial stationary distribution. The important property is that in logarithmic scale, duplications and large deletions overcome small indels and become invariant by translation (Property 1). The difficulty of the proof of Theorem 2 is that this behavior is only asymptotic (it is a good description for large genomes).

3.2 Sketch of the Proof

We will subdivide the space of genome states in two subspaces, a finite subspace \(X_\mathrm{{small}} \subset \mathbb {N}^*\) of genomes smaller than a specific size \(\tilde{s}\), and an infinite subspace \(X_\mathrm{{large}} \subset \mathbb {N}^*\) of genomes larger than \(\tilde{s}\). We will show that a final genome size \(s_f \le \tilde{s}\) can be reached in \(g=2\) generations with a probability greater than a certain \(\varepsilon > 0\), regardless of the starting genome size \(s_0\).

-

If \(s_0 \in X_\mathrm{{small}}\), this condition is easily met because the subspace is finite. This will be formally stated in Lemma 1.

-

If \(s_0 \in X_\mathrm{{large}}\), the probability to reach \(s_f\) in two generations is at least the probability to reach a state in \(X_\mathrm{{small}}\) at the first generation and then to reach \(s_f\) from there. For the first generation, we will show that as long as duplications are not much more frequent than deletions (\(\mu _\mathrm{{dup}}< 2.59\mu _\mathrm{{ldel}}\) approximately), large genomes tend to become smaller, reach a smaller size in a finite time and stay around this smaller size. This will be formally stated in Lemma 2. We will also use Chebyshev’s inequality to show that the number of mutations in one generation is indeed sufficient to shrink below \(\tilde{s}\). For the second generation, we will use again Lemma 1.

Lemma 1

Suppose we have a non-empty and finite subset of possible genome sizes \(X \subset \mathbb {N}^*\). Then there is \(s_f \in X\) and \(\varepsilon _1 > 0\) such that \((\mathbf{M}_G)_{s_is_f} \ge \varepsilon _1\) for all \(s_i \in X\).

Proof

Pick any \(s_f \in X\). As \(X\) is finite, the transition probabilities toward \(s_f\) are bounded below by a real value \(\varepsilon _1\). As just seen in the main text, every state is accessible by any other state in \((\mathbb {N}^*,\mathbf{M}_G)\) with strictly positive probability, thus \(\varepsilon _1>0\).\(\square \)

This lemma shows that Doeblin’s condition applies trivially for \(\mathbf{M}_G\) if we restrict genome size a priori. However, in our model, genomes may be arbitrarily large. We will show that no matter how large they are, they will reach the same finite set of states ultimately under the condition of the theorem.

Lemma 2

If \((2\log 2-1) {\mu _\mathrm{{dup}}} < {\mu _\mathrm{{ldel}}}\), there exists \(\delta >0\) and a size threshold \(\tilde{s}\in \mathbb {N}\) such that

-

(a)

\(\forall n \ge 0, \forall s \ge \tilde{s}, \quad \mathbb {E}_{}\left[ \log (S_{n+1})|S_n = s\right] \le \mathbb {E}_{}\left[ \log (S_{n})|S_n = s\right] - \delta \).

-

(b)

\(\exists \varepsilon ' >0,\) \(\forall n \ge 0,\)

$$\begin{aligned} \mathrm{Pr}_{s_0}\left[ S_n \le \tilde{s}\right] \ge {\left\{ \begin{array}{ll} \varepsilon ' &{} \text {if}\; s_0 \le \tilde{s}\\ \varepsilon '\left( 1-\frac{\log s_0}{\log \tilde{s}+ n \delta }\right) &{} \text {if}\; s_0 > \tilde{s}\end{array}\right. } \end{aligned}$$

(where \(\log \) is arbitrarily extended by \(\log 0 = 0\))

Details of the proof for Lemma 2 are presented in Appendix 1. This proof is done by looking at the general behavior for large genomes (with size \({>}\tilde{s}\)) and return times for small genomes (with size \({\le }\tilde{s}\)). It involves several steps. We begin by looking at the impact of each mutation on genome size in the scale adapted to the mutations that scales most with genome size. As stated in Property 1, asymptotically, large deletions and duplications overcome local mutations and determine the spontaneous behavior. The balance between duplications and deletions decides whether large genomes will tend to grow or to shrink. If \((2 \log 2 - 1)\mu _\mathrm{{dup}}< \mu _\mathrm{{ldel}}\), the tendency is toward smaller genomes and we can find a threshold \(\tilde{s}\) above which genomes shrink by at least some (relative) amount \(\delta \) on average. We show that the probability for genomes to get below the \(\tilde{s}\) threshold at least once progressively tends to 1, when the number \(n\) of mutations increases (parenthesized part of the lower part of claim \((b)\)).

In parallel, we show that a fixed fraction of genomes starting below \(\tilde{s}\) remains always there. To do so, we show that starting from \(\tilde{s}\) and aggregating with \(\tilde{s}\) the states below \(\tilde{s}\) represents a worst-case scenario in terms of genome growth. We study the time of first returns to \(\tilde{s}\) in this worst-case scenario. We prove that the expected value of the first return time is finite and, using a theorem based on return times, derive the upper part of claim \((b)\). This also implies that no matter how far above \(\tilde{s}\) the genome size starts, once it is reached, a fixed fraction remains there forever (explaining the presence of \(\varepsilon '\) in the two parts of claim \((b)\)).

As detailed below, we complete the demonstration of Theorem 2 by showing that for large genomes, the number of mutations in one generation is indeed sufficient to shrink below \(\tilde{s}\) (at least asymptotically) by linking the mutation chain \((\mathbb {N},\mathbf{M}_1)\) to the generation chain \((\mathbb {N}^*,\mathbf{M}_G)\).

Proof (of Theorem 2)

We call \(G_t\) the random variable that describes the state of \((\mathbb {N}^*,\mathbf{M}_G)\) at generation \(t\). Let \(\tilde{s}\in \mathbb {N}\) be the critical size given by Lemma 2. We subdivide the space of genome states into the finite subset \(X_\mathrm{{small}}=\{ 1 \le s \le \tilde{s}\}\) and the infinite subset \(X_\mathrm{{large}} = \{ s > \tilde{s}\} = \mathbb {N}\backslash X_\mathrm{{small}}\). We will show that some final genome size \(s_f \le \tilde{s}\) can be reached in \(g=2\) generations with a probability greater than \(\varepsilon > 0\), regardless of the starting genome size \(s_0\).

Case \(s_0 \le \tilde{s}\, (s_0 \in X_\mathrm{{small}})\): The probability to reach size \(s_f\) after two generations is at least the probability to reach \(s_f\) and then to stay on \(s_f\). We can apply Lemma 1 to \(X_\mathrm{{small}}\)

This is true in particular if \(s_0=s_f\).

Case \(s_0> \tilde{s}\, (s_0 \in X_\mathrm{{large}})\): The probability to reach \(s_f \le \tilde{s}\) in two generations is at least the probability to reach a state in \(X_\mathrm{{small}}\) at the first generation and then to reach size \(s_f\) from there. We begin by considering the first step, that is, \(\mathrm{Pr}_{}\left[ G_{t+1} \le \tilde{s}| G_t = s_0\right] \). This transition probability is obtained by summing the probability transitions after \(n\) mutations, \((S_n)_{n \in \mathbb {N}}\), weighted by the probability that \(n\) mutations occur within one generation. The number of mutations \(N\) follows a Poisson distribution with parameter \(\mu s_0\).

According to Lemma 2,

In order for this relation to be meaningful, we look for \(n^*(s_0)\) such that \(\forall n \ge n^*(s_0),\, \mathrm{Pr}_{s_0}\left[ S_n \le \tilde{s}\right] \ge \varepsilon ' /2\). We find \(n^*(s_0) = (2\log s_0 - \log \tilde{s}) / \delta \). This is the number of mutations that are needed to make sure that the probability of going below \(\tilde{s}\) at least once is more than \(1/2\). By dropping the first terms of the sum, we obtain

thus

When \(s_0\) goes to \(+\infty \), we have \((2\log s_0 - \log \tilde{s}) / \delta \ll \mu s_0 = \mathbb {E}_{}\left[ N\right] \). What is more, \(\sigma _{}\left[ N\right] = \sqrt{\mu s_0}\). This means that when \(s_0\) tends to infinity, \((2\log s_0 - \log \tilde{s}) / \delta \) is below \(\mathbb {E}_{}\left[ N\right] \) by a number of standard deviations that tends to infinity. The one-sided Chebyshev inequality implies that \(\mathrm{Pr}_{}\left[ N \ge (2\log s_0 - \log \tilde{s}) / \delta \right] \) tends to 1. Because this nonzero limits exists and because the probability is always strictly positive, it is necessarily bounded below by some positive number. Multiplication by \(\varepsilon '/2\) does not change that fact, hence

Once a state \(s_j \in X_\mathrm{{small}}\) is reached, we apply the relation given by Lemma 1 for the second generation with the same \(s_f\) as in (3)

Taking \(\varepsilon = \varepsilon _1 \times \min \{\varepsilon _1,\varepsilon _2\}\) gives the desired lower bound for Doeblin’s condition in two steps for \((\mathbb {N}^*,\mathbf{M}_G)\).\(\square \)

4 Quantitative Bounds for the Distribution Using a Continuous Approximation

Theorem 2 shows that without selection, the size distribution converges toward a specific distribution \(\varvec{\nu }_\infty \). From a theoretical point of view, the quantiles of this distribution give bounds that indicate where the population will asymptotically be found. However, the proof gives very little quantitative information about the location of these bounds. In order to be more precise, we need to take into account the second moments of the transition distributions. The proof of Theorem 2 relies on the fact that, asymptotically, the effect of indels become negligible compared to deletions and duplications (Property 1) already for the first-order moments. We use this remark to simplify the computations of the second moments and the bounds for the quantiles of the size distribution by considering a simplified and continuous model.

Definition 4

We consider a genome with starting size \(s_0 \in \mathbb {R}_+^*\) that undergoes only independent large deletions and duplications. We call \(\hat{S}_{n} \in \mathbb {R}_+^*\) the genome length after \(n\) mutations. The size evolution is given by \(\hat{S}_{n+1} = \lambda _n \hat{S}_n\), where \(\lambda _n \hookrightarrow \mathcal {U}([0,1])\) if the \(n\)th mutation is a deletion and \(\lambda _n \hookrightarrow \mathcal {U}([1,2])\) if it is a duplication. In log scale, \(\log \hat{S}_{n+1} = \log \lambda _n + \log \hat{S}_n\). We call \(J_n = \log \lambda _n\). As in the general case, we assume that in one generation the number of deletions and duplications are Poisson-distributed with parameters \(\mu _\mathrm{{ldel}}s_0\) and \(\mu _\mathrm{{dup}}s_0\) and follow independent Poisson processes. We call \(\hat{S}_f\) the genome size at the end of the generation.

Property 2

Because the mutations follow independent processes, the \((J_n)_{n\in \mathbb {N}}\) are independent, identically distributed and do not depend on \(\hat{S}_{n}\). What is more,

Similarly we can obtain the second moment (thus the standard deviation)

Proof

The results follow from integration by parts, namely \( \mathbb {E}_{}\left[ \log \lambda _n | \mathrm{del.}\right] = \int _0^1\log x dx = -1,\, \mathbb {E}_{}\left[ \log \lambda _n | \mathrm{dup.}\right] = \int _1^2\log x dx = 2\log 2 -1 \) and for the second moment \( \int _0^1\log ^2 x dx = 2,\; \int _1^2\log ^2 x dx = 2(1 - \log 2)^2\).\(\square \)

Property 3

\(\mathbb {E}_{s_0}\left[ \log \hat{S}_n\right] = \log s_0 + n\mathbb {E}_{}\left[ J_n\right] \) and \(\sigma _{s_0}\left[ \log \hat{S}_n\right] = \sqrt{n} \sigma _{}\left[ J_n\right] \) by the independence of the jumps. The Central Limit Theorem states that, asymptotically, \(\log \hat{S}_n\) is normally distributed.

Under the continuous approximation, we have a simple jumping process that is space-homogeneous in log scale. As the two first moments are finite, it asymptotically behaves like biased diffusion because of the central limit theorem. The standard deviation increases more slowly than the mean is shifted so the expected value gives a good description of the whole distribution. The bias condition is very simple: if \(\mathbb {E}_{}\left[ J_n\right] <0\), the genome shrinks on average, if \(\mathbb {E}_{}\left[ J_n\right] >0\), it grows on average with every mutation. The shrinkage condition is the same as for the discrete model: genomes asymptotically shrink if and only if \(\mu _\mathrm{{ldel}}> (2 \log 2 - 1) \mu _\mathrm{{dup}}\).

The main difference with the discrete case is that the relative amount by which genomes shrink is identical whatever the starting position (even for small genomes) and it can lead \(\log \hat{S}_n\) to have negative values, which was not possible in the discrete space. This means that once the genome becomes small (in the sense of the proof of Theorem 2) it keeps getting smaller so there is no need to prove that it will remain small (as we did in Lemma 2). This is because there are no local mutations, which could have a strong effect on small genomes.

Thus, for large genomes, the behavior of the discrete Markov chain \((\mathbb {N},\mathbf{M}_1)\) is close to the continuous approximation and is similar to biased diffusion. When genomes become smaller, the bias may become weaker because of indels and discretization effects. On the border, the state \(s = 0\) is a wall that cannot be crossed. Therefore, the discrete system is composed of a wall on one side, biased diffusion on the infinite side and an uncharacterized behavior in between. If the diffusion is biased toward the wall \((\mu _\mathrm{{ldel}}> (2 \log 2 - 1) \mu _\mathrm{{dup}})\), it is easy to imagine that the population will end up next to the wall, even though its exact final position is partly determined by small indels.

We now compute the distribution of genome size in the continuous model after one generation by weighting the \((\log \hat{S}_n)_{n\in \mathbb {N}}\) with the Poisson distribution.

Property 4

By definition, for all \(x \in \mathbb {R}\),

The expected value is \(\mathbb {E}_{s_0}\left[ \log \hat{S}_f\right] = \log s_0 + s_0 ((2\log 2 -1 )\mu _\mathrm{{dup}}- \mu _\mathrm{{ldel}})\) and the standard deviation is \( \sigma _{s_0}\left[ \log \hat{S}_f\right] =\sqrt{s_0} \sqrt{2(\log 2 - 1)^2\mu _\mathrm{{dup}}+2\mu _\mathrm{{ldel}}}.\)

The proof is given in Appendix 1. We introduce now a parameter \(k\) that will be used to compute the fraction of the population located beyond the mean of genome size after one generation plus \(k\) standard deviations. We begin by computing the latter quantity depending on \(s_0\).

Lemma 3

Let \(k \ge 1\) and \(Q_k(s_0) = \exp \left( \mathbb {E}_{s_0}\left[ \log \hat{S}_f\right] + k \sigma _{s_0}\left[ \log \hat{S}_f\right] \right) \). We call \(A = \mu _\mathrm{{ldel}}- (2\log 2 -1 )\mu _\mathrm{{dup}}\) and \(B=\sqrt{2(\log 2 - 1)^2\mu _\mathrm{{dup}}+2\mu _\mathrm{{ldel}}}\), so that \(Q_k(s_0) = \exp (\log s_0 - As_0 + kB\sqrt{s_0})\). If \((2\log 2-1)\mu _\mathrm{{dup}}<\mu _\mathrm{{ldel}}\),

-

1.

\(Q_k(s_0)\) reaches a maximum for

$$\begin{aligned} s_{0}^{\max ,(k)} = \frac{1}{A}+ k^2\frac{B^2}{8A^2}\left( 1+\sqrt{1+\frac{16A}{B^2}}\right) \end{aligned}$$ -

2.

\(Q_k(s_0)=s_0\) for a unique value \(s_\mathrm{{fixed}}^{(k)} = k^2B^2/A^2 \ge s_0^{\max ,(k)}\).

The proof is straightforward and detailed in Appendix 1. The general shape of the curve and the points \(s_0^{\max ,(k)}\) and \(s_\mathrm{{fixed}}^{(k)}\) are depicted on Fig. 2 in the case were \(k=1\) and \(\mu _\mathrm{{dup}}=\mu _\mathrm{{ldel}}=10^{-6}\). By using Chebyshev’s inequality, \(Q_k\) can be related to the quantiles of the distribution and we obtain the following proposition.

Upper bound for the median of the distribution (plot of \(Q_k\) with \(k=1,\,\mu _\mathrm{{dup}}=\mu _\mathrm{{ldel}}=10^{-6}\)). The \(x\) axis is the starting size and the \(y\) axis gives an upper bound for the median after one generation. \(s_\mathrm{{fixed}}^{(1)}\) is the point above which the probability that the genome shrinks is more than 0.5, \(s_{0}^{\max ,(1)}\) is the starting point from which growth seems to be the most likely. A genome starting from \(s_{0}^{\max ,(1)}\) may grow above \(s_\mathrm{{fixed}}^{(1)}\), but it will probably shrink in the next step. The gray area indicates this accessible but transient set of states

Proposition 1

Suppose \((2\log 2-1)\mu _\mathrm{{dup}}<\mu _\mathrm{{ldel}}\) and \(k\ge 1\).

-

1.

There is a bound \(\tilde{s}_{\max }^{(k)} \) such that

$$\begin{aligned} \forall s_0 \in \mathbb {R}_+^*, \quad \mathrm{Pr}_{s_0}\left[ \hat{S}_f \le \tilde{s}_{\max }^{(k)}\right] \ge 1 - \frac{1}{1+k^2} \end{aligned}$$In other words, we can find a threshold \(\tilde{s}_{\max }^{(k)}\) independent from \(s_0\) below which an arbitrary large part of the distribution can be found after one generation of the deletion/duplication process.

-

2.

Let

$$\begin{aligned} \tilde{s}_\mathrm{{fixed}}^{(k)} = k^2 \frac{2(\log 2 - 1)^2\mu _\mathrm{{dup}}+2\mu _\mathrm{{ldel}}}{(\mu _\mathrm{{ldel}}- (2\log 2 -1 )\mu _\mathrm{{dup}})^2} \end{aligned}$$we have

$$\begin{aligned} \forall s_0 \ge \tilde{s}_\mathrm{{fixed}}^{(k)}, \quad \mathrm{Pr}_{s_0}\left[ \hat{S}_f \le \tilde{s}_\mathrm{{fixed}}^{(k)}\right] \ge 1 - \frac{1}{1+k^2} \end{aligned}$$

Proof

The proposition is a restatement of Lemma 3 by using Cantelli’s inequality (or one-sided Chebyshev inequality). It states that

thus

The first part of the proposition follows by taking \(\tilde{s}_{\max }^{(k)}=Q_k(s_{0}^{\max ,(k)})\) where \(s_{0}^{\max ,(k)}\) is defined as in Lemma 3. The second part is obtained with \(\tilde{s}_\mathrm{{fixed}}^{(k)}=s_\mathrm{{fixed}}^{(k)}\) in Lemma 3 and by noting that \(Q'_k(s_0)<0\) for all \(s_0 \ge s_\mathrm{{fixed}}^{(k)}\), so that \(Q_k(s_0)\le Q_k(s_\mathrm{{fixed}}^{(k)})\).\(\square \)

Lemma 3 and Proposition 1 introduce two sequences of bounds that depend on a parameter \(k\). \(\tilde{s}_{\max }^{(k)}\) gives bounds for the quantiles of the distribution at generation \(t+1\) that work for every starting genome and thus any starting distribution at time \(t\). For \(k=1\), the probability to get below \(\tilde{s}_{\max }^{(1)}\) is at least 0.5 for every step. If the probabilities are seen as population densities, \(\tilde{s}_{\max }^{(1)}\) gives an upper bound for the median of the population at any step (except maybe the starting step). Increasing \(k\) increases the bound but gives even more restrictive conditions on the localization of the population at any step. For example, for \(k=2\), we have \(\tilde{s}_{\max }^{(2)} > \tilde{s}_{\max }^{(1)}\) but instead, we know that 80 % of the population is below \(\tilde{s}_{\max }^{(2)}\) at any step.

Note that this would remain true even if the individuals were selected and then mutated. Proposition 1 says that no matter which individuals are selected (i.e., no matter the set of starting sizes \(s_0\)), the offspring will most likely be located below \(\tilde{s}_{\max }^{(1)}\) at the next step. Figure 2 helps finding out the outcome of the selection-mutation process. To predict the impact of the mutations on size, one can interpret the \(x\)-axis as being the starting size and the \(y\)-axis as giving some likelihood about the final size. Contrary to what could be naively expected, a fitness function that would select the genomes around \(s_{0}^{\max ,(1)}\) would lead to the largest genomes at the next generation, whereas a fitness function that would strongly select very large genomes would lead to much smaller genomes, as these large genomes are unable to maintain their size, even for one generation.

We also illustrate bound \(\tilde{s}_\mathrm{{fixed}}^{(k)}\), which is not a bound that works for any starting distribution but whose expression is much simpler than that of \(\tilde{s}_{\max }^{(k)}\). Genomes starting from \(\tilde{s}_\mathrm{{fixed}}^{(1)}\) have a probability higher than 0.5 to shrink, showing that they are already strongly unstable. This shrinkage probability is far worse for genomes larger than \(\tilde{s}_\mathrm{{fixed}}^{(1)}\). The analysis shows that it is possible for genomes starting around \(s_{0}^{\max ,(k)}\) to increase above \(\tilde{s}_\mathrm{{fixed}}^{(k)}\) but due to the definition of \(\tilde{s}_\mathrm{{fixed}}^{(k)}\), this behavior can only be transient. \(\tilde{s}_\mathrm{{fixed}}^{(k)}\) is a plausible upper bound for the average behavior, even when selection is applied. In the simple case where \(\mu _\mathrm{{ldel}}=\mu _\mathrm{{dup}}=\mu _\mathrm{{dupdel}}\), we have

More generally, if duplication and deletion rates are mechanically linked such that they are proportional to each other, say \(\mu _\mathrm{{dup}}= \lambda \mu _\mathrm{{ldel}}\) with \(\lambda < 1 / (2\log 2 -1)\), then

These relations suggest that the bound on genome size would be roughly inversely proportional to the rate of large deletions and duplications.

This analysis was done for a simplified model (continuous approximation) but it is arguably a good approximation for large genomes (genomes for which indels are negligible as in the definition of \(\tilde{s}\)), even in the discrete model involving all types of mutations. We expect that Proposition 1 can be obtained, with some variations, for the discrete case by showing not only that the first moment is biased (see \(\delta \) in Lemma 2), but also that the standard deviation increases more slowly with the number \(n\) of mutations than the first moment decreases. In this case, this would mean that, regardless of the selection applied to the genomes, we can capture an arbitrarily large part of the distribution in a finite domain at any time step.

5 Generalizations and Interpretations

Theorem 2 shows that there is an asymptotic distribution \(\varvec{\nu }_{\infty }\) for genome sizes in the absence of selection. The convergence of the distribution implies that an arbitrary large part of genomes is located beneath a finite size. What is more, the convergence does not depend on the rate of small insertions (possibly including transposable elements) and small deletions. For uniform distributions of duplications and large deletions, the distribution of genome sizes converges for equal rates of duplication and large deletions and even if duplications are twice as frequent and deletions. However, as mentioned in the presentation of the model, the uniform distribution for the sizes of duplications and large deletions is not necessary for the proof. In the first subsection below, we give a more general condition for the existence of a stationary distribution that encompasses a larger family of distributions. In the remainder of the section, we relate the results obtained to a more general model with selection in the case of an infinite population and their implications for a finite population.

5.1 Extension of Theorem 2 to More General Distributions for Duplications and Deletions

We have initially hypothesized these distributions to be uniform for mathematical convenience, but all the proofs remain true under conditions similar to Property 1. All the results hold if the expected change of genome size for small indels, for duplications and large deletions converges to a constant in a specific scale given by a positive and increasing function \(f\). This is the idea of invariance by translation illustrated in Fig. 1. In the general case, the existence of a stationary distribution is also determined by a condition on duplication and deletion rates (and in extreme cases indel rates) that depends only on the average size of jumps.

Corollary 1

(Generalization of Theorem 2) Suppose we have distributions of duplications, large deletions and indels, such that there exists a positive and increasing scaling function \(f\) that verifies the following conditions. For \(\Delta (s) = \mathbb {E}_{}\left[ f(S_{n+1}) - f(S_n) | S_n=s\right] \):

-

if the (n+1)th mutation is a deletion, \(\Delta (s) \underset{s\rightarrow +\infty }{\longrightarrow }\delta _\mathrm{{ldel}} \).

-

if the (n+1)th mutation is a duplication,\(\Delta (s) \underset{s\rightarrow +\infty }{\longrightarrow } \delta _\mathrm{{dup}}\).

-

if the (n+1)th mutation is an small insertion, \(\Delta (s) \underset{s\rightarrow +\infty }{\longrightarrow } \delta _\mathrm{{ins}}\).

-

if the (n+1)th mutation is an small deletion, \(\Delta (s) \underset{s\rightarrow +\infty }{\longrightarrow } \delta _\mathrm{{sdel}}\).

where \(\delta _\mathrm{{ldel}}\le 0,\, \delta _\mathrm{{dup}}\ge 0,\, \delta _\mathrm{{ins}}\ge 0\) and \(\delta _\mathrm{{sdel}}\le 0\) are constants among which at least one is nonzero.

Then the Markov chain \((\mathbb {N}^*,\mathbf{M}_G)\) has a unique asymptotic stationary probability vector \(\varvec{\nu }_{\infty }\) if

If the duplications and deletions scale more rapidly than indels, \(\delta _\mathrm{{ins}}=\delta _\mathrm{{sdel}}=0\) and the condition simplifies to

except for \(\delta _\mathrm{{dup}}=0\), in which case there is always a stationary distribution.

The proof is the same as for Theorem 2, by replacing \((2\log 2-1)\mu _\mathrm{{dup}}-\mu _\mathrm{{ldel}}\) by the left hand-side of the new condition (inequality (6)), in particular for the definition of the global \(\delta \) that incorporates the average impact of all mutations.

If the duplication and deletion processes are of multiplicative nature (but not necessarily uniform), \(f = \log \) is the natural choice in the formula above, as used throughout the manuscript. If the width of the deletion and duplication distribution does not scale proportionally to \(s_0\), another choice of \(f\) has to be made, such that the expected change tends to a constant. In the extreme case where the average jump size already tends to a constant in normal scale \((f=id_\mathbb {N})\) for both deletions and duplications, the proof still works but the condition also incorporates the indel rates and their mean jump size. However we expect that, if the impact of indels is bounded as in our model, they will become negligible and they will not appear in the condition for realistic duplication and deletion distributions.

For example, we can consider all distributions of quasi-multiplicative nature. Corollary 1 typically applies if the losses and gains considered are relative. Roughly speaking, this happens if the distribution of gains and losses for some fraction of the genome is always the same (e.g., there is always the same probability to lose less than 5, 10% or any other fraction of the genome, no matter the initial size). In this case, the relation above applies no matter whether the distribution is exponentially decreasing, uniform, multimodal, gamma, etc. What is more, if the relative gains and losses are symmetrical (in linear scale), there will be a stationary distribution for equal duplication and deletion rates and also for duplication rates moderately higher than deletion rates (the exact relation has to be computed for each distribution).

If the second moment converges to a finite value in the scale given by \(f\), as is the case for multiplicative or quasi-multiplicative distributions, the line of analysis given in Sect. 4 can be used. In other words, according to Chebyshev’s inequality, it will be possible to find bounds that will hold for every step of the generation process. As a result, as discussed below, selection will not be able to lead to infinite genome growth in these cases also. We expect that the proof can be extended for a second moment that does not converge in the scale given by \(f\), but is bounded. In this case, the analysis may become more technical and the bounds given by Chebyshev’s inequality very weak, but would still imply that selection cannot lead to infinite genome growth.

5.2 Interpretation for General Genome Structures in the Presence of Selection

We did not introduce selection in the model presented until here. In this section, we propose a more general framework for which our results hold but for which selection can be based on any feature of the genome or of the population. Let \(\Omega \) be the space of all genome states corresponding to different genome architectures, e.g., all sequences of base pairs drawn from {A,C,G,T}. We define a population vector \(\pi _t\) such that \(\forall t \in \mathbb {N}\), \(\pi _t\) is a probability measure on \(\Omega \), corresponding to the density of an infinite population. We consider an arbitrary starting population \(\pi _0\). For every generation, we assume that we can distinguish the selection process from the mutation process. We call \(\mathrm{Sel}_t\) the selection operator. Selection may change with time. The outcome of selection has to be a probability measure whose support is expected to be identical to the support of \(\pi _t\). Once selection has operated, we suppose that the population is mutated according to an operator \(M\). Again, the outcome is a probability measure.

The density of the population at step \(t+1\) in the space \(\Omega \) of genome states is given by

We suppose that genomes undergo the same mutations as those studied until here (small indels, large deletions, duplications) and other mutations that do not change genome size but the detailed architecture (e.g., point mutations, inversions, translocations). Our results apply to this model under the following condition:

Projection condition We suppose that there is a projection \(\varphi :\Omega \rightarrow \mathbb {N}\) that is compatible with transitions induced by mutations. Here, the projection is \(\mathrm{size}:\Omega \rightarrow \mathbb {N}\) that associates a genome with its size in number of base pairs. For two genomes \(\omega _1,\omega _2 \in \Omega \) such that \(\mathrm{size}(\omega _1)=\mathrm{size}(\omega _2)=s_0\), the probability that \(\omega _1\) or \(\omega _2\) end up having some size \(s_f\in \mathbb {N}\) after one mutation is exactly the same, even if \(\omega _1\) and \(\omega _2\) have a different detailed architecture (e.g., the position or the number of genes). The transitions in the genome size space depend only on the initial genome size.

In this case, the transitions in terms of size are given by the matrix \(\mathbf{M}_G\) (the additional mutations do not change genome size and thus they do not change the mutation paths in \((\mathbb {N},\mathbf{M}_1))\). To link the models formally, we define a projection \(\mathbf{size}_{\varvec{\pi }}\) to obtain, from the population density \(\pi _t\) in \(\Omega \), the population density in the space \(\mathbb {N}^*\) of genome sizes:

In matrix notation, the density of the population at step \(t+1\) in the space \(\mathbb {N}^*\) of genome sizes is given by

In the absence of selection, the selection operator is the identity function, thus

which is the equation studied in this article. According to Theorem 2, in the absence of selection, the marginal distribution of genome size of the population \(\pi _t\) is going to converge if duplications are not more than 2.6 times more frequent than large deletions. However, we cannot show that the marginal distribution of genome size will converge in the presence of selection. Instead, we can characterize upper bounds for its quantiles.

If we consider the general model in Eq. (7) or its projection on size in Eq. (8), we see that selection occurs prior to mutations. The selection operator returns a vector \(\mathrm{Sel}_t(\pi _t)\) for which the bounds found in Proposition 1 will hold. For example, by choosing \(k\) for \(\tilde{s}_{\max }^{(k)}\), we can say that for all \(t \ge 1\), at most \(100/(1+k^2) \%\) of the population contained in \(\pi _{t+1}\) will have a size larger than \(\tilde{s}_{\max }^{(k)}\), no matter how selection operated. Because we can find a bound that works at any generation, the spontaneous mechanism we have just described cannot be overcome by selection.

As these are upper bounds, they impose an upper limit to viable genome size but do not describe accurately where a population will be able to stabilize. This will be determined by the selection operator and the details of all mutation processes. Without further details on the selection operator, it is impossible to say whether the population will reach a stationary distribution and how far from the bounds they will evolve. Nonetheless, Fig. 2 already gives some intuition about the interactions between the selection and the mutation operators, as the size of the selected genomes has a strong impact on the outcome. As a result, selection determines how the population stabilizes with respect to these bounds and how close to the bounds individuals eventually get.

5.3 Generalization to a Finite Population

The remarks for an infinite population hold to a lesser extent for finite-sized populations of independently mutating individuals because the results of Sects. 3 and 4 hold for a single individual from a probabilistic point of view. If we decouple the selection and the mutation steps, we can have information on the probabilities of genomes being below some bound using Proposition 1.

Basically, the idea is the same as in the infinite population case, except that the evolution of individuals is stochastic and Eq. (7) is not a good description for this kind of processes. However, if we assume that for generation \(t,\, I_t\) individuals belonging to \(\Omega \) survived, we can use the conclusions of Proposition 1. As explained for an infinite population, Proposition 1 allows us to choose a threshold \(\tilde{s}_{\max }^{(k)}\) such that the probability that any of the \(I_t\) mutating genomes goes above \(\tilde{s}_{\max }^{(k)}\) is at most \(1/(1+k^2)\), where \(k\) can be chosen arbitrarily large. Because the individuals mutate independently, an upper bound on the number of genomes that are above \(\tilde{s}_{\max }^{(k)}\) at any generation is given by a binomial distribution \(\mathcal {B}(I_t,1/(1+k^2))\). The proportion of genomes supposed to be above \(\tilde{s}_{\max }^{(k)}\) is the same on average for all \(I_t \in \mathbb {N}: 100/(1+k^2) \%\), but the standard deviation around this proportion becomes smaller when \(I_t\) increases. When \(I_t\) tends to infinity, we find the same result as in the infinite case.

6 Numerical Illustration and Practical Implications for the Study of Real Genomes

The theoretical results presented above may have important practical implications for the study of real genomes. To illustrate this, we present here a quantitative, numerical example of spontaneous genome size evolution, with parameter values taken from experimental data. Specifically, we simulated a “mutation accumulation” experiment on a genome made up of a single 4-Mb chromosome—which is roughly the size of the genomes of Escherichia coli and Salmonella enterica—, with spontaneous mutation rates and event size distribution derived from experimental data in both species (see details below). Mutation accumulation experiments aim at unraveling the rates and spectrum of spontaneous mutations. To do so, several lineages are propagated independently in the laboratory, starting from the same ancestor. Each lineage experiences regular, frequent single-cell population bottlenecks, so that natural selection cannot operate efficiently and evolution proceeds almost only by genetic drift. A key example of a mutation experiment with E. coli can be found in Kibota and Lynch (1996). Here, we mimicked an ideal mutation accumulation experiment, by simulating the evolution of genome size in 10,000 independent lines, all starting with an initial size of \(4\times 10^6\) bp, during 1,000 generations, with a single-cell bottleneck at each generation. This is an “ideal” experiment in the sense that, contrary to the real laboratory experiments, we do not have to let the population grow between two bottlenecks or to pick up only the viable organisms. In the simulations, natural selection cannot act at all. No mutation will be filtered by natural selection, thereby allowing for a direct access to the spontaneous rates and spectrum of mutations.

In these simulated lineages, four types of mutations could occur: segmental duplications, large deletions, small insertions and small deletions. At each replication, the number of each type of event was drawn from a Poisson law with mean \(\mu _\mathrm{{type}}s\), where \(\mu _\mathrm{{type}}\) is the per-bp rate of this type of mutation and \(s\) is the size of the genome before the replication. The events were performed in a random order.

The rate of large deletions \(\mu _\mathrm{{ldel}}\) was set to \(3.778\times 10^{-9}\) per bp, which yields, in the initial state, 0.017 deletions per genome per generation, as measured in Salmonella enterica (Nilsson et al. 2005). To simulate an unbiased process, we also set the segmental duplication rate \(\mu _\mathrm{{dup}}\) to \(3.778\times 10^{-9}\) per bp. The rates of small insertions and small deletions were both set to twice the rate of large deletions and duplications. The size of a small indel was uniformly drawn between 1 and 40 bp, regardless of the current chromosome size.

For the size of duplications and large deletions, we tested two size distributions: (i) the uniform distribution between 1 and the current chromosome size, as introduced in Sect. 2, and (ii) a lognormal distribution truncated at the current chromosome size, as explained below. We obtained this lognormal distribution by fitting the size distribution of 127 rearrangements observed in evolving cultures of Escherichia coli (D. Schneider, personal communication) and Salmonella enterica (Nilsson et al. 2005; Sun et al. 2012). Only experimentally verified duplications, deletions and inversions were considered. Figure 3 (left) shows the empirical cumulative distribution function for this dataset, as well as the fitted lognormal distribution. Its mean is 10.1214 in natural logarithmic scale (4.3957 in \(log_{10}\) scale) and its standard deviation is 2.5602 (1.1119 in \(log_{10}\) scale). This lognormal distribution is a rather accurate representation of the events occurring on the initial 4 Mb genome. No experimental data are available, however, for the size distribution of the events occurring in mutant E. coli or Salmonella with a significantly different genome size. More generally, we do not know how the size of the spontaneous events scales with genome size in a particular species. We decided to simulate here the weakest possible scaling: instead of varying the parameters of the lognormal distribution with genome size, we used the same lognormal distribution \(\ln \mathcal {N}(10.1214, 2.5602)\) for any genome size, except that this distribution was truncated at the current chromosome size. Indeed, a segmental duplication (resp. deletion) cannot duplicate (resp. delete) more than the complete chromosome. In practice, an event size was drawn from the distribution \(\mathcal {N}(10.1214, 2.5602)\) with independent redraw as long as the event size exceeded the current genome size. As shown by Fig. 3 (right), this truncation induces a variation of the mean event size with the size of the genome. This variation is much weaker than the one of the uniform distribution, but it will prove important in the outcome of the simulations.

Left Empirical cumulative distribution function for the size (in bp) of for 107 experimentally verified rearrangements, observed in evolving cultures of Escherichia coli (D. Schneider, personal communication) and Salmonella enterica (Nilsson et al. 2005; Sun et al. 2012). The green curve is the distribution function of the lognormal distribution \(\ln \mathcal {N}(10.1214, 2.5602)\). Right Expected size of a duplication or a deletion as a function of genome size, if the size is drawn from a uniform distribution between 1 and the size of the genome (dotted line), or if the size is drawn from the lognormal distribution \(\ln \mathcal {N}(10.1214, 2.5602)\) truncated at the size of the genome (solid line)

In terms of Corollary 1, for the uniform distribution, the scaling function \(f\) is the logarithm. In this logarithmic scale, we know from Property 1 that \(\delta _\mathrm{{ldel}} = -1,\, \delta _\mathrm{{dup}} = 2\log 2 - 1\), and \(\delta _\mathrm{{ins}} = \delta _\mathrm{{sdel}}=0\). With \(\mu _\mathrm{{dup}} = \mu _\mathrm{{ldel}} = 3.778\times 10^{-9}\), we obtain \(\mu _\mathrm{{dup}}\delta _\mathrm{{dup}} + \mu _\mathrm{{ldel}}\delta _\mathrm{{ldel}} + \mu _\mathrm{{ins}}\delta _\mathrm{{ins}} + \mu _\mathrm{{sdel}}\delta _\mathrm{{sdel}} \simeq -2.32\times 10^{-9}\). This negative value tells us that genome size will reach a unique asymptotic stationary distribution, and hence that it will not grow infinitely.

For the truncated lognormal distribution, the scaling function \(f\) is simply the identity function, thus we stay in the normal scale. For infinite genome sizes, the truncated lognormal distribution converges to the complete lognormal distribution. Hence, the event size tends to a constant for infinite genomes, and we have \(\delta _\mathrm{{ldel}} = - e^{10.1214+\frac{2.5602^2}{2}} \simeq - 6.59\times 10^5,\, \delta _\mathrm{{dup}} \simeq + 6.59\times 10^5\). For the small events, we have \(\delta _\mathrm{{ins}} = + 20\) and \(\delta _\mathrm{{sdel}} = - 20\). With \(\mu _\mathrm{{dup}} = \mu _\mathrm{{ldel}}\) and \(\mu _\mathrm{{ins}} = \mu _\mathrm{{sdel}}\), we obtain \(\mu _\mathrm{{dup}}\delta _\mathrm{{dup}} + \mu _\mathrm{{ldel}}\delta _\mathrm{{ldel}} + \mu _\mathrm{{ins}}\delta _\mathrm{{ins}} + \mu _\mathrm{{sdel}}\delta _\mathrm{{sdel}} = 0\). With this null value, we cannot predict the asymptotic behavior of genome size. The simulations below will show, however, that the variation of event size for “small” genome sizes (Fig. 3) suffices to induce a shrinkage.

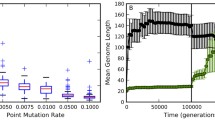

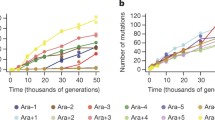

Indeed, after 1,000 generations without selection, genome shrinkage was observed in 99 % of the lines for the uniform distribution, and in 61 % of the lines for the truncated lognormal distribution, although (i) the rates of duplications and large deletions were identical, (ii) the rates of small insertions and small deletions were identical, (iii) the event size distributions were also identical for gains and losses. Both proportions are significantly different from 0.5 (\(\chi ^2\) test with 1 degree of freedom, both p values \(< 2\times 10^{-16}\)). The median DNA loss was \(-3.8\times 10^6\) bp in the uniform case, and \(-6.3\times 10^5\) bp in the lognormal case. In both cases, this DNA loss is statistically different from 0 (Wilcoxon signed rank test, both p values \(< 2\times 10^{-16}\)). As shown by Table 1, this shrinkage was neither due to a bias in the small indel counts nor to an excess of deletions over duplications, but to deletions being on average longer than duplications. By looking at these polymorphisms only, one might be tempted to conclude that the size distribution of the spontaneous events is different for the deletions and for the duplications, while we know here that both types of events actually had the same size distribution for any starting genome size.

To further illustrate this spontaneous mutational dynamics toward shrinkage—despite equal rates of duplication and deletion, and despite identical spontaneous size distributions for both event types—, we propagated 100 mutation accumulation lines, again without any selection, until they reached the size of \(10^4\) bp, starting from \(4\times 10^6\) bp as previously. As shown by Fig. 4, for all lines, this shrinkage of two orders of magnitude occurs in less than 75,000 generations for the uniform case, and in less than 250,000 generations for the truncated lognormal case.

Spontaneous evolution of genome size in 100 mutation accumulation lines, under the uniform distribution (left) or under the truncated lognormal distribution (right) for the size of the rearrangements. The thick line indicates the median of the 100 lines. The simulations were stopped when genome size became inferior to \(10^4\) (which would correspond to fewer than 10 genes in a typical bacterial genome)

The practical implication of this spontaneous dynamics toward shrinkage is that mutation accumulation experiments where deletions are longer than duplications should be interpreted with caution, as this pattern can be obtained with identical event size distributions. Moreover, additional simulations with higher duplication rates indicate that the median change in genome size after 1,000 generations remains negative for \(\mu _\mathrm{{dup}} =1.2 \mu _\mathrm{{ldel}}\) for the truncated lognormal distribution, or for \(\mu _\mathrm{{dup}} = 2 \mu _\mathrm{{ldel}}\) for the uniform distribution (in agreement with Theorem 2). Thus, a net decrease in genome size in a mutation accumulation experiment neither implies that the deletion rate is higher than the duplication rate, nor that spontaneous deletions tend to be longer than spontaneous duplications for a given starting point. It might just as well come from a variation of event count and event size with chromosome size.

7 Discussion

In this section, we discuss the relevance of our two main results: (i) the condition for the existence of a stationary distribution for genome size, and (ii) the upper bounds for the quantiles of the genome size distribution at any time step. Finally, we investigate the link between our results and current theories on genome size evolution.

7.1 On the condition for the existence of a stationary distribution for genome size

The model and the results presented here allow to determine the global spontaneous behavior of genome size in a variety of conditions. It allows for a large family of distributions for indels, duplications and deletions. Conveniently, the condition for the existence of an asymptotic distribution only depends on the first moment of these distributions in a scale adapted to the distribution that scales most with genome size (usually large deletions and/or duplications). The most difficult part may be finding the scaling function, but once it is found, the global dynamics is dictated by a very simple condition on the mutation rates, given in Corollary 1. Usually, the condition will only involve the ratio of duplication over large deletion rates. When the condition is met and no selection is applied, the genomes converge toward an asymptotic stationary distribution. From a biological point of view, this means that genomes do not grow indefinitely. As our proof highlights, there is a threshold above which they will undergo systematic shrinkage.

Finding the appropriate scale might be one of the most important challenges but can also be very simple for some families of distributions. For example, if the duplication and deletion processes are of quasi-multiplicative nature, this condition is obtained in logarithmic scale, as illustrated throughout the paper and in Fig. 1. Biologically speaking, the strength of this scaling is that it breaks apparent symmetries. In the case illustrated in Fig. 1, it might look as if the duplications and deletions are symmetrical because for every starting position, losses and gains compensate each other. Naively, one might conclude that the process is unbiased. However, from the genome’s point of view as a walker along a Markov chain, symmetry means reversibility of jumps: do the losses and gains that I undergo when I move compensate each other? If there is a scaling in which the average size of jumps does not depend (asymptotically) on the starting position, we can answer the question. In the rescaling shown in Fig. 1, it is clear that the process that we thought unbiased at first, is in fact biased toward losses. Indeed, after a loss is undergone, the average size of rearrangement diminishes with genome size, such that the average loss will always be larger than the next average gain.

As shown in Sect. 6, even if we choose a function that does not scale asymptotically (average gains and losses due to rearrangements tend to a constant for large genomes in normal scale), a scaling in gains and losses for small genomes will still induce a bias toward losses. The process based on the truncated lognormal distributions for rearrangements that we illustrated might not converge in theory for equal duplication and deletion rates (Corollary 1 does not apply) but, in practice, the bulk of genomes will still undergo shrinkage because average losses are larger than average gains, notwithstanding the symmetry of the rearrangement distributions.

From a mathematical point of view, our model displays similarities with models for the so-called mini- and micro satellite loci, where a short sequence of DNA is highly repeated (Charlesworth et al. 1994). Mathematical models were designed for the dynamics of the number of repeats in microsatellites, incorporating additive mechanisms similar to indels in our model and/or multiplicative mechanisms due to recombination. In models incorporating only additive effects, the dynamics of the number of elements is relatively simple as it reflects the difference between average gains and losses (Krüger and Vogel 1975; Walsh 1987; Moody 1988; Basten and Moody 1991; Caliebe et al. 2010). However, this implies that selection is necessary to prevent the number of repeats from going down to one or from going up to infinity. When multiplicative effects are introduced, the dynamics become less trivial, as noted in the present study. Distributions of the number of elements can converge around a finite number of elements in situations where average gains seemed to be higher than average losses (Stephan 1987; Falush and Iwasa 1999).

Adding additive effects (such as indels) to a multiplicative model does not change the existence of the stationary distribution but changes some important features. Corollary 1 implies that when multiplicative and additive processes are at work, we can have non-trivial stationary distributions without the need for selection. This is particularly true in non-intuitive cases, when the apparent bias is toward gains, but the rescaled analysis predicts average loss. In those cases, the mode of the distribution cannot be trivially predicted to lie at the origin or to diverge. For example, Falush and Iwasa (1999) predicted a non-trivial stationary distribution for the number of repeats, where most individuals own more than one or two copies. Compared to the microsatellite models, the strength of our result is that it can be used to predict the exact threshold condition with simple calculations, without having to make any approximation. Moreover, in most models where the size of the mutation scales with the number of elements, each mutation type is allowed to occur at most once per reproduction, which may be appropriate for the study of microsatellites, but unrealistic for large genomes.

The interplay between additive and multiplicative processes might also be underlined in the study of genome reduction. Mira et al. (2001) have argued that indel biases and losses through large deletions are good candidates to explain the reductive genome evolution undergone by some bacterial species. Several models and papers have used this general idea but have focused only on biases in the small indel patterns (Petrov 2000; Leushkin et al. 2013). Our numerical examples (Sect. 6) show that such a bias is not necessary for observing genome shrinkage, as it suffices that there be a positive scaling in the size of rearrangements. A symmetrical distribution of gains and losses, or even a slight bias toward gains will result in genome size tending to decrease with time (Fig. 4). The “rearrangement bias” (due to the scaling of rearrangements) and the indel bias are two different biases that might be complementary. According to our model and simulations, the rearrangement bias would set an upper size to genome size but would not necessarily lead to the convergence toward a particular value below this bound (notably in the presence of selection), while the indel bias could not lead to infinite growth but would strongly affect the convergence of genome size within stable genomes (see discussion and figure in Sect. 7.3).

7.2 On the Upper Bounds for the Quantiles of the Genome Size Distribution

In the model presented here, we took into account the possibility of several mutations in one generation. This does not change the condition for a stationary distribution given by the first-order dynamics, but highlights a fragility of large genomes, which we quantified by calculating upper bounds for the quantiles of the genome size distribution after one generation. Contrary to the mutational Markov chain, the generational Markov chain shows that there is a non-negligible probability that the genomes located above a given threshold, no matter how large they may be, collapse. Strikingly, as already hinted in Lemma 2 and the proof of Theorem 2, once the threshold is crossed, the probability of collapsing even increases with genome size, because the average loss due to the increasing number of mutations grows more rapidly than the genome size.

In order to quantify this phenomenon further, including the second moment gave a more precise picture. In the case of multiplicative processes, the standard deviation is small compared to the average shifts, such that the average behavior identified in Sect. 3 gives in fact a good picture of the fate of large genomes. This is illustrated in Fig. 2, where it appears that after one generation, the genomes which manage to maintain or increase their size with probability higher than 0.5 are restricted to a finite domain.

The existence of these bounds has two main implications. First, they are not bounds on the stationary distribution but on the whole process. This means that even if a selective force is applied, they are verified for every surviving individual. In other words, selection cannot help overcome these bounds, even if the selection operator favors the largest genomes. On the contrary, as depicted on Fig. 2, very large genomes that may be selected are going to be the least robust, as they become even smaller than the rest of the population and their genome is going to undergo major shuffling. This result is in contrast with other models for evolution with selection on a unbounded fitness space, which showed that the growth speed of the first moment of the fitness distribution converges to a positive constant, even when a density-dependent cut-off prevented the fittest individuals to replicate (Tsimring et al. 1996; Brunet and Derrida 1997). We do not need here such a cut-off to prevent infinite genome growth, even if selection would favor the largest genomes. To analyze more rigorously our model in the presence of selection, a possibility would be to consider a Markov process in the space of measures on \(X\), as in the evolutionary models proposed by Fleming and Viot (1979), Champagnat et al. (2006).