Abstract

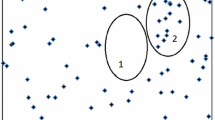

Software testing is an approach that ensures the quality of software through execution, with the goal of revealing failures and other problems as quickly as possible. Test case selection is a fundamental issue in software testing, and has generated a large body of research, especially with regard to the effectiveness of random testing (RT), where test cases are randomly selected from the software’s input domain. In this paper, we revisit three of our previous studies. The first study investigated a sufficient condition for partition testing (PT) to outperform RT, and was motivated by various controversial and conflicting results suggesting that PT sometimes performed better than RT, and sometimes worse. The second study aimed at enhancing RT itself, and was motivated by the fact that RT continues to be a fundamental and popular testing technique. This second study enhanced the fault detection effectiveness of RT by making use of the common observation that failure-causing inputs tend to cluster together, and resulted in a new family of RT techniques: adaptive random testing (ART), which is random testing with an even spread of test cases across the input domain. Following the successful use of insights into the contiguity of failure-causing regions to develop ART, we conducted a third study on how to make use of other characteristics of failure-causing inputs to develop more effective test case selection strategies. This third study revealed how best to approach testing when certain characteristics of the failure-causing inputs are known, and produced some interesting and important results. In revisiting these three previous studies, we explore their unexpected commonalities, and identify diversity as a key concept underlying their effectiveness. This observation further prompted us to examine whether such a concept plays a role in other areas of software testing, and we conclude that diversity appears to be one of the most important concepts in the field of software testing.
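The idea of ART as "random testing with an even spread of test cases" can be illustrated with a minimal sketch. The abstract does not name a specific algorithm, so the code below assumes one common variant, fixed-size-candidate-set ART, on a two-dimensional unit-square input domain. The function names (`fscs_art`, `dist2`), the candidate-set size `k`, and the `is_failure` predicate are all hypothetical choices made for illustration, not details taken from the paper.

```python
import random


def dist2(a, b):
    """Squared Euclidean distance between two 2-D points."""
    return (a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2


def fscs_art(is_failure, n_tests, k=10, seed=0):
    """Sketch of fixed-size-candidate-set ART on [0, 1) x [0, 1).

    is_failure: hypothetical predicate standing in for "run the program
                on this input and check whether it fails".
    n_tests:    maximum number of test cases to execute.
    k:          number of random candidates generated per step (assumed value).

    Returns (tests_used, executed); tests_used is None if no failure was found.
    """
    rng = random.Random(seed)
    executed = []
    for _ in range(n_tests):
        if not executed:
            # The first test case is chosen purely at random.
            test = (rng.random(), rng.random())
        else:
            # Generate k random candidates and keep the one whose nearest
            # already-executed test case is farthest away. This pushes new
            # test cases into unexplored parts of the input domain.
            candidates = [(rng.random(), rng.random()) for _ in range(k)]
            test = max(
                candidates,
                key=lambda c: min(dist2(c, e) for e in executed),
            )
        executed.append(test)
        if is_failure(test):
            return len(executed), executed
    return None, executed


def in_block(point):
    """Hypothetical contiguous failure region covering 4% of the domain."""
    x, y = point
    return 0.4 <= x < 0.6 and 0.4 <= y < 0.6


if __name__ == "__main__":
    used, tests = fscs_art(in_block, n_tests=500)
    print("test cases executed before first failure:", used)
```

The candidate selection step is where the "even spread" comes from: plain RT would execute the first candidate it draws, whereas this sketch prefers the candidate farthest from everything already tried, which is intended to help when failure-causing inputs form a contiguous block such as the hypothetical `in_block` region.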

Cite this article

Chen, T.Y., Kuo, FC., Towey, D. et al. A revisit of three studies related to random testing. Sci. China Inf. Sci. 58, 1–9 (2015). https://doi.org/10.1007/s11432-015-5314-x

DOI: https://doi.org/10.1007/s11432-015-5314-x