Abstract

The rapid development of deep learning has brought great convenience to production and daily life. However, the massive amount of labeled data required to train models limits its further development. Few-shot learning, which can obtain a high-performance model from only a few samples in a new task, provides a solution for the many scenarios that lack samples. This paper reviews recent few-shot learning algorithms and proposes a taxonomy for them. First, we introduce the few-shot learning task and its significance. Second, according to their implementation strategies, we divide recent few-shot learning methods into five categories: data augmentation-based methods, metric learning-based methods, parameter optimization-based methods, external memory-based methods, and other approaches. Next, we investigate the applications of few-shot learning methods and summarize them in three directions: computer vision, human-machine language interaction, and robot actions. Finally, we analyze existing few-shot learning methods by comparing their evaluation results on miniImageNet, and conclude the paper.
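Few-shot methods evaluated on miniImageNet are conventionally scored on N-way K-shot episodes (for example 5-way 1-shot), where a model sees K labeled support samples for each of N novel classes and must label held-out query samples. As a minimal sketch, not the authors' method, the code below samples one such episode and classifies the queries with a nearest-prototype rule in the spirit of metric learning-based methods such as prototypical networks. It assumes embeddings are already computed: a fixed synthetic feature space stands in for a learned embedding network, and the helper names `sample_episode` and `prototype_classify` are hypothetical.

```python
import numpy as np


def sample_episode(features, labels, n_way, k_shot, q_queries, rng):
    """Draw one N-way K-shot episode (support set + query set).

    features: (num_samples, dim) array; labels: (num_samples,) int array.
    Labels in the returned episode are remapped to 0..n_way-1.
    """
    classes = rng.choice(np.unique(labels), size=n_way, replace=False)
    support_x, support_y, query_x, query_y = [], [], [], []
    for new_label, c in enumerate(classes):
        # Shuffle this class's sample indices, then split into support/query.
        idx = rng.permutation(np.flatnonzero(labels == c))[: k_shot + q_queries]
        support_x.append(features[idx[:k_shot]])
        query_x.append(features[idx[k_shot:]])
        support_y += [new_label] * k_shot
        query_y += [new_label] * q_queries
    return (np.concatenate(support_x), np.array(support_y),
            np.concatenate(query_x), np.array(query_y))


def prototype_classify(support_x, support_y, query_x, n_way):
    """Nearest-prototype classifier: each class prototype is the mean of its
    K support embeddings; each query takes the label of the closest
    prototype under squared Euclidean distance."""
    prototypes = np.stack(
        [support_x[support_y == c].mean(axis=0) for c in range(n_way)])
    # (num_query, n_way) matrix of squared distances to every prototype.
    dists = ((query_x[:, None, :] - prototypes[None, :, :]) ** 2).sum(axis=-1)
    return dists.argmin(axis=1)


# Toy data (an assumption for illustration): 20 classes with 30 samples each,
# scattered around well-separated random centres in a 16-d "embedding" space.
rng = np.random.default_rng(0)
centres = rng.normal(scale=5.0, size=(20, 16))
labels = np.repeat(np.arange(20), 30)
features = centres[labels] + rng.normal(size=(labels.size, 16))

sx, sy, qx, qy = sample_episode(features, labels, n_way=5, k_shot=1,
                                q_queries=15, rng=rng)
pred = prototype_classify(sx, sy, qx, n_way=5)
accuracy = (pred == qy).mean()
print(f"5-way 1-shot episode accuracy: {accuracy:.2f}")
```

In an actual metric learning-based method, `features` would come from an embedding network trained episodically, and the reported accuracy would typically be averaged over many sampled test episodes rather than a single one.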


References

Krizhevsky A, Sutskever I, Hinton G. ImageNet classification with deep convolutional neural networks. Adv Neural Infor Process Syst, 2012, 25: 1097–1105

Cai G R, Yang S M, Du J. Convolution without multiplication: A general speed up strategy for CNNs. Sci China Tech Sci, 2021, 64: 2627–2639

Zeiler M D, Fergus R. Visualizing and understanding convolutional networks. In: Proceedings of the European Conference Computer Vision. Zurich, 2014. 818–833

Simonyan K, Zisserman A. Very deep convolutional networks for large-scale image recognition. In: Proceedings of the IEEE Conference Computer Vision and Pattern Recognition. 2014

Geng Q, Zhou Z, Cao X. Survey of recent progress in semantic image segmentation with CNNs. Sci China Inf Sci, 2018, 61: 051101

Szegedy C, Liu W, Jia Y, et al. Going deeper with convolutions. In: Proceedings of the IEEE Conference Computer Vision and Pattern Recognition. 2015. 1–9

He K M, Zhang X Y, Ren S Q, et al. Deep residual learning for image recognition. In: Proceedings of the IEEE Conference Computer Vision and Pattern Recognition. 2016. 770–778

Huang G, Liu Z, Maaten L V D, et al. Densely connected convolutional networks. In: Proceedings of the IEEE Conference Computer Vision and Pattern Recognition. 2017. 2261–2269

Hochreiter S, Schmidhuber J. Long short-term memory. Neural Computation, 1997, 9: 1735–1780

Cho K, Merrienboer B V, Bahdanau D, et al. On the properties of neural machine translation: Encoder-decoder approaches. arXiv: 1409.1259

Jiang Y H, Yu Y F, Huang J Q. Li-ion battery temperature estimation based on recurrent neural networks. Sci China Tech Sci, 2021, 64: 1335–1344

Shi Y, Yao K, Tian L, et al. Deep LSTM based feature mapping for query classification. In: Proceedings of the Conference North American Chapter of the Association for Computational Linguistics. San Diego, 2016. 1501–1511

Zhang H, Cisse M, Dauphin Y N, et al. Mixup: Beyond empirical risk minimization. arXiv: 1710.09412

Yun S, Han D, Oh S J, et al. Cutmix: Regularization strategy to train strong classifiers with localizable features. In: Proceedings of the IEEE International Conference on Computer Vision. 2019. 6023–6032

Inoue H. Data augmentation by pairing samples for images classification. arXiv: 1801.02929

Goodfellow I J, Pouget-Abadie J, Mirza M, et al. Generative adversarial networks. Adv Neural Infor Process Syst, 2014, 2: 2672–2680

Cubuk E D, Zoph B, Mane D, et al. Autoaugment: Learning augmentation strategies from data. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2019

Dai W, Yang Q, Xue G, et al. Boosting for transfer learning. In: Proceedings of the International Conference on Machine Learning. Corvallis, 2007. 193–200

Yao Y, Doretto G. Boosting for transfer learning with multiple sources. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2010. 1855–1862

Ben-David S, Blitzer J, Crammer K, et al. Analysis of representations for domain adaptation. In: Proceedings of the International Conference on Neural Information Processing Systems. Vancouver, 2006. 137–144

Pan S J, Kwok J T, Yang Q. Transfer learning via dimensionality reduction. In: Proceedings of the AAAI Conference on Artificial Intelligence. 2008. 677–682

Williams C, Bonilla E V, Chai K M. Multi-task Gaussian process prediction. In: Proceedings of the International Conference on Neural Information Processing Systems. Daegu, 2007. 153–160

Gao J, Fan W, Jiang J, et al. Knowledge transfer via multiple model local structure mapping. In: Proceedings of ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. Las Vegas, 2008. 283–291

Mihalkova L, Mooney R. Transfer learning with Markov logic networks. In: Proceedings of the ICML-06 Workshop on Structural Knowledge Transfer for Machine Learning. Pittsburgh, 2006

Davis J, Domingos P. Deep transfer via second-order Markov logic. In: Proceedings of the Annual International Conference on Machine Learning. Montreal, Canada, 2009. 217–224

Kwitt R, Hegenbart S, Niethammer M. One-shot learning of scene locations via feature trajectory transfer. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2016. 78–86

Wang Y, Girshick R, Hebert M, et al. Low-shot learning from imaginary data. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2018. 7278–7286

Wang Y, Xu C, Liu C, et al. Instance credibility inference for few-shot learning. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2020. 12833–12842

Dixit M, Kwitt R, Niethammer M, et al. Aga: Attribute guided augmentation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2017. 7455–7463

Schwartz E, Karlinsky L, Shtok J, et al. Delta-encoder: An effective sample synthesis method for few-shot object recognition. In: Proceedings of the International Conference on Neural Information Processing Systems. Montreal, 2018. 2845–2855

Liu B, Wang X, Dixit M, et al. Feature space transfer for data augmentation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2018. 9090–9098

Cheny Z, Fuy Y, Zhang Y. Multi-level semantic feature augmentation for one-shot learning. IEEE Trans Image Process, 2019, 28: 4594–4605

Hariharan B, Girshick R. Low-shot visual recognition by shrinking and hallucinating features. In: Proceedings of the IEEE International Conference on Computer Vision. 2017. 3037–3046

Li K, Zhang Y, Li K, et al. Adversarial feature hallucination networks for few-shot learning. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2020

Gao H, Shou Z, Zareian A, et al. Low-shot learning via covariance-preserving adversarial augmentation networks. In: Proceedings of Neural Information Processing Systems. Montreal, 2018. 975–985

Antoniou A, Storkey A, Edwards H. Augmenting image classifiers using data augmentation generative adversarial networks. In: Proceedings of International Conference on Artificial Neural Networks. Kuala Lumpur, 2018. 594–603

Chen Z, Fu Y, Wang Y, et al. Image deformation meta-networks for one-shot learning. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2019. 8672–8681

Wang Y, Gonzalez-Garcia A, Berga D, et al. MineGAN: Effective knowledge transfer from GANs to target domains with few images. In: Proceedings of the 2020 IEEE Conference on Computer Vision and Pattern Recognition. 2020. 9329–9338

Zhang H, Zhang J, Koniusz P. Few-shot learning via saliency-guided hallucination of samples. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2019. 2765–2774

Ren M, Triantafillou E, Ravi S, et al. Meta-learning for semi-supervised few-shot classification. In: Proceedings of International Conference on Learning Representations. Vancouver, 2018

Douze M, Szlam A, Hariharan B, et al. Low-shot learning with large-scale diffusion. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2018. 3349–3358

Yu Z, Chen L, Cheng Z, et al. TransMatch: A transfer-learning scheme for semi-supervised few-shot learning. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2020. 12853–12861

Laffont P Y, Ren Z, Tao X. Transient attributes for high-level understanding and editing of outdoor scenes. ACM Trans Graph, 2014, 33: 1–11

Patterson G, Xu C, Su H. The SUN attribute database: Beyond categories for deeper scene understanding. Int J Comput Vis, 2014, 108: 59–81

Song S, Lichtenberg S P, Xiao J. Sun RGB-D: A RGB-D scene understanding benchmark suite. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2015. 567–576

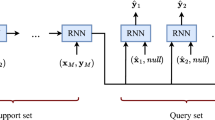

Vinyals O, Blundell C, Lillicrap T, et al. Matching networks for one shot learning. In: Proceedings of International Conference on Neural Information Processing Systems. Barcelona, 2016. 3637–3645

Krizhevsky A, Hinton G. Learning multiple layers of features from tiny images. Handbook of Systemic Autoimmune Diseases. Elsevier, 2009

Wah C, Branson S, Welinder P, et al. The Caltech-UCSD Birds-200–2011 Dataset. Computation and Neural Systems Technical Report. California Institute of Technology, Pasadena, 2011

Griffin G, Holub A, Perona P. Caltech-256 object category dataset. Computation and Neural Systems Technical Report. California Institute of Technology, Pasadena, 2007

Hariharan B, Girshick R. Low-shot visual recognition by shrinking and hallucinating features. In: Proceedings of the IEEE International Conference on Computer Vision. 2017. 3018–3027

Lake B, Salakhutdinov R, Gross J, et al. One shot learning of simple visual concepts. In: Proceedings of annual meeting of the cognitive science society. Boston, 2011

Lecun Y, Bottou L, Bengio Y. Gradient-based learning applied to document recognition. Proc IEEE, 1998, 86: 2278–2324

Koniusz P, Tas Y, Zhang H, et al. Museum exhibit identification challenge for the supervised domain adaptation and beyond. In: Proceedings of the European conference on computer vision, 2018: 788–804

Bertinetto L, Henriques J F, Torr P H S, et al. Meta-learning with differentiable closed-form solvers. arXiv: 1805.08136

Thomee B, Shamma D A, Friedland G. YFCC100M. Commun ACM, 2016, 59: 64–73

Koch G, Zemel R, Salakhutdinov R. Siamese neural networks for one-shot image recognition. In: Proceedings of International Conference on Machine Learning. Lille, 2015

Kang D, Kwon H, Min J, et al. Relational embedding for few-shot classification. In: Proceedings of the IEEE International Conference on Computer Vision. 2021. 8822–8833

Ye M, Guo Y. Deep triplet ranking networks for one-shot recognition. arXiv: 1804.07275

Mehrotra A, Dukkipati A. Generative adversarial residual pairwise networks for one shot learning. arXiv: 1703.08033

Zhang C, Cai Y, Lin G, et al. DeepEMD: Few-shot image classification with differentiable Earth mover’s distance and structured classifiers. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2020. 12200–12210

Snell J, Swersky K, Zemel R S. Prototypical networks for few-shot learning. In: Proceedings of International Conference on Neural Information Processing Systems. Long Beach, 2017. 4080–4090

Sung F, Yang Y, Zhang L, et al. Learning to compare: Relation network for few-shot learning. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2018. 1199–1208

Garcia V, Bruna J. Few-shot learning with graph neural networks. arXiv: 1711.04043

Prol H, Dumoulin V, Herranz L. Cross-modulation networks for few-shot learning. arXiv: 1812.00273

Lu S, Ye H J, Zhan D C. Tailoring embedding function to heterogeneous few-shot tasks by global and local feature adaptors. In: Proceedings of the AAAI Conference on Artificial Intelligence. 2021. 8776–8783

Zhang L, Liu J, Luo M. Scheduled sampling for one-shot learning via matching network. Pattern Recognit, 2019, 96: 106962

Li H, Eigen D, Dodge S, et al. Finding task-relevant features for few-shot learning by category traversal. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2019. 1–10

Ye H J, Hu H, Zhan D C, et al. Few-shot learning via embedding adaptation with set-to-set functions. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2020. 8805–8814

Zheng Y, Wang R, Yang J. Principal characteristic networks for few-shot learning. J. Visual Commun Image Represent, 2019, 59: 563–573

Zhang B Q, Li X T, Ye Y M, et al. Prototype completion with primitive knowledge for few-shot learning. arXiv: 2009.04960

Gao T Y, Han X, Liu Z Y, et al. Hybrid attention-based prototypical networks for noisy few-shot relation classification. In: Proceedings of the AAAI Conference on Artificial Intelligence. 2019. 6407–6414

Wang Y, Wu X M, Li Q, et al. Large margin few-shot learning. arXiv: 1807.02872

Li X, Yu L, Fu C W. Revisiting metric learning for few-shot image classification. Neurocomputing, 2020, 406: 49–58

Li A, Huang W, Lan X, et al. Boosting few-shot learning with adaptive margin loss. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2020. 12573–12581

Hao F, Cheng J, Wang L. Instance-level embedding adaptation for few-shot learning. IEEE Access, 2019, 7: 100501

Oreshkin B N, Lacoste A, Rodriguez P. TADAM: Task dependent adaptive metric for improved few-shot learning. In: Proceedings of International Conference on Neural Information Processing Systems. Montreal, 2018. 719–729

Zhou Z, Qiu X, Xie J, et al. Binocular mutual learning for improving few-shot classification. In: Proceedings of the IEEE International Conference on Computer Vision. 2021. 8402–8411

Xing C, Rostamzadeh N, Oreshkin B N, et al. Adaptive cross-modal few-shot learning. Adv Neural Infor Process Syst, 2019, 32: 4848–4858

Hu P, Sun X, Saenko K, et al. Weakly-supervised compositional feature aggregation for few-shot recognition. arXiv: 1906.04833

Sun S, Sun Q, Zhou K, et al. Hierarchical attention prototypical networks for few-shot text classification. In: Proceedings of the Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP). Hong Kong, 2019. 476–485

Simon C, Koniusz P, Nock R, et al. Adaptive subspaces for few-shot learning. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2020. 4135–4144

Hilliard N, Phillips L, Howland S, et al. Few-shot learning with metric-agnostic conditional embeddings. arXiv: 1802.04376

Li W, Xu J, Huo J, et al. Distribution consistency based covariance metric networks for few-shot learning. In: Proceedings of the AAAI Conference on Artificial Intelligence. 2019. 8642–8649

Zhang X, Sung F, Qiang Y, et al. Deep comparison: Relation columns for few-shot learning. arXiv: 1811.07100

Hu J, Shen L, Albanie S. Squeeze-and-excitation networks. IEEE Trans Pattern Anal Mach Intell, 2020, 42: 2011–2023

Li W, Wang L, Xu J, et al. Revisiting local descriptor based image-to-class measure for few-shot learning. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2019. 7253–7260

Zhang H, Koniusz P. Power normalizing second-order similarity network for few-shot learning. In: Proceedings of the IEEE Winter Conference on Applications of Computer Vision. 2019. 1185–1193

Koniusz P, Zhang H, Porikli F. A deeper look at power normalizations. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2018. 5774–5783

Huang S, Zhang M, Kang Y, et al. Attributes-guided and pure-visual attention alignment for few-shot recognition. In: Proceedings of the AAAI Conference on Artificial Intelligence. 2021. 7840–7847

Hui B, Zhu P, Hu Q, et al. Self-attention relation network for few-shot learning. In: Proceedings of the IEEE International Conference on Multimedia and Expo Workshops. 2019. 198–203

Kim J, Kim T, Kim S, et al. Edge-labeling graph neural network for few-shot learning. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2019. 11–20

Liu Y, Lee J, Park M, et al. Learning to propagate labels: Transductive propagation network for few-shot learning. In: Proceedings of International Conference on Learning Representations. New Orleans, 2019

Yao H, Zhang C, Wei Y, et al. Graph few-shot learning via knowledge transfer. In: Proceedings of the AAAI Conference on Artificial Intelligence. 2020. 6656–6663

Gidaris S, Komodakis N. Generating classification weights with GNN denoising autoencoders for few-shot learning. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2019. 21–30

Yang L, Li L, Zhang Z, et al. DPGN: Distribution propagation graph network for few-shot learning. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2020. 13387–13396

Huang H, Zhang J, Zhang J, et al. PTN: A poisson transfer network for semi-supervised few-shot learning. In: Proceedings of the AAAI Conference on Artificial Intelligence. 2021. 1602–1609

Venkateswara H, Eusebio J, Chakraborty S, et al. Deep hashing network for unsupervised domain adaptation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2017. 5018–5027

Li F F, Fergus R, Perona P. One-shot learning of object categories. IEEE Trans Pattern Anal Machine Intell, 2006, 28: 594–611

Khosla A, Jayadevaprakash N, Yao B, et al. Novel dataset for finegrained image categorization: Stanford dogs. In: Proceedings of CVPR Workshop on Fine-Grained Visual Categorization. Colorado Springs, 2011

Krause J, Stark M, Deng J, et al. 3D object representations for finegrained categorization. In: Proceedings of the IEEE International Conference on Computer Vision Workshops. 2013. 554–561

Hamilton W L, Ying R, Leskovec J. Inductive representation learning on large graphs. In: Proceedings of the International Conference on Neural Information Processing Systems. 2017. 1025–1035

Velickovic P, Cucurull G, Casanova A, et al. Graph attention networks. arXiv: 1710.10903

Hariharan B, Girshick R. Low-shot visual recognition by shrinking and hallucinating features. In: Proceedings of the IEEE International Conference on Computer Vision. 2017. 3018–3027

Finn C, Abbeel P, Levine S. Model-agnostic meta-learning for fast adaptation of deep networks. Int Conf Mach Learn, 2017, 70: 1126–1135

Nichol A, Achiam J, Schulman J. On first-order meta-learning algorithms. arXiv: 1803.02999

Ravi S, Larochelle H. Optimization as a model for few-shot learning. In: Proceedings of International Conference on Machine Learning. Sydney, 2017

Li Z, Zhou F, Fei C, et al. Meta-SGD: Learning to learn quickly for few-shot learning. arXiv: 1707.09835

Xiang J, Havaei M, Chartrand G, et al. On the importance of attention in meta-learning for few-shot text classification. arXiv: 1806.00852

Elsken T, Staffier B, Metzen J H, et al. Meta-learning of neural architectures for few-shot learning. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2020. 12362–12372

Ahn P, Hong H G, Kim J. Differentiable architecture search based on coordinate descent. IEEE Access, 2021, 9: 48544–48554

Baik S, Choi J, Kim H, et al. Meta-learning with task-adaptive loss function for few-shot learning. In: Proceedings of the IEEE International Conference on Computer Vision. 2021. 9465–9474

Jamal M A, Qi G J, Shah M. Task-agnostic meta-learning for few-shot learning. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2019. 11711–11719

Rusu A A, Rao D, Sygnowski J, et al. Meta-learning with latent embedding optimization. In: Proceedings of International Conference on Learning Representations. New Orleans, 2019

Lee Y and Choi S. Gradient-based meta-learning with learned layer-wise metric and subspace. In: Proceedings of International Conference on Machine Learning. Stockholm, 2018. 2927–2936

Baik S, Hong S, Lee K M. Learning to forget for meta-learning. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2020. 2379–2387

Yoon J, Kim T, Dia O, et al. Bayesian model-agnostic meta-learning. In: Proceedings of International Conference on Neural Information Processing Systems. Montreal, 2018. 7343–7353

Finn C, Xu K, Levine S. Probabilistic model-agnostic meta-learning. In: Proceedings of International Conference on Neural Information Processing Systems. Montreal, 2018. 9537–9548

Grant E, Finn C, Levine S, et al. Recasting gradient-based meta-learning as hierarchical bayes. In: Proceedings of International Conference on Learning Representations. Vancouver, Canada, 2018

Zhou F, Wu B, Li Z. Deep meta-learning: Learning to learn in the concept space. arXiv: 1802.03596

Bertinetto L, Henriques J F, Valmadre J, et al. Learning feed-forward one-shot learners. In: Proceedings of Neural Information Processing Systems. Barcelona, 2016. 523–531

Zhao F, Zhao J, Yan S, et al. Dynamic conditional networks for few-shot learning. In: Proceedings of European Conference on Computer Vision. Munich, Germany, 2018. 20–36

Wang Y X, Hebert M. Learning to learn: Model regression networks for easy small sample learning. In: Proceedings of European Conference on Computer Vision. Amsterdam, 2016. 616–634

Qi H, Brown M, Lowe D G. Low-shot learning with imprinted weights. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2018. 5822–5830

Guo Y, Cheung N M. Attentive weights generation for few shot learning via information maximization. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2020

Gidaris S, Komodakis N. Dynamic few-shot visual learning without forgetting. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2018. 4367–4375

Qiao S, Liu C, Wei S, et al. Few-shot image recognition by predicting parameters from activations. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2018. 7229–7238

Nilsback M E, Zisserman A. Automated flower classification over a large number of classes. In: Proceedings of Indian Conference on Computer Vision, Graphics and Image Processing. Bhubaneswar, 2008. 722–729

Yao B, Jiang X, Khosla A, et al. Human action recognition by learning bases of action attributes and parts. In: Proceedings of the IEEE International Conference on Computer Vision. 2011. 1331–1338

Quattoni A, Torralba A. Recognizing indoor scenes. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2009. 413–420

Kaiser L, Nachum O, Roy A, et al. Learning to remember rare events. In: Proceedings of International Conference on Learning Representations. Toulon, 2017

Santoro A, Bartunov S, Botvinick M, et al. Meta-learning with memory-augmented neural networks. In: Proceedings of the International Conference on Machine Learning. 2016. 1842–1850

Mishra N, Rohaninejad M, Chen X, et al. A simple neural attentive meta-learner. In: Proceedings of International Conference on Learning Representations. Vancouver, 2018

Ramalho T, Garnelo M. Adaptive posterior learning: Few-shot learning with a surprise-based memory module. In: Proceedings of the International Conference on Learning Representations. 2019

Munkhdalai T, Yu H. Meta networks. In: Proceedings of International Conference on Machine Learning. Sydney, 2017. 2554–2563

Munkhdalai T, Yuan X, Mehri S, et al. Rapid adaptation with conditionally shifted neurons. In: Proceedings of International Conference on Machine Learning. Stockholm, 2018. 3664–3673

Cai Q, Pan Y, Yao T, et al. Memory matching networks for one-shot image recognition. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2018. 4080–4088

Tokmakov P, Wang Y, Hebert M. Learning compositional representations for few-shot recognition. In: Proceedings of the IEEE International Conference on Computer Vision. 2019. 6371–6380

Peng Z, Li Z, Zhang J, et al. Few-shot image recognition with knowledge transfer. In: Proceedings of the IEEE International Conference on Computer Vision. 2019. 441–449

Zhang H G, Koniusz P, Jian S L, et al. Rethinking class relations: Absolute-relative supervised and unsupervised few-shot learning. arXiv: 2001.03919

Zhou L, Cui P, Jia X, et al. Learning to select base classes for few-shot classification. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2020. 4623–4632

Triantafillou E, Zemel R, Urtasun R. Few-shot learning through an information retrieval lens. arXiv: 1707.02610

Li A, Luo T, Lu Z, et al. Large-scale few-shot learning: Knowledge transfer with class hierarchy. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2019. 7212–7220

Tao X, Hong X, Chang X, et al. Few-shot class-incremental learning. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2020. 12183–12192

Mazumder P, Singh P, Rai P. Few-shot lifelong learning. arXiv: 2103.00991

Frikha A, Krompa D, Kopken H G, et al. Few-shot one-class classification via meta-learning. arXiv: 2007.04146

Fan Q, Zhuo W, Tang C K, et al. Few-shot object detection with attention-rpn and multi-relation detector. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2020. 4012–4021

Dong X, Zheng L, Ma F. Few-example object detection with model communication. IEEE Trans Pattern Anal Mach Intell, 2019, 41: 1641–1654

Wang K, Liew J H, Zou Y, et al. PANet: Few-shot image semantic segmentation with prototype alignment. In: Proceedings of the IEEE International Conference on Computer Vision. 2019. 9196–9205

Zhang C, Lin G, Liu F, et al. CANet: Class-agnostic segmentation networks with iterative refinement and attentive few-shot learning. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2019. 5212–5221

Chen Y, Hao C, Yang Z X. Fast target-aware learning for few-shot video object segmentation. Sci China Inf Sci, 2022, 65: 182104

Yang H, He X, F Porikli. One-shot action localization by learning sequence matching network. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2018. 1450–1459

Cheng G, Li R, Lang C, et al. Task-wise attention guided part complementary learning for few-shot image classification. Sci China Inf Sci, 2021, 64: 120104

Chen M, Wang X, Luo H, et al. Learning to focus: Cascaded feature matching network for few-shot image recognition. Sci China Inf Sci, 2021, 64: 192105

Pang N, Zhao X, Wang W, et al. Few-shot text classification by leveraging bi-directional attention and cross-class knowledge. Sci China Inf Sci, 2021, 64: 130103

Tjandra A, Sakti S, Nakamura S. Machine speech chain with one-shot speaker adaptation. arXiv: 1803.10525

Xu J, Tan X, Ren Y, et al. LRSpeech: Extremely low-resource speech synthesis and recognition. In: Proceedings of ACM SIGKDD Conference on Knowledge Discovery and Data Mining. San Diego, 2020. 2802–2812

Madotto A, Lin Z, Wu C S, et al. Personalizing dialogue agents via meta-learning. In: Proceedings of Annual Meeting of the Association for Computational Linguistics. Seattle, 2019. 5454–5459

Qian K, Yu Z. Domain adaptive dialog generation via meta learning. In: Proceedings of Annual Meeting of the Association for Computational Linguistics. Seattle, 2019. 2639–2649

Wen G, Fu J, Dai P. DTDE: A new cooperative multi-agent reinforcement learning framework. Innovation, 2021, 2: 100162

Abdo N, Kretzschmar H, Spinello L, et al. Learning manipulation actions from a few demonstrations. In: Proceedings of the IEEE International Conference on Robotics and Automation. 2013. 1268–1275

Duan Y, Andrychowicz M, Stadie B C, et al. One-shot imitation learning. In: Proceedings of International Conference on Neural Information Processing Systems. Long Beach, 2017. 1087–1098

Yu T, Finn C, Xie A, et al. One-shot imitation from observing humans via domain-adaptive meta-learning. arXiv: 1802.01557

Hamaya M, Matsubara T, Noda T, et al. Learning assistive strategies from a few user-robot interactions: Model-based reinforcement learning approach. In: Proceedings of the IEEE International Conference on Robotics andc Automation. 2016. 3346–3351

Lee K, Maji S, Ravichandran A, et al. Meta-learning with differentiable convex optimization. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Long Beach, 2019. 10657–10665


Additional information

This work was supported by the National Key R&D Program of China (Grant No. 2019YFB2102400), and the National Natural Science Foundation of China (Grant No. 92067204).


About this article

Cite this article

Wang, J., Liu, K., Zhang, Y. et al. Recent advances of few-shot learning methods and applications. Sci. China Technol. Sci. 66, 920–944 (2023). https://doi.org/10.1007/s11431-022-2133-1


DOI: https://doi.org/10.1007/s11431-022-2133-1