Abstract

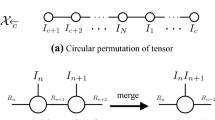

Nonnegative tensor ring (NTR) decomposition is a powerful tool for capturing the significant features of tensor objects while preserving the multilinear structure of tensor data. Existing algorithms rely on frequent reshaping and permutation operations during optimization and use a shrinking step size or projection techniques to ensure core tensor nonnegativity, which leads to slow convergence, especially for large-scale problems. In this paper, we first propose an NTR algorithm based on the modulus method (NTR-MM), which enforces core tensor nonnegativity through a modulus transformation. Second, we incorporate a low-rank approximation (LRA) into NTR-MM to obtain LRA-NTR-MM, which not only significantly reduces the computational complexity of NTR-MM but also suppresses noise. Simulation results demonstrate that the proposed LRA-NTR-MM algorithm achieves higher computational efficiency than state-of-the-art algorithms while preserving the effectiveness of feature extraction.
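To illustrate the idea of enforcing nonnegativity through a modulus transformation, the following is a minimal sketch on a plain nonnegative least squares problem, not the NTR-MM algorithm itself. It assumes the classical substitution x = z + |z|, which is nonnegative for any real z, and the corresponding fixed-point iteration (I + AᵀA) z ← (I − AᵀA)|z| + Aᵀb. The function name `modulus_nnls`, the iteration count, and the random test data are hypothetical choices made for this example.

```python
import numpy as np

def modulus_nnls(A, b, iters=1000):
    """Approximately solve min ||A x - b||^2 subject to x >= 0.

    Illustrative assumption: x = z + |z| replaces the constraint, and z is
    updated by the modulus fixed-point iteration for the associated linear
    complementarity problem. A is assumed to have full column rank.
    """
    M = A.T @ A                      # symmetric positive definite Gram matrix
    q = A.T @ b
    I = np.eye(M.shape[0])
    z = np.zeros(M.shape[0])
    for _ in range(iters):
        # Fixed-point step: (I + M) z_new = (I - M) |z| + A^T b
        z = np.linalg.solve(I + M, (I - M) @ np.abs(z) + q)
    return z + np.abs(z)             # nonnegative by construction

rng = np.random.default_rng(0)
A = rng.standard_normal((20, 5))
b = rng.standard_normal(20)
x = modulus_nnls(A, b)

# Optimality check: x >= 0, gradient w >= 0, and x is complementary to w.
w = A.T @ (A @ x - b)
print(x.min() >= 0, w.min() >= -1e-6, abs(x @ w) < 1e-6)
```

No projection or step-size shrinking appears in the loop: every iterate maps to a feasible x through the transformation, which is the property the abstract attributes to the modulus method.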

Additional information

This work was supported by the National Natural Science Foundation of China (Grant Nos. 62073087, 61973087 and 61973090), and the Key-Area Research and Development Program of Guangdong Province (Grant No. 2019B010154002).

About this article

Cite this article

Yu, Y., Xie, K., Yu, J. et al. Fast nonnegative tensor ring decomposition based on the modulus method and low-rank approximation. Sci. China Technol. Sci. 64, 1843–1853 (2021). https://doi.org/10.1007/s11431-020-1820-x

DOI: https://doi.org/10.1007/s11431-020-1820-x